Abstract

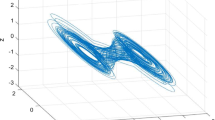

In this paper, an input-to-state stability (ISS) approach is used to derive a new robust weight learning algorithm for dynamic neural networks subject to external disturbances. Based on a linear matrix inequality (LMI) formulation, the ISS learning algorithm is shown not only to guarantee exponential stability but also to reduce the effect of an external disturbance. The design of the ISS learning algorithm reduces to solving an LMI, which can be done with standard numerical packages. A numerical example demonstrates the validity of the proposed learning algorithm.
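The abstract does not state the paper's LMI, so the sketch below is only a stand-in that illustrates what "solving an LMI" involves. It uses the textbook Lyapunov inequality for a linear system: find a symmetric P > 0 with AᵀP + PA < 0. For a 2x2 matrix A this can be decided by solving the Lyapunov equation AᵀP + PA = -I and testing P for positive definiteness. The function names `det3` and `lyapunov_lmi_2x2` are hypothetical and do not come from the paper. A realistic design would pass the actual LMI to a semidefinite programming solver.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def lyapunov_lmi_2x2(A):
    """Illustrative feasibility test for the LMI  P > 0,  A^T P + P A < 0.

    Returns (p1, p2, p3) with P = [[p1, p2], [p2, p3]] solving
    A^T P + P A = -I, or None when no positive definite solution exists
    (which happens exactly when A is not Hurwitz).
    """
    (a, b), (c, d) = [[Fraction(x) for x in row] for row in A]

    # Entries (1,1), (1,2) and (2,2) of A^T P + P A = -I,
    # written as a linear system in the unknowns p1, p2, p3.
    M = [[2 * a, 2 * c, 0],
         [b, a + d, c],
         [0, 2 * b, 2 * d]]
    rhs = [-1, 0, -1]

    D = det3(M)
    if D == 0:
        # No unique solution: two eigenvalues of A sum to zero,
        # so A cannot be Hurwitz and the LMI is infeasible.
        return None

    # Cramer's rule: replace column k by the right-hand side.
    solution = []
    for k in range(3):
        Mk = [row[:] for row in M]
        for i in range(3):
            Mk[i][k] = rhs[i]
        solution.append(det3(Mk) / D)
    p1, p2, p3 = solution

    # Sylvester's criterion for positive definiteness of a 2x2 matrix.
    if p1 > 0 and p1 * p3 - p2 * p2 > 0:
        return (p1, p2, p3)
    return None


if __name__ == "__main__":
    stable = [[0, 1], [-2, -3]]     # eigenvalues -1 and -2
    unstable = [[1, 0], [0, -2]]    # eigenvalue +1
    print(lyapunov_lmi_2x2(stable))
    print(lyapunov_lmi_2x2(unstable))
```

Exact rational arithmetic keeps the definiteness test free of rounding error in this toy case. For larger systems or structured LMIs, a numerical solver is the practical choice.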

Cite this article

Ahn, C.K. Robust stability of recurrent neural networks with ISS learning algorithm. Nonlinear Dyn 65, 413–419 (2011). https://doi.org/10.1007/s11071-010-9901-5

DOI: https://doi.org/10.1007/s11071-010-9901-5