Abstract

This paper is concerned with a class of fuzzy BAM cellular neural networks with distributed leakage delays and impulses. By applying differential inequality techniques, we establish some sufficient conditions which ensure the exponential stability of such fuzzy BAM cellular neural networks. An example is given to illustrate the effectiveness of the theoretical results. The results obtained in this article are completely new and complement the previously known studies.


1 Introduction

In recent years, many authors have paid considerable attention to the dynamics of bidirectional associative memory (BAM) neural networks because of their potential applications in disciplines such as pattern recognition, automatic control engineering, optimization problems, image processing, and speed detection of moving objects [1–4]. Time delays usually occur in neural networks because of the finite switching speed of amplifiers in practical implementations, and such delays may cause oscillation and instability. Consequently, many researchers have investigated the dynamics of delayed BAM neural networks. For example, Xiong et al. [5] discussed the stability of two-dimensional neutral-type Cohen-Grossberg BAM neural networks. Zhang et al. [6] investigated the global stability and synchronization of Markovian switching neural networks with stochastic perturbation and impulsive delay; by establishing an extended Halanay differential inequality for impulsive dynamical systems, they derived novel generic stability criteria as well as sufficient conditions ensuring synchronization. Wang et al. [7] analyzed in detail the exponential stability of delayed memristor-based recurrent neural networks with impulse effects; using an impulsive delayed differential inequality and a Lyapunov function, they obtained several exponential and uniform stability criteria. Li et al. [8] studied the existence and stability of pseudo almost periodic solutions for neutral type high-order Hopfield neural networks with delays in leakage terms on time scales. Applying the exponential dichotomy of linear dynamic equations on time scales, a fixed point theorem, and the theory of calculus on time scales, they established sufficient conditions for the existence and global exponential stability of pseudo almost periodic solutions of that model. For more related work, we refer the reader to [9–20].

Some authors argue that a typical time delay called leakage (or ‘forgetting’) delay may occur in the negative feedback terms of neural network models (these terms are variously known as forgetting or leakage terms) and can have a great impact on the dynamics of neural networks [21–30]. For example, a time delay in the stabilizing negative feedback term tends to destabilize a system [31], and Balasubramaniam et al. [32] pointed out that the existence and uniqueness of the equilibrium point are independent of time delays and initial conditions. In the real world, uncertainty or vagueness is unavoidable, so it is natural to introduce fuzzy operators into neural networks. In 2011, Balasubramaniam et al. [33] considered the global asymptotic stability of the following BAM fuzzy cellular neural networks with time delay in the leakage term and discrete and unbounded distributed delays:

The meaning of all the parameters of system (1.1) can be found in [33]. By applying the quadratic convex combination method, the reciprocal convex approach, the Jensen integral inequality, and the linear convex combination technique, Balasubramaniam et al. [33] obtained several sufficient conditions ensuring the global asymptotic stability of the equilibrium point of system (1.1).

Since time-varying delays in the leakage terms inevitably occur in electronic neural networks owing to the finite switching speed of amplifiers [34], Li et al. [34] studied the existence and exponential stability of an equilibrium point for the following fuzzy BAM neural networks with time-varying delays in leakage terms on time scales:

where \({\mathbb{T}}\) is a time scale. Applying a fixed point theorem and differential inequality techniques, Li et al. [34] obtained sufficient conditions which ensure the existence and global exponential stability of an equilibrium point of system (1.2). Noting that impulsive perturbations often occur in neural networks, Li and Li [35] investigated the exponential stability of the following BAM fuzzy cellular neural networks with time-varying delays in leakage terms and impulses:

By applying differential inequality techniques, Li and Li [35] established some sufficient conditions which guarantee the exponential stability of model (1.3).

Here we would like to point out that neural networks usually have a spatial extent due to the presence of many parallel pathways with a variety of axon sizes and lengths. It is therefore reasonable to introduce continuously distributed delays over a certain duration of time, so that the distant past has less influence than the recent behavior of the state [1, 36]. Inspired by the analysis above, in this paper we consider the following fuzzy BAM neural networks with distributed leakage delays and impulses:

which is a revised version of model (1.3). Here \(x_{i}(t)\) and \(y_{j}(t)\) are the states of the ith neuron and the jth neuron at time t; \(g_{i}(t)\) and \(f_{j}(t)\) denote the activation functions of the ith neuron and the jth neuron at time t; \(\mu_{i}\) and \(\omega_{j}\) denote the inputs of the ith neuron and the jth neuron; \(A_{i}(t)\) and \(B_{j}(t)\) denote the biases of the ith neuron and the jth neuron at time t; \(a_{i}(t)\) and \(b_{j}(t)\) represent the rates at which the ith neuron and the jth neuron reset their potentials to the resting state in isolation at time t, when disconnected from the networks and external inputs; \(a_{ij}(t)\), \(b_{ij}(t)\), \(d_{ji}(t)\), and \(p_{ji}(t)\) denote the connection weights of the feedback template at time t; \(c_{ij}(t)\) and \(q_{ji}(t)\) denote the connection weights of the feedforward template at time t; \(\gamma_{ji}(t)\) and \(\eta_{ji}(t)\) denote the connection weights of the delayed fuzzy feedback MIN template and the delayed fuzzy feedback MAX template at time t; \(T_{ij}\), \(R_{ij}\), and \(H_{ji}\), \(S_{ij}\) are the elements of the fuzzy feedforward MIN template and the fuzzy feedforward MAX template; ⋀ and ⋁ denote the fuzzy AND and fuzzy OR operators; \(0\leq\tau(t)\leq\tau\) and \(0\leq\rho(t)\leq \rho\) denote the transmission delays at time t; \(\triangle x_{i}(t_{k})=x_{i}(t_{k}^{+})-x_{i}(t_{k}^{-})\) and \(\triangle y_{j}(t_{k})=y_{j}(t_{k}^{+})-y_{j}(t_{k}^{-})\) are the impulses at the moments \(t_{k}\), where \(t_{1}< t_{2}<\cdots\) is a strictly increasing sequence with \(\lim_{k\rightarrow\infty}t_{k}=+\infty\); and \(k_{j}(s)\geq0\) and \(k_{i}(s)\geq0 \) are the feedback kernels, which satisfy \(\int_{0}^{+\infty}k_{j}(s)\,ds=1\) and \(\int_{0}^{+\infty}k_{i}(s)\,ds=1\), \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\).
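As an informal illustration of how a distributed leakage delay acts, the Python sketch below simulates a single neuron with all coupling, fuzzy, bias, and input terms of (1.4) removed. It assumes, as the kernel integrals in (H3) suggest but the text does not state, that the leakage term has the form \(-a\int_{0}^{\infty}h(s)x(t-s)\,ds\), and it uses the hypothetical exponential kernel \(h(s)=ce^{-cs}\), which satisfies \(\int_{0}^{\infty}h(s)\,ds=1\). For this kernel, \(z(t)=\int_{0}^{\infty}h(s)x(t-s)\,ds\) obeys \(z'(t)=c(x(t)-z(t))\) (the linear chain trick), so forward Euler can integrate the resulting ODE pair. Impulses \(x(t_{k}^{+})=(1-\theta)x(t_{k})\) are applied at \(t_{k}=1,2,\ldots\). The function name and all parameter values are illustrative assumptions.

```python
def simulate_leakage(a=1.0, c=10.0, theta=0.5, x0=1.0, t_end=10.0, dt=1e-3, impulse_every=1.0):
    """Forward-Euler sketch of x'(t) = -a z(t), z(t) = int_0^inf c e^{-cs} x(t-s) ds,
    with jumps x(t_k^+) = (1 - theta) x(t_k) at t_k = impulse_every, 2 * impulse_every, ...
    """
    steps = int(round(t_end / dt))
    steps_per_impulse = int(round(impulse_every / dt))
    # a constant history x(s) = x0 on (-inf, 0] gives z(0) = x0
    x, z = x0, x0
    trajectory = [(0.0, x)]
    for n in range(1, steps + 1):
        # linear chain trick: z' = c (x - z) replaces the distributed delay integral
        x, z = x - dt * a * z, z + dt * c * (x - z)
        if n % steps_per_impulse == 0:
            # impulse of the (H4) type: Delta x = -theta x; z stays continuous
            x = (1.0 - theta) * x
        trajectory.append((n * dt, x))
    return trajectory

with_impulses = simulate_leakage()
without_impulses = simulate_leakage(theta=0.0)
print(with_impulses[-1], without_impulses[-1])
```

A larger c concentrates the kernel near s = 0, so the distant past has less influence; calling the function with theta=0.0 gives the impulse-free decay for comparison.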

The main objective of this article is to analyze the exponential stability of model (1.4) by applying differential inequality techniques. We expect this study to be of theoretical value and to be useful in the design of BAM cellular neural networks with distributed leakage delays.

Let R and \(R^{+}\) denote the sets of all real numbers and nonnegative real numbers, respectively. For simplicity, we introduce the following notation: \(f^{+}=\sup_{t\in R}|f(t)|\), \(f^{-}=\inf_{t\in R}|f(t)|\), where \(f: R\rightarrow R\) is a bounded continuous function.
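The quantities \(f^{+}\) and \(f^{-}\) can be estimated on a grid. The sketch below assumes a hypothetical periodic leakage rate \(a(t)=2+\sin t\), for which sampling one period suffices. For a non-periodic f, a finite grid only gives a maximum no larger than \(f^{+}\) and a minimum no smaller than \(f^{-}\), i.e., estimates rather than the exact supremum and infimum over R.

```python
import math

def sup_inf_abs(f, t_min, t_max, samples=100001):
    """Grid estimates of f^+ = sup |f(t)| and f^- = inf |f(t)| on [t_min, t_max]."""
    step = (t_max - t_min) / (samples - 1)
    values = [abs(f(t_min + k * step)) for k in range(samples)]
    return max(values), min(values)

# hypothetical 2*pi-periodic leakage rate a(t) = 2 + sin t
a_plus, a_minus = sup_inf_abs(lambda t: 2.0 + math.sin(t), 0.0, 2.0 * math.pi)
print(a_plus, a_minus)  # close to 3.0 and 1.0
```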

The initial value of system (1.4) is given by

where \(\varphi_{i}(s),\psi_{j}(s)\in C((-\infty,0],R)\), \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\).

Throughout this paper, we assume that the following conditions are satisfied.

(H1) For \(i = 1, 2, \ldots, n\), \(j = 1, 2, \ldots,m\), \(f_{j},g_{i}\in C(R,R)\) and there exist positive constants \(L_{j}^{f}\) and \(L_{i}^{g} \) such that

$$\bigl\vert f_{j}(u)-f_{j}(v)\bigr\vert \leq L_{j}^{f}\vert u-v\vert ,\qquad \bigl\vert g_{i}(u)-g_{i}(v)\bigr\vert \leq L_{i}^{g} \vert u-v\vert $$

for \(u,v\in R\).

(H2) For \(i = 1, 2, \ldots, n\), \(j = 1, 2, \ldots,m\), \(a_{i}(t)>0\) and \(b_{j}(t)>0\) for \(t\in R\).
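Condition (H1) requires global Lipschitz constants \(L_{j}^{f}\) and \(L_{i}^{g}\). As a sanity check, the sketch below samples random pairs and records the largest difference quotient, which can only underestimate the true constant. The piecewise-linear activation \(f(u)=\frac{1}{2}(|u+1|-|u-1|)\), common in cellular neural networks, and tanh are illustrative choices (both have Lipschitz constant 1); they are not taken from model (1.4), and the helper names are hypothetical.

```python
import math
import random

def cnn_activation(u):
    """Piecewise-linear output function often used in cellular neural networks."""
    return 0.5 * (abs(u + 1.0) - abs(u - 1.0))

def lipschitz_lower_bound(f, lo=-5.0, hi=5.0, pairs=20000, seed=0):
    """Largest |f(u) - f(v)| / |u - v| over random pairs: a lower bound for L^f in (H1)."""
    rng = random.Random(seed)
    best = 0.0
    for _ in range(pairs):
        u, v = rng.uniform(lo, hi), rng.uniform(lo, hi)
        if u != v:
            best = max(best, abs(f(u) - f(v)) / abs(u - v))
    return best

print(lipschitz_lower_bound(cnn_activation))  # estimate of a constant whose exact value is 1
print(lipschitz_lower_bound(math.tanh))       # estimate of a constant whose exact value is 1
```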

The remainder of the paper is organized as follows. In Section 2, we introduce a useful definition and a lemma. In Section 3, we establish some sufficient conditions which ensure the exponential stability of model (1.4). In Section 4, an example illustrating the theoretical findings is given. A brief conclusion is drawn in Section 5.

2 Preliminaries

In order to obtain the main result of this paper, we shall first state a definition and a lemma which will be useful in proving the main result.

Definition 2.1

Let \(u^{*}=(x_{1}^{*},x_{2}^{*},\ldots,x_{n}^{*},y_{1}^{*},y_{2}^{*},\ldots,y_{m}^{*})^{T}\) be a solution of system (1.4) with initial value \(\phi^{*}=(\varphi_{1}^{*},\varphi_{2}^{*},\ldots,\varphi_{n}^{*},\psi_{1}^{*},\psi _{2}^{*},\ldots,\psi_{m}^{*})^{T}\). If there exists a constant \(\lambda>0\) such that every solution \(u(t)=(x_{1}(t), x_{2}(t),\ldots, x_{n}(t),y_{1}(t),y_{2}(t),\ldots,y_{m}(t))^{T}\) of system (1.4) with initial value \(\phi(s)=(\varphi_{1}(s),\varphi_{2}(s),\ldots,\varphi_{n}(s), \psi_{1}(s),\psi_{2}(s), \ldots,\psi_{m}(s))^{T}\) satisfies

where \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), then the solution \(u^{*}\) is said to be exponentially stable.
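The inequality in Definition 2.1 is not reproduced above. In the usual formulation (assumed here, not confirmed by the text), it bounds each error \(|x_{i}(t)-x_{i}^{*}(t)|\) and \(|y_{j}(t)-y_{j}^{*}(t)|\) by \(Me^{-\lambda t}\) for some \(M>0\). Given sampled errors from a simulation, the hypothetical helpers below check such a bound for a candidate pair \((M,\lambda)\) and estimate λ by a least-squares fit of \(-\log\) of the error against t. A fit only gives empirical evidence, not a proof of exponential stability.

```python
import math

def fitted_decay_rate(times, errors):
    """Least-squares slope of -log(error) versus time: a rough empirical estimate of lambda."""
    logs = [math.log(e) for e in errors]
    n = len(times)
    t_mean = sum(times) / n
    l_mean = sum(logs) / n
    num = sum((t - t_mean) * (l - l_mean) for t, l in zip(times, logs))
    den = sum((t - t_mean) ** 2 for t in times)
    return -num / den

def satisfies_bound(times, errors, M, lam):
    """Check errors[k] <= M * exp(-lam * times[k]) at every sample."""
    return all(e <= M * math.exp(-lam * t) for t, e in zip(times, errors))

# synthetic error samples decaying like 2 e^{-0.5 t}
ts = [0.1 * k for k in range(101)]
errs = [2.0 * math.exp(-0.5 * t) for t in ts]
print(fitted_decay_rate(ts, errs))              # close to 0.5
print(satisfies_bound(ts, errs, M=2.0, lam=0.4))
```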

Lemma 2.1 ([37])

Let x and y be two states of system (1.4). Then

and

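The two inequalities of Lemma 2.1 are not reproduced above. Assuming they take the standard form due to Yang and Yang [37], namely \(|\bigwedge_{j}\alpha_{j}g_{j}(x_{j})-\bigwedge_{j}\alpha_{j}g_{j}(y_{j})|\leq\sum_{j}|\alpha_{j}||g_{j}(x_{j})-g_{j}(y_{j})|\) together with the same bound for ⋁, the sketch below checks them on random data, interpreting fuzzy AND (OR) as the minimum (maximum) of the weighted terms. The bound follows from \(|\min_{j}a_{j}-\min_{j}b_{j}|\leq\max_{j}|a_{j}-b_{j}|\); the weights and values are generic placeholders, and the helper names are hypothetical.

```python
import random

def fuzzy_and(weights, values):
    """Fuzzy AND (MIN template): min_j weights[j] * values[j]."""
    return min(w * v for w, v in zip(weights, values))

def fuzzy_or(weights, values):
    """Fuzzy OR (MAX template): max_j weights[j] * values[j]."""
    return max(w * v for w, v in zip(weights, values))

def check_lemma(trials=10000, size=5, seed=1):
    """Return True if both inequalities hold on every random trial."""
    rng = random.Random(seed)
    for _ in range(trials):
        w = [rng.uniform(-2.0, 2.0) for _ in range(size)]
        gx = [rng.uniform(-1.0, 1.0) for _ in range(size)]  # plays the role of g_j(x_j)
        gy = [rng.uniform(-1.0, 1.0) for _ in range(size)]  # plays the role of g_j(y_j)
        bound = sum(abs(wj) * abs(a - b) for wj, a, b in zip(w, gx, gy))
        if abs(fuzzy_and(w, gx) - fuzzy_and(w, gy)) > bound + 1e-12:
            return False
        if abs(fuzzy_or(w, gx) - fuzzy_or(w, gy)) > bound + 1e-12:
            return False
    return True

print(check_lemma())
```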
3 Exponential stability

In this section, we will consider the exponential stability of system (1.4).

Theorem 3.1

Let \(u^{*}=(x_{1}^{*},x_{2}^{*},\ldots,x_{n}^{*},y_{1}^{*},y_{2}^{*},\ldots,y_{m}^{*})^{T}\) be a solution of system (1.4) with initial value \(\phi^{*}=(\varphi_{1}^{*},\varphi_{2}^{*},\ldots,\varphi_{n}^{*},\psi_{1}^{*},\psi _{2}^{*},\ldots,\psi_{m}^{*})^{T}\). In addition to (H1) and (H2), assume that:

(H3) For \(i=1,2,\ldots, n\), \(j=1,2,\ldots,m\), \(t\in R\),

$$\left \{ \textstyle\begin{array}{l} -a_{i}(t)\int_{0}^{\infty}h_{i}(s)\,ds +a_{i}^{+}\int_{0}^{\infty}h_{i}(s)s \,ds \\ \quad {}+\sum_{j=1}^{m} [(a_{ij}^{+}+b_{ij}^{+})+(\alpha_{ij}^{+}+\beta _{ij}^{+})\int_{0}^{+\infty}k_{j}(t-s) \,ds ]L_{j}^{f}< 0, \\ -b_{j}(t)\int_{0}^{\infty}l_{j}(s)\,ds +b_{j}^{+}\int_{0}^{\infty}l_{j}(s)s \,ds \\ \quad {}+\sum_{i=1}^{n} [(d_{ji}^{+}+p_{ji}^{+})+(\gamma_{ji}^{+}+\eta _{ji}^{+})\int_{0}^{+\infty}k_{i}(t-s) \,ds ]L_{i}^{g}< 0. \end{array}\displaystyle \right . $$

(H4) For \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), \(k=1,2,\ldots\),

$$\begin{aligned}& I_{k}\bigl(x_{i}(t_{k})\bigr)=- \theta_{ik}x_{i}(t_{k}),\quad 0\leq \theta_{ik}\leq 2, \\& J_{k}\bigl(y_{j}(t_{k})\bigr)=- \vartheta_{jk}y_{j}(t_{k}), \quad 0\leq \vartheta_{jk}\leq2. \end{aligned}$$

Then system (1.4) is exponentially stable.
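Conditions (H3) and (H4) can be checked mechanically once the coefficients are known. The sketch below evaluates the left-hand side of the first inequality in (H3) in its worst case over t, replacing \(-a_{i}(t)\) by \(-a_{i}^{-}\). It makes two assumptions: \(\int_{0}^{+\infty}k_{j}(t-s)\,ds\) is bounded by 1 (true if \(k_{j}\) vanishes for negative arguments, since the integral then equals \(\int_{0}^{t}k_{j}(u)\,du\) for \(t\geq0\)), and the leakage kernel is the hypothetical exponential kernel \(h_{i}(s)=ce^{-cs}\), with mass 1 and first moment 1/c. All coefficient values and helper names are made up for illustration. Because (H4) maps \(x_{i}(t_{k})\) to \((1-\theta_{ik})x_{i}(t_{k})\), the range \(0\leq\theta_{ik}\leq2\) is exactly where \(|1-\theta_{ik}|\leq1\), so an impulse never enlarges the distance between two solutions.

```python
def h3_margin(a_minus, a_plus, kernel_mass, kernel_first_moment,
              couplings, lipschitz, delayed_kernel_mass=1.0):
    """Worst-case left-hand side of the first (H3) inequality; negative means it holds.

    couplings: list of (a_ij^+ + b_ij^+, alpha_ij^+ + beta_ij^+) over j
    lipschitz: list of L_j^f over j
    delayed_kernel_mass: assumed upper bound for int_0^inf k_j(t - s) ds
    """
    total = -a_minus * kernel_mass + a_plus * kernel_first_moment
    for (direct, fuzzy), lip in zip(couplings, lipschitz):
        total += (direct + fuzzy * delayed_kernel_mass) * lip
    return total

def h4_holds(thetas):
    """(H4): every impulse strength lies in [0, 2], i.e. |1 - theta| <= 1."""
    return all(0.0 <= th <= 2.0 for th in thetas)

def exponential_kernel_moments(c):
    """Mass and first moment of h(s) = c e^{-cs} on [0, inf): 1 and 1/c."""
    return 1.0, 1.0 / c

couplings = [(0.4, 0.2), (0.4, 0.2)]  # hypothetical coefficient bounds
lips = [1.0, 1.0]
mass, moment = exponential_kernel_moments(10.0)
fast = h3_margin(3.0, 3.0, mass, moment, couplings, lips)
mass, moment = exponential_kernel_moments(1.0)
slow = h3_margin(3.0, 3.0, mass, moment, couplings, lips)
print(fast, slow)
print(h4_holds([0.5, 1.8, 2.0]), h4_holds([2.5]))
```

With the same couplings, the fast-decaying kernel (c = 10) satisfies the inequality while the slow one (c = 1) violates it, because the first moment \(\int_{0}^{\infty}h_{i}(s)s\,ds\) grows as the kernel spreads into the past.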

Proof

Let \(u(t)=(x_{1}(t), x_{2}(t),\ldots, x_{n}(t),y_{1}(t),y_{2}(t),\ldots,y_{m}(t))^{T}\) be a solution of system (1.4) with initial value \(\phi(s)=(\varphi_{1}(s),\varphi_{2}(s),\ldots,\varphi_{n}(s), \psi_{1}(s),\psi_{2}(s), \ldots,\psi_{m}(s))^{T}\). Set

and

For \(t>0\), \(t\neq t_{k}\), \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), \(k=1,2,\ldots\) , it follows from (H4), (1.4), (3.1), and (3.2) that

and

By (3.4), we have

Now we define continuous functions \(\Psi_{i}(\varsigma)\) (\(i=1,2,\ldots,n\)) and \(\Lambda_{j}(\varsigma)\) (\(j=1,2,\ldots,m\)) as follows:

Then we have

Since \(\Psi_{i}(\varsigma)\) (\(i=1,2,\ldots,n\)) and \(\Lambda_{j}(\varsigma)\) (\(j=1,2,\ldots,m\)) are continuous, there exists a positive constant λ such that

where \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\). Let

It follows from (3.9) that

where \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\). Let

It follows, for \(t\in(-\infty,0]\), \(t\neq t_{k}\), and \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), that

Next we prove, for \(t>0\) and \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), that

If (3.13) does not hold true, then there exist \(i\in\{1,2,\ldots,n\}\), \(j\in\{1,2,\ldots,m\}\), and a first time \(t^{*}>0\) such that one of the following cases (3.14)-(3.21) is satisfied:

If (3.14) holds, then according to (H3), (3.8), and (3.10), we have

which is a contradiction. Thus (3.14) does not hold. If (3.15) holds, then according to (H3), (3.8), and (3.11), we have

which is also a contradiction; thus (3.15) does not hold. In a similar way, we can prove that (3.16)-(3.21) do not hold. On the other hand, by (3.5) and (3.6), we get

where \(i=1,2,\ldots,n\), \(j=1,2,\ldots,m\), \(k=1,2,\ldots\). It follows from (3.13) and (3.24) that

Therefore system (1.4) is exponentially stable. The proof of Theorem 3.1 is completed. □
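The choice of λ in the proof only uses that \(\Psi_{i}\) and \(\Lambda_{j}\) are continuous and, presumably as a consequence of (H3), negative at \(\varsigma=0\). Their explicit forms are not reproduced above, so the sketch below uses hypothetical increasing stand-ins \(\psi_{1}(\varsigma)=\varsigma-1+\frac{1}{2}e^{\varsigma}\) and \(\psi_{2}(\varsigma)=\varsigma-2+e^{\varsigma}\). Bisection finds a λ > 0 at which each function is still negative; for increasing functions, the minimum of the individual rates works for all of them at once.

```python
import math

def largest_admissible_rate(psi, upper=10.0, tol=1e-10):
    """Bisection on [0, upper] for a continuous psi with psi(0) < 0.

    Keeps psi(lo) < 0 <= psi(hi) and returns lo, so psi(result) < 0.
    For an increasing psi the result is within tol of the root, i.e. close to
    the largest lambda that keeps psi negative.
    """
    if psi(0.0) >= 0.0:
        raise ValueError("psi(0) must be negative")
    if psi(upper) < 0.0:
        return upper  # no sign change detected on [0, upper]
    lo, hi = 0.0, upper
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if psi(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return lo

def common_rate(functions):
    """One lambda for all functions: the minimum of the individual rates (valid if each is increasing)."""
    return min(largest_admissible_rate(f) for f in functions)

psi1 = lambda s: s - 1.0 + 0.5 * math.exp(s)  # hypothetical stand-in for Psi_i
psi2 = lambda s: s - 2.0 + math.exp(s)        # hypothetical stand-in for Lambda_j
print(common_rate([psi1, psi2]))
```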

Remark 3.1

Duan and Huang [1] investigated the global exponential stability of fuzzy BAM neural networks with distributed delays and time-varying delays in the leakage terms; however, model (1.1) in [1] involves neither distributed leakage delays nor impulses. In this paper, we study the exponential stability of system (1.4), which includes both distributed leakage delays and impulses, so system (1.4) is more general than the models considered in many previous works. None of the results obtained in [1] can be applied to model (1.4) to establish its exponential stability. From this viewpoint, our results on the exponential stability of fuzzy BAM neural networks are new and complement previously known results to some extent.

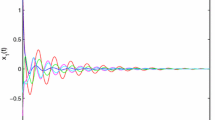

4 Examples

In this section, we present an example to verify the analytical predictions obtained in the previous section. Consider the following fuzzy BAM cellular neural networks with distributed leakage delays and impulses:

where

where \(i,j=1,2\). Then

It is easy to check that (H1) and (H2) hold. In addition, we have

which implies that (H3) holds. Thus all the conditions in Theorem 3.1 are satisfied. Then we can conclude that system (4.1) is exponentially stable.

5 Conclusions

In the present paper, we are concerned with a class of fuzzy BAM cellular neural networks with distributed leakage delays and impulses. By applying differential inequality techniques, we establish a set of sufficient conditions ensuring the exponential stability of such networks. Conditions (H3) and (H4) show that the distributed leakage kernels and the impulse strengths directly affect the exponential stability of the neural networks. The results obtained in this paper are new and complement the previously known work of [34, 35].

References

Duan, LD, Huang, LH: Global exponential stability of fuzzy BAM neural networks with distributed delays and time-varying delays in the leakage terms. Neural Comput. Appl. 23, 171-178 (2013)

Kosko, B: Adaptive bi-directional associative memories. Appl. Opt. 26(23), 4947-4960 (1987)

Xu, CJ, Zhang, QM: Existence and global exponential stability of anti-periodic solutions for BAM neural networks with inertial term and delay. Neurocomputing 153, 108-116 (2015)

Kosko, B: Bi-directional associative memories. IEEE Trans. Syst. Man Cybern. 18(1), 49-60 (1988)

Xiong, WJ, Shi, YB, Cao, JD: Stability analysis of two-dimensional neutral-type Cohen-Grossberg BAM neural networks. Neural Comput. Appl. (2015). doi:10.1007/s00521-015-2099-1

Zhang, W, Li, CD, Huang, TW, Qi, JT: Global stability and synchronization of Markovian switching neural networks with stochastic perturbation and impulsive delay. Circuits Syst. Signal Process. 34(8), 2457-2474 (2015)

Wang, HM, Duan, SK, Li, CD, Wang, LD: Exponential stability analysis of delayed memristor-based recurrent neural networks with impulse effects. Neural Comput. Appl. (2015). doi:10.1007/s00521-015-2094-6

Li, YK, Yang, L, Li, B: Existence and stability of pseudo almost periodic solution for neutral type high-order Hopfield neural networks with delays in leakage terms on time scales. Neural Process. Lett. (2015). doi:10.1007/s11063-015-9483-9

Cai, ZW, Huang, LH: Functional differential inclusions and dynamic behaviors for memristor-based BAM neural networks with time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 19(5), 1279-1300 (2014)

Zhu, QX, Rakkiyappan, R, Chandrasekar, A: Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136, 136-151 (2014)

Zhang, ZQ, Liu, WB, Zhou, DM: Global asymptotic stability to a generalized Cohen-Grossberg BAM neural networks of neutral type delays. Neural Netw. 25, 94-105 (2012)

Zhang, ZQ, Liu, KY: Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw. 24(5), 427-439 (2011)

Li, XD: Exponential stability of Cohen-Grossberg-type BAM neural networks with time-varying delays via impulsive control. Neurocomputing 73(1-3), 525-530 (2009)

Anbuvithya, R, Mathiyalagan, K, Sakthivel, R, Prakash, P: Non-fragile synchronization of memristive BAM networks with random feedback gain fluctuations. Commun. Nonlinear Sci. Numer. Simul. 29(1-3), 427-440 (2015)

Arunkumar, A, Sakthivel, R, Mathiyalagan, K, Anthoni, SM: Robust state estimation for discrete-time BAM neural networks with time-varying delay. Neurocomputing 131, 171-178 (2014)

Berezansky, L, Braverman, E, Idels, L: New global exponential stability criteria for nonlinear delay differential systems with applications to BAM neural networks. Appl. Math. Comput. 243, 899-910 (2014)

Li, YK, Wang, C: Existence and global exponential stability of equilibrium for discrete-time fuzzy BAM neural networks with variable delays and impulses. Fuzzy Sets Syst. 217, 62-79 (2013)

Li, YK, Fan, XL: Existence and globally exponential stability of almost periodic solution for Cohen-Grossberg BAM neural networks with variable coefficients. Appl. Math. Model. 33(4), 2114-2120 (2009)

Duan, L, Huang, LH, Guo, ZY: Stability and almost periodicity for delayed high-order Hopfield neural networks with discontinuous activations. Nonlinear Dyn. 77(4), 1469-1484 (2014)

Duan, L, Huang, LH, Guo, ZY: Global robust dissipativity of interval recurrent neural networks with time-varying delay and discontinuous activations. Chaos 26(7), 073101 (2016)

Xu, CJ, Li, PL: Existence and exponentially stability of anti-periodic solutions for neutral BAM neural networks with time-varying delays in the leakage terms. J. Nonlinear Sci. Appl. 9(3), 1285-1305 (2016)

Xu, CJ, Zhang, QM, Wu, YS: Existence and stability of pseudo almost periodic solutions for shunting inhibitory cellular neural networks with neutral type delays and time-varying leakage delays. Netw. Comput. Neural Syst. 25(4), 168-192 (2014)

Song, QK, Zhao, ZJ: Stability criterion of complex-valued neural networks with both leakage delay and time-varying delays on time scales. Neurocomputing 171, 179-184 (2016)

Senthilraj, S, Raja, R, Zhu, QX, Samidurai, R, Yao, ZS: Exponential passivity analysis of stochastic neural networks with leakage, distributed delays and Markovian jumping parameters. Neurocomputing 175, 401-410 (2016)

Rakkiyappan, R, Lakshmanan, S, Sivasamy, R, Lim, CP: Leakage-delay-dependent stability analysis of Markovian jumping linear systems with time-varying delays and nonlinear perturbations. Appl. Math. Model. 40(7-8), 5026-5043 (2016)

Li, YK, Yang, L, Wu, WQ: Anti-periodic solution for impulsive BAM neural networks with time-varying leakage delays on time scales. Neurocomputing 149, 536-545 (2015)

Li, YK, Yang, L: Almost automorphic solution for neutral type high-order Hopfield neural networks with delays in leakage terms on time scales. Appl. Math. Comput. 242, 679-693 (2014)

Wang, F, Liu, MC: Global exponential stability of high-order bidirectional associative memory (BAM) neural networks with time delays in leakage terms. Neurocomputing 177, 515-528 (2016)

Gopalsamy, K: Stability and Oscillations in Delay Differential Equations of Population Dynamics. Kluwer Academic, Dordrecht (1992)

Li, XD, Fu, XL, Balasubramaniam, P, Rakkiyappan, R: Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal., Real World Appl. 11, 4092-4108 (2011)

Liu, BW: Global exponential stability for BAM neural networks with time-varying delays in the leakage terms. Nonlinear Anal., Real World Appl. 14(1), 559-566 (2013)

Balasubramaniam, P, Vembarasan, V, Rakkiyappan, R: Leakage delay in T-S fuzzy cellular neural networks. Neural Process. Lett. 33, 111-136 (2011)

Balasubramaniam, P, Kalpana, M, Rakkiyappan, R: Global asymptotic stability of BAM fuzzy cellular neural networks with time delay in the leakage term, discrete and unbounded distributed delays. Math. Comput. Model. 53(5-6), 839-853 (2011)

Li, YK, Yang, L, Sun, LJ: Existence and exponential stability of an equilibrium point for fuzzy BAM neural networks with time-varying delays in leakage terms on time scales. Adv. Differ. Equ. 2013, 218 (2013)

Li, YK, Li, YQ: Exponential stability of BAM fuzzy cellular neural networks with time-varying delays in leakage terms and impulses. Abstr. Appl. Anal. 2014, Article ID 634394 (2014)

Gopalsamy, K, He, XZ: Stability in asymmetric Hopfield nets with transmission delays. Phys. D, Nonlinear Phenom. 76(4), 344-358 (1994)

Yang, T, Yang, LB: The global stability of fuzzy cellular neural networks. IEEE Trans. Circuits Syst. 43(10), 880-883 (1996)

Acknowledgements

The first author was supported by National Natural Science Foundation of China (No. 61673008 and No. 11261010) and Natural Science and Technology Foundation of Guizhou Province (J[2015]2025) and 125 Special Major Science and Technology of Department of Education of Guizhou Province ([2012]011). The second author was supported by National Natural Science Foundation of China (No. 11101126). The authors would like to thank the referees and the editor for helpful suggestions incorporated into this paper.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors have equally made contributions. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, C., Li, P. Exponential stability for fuzzy BAM cellular neural networks with distributed leakage delays and impulses. Adv Differ Equ 2016, 276 (2016). https://doi.org/10.1186/s13662-016-0978-0


DOI: https://doi.org/10.1186/s13662-016-0978-0