Abstract

An efficient parallel algorithm for Caputo fractional reaction-diffusion equation with implicit finite-difference method is proposed in this paper. The parallel algorithm consists of a parallel solver for linear tridiagonal equations and parallel vector arithmetic operations. For the parallel solver, in order to solve the linear tridiagonal equations efficiently, a new tridiagonal reduced system is developed with an elimination method. The experimental results show that the parallel algorithm is in good agreement with the analytic solution. The parallel implementation with 16 parallel processes on two eight-core Intel Xeon E5-2670 CPUs is 14.55 times faster than the serial one on single Xeon E5-2670 core.

Similar content being viewed by others

1 Introduction

Fractional differential equations (FDEs) refer to a class of differential equations which use derivatives of non-integer order [1, 2]. Fractional equations have proved to be very reliable models for many scientific and engineering problems [3, 4]. Because it is difficult to solve complex fractional problems analytically, more and more work focuses on numerical solutions [5, 6].

Recently, there has been great interest in FDEs [7–12]. Ahmad et al. [7] discussed the existence of the solution of a Caputo fractional reaction-diffusion equation with various boundary conditions. Rida et al. [8] applied the generalized differential transform method to solve nonlinear fractional reaction-diffusion partial differential equations. Chen et al. used the explicit finite-difference approximation [9] and implicit difference approximation [10] to solve the Riesz space fractional reaction-dispersion equation.

The numerical methods for FDEs include finite-difference methods [13, 14], finite element methods [15] and spectral methods [16–20]. Fractional reaction-diffusion equations are related to spatial and time coordinates, so the numerical solutions are often time-consuming. Large scale applications and algorithms in science and engineering such as neutron transport [21–23], computational fluid dynamics [24–26], large sparse systems [27] rely on parallel computing [24, 28, 29]. In order to overcome the difficulty, parallel computing has been introduced into the numerical solutions for fractional equations [30–32]. Kai Diethelm [31] parallelized the fractional Adams-Bashforth-Moulton algorithm for the first time and the execution time of the algorithm was efficiently reduced. Gong et al. [33] presented a parallel solution for time fractional reaction-diffusion equation with explicit difference method. In the parallel solver, the technology named pre-computing fractional operator was used to optimize performance.

In this paper, we address an efficient parallel algorithm for time fractional reaction-diffusion equation with an implicit finite-difference method. In this parallel algorithm, the system of linear tridiagonal equations, vector-vector additions and constant-vector multiplications are efficiently processed in parallel. The linear tridiagonal system is parallelized with a new elimination method, which is effective and has simplicity in computer programming. The results indicate there is no significant difference between the implementation and the exact solution. The parallel algorithm with 16 parallel processes on two eight-core Intel Xeon E5-2670 CPUs runs 14.55 times faster than the serial algorithm.

This paper focuses on the Caputo fractional reaction-diffusion equation:

on a finite domain \(0\le x\le x_{R}\) and \(0\le t \le T\), where μ and K are constants. If α equals 1, equation (1) is the classical reaction-diffusion equation. The fractional derivative is in the Caputo form.

2 Background

2.1 Numerical solution with implicit finite difference

The fractional derivative of \(f(t)\) in the Caputo sense is defined as [1]

If \(f'(t)\) is continuous bounded derivatives in \([0,T]\) for every \(T>0\), we can get

Define \(\tau= \frac{T}{N}\), \(h=\frac{x_{R}}{M}\), \(t_{n}=n\tau\), \(x_{i}=0+ih\) for \(0\le n \le N\), \(0\le i \le M\). Define \(u_{i}^{n}\), \(\varphi_{i}^{n}\), and \(\phi_{i}\) as the numerical approximations to \(u(x_{i},t_{n})\), \(f(x_{i},t_{n})\), and \(\phi(x_{i})\). We can get [11]

where \(1\le i \le M-1\), \(n \ge1\), and

Using implicit center difference scheme for \(\frac{\partial^{2} u(x,t)}{\partial x^{2}}\), we can get

The implicit finite difference approximation [11] for equation (1) is

Define \(w=\tau\Gamma(1-\alpha)\), \(\lambda_{0}=b_{0}+\mu w+ 2w/h^{2}\), \(\lambda_{1}=-w/h^{2}\), \(\sigma=Kw\), \(U^{n}=(u^{n}_{1},u^{n}_{2}, \ldots, u^{n}_{M-1})^{T}\), \(F^{n}=(\varphi_{1}^{n}, \varphi_{2}^{n}, \ldots, \varphi _{M-1}^{n})^{T}\), and \(r_{l}=b_{l}-b_{l+1}\). Equation (7) evolves as

where the matrix A is a tridiagonal matrix, defined as

3 Parallel algorithm

3.1 Analysis

In order to get \(U^{n}\) from equation (8), two steps need to be processed. One step performs the right-sided computation of equation (8). Set \(V^{n}=\sum_{k=1}^{n-1}r_{n-1-k}U^{k} + b_{n-1}U^{0} + \sigma F^{n}\). The calculation of \(V^{n}\) mainly involves the constant-vector multiplications and vector-vector additions. The other step solves the tridiagonal linear equations \(AU^{n}=V^{n}\). The constant-vector multiplications and vector-vector additions can easily be parallelized. So we only analyze the parallel implementation of solving the tridiagonal linear equations.

As \(2|\lambda_{1}| < |\lambda_{0}|\), the tridiagonal matrix A is strictly diagonally dominant by rows. The dominance factor ϵ can be got by

For strictly diagonally dominant linear systems, one parallel approximation algorithm has been proposed [34]. For given α and N, the dominance factor would be very close to one with big M. The approximation algorithm would decrease the precision of the solution and even exacerbate the convergence. In other words, applying the algorithm to this solution of tridiagonal linear equations with fixed M will obviously increase the number of time steps in order to keep the same precision. Thus, it is necessary to solve tridiagonal linear equations accurately. Moreover, each iteration on time step involves one system of tridiagonal linear equations. The right-hand side \(V^{n}\) varies while the tridiagonal matrix A keeps constant in all time iterations. In order to avoid the repeated calculations, the transformation of the tridiagonal matrix is recorded in the parallel solution of tridiagonal linear equations.

3.2 Parallel solution of tridiagonal linear equations

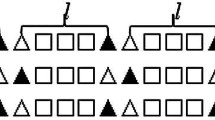

Based on the analysis above, a parallel implementation of solving the tridiagonal linear equations is shown in Algorithm 1. The implementation is based on the divide-and-conquer principle. The number of parallel processes is p. Set \(M-1=pk\) and \(q=\frac{p}{2}\). The matrix A is divided into p blocks as follows:

where

\(G_{i}=g_{(i-1)k+1}e_{1}e_{k}^{T}\), \(i=2,3,\ldots,p\), \(C_{j}=c_{jk}e_{k}e_{1}^{T}\), \(j = 1,2, \ldots, p-1\). \(e_{1}\) and \(e_{k}\) are k-dimensional unit vectors in which the first and last elements are one, respectively. Similarly, U and V can also have corresponding partitions. \(U^{n}=(U_{1}, U_{2}, \ldots, U_{P})^{T}\), \(U_{i}=(u_{(i-1)k+1},\ldots,u_{ik})^{T}\), and \(V^{n}=(V_{1}, V_{2}, \ldots, V_{P})^{T}\), \(V_{i}=(v_{(i-1)k+1},\ldots,v_{ik})^{T}\).

Line 3 allocates \(D_{i}\), \(g_{(i-1)k+1}\), \(c_{jk}\), \(V_{i}\) to the ith process, \(i=1, \ldots, p\).

Lines 4-11 eliminate the lower and upper diagonal elements of sub-matrix \(D_{i}\) and transform diagonal elements to one. For the downward elimination, \(P_{1}\) and \(P_{p}\) begin with the first row of \(D_{i}\) in line 9 while \(P_{i}\) (\(1 < i < p\)) begins with the second row of \(D_{i}\) in line 5. After the downward elimination, the equations in all processes can be transformed to

For the upward elimination, \(P_{1}\) and \(P_{p}\) begin with the last row of \(D_{i}\) in line 10 while \(P_{i}\) (\(1 < i < p\)) begins with the penultimate row of \(D_{i}\) in line 6. The upward elimination makes the equations in all processes become

In the elimination, the tridiagonal matrix and the right-hand-side vector are processed separately.

Line 12 means that \(P_{i}\) (\(1< i\le q\)) and \(P_{i}\) (\(q+1\le i < p\)), respectively, sends the first and last equations to \(P_{1}\) and \(P_{p}\). Those equations, the last one in \(P_{1}\) and the first one in \(P_{p}\) can form the reduced system of equations as follows:

We can find the coefficient matrix in equation (19) is still a tridiagonal matrix. Line 13 means the reduced system of equations is solved only with processes \(P_{1}\) and \(P_{p}\). \(P_{1}\) and \(P_{p}\) deal with the reduced system of equations using the functions in lines 9-11 as well. \(P_{1}\) and \(P_{p}\) exchange the qkth and \(qk+1\)th equations after elimination, and both can obtain \(u_{(q+1)k}\) and \(u_{(q+1)k+1}\). In process \(P_{1}\), \(u_{(i-1)k+1}\) and \(u_{ik}\) are acquired and sent to \(P_{i}\) (\(1 < i \le q\)). In process \(P_{p}\), \(u_{(i-1)k+1}\) and \(u_{ik}\) are got and dispatched to \(P_{i}\) (\(q+1 \le i < p\)). After \(P_{i}\) (\(1 < i < p\)) receives \(u_{(i-1)k+1}\) and \(u_{ik}\) from \(P_{1}\) or \(P_{p}\), \(U_{i}\) can be solved using equation (17). \(U_{1}\) and \(U_{p}\) can also be figured out by equation (16) and equation (18), respectively.

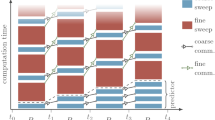

For the parallel implementation of solving tridiagonal linear equations, there are totally three communications shown in Figure 1. The first communication means that \(P_{i}\) (\(1< i< p\)) sends the first and last equations to \(P_{1}\) or \(P_{p}\) after the elimination. The second communication indicates that \(P_{1}\) and \(P_{p}\) exchange the qkth and \(qk+1\)th equations in order to acquire \(u_{(q+1)k}\) and \(u_{(q+1)k+1}\) simultaneously in the solution of the reduced system. The third communication is that \(P_{i}\) (\(1< i< p\)) receives \(u_{(i-1)k+1}\) and \(u_{ik}\) from \(P_{1}\) or \(P_{p}\) after the reduced system is solved.

3.3 Implementation

The parallel algorithm for Caputo fractional reaction-diffusion equation with implicit finite-difference method is proposed as shown in Algorithm 2. \(F(,)\), \(U(,)\), \(V()\), \(A(,)\) are evenly distributed among all processes in order to void the load imbalance. Line 6 computes \(F(,)\), \(A()\), \(r()\) and initializes \(U(,)\), \(V()\). Vector \(r()\) is stored in all processes and calculated simultaneously. Lines 9-13 solve the tridiagonal linear equations \(AU^{0}=V\) for the first time. The loop of line 14 represents iterations on time steps. Since there are dependence between the solutions of adjacent time steps, the parallelization of the solution could be carried out only on space steps. In each iteration on time steps, line 16 solves \(V^{n}=\sum_{k=1}^{n-1}r_{n-1-k}U^{k} + b_{n-1}U^{0} + \sigma F^{n}\) in parallel, and mainly includes parallel constant-vector multiplications and vector-vector additions. Lines 18-22 solve the tridiagonal linear equations \(AU^{n}=V^{n}\) for the \((n+1)\)th time. As the tridiagonal matrix keeps constant in all time iterations, the solution of lines 18-22 does not deal with the tridiagonal matrix and only processes \(V^{n}\) with transform_mid_v() and transform_edg_v(). Particularly, the coefficient matrix of the reduced system also does not vary with time iterations. The elimination in line 22 simply involves the right-sided vector of the reduced system as well. Therefore, the communications in lines 18-22 only transfer the right-hand side of the corresponding equations rather than the entire ones.

4 Experimental results and discussion

The experiment platform is a service node containing two eight-core Intel Xeon E5-2670 CPUs with 128 GB of memory. All codes are compiled with Intel C compiler with level-three optimization and run in double-precision floating-point. The specifications are listed in Table 1.

The following Caputo fractional reaction-diffusion equation [11] is considered, shown in equation (20):

with \(\mu=1\), \(K=1\), \(\alpha=0.7\), and

The exact solution of equation (20) is

4.1 Accuracy of parallel solution

For the example in equation (20), the parallel solution compares well with the exact analytic solution to the fractional partial differential equation shown in Figure 2. Δt and h are \(\frac{1.0}{65}\) and \(\frac{2.0}{65}\). The maximum absolute error is \(7.47 \times10^{-4}\). The difference between serial and parallel solution is only \(4.22 \times10^{-15}\). When different Δt and h are applied, the maximum errors between the exact analytic solution and the parallel solution are shown in Table 2. The maximum error gradually decreases with the increasing number of time and space steps. Altogether, our proposed parallel solution and the exact analytic solution have no noticeable artifacts.

4.2 Performance improvement

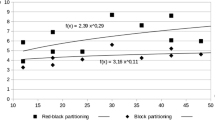

The performance comparison between serial solution (SS) and parallel solution (PS) is shown in Table 3 when different M and N are applied. In PS, 16 processes run in parallel. With \(M=N=65\), PS is a little slower than SS. When M and N are greater than or equal to 129, PS is faster than SS. Compared with SS, the speedup of PS increases gradually with increasing M and N. When M and N increase to 2,049, the runtime of SS reaches 4.03 seconds and that of PS is only \(2.77 \times 10^{-1}\) seconds. Therefore, the speedup between PS and SS rises to 14.55.

4.3 Scalability

With fixed \(M=N=2\text{,}049\), the performance comparison among the parallel solutions with a different number of processes is shown in Table 4. When two parallel processes are used, the speedup is 2.22. When four processes run in parallel, the speedup reaches 4.24. When more than one process is adopted, the total communication cost will increase with the number of parallel processes. However, the speedups in the two situations above outperform the corresponding perfect speedups. The main reason is that the solution of Caputo fractional reaction-diffusion equation with implicit finite-difference method is memory-intensive, and the decrease of memory overhead by the improvement of data locality is more than the increase of communication cost in the parallelization of two or four processes. When the number of processes grows to 16, the speedup is about 14.55 times and the scaling efficiency reaches 91%. Altogether, our proposed parallel solution has good scalability in performance.

5 Conclusions and future work

In this article, we propose a parallel algorithm for time fractional reaction-diffusion equation using the implicit finite-difference method. The algorithm includes a parallel solver for linear tridiagonal equations and parallel vector arithmetic operations. The solver is based on the divide-and-conquer principle and introduces a new tridiagonal reduced system with an elimination method. The experimental results shows the proposed parallel algorithm is valid and runs much more rapidly than the serial solution. The results also demonstrate the algorithm exhibits good scalability in performance. In addition, the introduced tridiagonal reduced system can be regarded as a general method for tridiagonal systems and applied on more applications. In the future, we would like to accelerate the solution of time fractional reaction-diffusion equation on heterogeneous architectures [35].

References

Podlubny, I: Fractional Differential Equations. Academic Press, San Diego (1999)

Debbouche, A, Baleanu, D: Controllability of fractional evolution nonlocal impulsive quasilinear delay integro-differential systems. Comput. Math. Appl. 62(3), 1442-1450 (2011)

Lenzi, EK, Neto, RM, Tateishi, AA, Lenzi, MK, Ribeiro, HV: Fractional diffusion equations coupled by reaction terms. Phys. A, Stat. Mech. Appl. 458, 9-16 (2016)

Hristov, J: Approximate solutions to time-fractional models by integral balance approach. In: Fractals and Fractional Dynamics, pp. 78-109 (2015)

Bhrawy, AH, Taha, TM, Alzahrani, EO, Baleanu, D, Alzahrani, AA: New operational matrices for solving fractional differential equations on the half-line. PLoS ONE 10(5), e0126620 (2015)

Doha, EH, Bhrawy, AH, Baleanu, D, Ezz-Eldien, SS: On shifted Jacobi spectral approximations for solving fractional differential equations. Appl. Math. Comput. 219(15), 8042-8056 (2013)

Ahmad, B, Alhothuali, MS, Alsulami, HH, Kirane, M, Timoshin, S: On a time fractional reaction diffusion equation. Appl. Math. Comput. 257, 199-204 (2015)

Rida, SZ, El-Sayed, AMA, Arafa, AAM: On the solutions of time-fractional reaction-diffusion equations. Commun. Nonlinear Sci. Numer. Simul. 15(12), 3847-3854 (2010). doi:10.1016/j.cnsns.2010.02.007

Chen, J, Liu, F, Turner, I, Anh, V: The fundamental and numerical solutions of the Riesz space fractional reaction-dispersion equation. ANZIAM J. 50, 45-57 (2008)

Chen, J, Liu, F: Stability and convergence of an implicit difference approximation for the space Riesz fractional reaction-dispersion equation. Numer. Math. J. Chin. Univ., Engl. Ser. 16(3), 253-264 (2007)

Chen, J: An implicit approximation for the Caputo fractional reaction-dispersion equation. J. Xiamen Univ. Natur. Sci. 46(5), 616-619 (2007) (in Chinese)

Ding, X-L, Nieto, JJ: Analytical solutions for the multi-term time-space fractional reaction-diffusion equations on an infinite domain. Fract. Calc. Appl. Anal. 18(3), 697-716 (2015)

Wang, H, Du, N: Fast alternating-direction finite difference methods for three-dimensional space-fractional diffusion equations. J. Comput. Phys. 258, 305-318 (2014)

Ferrás, LL, Ford, NJ, Morgado, ML, Nóbrega, JM, Rebelo, MS: Fractional Pennes’ bioheat equation: theoretical and numerical studies. Fract. Calc. Appl. Anal. 18(4), 1080-1106 (2015)

Jiang, Y, Ma, J: High-order finite element methods for time-fractional partial differential equations. J. Comput. Appl. Math. 235(11), 3285-3290 (2011)

Bhrawy, AH, Abdelkawy, MA, Alzahrani, AA, Baleanu, D, Alzahrani, EO: A Chebyshev-Laguerre-Gauss-Radau collocation scheme for solving a time fractional sub-diffusion equation on a semi-infinite domain. Proc. Rom. Acad., Ser. A 16, 490-498 (2015)

Bhrawy, AH, Zaky, MA, Baleanu, D, Abdelkawy, MA: A novel spectral approximation for the two-dimensional fractional sub-diffusion problems. Rom. J. Phys. 60(3-4), 344-359 (2015)

Bhrawy, AH, Doha, EH, Baleanu, D, Ezz-Eldien, SS: A spectral tau algorithm based on Jacobi operational matrix for numerical solution of time fractional diffusion-wave equations. J. Comput. Phys. 293, 142-156 (2015)

Abdelkawy, MA, Zaky, MA, Bhrawy, AH, Baleanu, D: Numerical simulation of time variable fractional order mobile-immobile advection-dispersion model. Rom. Rep. Phys. 67(3), 1-19 (2015)

Bhrawy, AH, Zaky, MA, Baleanu, D: New numerical approximations for space-time fractional Burgers’ equations via a Legendre spectral-collocation method. Rom. Rep. Phys. 67(2), 1-13 (2015)

Rosa, M, Warsa, JS, Perks, M: A cellwise block-Gauss-Seidel iterative method for multigroup \(S_{N}\) transport on a hybrid parallel computer architecture. Nucl. Sci. Eng. 173(3), 209-226 (2013)

Gong, C, Liu, J, Chi, L, Huang, H, Fang, J, Gong, Z: GPU accelerated simulations of 3D deterministic particle transport using discrete ordinates method. J. Comput. Phys. 230(15), 6010-6022 (2011). doi:10.1016/j.jcp.2011.04.010

Wang, Q, Liu, J, Gong, C, Xing, Z: Scalability of 3D deterministic particle transport on the Intel MIC architecture. Nucl. Sci. Tech. 26(5), 50502 (2015)

Xu, C, Deng, X, Zhang, L, Fang, J, Wang, G, Jiang, Y, Cao, W, Che, Y, Wang, Y, Wang, Z, Liu, W, Cheng, X: Collaborating CPU and GPU for large-scale high-order CFD simulations with complex grids on the TianHe-1A supercomputer. J. Comput. Phys. 278, 275-297 (2014)

Wang, Y-X, Zhang, L-L, Liu, W, Che, Y-G, Xu, C-F, Wang, Z-H, Zhuang, Y: Efficient parallel implementation of large scale 3D structured grid CFD applications on the Tianhe-1A supercomputer. Comput. Fluids 80, 244-250 (2013)

Che, Y, Zhang, L, Xu, C, Wang, Y, Liu, W, Wang, Z: Optimization of a parallel CFD code and its performance evaluation on Tianhe-1A. Comput. Inform. 33(6), 1377-1399 (2014)

Bai, Z-Z: Parallel multisplitting two-stage iterative methods for large sparse systems of weakly nonlinear equations. Numer. Algorithms 15(3-4), 347-372 (1997). doi:10.1023/A:1019110324062

Mo, Z, Zhang, A, Cao, X, Liu, Q, Xu, X, An, H, Pei, W, Zhu, S: JASMIN: a parallel software infrastructure for scientific computing. Front. Comput. Sci. China 4(4), 480-488 (2010). doi:10.1007/s11704-010-0120-5

Yang, B, Lu, K, Gao, Y, Wang, X, Xu, K: GPU acceleration of subgraph isomorphism search in large scale graph. J. Cent. South Univ. 22, 2238-2249 (2015)

Gong, C, Bao, W, Tang, G, Jiang, Y, Liu, J: Computational challenge of fractional differential equations and the potential solutions: a survey. Math. Probl. Eng. 2015, 258265 (2015)

Diethelm, K: An efficient parallel algorithm for the numerical solution of fractional differential equations. Fract. Calc. Appl. Anal. 14, 475-490 (2011). doi:10.2478/s13540-011-0029-1

Gong, C, Bao, W, Tang, G: A parallel algorithm for the Riesz fractional reaction-diffusion equation with explicit finite difference method. Fract. Calc. Appl. Anal. 16(3), 654-669 (2013)

Gong, C, Bao, W, Tang, G, Yang, B, Liu, J: An efficient parallel solution for Caputo fractional reaction-diffusion equation. J. Supercomput. 68(3), 1521-1537 (2014). doi:10.1007/s11227-014-1123-z

Chi, L, Liu, J, Li, X: An effective parallel algorithm for tridiagonal linear equations. Chinese J. Comput. 22(2), 218-221 (1999) (in Chinese)

Liao, X, Xiao, L, Yang, C, Lu, Y: MilkyWay-2 supercomputer: system and application. Front. Comput. Sci. 8(3), 345-356 (2014)

Acknowledgements

This research work is supported in part by the National Natural Science Foundation of China under Grant Nos. 11175253, 61303265, 61402039, 91430218, 91530324, 61303265, 61170083 and 61373032, in part by Specialized Research Fund for the Doctoral Program of Higher Education under Grant No. 20114307110001, in part by China Postdoctoral Science Foundation under Grant Nos. 2014M562570 and 2015T81127, and in part by 973 Program of China under Grant Nos. 61312701001 and 2014CB430205.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Each of the authors contributed to each part of this work equally and read and approved the final version of the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, Q., Liu, J., Gong, C. et al. An efficient parallel algorithm for Caputo fractional reaction-diffusion equation with implicit finite-difference method. Adv Differ Equ 2016, 207 (2016). https://doi.org/10.1186/s13662-016-0929-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13662-016-0929-9