Abstract

In this article, we consider a class of interval general bidirectional associative memory (BAM) neural networks with multiple delays. Using the fundamental solution matrix of coefficients, inequality techniques, and the Lyapunov method, we derive sufficient conditions that ensure the existence and exponential stability of anti-periodic solutions of these networks. Our findings are new and complement previously known studies.

1 Introduction

As is well known, neural networks have been applied effectively in numerous areas such as pattern recognition, classification, associative memory, optimization, signal and image processing, and parallel computation (see [1–8]). Consequently, much work has focused on the dynamical behavior of various neural networks, including stability, periodic solutions, almost periodic solutions, bifurcation, and chaos, and many interesting results have been reported (see [9–19]). In 2006, Ding and Huang [20] investigated the following interval general bidirectional associative memory (BAM) neural networks with multiple delays:

where \(i,j=1,2,\ldots,m\), \(x_{i}\) and \(y_{j}\) stand for the activations of the ith and jth neurons, respectively; \(f_{j}(\cdot, \cdot)\) and \(g_{i}(\cdot, \cdot)\) denote the activation functions of the jth and ith units, respectively; \(a_{i}\) and \(b_{j}\) are constants; the time delays \(\tau_{ji}\) and \(\delta_{ij}\) are nonnegative constants; \(s_{ji}\) and \(t_{ij}\) denote the connection weights, which stand for the strengths of connectivity between cells j and i; and \(c_{i}\) and \(d_{j}\) denote the ith and jth components of an external input source introduced from outside the network to cell i and cell j, respectively. Using fixed point theory, the authors discussed the existence and uniqueness of the equilibrium point of (1.1). By constructing a suitable Lyapunov function, they obtained sufficient conditions that ensure the global robust exponential stability of (1.1). In many situations, the coefficients of neural networks fluctuate in time, and it is troublesome to analyze the existence and stability of periodic solutions for continuous systems and discrete systems separately. For this reason, Zhang and Liu [21] investigated the following interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales:

With the help of the continuation theorem of coincidence degree theory and by constructing suitable Lyapunov functionals, the authors discussed the existence and global exponential stability of periodic solutions of system (1.2).

Many researchers have argued that anti-periodic solutions describe the dynamical behavior of nonlinear differential systems effectively [22–27]; for example, the signal transmission process of neural networks can be described as an anti-periodic phenomenon. The study of anti-periodic solutions of neural networks therefore has both theoretical value and considerable potential for applications, and it is meaningful to discuss their existence and stability. In recent decades, many articles have dealt with this topic. For instance, Li et al. [28] discussed the anti-periodic solution of generalized neural networks with impulses and delays on time scales; Abdurahman and Jiang [29] established sufficient conditions for the existence and stability of the anti-periodic solution of delayed Cohen-Grossberg neural networks with impulses; Ou [30] considered anti-periodic solutions for high-order Hopfield neural networks; and Peng and Huang [31] gave a detailed analysis of the anti-periodic solutions of shunting inhibitory cellular neural networks with distributed delays. For details, see [32–45]. Inspired by the work above, we investigate the anti-periodic solutions of the following interval general bidirectional associative memory (BAM) neural networks with multiple delays:

where \(i,j=1,2,\ldots,m\). The main objective of this article is to discuss the existence and exponential stability of anti-periodic solutions of system (1.3). By applying the fundamental solution matrix and the Lyapunov function, and by constructing fundamental function sequences based on the solutions of the network, we obtain a set of sufficient criteria that ensure the existence and global exponential stability of anti-periodic solutions of system (1.3). The obtained results complement the work of [22–45].

The rest of this paper is organized as follows. In Section 2, we introduce necessary notations and results. In Section 3, we obtain some sufficient criteria on the existence and global exponential stability of anti-periodic solution of the networks. In Section 4, the theoretical predictions are verified by an example and computer simulations. The paper ends with a brief conclusion in Section 5.

2 Preliminary results

In this section, we first introduce some notations and lemmas. Denote

For any vector \(U=(u_{1},u_{2},\ldots,u_{m})^{T}\) and matrix \(M=(m_{ij})_{m\times{m}}\), define the following norm:

Let

where \(\tau=\max_{1\leq{i,j}\leq{m}}\{\tau_{ji}\}\), \(\delta=\max_{1\leq{i,j}\leq {m}}\{\delta_{ij}\}\). Define

The initial conditions of (1.3) are given by

Let \(x(t)=(x_{1}(t),x_{2}(t), \ldots,x_{m}(t))^{T}\), \(y(t)=(y_{1}(t),y_{2}(t), \ldots,y_{m}(t))^{T} \) be the solution of system (1.3) with initial conditions (2.1). We say the solution \(x(t)=(x_{1}(t),x_{2}(t), \ldots,x_{m}(t))^{T}\) is T-anti-periodic on \(R^{m}\) if \(x_{i}(t+T)=-x_{i}(t)\) (\(i=1,2, \ldots,m\)) for all \(t\in{R}\), where T is a positive constant.
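The definition above can be spot-checked numerically. The following is a minimal sketch, not part of the original analysis: the helper `is_anti_periodic` and the test function \(\sin t\) are illustrative assumptions, and a finite sample grid can only refute anti-periodicity, never prove it. Note that every T-anti-periodic function is automatically 2T-periodic, since \(x(t+2T)=-x(t+T)=x(t)\).

```python
import math

def is_anti_periodic(x, T, n=200, tol=1e-9):
    """Spot-check x(t + T) == -x(t) at n + 1 sample points in [-2T, 2T].

    Hypothetical helper for illustration only; passing the check on a
    finite grid is evidence of anti-periodicity, not a proof.
    """
    samples = (-2.0 * T + 4.0 * T * k / n for k in range(n + 1))
    return all(abs(x(t + T) + x(t)) <= tol for t in samples)

# sin is pi-anti-periodic: sin(t + pi) = -sin(t)
print(is_anti_periodic(math.sin, math.pi))
# sin is 2*pi-periodic, hence not 2*pi-anti-periodic
print(is_anti_periodic(math.sin, 2.0 * math.pi))
# adding a nonzero constant destroys anti-periodicity
print(is_anti_periodic(lambda t: math.sin(t) + 0.5, math.pi))
```

The third call illustrates why a nonzero constant offset is incompatible with anti-periodicity: a constant c satisfies c = -c only when c = 0.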

Throughout this paper, we assume that the following conditions hold.

(H1) \(f_{i}, g_{i}\in C(R^{2},R)\), \(i,j=1,2,\ldots,m\), there exist constants \(\alpha_{if}>0\), \(\alpha_{ig}>0\), \(\beta_{if}>0\), and \(\beta_{ig}>0\) such that

$$\left \{ \textstyle\begin{array}{lc} |f_{i}(u_{i}, u_{j} )-f_{i}(\bar{u}_{i}, \bar{u}_{j})| \leq\alpha_{if}|u_{i} -\bar {u}_{i}|+\beta_{if}|u_{j} -\bar{u}_{j}|, \qquad |f_{i}(u,v)|\leq F_{i}, \quad i\neq j, \\ |g_{i}(u_{i}, u_{j} )-g_{i}(\bar{u}_{i}, \bar{u}_{j})| \leq\alpha_{ig}|u_{i} -\bar {u}_{i}|+\beta_{ig}|u_{j} -\bar{u}_{j}|,\qquad |g_{i}(u,v)|\leq G_{i},\quad i\neq j \end{array}\displaystyle \right . $$

for all \(u_{i}, u_{j}, \bar{u}_{i}, \bar{u}_{j}, u, v \in{R} \).

(H2) For all \(t,u,v\in{R}\),

$$\left \{ \textstyle\begin{array}{l} s_{ji}(t+T)f_{j}(u,v)=-s_{ji}(t)f_{j}(-u,-v), \\ t_{ij}(t+T)g_{i}(u,v)=-t_{ij}(t)g_{i}(-u,-v), \\ c_{i}(t+T)=-c_{i}(t),\qquad d_{j}(t+T)=-d_{j}(t), \end{array}\displaystyle \right . $$

where \(i,j=1,2,\ldots,m \) and T is a positive constant.
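To make the first relation in (H2) concrete, the sketch below spot-checks \(s(t+T)f(u,v)=-s(t)f(-u,-v)\) on a finite grid. Everything in it is an illustrative assumption rather than part of the paper: the helper name `satisfies_h2`, the sample grid, and the specific weight and activation functions. It shows two simple ways the relation can hold: an odd activation paired with a T-periodic weight, or an even activation paired with a T-anti-periodic weight.

```python
import math

def satisfies_h2(s, f, T, n_t=50, grid=(-2.0, -0.5, 0.0, 0.7, 1.5), tol=1e-9):
    """Spot-check s(t+T) f(u,v) == -s(t) f(-u,-v) on a finite sample grid.

    Hypothetical helper: a True result is numerical evidence only.
    """
    for k in range(n_t):
        t = 2.0 * T * k / n_t
        for u in grid:
            for v in grid:
                lhs = s(t + T) * f(u, v)
                rhs = -s(t) * f(-u, -v)
                if abs(lhs - rhs) > tol:
                    return False
    return True

T = math.pi

def odd_f(u, v):            # assumed odd activation: f(-u,-v) = -f(u,v)
    return math.tanh(u) + math.tanh(v)

def even_f(u, v):           # assumed even activation: f(-u,-v) = f(u,v)
    return abs(u) + abs(v)

def periodic_s(t):          # assumed pi-periodic weight
    return 0.3 + 0.1 * math.cos(2.0 * t)

def anti_periodic_s(t):     # assumed pi-anti-periodic weight
    return 0.2 * math.sin(t)

print(satisfies_h2(periodic_s, odd_f, T))        # odd f, periodic s
print(satisfies_h2(anti_periodic_s, even_f, T))  # even f, anti-periodic s
print(satisfies_h2(anti_periodic_s, odd_f, T))   # mismatched pairing
```

The mismatched pairing fails because, for an odd activation, the relation reduces to \(s(t+T)=s(t)\), which a nonzero anti-periodic weight cannot satisfy.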

Definition 2.1

The solution \((x^{*}(t),y^{*}(t))^{T}\) of model (1.3) is said to be globally exponentially stable if there exist constants \(\beta>0\) and \(M>1\) such that

for each solution \((x(t),y(t))^{T}\) of model (1.3).

Lemma 2.1

Let

then

Proof

Note that

then

in view of the definition of matrix norm, we have

□

Lemma 2.2

Suppose that

where \(0\leq\epsilon_{j},\varepsilon_{j}, \xi_{i}, \varsigma_{i}<1\) (\(i,j=1,2,\ldots,m\)) are any constants. Then there exists \(\beta>0\) such that

Proof

Let

Clearly, \(\varrho_{1i}(\beta)\), \(\varrho_{2j}(\beta)\) (\(i,j=1,2,\ldots,m\)) are continuously differentiable functions. One has

According to the intermediate value theorem, we can conclude that there exist constants \(\beta_{i}^{*}>0\), \(\beta_{j}^{*}>0\) such that

Let \(\beta_{0}=\min\{\beta_{1},\beta_{2},\ldots, \beta_{m}, \beta_{1}^{*},\beta_{2}^{*}, \ldots,\beta_{m}^{*}\}\), then it follows that \(\beta_{0}>0\) and

The proof of Lemma 2.2 is complete. □

Lemma 2.3

Suppose that (H1) holds true. Then for any solution \((x(t),y(t))^{T}\) of model (1.3), there exists a constant

such that

Proof

Let

then the model (1.3) takes the following form:

By (2.2), we get

In view of Lemma 2.1, we have

Let

Then it follows that \(|x_{i}(t)|\leq\gamma\), \(|y_{j}(t)|\leq\gamma\) for all \(t>0\). This completes the proof of Lemma 2.3. □

3 Main results

In this section, we state our main findings for model (1.3).

Theorem 3.1

Suppose that (H1)-(H3) are satisfied. Then any solution \((x^{*}(t),y^{*}(t))^{T}\) of model (1.3) is globally exponentially stable.

Proof

Let \(u_{i}(t)=x_{i}(t)-x_{i}^{*}(t)\), \(v_{j}(t)=y_{j}(t)-y_{j}^{*}(t)\), \(i,j=1,2,\ldots,m\). By model (1.3), we have

which leads to

Then

where \(0\leq\epsilon_{j},\varepsilon_{j}, \xi_{i}, \varsigma_{i}<1\), \(i,j=1,2,\ldots,m\). Now we define a Lyapunov function as follows:

where β is the constant given by Lemma 2.2. Differentiating \(V(t)\) along the solutions of model (1.3) and using (3.3), we have

In view of Lemma 2.2, we have \(\frac{dV(t)}{dt}\leq0\), which implies that \(V(t)\leq{V(0)}\) for all \(t>0\). Thus

Let

By (3.6), one has

for all \(t>0\). Then

for all \(t>0\). Thus the solution \((x^{*}(t),y^{*}(t))^{T}\) of model (1.3) is globally exponentially stable. □

Theorem 3.2

Suppose that (H1)-(H3) hold. Then model (1.3) has exactly one T-anti-periodic solution, which is globally exponentially stable.

Proof

By model (1.3) and (H2), for each \(k\in{N}\), we get

In a similar way, we have

Let

Clearly, for any \(k\in{N}\), \((\bar{x}^{T}(t),\bar{y}^{T}(t))^{T}\) is also a solution of model (1.3). If the initial functions \(\varphi_{i}(s)\), \(\psi_{j}(s)\) (\(i,j=1,2,\ldots,m\)) are bounded, it follows from Theorem 3.1 that there exists a constant \(\gamma>1\) such that

where \(t+kT>0\), \(i=1,2,\ldots,m\). For any \(k\in{N}\) we have

Then

In view of Lemma 2.3, we know that the solutions of system (1.3) are bounded. In view of (3.10) and (3.12), we can easily see that \(\{(-1)^{k+1} x_{i}(t + (k+1)T)\}\) uniformly converges to a continuous function \(x^{*}(t)=(x^{*}_{1}(t),x^{*}_{2}(t), \ldots,x^{*}_{m}(t) )^{T}\) on any compact set of R. In a similar way, we can easily prove that \(\{(-1)^{k+1} y_{j}(t + (k+1)T)\}\) uniformly converges to a continuous function \(y^{*}(t)=(y^{*}_{1}(t),y^{*}_{2}(t), \ldots,y^{*}_{m}(t) )^{T}\) on any compact set of R. Now we will show that \((x^{*}(t), y^{*}(t))^{T}\) is a T-anti-periodic solution of (1.3). Since

Thus \(x^{*}(t)\) is T-anti-periodic. Similarly, \(y^{*}(t)\) is T-anti-periodic. It remains to show that \((x^{*}(t),y^{*}(t))^{T}\) is a solution of model (1.3). In fact, using the continuity of the right-hand side of model (1.3) and letting \(k\to\infty\), we easily get

Therefore, \((x^{*}(t),y^{*}(t))^{T}\) is a T-anti-periodic solution of (1.3). Finally, by applying Theorem 3.1, it is easy to check that \((x^{*}(t),y^{*}(t))^{T}\) is globally exponentially stable. The proof of Theorem 3.2 is complete. □
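The limiting argument in the proof of Theorem 3.2 rests on two elementary identities, which we sketch here for the x-component. The constants \(C>0\) and \(\beta>0\) below are placeholders for whatever exponential bound Theorem 3.1 supplies; their exact form is an assumption of this sketch. First, the sequence telescopes:

$$ (-1)^{k+1}x_{i}\bigl(t+(k+1)T\bigr) = x_{i}(t) + \sum_{l=0}^{k} \bigl[ (-1)^{l+1}x_{i}\bigl(t+(l+1)T\bigr) - (-1)^{l}x_{i}(t+lT) \bigr], $$

and each summand has absolute value \(|x_{i}(t+(l+1)T)+x_{i}(t+lT)|\). If these terms are bounded by \(Ce^{-\beta(t+lT)}\), the series is dominated by a convergent geometric series on every compact set, so the sequence converges uniformly there by the Weierstrass M-test. Second, the limit is T-anti-periodic because shifting t by T shifts the index by one:

$$ x_{i}^{*}(t+T) = \lim_{k\to\infty}(-1)^{k}x_{i}(t+T+kT) = -\lim_{k\to\infty}(-1)^{k+1}x_{i}\bigl(t+(k+1)T\bigr) = -x_{i}^{*}(t). $$

The same two steps apply verbatim to the y-component.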

Remark 3.1

In [22, 24–45], the authors investigated the existence and exponential stability of anti-periodic solutions for some neural networks by applying differential inequality techniques, the continuation theorem of coincidence degree theory, the contraction mapping principle, and the Lyapunov functional method. None of the results in [22, 24–45] can be applied to model (1.3) to obtain the existence and exponential stability of anti-periodic solutions. In [23], the authors studied the existence and exponential stability of anti-periodic solutions of neural networks by means of the fundamental solution matrix of coefficients, the inequality technique, and the Lyapunov method, but the activation functions there are functions of a single variable. In this paper, the activation functions of model (1.3) are functions of two variables, so the results in [23] cannot be applied to model (1.3) either. This implies that the results of this paper are essentially new and complement the previously known results in [22–45].

4 An example

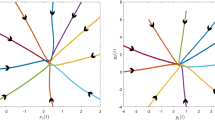

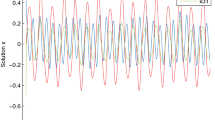

In this section, to illustrate the feasibility of our theoretical findings obtained in previous sections, we give an example. Consider the following interval general bidirectional associative memory (BAM) neural networks with multiple delays:

where \(a_{1}=2\), \(a_{2}=2\), \(b_{1}=2\), \(b_{2}=2.5\), \(s_{11}(t)=0.2+0.2\sin t\), \(s_{21}(t)=0.2+0.1\cos t\), \(s_{12}(t)=0.3+0.2\sin t\), \(s_{22}(t)=0.3+0.1\cos t\), \(t_{11}(t)=0.3+0.1\sin t\), \(t_{21}(t)=0.4+0.1\cos t\), \(t_{12}(t)=0.3+0.2\cos t\), \(t_{22}(t)=0.4+0.1\cos t\), \(c_{1}(t)=0.5+0.1\cos t\), \(c_{2}(t)=0.2+0.1\cos t\), \(d_{1}(t)=0.3+0.1\sin t\), \(d_{2}(t)=0.4+0.1\sin t\), \(\tau_{11}=0.5\), \(\tau_{21}=0.2\), \(\tau_{12}=0.3\), \(\tau_{22}=0.4\), \(\delta_{11}=0.4\), \(\delta_{21}=0.3\), \(\delta_{12}=0.2\), \(\delta_{22}=0.1\). Set \(f_{j}(x_{j},y_{j})=|x_{j}(t)|+|y_{j}(t-\tau_{ji}(t))|\), \(g_{i}(x_{i},y_{i})=|x_{i}(t-\delta_{ij}(t))|+|y_{i}(t)|\), \(i,j=1,2\). Then \(\alpha_{1f}=\alpha_{2f}=\beta_{1f}=\beta_{2f}=\alpha_{1g}=\alpha _{2g}=\beta_{1g}=\beta_{2g}=1\), \(\bar{s}_{11}=0.4\), \(\bar{s}_{21}=0.3\), \(\bar{s}_{12}=0.5\), \(\bar{s}_{22}=0.4\), \(\bar{t}_{11}=0.4\), \(\bar{t}_{21}=0.5\), \(\bar{t}_{12}=0.5\), \(\bar{t}_{22}=0.5\). It is easy to verify that

Then all the conditions (H1)-(H3) hold. Thus model (4.1) has exactly one π-anti-periodic solution which is globally exponentially stable.
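The bounds \(\bar{s}_{ji}\) and \(\bar{t}_{ij}\) quoted in the example can be reproduced numerically. The sketch below assumes that a bar denotes the supremum of the absolute value of the corresponding coefficient over \(t\in{R}\) (the defining display is not reproduced in this text, so this reading is an assumption); the helper `sup_abs` and the sampling resolution are likewise illustrative choices.

```python
import math

def sup_abs(fn, period=2.0 * math.pi, n=20000):
    """Approximate sup |fn(t)| over one period by dense sampling.

    Hypothetical helper; assumes fn is continuous and periodic with the
    given period, so sampling one period is enough.
    """
    return max(abs(fn(period * k / n)) for k in range(n + 1))

# Connection weights exactly as listed in the example of Section 4
coeffs = {
    "s11": lambda t: 0.2 + 0.2 * math.sin(t),
    "s21": lambda t: 0.2 + 0.1 * math.cos(t),
    "s12": lambda t: 0.3 + 0.2 * math.sin(t),
    "s22": lambda t: 0.3 + 0.1 * math.cos(t),
    "t11": lambda t: 0.3 + 0.1 * math.sin(t),
    "t21": lambda t: 0.4 + 0.1 * math.cos(t),
    "t12": lambda t: 0.3 + 0.2 * math.cos(t),
    "t22": lambda t: 0.4 + 0.1 * math.cos(t),
}

# Round to three decimals to absorb sampling and floating-point error
bounds = {name: round(sup_abs(fn), 3) for name, fn in coeffs.items()}
for name, value in bounds.items():
    print(name, value)
```

Under the stated assumption, the computed values agree with the bounds listed in the example (0.4, 0.3, 0.5, 0.4 for the \(\bar{s}\) entries and 0.4, 0.5, 0.5, 0.5 for the \(\bar{t}\) entries).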

5 Conclusions

In this article, a class of interval general bidirectional associative memory (BAM) neural networks with multiple delays has been studied. Applying matrix theory and the inequality technique, we have established a series of sufficient criteria that guarantee the existence and global exponential stability of anti-periodic solutions for these networks. The derived criteria are easy to check in practice. Finally, an example with numerical simulations is given to illustrate the effectiveness of our findings. The results obtained in this article complement the studies of [22–45].

References

Guo, SJ, Huang, LH: Hopf bifurcating periodic orbits in a ring of neurons with delays. Phys. D, Nonlinear Phenom. 183(1-2), 19-44 (2003)

Song, YY, Han, YY, Peng, YH: Stability and Hopf bifurcation in an unidirectional ring of n neurons with distributed delays. Neurocomputing 121, 442-452 (2013)

Ho, DWC, Liang, JL, Lam, J: Global exponential stability of impulsive high-order BAM neural networks with time-varying delays. Neural Netw. 19(10), 1581-1590 (2006)

Zhang, ZQ, Yang, Y, Huang, YS: Global exponential stability of interval general BAM neural networks with reaction-diffusion terms and multiple time-varying delays. Neural Netw. 24(5), 457-465 (2011)

Zhang, ZQ, Liu, KY: Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw. 24(5), 427-439 (2011)

Zhang, ZQ, Liu, WB, Zhou, DM: Global asymptotic stability to a generalized Cohen-Grossberg BAM neural networks of neutral type delays. Neural Netw. 25, 94-105 (2012)

Samidurai, R, Sakthivel, R, Anthoni, SM: Global asymptotic stability of BAM neural networks with mixed delays and impulses. Appl. Math. Comput. 212(1), 113-119 (2009)

Raja, R, Anthoni, SM: Global exponential stability of BAM neural networks with time-varying delays: the discrete-time case. Commun. Nonlinear Sci. Numer. Simul. 16(2), 613-622 (2011)

Lakshmanan, S, Park, JH, Lee, TH, Jung, HY, Rakkiyappan, R: Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl. Math. Comput. 219(17), 9408-9423 (2013)

Li, YK, Wang, C: Existence and global exponential stability of equilibrium for discrete-time fuzzy BAM neural networks with variable delays and impulses. Fuzzy Sets Syst. 217, 62-79 (2013)

Zhang, ZQ, Liu, KY, Yang, Y: New LMI-based condition on global asymptotic stability concerning BAM neural networks of neutral type. Neurocomputing 81, 24-32 (2012)

Zhang, CR, Zheng, BD, Wang, LC: Multiple Hopf bifurcations of symmetric BAM neural network model with delay. Appl. Math. Lett. 22(4), 616-622 (2009)

Song, QK, Wang, ZD: An analysis on existence and global exponential stability of periodic solutions for BAM neural networks with time-varying delays. Nonlinear Anal., Real World Appl. 8(4), 1224-1234 (2007)

Sakthivel, R, Arunkumar, A, Mathiyalagan, K, Marshal Anthoni, S: Robust passivity analysis of fuzzy Cohen-Grossberg BAM neural networks with time-varying delays. Appl. Math. Comput. 218(7), 3799-3809 (2011)

Jiang, HJ, Teng, ZD: Boundedness, periodic solutions and global stability for cellular neural networks with variable coefficients and infinite delays. Neurocomputing 72(10-12), 2455-2463 (2009)

Balasubramaniam, P, Vembarasan, V, Rakkiyappan, R: Global robust asymptotic stability analysis of uncertain switched Hopfield neural networks with time delay in the leakage term. Neural Comput. Appl. 21(7), 1593-1616 (2012)

Samidurai, R, Sakthivel, R, Anthoni, SM: Global asymptotic stability of BAM neural networks with mixed delays and impulses. Appl. Math. Comput. 212(1), 113-119 (2009)

Balasubramaniam, P, Rakkiyappan, R, Sathy, R: Delay dependent stability results for fuzzy BAM neural networks with Markovian jumping parameters. Expert Syst. Appl. 38(1), 121-130 (2011)

Stamova, IM, Ilarionov, R, Krustev, K: Asymptotic behavior of equilibriums of a class of impulsive bidirectional associative memory neural networks with time-varying delays. Neural Comput. Appl. 20(7), 1111-1116 (2011)

Ding, KE, Huang, NJ: Global robust exponential stability of interval general BAM neural networks with delays. Neural Process. Lett. 23(2), 171-182 (2006)

Zhang, ZQ, Liu, KY: Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw. 24, 427-439 (2011)

Wei, XR, Qiu, ZP: Anti-periodic solutions for BAM neural networks with time delays. Appl. Math. Comput. 221, 221-229 (2013)

Li, YK, Yang, L: Anti-periodic solutions for Cohen-Grossberg neural networks with bounded and unbounded delays. Commun. Nonlinear Sci. Numer. Simul. 14(7), 3134-3140 (2009)

Shao, JY: Anti-periodic solutions for shunting inhibitory cellular neural networks with time-varying delays. Phys. Lett. A 372(30), 5011-5016 (2008)

Fan, QY, Wang, WT, Yi, XJ: Anti-periodic solutions for a class of nonlinear nth-order differential equations with delays. J. Comput. Appl. Math. 230(2), 762-769 (2009)

Xia, YH, Cao, JD, Lin, MR: Existence and global exponential stability of periodic solution of a class of impulsive networks with infinite delays. Int. J. Neural Syst. 17(1), 35-42 (2007)

Gong, SH: Anti-periodic solutions for a class of Cohen-Grossberg neural networks. Comput. Math. Appl. 58(2), 341-347 (2009)

Li, YK, Xu, EL, Zhang, TW: Existence and stability of anti-periodic solution for a class of generalized neural networks with impulsives and arbitrary delays on time scales. J. Inequal. Appl. 2010, Article ID 132790 (2010)

Abdurahman, A, Jiang, HJ: The existence and stability of the anti-periodic solution for delayed Cohen-Grossberg neural networks with impulsive effects. Neurocomputing 149, 22-28 (2015)

Ou, CX: Anti-periodic solutions for high-order Hopfield neural networks. Comput. Math. Appl. 56(7), 1838-1844 (2008)

Peng, GQ, Huang, LH: Anti-periodic solutions for shunting inhibitory cellular neural networks with continuously distributed delays. Nonlinear Anal., Real World Appl. 10(40), 2434-2440 (2009)

Huang, ZD, Peng, LQ, Xu, M: Anti-periodic solutions for high-order cellular neural networks with time-varying delays. Electron. J. Differ. Equ. 2010, 59 (2010)

Zhang, AP: Existence and exponential stability of anti-periodic solutions for HCNNs with time-varying leakage delays. Adv. Differ. Equ. 2013, 162 (2013). doi:10.1186/1687-1847-2013-162

Li, YK, Yang, L, Wu, WQ: Anti-periodic solutions for a class of Cohen-Grossberg neural networks with time-varying on time scales. Int. J. Syst. Sci. 42(7), 1127-1132 (2011)

Pan, LJ, Cao, JD: Anti-periodic solution for delayed cellular neural networks with impulsive effects. Nonlinear Anal., Real World Appl. 12(6), 3014-3027 (2011)

Li, YK: Anti-periodic solutions to impulsive shunting inhibitory cellular neural networks with distributed delays on time scales. Commun. Nonlinear Sci. Numer. Simul. 16(8), 3326-3336 (2011)

Peng, L, Wang, WT: Anti-periodic solutions for shunting inhibitory cellular neural networks with time-varying delays in leakage terms. Neurocomputing 111, 27-33 (2013)

Shi, PL, Dong, LZ: Existence and exponential stability of anti-periodic solutions of Hopfield neural networks with impulses. Appl. Math. Comput. 216(2), 623-630 (2010)

Wang, Q, Fang, YY, Li, H, Su, LJ, Dai, BX: Anti-periodic solutions for high-order Hopfield neural networks with impulses. Neurocomputing 138, 339-346 (2014)

Li, YK, Yang, L, Wu, WQ: Anti-periodic solution for impulsive BAM neural networks with time-varying leakage delays on time scales. Neurocomputing 149, 536-545 (2015)

Liu, Y, Yang, YQ, Liang, T, Li, L: Existence and global exponential stability of anti-periodic solutions for competitive neural networks with delays in the leakage terms on time scales. Neurocomputing 133, 471-482 (2014)

Xu, CJ, Zhang, QM: On anti-periodic solutions for Cohen-Grossberg shunting inhibitory neural networks with time-varying delays and impulses. Neural Comput. 26(10), 2328-2349 (2014)

Xu, CJ, Zhang, QM: Existence and exponential stability of anti-periodic solutions for a high-order delayed Cohen-Grossberg neural networks with impulsive effects. Neural Process. Lett. 40(3), 227-243 (2014)

Abdurahman, A, Jiang, HJ: The existence and stability of the anti-periodic solution for delayed Cohen-Grossberg neural networks with impulsive effects. Neurocomputing (2014). doi:10.1155/2012/168375

Chen, XF, Song, QK: Global exponential stability of anti-periodic solutions for discrete time neural networks with mixed delays and impulses. Discrete Dyn. Nat. Soc. 2012, Article ID 168375 (2012)

Acknowledgements

The first author was supported by National Natural Science Foundation of China (No. 11401186), Natural Science Foundation of Hubei Province (No. 2013CFAO053). The second author was supported by Development Foundation of Yangtze University (No. 2013CJY01).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors contributed equally to this work. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Li, X., Ding, D., Feng, J. et al. Existence and exponential stability of anti-periodic solutions for interval general bidirectional associative memory neural networks with multiple delays. Adv Differ Equ 2016, 190 (2016). https://doi.org/10.1186/s13662-016-0882-7

DOI: https://doi.org/10.1186/s13662-016-0882-7