Abstract

In this paper, the authors employ Lyapunov stability theory, M-matrix, H-matrix, and linear matrix inequality (LMI) techniques, and variational methods to obtain an LMI-based criterion for the stochastically exponential robust stability of Markovian jumping reaction-diffusion integro-differential equations with discrete and distributed time delays and uncertain parameters, whose physical and engineering background is bidirectional associative memory (BAM) neural networks. It is worth mentioning that an LMI-based stability criterion can easily be checked with the MATLAB LMI toolbox, which is highly efficient and offers other advantages in large-scale engineering calculations. Since LMI criterion conditions are not easy to obtain with M-matrix and H-matrix methods alone, the methods employed in this paper improve, to some extent, those of the previous related literature. Moreover, a numerical example is presented to illustrate the effectiveness of the proposed methods.


1 Introduction and preliminaries

The stability analysis of reaction-diffusion neural networks with time delays has attracted increasing interest ([1–5] and the references therein). This interest stems from the practical importance and successful applications of neural networks in many areas, such as image processing, combinatorial optimization, signal processing, and pattern recognition. These applications generally require the equilibrium of the neural network to be stable. Hence, the stability analysis of neural networks has long been an important research topic.

Many methods have been proposed to reduce the conservatism of stability criteria. They include the model transformation method, the free-weighting-matrix approach, the construction of novel Lyapunov functionals, the delay decomposition technique, and the weighting-matrix decomposition method. For example:

- In [6], a Lyapunov functional was used to obtain a modified stability condition for neural networks with discrete and distributed delays.
- In [7], a general Lyapunov functional and a convex combination approach were used to obtain a stability criterion for neural networks with mixed delays.
- In [8], the time delay was partitioned and Jensen integral inequalities were applied to obtain a stability condition for neural networks with both discrete and distributed delays.
- In [9], homeomorphic mapping theory and M-matrix theory were employed to derive a stability criterion for neural networks with discrete and distributed time delays.

However, these methods become less effective for studying the stability of reaction-diffusion integro-differential equations, for which variational methods must also be used (see, e.g., [1, 3, 4]). In practice, diffusion effects cannot be avoided in neural networks when electrons move in asymmetric electromagnetic fields.
Strictly speaking, all neural network models should be reaction-diffusion partial differential equations. Recently, the Lyapunov functional method and the Poincaré inequality were employed in [1] to investigate the stability of reaction-diffusion integro-differential equations with discrete and distributed time delays. It should be noted, however, that parameter uncertainties are usually unavoidable. Moreover, neural networks in the real world are often disturbed by environmental noise. Such noise may affect the stability of the equilibrium and change some structural parameters, and these changes usually correspond to a Markov process. Systems with Markovian jumping parameters are frequently governed by discrete-state homogeneous Markov processes, in which each state of the parameters represents a mode of the system.

During the past decade, BAM neural networks with Markovian jumping parameters have been studied extensively ([10–14] and the references therein). Systems with Markovian jumping parameters are useful for modeling abrupt phenomena, such as random failures, changes in the interconnections of subsystems, and operation at different points of a nonlinear plant. Therefore, in this paper we employ Lyapunov stability theory, M-matrix, H-matrix, and linear matrix inequality (LMI) techniques, and variational methods. With these tools we investigate the stochastically robust exponential stability of the following class of Markovian jumping reaction-diffusion integro-differential equations (BAM neural networks) with discrete and distributed time delays and uncertain parameters:

where \(x\in\Omega\), and Ω is a bounded domain in \(R^{m}\) with a smooth boundary ∂Ω of class \(\mathcal{C}^{2}\) (see, e.g., [15]). Here, \(u=u(t,x)=(u_{1}(t,x),u_{2}(t,x),\ldots ,u_{n}(t,x))^{T}\in R^{n}\) and \(v=v(t,x)=(v_{1}(t,x),v_{2}(t,x),\ldots,v_{n}(t,x))^{T}\in R^{n}\). The quantities \(u_{i}(t,x)\) and \(v_{j}(t,x)\) are the state variables of the ith and the jth neuron, respectively, at time t and spatial point x.

The activation vectors are \(f(v)=f(v(t,x))=(f_{1}(v_{1}(t,x)),\ldots, f_{n}(v_{n}(t,x)))^{T}\) and \(g(u)=g(u(t,x))=(g_{1}(u_{1}(t,x)),\ldots,g_{n}(u_{n}(t,x)))^{T}\), where \(f_{j}(v_{j}(t,x))\) and \(g_{j}(u_{j}(t,x))\) are the neuron activation functions of the jth unit at time t and spatial point x. The delayed vectors are \(f(v(t-\tau(t),x))=(f_{1}(v_{1}(t-\tau_{1}(t),x)),\ldots,f_{j}(v_{j}(t-\tau _{j}(t),x)),\ldots,f_{n}(v_{n}(t-\tau_{n}(t),x)))^{T}\) and \(g(u(t-\gamma(t),x))=(g_{1}(u_{1}(t-\gamma_{1}(t),x)),\ldots,g_{j}(u_{j}(t-\gamma _{j}(t),x)),\ldots,g_{n}(u_{n}(t-\gamma_{n}(t),x)))^{T}\).

Here, \(\tau_{j}(t)\) and \(\gamma_{j}(t)\) are discrete time delays, and ϱ and ρ are distributed time delays. τ is a constant satisfying \(0\leqslant\max\{\tau_{j}(t),\gamma_{j}(t), \varrho,\rho\}\leqslant\tau\) for all \(j=1,2,\ldots,n\).

We write \(\Theta=\Theta(t,x,u)\) and \(\Lambda=\Lambda(t,x,v)\). The expressions \(\Theta\circ \nabla u=(\Theta_{ik} \frac{\partial u_{i}}{\partial x_{k}})_{n\times m}\) and \(\Lambda\circ\nabla v=(\Lambda_{ik} \frac{\partial v_{i}}{\partial x_{k}})_{n\times m}\) are the Hadamard products of the matrix \(\Theta=(\Theta_{ik})_{n\times m}\) with ∇u and of the matrix \(\Lambda=(\Lambda_{ik})_{n\times m}\) with ∇v, respectively (see [16]). The smooth functions \(\Theta _{ik} = \Theta_{ik}(t,x,u)\geqslant0\) and \(\Lambda_{ik} = \Lambda_{ik}(t,x,v)\geqslant0\) are diffusion operators.

\((\Omega_{*}, \Upsilon, \mathbb{P})\) is the given probability space, where \(\Omega_{*}\) is the sample space, ϒ is a σ-algebra of subsets of the sample space, and \(\mathbb{P}\) is the probability measure defined on ϒ.
Let \(S=\{1, 2, \ldots, N\}\), and let the random process \(\{r(t): [0, +\infty)\to S\}\) be a homogeneous, finite-state Markov process with right-continuous trajectories and generator \(\Pi=(\pi_{ij})_{N\times N}\). Its transition probability from mode i at time t to mode j at time \(t+\delta\), \(i, j \in S\), is

\[
\mathbb{P}\{r(t+\delta)=j \mid r(t)=i\}=
\begin{cases}
\pi_{ij}\delta+o(\delta), & j\neq i,\\
1+\pi_{ii}\delta+o(\delta), & j=i,
\end{cases}
\]
where \(\pi_{ij}\geqslant0\) is the transition probability rate from i to j (\(j\neq i\)) and \(\pi_{ii}=-\sum_{j=1, j\neq i}^{N}\pi_{ij}\), \(\delta>0\), and \(\lim_{\delta\to0}o(\delta)/\delta=0\).
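The transition law above can be illustrated numerically. The following Python sketch performs two checks:

- It verifies that a generator has nonnegative off-diagonal rates and zero row sums.
- It compares the exact transition matrix \(e^{\Pi\delta}\) with the first-order approximation \(I+\Pi\delta\).

The helper names `is_generator` and `expm_taylor` are our own and hypothetical. Computing \(e^{\Pi\delta}\) by a truncated Taylor series is a simplifying assumption that is accurate only when \(\|\Pi\delta\|\) is small. The generator is the two-mode one used in the numerical example of Section 3.

```python
import math


def is_generator(pi, tol=1e-12):
    """True if off-diagonal rates are nonnegative and every row sums to zero."""
    n = len(pi)
    for i in range(n):
        if any(pi[i][j] < 0 for j in range(n) if j != i):
            return False
        if abs(sum(pi[i])) > tol:
            return False
    return True


def mat_mul(a, b):
    """Plain list-of-lists matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def expm_taylor(a, terms=30):
    """exp(A) by a truncated Taylor series; adequate only when ||A|| is small."""
    n = len(a)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in mat_mul(term, a)]  # now A^k / k!
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result


# Two-mode generator of the numerical example (pi_11 = -0.6, pi_12 = 0.6, ...).
PI = [[-0.6, 0.6],
      [0.2, -0.2]]

delta = 1e-3
P = expm_taylor([[x * delta for x in row] for row in PI])        # P(delta) = exp(Pi*delta)
P_first = [[float(i == j) + PI[i][j] * delta for j in range(2)]  # I + Pi*delta
           for i in range(2)]
```

With \(\delta=10^{-3}\), the two matrices differ by less than \(10^{-6}\) entrywise, which is consistent with the \(o(\delta)\) remainder.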

Define the norm \(\|\cdot\|\) for a vector \(u\in R^{n}\) and a matrix \(C=(c_{ij})_{n\times n}\) as follows:

Denote \(|u|=(|u_{1}|,|u_{2}|,\ldots,|u_{n}|)^{T}\) for the vector \(u=(u_{1},\ldots ,u_{n})^{T}\in R^{n}\), and \(|C|=(|c_{ij}|)_{n\times n}\) for the matrix \(C=(c_{ij})_{n\times n}\).

Definition 1.1

For symmetric matrices A and B, we write \(A< B\) or \(B>A\) if the matrix \(B-A\) is positive definite. In particular, \(A>0\) means that the symmetric matrix A is positive definite.

In this paper, we denote by \(\lambda_{\max} C\) and \(\lambda_{\min} C\) the maximum and minimum eigenvalues of a matrix C. For any mode \(r(t)=r\in S\), we write \(A_{r}=A(r(t))\) for convenience, and similarly for \(B_{r}\), \(C_{r}\), \(D_{r}\), \(E_{r}\), \(H_{r}\), \(L_{r}\), and \(W_{r}\). In practice, parameter uncertainties are unavoidable, so we assume that

where \(C^{*}_{r}\), \(D^{*}_{r}\), \(E^{*}_{r}\), \(H^{*}_{r}\), \(L^{*}_{r}\), \(W^{*}_{r}\) are nonnegative matrices, and \(A_{r}\), \(B_{r}\) are diagonal matrices.

There are positive definite diagonal matrices \(\underline {A}_{r}\), \(\underline{B}_{r}\), \(\overline{A}_{r}\), and \(\overline{B}_{r}\) such that

There exist positive definite diagonal matrices F and G such that

Throughout this paper, we assume that \(f(0)=g(0)=0\in R^{n}\). Then \(u=0\), \(v=0\) is the null solution for the system (1.1).

Let \(u(t,x ;\phi,\psi,i_{0})\) and \(v(t,x ;\phi,\psi,i_{0})\) denote the state trajectories from the initial conditions \(r(0)=i_{0}\), \(u(t_{0}+\theta,x ; \phi,\psi,i_{0})=\phi(\theta,x)\), and \(v(t_{0}+\theta,x ; \phi,\psi,i_{0})=\psi(\theta,x)\) on \(-\tau\leqslant\theta\leqslant0\) in \(L_{\mathcal{F}_{0}}^{2}([-\tau ,0]\times\Omega; R^{n})\), where \(t_{0}=0\). Here, \(L_{\mathcal{F}_{0}}^{2}([-\tau,0]\times \Omega; R^{n})\) denotes the family of all \(\mathcal{F}_{0}\)-measurable \(\mathcal{C}([-\tau,0]\times\Omega; R^{n})\)-valued random variables \(\xi=\{\xi(\theta,x): -\tau\leqslant\theta\leqslant0, x\in\Omega\}\) such that \(\sup_{-\tau\leqslant\theta\leqslant0}\mathbb{E}\|\xi(\theta)\| _{2}^{2}<\infty\). Here, \(\mathbb{E}\{\cdot\}\) denotes the mathematical expectation with respect to the given probability measure \(\mathbb{P}\).

Definition 1.2

System (1.1) is said to be globally stochastically exponentially robustly stable if the following holds for every initial condition \(\phi, \psi\in L_{\mathcal{F}_{0}}^{2}([-\tau,0]\times\Omega; R^{n})\) and \(r(0)=i_{0}\): there exist scalars \(c_{1}>0\), \(c_{2}>0\), \(\beta>0\), and \(\gamma>0\) such that any solution \(u(t,x ;\phi,\psi,i_{0})\), \(v(t,x ;\phi,\psi,i_{0})\) satisfies

for \(t\geqslant t_{0}\) and all admissible uncertainties satisfying (1.2). Here, the norm is \(\|u\| _{2}= (\sum_{i=1}^{n}\int_{\Omega}u_{i}^{2}(t, x)\,dx )^{\frac{1}{2}}\) for \(u=(u_{1}(t,x),\ldots, u_{n}(t,x))^{T}\in R^{n}\).
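As a concrete reading of the norm \(\|u\|_{2}\), the sketch below approximates it by the midpoint rule on a rectangle \(\Omega=(0,a)\times(0,b)\). The function `l2_norm`, the grid size, and the test field are illustrative assumptions, not part of the paper. For \(u_{1}=\sin(\pi x_{1}/10)\sin(\pi x_{2}/10)\) and \(u_{2}=0\) on \((0,10)\times(0,10)\), the exact value is 5.

```python
import math


def l2_norm(components, a, b, n=200):
    """Midpoint-rule approximation of (sum_i of the integral over (0,a)x(0,b) of u_i^2)^(1/2)."""
    hx, hy = a / n, b / n
    total = 0.0
    for p in range(n):
        x1 = (p + 0.5) * hx
        for q in range(n):
            x2 = (q + 0.5) * hy
            total += sum(u(x1, x2) ** 2 for u in components)
    return math.sqrt(total * hx * hy)


def u1(x1, x2):
    return math.sin(math.pi * x1 / 10.0) * math.sin(math.pi * x2 / 10.0)


def u2(x1, x2):
    return 0.0


norm = l2_norm([u1, u2], 10.0, 10.0)
print(round(norm, 6))  # 5.0
```

The double sum runs over space, and the inner `sum` runs over the components \(i=1,\ldots,n\), mirroring the two sums in the definition.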

Definition 1.3

[17]

A matrix \(A=(a_{ij})_{n\times n}\) is an H-matrix if its comparison matrix \(M(A)=(m_{ij})_{n\times n}\) is an M-matrix, where

\[
m_{ij}=
\begin{cases}
|a_{ii}|, & i=j,\\
-|a_{ij}|, & i\neq j.
\end{cases}
\]
Lemma 1.4

[17]

Let \(A,B\in Z_{n}\triangleq\{ A=(a_{ij})_{n\times n}\in R^{n\times n}: a_{ij}\leqslant0, i\neq j\}\). If A is an M-matrix and \(a_{ij}\leqslant b_{ij}\) for all \(i,j=1,2,\ldots,n\), then B is an M-matrix.
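Definition 1.3 and Lemma 1.4 can be checked numerically. The sketch below relies on a standard characterization from matrix analysis (see, e.g., [17]) that is not stated in this paper: a Z-matrix is a nonsingular M-matrix if and only if all its leading principal minors are positive. The function names and the matrices A, B, C are made-up examples.

```python
def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n = len(a)
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        if a[p][c] == 0.0:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            d = -d
        d *= a[c][c]
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= f * a[c][k]
    return d


def is_z_matrix(a):
    """Off-diagonal entries are all <= 0 (the class Z_n of Lemma 1.4)."""
    n = len(a)
    return all(a[i][j] <= 0 for i in range(n) for j in range(n) if i != j)


def is_m_matrix(a):
    """Nonsingular M-matrix test: a Z-matrix whose leading principal minors are all > 0."""
    n = len(a)
    return is_z_matrix(a) and all(det([row[:k] for row in a[:k]]) > 0 for k in range(1, n + 1))


def comparison_matrix(a):
    """M(A) from Definition 1.3: |a_ii| on the diagonal, -|a_ij| off it."""
    n = len(a)
    return [[abs(a[i][j]) if i == j else -abs(a[i][j]) for j in range(n)] for i in range(n)]


def is_h_matrix(a):
    return is_m_matrix(comparison_matrix(a))


A = [[4.0, -1.0, -1.0],
     [-1.0, 4.0, -1.0],
     [-1.0, -1.0, 4.0]]
B = [[5.0, -0.5, 0.0],     # entrywise B >= A and still in Z_n
     [-1.0, 4.5, -1.0],
     [-0.5, -1.0, 4.0]]
C = [[3.0, 2.0],           # not a Z-matrix, but M(C) is an M-matrix
     [-1.0, 2.0]]
print(is_m_matrix(A), is_m_matrix(B), is_m_matrix(C), is_h_matrix(C))  # True True False True
```

Matrix B illustrates Lemma 1.4: raising the entries of the M-matrix A while staying in \(Z_{n}\) preserves the M-matrix property. Matrix C shows that an H-matrix need not itself be an M-matrix.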

Lemma 1.5

[18]

Let \(k_{1}\) and \(k_{2}\) be constants satisfying \(k_{1} >k_{2} >0\), and let \(V(t)\) be a nonnegative continuous function on \([t_{0}-\tau , t_{0}]\). If the following inequality holds:

where

and \(\tau>0\) is a constant, then, for \(t \geqslant t_{0}\), we have

where λ is the unique positive solution of the equation \(\lambda=k_{1}-k_{2}e^{\lambda\tau}\).

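The decay rate in Lemma 1.5 is defined only implicitly. Let \(h(\lambda)=\lambda-k_{1}+k_{2}e^{\lambda\tau}\). This function is strictly increasing, with \(h(0)=k_{2}-k_{1}<0\) and \(h(k_{1})=k_{2}e^{k_{1}\tau}>0\). The root therefore lies in \((0,k_{1})\) and can be found by bisection. Below is a minimal Python sketch; the function name `halanay_rate` and the sample constants are hypothetical, not values from the paper.

```python
import math


def halanay_rate(k1, k2, tau, tol=1e-12):
    """Unique positive root of lam = k1 - k2*exp(lam*tau), assuming k1 > k2 > 0 and tau > 0."""
    if not (k1 > k2 > 0 and tau > 0):
        raise ValueError("need k1 > k2 > 0 and tau > 0")

    def h(lam):
        # h is strictly increasing, so the sign change is unique
        return lam - k1 + k2 * math.exp(lam * tau)

    lo, hi = 0.0, k1  # h(0) < 0 and h(k1) > 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Illustrative constants only (not taken from the paper's example).
lam = halanay_rate(k1=2.0, k2=0.5, tau=1.2)
print(lam)
```

Because \(\lambda+k_{2}e^{\lambda\tau}\) grows with \(k_{2}\) and with τ, the guaranteed rate λ shrinks as either of them increases.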
Lemma 1.6

(Schur complement [19])

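Given matrices \(\mathcal{Q}(t)\), \(\mathcal{S}(t)\), and \(\mathcal{R}(t)\) of appropriate dimensions with \(\mathcal{Q}(t)=\mathcal{Q}(t)^{T}\) and \(\mathcal{R}(t)=\mathcal{R}(t)^{T}\), we have

\[
\begin{pmatrix}
\mathcal{Q}(t) & \mathcal{S}(t)\\
\mathcal{S}(t)^{T} & \mathcal{R}(t)
\end{pmatrix}>0
\]

if and only if

\[
\mathcal{R}(t)>0,\qquad \mathcal{Q}(t)-\mathcal{S}(t)\mathcal{R}(t)^{-1}\mathcal{S}(t)^{T}>0,
\]

or

\[
\mathcal{Q}(t)>0,\qquad \mathcal{R}(t)-\mathcal{S}(t)^{T}\mathcal{Q}(t)^{-1}\mathcal{S}(t)>0,
\]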
where \(\mathcal{Q}(t)\), \(\mathcal{S}(t)\), and \(\mathcal{R}(t)\) are dependent on t.
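Below is a small numerical check of the first alternative in Lemma 1.6. It treats the special case where \(\mathcal{R}\) is a scalar block, an assumption made so that \(\mathcal{R}^{-1}\) is a plain division. Positive definiteness is tested with a Cholesky factorization. The helper names `is_pos_def`, `block`, and `schur_test`, and the matrices used, are illustrative.

```python
import math


def is_pos_def(m):
    """Cholesky-based positive definiteness test for a symmetric matrix."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


def block(Q, s, r):
    """Assemble [[Q, s], [s^T, r]] from an n x n Q, a length-n column s, and a scalar r."""
    n = len(Q)
    rows = [Q[i][:] + [s[i]] for i in range(n)]
    rows.append(s[:] + [r])
    return rows


def schur_test(Q, s, r):
    """First alternative of Lemma 1.6 with scalar R = r: r > 0 and Q - s s^T / r > 0."""
    if r <= 0.0:
        return False
    n = len(Q)
    comp = [[Q[i][j] - s[i] * s[j] / r for j in range(n)] for i in range(n)]
    return is_pos_def(comp)


Q = [[4.0, 1.0], [1.0, 3.0]]
s = [1.0, 2.0]
for r in (2.0, 1.0):
    # prints "2.0 True True" and then "1.0 False False"
    print(r, is_pos_def(block(Q, s, r)), schur_test(Q, s, r))
```

In both cases the full block test and the Schur-complement test agree, as Lemma 1.6 asserts. In the proof of Theorem 2.3, this equivalence is what turns the nonlinear conditions into LMIs.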

2 Main result

Denote by \(\lambda_{1}\) the first eigenvalue of −Δ in the Sobolev space \(W_{0}^{1,2}(\Omega) \), where

Lemma 2.1

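Let \(P_{r}=\operatorname{diag}(p_{r1} ,p_{r2} ,\ldots ,p_{rn})\) be a positive definite matrix, let \(\alpha_{r}>0\) satisfy \(\alpha _{r}I\leqslant P_{r}\), and let (u, v) be a solution of system (1.1). Then we have

\[
\int_{\Omega}u^{T} P_{r} \nabla\cdot(\Theta\circ\nabla u)\,dx\leqslant-\lambda_{1}\alpha_{r}\Theta_{*}\|u\|_{2}^{2},\qquad
\int_{\Omega}v^{T} P_{r} \nabla\cdot(\Lambda\circ\nabla v)\,dx\leqslant-\lambda_{1}\alpha_{r}\Lambda_{*}\|v\|_{2}^{2},
\]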
where I denotes the identity matrix, \(\Theta_{*}=\min_{ik}\inf_{t,x,u}\Theta(t,x,u)_{ik}\geqslant0\), \(\Lambda_{*}=\min_{ik}\inf_{t,x,v}\Lambda (t, x,v)_{ik}\geqslant0\).

Proof

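Since (u, v) is a solution of system (1.1), it follows from the Gauss formula and the Dirichlet boundary condition that

\[
\begin{aligned}
\int_{\Omega}u^{T} P_{r} \nabla\cdot(\Theta\circ\nabla u)\,dx
&=\sum_{i=1}^{n}\sum_{k=1}^{m}p_{ri}\int_{\Omega}u_{i}\frac{\partial}{\partial x_{k}}\Big(\Theta_{ik}\frac{\partial u_{i}}{\partial x_{k}}\Big)dx\\
&=-\sum_{i=1}^{n}\sum_{k=1}^{m}p_{ri}\int_{\Omega}\Theta_{ik}\Big(\frac{\partial u_{i}}{\partial x_{k}}\Big)^{2}dx\\
&\leqslant-\alpha_{r}\Theta_{*}\sum_{i=1}^{n}\int_{\Omega}|\nabla u_{i}|^{2}dx\\
&\leqslant-\lambda_{1}\alpha_{r}\Theta_{*}\|u\|_{2}^{2}.
\end{aligned}
\]

The last step uses the variational characterization of \(\lambda_{1}\), namely \(\int_{\Omega}|\nabla w|^{2}dx\geqslant\lambda_{1}\int_{\Omega}w^{2}dx\) for \(w\in W_{0}^{1,2}(\Omega)\).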
Similarly, we can prove that \(\int_{\Omega}v^{T} P_{r} \nabla\cdot (\Lambda\circ\nabla v )\,dx\leqslant-\lambda_{1}\alpha_{r}\Lambda_{*}\|v\|_{2}^{2}\). Thus, the proof is completed. □
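The estimate in Lemma 2.1 rests on the Poincaré-type bound \(\int_{\Omega}|\nabla w|^{2}dx\geqslant\lambda_{1}\int_{\Omega}w^{2}dx\), which characterizes \(\lambda_{1}\). Below is a one-dimensional sanity check on \((0,L)\), where \(\lambda_{1}=(\pi/L)^{2}\). The sketch evaluates both sides by the midpoint rule for two functions:

- the hypothetical test function \(w(x)=x(L-x)\);
- the first eigenfunction \(\sin(\pi x/L)\), for which the bound is an equality.

```python
import math


def midpoint(f, a, b, n=10000):
    """Composite midpoint rule for the integral of f over (a, b)."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


L = 10.0
lam1 = (math.pi / L) ** 2  # first Dirichlet eigenvalue of -d^2/dx^2 on (0, L)

# Hypothetical test function vanishing at both ends: w(x) = x (L - x), w'(x) = L - 2x.
grad_sq = midpoint(lambda x: (L - 2.0 * x) ** 2, 0.0, L)
w_sq = midpoint(lambda x: (x * (L - x)) ** 2, 0.0, L)

# First eigenfunction sin(pi x / L): the bound holds with equality.
grad_sq_sin = midpoint(lambda x: ((math.pi / L) * math.cos(math.pi * x / L)) ** 2, 0.0, L)
w_sq_sin = midpoint(lambda x: math.sin(math.pi * x / L) ** 2, 0.0, L)
rayleigh_sin = grad_sq_sin / w_sq_sin

print(grad_sq / w_sq, lam1, rayleigh_sin)
```

For \(x(L-x)\), the quotient is \(10/L^{2}\), slightly above \(\pi^{2}/L^{2}\approx 9.87/L^{2}\), as the inequality requires.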

Throughout this paper, we define Φ, Ψ, \(\widetilde{\Phi}\), and \(\widetilde{\Psi}\) as follows:

Lemma 2.2

If the following two inequalities hold:

and

we have

and

where I denotes the identity matrix, and \(\alpha_{r}, \beta , \alpha>0\).

Proof

Let

Since \(C^{*}\), \(E^{*}\), and \(L^{*}\) are all nonnegative matrices, U is also a nonnegative matrix.

Then \((\widetilde{\Phi}+U-\frac{(1+\beta )(\tau+1)\max\{(\lambda _{\max}F)^{2},(\lambda_{\max}G)^{2}\}}{\alpha}I )\) is a positive diagonal matrix, and \((\widetilde{\Phi}-\frac{(1+\beta )(\tau+1)\max\{(\lambda _{\max}F)^{2},(\lambda_{\max}G)^{2}\}}{\alpha}I )\) is an M-matrix.

Let

It follows from (1.2) that \(|Q_{ij}|\leqslant U_{ij}\) for all \(i,j=1,2,\ldots,n\), where we denote \(Q=(Q_{ij})_{n\times n}\) and \(U=(U_{ij})_{n\times n}\). For convenience, we denote

Denote, in addition, \(\widetilde{Q}= \frac{1}{\alpha} [2\lambda _{1}\alpha_{r}\Theta_{*}I+2P_{r}\underline{A}_{r} -\beta \| C_{r}\| (\lambda_{\max}F)I-G^{2}-\beta \| D_{r}\| (\lambda_{\max }G)I -\beta \| |E_{r}|\|^{2}I -\varrho\beta \| |L_{r}|\|^{2}I-\sum_{j=1}^{N}\pi_{rj}P_{j} ]-\frac{(1+\beta )(\tau+1)\max\{(\lambda_{\max }F)^{2},(\lambda_{\max}G)^{2}\}}{\alpha}I\), and \(\widetilde{Q}=\operatorname{diag}(\widetilde{Q}_{1},\widetilde{Q}_{2}, \ldots ,\widetilde{Q}_{n})\) is a diagonal matrix. So the comparison matrix \(M(\underline{\Phi})=(\mu_{ij})\) of \(\underline {\Phi}\) is

Clearly, \(\mu_{ij}\geqslant\widehat{\Phi}_{ij}\) for all \(i,j=1,2,\ldots,n\). Hence, by Lemma 1.4, \(M(\underline{\Phi})\) is an M-matrix. By Definition 1.3, \(\underline{\Phi}\) is then an H-matrix with positive diagonal entries. Since it is also symmetric, it is positive definite, that is, \(\underline{\Phi}>0\). This proves (2.3) by way of (2.1). Similarly, (2.4) follows from condition (2.2). The proof is completed. □
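The last step of Lemma 2.2 uses the standard fact that a symmetric H-matrix with positive diagonal entries is positive definite (see [17]); the converse fails. The sketch below illustrates both directions on made-up symmetric matrices. It tests the H-matrix property through the comparison matrix of Definition 1.3, using the leading-principal-minor test for M-matrices, and tests positive definiteness through a Cholesky factorization. All helper names are illustrative.

```python
import math


def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n = len(a)
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        if a[p][c] == 0.0:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            d = -d
        d *= a[c][c]
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= f * a[c][k]
    return d


def is_h_matrix(a):
    """H-matrix test: the comparison matrix M(A), a Z-matrix by construction,
    must have all leading principal minors > 0."""
    n = len(a)
    comp = [[abs(a[i][j]) if i == j else -abs(a[i][j]) for j in range(n)] for i in range(n)]
    return all(det([row[:k] for row in comp[:k]]) > 0 for k in range(1, n + 1))


def is_pos_def(m):
    """Cholesky-based positive definiteness test for a symmetric matrix."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


PHI = [[5.0, -2.0, 1.0],     # symmetric, positive diagonal, H-matrix
       [-2.0, 4.0, 1.0],
       [1.0, 1.0, 3.0]]
NOT_H = [[1.0, 0.9, 0.9],    # symmetric and positive definite, but not an H-matrix
         [0.9, 1.0, 0.9],
         [0.9, 0.9, 1.0]]
print(is_h_matrix(PHI), is_pos_def(PHI))      # True True
print(is_h_matrix(NOT_H), is_pos_def(NOT_H))  # False True
```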

Theorem 2.3

Assume that (1.2)-(1.4) hold. Suppose, in addition, that there exist a sequence of positive definite diagonal matrices \(P_{r}\) (\(r\in S\)) and positive scalars β, \(\alpha_{r}\) (\(r\in S\)) such that the following LMI conditions hold:

Then system (1.1) is globally stochastically exponentially robustly stable, where

and α is a positive scalar with \(\alpha<\alpha_{r}\) for all \(r\in S\).

Proof

For each mode \(r\in S\), let \(P_{r}=\operatorname{diag}(p_{r1},\ldots,p_{rn})\) be a positive definite matrix, and consider the following Lyapunov-Krasovskii functional:

It follows from Lemma 2.1 that

From (1.3), we obtain

In addition, the assumptions on f, g, β, and \(C_{r}\), \(D_{r}\), \(E_{r}\), \(H_{r}\), \(L_{r}\), \(W_{r}\) yield the following inequalities:

Moreover, the constraints on α and β imply that

and

Then, applying the weak infinitesimal operator \(\mathcal{L}\) and using (2.9)-(2.20), we obtain

For sufficiently small \(\Delta t>0\), integrating (2.21) from t to \(t+\Delta t\) gives

Hence,

Let \(k_{1}=\min_{r\in S}\{\lambda_{\min}\Phi,\lambda_{\min}\Psi\} \) and \(k_{2}=\frac{(1+\beta )(\tau+1)\max\{(\lambda_{\max}F)^{2},(\lambda _{\max}G)^{2}\}}{\alpha}\). Conditions (2.1) and (2.2) are satisfied owing to (2.7)-(2.10) and the Schur complement (Lemma 1.6). Lemma 2.2 then shows that \(k_{1}\) and \(k_{2}\) satisfy all the conditions of Lemma 1.5. Since \(k_{1}\) and \(k_{2}\) are independent of the mode \(r\in S\), it follows from Lemma 1.5 that

where λ is the unique positive solution of the equation \(\lambda =k_{1}-k_{2}e^{\lambda\tau}\), and

where \(\beta=\max_{r\in S} (\lambda_{\max}P_{r} )\).

Moreover, (2.24) and (2.25) yield

from which one derives

By Definition 1.2, system (1.1) is globally stochastically exponentially robustly stable. The proof is completed. □

Remark 2.1

Theorem 3.3 of [9] (2015) uses M-matrix and H-matrix methods to give a stability criterion for the following neural network with discrete and distributed time delays ([9], (22)):

However, LMI criterion conditions are not easy to obtain with M-matrix and H-matrix methods alone. Motivated by some of the methods in [9], we employ the Schur complement technique to derive the LMI-based stability criterion of Theorem 2.3.

3 Example

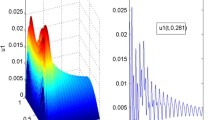

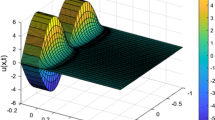

Consider two modes for the Markovian jumping system (1.1).

For Mode 1,

For Mode 2,

Let \(u=(u_{1},u_{2})^{T}\), \(v=(v_{1},v_{2})^{T}\in R^{2}\), \(x=(x_{1},x_{2})\in(0,10)\times (0,10)\subset R^{2}\), and then \(\lambda_{1}=0.02\pi^{2}=0.1974\) (see [22], Remark 2.5). Assume that \(\varrho=0.8\), \(\rho=0.7\), \(\tau=1.2\), and
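The value \(\lambda_{1}=0.02\pi^{2}\) follows from the separable formula \(\lambda_{1}=\sum_{k}(\pi/l_{k})^{2}\) for the Dirichlet Laplacian on a box \(\prod_{k}(0,l_{k})\). The sketch below evaluates this formula. It then cross-checks the result with inverse power iteration on a standard second-order finite-difference discretization in each coordinate; we chose this discretization for illustration, and the function names are hypothetical.

```python
import math


def box_lambda1(lengths):
    """lambda_1 of -Laplacian with Dirichlet data on prod_k (0, l_k): sum_k (pi / l_k)^2."""
    return sum((math.pi / l) ** 2 for l in lengths)


def thomas_solve(a, b, c, d):
    """Solve a tridiagonal system with constant sub-diagonal a, diagonal b, super-diagonal c."""
    n = len(d)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = c / b, d[0] / b
    for i in range(1, n):
        m = b - a * cp[i - 1]
        cp[i] = c / m
        dp[i] = (d[i] - a * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x


def fd_lambda1_1d(l, n=400, iters=50):
    """Smallest eigenvalue of the n-point finite-difference Dirichlet Laplacian on (0, l),
    via inverse power iteration followed by a Rayleigh quotient."""
    h = l / (n + 1)
    diag, off = 2.0 / h ** 2, -1.0 / h ** 2
    v = [1.0] * n
    for _ in range(iters):
        w = thomas_solve(off, diag, off, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    av = [diag * v[i]
          + off * ((v[i - 1] if i > 0 else 0.0) + (v[i + 1] if i < n - 1 else 0.0))
          for i in range(n)]
    return sum(p * q for p, q in zip(v, av))


lengths = [10.0, 10.0]                            # Omega = (0,10) x (0,10)
lam1 = box_lambda1(lengths)                       # equals 0.02 * pi^2
lam1_fd = sum(fd_lambda1_1d(l) for l in lengths)  # separable discretization
print(round(lam1, 4))  # 0.1974
```

The finite-difference value approaches \(\lambda_{1}\) from below as the grid is refined.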

Then \(\Lambda_{*}=0.004\) and \(\Theta_{*}=0.005\). Let \(\pi_{11}=-0.6\), \(\pi_{12}=0.6\), \(\pi_{21}=0.2\), and \(\pi_{22}=-0.2\). Taking \(\alpha=5\), we use the MATLAB LMI toolbox to solve the LMIs (2.7)-(2.10) and obtain the following feasible solution:

and \(\alpha_{1}=5.2048\), \(\alpha_{2}=5.3765\), \(\beta=6.1317\). Hence \(\alpha=5<\alpha_{1}\) and \(\alpha=5<\alpha_{2}\). By Theorem 2.3, system (1.1) is globally stochastically exponentially robustly stable (see Figures 1-4).

4 Conclusions

An LMI-based stability criterion can easily be checked with the MATLAB LMI toolbox, which is highly efficient and offers other advantages in large-scale engineering calculations. In this paper, we employ Lyapunov stability theory, M-matrix, H-matrix, and linear matrix inequality (LMI) techniques, and variational methods to obtain an LMI-based criterion for the stochastically exponential robust stability of Markovian jumping reaction-diffusion integro-differential equations with discrete and distributed time delays and uncertain parameters, whose physical and engineering background is BAM neural networks. Since LMI criterion conditions are not easy to obtain with M-matrix and H-matrix methods alone, the methods employed in this paper improve, to some extent, those of the previous related literature. Moreover, the numerical example in Section 3 illustrates the effectiveness of the proposed methods.

References

Zhu, Q, Li, X, Yang, X: Exponential stability for stochastic reaction-diffusion BAM neural networks with time-varying and distributed delays. Appl. Math. Comput. 217(13), 6078-6091 (2011)

Zhang, Z, Yang, Y, Huang, Y: Global exponential stability of interval general BAM neural networks with reaction-diffusion terms and multiple time-varying delays. Neural Netw. 24(5), 457-465 (2011)

Quan, Z, Huang, L, Yu, S, Zhang, Z: Novel LMI-based condition on global asymptotic stability for BAM neural networks with reaction-diffusion terms and distributed delays. Neurocomputing 136(20), 213-223 (2014)

Rao, R, Zhong, S, Wang, X: Delay-dependent exponential stability for Markovian jumping stochastic Cohen-Grossberg neural networks with p-Laplace diffusion and partially known transition rates via a differential inequality. Adv. Differ. Equ. 2013, 183 (2013)

Zhao, K: Global robust exponential synchronization of BAM recurrent FNNs with infinite distributed delays and diffusion terms on time scales. Adv. Differ. Equ. 2014, 317 (2014)

Zhu, X, Wang, Y: Delay-dependent exponential stability for neural networks with discrete and distributed time-varying delays. Phys. Lett. A 373(44), 4066-4072 (2009)

Tian, JK, Zhong, SM: New delay-dependent exponential stability criteria for neural networks with discrete and distributed time-varying delays. Neurocomputing 74(17), 3365-3375 (2011)

Shi, L, Zhu, H, Zhong, SM, Hou, LY: Globally exponential stability for neural networks with time-varying delays. Appl. Math. Comput. 219(21), 10487-10498 (2013)

Chen, H, Zhong, S, Shao, J: Exponential stability criterion for interval neural networks with discrete and distributed delays. Appl. Math. Comput. 250(1), 121-130 (2015)

Du, Y, Zhong, S, Zhou, N: Global asymptotic stability of Markovian jumping stochastic Cohen-Grossberg BAM neural networks with discrete and distributed time-varying delays. Appl. Math. Comput. 243(15), 624-636 (2014)

Zhu, Q, Rakkiyappan, R, Chandrasekar, A: Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136(20), 136-151 (2014)

Balasubramaniam, P, Rakkiyappan, R, Sathy, R: Delay dependent stability results for fuzzy BAM neural networks with Markovian jumping parameters. Expert Syst. Appl. 38(1), 121-130 (2011)

Rao, R, Zhong, S, Wang, X: Stochastic stability criteria with LMI conditions for Markovian jumping impulsive BAM neural networks with mode-dependent time-varying delays and nonlinear reaction-diffusion. Commun. Nonlinear Sci. Numer. Simul. 19(1), 258-273 (2014)

Vadivel, P, Sakthivel, R, Mathiyalagan, K, Thangaraj, P: New passivity criteria for fuzzy BAM neural networks with Markovian jumping parameters and time-varying delays. Rep. Math. Phys. 71(1), 69-91 (2013)

Maz’ya, V, Shaposhnikova, TO: Sobolev Spaces: With Applications to Elliptic Partial Differential Equations, 2nd, augmented edn. Springer, Berlin (2011)

Wang, L, Zhang, Z, Wang, Y: Stochastic exponential stability of the delayed reaction-diffusion recurrent neural networks with Markovian jumping parameters. Phys. Lett. A 372(18), 3201-3209 (2008)

Horn, RA, Johnson, CR: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

Shao, JL, Huang, TZ: A new result on global exponential robust stability of neural networks with time-varying delays. J. Control Theory Appl. 7(3), 315-320 (2009)

Boyd, SP, El Ghaoui, L, Feron, E, Balakrishnan, V: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

Drábek, P, Manásevich, R: On the closed solution to some nonhomogeneous eigenvalue problems with p-Laplacian. Differ. Integral Equ. 12(6), 773-788 (1999)

Lindqvist, P: On the equation \(\operatorname{div}(|\nabla u|^{p-2}\nabla u) +\lambda|u|^{p-2}u=0\). Proc. Am. Math. Soc. 109, 159-164 (1990)

Pan, J, Zhong, S: Dynamic analysis of stochastic reaction-diffusion Cohen-Grossberg neural networks with delays. Adv. Differ. Equ. 2009, 410823 (2009)

Acknowledgements

We thank the anonymous reviewers very much for their suggestions, which improved the quality of this paper. This work is supported by the Scientific Research Fund of Science Technology Department of Sichuan Province (2012JYZ010), and by the Scientific Research Fund of Sichuan Provincial Education Department (14ZA0274, 12ZB349).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

XY wrote the original manuscript, carrying out the main part of this work. SZ examined the original manuscript in detail before submission. XW and RR are in charge of the correspondence. All authors typed, read, and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yang, X., Wang, X., Zhong, S. et al. Robust stability analysis for discrete and distributed time-delays Markovian jumping reaction-diffusion integro-differential equations with uncertain parameters. Adv Differ Equ 2015, 186 (2015). https://doi.org/10.1186/s13662-015-0526-3
