Abstract

In this paper, new stochastic global exponential stability criteria for delayed impulsive Markovian jumping p-Laplace diffusion Cohen-Grossberg neural networks (CGNNs) with partially unknown transition rates are derived by means of a novel Lyapunov-Krasovskii functional approach, a differential inequality lemma, and the linear matrix inequality (LMI) technique. The methods employed differ to some extent from those of the previous related literature. Moreover, a numerical example illustrates the effectiveness and reduced conservatism of the proposed method, as reflected in a significant improvement in the allowable upper bounds of the time delays.

1 Introduction

Cohen and Grossberg originally proposed the Cohen-Grossberg neural networks (CGNNs) in [1]. Since then, CGNNs have found extensive applications in pattern recognition, image and signal processing, quadratic optimization, and artificial intelligence [2–6]. These applications, however, depend heavily on the stability of the networks, which is also a crucial consideration in their design. In practice, both time delays and impulses may cause undesirable dynamic behaviors such as oscillation and instability [2–12]. Therefore, the stability analysis of delayed impulsive CGNNs has become a topic of great theoretical and practical importance in recent years [2–6].

Recently, CGNNs with Markovian jumping parameters have been extensively studied, because systems with Markovian jumping parameters are useful in modeling abrupt phenomena such as random failures, operation at different points of a nonlinear plant, and changes in the interconnections of subsystems [13–18]. Noise disturbance is unavoidable in real nervous systems and is a major source of instability and poor performance in neural networks. A neural network can be stabilized or destabilized by certain stochastic inputs. Synaptic transmission in real neural networks can be viewed as a noisy process introduced by random fluctuations in the release of neurotransmitters and by other probabilistic causes. Hence, noise disturbance should also be taken into consideration when discussing the stability of neural networks [14–18].

On the other hand, diffusion phenomena cannot be avoided in the real world. In much of the previous literature, diffusion was modeled by the linear Laplacian for simplicity [2, 19–21]. However, diffusion behavior is so complicated that nonlinear reaction-diffusion models were considered in several papers [3, 22–25], and the nonlinear p-Laplace diffusion was used to simulate some diffusion behaviors in [3]. In addition, aging of electronic components, external disturbances, and parameter perturbations always result in partially unknown Markovian transition rates as a side effect [26, 27]. To the best of our knowledge, stochastic stability for delayed impulsive Markovian jumping p-Laplace diffusion CGNNs has rarely been considered. Moreover, stochastic exponential stability remains a key concern owing to its importance in designing a neural network, and this situation motivates the present study. In this paper, we therefore investigate stochastic global exponential stability criteria for the above-mentioned CGNNs by means of the linear matrix inequality (LMI) approach.

2 Model description and preliminaries

The stability of the following Cohen-Grossberg neural network was studied in the previous literature via a differential inequality (see, e.g., [2]).

where , , .

In this paper, we always assume for some rational reason (see [3]). According to [[2], Definition 2.1], a constant vector is said to be an equilibrium point of system (2.1) if

Let , then system (2.1) with can be transformed into

where , , ,

and

Then, according to [[2], Definition 2.1], is an equilibrium point of system (2.3). Hence, from here on we need only consider the stability of the null solution of Cohen-Grossberg neural networks. Naturally, we propose the following hypotheses on system (2.3) with .

(A1) is a bounded, positive, and continuous diagonal matrix, i.e., there exist two positive diagonal matrices and such that .

(A2) such that there exists a positive definite matrix satisfying

(A3) There exist constant diagonal matrices , , with , , , such that

Remark 2.1 In many previous works (see, e.g., [2, 3]), the authors assumed

However, , in (A3) need not be positive constants, and hence the functions f, g are more general.

Remark 2.2 It is obvious from (2.4) that , and then .

Since stochastic noise disturbance is always unavoidable in practical neural networks, it may be necessary to consider the stability of the null solution of the following Markovian jumping CGNNs:

The initial conditions and the boundary conditions are given by

and

Here is a given scalar, and is a bounded domain with a smooth boundary ∂ Ω of class by Ω, , where is the state variable of the i th neuron and the j th neuron at time t and in a space variable x. Matrix satisfies for all j, k, , where the smooth functions are diffusion operators. denotes the Hadamard product of matrix and (see [13] or [28] for details).
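The diffusion term in models of this kind is built on the p-Laplacian. As a hedged illustration only, the following sketch evaluates the one-dimensional operator d/dx(|du/dx|^(p-2) du/dx) on a uniform grid with zero-flux (Neumann) ends. The function name `p_laplacian_1d`, the finite-difference scheme, and the grid are assumptions made for this example and are not taken from the paper; the diffusion coefficient matrix and its Hadamard product are omitted, which corresponds to a unit diffusion coefficient.

```python
def p_laplacian_1d(u, h, p):
    """Finite-difference sketch of d/dx(|u_x|^(p-2) u_x) on a uniform grid.

    u : list of grid values, h : grid spacing, p : exponent (p > 1).
    Zero flux is imposed at both ends (Neumann boundary condition).
    """
    n = len(u)
    # flux[k] approximates |u_x|^(p-2) u_x at the midpoint between u[k-1] and u[k];
    # flux[0] and flux[n] stay 0.0, which encodes the zero-flux boundary.
    flux = [0.0] * (n + 1)
    for k in range(1, n):
        g = (u[k] - u[k - 1]) / h
        # Guard g == 0 so that exponents p < 2 do not divide by zero.
        flux[k] = abs(g) ** (p - 2) * g if g != 0.0 else 0.0
    return [(flux[k + 1] - flux[k]) / h for k in range(n)]


if __name__ == "__main__":
    h = 0.1
    u = [(k * h) ** 2 for k in range(11)]
    # For p = 2 the operator reduces to the ordinary Laplacian.
    print(p_laplacian_1d(u, h, 2.0)[1:-1])
    print(p_laplacian_1d(u, h, 3.0)[1:-1])
```

With p = 2 the interior values reduce to the usual second difference, which is one way to see that the linear Laplacian diffusion is a special case of the p-Laplace diffusion.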

Denote , where is scalar standard Brownian motion defined on a complete probability space with a natural filtration . Noise perturbations is a Borel measurable function. is a right-continuous Markov process on the probability space, which takes values in the finite space with generator given by

where is a transition probability rate from i to j () and , , and . In addition, the transition rates of the Markovian chain are considered to be partially available, namely some elements in the transition rate matrix Π are time-invariant but unknown. For instance, a system with three operation modes may have the transition rate matrix Π as follows:

where ‘?’ represents the inaccessible element. For notational clarity, we denote and for a given . Denote . The time-varying delay satisfies with . , , where represents an amplification function, and is an appropriately behaved function. , and are denoted by , , with , , respectively, and , denote the connection strengths of the kth neuron on the lth neuron in the mode , respectively. is a symmetric matrix for any given k, i. Denote vector functions , , where , are neuron activation functions of the jth unit at time t and space variable x.
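The split of each row of Π into known and unknown entries can be sketched in a few lines. This is a minimal illustration under stated assumptions: the matrix `PI_EXAMPLE` is hypothetical (the matrix of the original text is not reproduced here), `None` stands for the inaccessible '?' entries, and the helper names are invented for this example. The admissibility check uses only the defining properties of a generator: nonnegative off-diagonal rates and zero row sums.

```python
UNKNOWN = None  # stands for an inaccessible '?' entry

# Hypothetical three-mode transition rate matrix with unknown entries.
PI_EXAMPLE = [
    [-0.8, UNKNOWN, UNKNOWN],
    [UNKNOWN, UNKNOWN, 0.3],
    [0.4, 0.5, -0.9],
]


def split_known_unknown(Pi):
    """Return, for every mode i, the column indices whose rates are known / unknown."""
    known = [[j for j, q in enumerate(row) if q is not UNKNOWN] for row in Pi]
    unknown = [[j for j, q in enumerate(row) if q is UNKNOWN] for row in Pi]
    return known, unknown


def row_is_admissible(row, i, tol=1e-9):
    """Check that the known entries of row i are compatible with a generator."""
    known_off = [q for j, q in enumerate(row) if j != i and q is not UNKNOWN]
    if any(q < 0 for q in known_off):
        return False  # off-diagonal rates must be nonnegative
    diag = row[i]
    if diag is UNKNOWN:
        return True  # nothing more can be checked
    if diag > 0:
        return False  # the diagonal entry must be nonpositive
    if all(q is not UNKNOWN for q in row):
        return abs(sum(row)) <= tol  # fully known row must sum to zero
    # Partially known row: known off-diagonal rates cannot exceed -pi_ii.
    return sum(known_off) <= -diag + tol


if __name__ == "__main__":
    known, unknown = split_known_unknown(PI_EXAMPLE)
    print("known index sets:  ", known)
    print("unknown index sets:", unknown)
    print([row_is_admissible(r, i) for i, r in enumerate(PI_EXAMPLE)])
```

A row whose unknown index set is empty corresponds to a mode with completely known transition rates.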

In addition, we always assume that and for all , where and represent the left-hand and right-hand limits of at . Each () is an impulsive moment satisfying and . The boundary condition (2.5c) is the Dirichlet boundary condition or the Neumann boundary condition, as defined in (6a) of [13]. As in (i)-(xii) of [13], we adopt the following standard notation.

Throughout this paper, we assume (A1)-(A3) and the following conditions hold:

(A4) for all .

Remark 2.3 The condition is not too stringent for a positive semi-definite matrix . Indeed, if all , .

(A5) There exist symmetrical matrices with , such that for any mode ,

As in [[2], Definition 2.1], we can see from (A4) that system (2.5) has the null solution as its equilibrium point.

Lemma 2.1 [[13], Lemma 6]

Let be a positive definite matrix for a given i, and v be a solution of system (2.5). Then we have

Lemma 2.2 (see [11])

Consider the following differential inequality:

where , , and is continuous except , , where it has jump discontinuities. The sequence satisfies , and . Suppose that

(1) ;

(2) , where , and there exist constants , such that

where , is the unique solution of the equation ;

then

In addition, if , then

3 Main results

Theorem 3.1 Assume that . If the following conditions are satisfied:

(C1) there exist a sequence of positive scalars , () and positive definite diagonal matrices (), , and Q such that the following LMI conditions hold:

where for all t, and

(C2) ;

(C3) there exists a constant such that , and , where with and , and is the unique solution of the equation with and ,

then the null solution of system (2.5) is stochastically exponentially stable with the convergence rate .

Proof Consider the Lyapunov-Krasovskii functional

where

From (A3), we have

Denote . Let ℒ be the weak infinitesimal operator such that for any given . Next, it follows by Lemma 2.1, (A1)-(A5), (3.5) and (3.6) that for ,

where is a solution for system (2.5). □

Remark 3.1 Here, we employ some new methods different from those of [[3], (2.4)-(2.6)] in the proof of [[3], Theorem 2.1] and [[2], Theorem 3.1]. Hence, our LMI condition (3.1) is more effective than the LMI condition (2.1) in [[3], Theorem 2.1] even when system (2.5) is reduced to system (3.11) (see Remark 3.3 below).

It is not difficult to conclude from the Itô formula that for ,

Owing to , we can derive

It follows from (C3) that , where with and , and is the unique solution of the equation , satisfying . Moreover, combining (3.8), (3.9), (C2), (C3) and Lemma 2.2 results in

Therefore, we can see by [[13], Definition 2.1] that the null solution of system (2.5) is globally stochastically exponentially stable in the mean square with the convergence rate .
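Mean-square exponential stability can be illustrated on a much simpler object than system (2.5). The following is a toy sketch, not the paper's model: it assumes the scalar linear Itô equation dv = -a v dt + σ v dW, for which E[v(t)^2] = v0^2 exp((σ^2 - 2a) t), so the null solution is exponentially stable in the mean square exactly when 2a > σ^2. All names and parameter values are hypothetical.

```python
import math
import random


def second_moment_exact(v0, a, sigma, t):
    """E[v(t)^2] for dv = -a v dt + sigma v dW with v(0) = v0."""
    return v0 ** 2 * math.exp((sigma ** 2 - 2.0 * a) * t)


def is_ms_stable(a, sigma):
    """Mean-square exponential stability of the toy equation: 2a > sigma^2."""
    return 2.0 * a > sigma ** 2


def second_moment_mc(v0, a, sigma, t, n_paths, rng):
    """Monte Carlo estimate of E[v(t)^2] using the exact solution formula
    v(t) = v0 * exp((-a - sigma^2 / 2) t + sigma W_t), W_t ~ N(0, t)."""
    total = 0.0
    for _ in range(n_paths):
        w = math.sqrt(t) * rng.gauss(0.0, 1.0)
        v = v0 * math.exp((-a - 0.5 * sigma ** 2) * t + sigma * w)
        total += v * v
    return total / n_paths


if __name__ == "__main__":
    rng = random.Random(1)
    a, sigma = 1.0, 0.5  # hypothetical values with 2a > sigma^2
    for t in (0.5, 1.0, 2.0):
        print(t, second_moment_exact(1.0, a, sigma, t),
              second_moment_mc(1.0, a, sigma, t, 20000, rng))
```

In this toy case the mean-square decay rate is 2a - σ^2, which shows how the noise intensity eats into the rate supplied by the deterministic part.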

Remark 3.2 Although the employed Lyapunov-Krasovskii functional is simple, together with the condition (A3) it simplifies the proof process. Moreover, the obtained LMI-based criterion is more effective and less conservative than [[3], Theorem 2.1], which will be shown in a numerical example of Remark 3.3.

Moreover, if Markovian jumping phenomena are ignored, system (2.5) is reduced to the following system:

where M is a symmetrical matrix.

In order to compare with the main result of [3], we deduce the following corollary from Theorem 3.1.

Corollary 3.2 If the following conditions are satisfied:

(C1*) there exist positive scalars , and positive definite diagonal matrices P, , and Q such that the following LMI conditions hold:

where

(C2*) ;

(C3*) there exists a constant such that , and , where with and , and is the unique solution of the equation with and ,

then the null solution of system (3.11) is stochastically globally exponentially stable in the mean square with the convergence rate .

Remark 3.3 In [[3], Theorem 2.1], in (A5) is assumed to be 0. In addition, are also assumed to be 0. In [[3], Theorem 2.1], if there exist positive definite diagonal matrices , such that the following LMI holds:

and two other conditions similar to (C2*) and (C3*) hold, then the null solution of system (3.11) is stochastically globally exponentially stable in the mean square.

Note that in () has an adverse effect on the possible negative definiteness of the matrix when .

when .

Indeed, we may consider system (3.11) with the following parameter:

and , .

Now we use the Matlab LMI toolbox to solve the LMI (C1*), and the result is , which implies that the LMI () is infeasible, let alone the other two conditions (i.e., (C2) and (C3) in [[3], Theorem 2.1]). However, by solving the LMIs of Corollary 3.2 (see (3.3*)), one can obtain and , ,

As pointed out in Remark 3.1 and Remark 3.2, our Theorem 3.1 and its Corollary 3.2 are more feasible and less conservative than [[3], Theorem 2.1] as a result of our new methods employed in this paper.
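Whatever solver is used, a candidate solution of an LMI can be checked independently by testing the definiteness of the resulting symmetric matrix. The following is a minimal sketch in plain Python, assuming the matrix is given as a list of rows; it uses the fact that a symmetric matrix is positive definite exactly when its Cholesky factorization exists. The function names and the sample matrices are hypothetical and do not come from the paper.

```python
import math


def is_positive_definite(A, tol=1e-12):
    """Return True if the symmetric matrix A (list of rows) is positive definite.

    The test attempts a Cholesky factorization A = L L^T and fails as soon as
    a pivot is not strictly positive (up to the tolerance tol).
    """
    n = len(A)
    for i in range(n):
        for j in range(i):
            if abs(A[i][j] - A[j][i]) > tol:
                return False  # not symmetric
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = A[i][i] - s
                if d <= tol:
                    return False  # non-positive pivot
                L[i][i] = math.sqrt(d)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return True


def is_negative_definite(A, tol=1e-12):
    """A is negative definite exactly when -A is positive definite."""
    return is_positive_definite([[-x for x in row] for row in A], tol)


if __name__ == "__main__":
    candidate = [[-2.0, 1.0], [1.0, -2.0]]  # hypothetical LMI left-hand side
    print(is_negative_definite(candidate))
```

A check of this kind only verifies a given candidate; finding the decision variables still requires an LMI or semidefinite programming solver.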

Remark 3.4 Both the conclusion and the proof methods of Theorem 3.1 differ from those of previous related results in the literature (see, e.g., [2, 3]). Below, we give a numerical example to show that Theorem 3.1 is more effective and less conservative than some existing results, owing to a significant improvement in the allowable upper bounds of the delays.

4 A numerical example

Example 1 Consider the following CGNN under the Neumann boundary condition:

with the initial condition

and the Neumann boundary condition (or the Dirichlet boundary condition), where , , , , , , , , , and

The two cases of the transition rate matrices are considered as follows:

In Case (1), (the empty set), and hence and for all .
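When every transition rate is known, as in Case (1), sample paths of the mode process can be simulated directly from the generator. The sketch below uses the standard construction of a continuous-time Markov chain: the holding time in mode i is exponential with rate -π_ii, and the next mode j is chosen with probability π_ij / (-π_ii). The generator `PI_DEMO` is a hypothetical three-mode matrix chosen for illustration, since the matrices of this example are not reproduced in the text.

```python
import random

# Hypothetical generator: nonnegative off-diagonal rates, zero row sums.
PI_DEMO = [
    [-0.6, 0.4, 0.2],
    [0.2, -0.7, 0.5],
    [0.5, 0.3, -0.8],
]


def simulate_ctmc(Pi, r0, t_end, rng):
    """Simulate the mode process on [0, t_end); return a list of (jump time, mode)."""
    t, r = 0.0, r0
    path = [(0.0, r0)]
    while True:
        rate = -Pi[r][r]
        if rate <= 0.0:
            break  # absorbing mode, no further jumps
        t += rng.expovariate(rate)  # exponential holding time
        if t >= t_end:
            break
        # Pick the next mode j != r with probability Pi[r][j] / rate.
        u = rng.random() * rate
        acc = 0.0
        nxt = None
        for j, q in enumerate(Pi[r]):
            if j == r:
                continue
            acc += q
            if u < acc:
                nxt = j
                break
        if nxt is None:
            # Rounding guard: fall back to the last reachable mode.
            nxt = max(j for j, q in enumerate(Pi[r]) if j != r and q > 0)
        r = nxt
        path.append((t, r))
    return path


if __name__ == "__main__":
    for t, mode in simulate_ctmc(PI_DEMO, 0, 10.0, random.Random(0)):
        print(f"t = {t:.3f}: mode {mode}")
```

With partially unknown rates, as in Case (2), such a simulation is possible only after the unknown entries have been fixed to admissible values.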

Now we use the Matlab LMI toolbox to solve the LMIs (3.1)-(3.3) for Case (1), and the result shows , and , , , , , ,

Next, we shall prove that the above data , , and Q make the conditions (C2) and (C3) hold, respectively.

Indeed, by computing, we have , , , , , , , and then , . Then , and hence (C2) holds.

Let , , and . Solve , and hence . Moreover, it follows by direct computation that . Owing to , we have . Thereby, a direct computation yields and .
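The "solve" step above amounts to finding the root of a scalar transcendental equation. The equation itself is not preserved in this text; the sketch below assumes it has the classical Halanay-type form λ = a - b e^{λτ} with a > b ≥ 0 and τ ≥ 0, which is the usual characteristic equation for delay differential inequalities of this kind. Under that assumption the function λ - a + b e^{λτ} is strictly increasing, negative at λ = 0, and nonnegative at λ = a, so bisection on [0, a] finds the unique root. The function name and the numerical values are hypothetical.

```python
import math


def halanay_rate(a, b, tau, tol=1e-12):
    """Unique root lam in (0, a] of lam = a - b * exp(lam * tau), by bisection.

    Assumes a > b >= 0 and tau >= 0 (assumed form, see the lead-in).
    """
    if not (a > b >= 0.0 and tau >= 0.0):
        raise ValueError("require a > b >= 0 and tau >= 0")
    lo, hi = 0.0, a
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        # g(mid) = mid - a + b * exp(mid * tau) is strictly increasing in mid.
        if mid - a + b * math.exp(mid * tau) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical values; a larger delay gives a smaller decay rate.
    for tau in (0.0, 0.5, 1.0, 2.0):
        print(tau, halanay_rate(2.0, 1.0, tau))
```

The printed values decrease as τ grows, which matches the intuition that longer delays leave less room for exponential decay.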

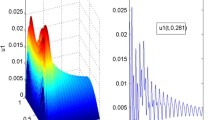

Therefore, it follows from Theorem 3.1 that the null solution of system (4.1) is stochastically exponentially stable with the convergence rate (see Figures 1-3).

In Case (2), it is obvious that , , . Solving LMIs (3.1)-(3.3) for Case (2), one can obtain , and , , , , , ,

Next, we shall prove that the above data , , and Q make the conditions (C2) and (C3) hold, respectively.

Indeed, we can get by direct computations that , , , , , , , and then , , and hence . So, the condition (C2) in Theorem 3.1 holds.

Similarly, let , , and . Solve , and hence . Moreover, it follows by direct computation that . Owing to , we have . Thereby, a direct computation yields and .

Therefore, it follows from Theorem 3.1 that the null solution of system (4.1) is stochastically exponentially stable with the convergence rate .

Table 1 shows that the convergence rate decreases when the number of unknown elements increases.

Remark 4.1 Table 1 shows that the null solution of system (4.1) (or (2.5)) is stochastically globally exponentially stable in the mean square for the maximum allowable upper bounds . Hence, as pointed out in Remark 3.3, the approach developed in Theorem 3.1 is more effective and less conservative than some existing results ([[3], Theorem 2.1], [29]).

5 Conclusions

In this paper, new LMI-based stochastic global exponential stability criteria are obtained for delayed impulsive Markovian jumping reaction-diffusion Cohen-Grossberg neural networks with partially unknown transition rates and nonlinear p-Laplace diffusion. Their feasibility can be easily checked with the Matlab LMI toolbox. Moreover, a numerical example illustrates the effectiveness and reduced conservatism of the proposed method through a significant improvement in the allowable upper bounds of the time delays. As further work, we are considering how to make the nonlinear p-Laplace diffusion term play a positive role in the stability criteria, which remains an open and challenging problem.

References

[1] Cohen M, Grossberg S: Absolute stability and global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans. Syst. Man Cybern. 1983, 13: 815-826.

[2] Zhang X, Wu S, Li K: Delay-dependent exponential stability for impulsive Cohen-Grossberg neural networks with time-varying delays and reaction-diffusion terms. Commun. Nonlinear Sci. Numer. Simul. 2011, 16: 1524-1532. doi:10.1016/j.cnsns.2010.06.023

[3] Wang XR, Rao RF, Zhong SM: LMI approach to stability analysis of Cohen-Grossberg neural networks with p-Laplace diffusion. J. Appl. Math. 2012, 2012: Article ID 523812. doi:10.1155/2012/523812

[4] Tian JK, Zhong SM: Improved delay-dependent stability criteria for neural networks with two additive time-varying delay components. Neurocomputing 2012, 77: 114-119. doi:10.1016/j.neucom.2011.08.027

[5] Kao YG, Wang CH, Zhang L: Delay-dependent robust exponential stability of impulsive Markovian jumping reaction-diffusion Cohen-Grossberg neural networks. Neural Process. Lett. 2012. doi:10.1007/s11063-012-9269-2

[6] Liu ZX, Yu J, Xu DY: Vector Wirtinger-type inequality and the stability analysis of delayed neural network. Commun. Nonlinear Sci. Numer. Simul. 2013, 18: 1246-1257. doi:10.1016/j.cnsns.2012.09.027

[7] Zhou X, Zhong SM: Riccati equations and delay-dependent BIBO stabilization of stochastic systems with mixed delays and nonlinear perturbations. Adv. Differ. Equ. 2010, 2010: Article ID 494607. doi:10.1155/2010/494607

[8] Li DS, He DH, Xu QY: Mean square exponential stability of impulsive stochastic reaction-diffusion Cohen-Grossberg neural networks with delays. Math. Comput. Simul. 2012, 82: 1531-1543. doi:10.1016/j.matcom.2011.11.007

[9] Tian JK, Zhong SM: New delay-dependent exponential stability criteria for neural networks with discrete and distributed time-varying delays. Neurocomputing 2011, 74: 3365-3375. doi:10.1016/j.neucom.2011.05.024

[10] Rao RF, Pu ZL: Stability analysis for impulsive stochastic fuzzy p-Laplace dynamic equations under Neumann or Dirichlet boundary condition. Bound. Value Probl. 2013, 2013: Article ID 133. doi:10.1186/1687-2770-2013-133

[11] Yue D, Xu SF, Liu YQ: Differential inequality with delay and impulse and its applications to design robust control. Control Theory Appl. 1999, 16: 519-524.

[12] Liu ZX, Lü S, Zhong SM, Ye M: Improved robust stability criteria of uncertain neutral systems with mixed delays. Abstr. Appl. Anal. 2009, 2009: Article ID 294845. doi:10.1155/2009/294845

[13] Rao RF, Wang XR, Zhong SM, Pu ZL: LMI approach to exponential stability and almost sure exponential stability for stochastic fuzzy Markovian-jumping Cohen-Grossberg neural networks with nonlinear p-Laplace diffusion. J. Appl. Math. 2013, 2013: Article ID 396903. doi:10.1155/2013/396903

[14] Kao YG, Guo JF, Wang CH, Sun XQ: Delay-dependent robust exponential stability of Markovian jumping reaction-diffusion Cohen-Grossberg neural networks with mixed delays. J. Franklin Inst. 2012, 349: 1972-1988. doi:10.1016/j.jfranklin.2012.04.005

[15] Zhu Q, Cao J: Stability analysis for stochastic neural networks of neutral type with both Markovian jump parameters and mixed time delays. Neurocomputing 2010, 73: 2671-2680. doi:10.1016/j.neucom.2010.05.002

[16] Zhu Q, Yang X, Wang H: Stochastically asymptotic stability of delayed recurrent neural networks with both Markovian jump parameters and nonlinear disturbances. J. Franklin Inst. 2010, 347: 1489-1510. doi:10.1016/j.jfranklin.2010.07.002

[17] Zhu Q, Cao J: Stochastic stability of neural networks with both Markovian jump parameters and continuously distributed delays. Discrete Dyn. Nat. Soc. 2009, 2009: Article ID 490515. doi:10.1155/2009/490515

[18] Zhu Q, Cao J: Robust exponential stability of Markovian jump impulsive stochastic Cohen-Grossberg neural networks with mixed time delays. IEEE Trans. Neural Netw. 2010, 21: 1314-1325.

[19] Liang X, Wang LS: Exponential stability for a class of stochastic reaction-diffusion Hopfield neural networks with delays. J. Appl. Math. 2012, 2012: Article ID 693163. doi:10.1155/2012/693163

[20] Zhang YT: Asymptotic stability of impulsive reaction-diffusion cellular neural networks with time-varying delays. J. Appl. Math. 2012, 2012: Article ID 501891. doi:10.1155/2012/501891

[21] Abdelmalek S: Invariant regions and global existence of solutions for reaction-diffusion systems with a tridiagonal matrix of diffusion coefficients and nonhomogeneous boundary conditions. J. Appl. Math. 2007, 2007: Article ID 12375. doi:10.1155/2007/12375

[22] Higham DJ, Sardar T: Existence and stability of fixed points for a discretised nonlinear reaction-diffusion equation with delay. Appl. Numer. Math. 1995, 18: 155-173. doi:10.1016/0168-9274(95)00051-U

[23] Baranwal VK, Pandey RK, Tripathi MP, Singh OP: An analytic algorithm for time fractional nonlinear reaction-diffusion equation based on a new iterative method. Commun. Nonlinear Sci. Numer. Simul. 2012, 17: 3906-3921. doi:10.1016/j.cnsns.2012.02.015

[24] Meral G, Tezer-Sezgin M: The comparison between the DRBEM and DQM solution of nonlinear reaction-diffusion equation. Commun. Nonlinear Sci. Numer. Simul. 2011, 16: 3990-4005. doi:10.1016/j.cnsns.2011.02.008

[25] Liang F: Blow-up and global solutions for nonlinear reaction-diffusion equations with nonlinear boundary condition. Appl. Math. Comput. 2011, 218: 3993-3999. doi:10.1016/j.amc.2011.10.021

[26] Tian J, Li Y, Zhao J, Zhong S: Delay-dependent stochastic stability criteria for Markovian jumping neural networks with mode-dependent time-varying delays and partially known transition rates. Appl. Math. Comput. 2012, 218: 5769-5781. doi:10.1016/j.amc.2011.11.087

[27] Sathy R, Balasubramaniam P: Stability analysis of fuzzy Markovian jumping Cohen-Grossberg BAM neural networks with mixed time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 2011, 16: 2054-2064. doi:10.1016/j.cnsns.2010.08.012

[28] Rao RF, Zhong SM, Wang XR: Stochastic stability criteria with LMI conditions for Markovian jumping impulsive BAM neural networks with mode-dependent time-varying delays and nonlinear reaction-diffusion. Commun. Nonlinear Sci. Numer. Simul. 2013, in press. doi:10.1016/j.cnsns.2013.05.024

[29] Liu HY, Ou Y, Hu J, Liu TT: Delay-dependent stability analysis for continuous-time BAM neural networks with Markovian jumping parameters. Neural Netw. 2010, 23: 315-321. doi:10.1016/j.neunet.2009.12.001

Acknowledgements

The authors would like to thank the editor and the anonymous referees for their detailed comments and valuable suggestions which considerably improved the presentation of this paper. This work was supported in part by the National Basic Research Program of China (2010CB732501), the Scientific Research Fund of Science Technology Department of Sichuan Province (2012JYZ010), and the Scientific Research Fund of Sichuan Provincial Education Department (12ZB349).

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

RR completed the proof and wrote the initial draft. SZ and XW gave some suggestions on the amendment. RR then finalized the manuscript. Correspondence was mainly done by RR. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Rao, R., Zhong, S. & Wang, X. Delay-dependent exponential stability for Markovian jumping stochastic Cohen-Grossberg neural networks with p-Laplace diffusion and partially known transition rates via a differential inequality. Adv Differ Equ 2013, 183 (2013). https://doi.org/10.1186/1687-1847-2013-183


DOI: https://doi.org/10.1186/1687-1847-2013-183