Abstract

The split feasibility problem (SFP) is to find a point \(x\in C\) such that \(Ax\in Q\), where C and Q are nonempty closed convex subsets of Hilbert spaces \(H_{1}\) and \(H_{2}\), respectively, and \(A:H_{1}\rightarrow H_{2}\) is a bounded linear operator. Byrne’s CQ algorithm is an effective method for solving the SFP, but it requires the operator norm \(\|A\|\), which is sometimes difficult to compute. López et al. introduced the stepsize \(\lambda_{n}=\frac{\rho_{n}f(x_{n})}{\|\nabla f(x_{n})\| ^{2}}\), \(0<\rho_{n}<4\), which avoids computing \(\|A\|\), but they obtained only weak convergence theorems. To overcome these drawbacks, in this paper we propose a regularized CQ algorithm that does not require \(\|A\|\), use it to find the minimum-norm solution of the SFP, and obtain a strong convergence theorem.

1 Introduction

Let \(H_{1}\) and \(H_{2}\) be real Hilbert spaces and let C and Q be nonempty closed convex subsets of \(H_{1}\) and \(H_{2}\), and let \(A:H_{1}\rightarrow H_{2}\) be a bounded linear operator. Let \(\mathbb {N}\) and \(\mathbb{R}\) denote the sets of positive integers and real numbers.

In 1994, Censor and Elfving [1] introduced the split feasibility problem (SFP) in finite-dimensional Hilbert spaces. In infinite-dimensional Hilbert spaces, it can be formulated as

\[\text{find } x\in C \text{ such that } Ax\in Q, \tag{1.1}\]

where C and Q are nonempty closed convex subsets of \(H_{1}\) and \(H_{2}\), and \(A:H_{1}\rightarrow H_{2}\) is a bounded linear operator. Suppose that SFP (1.1) is solvable, and let S denote its solution set. The SFP is widely applied in signal processing, image reconstruction and biomedical engineering [2–4].

Many authors have studied SFP (1.1) [5–17], and related algorithms have been developed for the split equality fixed point problem and for minimization problems [18–20]. Byrne’s CQ algorithm is an effective method for solving SFP (1.1). It generates a sequence \(\{x_{n}\}\) by the formula

\[x_{n+1}=P_{C}\bigl(x_{n}-\lambda_{n}A^{*}(I-P_{Q})Ax_{n}\bigr), \tag{1.2}\]

where the parameters \(\lambda_{n}\in(0,\frac{2}{\|A\|^{2}})\), and \(P_{C}: H_{1}\rightarrow C\) and \(P_{Q}: H_{2} \rightarrow Q\) are the orthogonal projections onto C and Q.
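A minimal finite-dimensional sketch of the CQ iteration may help fix ideas. It assumes \(H_{1}=H_{2}=\mathbb{R}^{2}\), a box for C, a closed ball for Q, and the constant stepsize \(1/\|A\|^{2}\); the matrix, the sets and all function names are illustrative choices, not taken from the paper.

```python
import numpy as np

def project_box(x, lo, hi):
    """Orthogonal projection onto the box C = {x : lo <= x_i <= hi}."""
    return np.clip(x, lo, hi)

def project_ball(y, center, radius):
    """Orthogonal projection onto the closed ball Q = {y : ||y - center|| <= radius}."""
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + radius * d / dist

def cq_algorithm(A, x0, proj_C, proj_Q, n_iter=500):
    """CQ iteration x_{n+1} = P_C(x_n - lam * A^T (I - P_Q) A x_n)."""
    # The constant step 1/||A||^2 lies in (0, 2/||A||^2); it needs the
    # spectral norm of A, which is the quantity the paper wants to avoid.
    lam = 1.0 / np.linalg.norm(A, 2) ** 2
    x = x0.astype(float)
    for _ in range(n_iter):
        Ax = A @ x
        x = proj_C(x - lam * A.T @ (Ax - proj_Q(Ax)))
    return x

# Illustrative data (assumed): the point (0.4, 0.2) lies in C and maps to the
# center of Q, so this SFP is solvable.
A = np.array([[2.0, 1.0], [1.0, 3.0]])
proj_C = lambda x: project_box(x, -1.0, 1.0)
proj_Q = lambda y: project_ball(y, np.array([1.0, 1.0]), 0.5)

x_cq = cq_algorithm(A, np.array([1.0, -1.0]), proj_C, proj_Q)
residual_cq = np.linalg.norm(A @ x_cq - proj_Q(A @ x_cq))  # distance from A x to Q
print(x_cq, residual_cq)
```

The call to `np.linalg.norm(A, 2)` is cheap for a small matrix, but it is exactly the step that becomes expensive or unavailable for large or implicitly defined operators.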

As is well known, Censor and Elfving’s algorithm needs to compute \(A^{-1}\), and Byrne’s CQ algorithm needs to compute \(\|A\|\). Both can be difficult to calculate.

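Consider the following convex minimization problem:

\[\min_{x\in C} f(x), \tag{1.3}\]

where

\[f(x)=\frac{1}{2}\bigl\|(I-P_{Q})Ax\bigr\|^{2}. \tag{1.4}\]

The function \(f(x)\) is differentiable and its gradient ∇f is L-Lipschitz with \(L>0\).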

The gradient-projection algorithm [21] is an effective method for solving (1.3). It generates a sequence \(\{x_{n}\}\) by the recursive formula

\[x_{n+1}=P_{C}\bigl(x_{n}-\lambda_{n}\nabla f(x_{n})\bigr), \tag{1.5}\]

where the parameter \(\lambda_{n}\in(0,\frac{2}{L})\). Since \(\nabla f(x)=A^{*}(I-P_{Q})Ax\) is Lipschitz with constant \(L=\|A\|^{2}\), Byrne’s CQ algorithm is a special case of the gradient-projection algorithm.

In Byrne’s CQ algorithm, \(\lambda_{n}\) depends on the operator norm \(\| A\|\), which is difficult to compute. In 2005, Yang [22] considered \(\lambda_{n}\) as follows:

\[\lambda_{n}=\frac{\rho_{n}}{\|\nabla f(x_{n})\|}, \tag{1.6}\]

where \(\rho_{n}>0\) and satisfies

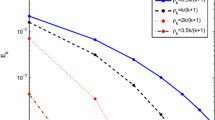

In 2012, López et al. [23] introduced \(\lambda_{n}\) as follows:

\[\lambda_{n}=\frac{\rho_{n}f(x_{n})}{\|\nabla f(x_{n})\|^{2}}, \tag{1.7}\]

where \(0<\rho_{n}<4\). However, their algorithm was shown to converge only weakly.

In 2013, Yao et al. [24] introduced a self-adaptive method for the SFP and obtained a strong convergence theorem. However, the algorithm is computationally involved.

In general, there are two types of algorithms for solving SFPs: those that depend on the norm of the operator, and those that require no a priori knowledge of the operator norm. The first type needs to calculate \(\|A\|\), which is not easy to compute. The second type also has a drawback: it typically yields only weak convergence. Obtaining strong convergence has required composite iterative methods, which make the algorithm harder to compute. To overcome these drawbacks, we propose a new regularized CQ algorithm without a priori knowledge of the operator norm to solve the SFP, and we obtain a strong convergence theorem.

Consider the following regularized minimization problem:

\[\min_{x\in C}\ f(x)+\frac{\beta}{2}\|x\|^{2},\]

where the regularization parameter \(\beta>0\). A sequence \(\{x_{n}\}\) is generated by the formula

\[x_{n+1}=P_{C}\bigl(x_{n}-\lambda_{n}(\nabla f(x_{n})+\beta_{n}x_{n})\bigr), \tag{1.8}\]

where \(\nabla f(x_{n})=A^{*}(I-P_{Q})Ax_{n}\), \(0<\beta_{n}<1\), and \(\lambda_{n}=\frac{\rho_{n}f(x_{n})}{\|\nabla f(x_{n})\|^{2}}\), \(0<\rho _{n}<4\). Then, under suitable conditions, the sequence \(\{x_{n}\}\) generated by (1.8) converges strongly to a point \(z\in S\), where \(z=P_{S}(0)\) is the minimum-norm solution of SFP (1.1).

2 Preliminaries

In this section, we collect some lemmas and properties that are used in the rest of the paper. Throughout this paper, let \(H_{1}\) and \(H_{2}\) be real Hilbert spaces, \(A:H_{1}\rightarrow H_{2}\) be a bounded linear operator and I be the identity operator on \(H_{1}\) or \(H_{2}\). If \(f:H\rightarrow\mathbb{R}\) is a differentiable functional, then the gradient of f is denoted by ∇f. We use the sign ‘→’ to denote strong convergence and the sign ‘⇀’ to denote weak convergence.

Definition 2.1

See [25]

Let D be a nonempty subset of H, and let \(T:D\rightarrow H\). Then T is firmly nonexpansive if

\[\|Tx-Ty\|^{2}+\|(I-T)x-(I-T)y\|^{2}\leq\|x-y\|^{2},\quad \forall x,y\in D.\]

Lemma 2.2

See [26]

Let \(T:H\rightarrow H\) be an operator. Then the following are equivalent:

(i) T is firmly nonexpansive,

(ii) \(I-T\) is firmly nonexpansive,

(iii) \(2T-I\) is nonexpansive,

(iv) \(\|Tx-Ty\|^{2}\leq\langle x-y, Tx-Ty \rangle\), \(\forall x, y \in H\),

(v) \(0\leq\langle Tx-Ty, (I-T)x-(I-T)y\rangle\).

Recall that \(P_{C}: H\rightarrow C\) is the orthogonal projection onto C, where C is a nonempty closed convex subset of H. To each point \(x\in H\) it assigns the unique point \(P_{C}x\in C\) satisfying the following property:

\[\|x-P_{C}x\|=\min_{y\in C}\|x-y\|.\]

\(P_{C}\) also has the following characteristics.

Lemma 2.3

See [27]

For a given \(x\in H\),

(i) \(z=P_{C}x \Longleftrightarrow\langle x-z,z-y\rangle\geq0\), \(\forall y\in C\),

(ii) \(z=P_{C}x \Longleftrightarrow\|x-z\|^{2}\leq\|x-y\|^{2}-\|y-z\| ^{2}\), \(\forall y\in C\),

(iii) \(\langle P_{C}x-P_{C}y, x-y\rangle\geq\|P_{C}x-P_{C}y\|^{2}\), \(\forall x,y\in H\).
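As a numerical sanity check of Lemma 2.3, the sketch below tests the three properties on random points for one concrete choice of C: the box \([-1,1]^{3}\) in \(\mathbb{R}^{3}\), whose projection is componentwise clipping. The set, the dimension and the sample size are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
lo, hi = -1.0, 1.0
project = lambda v: np.clip(v, lo, hi)       # P_C for the box C = [lo, hi]^3

x = rng.normal(scale=3.0, size=(1000, 3))    # arbitrary points of H
y = rng.normal(scale=3.0, size=(1000, 3))
c = rng.uniform(lo, hi, size=(1000, 3))      # arbitrary points of C
Px, Py = project(x), project(y)

# (i)   <x - P_C x, P_C x - c> >= 0 for every c in C
gap_i = np.einsum("ij,ij->i", x - Px, Px - c)

# (ii)  ||x - P_C x||^2 <= ||x - c||^2 - ||c - P_C x||^2 for every c in C
gap_ii = (np.sum((x - c) ** 2, axis=1)
          - np.sum((c - Px) ** 2, axis=1)
          - np.sum((x - Px) ** 2, axis=1))

# (iii) <P_C x - P_C y, x - y> >= ||P_C x - P_C y||^2
gap_iii = (np.einsum("ij,ij->i", Px - Py, x - y)
           - np.sum((Px - Py) ** 2, axis=1))

print(gap_i.min(), gap_ii.min(), gap_iii.min())  # nonnegative up to rounding
```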

Lemma 2.4

See [28]

Let f be given by (1.4). Then

(i) f is convex and differentiable,

(ii) \(\nabla f(x)=A^{*}(I-P_{Q})Ax\), \(\forall x\in H\),

(iii) f is weakly lower semicontinuous (w-lsc) on H,

(iv) ∇f is \(\|A\|^{2}\)-Lipschitz: \(\|\nabla f(x)-\nabla f(y)\|\leq\|A\|^{2}\|x-y\|\), \(\forall x,y\in H\).
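Items (ii) and (iv) can be checked numerically on a small instance. The sketch below assumes a random \(3\times4\) matrix A and takes Q to be the closed unit ball of \(\mathbb{R}^{3}\); it compares the closed-form gradient with a central finite difference and compares one difference quotient of ∇f with \(\|A\|^{2}\). All concrete data are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.normal(size=(3, 4))
center, radius = np.zeros(3), 1.0

def proj_Q(y):
    """Projection onto the closed ball Q (an assumed example set)."""
    d = y - center
    dist = np.linalg.norm(d)
    return y if dist <= radius else center + radius * d / dist

def f(x):
    """f(x) = 0.5 * ||(I - P_Q) A x||^2."""
    r = A @ x - proj_Q(A @ x)
    return 0.5 * (r @ r)

def grad_f(x):
    """Closed-form gradient A^T (I - P_Q) A x from Lemma 2.4(ii)."""
    Ax = A @ x
    return A.T @ (Ax - proj_Q(Ax))

x = 3.0 * rng.normal(size=4)
y = 3.0 * rng.normal(size=4)

# Central finite differences along the coordinate directions.
h = 1e-6
fd = np.array([(f(x + h * e) - f(x - h * e)) / (2 * h) for e in np.eye(4)])
grad_error = np.max(np.abs(fd - grad_f(x)))

# One sample of the Lipschitz quotient against the bound ||A||^2.
lipschitz_ratio = np.linalg.norm(grad_f(x) - grad_f(y)) / np.linalg.norm(x - y)
op_norm_sq = np.linalg.norm(A, 2) ** 2
print(grad_error, lipschitz_ratio, op_norm_sq)
```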

Lemma 2.5

See [29]

Let \(\{a_{n}\}\) be a sequence of nonnegative real numbers such that

\[a_{n+1}\leq(1-\alpha_{n})a_{n}+\alpha_{n}\delta_{n},\quad n\geq0,\]

where \(\{\alpha_{n}\}_{n=0}^{\infty}\) is a sequence in \((0,1)\) and \(\{ \delta_{n}\}_{n=0}^{\infty}\) is a sequence in \(\mathbb{R}\) such that

(i) \(\sum_{n=0}^{\infty}\alpha_{n} = \infty\),

(ii) \(\limsup_{n\rightarrow\infty}\delta_{n} \leq0\) or \(\sum_{n=0}^{\infty}\alpha_{n}|\delta_{n}| < \infty\).

Then \(\lim_{n\rightarrow\infty}a_{n} = 0\).
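A small numerical illustration of Lemma 2.5, taking the recursion with equality: the sketch below assumes \(\alpha_{n}=1/(n+2)\) and \(\delta_{n}=1/(n+1)\), which satisfy conditions (i) and (ii), and starts from \(a_{0}=5\). These sequences are hypothetical choices for demonstration only.

```python
def run_recursion(a0, n_steps):
    """Iterate a_{n+1} = (1 - alpha_n) * a_n + alpha_n * delta_n."""
    a = a0
    for n in range(n_steps):
        alpha = 1.0 / (n + 2)   # in (0, 1), and the series of alpha_n diverges
        delta = 1.0 / (n + 1)   # tends to 0, so limsup delta_n <= 0
        a = (1.0 - alpha) * a + alpha * delta
    return a

a_small = run_recursion(5.0, 1_000)
a_large = run_recursion(5.0, 100_000)
print(a_small, a_large)  # the second value is markedly closer to 0
```

For this particular choice, \((n+1)a_{n}\) grows only like a harmonic sum, so \(a_{n}\) decays roughly like \((\log n)/n\). The lemma guarantees convergence to zero but says nothing about speed.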

Lemma 2.6

See [30]

Let \(\{\gamma_{n}\}_{n\in\mathbb{N}}\) be a sequence of real numbers for which there exists a subsequence \(\{\gamma_{n_{i}}\}_{i\in \mathbb{N}}\) such that \(\gamma _{n_{i}}<\gamma_{n_{i}+1}\) for all \(i\in\mathbb{N}\). Then there exists a nondecreasing sequence \(\{m_{k}\}_{k\in\mathbb{N}}\) of \(\mathbb{N}\) such that \(\lim_{k\rightarrow\infty}m_{k}=\infty\) and the following properties are satisfied by all (sufficiently large) numbers \(k\in \mathbb{N}\):

\[\gamma_{m_{k}}\leq\gamma_{m_{k}+1},\qquad \gamma_{k}\leq\gamma_{m_{k}+1}.\]

In fact, \(m_{k}\) is the largest number n in the set \(\{1,\ldots,k\}\) such that the condition

\[\gamma_{n}<\gamma_{n+1}\]

holds.
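The index sequence \(\{m_{k}\}\) is easy to compute for a finite stretch of a concrete sequence. The sketch below uses 0-based indices and an oscillating test sequence, both assumptions made for illustration, and collects the indices at which \(m_{k}\) is defined so that the two inequalities \(\gamma_{m_{k}}\leq\gamma_{m_{k}+1}\) and \(\gamma_{k}\leq\gamma_{m_{k}+1}\) can be verified.

```python
import math

def mainge_indices(gamma):
    """m[k] = largest n <= k with gamma[n] < gamma[n + 1] (None if there is none yet)."""
    m, last = [], None
    for k in range(len(gamma) - 1):
        if gamma[k] < gamma[k + 1]:
            last = k
        m.append(last)
    return m

# An oscillating sequence (assumed example) with infinitely many strict increases.
gamma = [math.sin(0.7 * n) + 1.0 / (n + 1) for n in range(200)]
m = mainge_indices(gamma)
valid = [k for k, mk in enumerate(m) if mk is not None]

# Both properties of the lemma, checked at every index where m_k is defined.
properties_hold = all(
    gamma[m[k]] <= gamma[m[k] + 1] and gamma[k] <= gamma[m[k] + 1]
    for k in valid
)
print(properties_hold)
```

The second inequality holds because the sequence is nonincreasing on every index strictly between \(m_{k}\) and k, which is exactly how \(m_{k}\) is defined.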

3 Main results

In this paper, we always assume that \(f:H\rightarrow\mathbb{R}\) is a real-valued convex function, where \(f(x)=\frac{1}{2}\|(I-P_{Q})Ax\|^{2}\), the gradient \(\nabla f(x)=A^{*}(I-P_{Q})Ax\), C and Q are nonempty closed convex subsets of real Hilbert spaces \(H_{1}\) and \(H_{2}\), and \(A:H_{1}\rightarrow H_{2}\) is a bounded linear operator.

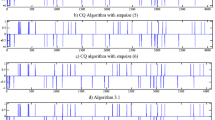

Algorithm 3.1

Choose an initial guess \(x_{0}\in H\) arbitrarily. Assume that the nth iterate \(x_{n}\in C\) has been constructed and \(\nabla f(x_{n})\neq0\). Then we calculate the \((n+1)\)th iterate \(x_{n+1}\) via the formula

\[x_{n+1}=P_{C}\bigl(x_{n}-\lambda_{n}(\nabla f(x_{n})+\beta_{n}x_{n})\bigr), \tag{3.1}\]

where \(\lambda_{n}\) is chosen as follows:

\[\lambda_{n}=\frac{\rho_{n}f(x_{n})}{\|\nabla f(x_{n})\|^{2}},\]

with \(0<\rho_{n}<4\). If \(\nabla f(x_{n})= 0\), then \(x_{n+1}=x_{n}\) is a solution of SFP (1.1) and the iterative process stops. Otherwise, we set \(n:=n+1\) and return to (3.1) to evaluate the next iterate.
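The following is a minimal sketch of Algorithm 3.1 in \(\mathbb{R}^{2}\), assuming the update has the form \(x_{n+1}=P_{C}(x_{n}-\lambda_{n}(\nabla f(x_{n})+\beta_{n}x_{n}))\), which is consistent with the regularized objective and with the factor \(1-\lambda_{n}\beta_{n}\) appearing in the proof. The toy problem, the schedule \(\beta_{n}=(n+2)^{-1/2}\), the choice \(\rho_{n}\equiv1\) and all function names are illustrative assumptions.

```python
import numpy as np

def regularized_cq(A, x0, proj_C, proj_Q, beta, rho=1.0, n_iter=10000):
    """Sketch of the regularized CQ iteration with the self-adaptive stepsize."""
    x = proj_C(np.asarray(x0, dtype=float))
    for n in range(n_iter):
        Ax = A @ x
        r = Ax - proj_Q(Ax)                 # (I - P_Q) A x_n
        grad = A.T @ r                      # grad f(x_n) = A^T (I - P_Q) A x_n
        grad_sq = grad @ grad
        if grad_sq == 0.0:                  # stopping rule: x_n already solves the SFP
            break
        lam = rho * (0.5 * (r @ r)) / grad_sq   # rho * f(x_n) / ||grad f(x_n)||^2
        x = proj_C(x - lam * (grad + beta(n) * x))
    return x

# Toy problem (assumed): C = [-5, 5]^2, Q = [2, inf), A x = x_1 + x_2, so that
# S = {x in C : x_1 + x_2 >= 2} and the minimum-norm solution is z = (1, 1).
A = np.array([[1.0, 1.0]])
proj_C = lambda x: np.clip(x, -5.0, 5.0)
proj_Q = lambda y: np.maximum(y, 2.0)
beta = lambda n: (n + 2) ** -0.5            # in (0, 1), tends to 0, series diverges

x_reg = regularized_cq(A, [3.0, -4.0], proj_C, proj_Q, beta)
print(x_reg)  # approaches z = (1, 1) as n_iter grows
```

The stepsize uses only \(f(x_{n})\) and \(\nabla f(x_{n})\), so \(\|A\|\) never appears. In this toy problem the distance to z after n steps is of the order of \(\beta_{n}\), so a slowly decaying \(\beta_{n}\) gives slow convergence.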

Theorem 3.1

Suppose that \(S\neq\emptyset\) and the parameters \(\{\beta_{n}\}\) and \(\{\rho_{n}\}\) satisfy the following conditions:

(i) \(\{\beta_{n}\}\subset(0,1)\), \(\lim_{n\rightarrow\infty}\beta _{n}=0\), \(\sum_{n=1}^{\infty}\beta_{n} = \infty\),

(ii) \(\varepsilon\leq\rho_{n}\leq4-\varepsilon\) for some \(\varepsilon>0\) small enough.

Then the sequence \(\{x_{n}\}\) generated by Algorithm 3.1 converges strongly to \(z\in S\), where \({z=P_{S}(0)}\).

Proof

Let \(x^{*}\in S\). Since every point of a closed convex set is a fixed point of the projection onto that set, and \(x^{*}\in C\), \(Ax^{*}\in Q\), we have \(x^{*}=P_{C}x^{*}\) and \(Ax^{*}=P_{Q}Ax^{*}\).

By (3.1) and the nonexpansivity of \(P_{C}\), we derive

Since \(P_{Q}\) is firmly nonexpansive, from Lemma 2.2, we deduce that \(I-P_{Q}\) is also firmly nonexpansive. Hence, we have

Note that \(\nabla f(x_{n})=A^{*}(I-P_{Q})Ax_{n}\). From (3.3), we obtain

By condition (ii), without loss of generality, we assume that \((4-\frac{\rho_{n}}{1-\lambda_{n}\beta_{n}})>0\) for all \(n\geq0 \). Thus from (3.2) and (3.4), we obtain

Hence, \(\{x_{n}\}\) is bounded.
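One way to see the boundedness claim is sketched below. It is a hedged reconstruction, not necessarily the authors' exact estimates: it assumes that the update is \(x_{n+1}=P_{C}(x_{n}-\lambda_{n}(\nabla f(x_{n})+\beta_{n}x_{n}))\), that \(\lambda_{n}\beta_{n}<1\), and that \(4-\frac{\rho_{n}}{1-\lambda_{n}\beta_{n}}>0\). The symbol \(\mu_{n}=\frac{\lambda_{n}}{1-\lambda_{n}\beta_{n}}\) is introduced here for brevity.

```latex
\begin{align*}
\langle\nabla f(x_n),x_n-x^*\rangle
  &=\langle (I-P_Q)Ax_n-(I-P_Q)Ax^*,\,Ax_n-Ax^*\rangle
   \ge\|(I-P_Q)Ax_n\|^2=2f(x_n),\\
\|x_n-\mu_n\nabla f(x_n)-x^*\|^2
  &\le\|x_n-x^*\|^2-4\mu_n f(x_n)+\mu_n^2\|\nabla f(x_n)\|^2\\
  &=\|x_n-x^*\|^2-\mu_n\Bigl(4-\frac{\rho_n}{1-\lambda_n\beta_n}\Bigr)f(x_n),\\
\|x_{n+1}-x^*\|^2
  &\le\bigl\|(1-\lambda_n\beta_n)\bigl(x_n-\mu_n\nabla f(x_n)-x^*\bigr)
      +\lambda_n\beta_n(-x^*)\bigr\|^2\\
  &\le(1-\lambda_n\beta_n)\|x_n-\mu_n\nabla f(x_n)-x^*\|^2
      +\lambda_n\beta_n\|x^*\|^2\\
  &\le(1-\lambda_n\beta_n)\|x_n-x^*\|^2+\lambda_n\beta_n\|x^*\|^2
      -\rho_n\Bigl(4-\frac{\rho_n}{1-\lambda_n\beta_n}\Bigr)
       \frac{f^2(x_n)}{\|\nabla f(x_n)\|^2}\\
  &\le\max\bigl\{\|x_n-x^*\|^2,\ \|x^*\|^2\bigr\}.
\end{align*}
```

The first line uses the firm nonexpansivity of \(I-P_{Q}\) together with \((I-P_{Q})Ax^{*}=0\). The second uses \(\lambda_{n}\|\nabla f(x_{n})\|^{2}=\rho_{n}f(x_{n})\). The third block uses the nonexpansivity of \(P_{C}\), the convexity of \(\|\cdot\|^{2}\), and \((1-\lambda_{n}\beta_{n})\mu_{n}=\lambda_{n}\). By induction, \(\|x_{n}-x^{*}\|^{2}\leq\max\{\|x_{0}-x^{*}\|^{2},\|x^{*}\|^{2}\}\) for all n.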

Let \(z=P_{S}0\). From (3.5), we deduce

We consider the following two cases.

Case 1. One has \(\|x_{n+1}-z\|\leq\|x_{n}-z\|\) for every \(n\geq n_{0}\) large enough.

In this case, \(\lim_{n\rightarrow\infty}\|x_{n}-z\|\) exists and is finite, and hence

This, together with (3.6), implies that

Since \(\liminf_{n\rightarrow\infty}\rho_{n}(4-\frac{\rho_{n}}{1-\lambda _{n}\beta_{n}})\geq\varepsilon_{0}\) (where \(\varepsilon_{0}>0\) is a constant), we get

Noting that \(\|\nabla f(x_{n})\|^{2}\) is bounded, we deduce immediately that

Next, we prove that

Since \(\{x_{n}\}\) is bounded, there exists a subsequence \(\{x_{n_{i}}\} \) satisfying \(x_{n_{i}}\rightharpoonup\hat{z}\) and

By the lower semicontinuity of f, we get

So

That is, ẑ is a minimizer of f, and \(\hat{z}\in S\). Therefore

Then we have

Note that \(\|\nabla f(x_{n})\|^{2}\) is bounded, and that \(\lambda_{n}\| \nabla f(x_{n})\|=\frac{\rho_{n}f(x_{n})}{\|\nabla f(x_{n})\|^{2}}\cdot\| \nabla f(x_{n})\|\). Thus \(\lambda_{n}\|\nabla f(x_{n})\|\rightarrow0\) by (3.8). From Lemma 2.5, we deduce that

Case 2. There exists a subsequence \(\{\|x_{n_{j}}-z\|\}\) of \(\{\| x_{n}-z\|\}\) such that

By Lemma 2.6, there exists a nondecreasing sequence \(\{m_{k}\} \) of positive integers such that \(\lim_{k\rightarrow\infty}m_{k}=+\infty \) and the following properties are satisfied by all (sufficiently large) numbers \(k\in \mathbb{N}\):

We have

Consequently,

Hence,

By a similar argument to that of Case 1, we prove that

where

In particular, from (3.13), we get

Since \(\|x_{m_{k}}-z\|\leq\|x_{m_{k}+1}-z\|\), we deduce that

Then, from (3.14), we have

Then

Then, from (3.12), we deduce that

Thus, from (3.11) and (3.16), we conclude that

Therefore, \(x_{n}\rightarrow z\). This completes the proof. □

4 Conclusion

The SFP has recently been studied extensively by many authors. Some algorithms need to compute \(\|A\|\), which is not easy. Others avoid computing \(\|A\|\) but typically yield only weak convergence, and the variants that do achieve strong convergence tend to be complex and difficult to compute. To overcome these drawbacks, in this article we use a regularized CQ algorithm that does not require \(\|A\|\) to find the minimum-norm solution of the SFP, where \(\lambda_{n}=\frac{\rho _{n}f(x_{n})}{\|\nabla f(x_{n})\|^{2}}\), \(0<\rho_{n}<4\). Under suitable conditions, we obtain a strong convergence theorem for this explicit scheme.

References

[1] Censor, Y, Elfving, T: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 8, 221-239 (1994)

[2] Censor, Y, Bortfeld, T, Martin, B, Trofimov, A: A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 51, 2353-2365 (2003)

[3] Censor, Y, Elfving, T, Kopf, N, Bortfeld, T: The multiple-sets split feasibility problem and its applications for inverse problem. Inverse Probl. 21, 2071-2084 (2005)

[4] López, G, Martín-Márquez, V, Xu, HK: Perturbation techniques for nonexpansive mappings with applications. Nonlinear Anal., Real World Appl. 10, 2369-2383 (2009)

[5] López, G, Martín-Márquez, V, Xu, HK: Iterative algorithms for the multiple-sets split feasibility problem. In: Censor, Y, Jiang, M, Wang, G (eds.) Biomedical Mathematics: Promising Directions in Imaging, Therapy Planning and Inverse Problems, pp. 243-279. Medical Physics Publishing, Madison (2010)

[6] Xu, HK: Iterative methods for the split feasibility problem in infinite-dimensional Hilbert space. Inverse Probl. 26, Article ID 105018 (2010)

[7] Dang, Y, Gao, Y: The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 27, Article ID 015007 (2011)

[8] Qu, B, Xiu, N: A note on the CQ algorithm for the split feasibility problem. Inverse Probl. 21, 1655-1665 (2005)

[9] Wang, F, Xu, HK: Approximating curve and strong convergence of the CQ algorithm for the split feasibility problem. J. Inequal. Appl. 2010, Article ID 102085 (2010)

[10] Xu, HK: A variable Krasnosel’skii-Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 22, 2021-2034 (2006)

[11] Yang, Q: The relaxed CQ algorithm for solving the split feasibility problem. Inverse Probl. 20, 1261-1266 (2004)

[12] Byrne, C: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441-453 (2002)

[13] Byrne, C: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103-120 (2004)

[14] Yao, YH, Wu, JG, Liou, YC: Regularized methods for the split feasibility problem. Abstr. Appl. Anal. 2012, Article ID 140679 (2012)

[15] Yao, YH, Liou, YC, Yao, JC: Iterative algorithms for the split variational inequality and fixed point problems under nonlinear transformations. J. Nonlinear Sci. Appl. 10, 843-854 (2017)

[16] Yao, YH, Agarwal, RP, Postolache, M, Liou, YC: Algorithms with strong convergence for the split common solution of the feasibility problem and fixed point problem. Fixed Point Theory Appl. 2014, Article ID 183 (2014)

[17] Latif, A, Qin, X: A regularization algorithm for a splitting feasibility problem in Hilbert spaces. J. Nonlinear Sci. Appl. 10, 3856-3862 (2017)

[18] Chang, SS, Wang, L, Zhao, YH: On a class of split equality fixed point problems in Hilbert spaces. J. Nonlinear Var. Anal. 1, 201-212 (2017)

[19] Tang, JF, Chang, SS, Dong, J: Split equality fixed point problem for two quasi-asymptotically pseudocontractive mappings. J. Nonlinear Funct. Anal. 2017, Article ID 26 (2017)

[20] Shi, LY, Ansari, QH, Wen, CF, Yao, JC: Incremental gradient projection algorithm for constrained composite minimization problems. J. Nonlinear Var. Anal. 1, 253-264 (2017)

[21] Xu, HK: Averaged mappings and the gradient-projection algorithm. J. Optim. Theory Appl. 150, 360-378 (2011)

[22] Yang, Q: On variable-step relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 302, 166-179 (2005)

[23] López, G, Martín-Márquez, V, Wang, FH, Xu, HK: Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. (2012). doi:10.1088/0266-5611/28/8/085004

[24] Yao, YH, Postolache, M, Liou, YC: Strong convergence of a self-adaptive method for the split feasibility problem. Fixed Point Theory Appl. 2013, Article ID 201 (2013)

[25] Bauschke, HH, Combettes, PL: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, Berlin (2011)

[26] Goebel, K, Kirk, WA: Topics on Metric Fixed Point Theory. Cambridge University Press, Cambridge (1990)

[27] Takahashi, W: Nonlinear Functional Analysis. Yokohama Publishers, Yokohama (2000)

[28] Aubin, JP: Optima and Equilibria: An Introduction to Nonlinear Analysis. Springer, Berlin (1993)

[29] Xu, HK: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279-291 (2004)

[30] Maingé, PE: Strong convergence of projected subgradient methods for non-smooth and non-strictly convex minimization. Set-Valued Anal. 17(7-8), 899-912 (2008)

Acknowledgements

The authors thank the referees for their helpful comments, which notably improved the presentation of this paper. This work was supported by the Fundamental Research Funds for the Central Universities [grant number 3122017072]. MT was supported by the Foundation of Tianjin Key Laboratory for Advanced Signal Processing. H-FZ was supported in part by the Technology Innovation Funds of Civil Aviation University of China for Graduate (Y17-39).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All the authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tian, M., Zhang, HF. The regularized CQ algorithm without a priori knowledge of operator norm for solving the split feasibility problem. J Inequal Appl 2017, 207 (2017). https://doi.org/10.1186/s13660-017-1480-2

DOI: https://doi.org/10.1186/s13660-017-1480-2