Abstract

Background

Unabridged access to drug industry and regulatory trial registers and data reduces reporting bias in systematic reviews and may provide a complete index of a drug’s clinical study programme. Currently, there is no public index of the human papillomavirus (HPV) vaccine industry study programmes or a public index of non-industry funded studies.

Methods

By cross-verification via study programme enquiries to the HPV vaccine manufacturers and regulators and searches of trial registers and journal publication databases, we indexed clinical HPV vaccine studies as a basis to address reporting bias in a systematic review of clinical study reports.

Results

We indexed 206 clinical studies: 145 industry and 61 non-industry funded studies. One of the four HPV vaccine manufacturers (GlaxoSmithKline) provided information on its study programme. Most studies were cross-verified from two or more sources (160/206, 78%) and listed on regulatory or industry trial registers or journal publication databases (195/206, 95%)—in particular, on ClinicalTrials.gov (176/195, 90%). However, study results were only posted for about half of the completed studies on ClinicalTrials.gov (71/147, 48%). Two thirds of the industry studies had a study programme ID, manufacturer specific ID, and national clinical trial (NCT) ID (91/145, 63%). Journal publications were available in journal publication databases (the Cochrane Collaboration’s Central Register of Controlled Trials, Google Scholar and PubMed) for two thirds of the completed studies (92/149, 62%).

Conclusion

We believe we came close to indexing complete HPV vaccine study programmes, but only one of the four manufacturers provided information for our index and a fifth of the index could not be cross-verified. However, we indexed larger study programmes than those listed by major regulators (i.e., the EMA and FDA that based their HPV vaccine approvals on only half of the available trials). To reduce reporting bias in systematic reviews, we advocate the registration and publication of all studies and data in the public domain.

Background

Healthcare guidelines often rely on drug manufacturers’ studies, regulators’ assessments and independent researchers’ systematic reviews of these studies. Drug manufacturers usually conduct study programmes in agreement with the drug regulators’ pre-approval requirements. However, more than half of all studies are never published [1, 2] and the published studies’ intervention effects are often exaggerated in comparison to the unpublished studies [3,4,5]. This introduces reporting bias that undermines the validity of systematic reviews. To address reporting bias in systematic reviews, it is necessary to use industry and regulatory trial registers and trial data—in particular, the drug manufacturers’ complete study programmes with their corresponding clinical study reports.

A clinical study report of over a thousand pages in length may be condensed into a ten-page journal publication, i.e., a compression factor of 100 [6, 7]. The criteria used to select the resulting fraction of available data in journal publications are unknown and journal publications are often misleading, especially in relation to the reporting of harms of drug interventions [7,8,9,10,11,12,13,14]. Consequently, some researchers now rely on study programmes and clinical study reports as their primary or only source of information for their systematic reviews [7, 13, 15]. The first systematic review of influenza antivirals showed the feasibility of this approach [15]. The review demonstrated the importance of using clinical study reports and the shortcomings of relying only on journal publication database searches (for example, in PubMed). Although the use of clinical study reports reduces the risk of reporting bias, clinical study reports themselves may still be subject to significant reporting bias when compared to their underlying data [6, 12, 16].

Currently, there is no easily accessible source to access study programmes. However, drug manufacturers’ common technical documents (CTDs) contain lists of the studies in a study programme that support marketing authorization applications (MAAs) to regulatory authorities (such as the European Medicines Agency, EMA, or the Food and Drug Administration, FDA). Since 2010 and 2015, it has been possible for researchers to obtain common technical documents and marketing authorization applications from the EMA via policies 0043 and 0070, respectively.

Nonetheless, accessing study programmes and unpublished studies and obtaining clinical study reports from the industry and regulators can prove difficult [13, 17,18,19,20]. Therefore, it is not surprising that only a minority of systematic reviews include unpublished studies (10–20%) [21, 22] or searches of trial registers (10–50%) [23,24,25,26]. Searches of trial registers may lead to the identification of additional eligible studies in about 20–60% of systematic reviews [23,24,25,26]. To identify unpublished studies and address reporting bias, we present here a method for indexing the study programmes of the human papillomavirus (HPV) vaccines as a basis for a systematic review of clinical study reports (see our protocol at PROSPERO: CRD42017056093 [27]).

Methods

Our study involved indexing the HPV vaccine industry study programmes and non-industry funded clinical studies using a six-step process that focuses on identifying unpublished studies (see Additional file 1 for a detailed description of steps 1 to 6 and Additional file 2 for the e-mail correspondence with the HPV vaccine manufacturers in step 6).

In step 1, we corresponded with the EMA and obtained a list of their holdings of clinical study reports and Module 2.5 of the common technical document (CTD) for one HPV vaccine (Gardasil 9) that listed all studies in the Gardasil 9 study programme. This gave us a basic study list with industry study identifiers that usually consist of a prefix that identifies the HPV vaccine being tested (for example, HPV-xxx for Cervarix and V50x-xxx for Gardasil studies). In mid-2017, we were granted access to EMA’s holdings of Modules 2.5 of two other HPV vaccines (Cervarix and Gardasil), but we have not received the modules yet.

In step 2, we expanded the basic list by searching 45 trial registers: eight industry trial registers (where the manufacturers had been involved or possibly involved in one or more HPV vaccine studies), 32 international and regional trial registers chosen according to their level of impact (e.g., https://clinicaltrials.gov and http://apps.who.int/trialsearch/ are used globally and were considered high impact) or where one or more HPV vaccine studies had been conducted (e.g., we searched the Chinese Clinical Trial Registry: http://www.chictr.org.cn/index.aspx, since several HPV vaccine studies had been conducted in China) and five regulatory registers (where the regulators had been involved or possibly involved with the assessment or approval of one or more HPV vaccines, e.g., EMA and FDA).

In step 3, we searched the HPV vaccines’ regulatory drug approval packages (DAPs) from FDA and the HPV vaccines’ European Public Assessment Reports (EPARs) from EMA to identify studies possibly not listed in the 45 trial registers.

In step 4, we conducted searches of three journal publication databases (the Cochrane Collaboration’s Central Register of Controlled Trials, Google Scholar, and PubMed) to identify studies possibly not listed in the 45 trial registers. We also searched WikiLeaks for HPV vaccine studies (or related information). When possible, we matched indexed studies to their corresponding journal publications.

In step 5, we added studies listed in recent HPV vaccine regulatory and independent reviews to verify the studies’ existence and add any studies that we had not identified.

In step 6, we sent the assembled indexes to the corresponding HPV vaccine manufacturers and asked them to verify the indexed studies’ existence and add any studies that we had not identified. We gave the manufacturers a 1-month deadline to respond and sent a reminder if a manufacturer had not responded within 1 to 2 weeks.

We indexed interventional prospective preventive (not therapeutic) comparative (with two or more intervention arms) HPV vaccine clinical studies (and their follow-up studies) in humans. We classified studies by cross-verification as follows:

1) “Definitely exists” (cross-verification of a study’s existence from two or more sources)
2) “Probably exists” (verification of a study’s existence from one source)
3) “Probably does not exist” (no manufacturer or regulatory verification but with a passing reference identified from another source)

We indexed studies from the first two categories. No language restriction was applied, and Google Translate was used for non-familiar languages. By comparing all gathered study IDs, we deleted duplicate entries.
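The classification and de-duplication rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure as the authors implemented it: the tuple layout of a "sighting", the ID normalisation, and the example study IDs are all hypothetical.

```python
from collections import defaultdict

# Category labels taken from the three classes described above.
DEFINITELY = "definitely exists"
PROBABLY = "probably exists"
PROBABLY_NOT = "probably does not exist"


def classify(verifying_sources):
    """Classify a study by how many distinct sources verify its existence."""
    n = len(set(verifying_sources))
    if n >= 2:
        return DEFINITELY
    if n == 1:
        return PROBABLY
    return PROBABLY_NOT  # passing reference only, no verifying source


def build_index(sightings):
    """Merge sightings by study ID and keep the first two categories.

    `sightings` is an iterable of (study_id, source, verifies) tuples, where
    `verifies` is False for a mere passing reference. This tuple layout is an
    assumption made for the sketch.
    """
    verified_by = defaultdict(set)
    for study_id, source, verifies in sightings:
        key = study_id.strip().upper()  # normalise so duplicate IDs collapse
        sources = verified_by[key]      # registers the ID even if unverified
        if verifies:
            sources.add(source)
    index = {}
    for key, sources in verified_by.items():
        category = classify(sources)
        if category != PROBABLY_NOT:
            index[key] = category
    return index


# Invented sightings, for illustration only.
sightings = [
    ("STUDY-A", "trial register", True),
    ("study-a ", "manufacturer", True),
    ("STUDY-B", "trial register", True),
    ("STUDY-C", "review article", False),
]
index = build_index(sightings)
print(index)
```

Keying the index on a normalised study ID means that the same study found in several registers collapses into one entry whose set of sources determines its category.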

One author (LJ) conducted steps 1 to 6 of the indexing and extracted data. The steps were conducted from October 2016 to July 2017. A second author (TJ) checked the indexing and data extraction. Any disagreements were resolved by discussion or by consulting the third author (PCG). One author (LJ) classified the studies according to the likelihood of their existence (e.g., “definitely exists”) and assessed the degree of manufacturer involvement (i.e., studies that were funded or partly funded by the manufacturers were classified as industry studies). A second author (TJ) checked the classifications.

For each study, one author (LJ) extracted the following study information: type, phase (I-IV), intervention type, completion status (completed or on-going), centre status (single or multicentre), participant characteristics (age, gender and number of participants), programme ID, manufacturer ID, trial register ID, results availability, and modes of identification.
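One possible way to hold the extracted items is a small record type. The sketch below assumes Python dataclasses; the class name, field names, and placeholder values are hypothetical and are not taken from the authors' extraction sheet.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class StudyRecord:
    """One indexed study; field names are illustrative, not the authors'."""
    study_type: str                      # e.g. "randomized clinical trial"
    phase: Optional[str]                 # "I" to "IV", or None if unclear
    intervention_type: str
    completed: bool                      # False means on-going
    multicentre: Optional[bool]          # None if centre status is unknown
    age_range: Optional[str] = None
    gender: Optional[str] = None
    n_participants: Optional[int] = None
    programme_id: Optional[str] = None
    manufacturer_id: Optional[str] = None
    register_ids: List[str] = field(default_factory=list)
    results_available: bool = False
    identified_via: List[str] = field(default_factory=list)

    def has_all_three_ids(self) -> bool:
        """True if programme ID, manufacturer ID and an NCT ID are all present."""
        has_nct = any(rid.upper().startswith("NCT") for rid in self.register_ids)
        return bool(self.programme_id and self.manufacturer_id and has_nct)


# Placeholder values only; this is not a real study.
example = StudyRecord(
    study_type="randomized clinical trial",
    phase="III",
    intervention_type="vaccine vs. comparator vaccine",
    completed=True,
    multicentre=True,
    programme_id="PROG-000",
    manufacturer_id="000000",
    register_ids=["NCT00000000"],
)
print(example.has_all_three_ids())
```

Keeping the three identifier types as separate fields makes it straightforward to count how many studies carry all of them.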

Results

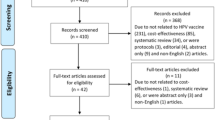

We excluded 79 non-comparative prospective clinical studies and indexed 206 studies: 145 industry and 61 non-industry funded studies with a total of 623,005 participants. One of the four HPV vaccine manufacturers (GlaxoSmithKline) provided us with an index of 81 GlaxoSmithKline studies and four MedImmune studies (MedImmune and GlaxoSmithKline collaborated in the early development of GlaxoSmithKline’s Cervarix HPV vaccine) (see Fig. 1, Table 1 and Additional files 1, 2, 3; and our study’s PRISMA statement, Additional file 4).

Two thirds of the indexed studies were randomized clinical trials (136/206, 66%) and just under half were phase III studies (89/206, 43%). The remaining studies were either follow-ups (23 industry and three non-industry), non-randomized (24 and 18), or of unclear design (two industry studies). Most randomized clinical trials had either another vaccine (for example, the hepatitis A vaccine, Havrix) or the HPV vaccine aluminum adjuvants as comparators (111/136, 82%); only 17 used a “placebo” (13%). If a study reported a “placebo” comparator without describing it (e.g., as saline), we recorded the comparator as “placebo”. A third of the industry randomized clinical trials and none of the non-industry ones used adjuvant comparators (36/96 vs. 0/40, P < 0.0001; Fisher’s exact test). More industry studies were phase III (79/145 vs. 10/61, P < 0.0001) while more non-industry studies used a “placebo” comparison (12/40 vs. 5/96, P = 0.0002) and were phase IV studies (22/61 vs. 9/145, P < 0.0001) (see Table 1).
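For readers who want to re-check the comparisons, the sketch below computes a two-sided Fisher’s exact test from the counts quoted above, using only the Python standard library. It assumes the common convention of summing the probabilities of all tables that are no more likely than the observed one; the paper does not state which software or two-sided convention was used, so small differences from the reported P values are possible.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum every table at least as unlikely as the observed one
    # (small tolerance guards against floating-point ties).
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-9))


# Adjuvant comparator: 36 of 96 industry trials vs. 0 of 40 non-industry trials.
p_adjuvant = fisher_exact_two_sided(36, 60, 0, 40)
# "Placebo" comparator: 12 of 40 non-industry trials vs. 5 of 96 industry trials.
p_placebo = fisher_exact_two_sided(12, 28, 5, 91)
print(p_adjuvant, p_placebo)
```

Each row of the table gives the studies with and without the feature in one funding group, so the first call encodes 36 with and 60 without an adjuvant comparator among the industry trials.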

GlaxoSmithKline’s study programme primarily used its Cervarix HPV vaccine (65/69, 94%) and included more follow-up studies than the Merck Sharp & Dohme’s study programme (18/69 vs. 5/66, P = 0.006) that mainly used its Gardasil or Gardasil 9 HPV vaccines (55/66, 83%, see Table 1).

Most industry studies were solely industry funded (128/145, 88%), but Merck Sharp and Dohme co-funded more studies than GlaxoSmithKline (17/66 vs. 0/69, P < 0.0001; ten Merck studies were co-funded with universities, four with hospitals and three with governmental healthcare institutions) (see Table 1).

Most studies were completed (149/206, 72%). In comparison to industry studies, non-industry studies were on average of a longer duration (42.2 vs. 36.9 months), while industry studies were more often multicentre (79/106 vs. 13/53, P < 0.0001) (see Table 1).

Most studies included only females (151/206, 73%) and had participants younger than 18 years (105/206, 51%). More non-industry studies included both sexes (19/61 vs. 22/145, P = 0.013), but Merck Sharp and Dohme funded more studies on both sexes than GlaxoSmithKline (16/66 vs. 4/69, P = 0.003). Industry studies had on average twice as many participants enrolled compared to non-industry studies (3602 vs. 1767). For multicentre studies, the mean number of participants was similar for industry and non-industry studies (2388 vs. 2745), but non-industry studies enrolled twice as many participants per study centre compared to industry studies (128 vs. 66, see Table 1).

Most indexed studies were cross-verified from two or more sources (i.e., “definitely exists”, 160/206, 78%). Merck Sharp and Dohme’s Gardasil and Gardasil 9 programme had more studies with a single verification than GlaxoSmithKline’s Cervarix programme (i.e., “probably exists”, 16/66 vs. 0/69, P < 0.0001, see Table 2).

Most industry studies had a study programme specific ID (125/145, 86%), manufacturer specific ID (98/145, 68%) and the US national clinical trial (NCT) ID, which was also used by most non-industry studies (132/145 vs. 44/61, P = 0.0009). About two thirds of the industry studies used all three ID types (91/145, 63%, see Table 2).

Most of the included studies were listed in industry, public or regulatory registers or databases (195/206, 95%)—in particular, on ClinicalTrials.gov (176/195, 90%). However, study results were only posted for about half of the completed studies on ClinicalTrials.gov (71/147, 48%), but more completed industry studies posted results on ClinicalTrials.gov compared to non-industry studies (65/110 vs. 6/37, P < 0.0001, see Table 2).

We reconciled the index with journal publications, but did not run all-inclusive journal publication database searches as recommended for systematic reviews, since clinical study reports (not journal publications) were our focus. Journal publications were available for two thirds of the completed studies (92/149, 62%, see Table 2).
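The availability proportions reported in this section can be recomputed directly from the quoted counts. The following is a small consistency check, not part of the authors' analysis; the dictionary labels are shorthand introduced here.

```python
# (numerator, denominator, percentage as reported in the text)
reported = {
    "cross-verified from two or more sources": (160, 206, 78),
    "listed in a register or database": (195, 206, 95),
    "listed on ClinicalTrials.gov": (176, 195, 90),
    "results posted on ClinicalTrials.gov": (71, 147, 48),
    "industry studies with all three ID types": (91, 145, 63),
    "completed studies with a journal publication": (92, 149, 62),
}


def percent(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Collect any item whose recomputed percentage differs from the reported one.
mismatches = {
    label: (percent(n, d), pct)
    for label, (n, d, pct) in reported.items()
    if percent(n, d) != pct
}
print(mismatches)
```

An empty `mismatches` dictionary indicates that each quoted percentage agrees with its fraction after rounding.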

Discussion

Our index showed serious deficiencies and variability in the availability of HPV vaccine studies and data. For example, only half of the completed studies listed on ClinicalTrials.gov posted their results. The clinical study reports we obtained via our index process confirmed that the amount of information and data is vastly greater than that in journal publications. For example, the journal publication for one GlaxoSmithKline Cervarix study (HPV-008) is 14 pages long [28] while its publicly available corresponding clinical study report is more than 7000 pages long [29], even though it is a shortened interim report.

Identification of some studies involved a considerable amount of work and a fifth of the index could not be cross-verified. We indexed studies that we would not have been able to index if we only relied on the journal publication databases. For example, one Cervarix randomized clinical trial (HPV-002) was only listed on GlaxoSmithKline’s trial register. The index that GlaxoSmithKline provided us with contained three Cervarix meta-analyses that were only identifiable by their GSK ID (i.e., 205206, 207644, and 205639), and the index did not include three randomized clinical trials (HPV-009 and HPV-016 and the prematurely terminated HPV-078) that were listed on ClinicalTrials.gov. One Cervarix trial (HPV-049) and three Gardasil studies (V501–001, V501–002, and V501–004) were only identified via regulatory registers or correspondence. One Gardasil follow-up study (for V501–005) only had a journal publication listed in PubMed without any manufacturer-specific ID or registration. Four non-industry studies were published in journal publications but were not registered in any of the 45 trial registers. Three non-industry studies were only listed on regional trial registers (Australia: https://www.anzctr.org.au/; Germany: https://www.drks.de/; and India: http://ctri.nic.in/) (see Additional files 2 and 3).

We also indexed more studies than those listed in the holdings of major regulators. For example, EMA conducted a review (of the relation between HPV vaccination and two syndromes: postural orthostatic tachycardia syndrome, POTS and complex regional pain syndrome, CRPS) [30] that EMA believed was based on the manufacturers’ complete HPV vaccine study programmes (“GSK [GlaxoSmithKline] has conducted a review of all available data from clinical studies…with Cervarix”; and “The MAH [Market Authorisation Holder, i.e., Merck Sharp and Dohme] has reviewed data from all clinical studies of the qHPV vaccine [Gardasil]” [30, 31]). However, when we compared the manufacturers’ study programmes (submitted to EMA, see Additional file 1) with our index (see Table 1 and Additional file 3), we found that only half (38/79, 48%) of the manufacturers’ randomized clinical trials and follow-ups of Cervarix and Gardasil completed before the submission dates in July 2015 were included (EMA’s review did not assess Gardasil 9) [30, 31]. Similarly, the FDA’s Drug Approval Packages (DAPs) only mentioned half (32/60, 53%) of the randomized clinical trials and follow-ups that were completed before the vaccines’ date of the Drug Approval Packages (Cervarix: 17/36, 47%; Gardasil: 6/11, 54%; and Gardasil 9: 9/13, 69%). We find this very disturbing.
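The pooled FDA figure follows from the per-vaccine counts quoted above, as this short sketch shows. It uses only the numbers given in the text; the variable names are illustrative.

```python
# (studies mentioned in the Drug Approval Package, studies completed before it)
fda_daps = {
    "Cervarix": (17, 36),
    "Gardasil": (6, 11),
    "Gardasil 9": (9, 13),
}

# Pool the per-vaccine counts to obtain the overall coverage.
mentioned = sum(m for m, _ in fda_daps.values())
completed = sum(c for _, c in fda_daps.values())
pooled_share = mentioned / completed

for vaccine, (m, c) in fda_daps.items():
    print(f"{vaccine}: {m}/{c} = {m / c:.1%}")
print(f"Pooled: {mentioned}/{completed} = {pooled_share:.1%}")
```

Pooling the counts rather than averaging the three percentages weights each vaccine by the number of completed studies in its programme.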

To our knowledge, our study is the first with the aim of indexing a complete study programme. We do not know if the considerable reporting bias we found is generalizable to all drugs and vaccines, but similar industry examples exist for oseltamivir [15], rofecoxib [32], and rosiglitazone where 83% (35/42) of the study programme was unpublished [33, 34]. Indexing is important when there is high risk of reporting bias, which often is the case for industry funded drug trials. Our approach should therefore be considered for systematic reviews of drugs and vaccines. Steps 2 and 4 contributed quantitatively the most to the identification and cross-verification of studies—in particular, searches on ClinicalTrials.gov and the HPV vaccine manufacturers’ trial registers. Searches of regulatory registers and journal publication databases contributed to a lesser extent. Steps 1, 3, 5 and 6 contributed mainly to the verification of some studies (see Additional files 1 and 3).

Our six-step process is reproducible, the step sequence is interchangeable and most steps could be performed simultaneously. For example, we started by corresponding with EMA, since we are familiar with EMA’s handling of study programmes and clinical study reports [27]. This correspondence helped us get started (but EMA response times may prove very slow and EMA often denies data requests [18]). The index took approximately 3 months to assemble. Researchers may save time if they perform the steps simultaneously and focus on steps 2 and 6. For example, researchers could start by requesting the drug manufacturers’ study programmes and subsequently make an independent index and compare the two. However, correspondence with manufacturers may prove challenging and slow. Only one of the four HPV vaccine manufacturers (GlaxoSmithKline) provided us with study programme information, which we received 9 months after our initial request. Merck Sharp and Dohme responded to our enquiry, but did not provide study programme information. Shanghai Zerun Biotechnology and Xiamen Innovax Biotech did not respond to our enquiries (Additional files 1, 2, 3, and 5).

Compared to industry studies, non-industry funded studies were registered less often (for example, on ClinicalTrials.gov) and posted fewer study results. Non-industry researchers are not legally required to register their studies, adhere to industry reporting guidelines (the International Conference on Harmonization of Technical Requirements for Registration of Pharmaceuticals for Human Use, ICH: http://www.ich.org/) or produce clinical study reports unless their results are used to support a drug’s marketing authorization application. This involves a high risk of reporting bias. Therefore, we recommend that non-industry funders require researchers to register their studies and commit to reporting guidelines similar to the ICH.

Finally, although access to study programmes and clinical study reports from the industry and regulators has improved since 2010 [17], access is often slow and inefficient [18]. In May 2014, one of us (TJ) requested the HPV vaccine clinical study reports from EMA. The request was initially declined by EMA because “disclosure would undermine the protection of commercial interests”. TJ successfully appealed, but EMA has only released 18 incomplete clinical study reports more than 3 years after the initial request (as of 1 July 2017), which is only half of the clinical study reports included in the EMA review (18/38) [30] and a fifth of our indexed randomized clinical industry trials (18/96).

Conclusion

Authors of systematic reviews may recognize and reduce reporting bias if they adopt our index process of study programmes. We believe we came close to indexing complete HPV vaccine study programmes, but only one of the four HPV vaccine manufacturers provided information for our index and a fifth of the index could not be cross-verified. However, we indexed larger study programmes than those listed by major regulators (i.e., the EMA and FDA that based their HPV vaccine approvals on only half of the available trials). To reduce reporting bias in systematic reviews, we advocate the registration and publication of all studies and data in the public domain and that non-industry studies register and adhere to reporting guidelines similar to the ICH.

Abbreviations

- CTD: Common technical document
- DAP: Drug approval package
- EMA: European medicines agency
- EPAR: European public assessment report
- FDA: Food and Drug Administration
- GSK: GlaxoSmithKline plc
- HPV: Human papillomavirus
- ICH: International conference on harmonization of technical requirements for registration of pharmaceuticals for human use
- MAA: Marketing authorization application
- MAH: Market authorization holder
- Merck: Merck Sharp & Dohme (or Merck & Co., Inc.)
- NCT: National clinical trial

References

McGauran N, Wieseler B, Kreis J, Schüler Y-B, Kölsch H, Kaiser T. Reporting bias in medical research - a narrative review. Trials. 2010;11:37.

Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.Gov: cross sectional analysis. BMJ. 2012 Jan 3;344:d7292.

Als-Nielsen B, Chen W, Gluud C, Kjaergard LL. Association of funding and conclusions in randomized drug trials: a reflection of treatment effect or adverse events? JAMA. 2003;290(7):921–8.

Eyding D, Lelgemann M, Grouven U, Härter M, Kromp M, Kaiser T, et al. Reboxetine for acute treatment of major depression: systematic review and meta-analysis of published and unpublished placebo and selective serotonin reuptake inhibitor controlled trials. BMJ. 2010;341:c4737.

Lundh A, Lexchin J, Mintzes B, Schroll JB, Bero L. Industry sponsorship and research outcome. In: Cochrane database of systematic reviews [internet]. John Wiley & Sons, Ltd; 2017 [cited 3 Apr 2017]. Available from: http://onlinelibrary.wiley.com/doi/10.1002/14651858.MR000033.pub3/abstract

Wieseler B, Kerekes MF, Vervoelgyi V, McGauran N, Kaiser T. Impact of document type on reporting quality of clinical drug trials: a comparison of registry reports, clinical study reports, and journal publications. BMJ. 2012;344:d8141.

Maund E, Tendal B, Hróbjartsson A, Jørgensen KJ, Lundh A, Schroll J, et al. Benefits and harms in clinical trials of duloxetine for treatment of major depressive disorder: comparison of clinical study reports, trial registries, and publications. BMJ. 2014;348:g3510.

Saini P, Loke YK, Gamble C, Altman DG, Williamson PR, Kirkham JJ. Selective reporting bias of harm outcomes within studies: findings from a cohort of systematic reviews. BMJ. 2014;349:g6501.

Rodgers MA, Brown JVE, Heirs MK, Higgins JPT, Mannion RJ, Simmonds MC, et al. Reporting of industry funded study outcome data: comparison of confidential and published data on the safety and effectiveness of rhBMP-2 for spinal fusion. BMJ. 2013;346:f3981.

Hughes S, Cohen D, Jaggi R. Differences in reporting serious adverse events in industry sponsored clinical trial registries and journal articles on antidepressant and antipsychotic drugs: a cross-sectional study. BMJ Open. 2014;4(7):e005535.

Belknap SM, Aslam I, Kiguradze T, Temps WH, Yarnold PR, Cashy J, et al. Adverse event reporting in clinical trials of Finasteride for androgenic alopecia: a meta-analysis. JAMA Dermatol. 2015;151(6):600–6.

Hodkinson A, Gamble C, Smith CT. Reporting of harms outcomes: a comparison of journal publications with unpublished clinical study reports of orlistat trials. Trials. 2016;17(1):207.

Schroll JB, Penninga EI, Gøtzsche PC. Assessment of adverse events in protocols, clinical study reports, and published papers of trials of Orlistat: a document analysis. PLoS Med [Internet]. 2016 [cited 14 Nov 2016];13(8). Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4987052/

Golder S, Loke YK, Wright K, Norman G. Reporting of adverse events in published and unpublished studies of health care interventions: a systematic review. PLoS Med. 2016 Sep;13(9):e1002127.

Jefferson T, Jones MA, Doshi P, Del Mar CB, Hama R, Thompson MJ, et al. Neuraminidase inhibitors for preventing and treating influenza in adults and children. In: Cochrane database of systematic reviews [internet]. John Wiley & Sons, Ltd; 2014 [cited 14 Nov 2016]. Available from: http://onlinelibrary.wiley.com/doi/10.1002/14651858.CD008965.pub4/abstract

Sharma T, Guski LS, Freund N, Gøtzsche PC. Suicidality and aggression during antidepressant treatment: systematic review and meta-analyses based on clinical study reports. BMJ. 2016;352:i65.

Gøtzsche PC, Jørgensen AW. Opening up data at the European medicines agency. BMJ. 2011;342:d2686.

Doshi P, Jefferson T. Open data 5 years on: a case series of 12 freedom of information requests for regulatory data to the European medicines agency. Trials [Internet]. 2016 [cited 14 Nov 2016];17. Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4750208/

Jefferson T, Doshi P, Thompson M, Heneghan C. Ensuring safe and effective drugs: who can do what it takes? BMJ. 2011;342:c7258.

Gøtzsche PC. Why we need easy access to all data from all clinical trials and how to accomplish it. Trials. 2011;12:249.

van Driel ML, De Sutter A, De Maeseneer J, Christiaens T. Searching for unpublished trials in Cochrane reviews may not be worth the effort. J Clin Epidemiol. 2009 Aug;62(8):838–844.e3.

Golder S, Loke YK, Wright K, Sterrantino C. Most systematic reviews of adverse effects did not include unpublished data. J Clin Epidemiol. 2016;77:125–33.

Baudard M, Yavchitz A, Ravaud P, Perrodeau E, Boutron I. Impact of searching clinical trial registries in systematic reviews of pharmaceutical treatments: methodological systematic review and reanalysis of meta-analyses. BMJ. 2017;356:j448.

Jones CW, Keil LG, Weaver MA, Platts-Mills TF. Clinical trials registries are under-utilized in the conduct of systematic reviews: a cross-sectional analysis. Syst Rev. 2014;3:126.

Potthast R, Vervölgyi V, McGauran N, Kerekes MF, Wieseler B, Kaiser T. Impact of inclusion of industry trial results registries as an information source for systematic reviews. PloS One. 2014;9(4):e92067.

van Enst WA, Scholten RJ, Hooft L. Identification of additional trials in prospective trial registers for Cochrane systematic reviews. PLOS One. 2012;7(8):e42812.

Jørgensen L, Gøtzsche PC, Jefferson T. PROSPERO: Benefits and harms of the human papillomavirus vaccines: systematic review of industry and nonindustry study reports [Internet]. Available from: Web: http://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42017056093. PDF: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20170030.pdf

Paavonen J, Naud P, Salmerón J, Wheeler CM, Chow S-N, Apter D, et al. Efficacy of human papillomavirus (HPV)-16/18 AS04-adjuvanted vaccine against cervical infection and precancer caused by oncogenic HPV types (PATRICIA): final analysis of a double-blind, randomized study in young women. Lancet. 2009;374(9686):301–314.

Interim Clinical Study Report for Study 580299/008 (HPV-008) [Internet]. Available from: https://www.gsk-clinicalstudyregister.com/files2/gsk-580299-008-clinical-study-report-redact.pdf

European Medicines Agency. Assessment report review under article 20 of regulation (EC) no 726/2004 human papillomavirus (HPV) vaccines [internet]. [cited 14 Nov 2016 ]. Available from: http://www.ema.europa.eu/docs/en_GB/document_library/Referrals_document/HPV_vaccines_20/Opinion_provided_by_Committee_for_Medicinal_Products_for_Human_Use/WC500197129.pdf

Jefferson T, Jørgensen L. Human papillomavirus vaccines, complex regional pain syndrome, postural orthostatic tachycardia syndrome, and autonomic dysfunction – a review of the regulatory evidence from the European Medicines Agency. Indian J Med Ethics. 2016 Nov;2(1)(NS):30. ISSN 0975-5691. Accessed 13 Jan 2018.

Lenzer J. FDA is incapable of protecting US “against another Vioxx”. BMJ. 2004;329(7477):1253.

Gøtzsche PC. Deadly medicines and organised crime: how big pharma has corrupted health care. London: Radcliffe Publishing; 2013.

Cohen D. Rosiglitazone: what went wrong? BMJ. 2010;341:c4848.

Acknowledgements

We would like to thank EMA and GlaxoSmithKline for providing information for our index.

Funding

Our study received no funding or grant other than salary to the authors and associated expenses provided by the Nordic Cochrane Centre.

Availability of data and materials

All raw data underlying our study is available upon request.

Author information

Contributions

LJ and TJ contributed to the conception of the study and its design, collection and assembly of data, analysis and interpretation of the data, drafting of the article, critical revision of the article for important intellectual content, and final approval of the article. PCG contributed to the conception of the study, critical revision of the article for important intellectual content, and final approval of the article. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

LJ and PCG have no conflicts of interest to declare. TJ is occasionally interviewed by market research companies about phase I or II pharmaceutical products. In 2011–14, TJ acted as an expert witness in a litigation case related to the antiviral oseltamivir, in two litigation cases on potential vaccine-related damage and in a labour case on influenza vaccines in healthcare workers in Canada. He has acted as a consultant for Roche (1997–99), GlaxoSmithKline (2001–2), Sanofi-Synthelabo (2003) and IMS Health (2013). In 2014–16, TJ was a member of three advisory boards for Boehringer Ingelheim and is holder of a Cochrane Methods Innovations Fund grant to develop guidance on the use of regulatory data in Cochrane reviews. TJ was a member of an independent data monitoring committee for a Sanofi Pasteur clinical trial on an influenza vaccine. TJ was a co-signatory of the Nordic Cochrane Centre’s complaint to EMA over maladministration at EMA in relation to the investigation of alleged harms of HPV vaccines and consequent complaints to the European Ombudsman. TJ is also sole author of a complaint to the European Ombudsman over slowness and redactions of clinical study reports of HPV vaccine released by EMA.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Index of the HPV vaccines clinical studies: Search strategy for identifying the HPV vaccines industry study programmes and non-industry funded clinical studies. (DOC 1294 kb)

Additional file 2:

Index of the HPV vaccines clinical studies: Correspondence with the HPV vaccine manufacturers for the assessment of the accuracy of our indexed industry study programmes. (DOC 151 kb)

Additional file 3:

Index of the HPV vaccines clinical studies: Indexes of the identified industry study programmes and non-industry funded clinical studies and a list of the identified corresponding journal publications. (DOC 659 kb)

Additional file 4:

Prisma 2009 checklist. (DOCX 147 kb)

Additional file 5:

Data sharing agreement with GlaxoSmithKline.(PDF 301 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Jørgensen, L., Gøtzsche, P.C. & Jefferson, T. Index of the human papillomavirus (HPV) vaccine industry clinical study programmes and non-industry funded studies: a necessary basis to address reporting bias in a systematic review. Syst Rev 7, 8 (2018). https://doi.org/10.1186/s13643-018-0675-z

DOI: https://doi.org/10.1186/s13643-018-0675-z