Abstract

Background

To our knowledge, no study has compared pooled estimates, such as meta-analyses, from corresponding study documents of the same intervention. In this study, we compared meta-analyses of human papillomavirus (HPV) vaccine trial data from clinical study reports with trial data from corresponding trial register entries and journal publications.

Methods

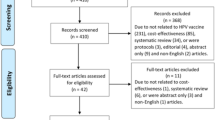

We obtained clinical study reports from the European Medicines Agency and GlaxoSmithKline, corresponding trial register entries from ClinicalTrials.gov and corresponding journal publications via the Cochrane Collaboration’s Central Register of Controlled Trials, Google Scholar and PubMed. Two researchers extracted data. We compared reporting of trial design aspects and 20 prespecified benefit and harm outcomes extracted from each study document type. Risk ratios were calculated with the random effects inverse variance method.

Results

We included study documents from 22 randomized clinical trials and 2 follow-up studies with 95,670 healthy participants and non-HPV vaccine comparators (placebo, HPV vaccine adjuvants and hepatitis vaccines). We obtained 24 clinical study reports, 24 corresponding trial register entries and 23 corresponding journal publications; the median numbers of pages were 1351 (range 357 to 11,456), 32 (range 11 to 167) and 11 (range 7 to 83), respectively. All 24 (100%) clinical study reports, no (0%) trial register entries and 9 (39%) journal publications reported on all six major design-related biases defined by the Cochrane Handbook version 2011. The clinical study reports reported more inclusion criteria (mean 7.0 vs. 5.8 [trial register entries] and 4.0 [journal publications]) and exclusion criteria (mean 17.8 vs. 11.7 and 5.0) than the other document types, but fewer primary outcomes (mean 1.6 vs. 3.5 and 1.2) and secondary outcomes (mean 8.8 vs. 13.0 and 3.2) than the trial register entries. Results were posted for 19 trial register entries (79%). Compared to the clinical study reports, the trial register entries and journal publications contained 3% and 44% of the data points for the seven assessed benefit outcomes (6879 vs. 230 and 3015) and 38% and 31% of the data points for the 13 assessed harm outcomes (167,550 vs. 64,143 and 51,899). No meta-analysis estimate differed significantly when we compared the pooled risk ratio estimates from corresponding study documents as ratios of relative risks.

Conclusion

There were no significant differences in the meta-analysis estimates of the assessed outcomes from corresponding study documents. The clinical study reports were the superior study documents in terms of the quantity and the quality of the data they contained and should be used as primary data sources in systematic reviews.

Systematic review registration

The protocol for our comparison is registered on PROSPERO (CRD42017056093) as an addendum to our systematic review of the benefits and harms of the HPV vaccines: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20180320.pdf. Our systematic review protocol was registered on PROSPERO in January 2017: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20170030.pdf. Two protocol amendments were registered on PROSPERO in November 2017: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20171116.pdf. Our index of the HPV vaccine studies was published in Systematic Reviews in January 2018: https://doi.org/10.1186/s13643-018-0675-z. A description of the challenges of obtaining the data was published in September 2018: https://doi.org/10.1136/bmj.k3694.


Background

Since 1995, the pharmaceutical industry has written structured clinical study reports of randomized clinical trials following international guidelines to document their products' benefits and harms when applying for marketing approval [1]. Clinical study reports are usually confidential documents but can be requested or downloaded from the European Medicines Agency (EMA) [2], ClinicalStudyDataRequest.com (CSDR) and GlaxoSmithKline's trial register website and, in the future, possibly from the US Food and Drug Administration (FDA) [3]. Publicly available trial data mainly come from biomedical journal publications and trial register entries such as those on ClinicalTrials.gov. The intention of ClinicalTrials.gov is that all studies post all results within 12 months of trial completion and that sponsors who do not are fined. According to fdaaa.trialstracker.net, 32% of the trials it tracks on ClinicalTrials.gov have no results posted, and no fines have been issued. Clinical study reports usually include all prespecified data or describe amendments to them. There can be important differences in results between published [4] and unpublished [5] versions of corresponding study documents. Clinical study reports include highly detailed information on all aspects of a trial [6] and are on average about 2000 pages long [7], but it can be difficult to obtain complete and unredacted clinical study reports [8].

We carried out a systematic review of the clinical study reports of the human papillomavirus (HPV) vaccines [9], based on an index we constructed of 206 HPV vaccine studies [10]. As of July 2017, 62% (92/149) of the completed studies had not been published in journals, and 48% (71/147) of the completed studies on ClinicalTrials.gov had no results posted [10]. Systematic reviewers often use only journal publications and trial registers for their reviews, which may increase the risk of using a data set influenced by selective outcome reporting.

To our knowledge, no study has compared pooled estimates, such as meta-analyses, from corresponding study documents of the same intervention. Our primary aim in this study was to compare meta-analyses of HPV vaccine data from clinical study reports with data from corresponding trial register entries and journal publications. Our secondary aim was to compare the reporting of study design aspects of the corresponding study documents.

Methods

We compared corresponding HPV vaccine study documents of clinical study reports, trial register entries and journal publications to investigate the degree of reporting bias for prespecified outcomes and the reporting of trial design aspects; see our protocol on PROSPERO [11] (registered as ‘Protocol amendment no. 3’ for our systematic review of the HPV vaccines [9]).

Clinical study reports were obtained from EMA and GlaxoSmithKline [9]. We identified the clinical study reports' corresponding trial register entries on ClinicalTrials.gov and corresponding primary journal publications from our published index of the HPV vaccine studies. The search strings used to identify the studies are available in the index publication [10]. We assessed all identified journal publications for a study (including supplementary documents and errata) for eligible information and chose the primary publication that corresponded to the clinical study report for our comparison. We did not check for eligible information in additional trial registers (such as the EU Clinical Trials Register) or letters to the editor.

Data extraction and comparison of the study documents were carried out by two researchers (LJ extracted the data; TJ checked the extractions; and PCG arbitrated). For each study document, the following data were compared: study ID, number of pages, date of document, time from study completion to publication in a journal, result availability, protocol availability (including pre-specification of outcomes and inclusion of a statistical analysis plan), reporting of PICO criteria (participants, interventions, comparisons and outcomes) and reporting of six major design-related biases defined by the Cochrane Handbook (version 2011) for the Cochrane risk of bias tool [12] (random sequence generation, allocation concealment, blinding of outcome assessors, blinding of personnel, blinding of participants and loss to follow-up). We collected these data, as they are important to evaluate a study’s internal and external validity. We did not include the Cochrane risk of bias tool domain ‘selective outcome reporting’, since we compared this domain quantitatively between corresponding documents.

For each study document, we extracted and compared data on the outcomes we assessed in our systematic review [9]. As our review contained 166 meta-analyses, we compared only 20 outcomes: those we considered most clinically relevant and, noted in parentheses below, those that were statistically significant (p ≤ 0.05). Benefit outcomes included all-cause mortality, HPV-related cancer mortality, HPV-related cancer incidence, HPV-related carcinoma in situ, HPV-related moderate intraepithelial neoplasia, HPV-related moderate intraepithelial neoplasia or worse and HPV-related treatment procedures. Harm outcomes included fatal harms, serious harms (including serious harms judged as 'definitely associated' with postural orthostatic tachycardia syndrome [POTS] or complex regional pain syndrome [CRPS], two post hoc exploratory analyses described in our systematic review protocol amendment [13], and the serious nervous system disorders classified in this Medical Dictionary for Regulatory Activities [MedDRA] system organ class), new-onset diseases (including back pain, vaginal infection and the vascular disorders classified in this MedDRA system organ class) and general harms (including fatigue, headache and myalgia). Histological outcomes were assessed irrespective of the HPV types involved.

The most aggregated data account (participants with events over the total number of participants) was used for the meta-analyses, and the most detailed harm account (MedDRA preferred terms) was used for event comparisons. For example, if harms were registered separately per harm, we counted the separate harms and summed them to a total number of harms. For all GlaxoSmithKline clinical study reports, and for serious harms in Merck clinical study reports, we pooled MedDRA preferred terms within their respective system organ classes. As a result, a participant could be counted more than once in an analysis (e.g. a participant who experienced both serious 'headache' and serious 'dizziness' would be counted twice in the system organ class analysis of serious nervous system disorders); we therefore consider the MedDRA system organ class analyses exploratory.
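The difference between counting events and counting participants in a system organ class analysis can be illustrated with a minimal Python sketch. The records, participant IDs and the helper `soc_counts` are hypothetical and are not data from the included trials; the sketch only shows why summing preferred terms within a system organ class can count the same participant (here P01) more than once.

```python
from collections import defaultdict

# Hypothetical serious harm records: (participant ID, MedDRA preferred term, system organ class).
records = [
    ("P01", "Headache", "Nervous system disorders"),
    ("P01", "Dizziness", "Nervous system disorders"),
    ("P02", "Syncope", "Nervous system disorders"),
    ("P03", "Back pain", "Musculoskeletal and connective tissue disorders"),
]

def soc_counts(records):
    """Return {system organ class: (summed preferred-term events, distinct participants)}."""
    events = defaultdict(int)
    participants = defaultdict(set)
    for pid, _term, soc in records:
        events[soc] += 1            # every preferred term adds one event
        participants[soc].add(pid)  # a participant is only counted once per class
    return {soc: (events[soc], len(participants[soc])) for soc in events}

counts = soc_counts(records)
print(counts["Nervous system disorders"])  # (3, 2): three events in two participants
```

Because a meta-analysis of risk ratios assumes each participant contributes at most one event per outcome, summed preferred-term counts such as the 3 above overstate the number of participants with events.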

Merck Sharp & Dohme did not provide a formal definition for its new-onset disease category—new medical history—but described the category as ‘all new reported diagnoses’ in the clinical study report of trial V501-019. Although ‘new medical history’ was not explicitly mentioned in the trial register entries and journal publications, we included eligible new reported diagnoses not reported as serious or general harms in this category.

For our meta-analyses, we used the intention to treat principle. Risk ratios (RRs) were calculated with the random effects inverse variance method. Random effects estimates were compared to fixed effect estimates, as the random effects method may give small trials undue weight if there is considerable heterogeneity between trials [12].
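As a minimal sketch of the inverse variance approach, the Python functions below pool log risk ratios with fixed effect weights and, optionally, DerSimonian and Laird random effects weights, which is the random effects estimator used for this method in Cochrane's RevMan 5 software. The function names, the 0.5 continuity correction for zero-event arms and the example counts are assumptions for illustration; this is not the software used in this study.

```python
import math

def log_rr_and_var(events_vacc, n_vacc, events_ctrl, n_ctrl):
    """Log risk ratio and its variance for one trial.

    Assumption: if either arm has zero events, 0.5 is added to every cell
    (events and non-events), so each arm total grows by 1.
    """
    a, n1, c, n2 = events_vacc, n_vacc, events_ctrl, n_ctrl
    if a == 0 or c == 0:
        a, c, n1, n2 = a + 0.5, c + 0.5, n1 + 1, n2 + 1
    log_rr = math.log((a / n1) / (c / n2))
    var = 1 / a - 1 / n1 + 1 / c - 1 / n2
    return log_rr, var

def pool_risk_ratios(trials, random_effects=True, z=1.96):
    """Inverse variance pooled risk ratio with a 95% confidence interval.

    trials: list of (events_vacc, n_vacc, events_ctrl, n_ctrl) tuples.
    random_effects=True adds the DerSimonian-Laird between-trial variance
    (tau^2) to each trial's variance; False gives the fixed effect estimate.
    """
    ys, vs = zip(*(log_rr_and_var(*t) for t in trials))
    w = [1 / v for v in vs]
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
    tau2 = 0.0
    if random_effects and len(ys) > 1:
        q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))  # Cochran's Q
        c_dl = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
        tau2 = max(0.0, (q - (len(ys) - 1)) / c_dl)
    w_star = [1 / (v + tau2) for v in vs]
    y = sum(wi * yi for wi, yi in zip(w_star, ys)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return math.exp(y), math.exp(y - z * se), math.exp(y + z * se)

# Hypothetical trials (not data from the included studies)
trials = [(30, 1000, 45, 1000), (12, 500, 20, 500), (5, 300, 4, 300)]
print("random effects:", pool_risk_ratios(trials))
print("fixed effect:  ", pool_risk_ratios(trials, random_effects=False))
```

Because tau² is never negative, the random effects confidence interval is always at least as wide as the fixed effect interval, and the two coincide when there is no between-trial heterogeneity.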

Results

We included study documents from 22 randomized clinical trials and 2 follow-up studies and obtained 24 clinical study reports, 24 corresponding trial register entries and 23 corresponding primary journal publications (for the remaining study, HPV-003 with 61 participants, the manufacturer confirmed that no journal publication had been published [10]). See Additional file 1 for our study's PRISMA statement.

Characteristics of included studies

The 24 included studies investigated four different HPV vaccines: Cervarix™, Gardasil™, Gardasil 9™ and an HPV type 16 vaccine, and included 95,670 healthy participants (79,102 females and 16,568 males) aged 8 to 72 years. One (4%) study used a saline placebo comparator, but its participants had been HPV vaccinated before randomization. Fourteen (58%) studies used vaccine adjuvants as comparators: amorphous aluminium hydroxyphosphate sulphate (AAHS), aluminium hydroxide (Al[OH]3) or carrier solution. Nine (38%) studies used hepatitis vaccine comparators: Aimmugen™, Engerix™, Havrix™ or Twinrix Paediatric™.

Characteristics of included study documents

Nearly all study documents (70/72) reported data from study start to completion, except for the clinical study report and journal publication of study HPV-040 that described interim analyses. The median number of pages in the clinical study reports was 1351 (range 357 to 11,456) (see Table 1). For four studies (HPV-008, HPV-013, HPV-015 and HPV-040), we obtained clinical study reports from both EMA and GlaxoSmithKline (we did not account for duplicate pages). EMA's clinical study reports were only 22% of the length of the corresponding GlaxoSmithKline reports (5316 vs. 23,645 pages). After conversion to PDF, the median number of pages in the trial register entries was 32 (range 11 to 167). Results were posted on ClinicalTrials.gov for 19 studies (79%) but were not posted for 5 studies: HPV-001, HPV-003, HPV-013, HPV-033 and HPV-035. The median number of pages in the journal publications, including supplementary appendices, was 11 (range 7 to 83). Twelve (52%) journal publications contained supplementary appendices. The mean time from study completion to journal publication was 2.3 years (see Table 1).

Inclusion of protocols

Ten clinical study reports (42%), no trial register entries (0%) and 2 journal publications (9%) included protocols. All 12 protocols listed prespecified outcomes and contained statistical analysis plans (see Table 2). The GlaxoSmithKline trial register entries contained protocol hyperlinks to ClinicalStudyDataRequest.com, but the protocols were not freely available and had to be requested. We did not request the protocols, as this required us to sign a data sharing agreement, which would restrict our ability to publish our results.

Reporting of major design-related biases

All 24 (100%) clinical study reports, no (0%) trial register entries and 9 (39%) journal publications reported explicitly on all six domains to be assessed for bias according to the Cochrane Handbook version 2011 [12] (see Table 2).

Reporting of PICO criteria

Compared to the trial register entries and journal publications, the clinical study reports reported on average more inclusion criteria (mean 7.0 vs. 5.8 and 4.0, respectively) and exclusion criteria (mean 17.8 vs. 11.7 and 5.0) (see Table 2). For example, while 20 (83%) clinical study reports reported that participants with immunological disorders were excluded, only 12 (50%) trial register entries and 9 (39%) journal publications reported this criterion. All clinical study reports and journal publications specified the contents of the intervention and comparator (including antigens, adjuvants and doses), whereas only 18 (75%) and 8 (33%) trial register entries specified the intervention and comparator contents, respectively. Active comparators (AAHS, Al[OH]3 and carrier solution) were referred to as 'placebos' in 14 (58%) clinical study reports, 13 (54%) trial register entries and 17 (74%) journal publications. The mean number of reported primary outcomes was higher in the trial register entries (3.5) than in the clinical study reports (1.6) and the journal publications (1.2). This was also the case for secondary outcomes (13.0 vs. 8.8 and 3.2) (see Table 2).

Meta-analyses of benefits

Of our seven prespecified benefit outcomes from the clinical study reports, the trial register entries included data for 2 (29%) and the journal publications for 6 (86%) (see Table 3 and Additional file 2). Compared to the clinical study reports, the trial register entries and journal publications contained 3% and 44% of the assessed benefit data points (6879 vs. 230 and 3015). Due to the lack of data in the trial register entries and journal publications, it was possible to calculate ratios of relative risks for only about half (10/21) of the prespecified benefit comparisons (see Table 4). The meta-analysis risk ratio estimates from corresponding study documents did not differ much (see Table 3), and none of the ratios of relative risks that could be calculated was statistically significant (see Table 4).
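A ratio of relative risks compares two pooled estimates on the log scale. The sketch below shows one common way to do this, a z-test of the difference between two log risk ratios with standard errors recovered from their 95% confidence intervals; it is not necessarily the exact procedure used in this study. The function names and example values are hypothetical, and the sketch assumes the two estimates are independent. Because corresponding study documents describe the same participants, that assumption is an approximation; with positively correlated estimates it overstates the standard error and makes the test conservative.

```python
import math
from statistics import NormalDist

def se_from_ci(lower, upper, z=1.96):
    """Standard error of a log risk ratio recovered from its 95% confidence interval."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

def ratio_of_risk_ratios(rr1, ci1, rr2, ci2, z=1.96):
    """Compare two pooled risk ratios (e.g. clinical study report vs. journal publication).

    Returns RR1/RR2, its 95% confidence interval and a two-sided p value from a
    z-test on the log scale. Assumes the two estimates are independent.
    """
    log_rrr = math.log(rr1) - math.log(rr2)
    se = math.hypot(se_from_ci(*ci1, z=z), se_from_ci(*ci2, z=z))
    p = 2 * (1 - NormalDist().cdf(abs(log_rrr) / se))
    ci = (math.exp(log_rrr - z * se), math.exp(log_rrr + z * se))
    return math.exp(log_rrr), ci, p

# Hypothetical pooled estimates for one outcome from two document types
print(ratio_of_risk_ratios(0.80, (0.60, 1.07), 0.85, (0.62, 1.16)))
```

A ratio of relative risks of 1 means the two document types give the same pooled estimate; a confidence interval that includes 1 corresponds to a non-significant difference.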

Meta-analyses of harms

Of our 13 prespecified harm outcomes from the clinical study reports, the trial register entries included data for 11 (85%) and the journal publications for 10 (77%) (see Tables 3 and 4 and Additional file 2). Compared to the clinical study reports, the trial register entries and journal publications contained 38% and 31% of the assessed harm data points (167,550 vs. 64,143 and 51,899). It was possible to calculate ratios of relative risks for 80% (31/39) of the prespecified harm comparisons (see Table 4). The meta-analysis risk ratio estimates did not differ much (see Table 3), and none of the ratios of relative risks that could be calculated was statistically significant (see Table 4).

Random effects vs. fixed effect analyses

We found similar results with the fixed effect model but with narrower confidence intervals, as the between-trial variance is not included in this model.

Subgroup analyses

When we excluded the studies that had no results posted on their corresponding trial register entries (HPV-001, HPV-003, HPV-013, HPV-033 and HPV-035) from the clinical study report meta-analyses, the results did not differ significantly.

Study document differences

There were substantial differences between the amounts of data in the three study document types (see Figs. 1, 2, 3, 4 and 5). For example, the journal publication for V501-013 included more cases of HPV-related moderate intraepithelial neoplasia or worse than its clinical study report (417 vs. 370; see Fig. 1). The trial register entry for HPV-015 reported fewer HPV-related treatment procedures than the clinical study report (160 vs. 198; see Fig. 2).

The trial register entry for HPV-040 reported 10 deaths (five in each group), whereas the clinical study report reported 'no deaths considered as possibly related to vaccination according to the investigator (up to 30 April 2011)', and the journal publication reported 'No deaths had been reported at the time of this interim analysis (up to April 2011)'. The journal publication of HPV-008 contained only an aggregate total number of serious harms (1400), whereas the corresponding clinical study report contained all individual serious harms classified with MedDRA preferred terms (2043). Only the trial register entries and journal publications for HPV-023 and HPV-032 included serious harms classified with MedDRA preferred terms (see Fig. 3).

No journal publication of the Merck Sharp & Dohme studies (V501-005 to V503-006) included the company's new-onset disease category, 'new medical history'. Although 'new medical history' was not mentioned as an explicit category, the trial register entries reported fewer new diagnoses than the clinical study reports (e.g. for V501-015: 329 vs. 35,546; see Fig. 4). Only the trial register entry of HPV-032 and the journal publication of V501-013 included general harms (see Fig. 5).

Discussion

There were on average 50 and 121 times more pages in the clinical study reports than in their corresponding trial register entries and journal publications, respectively. This was likely a main reason why the clinical study reports were superior at reporting trial design aspects. If our systematic review of clinical study reports [9] had relied on trial register entries or journal publications, it would have had no data for about a quarter of our prespecified outcomes across the two document types (11/40). Although the inclusion of clinical study reports led to substantially more eligible and available data, the direction of the available results did not change when we compared the risk ratios of corresponding meta-analyses as ratios of relative risks. This may have several explanations. First, GlaxoSmithKline might be more transparent than other pharmaceutical companies [36], so its corresponding study documents could be more consistent than those of other companies [37,38,39,40]. Second, we used the random effects model, which gives wider confidence intervals than the fixed effect model when there is between-trial heterogeneity; more risk ratios had narrower confidence intervals with the fixed effect model. Third, there were low event numbers for several outcomes; differences in low event numbers may be overestimated when using risk ratios [12]. Finally, the studies generally lacked placebo controls and had incomplete reporting of harms [8], and the trial register entries and journal publications included only a small share of the assessed data points (3% to 44%) compared to the clinical study reports. This may have biased some of our comparisons towards false-negative results and led to an underestimation of the harms caused by the HPV vaccines. Major study design features, such as the use of active comparators and the reporting format of harms, are not affected by the number of pages in a study document, but the much greater detail in clinical study reports allows a more complete understanding that might affect conclusions. We have expanded on the issues of the lack of placebo controls and incomplete harms reporting elsewhere [8].

Strengths and limitations

Our comparison included 71 of 72 primary study documents (all except a journal publication for trial HPV-003, with 61 participants, which does not exist). Nearly all corresponding study documents (70/72) reported data from initiation to completion. To our knowledge, our study is the first to compare meta-analyses based on data from different study document types. Most previous comparison studies have focused on harms [37,38,39,40]; we looked at both benefits and harms.

We did not obtain a single complete and unredacted clinical study report, so the included reports are less useful than complete and unredacted ones. We did not prespecify comparisons of clinical study reports obtained from different sources (i.e. EMA vs. GlaxoSmithKline), and we only prespecified ClinicalTrials.gov register entries for inclusion, as these are intended to have detailed summaries uploaded within 12 months of a study’s completion. We considered it appropriate to only compare a clinical study report with a single corresponding primary register entry and a single corresponding primary journal publication. A comparison that included all published information would become very complex and, in our view, less useful for researchers conducting systematic reviews.

As the clinical study reports were incomplete and often redacted, some eligible data may have been left out; we have described these issues elsewhere [8]. Cervarix™ clinical study reports obtained from EMA were about a fifth of the length of the reports we downloaded from GlaxoSmithKline's trial register. Merck Sharp & Dohme clinical study reports (of Gardasil™, Gardasil 9™ and the HPV type 16 vaccine) were obtained only from EMA and consisted of 9588 pages for seven trials. If these EMA versions were similarly shortened, potentially about 40,000 pages remain undisclosed for our comparison of Merck Sharp & Dohme clinical study reports [8].
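The figure of about 40,000 pages is a rough proportional estimate. Assuming, as an approximation rather than a measured value, that the EMA versions of the Merck Sharp & Dohme reports are about a fifth of the full length, like the Cervarix™ reports:

```latex
\text{full length} \approx 5 \times 9588 = 47\,940 \ \text{pages}, \qquad
\text{undisclosed} \approx 47\,940 - 9588 = 38\,352 \approx 40\,000 \ \text{pages}
```

Using the observed Cervarix™ ratio of 22% instead of a fifth gives a lower estimate of about 34,000 undisclosed pages (9588 / 0.22 − 9588), so the figure should be read as an order of magnitude.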

Only 12 of 71 study documents contained the study protocol. We believe that all study publications should include the study protocol, as readers otherwise are less able to evaluate whether selective outcome reporting, protocol amendments or post hoc analyses were present in the study publication.

We had prespecified a comparison of meta-analyses of per-protocol and intention to treat populations [11], but this was not possible. In the trial register entries and journal publications, per-protocol benefit outcomes were not reported irrespective of HPV type, and harm results were not reported for per-protocol populations. Differences might have been more marked for these comparisons. For example, the journal publication for HPV-015 stated that 'Few cases of CIN2+ (moderate cervical intraepithelial neoplasia or worse) were recorded' for the per-protocol population for CIN2+ related to HPV types 16 and 18 (25 vs. 34), but the corresponding clinical study report reported nearly four times as many CIN2+ cases for the intention to treat population irrespective of HPV type (103 vs. 108).

The smaller number of data points in journal publications might be due to space restrictions, but many biomedical journals allow large electronic appendices. As there is no space restriction on ClinicalTrials.gov [41], the smaller number of data points in trial register entries was likely due to incomplete reporting.

Journal publications for five studies (HPV-031, HPV-035, HPV-040, HPV-058 and HPV-069) only included figures with graphs of general harms without exact numbers. We could calculate the absolute numbers from the percentages of general harms that were provided for four of the five journal publications (HPV-035, HPV-040, HPV-058 and HPV-069).
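Recovering absolute numbers from percentages is simple arithmetic (count ≈ percentage × denominator / 100), but it is worth checking that the recovered whole number reproduces the reported percentage. The hypothetical helper below is a minimal Python sketch, assuming percentages rounded to one decimal place and a known denominator (e.g. the number of participants in the group). For large denominators, several counts can be consistent with a rounded percentage, so the recovered number is an approximation.

```python
def count_from_percentage(percent, denominator, decimals=1):
    """Recover an absolute number of participants from a reported percentage.

    Picks the nearest integer count and checks that it reproduces the reported
    percentage within the rounding precision (assumed to be `decimals` places).
    """
    count = round(percent / 100 * denominator)
    tolerance = 0.5 * 10 ** -decimals + 1e-9  # half a unit in the last reported place
    if abs(100 * count / denominator - percent) > tolerance:
        raise ValueError(f"no whole count out of {denominator} gives {percent}%")
    return count

# Hypothetical example: 33.3% of 300 participants reported fatigue
print(count_from_percentage(33.3, 300))  # 100
```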

No journal publication of Merck Sharp & Dohme studies mentioned 'new medical history', a category used in all seven Merck clinical study reports and described by Merck Sharp & Dohme as 'all new reported diagnoses'.

Some data in the trial register entries and journal publications were not comparable with our prespecified outcomes. For example, whereas the clinical study reports reported an aggregate number of participants experiencing 'solicited and unsolicited' general harms, the trial register entries and journal publications reported general harms only separately, as 'solicited' harms at the MedDRA preferred term level and as 'unsolicited' harms at the total level. We compared such data as numbers of events but excluded non-aggregated data from the meta-analyses, as including them would carry a considerable risk of counting participants more than once in an analysis (e.g. for trial register entries of GlaxoSmithKline studies, we used only 'unsolicited' events for general harms, as these were reported in aggregate). For trial register entries of Merck studies, general harms were reported in aggregate together with local harms; as we had not prespecified local harms as an outcome, we did not use these data.

Since a journal publication page usually has a higher word and character count than a clinical study report page, which in turn usually has a higher word count than a trial register PDF page, it may have been more appropriate to compare the word counts of the study documents instead of the numbers of pages. As we received clinical study reports from both EMA and GlaxoSmithKline for some studies, some pages were duplicates, and the median number of pages was therefore overestimated to some extent.

Similar studies

Our study supplements earlier studies that found reporting bias when comparing clinical study reports with trial register entries and journal publications [38,39,40,42]. Golder et al. performed a systematic review of 11 studies that compared the number of harms in corresponding published and unpublished study documents [37]. They found that a mean of 62% of the harms and 2–100% of the serious harms would have been missed if the comparison studies had relied on journal publications. Similarly, our systematic review of the clinical study reports of the HPV vaccines would have missed 62% of the assessed harm data points if it had relied on trial register entries and 69% if it had relied on journal publications. It would have included 1% more serious harms classified with MedDRA preferred terms if it had relied on trial registers but missed 26% of the serious harms classified with MedDRA preferred terms if it had been based on journal publications. It would also have missed 97% of the benefit data points if it had relied on trial register entries and 56% if it had relied on journal publications.
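The percentages found and missed follow directly from the data point totals reported in the Results. A minimal Python sketch of that arithmetic is shown below; the names `DATA_POINTS` and `coverage` are hypothetical. It treats the totals as directly comparable counts, which is a simplification: in some studies a trial register entry or journal publication reported more events than the clinical study report (e.g. V501-013), so 'missed' describes the difference in totals rather than specific data points.

```python
# Data point totals reported in the Results for clinical study reports (CSR),
# trial register entries (TRE) and journal publications (JP).
DATA_POINTS = {
    "benefit": {"CSR": 6879, "TRE": 230, "JP": 3015},
    "harm": {"CSR": 167_550, "TRE": 64_143, "JP": 51_899},
}

def coverage(kind, source):
    """Return (% of CSR data points found in `source`, % missed), rounded to whole percents."""
    totals = DATA_POINTS[kind]
    found = 100 * totals[source] / totals["CSR"]
    return round(found), round(100 - found)

for kind in DATA_POINTS:
    for source in ("TRE", "JP"):
        found, missed = coverage(kind, source)
        print(f"{kind} data points in {source}: {found}% found, {missed}% missed")
```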

We found a mean time from trial completion to journal publication of 2.3 years. This is similar to the mean of 2.2 years found by Sreekrishnan et al. in a 2018 study of 2000 neurology studies [43], but longer than the mean of 1.8 years found by Ross et al. in a 2013 study of 1336 clinical trials [44].

Conclusion

There were no significant differences in the meta-analysis estimates of the assessed outcomes from corresponding study documents. The clinical study reports were the superior study documents in terms of the quantity and the quality of the data they contained and should be used as primary data sources in systematic reviews; trial register entries and journal publications should be used concomitantly with clinical study reports, as some data may only be available in trial register entries or journal publications. A systematic review of the HPV vaccines would have had considerably less information and data included if it had relied on trial register entries and journal publications instead of clinical study reports. A full data set would be expected to be available from case report forms and individual participant data, but there are regulatory barriers that need to be lifted before independent researchers can access such data [8]. Corresponding study documents ought to use consistent terminology and provide all aggregate and individual benefits and harms data. To test our results' generalizability, we recommend that other researchers replicate and expand on our method of comparison for other interventions.

Availability of data and materials

The datasets generated and analysed during our study are available from the corresponding author (LJ) upon request.

Abbreviations

- AAHS: Amorphous aluminium hydroxyphosphate sulphate
- Al(OH)3: Aluminium hydroxide
- CRPS: Complex regional pain syndrome
- EMA: European Medicines Agency
- FDA: Food and Drug Administration
- GSK: GlaxoSmithKline
- HPV: Human papillomavirus
- ICH: International Council for Harmonisation of Technical Requirements for Pharmaceuticals for Human Use
- MedDRA: Medical Dictionary for Regulatory Activities
- Merck: Merck & Co., Inc. or Merck Sharp & Dohme outside the USA and Canada
- PICO: Participants, interventions, comparisons and outcomes
- POTS: Postural orthostatic tachycardia syndrome
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- PROSPERO: International Prospective Register of Systematic Reviews

References

Guidelines: ICH. Available from: http://www.ich.org/products/guidelines.html.

Doshi P, Jefferson T. Open data 5 years on: a case series of 12 freedom of information requests for regulatory data to the European Medicines Agency. Trials. 2016;17:78. https://doi.org/10.1186/s13063-016-1194-7.

Doshi P. FDA to begin releasing clinical study reports in pilot programme. BMJ. 2018;360:k294.

Jefferson T, Jones M, Doshi P, Del Mar C. Neuraminidase inhibitors for preventing and treating influenza in healthy adults: systematic review and meta-analysis. BMJ. 2009;339:b5106. https://doi.org/10.1136/bmj.b5106.

Jefferson T, Jones MA, Doshi P, Del Mar CB, Hama R, Thompson MJ, et al. Neuraminidase inhibitors for preventing and treating influenza in adults and children. Cochrane Database Syst Rev. 2014. https://doi.org/10.1002/14651858.CD008965.pub4.

Jefferson T, Jørgensen L. Redefining the ‘E’ in EBM. BMJ Evid Based Med. 2018;23:46–7. https://doi.org/10.1136/bmjebm-2018-110918.

Doshi P, Jefferson T. Clinical study reports of randomised controlled trials: an exploratory review of previously confidential industry reports. BMJ Open. 2013;3(2):e002496. https://doi.org/10.1136/bmjopen-2012-002496.

Jørgensen L, Doshi P, Gøtzsche PC, Jefferson T. Challenges of independent assessment of potential harms of HPV vaccines. BMJ. 2018;362:k3694. https://doi.org/10.1136/bmj.k3694.

Jørgensen L, Gøtzsche PC, Jefferson T. Benefits and harms of the human papillomavirus vaccines: systematic review of industry and non-industry study reports. PROSPERO. 2017. Available from: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20170030.pdf.

Jørgensen L, Gøtzsche PC, Jefferson T. Index of the human papillomavirus (HPV) vaccine industry clinical study programmes and non-industry funded studies: a necessary basis to address reporting bias in a systematic review. Syst Rev. 2018;7(1):8. https://doi.org/10.1186/s13643-018-0675-z.

Jørgensen L, Gøtzsche PC, Jefferson T. Protocol: Comparison of clinical study reports with trial registry reports and journal publications. PROSPERO. 2018. Available from: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20180320.pdf.

Cochrane handbook for systematic reviews of interventions. Available from: http://training.cochrane.org/handbook.

Jørgensen L, Gøtzsche PC, Jefferson T. Protocol amendment no. 1 and 2: Benefits and harms of the human papillomavirus vaccines: systematic review of industry and non-industry study reports. PROSPERO. 2017. Available from: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20171116.pdf.

Harper DM, et al. Efficacy of a bivalent L1 virus-like particle vaccine in prevention of infection with human papillomavirus types 16 and 18 in young women: a randomized controlled trial. Lancet. 2004;364(9447):1757–65. https://doi.org/10.1016/S0140-6736(04)17398-4.

Paavonen J, et al. Efficacy of human papillomavirus (HPV)-16/18 AS04-adjuvanted vaccine against cervical infection and precancer caused by oncogenic HPV types (PATRICIA): final analysis of a double-blind, randomized study in young women. Lancet. 2009;374(9686):301–14. https://doi.org/10.1016/S0140-6736(09)61248-4.

Medina DM, et al. Safety and immunogenicity of the HPV-16/18 AS04-adjuvanted vaccine: a randomized, controlled trial in adolescent girls. J Adolesc Health. 2010;46(5):414–21. https://doi.org/10.1016/j.jadohealth.2010.02.006.

Skinner S, et al. Efficacy, safety, and immunogenicity of the human papillomavirus 16/18 AS04-adjuvanted vaccine in women older than 25 years: 4-year interim follow-up of the phase 3, double-blind, randomized controlled VIVIANE study. Lancet. 2014;384(9961):2213–27. https://doi.org/10.1016/S0140-6736(14)60920-X.

Naud PS, et al. Sustained efficacy, immunogenicity, and safety of the HPV-16/18 AS04-adjuvanted vaccine. Hum Vaccin Immunother. 2014;10(8). https://doi.org/10.4161/hv.29532.

Pedersen C, et al. Randomized trial: immunogenicity and safety of coadministered human papillomavirus-16/18 AS04-adjuvanted vaccine and combined hepatitis A and B vaccine in girls. J Adolesc Health. 2012;50(1):38–46. https://doi.org/10.1016/j.jadohealth.2011.10.009.

Schmeink CE, et al. Co-administration of human papillomavirus-16/18 AS04-adjuvanted vaccine with hepatitis B vaccine: randomized study in healthy girls. Vaccine. 2011;29(49):9276–83. https://doi.org/10.1016/j.vaccine.2011.08.037.

Bhatla N, et al. Immunogenicity and safety of human papillomavirus-16/18 AS04-adjuvanted cervical cancer vaccine in healthy Indian women. J Obstet Gynaecol Res. 2010;36(1):123–32. https://doi.org/10.1111/j.1447-0756.2009.01167.x.

Konno R, et al. Efficacy of human papillomavirus type 16/18 AS04-adjuvanted vaccine in Japanese women aged 20 to 25 years: final analysis of a phase 2 double-blind, randomized controlled trial. Int J Gynecol Cancer. 2010;20(5):847–55. https://doi.org/10.1111/IGC.0b013e3181da2128.

Kim YJ, et al. Vaccination with a human papillomavirus (HPV)-16/18 AS04-adjuvanted cervical cancer vaccine in Korean girls aged 10-14 years. J Korean Med Sci. 2010;25(8):1197–204. https://doi.org/10.3346/jkms.2010.25.8.1197.

Ngan HY, et al. Human papillomavirus-16/18 AS04-adjuvanted cervical cancer vaccine: immunogenicity and safety in healthy Chinese women from Hong Kong. Hong Kong Med J. 2010;16(3):171–9.

Kim SC, et al. Human papillomavirus 16/18 AS04-adjuvanted cervical cancer vaccine: immunogenicity and safety in 15-25 years old healthy Korean women. J Gynecol Oncol. 2011;22(2):67–75. https://doi.org/10.3802/jgo.2011.22.2.67.

Lehtinen M, et al. Safety of the human papillomavirus (HPV)-16/18 AS04-adjuvanted vaccine in adolescents aged 12–15 years: Interim analysis of a large community-randomized controlled trial. Hum Vaccin Immunother. 2016;12(12):3177–85. https://doi.org/10.1080/21645515.2016.1183847.

Zhu F, et al. Immunogenicity and safety of the HPV-16/18 AS04-adjuvanted vaccine in healthy Chinese girls and women aged 9 to 45 years. Hum Vaccin Immunother. 2014;10(7):1795–806. https://doi.org/10.4161/hv.28702.

Konno R, et al. Efficacy of the human papillomavirus (HPV)-16/18 AS04-adjuvanted vaccine against cervical intraepithelial neoplasia and cervical infection in young Japanese women. Hum Vaccin Immunother. 2014;10(7):1781–94. https://doi.org/10.4161/hv.28712.

Koutsky LA, et al. A controlled trial of a human papillomavirus type 16 vaccine. N Engl J Med. 2002;347(21):1645–51. https://doi.org/10.1056/NEJMoa020586.

Garland SM, et al. Quadrivalent vaccine against human papillomavirus to prevent anogenital diseases. N Engl J Med. 2007;356(19):1928–43. https://doi.org/10.1056/NEJMoa061760.

The FUTURE II Study Group. Quadrivalent vaccine against human papillomavirus to prevent high-grade cervical lesions. N Engl J Med. 2007;356(19):1915–27. https://doi.org/10.1056/NEJMoa061741.

Reisinger KS, et al. Safety and persistent immunogenicity of a quadrivalent human papillomavirus types 6, 11, 16, 18 L1 virus-like particle vaccine in preadolescents and adolescents: a randomized controlled trial. Pediatr Infect Dis J. 2007;26(3):201–9.

Muñoz N, et al. Safety, immunogenicity, and efficacy of quadrivalent human papillomavirus (types 6, 11, 16, 18) recombinant vaccine in women aged 24-45 years: a randomized, double-blind trial. Lancet. 2009;373(9679):1949–57. https://doi.org/10.1016/S0140-6736(09)60691-7.

Giuliano AR, et al. Efficacy of quadrivalent HPV vaccine against HPV infection and disease in males. N Engl J Med. 2011;364(5):401–11. https://doi.org/10.1056/NEJMoa0909537.

Garland SM, et al. Safety and immunogenicity of a 9-valent HPV vaccine in females 12-26 years of age who previously received the quadrivalent HPV vaccine. Vaccine. 2015;33(48):6855–64. https://doi.org/10.1016/j.vaccine.2015.08.059.

Nisen P, Rockhold F. Access to patient-level data from GlaxoSmithKline Clinical Trials. N Engl J Med. 2013;369(5):475–8. https://doi.org/10.1056/NEJMsr1302541.

Golder S, Loke YK, Wright K, Norman G. Reporting of adverse events in published and unpublished studies of health care interventions: a systematic review. PLoS Med. 2016 Sep 20;13(9):e1002127. https://doi.org/10.1371/journal.pmed.1002127.

Maund E, Guski LS, Gøtzsche PC. Considering benefits and harms of duloxetine for treatment of stress urinary incontinence: a meta-analysis of clinical study reports. CMAJ. 2017 Feb 6;189(5):E194–203. https://doi.org/10.1503/cmaj.151104.

Schroll JB, Penninga EI, Gøtzsche PC. Assessment of adverse events in protocols, clinical study reports, and published papers of trials of orlistat: a document analysis. PLoS Med. 2016 Aug 16;13(8):e1002101. https://doi.org/10.1371/journal.pmed.1002101.

Sharma T, Guski LS, Freund N, Gøtzsche PC. Suicidality and aggression during antidepressant treatment: systematic review and meta-analyses based on clinical study reports. BMJ. 2016 Jan 27;352:i65. https://doi.org/10.1136/bmj.i65.

Frequently Asked Questions - ClinicalTrials.gov. Available from: https://clinicaltrials.gov/ct2/manage-recs/faq.

Hughes S, Cohen D, Jaggi R. Differences in reporting serious adverse events in industry sponsored clinical trial registries and journal articles on antidepressant and antipsychotic drugs: a cross-sectional study. BMJ Open. 2014;4(7):e005535. https://doi.org/10.1136/bmjopen-2014-005535.

Sreekrishnan A, Mampre D, Ormseth C, Miyares L, Leasure A, Ross JS, et al. Publication and dissemination of results in clinical trials of neurology. JAMA Neurol. 2018;75(7):890–1. https://doi.org/10.1001/jamaneurol.2018.0674.

Ross JS, Mocanu M, Lampropulos JF, Tse T, Krumholz HM. Time to publication among completed clinical trials. JAMA Intern Med. 2013 May 13;173(9):825–8. https://doi.org/10.1001/jamainternmed.2013.136.

Acknowledgements

We would like to thank the European Medicines Agency (EMA) for its assistance.

Funding

This study was funded by the Nordic Cochrane Centre, which is funded by the Danish government.

Author information

Contributions

LJ wrote the first draft. LJ and TJ contributed to the conception of the review, the design of the review, the collection and assembly of data, the analysis and interpretation of the data, the drafting of the article, the critical revision of the article for important intellectual content and the final approval of the article. PCG contributed to the conception of the review, the critical revision of the article for important intellectual content and the final approval of the article. All authors had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

All authors have completed the ICMJE uniform disclosure form. LJ declares no support from any organization for the submitted work, no financial relationships with any organizations that might have an interest in the submitted work and no other relationships or activities that could appear to have influenced the submitted work. PCG spoke by video link about the HPV vaccines at the IFICA conference in 2018 but received no fee or reimbursement for this. PCG and TJ were co-signatories of a complaint to the European Ombudsman on maladministration in relation to the EMA investigation of possible harms from HPV vaccines. PCG does not regard this as a competing interest. TJ was a co-recipient of a UK National Institute for Health Research grant (HTA—10/80/01 Update and amalgamation of two Cochrane Reviews: neuraminidase inhibitors for preventing and treating influenza in healthy adults and children—https://www.journalslibrary.nihr.ac.uk/programmes/hta/108001#/). TJ is also in receipt of a Cochrane Methods Innovations Fund grant to develop guidance on the use of regulatory data in Cochrane reviews. TJ is occasionally interviewed by market research companies about phase I or II pharmaceutical products. In 2011–2014, TJ acted as an expert witness in a litigation case related to the antiviral oseltamivir, in two litigation cases on potential vaccine-related damage and in a labour case on influenza vaccines in healthcare workers in Canada. He has acted as a consultant for Roche (1997-99), GSK (2001-2), Sanofi-Synthelabo (2003) and IMS Health (2013). In 2014–2016, TJ was a member of three advisory boards for Boehringer Ingelheim. TJ was a member of an independent data monitoring committee for a Sanofi Pasteur clinical trial on an influenza vaccine.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The protocol for our comparison is registered on PROSPERO as an addendum to our systematic review of the benefits and harms of the HPV vaccines: https://www.crd.york.ac.uk/PROSPEROFILES/56093_PROTOCOL_20180320.pdf

Our index of the HPV vaccine studies was published in January 2018: https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-018-0675-z

A description of our difficulties obtaining the clinical study reports is published here: https://www.bmj.com/content/362/bmj.k3694.full?ijkey=0ibTwph3m0aErxL&keytype=ref

Supplementary information

Additional file 1.

Word document: Comparison of HPV vaccine study documents: PRISMA 2009 checklist.

Additional file 2.

PDF document: Comparison of HPV vaccine study documents: meta-analyses.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Jørgensen, L., Gøtzsche, P.C. & Jefferson, T. Benefits and harms of the human papillomavirus (HPV) vaccines: comparison of trial data from clinical study reports with corresponding trial register entries and journal publications. Syst Rev 9, 42 (2020). https://doi.org/10.1186/s13643-020-01300-1

DOI: https://doi.org/10.1186/s13643-020-01300-1