Abstract

Background

Predicting outcomes of critically ill intensive care unit (ICU) patients with coronavirus disease 2019 (COVID-19) is a major challenge in avoiding futile, prolonged ICU stays.

Methods

The objective was to develop predictive survival models for patients with COVID-19 after 1-to-2 weeks in ICU. Using the COVID–ICU cohort, which prospectively collected characteristics, management, and outcomes of critically ill patients with COVID-19, we applied machine learning to develop dynamic, clinically useful models able to predict 90-day mortality from ICU data collected on day (D) 1, D7 or D14.

Results

Survival of Severely Ill COVID (SOSIC)-1, SOSIC-7, and SOSIC-14 scores were constructed with 4244, 2877, and 1349 patients, respectively, randomly assigned to development or test datasets. The three models selected 15 ICU-entry variables recorded on D1; SOSIC-7 and SOSIC-14 additionally included parameters recorded on D7 or D14, respectively. Cardiovascular, renal, and pulmonary functions on the prediction day (D7 or D14) were among the most heavily weighted inputs of both SOSIC-7 and SOSIC-14. On the test dataset, the area under the ROC curve was slightly higher for SOSIC-7 (0.80 [0.74–0.86]) than for SOSIC-1 (0.76 [0.71–0.81]) and SOSIC-14 (0.76 [0.68–0.83]). SOSIC-1 and SOSIC-7 had excellent calibration curves, and Brier scores were similar for the three models.

Conclusion

The SOSIC scores showed that entering 15 to 27 baseline and dynamic clinical parameters into an automatable XGBoost algorithm has the potential to accurately predict the likely 90-day mortality after ICU admission (sosic.shinyapps.io/shiny). Although external validation of the SOSIC scores is still needed, they provide an additional tool to strengthen decisions about life-sustaining treatments and to inform family members of the likely prognosis.


Introduction

Since January 2020, the world has been massively affected by the coronavirus disease 2019 (COVID-19) outbreak. In that context, intensive care units (ICUs) in many countries have frequently been forced to expand bed capacity. Unusually long mechanical ventilation (MV) durations and ICU stays, observed during the first wave, are among the most distinctive characteristics of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection-related acute respiratory distress syndrome (ARDS), with 90-day mortality ranging from 31 to 53% [1,2,3,4]. Although accurately predicting patients’ clinical outcomes throughout this prolonged ICU stay can be difficult, effectively identifying, at ICU admission and within the first 14 days, those at high risk of in-ICU death is crucial to guide clinical decision-making and to inform families of the likely prognosis. It could also facilitate adequate allocation of resources, including hospital beds and critical care resources, and risk-adjusted comparison of center-specific outcomes. Predicting the outcomes of critically ill patients with COVID-19 treated in the ICU is therefore a major challenge, aimed at avoiding futile, prolonged ICU stays and resource use, and at providing additional reliable information for decisions about withholding or withdrawing life-sustaining treatment, especially in disease epicenters that must triage a high-volume influx of patients.

COVID-19 survival models published to date have tried to predict the risk of clinical deterioration of acute cases [5, 6] using data from hospitalization day (D) 1 [7]. To the best of our knowledge, none has focused on predicting the survival of patients after 1-to-2 weeks in the ICU. Taking advantage of the COVID–ICU cohort database, which contains prospectively collected characteristics, management, and outcomes of patients admitted to ICUs for severe COVID-19 in France, Belgium, and Switzerland between February and May 2020 [3], we used machine learning to develop three dynamic, clinically useful models able to predict 90-day mortality from in-ICU data collected on ICU D1, D7, or D14, respectively.

Patients and methods

Study population and data collection

COVID–ICU is a multicenter, prospective cohort study conducted in 149 ICUs from 138 centers across three countries (France, Switzerland, and Belgium), launched by the Réseau Européen de recherche en Ventilation Artificielle (REVA) network. Most of the centers were in France (135/138); two were in Belgium and one in Switzerland. All consecutive patients older than 16 years admitted to the participating ICUs between February 25, 2020, and May 4, 2020, with laboratory-confirmed SARS-CoV-2 infection were included. Among the 4643 patients admitted to the ICU, 4244 had available survival status up to D90 after ICU admission.

Every day, study investigators completed a standardized electronic case report form. Details of the information collected are described elsewhere [3]. Briefly, the baseline information collected within the first 24 h after ICU admission (D1) comprised: age, sex, body mass index (BMI), Simplified Acute Physiology Score (SAPS) II [8], Sequential Organ-Failure Assessment (SOFA) score [9], comorbidities, clinical frailty-scale category [10], date of the first symptom(s), and ICU admission date. A daily expanded dataset included respiratory support devices (oxygen mask, high-flow nasal cannula, noninvasive ventilation, or invasive MV), arterial blood gases, standard laboratory parameters, and adjuvant therapies for ARDS until D90. In-ICU organ dysfunctions and complications included acute kidney failure requiring renal replacement therapy, proven thromboembolic complications, confirmed ventilator-associated pneumonia (VAP) or bacterial coinfection, and cardiac arrest. Each patient’s vital status was obtained 90 days after ICU admission.

COVID–ICU received approval from the French Intensive Care Society Ethics Committee (CE-SRLF 20–23) in accordance with local regulations. All patients, or close relatives, were informed that their data were included in the COVID–ICU cohort. This study was conducted in accordance with the amended Declaration of Helsinki.

Candidate predictors

We included candidate predictors considered in our previous multivariate Cox regression analyses, which assessed baseline risk factors of death by D90 [3]. D7 and D14 candidate predictors were defined a priori among data available in the COVID–ICU cohort [3] (i.e., before the building of the SOSIC models), based on recent publications describing risk factors and specific complications associated with COVID-19 prognosis [11, 12]. VAP was diagnosed by quantitative distal bronchoalveolar lavage cultures growing ≥ 10⁴ CFU/mL, blind protected specimen-brush distal samples growing ≥ 10³ CFU/mL, or endotracheal aspirates growing ≥ 10⁶ CFU/mL. Pulmonary embolism was proven by pulmonary computed-tomography angiography or echocardiography.
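The microbiological part of the VAP definition above is a lookup of one threshold per sampling technique. The sketch below encodes only those three quantitative-culture thresholds as quoted; the sample-type keys and the helper name are hypothetical labels chosen for illustration, not fields of the COVID–ICU case report form.

```python
# Thresholds quoted in the text (CFU/mL). The keys and the helper name are
# hypothetical labels for this sketch, not COVID-ICU database fields.
VAP_CULTURE_THRESHOLDS = {
    "bal": 10**4,  # quantitative distal bronchoalveolar lavage
    "psb": 10**3,  # blind protected specimen-brush distal sample
    "eta": 10**6,  # endotracheal aspirate
}


def culture_meets_vap_threshold(sample_type: str, cfu_per_ml: float) -> bool:
    """True if a quantitative culture reaches the threshold for its sample type."""
    if sample_type not in VAP_CULTURE_THRESHOLDS:
        raise ValueError(f"unknown sample type: {sample_type!r}")
    return cfu_per_ml >= VAP_CULTURE_THRESHOLDS[sample_type]


print(culture_meets_vap_threshold("bal", 2e4))  # True
print(culture_meets_vap_threshold("eta", 5e5))  # False
```

Keeping the thresholds in one table makes the sample-specific cut-offs explicit and easy to audit against the definition in the text.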

Statistical analyses

Model development

We implemented a systematic machine learning-based framework to construct three mortality-prediction models (SOSIC-1, SOSIC-7, and SOSIC-14) from randomly selected development datasets comprising 90% of the study sample; the remaining 10% were randomly assigned to the test datasets. Each prediction model was built using a gradient-boosting machine with decision trees, as implemented in the eXtreme Gradient Boosting (XGBoost) classification algorithm [13]. The XGBoost algorithm has several tuning parameters (e.g., the number of decision trees and the maximal depth of the component decision trees). The best set of parameters was chosen from a large grid of tuning parameters using tenfold cross-validation to maximize the prediction model’s discrimination ability, as assessed by the area under the receiver operating characteristics curve (AUC). We aimed to build models that could accurately estimate D90 survival for patients alive on D1, D7, or D14 after ICU admission. The SOSIC-1 model included only baseline candidate predictors, whereas the SOSIC-7 and SOSIC-14 models combined baseline and D7 or D14 patient characteristics, respectively. Variable importance, which quantifies how much each variable contributed to the classification, was extracted from the models. SHAP (SHapley Additive exPlanations) values were also computed to visualize the influence of each input variable on the final score [14].
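To make the tuning loop concrete, here is a minimal, dependency-free sketch; it is not the authors’ pipeline, which used the R caret and xgboost packages. A simplified first-order gradient boosting of depth-1 trees stands in for XGBoost (no second-order gradients, regularization, or missing-value handling), the cohort is synthetic, and `fit_boosted_stumps`, `cv_auc`, and the grid values are hypothetical. It shows the 90/10 development/test split, tenfold cross-validation over a small grid of tuning parameters, and selection of the combination with the highest mean AUC.

```python
import math
import random


def auc(y, scores):
    """Rank-based AUC: share of (event, non-event) pairs ranked correctly, ties = 1/2."""
    pos = [s for s, t in zip(scores, y) if t == 1]
    neg = [s for s, t in zip(scores, y) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def fit_stump(X, r):
    """Depth-1 regression tree minimising squared error to the residuals r."""
    n, total, best = len(r), sum(r), None
    for j in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][j])
        left = 0.0
        for k in range(1, n):
            left += r[order[k - 1]]
            lo, hi = X[order[k - 1]][j], X[order[k]][j]
            if lo == hi:
                continue  # cannot split between tied values
            right = total - left
            score = left**2 / k + right**2 / (n - k)  # larger = lower squared error
            if best is None or score > best[0]:
                best = (score, j, (lo + hi) / 2, left / k, right / (n - k))
    if best is None:  # every feature constant: single leaf
        return (0, math.inf, total / n, total / n)
    return best[1:]


def stump_value(stump, x):
    j, thr, left_val, right_val = stump
    return left_val if x[j] <= thr else right_val


def fit_boosted_stumps(X, y, n_rounds, eta):
    """First-order gradient boosting of stumps on the log-loss (simplified, not XGBoost)."""
    base = math.log((sum(y) + 1) / (len(y) - sum(y) + 1))  # smoothed log-odds
    f = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        resid = [yi - 1 / (1 + math.exp(-fi)) for yi, fi in zip(y, f)]  # negative gradient
        stump = fit_stump(X, resid)
        stumps.append(stump)
        f = [fi + eta * stump_value(stump, x) for fi, x in zip(f, X)]
    return base, eta, stumps


def predict_proba(model, x):
    base, eta, stumps = model
    z = base + eta * sum(stump_value(s, x) for s in stumps)
    return 1 / (1 + math.exp(-z))


def cv_auc(X, y, params, k=10, seed=0):
    """Mean AUC over k folds for one combination of tuning parameters."""
    idx = list(range(len(y)))
    random.Random(seed).shuffle(idx)
    aucs = []
    for fold in (idx[i::k] for i in range(k)):
        held = set(fold)
        train = [i for i in idx if i not in held]
        model = fit_boosted_stumps([X[i] for i in train], [y[i] for i in train], **params)
        y_val = [y[i] for i in fold]
        if 0 < sum(y_val) < len(y_val):  # AUC needs both outcomes in the fold
            aucs.append(auc(y_val, [predict_proba(model, X[i]) for i in fold]))
    return sum(aucs) / len(aucs)


# Synthetic cohort: risk depends on feature 0 only (illustrative data).
rng = random.Random(42)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
y = [int(rng.random() < 1 / (1 + math.exp(-2 * x[0]))) for x in X]

# 90% development / 10% test split.
order = list(range(len(y)))
rng.shuffle(order)
dev, test = order[:180], order[180:]
X_dev, y_dev = [X[i] for i in dev], [y[i] for i in dev]

grid = [{"n_rounds": n, "eta": e} for n in (5, 20) for e in (0.1, 0.3)]
best_params = max(grid, key=lambda p: cv_auc(X_dev, y_dev, p))
model = fit_boosted_stumps(X_dev, y_dev, **best_params)
test_auc = auc([y[i] for i in test], [predict_proba(model, X[i]) for i in test])
print(best_params, round(test_auc, 2))
```

With XGBoost itself, the same loop would range over its own parameters (in the R package, for example, `nrounds`, `max_depth`, and `eta`); the stand-in learner only keeps the sketch self-contained.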

Model validation

The performance of the three SOSIC models in predicting 90-day mortality was evaluated in terms of discrimination, assessed by the AUC (i.e., the probability that a randomly chosen patient who experiences the outcome is ranked above a randomly chosen patient who does not), and calibration (i.e., the agreement between predicted and observed risks), assessed by the calibration curve (the ideal calibration intercept is 0 and the ideal calibration slope is 1). The Brier score was also computed; it combines calibration and discrimination by quantifying how close predictions are to the observed outcomes (a lower Brier score indicates better performance) [15].
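Calibration and the Brier score can be computed from predicted risks and observed outcomes alone. The sketch below, in plain Python on synthetic data, uses common definitions that are assumed here because the text does not detail them: the calibration slope is the coefficient of a logistic regression of the outcome on logit(predicted risk), and the calibration intercept is estimated as calibration-in-the-large, i.e., the intercept of a logistic model with logit(predicted risk) as an offset. Both are fitted by Newton-Raphson.

```python
import math
import random


def brier(y, p):
    """Mean squared difference between predicted risk and observed outcome (0/1)."""
    return sum((pi - yi) ** 2 for yi, pi in zip(y, p)) / len(y)


def logit(p):
    return math.log(p / (1 - p))


def calibration_slope(y, p, iters=25):
    """Fit y ~ a + b * logit(p) by Newton-Raphson; returns (a, b). Ideal b = 1."""
    lp = [logit(pi) for pi in p]
    a, b = 0.0, 1.0
    for _ in range(iters):
        mu = [1 / (1 + math.exp(-(a + b * z))) for z in lp]
        w = [m * (1 - m) for m in mu]
        ga = sum(yi - m for yi, m in zip(y, mu))  # score for a
        gb = sum((yi - m) * z for yi, m, z in zip(y, mu, lp))  # score for b
        haa = sum(w)  # Fisher information matrix entries
        hab = sum(wi * z for wi, z in zip(w, lp))
        hbb = sum(wi * z * z for wi, z in zip(w, lp))
        det = haa * hbb - hab * hab
        a += (hbb * ga - hab * gb) / det
        b += (haa * gb - hab * ga) / det
    return a, b


def calibration_intercept(y, p, iters=25):
    """Calibration-in-the-large: a in y ~ a + offset(logit(p)). Ideal a = 0."""
    lp = [logit(pi) for pi in p]
    a = 0.0
    for _ in range(iters):
        mu = [1 / (1 + math.exp(-(a + z))) for z in lp]
        a += sum(yi - m for yi, m in zip(y, mu)) / sum(m * (1 - m) for m in mu)
    return a


# Synthetic check: outcomes drawn from the predicted risks are well calibrated.
rng = random.Random(1)
p = [rng.uniform(0.05, 0.95) for _ in range(5000)]
y = [int(rng.random() < pi) for pi in p]
# Overconfident predictions: logits doubled, so the expected slope is about 0.5.
p_over = [1 / (1 + math.exp(-2 * logit(pi))) for pi in p]

print(round(brier(y, p), 3), round(calibration_intercept(y, p), 2))
print(round(calibration_slope(y, p)[1], 2), round(calibration_slope(y, p_over)[1], 2))
```

The overconfident example illustrates how to read a slope below 1: predictions are too extreme, which is the pattern a calibration slope such as SOSIC-14’s would flag.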

A double internal validation was applied to the three SOSIC prediction models. First, internal validity was assessed by estimating model performance corrected for optimism, using bootstrap resampling with 100 repetitions. All the steps leading to the final prediction model (including the selection of the set of XGBoost tuning parameters) were applied to every bootstrap sample [16]. Second, model performance was assessed on the independent test datasets, distinct from the development datasets used for model construction. One advantage of the XGBoost algorithm is its sparsity awareness, which allows it to handle missing values [17]. Therefore, no missing values were imputed before model development or validation. Because the COVID-19 pandemic did not hit all regions equally, we tested the performance of the three SOSIC models in two distinct populations, namely centers in the Greater Paris area and Grand Est versus centers in other regions. Lastly, the performance of SOSIC-1 was also compared with that of the SOFA and SAPS II scores in the development and test datasets.
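The first (bootstrap) step can be sketched as the usual optimism correction: fit on a resample, measure how much better the model looks on that resample than on the original data, average that optimism, and subtract it from the apparent AUC. This is a minimal illustration on synthetic data; `fit_binned`, a deliberately overfitting table of event rates in narrow bins of one predictor, is a hypothetical stand-in for XGBoost. In the procedure described above, `fit` would contain the whole pipeline, including the grid search over tuning parameters.

```python
import math
import random


def auc(y, s):
    """Rank-based AUC (ties count 1/2)."""
    pos = [si for si, yi in zip(s, y) if yi == 1]
    neg = [si for si, yi in zip(s, y) if yi == 0]
    return sum((p > n) + 0.5 * (p == n) for p in pos for n in neg) / (len(pos) * len(neg))


def fit_binned(x, y, width=0.25):
    """Hypothetical stand-in model: observed death rate within narrow bins of one predictor."""
    counts = {}
    for xi, yi in zip(x, y):
        b = math.floor(xi / width)
        n, d = counts.get(b, (0, 0))
        counts[b] = (n + 1, d + yi)
    prior = sum(y) / len(y)
    rates = {b: d / n for b, (n, d) in counts.items()}
    return lambda xs: [rates.get(math.floor(xi / width), prior) for xi in xs]


def optimism_corrected_auc(x, y, fit, n_boot=100, seed=0):
    """Bootstrap optimism correction: apparent AUC minus mean optimism over resamples."""
    rng = random.Random(seed)
    n = len(y)
    apparent = auc(y, fit(x, y)(x))
    optimism = []
    while len(optimism) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xb, yb = [x[i] for i in idx], [y[i] for i in idx]
        if not 0 < sum(yb) < n:
            continue  # resample needs both outcomes to compute an AUC
        model = fit(xb, yb)  # the whole modelling pipeline is re-run on each resample
        optimism.append(auc(yb, model(xb)) - auc(y, model(x)))
    return apparent, apparent - sum(optimism) / len(optimism)


rng = random.Random(7)
x = [rng.gauss(0, 1) for _ in range(150)]
y = [int(rng.random() < 1 / (1 + math.exp(-x_i))) for x_i in x]
apparent, corrected = optimism_corrected_auc(x, y, fit_binned)
print(round(apparent, 3), round(corrected, 3))
```

Re-running every modelling step inside `fit` is what makes the correction honest: if tuning were done once on the full data, its optimism would not be captured by the resamples.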

Descriptive analysis

Characteristics of the data included in the SOSIC scores are expressed as number (percentage) for categorical variables and means ± standard deviations or medians (interquartile ranges) for continuous variables. In univariate analyses, categorical variables were compared with the χ² or Fisher’s exact test, and continuous variables with Student’s t-test or the Wilcoxon rank-sum test. A P value < 0.05 was considered statistically significant. Statistical analyses and predictive model construction were performed with R v4.0.3, caret package v6.0-86, and XGBoost package v1.3.2.1.
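For a binary characteristic compared between D90 survivors and non-survivors, the χ² test reduces to a closed formula on a 2×2 table. The sketch below is illustrative only: the counts are hypothetical, and the optional Yates continuity correction is included because R’s `chisq.test()` applies it to 2×2 tables by default (`correct = TRUE`); which variant was used here is not stated. For 1 degree of freedom, χ² = Z², so the P value is `erfc(sqrt(stat / 2))`.

```python
import math


def chi2_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]] (1 degree of freedom).

    Returns (statistic, P value). yates=True applies the continuity correction.
    """
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)
    stat = n * diff**2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 df, chi2 = Z**2, so P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


# Hypothetical counts: rows = died / survived by D90, columns = exposed / not exposed.
stat, p = chi2_2x2(10, 20, 20, 10)
print(round(stat, 3), round(p, 4))  # 6.667 0.0098
stat_y, p_y = chi2_2x2(10, 20, 20, 10, yates=True)
print(round(stat_y, 3))  # 5.4
```

When expected cell counts are small, Fisher’s exact test, also listed above, is the usual alternative to this approximation.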

Results

Study population

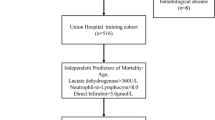

Among 4643 patients enrolled by May 4, 2020, 399 were lost to follow-up by D90. Thus, the predictive survival models were built on the remaining 4244 patients with available D90 vital status. The SOSIC-1, SOSIC-7, and SOSIC-14 scores were then constructed with 4244, 2877, and 1349 patients, respectively, of whom 424, 292, and 185 were randomly assigned to the corresponding test datasets and the remainder to the development datasets (Fig. 1). The three models selected 15 ICU-admission (baseline) variables: age; sex; BMI; treated hypertension; known diabetes; immunocompromised status; clinical frailty-scale category; interval between first symptom(s) and ICU admission; bacterial coinfection; ventilation profile; SOFA-score respiratory, cardiovascular, and renal components; lactate concentration; and lymphocyte count. d-Dimers were also selected a priori but were not retained for model development because of their inconsistent collection at ICU admission (Additional file 1). The in-ICU parameters selected on D7 (SOSIC-7) or D14 (SOSIC-14) were: SOFA-score respiratory, cardiovascular, and renal components; lactate level; and ventilation profile. In addition, the duration of invasive MV and the occurrence, between ICU admission and D7 or D14, of extubation, prone positioning, continuous neuromuscular blockade, VAP, cardiac arrest, and/or proven pulmonary embolism were integrated into the SOSIC-7 and SOSIC-14 scores. Table 1 reports the distributions of these variables according to D90 vital status in the D1, D7, or D14 development and test datasets.

Univariate analyses of patient characteristics in the development datasets showed that, compared with D90 survivors, those who died were significantly older and had a higher clinical frailty-scale category, lower BMI, and shorter intervals between first symptom(s) and ICU admission (except in the SOSIC-14 dataset) (P < 0.01). Similarly, patients who had died by D90 were more likely to be on invasive MV on ICU D1 and had significantly higher SOFA-score respiratory, cardiovascular, and renal components. Their lactate levels during the first 24 h in the ICU were significantly higher and their lymphocyte counts lower.

Among patients still in-ICU on D7 or D14, the same differences were observed regarding their SOFA-score components, lactate levels, and ventilation profiles on those days (Table 2). As expected, patients who died were more likely to have undergone prone-positioning or received neuromuscular blockade and experienced significantly more complications in-ICU (i.e., VAP, cardiac arrest, pulmonary embolism) within the first 7 or 14 days.

Importance of the 90-day mortality predictors

Figure 2 and Additional file 2 illustrate variable weighting in the machine-learning models used to build the D1, D7, and D14 SOSIC scores. Briefly, age, clinical frailty-scale category, D1 lymphocyte count, and the interval between first symptom(s) and ICU admission were given substantial weight in predicting D90 mortality. However, the weights of these baseline characteristics tended to decrease when the prediction was made after 7 or 14 days in the ICU. Conversely, baseline comorbidities such as known diabetes, immunocompromised status, or treated hypertension were given similar weights in all three scores.

Variable weighting in the machine-learning models: Survival of Severely Ill COVID (SOSIC) scores on days 1, 7 and 14. A color gradient shows the strength of each variable in the machine-learning models at different times, from yellow for the most influential input variables to progressively darker shades of purple for less influential ones. D day, BMI body mass index, ICU intensive care unit, MV mechanical ventilation, SOSIC Survival of Severely Ill COVID score, SOFA Sequential Organ-Failure Assessment, VAP ventilator-associated pneumonia

Interestingly, when the prediction was made on D7 (SOSIC-7) or D14 (SOSIC-14), the SOFA-score respiratory and cardiovascular components and the respiratory support at ICU admission were given greater importance than in the D1 prediction. Moreover, cardiovascular, renal, and pulmonary functions on the prediction day (D7 or D14) were among the most heavily weighted inputs in both models, whereas in-ICU complications since admission carried only modest weight.

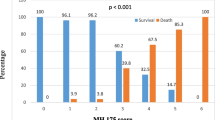

Performance of the SOSIC scores

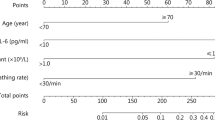

We developed three models using XGBoost algorithms that accurately predicted 90-day mortality using data from ICU D1 and the prediction day. Apparent, bootstrap-corrected, and test-dataset validation metrics are reported in Additional file 3. On the test dataset, the AUC was slightly higher for SOSIC-7 (0.80 [0.74–0.86]) than for SOSIC-1 (0.76 [0.71–0.81]) and SOSIC-14 (0.76 [0.68–0.83]). The SOSIC-1 and SOSIC-7 calibration curves were excellent (Fig. 3), indicating good agreement between predicted and observed risks for those two scores. Although SOSIC-14 calibration was poorer (slope 0.83 [0.49–1.22]) than that of SOSIC-1 and SOSIC-7, the Brier scores, which reflect both the calibration and the discrimination of the predictive models, were similar for all three models. The correlations between the three scores were also good (Additional file 4). We did not identify any region effect: the AUC of SOSIC-1 was similar in the Greater Paris area and Grand Est versus other regions (0.75 [95% CI 0.69–0.81] vs 0.76 [95% CI 0.67–0.85]), and the calibration slope was close to 1 regardless of region (Greater Paris area and Grand Est: 0.92 [95% CI 0.65–1.21] vs other regions: 0.90 [95% CI 0.53–1.33]). Similar results were observed for the SOSIC-7 and SOSIC-14 models and are reported in Additional file 5. On the test dataset, SOSIC-1 showed fair discrimination (AUC 0.76 [95% CI 0.71–0.81]), in contrast to the much poorer discrimination of the SAPS II (AUC 0.64 [95% CI 0.58–0.70]) and SOFA scores (AUC 0.62 [95% CI 0.56–0.69]). The discrimination performance of SOSIC-1, SAPS II, and SOFA is shown graphically in Fig. 4. Similarly, SOSIC-7 and SOSIC-14 performed slightly better than SOFA on day 7 and day 14, respectively (Additional file 6).

Discussion

We developed and validated three prognostic models (SOSIC-1, SOSIC-7, and SOSIC-14) to predict the 90-day mortality of 4244 critically ill patients with COVID-19 treated in France, Belgium, and Switzerland, evaluated during the 2 weeks following ICU admission. The SOSIC scores showed that entering 15 to 27 baseline and dynamic clinical parameters (depending on the score day) into an automatable XGBoost algorithm had the potential to accurately predict the likelihood of death 90 days after ICU admission. Although external validation of the SOSIC scores in other critically ill populations with COVID-19 is still needed, these dynamic tools could enable clinicians to objectively assess the mortality risk of patients with COVID-19 at ICU admission and after 7 or 14 days in the ICU. They offer an additional tool to strengthen decisions about life-sustaining treatments and hospital and ICU resources, and to inform family members of the likely prognosis.

Predicting the outcomes of critically ill patients with COVID-19 is challenging. Patients hospitalized with COVID-19 can be classified into three phenotypes with prognostic implications [18]: patients with more chronic heart, lung, or renal disease(s), obesity, diabetes, an intense inflammatory syndrome, higher creatinine levels, and poorer oxygenation parameters were classified as having the highest risk of deterioration, which was associated with poorer outcomes [18]. Older age is frequently associated with higher rates of hospitalization, ICU admission, and mortality in patients with COVID-19 [18,19,20]. Frailty is a useful tool to stratify the risk of death 90 days after ICU admission and offers important prognostic information in addition to age in patients with COVID-19 over 70 years of age [21]. Interestingly, the weights accorded to age and frailty in our predictive models declined over the ICU stay; in other words, these two variables had less influence on mortality prediction after 7 or 14 days in the ICU than on the prediction at ICU admission (Fig. 2). Similarly, a shorter interval from the onset of COVID-19 symptom(s) to ICU admission, which has been associated with a higher risk of death [22], weighed less in SOSIC-7 and SOSIC-14.

Although collected on D1, the SOFA-score cardiovascular, respiratory, and renal components strongly influenced the later predictions but only modestly affected the D1 prediction. Similarly, in an observational multicenter cohort of patients with moderate-to-severe COVID-19 ARDS, the decrease in static respiratory-system compliance observed between ICU day 1 and day 14 was not associated with day-28 outcome [23]. Moreover, cardiac injury appears to be frequent, with nearly 70% of patients with COVID-19 experiencing cardiac injury within the first 14 days of their ICU stay [24].

The poor discriminant accuracy of the SOFA score for predicting the mortality of patients assessed before intubation for COVID-19 pneumonia was recently highlighted [25]; these patients generally have severe single-organ dysfunction and therefore less SOFA-score variation overall. However, D7 and D14 respiratory, cardiovascular, and renal statuses are of the utmost importance for mortality prediction at those times. The SOSIC scores highlight that the influence of some variables on the prediction of mortality in patients with COVID-19 changes over time; for example, demographic variables had less weight after 1 or 2 weeks in the ICU. However, the discrimination of the SOSIC scores did not improve over time: the AUC was not better on day 7 or 14 than on day 1, and the advantage over the SOFA score narrowed over time. A larger number of variables, inducing greater heterogeneity, combined with smaller development and test datasets on days 7 and 14, could explain this finding.

Because no previous model predicting COVID-19 outcomes has focused on patients already in the ICU [5, 6, 26], the SOSIC scores have potential clinical usefulness and generalizability. Internal validation of the SOSIC scores showed consistent discrimination and calibration, which nevertheless requires confirmation by external validation. With an AUC of around 0.80, external validation is also needed to assess mortality prediction beyond the population level and to fully assess the mortality risk of an individual admitted and cared for up to 2 weeks in the ICU. Although discrimination was largely consistent across the different validation methods, SOSIC-14’s calibration was poorer, suggesting that its performance in an external independent sample might be lower than that of SOSIC-1 or SOSIC-7. By construction, SOSIC-14 was developed on a smaller sample than the other two models, which might explain its lower quality in predicting 90-day mortality.

Despite being developed and validated on a substantial cohort with a large number of participating ICUs, these scores were constructed during the first COVID wave in Europe, a period with high pressure on the health systems and before the publication of core randomized trials [4, 27]. Moreover, ventilator strategies have also changed, as the pandemic has evolved and the medical community acquired a greater understanding of the pathophysiology of the disease and how to treat it. Caregiver reluctance to provide noninvasive oxygen strategies has been overcome [3], leading to higher percentages of patients on high-flow oxygen and noninvasive ventilation, and lower rates of intubation on ICU D1 [3, 28].

Debate is still ongoing about the best timing of intubation in this population, as recent data have suggested poorer outcomes with an early intubation strategy [29,30,31]. Thus, the very high proportion of our patients intubated on ICU D1 will probably differ during subsequent COVID-19 outbreaks and in countries with different public healthcare organizations or ICU admission policies.

Moreover, SOSIC predictions should be interpreted as reflecting a profile of critically ill patients with COVID-19 who were not routinely treated with corticosteroids and were managed before vaccination campaigns, a profile that may have changed since May 2020. In addition, this cohort was conducted at a time when the national health system was under extreme pressure, which led to a major reorganization of intensive care resources in some regions, although we did not find a region effect on the performance of the SOSIC scores. However, we cannot rule out that, outside a surge situation, the models could slightly overestimate mortality. As commonly done for other scoring systems [32], prospective external validation of the SOSIC scores is warranted to determine the need for temporal recalibration and to evaluate model performance in diverse international settings, including more recent cohorts of patients who received current treatments and ventilation management. The publicly available calculator (sosic.shinyapps.io/shiny) should help achieve these goals. Another limitation is that we only included predictors routinely collected in the COVID–ICU database during the study period. Thus, we cannot rule out that additional laboratory or ventilatory parameters reflecting respiratory mechanics (especially measured on ICU D7 or D14) would have improved SOSIC-score performance. We were also unable to integrate d-dimer concentrations as initially planned [33], because of their inconsistent collection at ICU admission. Although the XGBoost algorithm incorporates missing data in its split-finding algorithm, we cannot guarantee that this method handles every pattern of missing data effectively [34]. Because the algorithm potentially exploits missingness patterns for prediction, a major shift in the missingness mechanism in an external independent sample may affect SOSIC-score performance. Lastly, detailed information on the withholding or withdrawal of therapy is lacking.
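The missing-data point above can be illustrated with a simplified, single-feature version of the default-direction idea described by Chen and Guestrin [17]: at each candidate split, all patients with a missing value are routed together to whichever branch gives the larger gain, and that branch becomes the split’s learned default. This is a sketch under stated assumptions (exact greedy search, one feature, hypothetical function name and toy data), not XGBoost’s implementation. It makes the limitation concrete: the default direction is learned from how missingness co-occurred with outcomes in the development data, so values missing for different reasons in another cohort would still be routed by that rule.

```python
def best_split_with_default(x, g, h, lam=1.0, gamma=0.0):
    """Sparsity-aware split search for one feature (None = missing value).

    g, h are per-patient first and second derivatives of the loss. For every
    candidate threshold, missing values are sent left and then right, and the
    direction with the larger gain is kept as the split's default direction.
    Returns (gain, threshold, missing_goes_left).
    """
    present = sorted((xi, gi, hi) for xi, gi, hi in zip(x, g, h) if xi is not None)
    g_miss = sum(gi for xi, gi in zip(x, g) if xi is None)
    h_miss = sum(hi for xi, hi in zip(x, h) if xi is None)
    G, H = sum(g), sum(h)

    def score(gs, hs):
        return gs * gs / (hs + lam)

    best = (0.0, None, None)
    gl = hl = 0.0
    for k in range(len(present) - 1):
        gl += present[k][1]
        hl += present[k][2]
        if present[k][0] == present[k + 1][0]:
            continue  # no threshold between tied values
        thr = (present[k][0] + present[k + 1][0]) / 2
        for miss_left in (True, False):
            GL = gl + (g_miss if miss_left else 0.0)
            HL = hl + (h_miss if miss_left else 0.0)
            # Split gain from the XGBoost paper: 1/2 [left + right - parent] - gamma.
            gain = 0.5 * (score(GL, HL) + score(G - GL, H - HL) - score(G, H)) - gamma
            if gain > best[0]:
                best = (gain, thr, miss_left)
    return best


# Logistic loss at a baseline risk of 0.5: g = p - y, h = p * (1 - p).
x = [1.0, 2.0, None, None, 8.0, 9.0]
y = [0, 0, 1, 1, 1, 1]
g = [0.5 - yi for yi in y]
h = [0.25] * len(y)
gain, thr, left = best_split_with_default(x, g, h)
print(round(gain, 4), thr, left)  # 1.1333 5.0 False
```

In this toy example both patients with a missing value died, so the learned rule sends missing values to the high-value branch together with the other deaths.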

Conclusion

The SOSIC-1, -7, and -14 scores were able to fairly predict the 90-day mortality of critically ill patients with COVID-19 admitted to and managed in the ICU (sosic.shinyapps.io/shiny). These machine-learning models, built with XGBoost algorithms, showed good discrimination and excellent calibration. The patients’ demographic characteristics contributed most to SOSIC-1, whereas ventilatory status and extrapulmonary dysfunctions were the predominant predictors in SOSIC-7 and SOSIC-14. Further studies are now warranted to externally validate these scores in recent cohorts of critically ill patients with COVID-19 and to assess their performance at the individual level as the pandemic evolves.

Availability of data and materials

Not applicable.

Abbreviations

- ARDS: Acute respiratory distress syndrome
- AUC: Area under the receiver operating characteristics (ROC) curve
- BMI: Body mass index
- COVID-19: Coronavirus disease 2019
- ICU: Intensive care unit
- ML: Machine learning
- MV: Mechanical ventilation
- SAPS II: Simplified Acute Physiology Score II
- SARS-CoV-2: Severe acute respiratory syndrome coronavirus 2
- SOFA: Sequential Organ-Failure Assessment
- SOSIC: Survival of Severely Ill COVID
- VAP: Ventilator-associated pneumonia

References

Karagiannidis C, Windisch W, McAuley DF, Welte T, Busse R. Major differences in ICU admissions during the first and second COVID-19 wave in Germany. Lancet Respir Med. 2021;9(5):e47–8.

Karagiannidis C, Mostert C, Hentschker C, Voshaar T, Malzahn J, Schillinger G, et al. Case characteristics, resource use, and outcomes of 10 021 patients with COVID-19 admitted to 920 German hospitals: an observational study. Lancet Respir Med. 2020;8:853–62.

COVID-ICU Group on behalf of the REVA Network and the COVID-ICU Investigators. Clinical characteristics and day-90 outcomes of 4244 critically ill adults with COVID-19: A prospective cohort study. Intensive Care Med. 2021;47:60–73.

RECOVERY Collaborative Group, Horby P, Lim WS, Emberson JR, Mafham M, Bell JL, et al. Dexamethasone in hospitalized patients with Covid-19. N Engl J Med. 2021;384(8):693–704.

Gupta RK, Harrison EM, Ho A, Docherty AB, Knight SR, van Smeden M, et al. Development and validation of the ISARIC 4C Deterioration model for adults hospitalised with COVID-19: a prospective cohort study. Lancet Respir Med. 2021;9:349–59.

Pimentel MA, Redfern OC, Malycha J, Meredith P, Prytherch D, Briggs J, et al. Detecting deteriorating patients in hospital: development and validation of a novel scoring system. Am J Respir Crit Care Med. 2021;204(1):44–52.

Bennett TD, Moffitt RA, Hajagos JG, Amor B, Anand A, Bissell MM, et al. The National COVID Cohort Collaborative: clinical characterization and early severity prediction. JAMA Netw Open. 2021;4(7)

Le Gall JR, Lemeshow S, Saulnier F. A new Simplified Acute Physiology Score (SAPS II) based on a European/North American multicenter study. JAMA. 1993;270:2957–63.

Vincent JL, Moreno R, Takala J, Willatts S, De Mendonça A, Bruining H, et al. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. On behalf of the Working Group on Sepsis-Related Problems of the European Society of Intensive Care Medicine. Intensive Care Med. 1996;22:707–10.

Juma S, Taabazuing M-M, Montero-Odasso M. Clinical frailty scale in an acute medicine unit: a simple tool that predicts length of stay. Can Geriatr J. 2016;19:34–9.

Rouzé A, Martin-Loeches I, Povoa P, Makris D, Artigas A, Bouchereau M, et al. Relationship between SARS-CoV-2 infection and the incidence of ventilator-associated lower respiratory tract infections: a European multicenter cohort study. Intensive Care Med. 2021;47:188–98.

Erben Y, Franco-Mesa C, Gloviczki P, Stone W, Quinones-Hinojosa A, Meltzer AJ, et al. Deep venous thrombosis and pulmonary embolism among hospitalized coronavirus disease 2019 (COVID-19) positive patients predict higher mortality, prolonged intensive care unit and hospital stays in a multi-site healthcare system. J Vasc Surg Venous Lymphat Disord. 2021;9(6):1361–70.

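Chen T, Guestrin C. XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. New York: Association for Computing Machinery; 2016. p. 785–94. https://doi.org/10.1145/2939672.2939785 Accessed 7 May 2021.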

Lundberg SM, Lee S-I. A unified approach to interpreting model predictions. In: Advances in neural information processing systems. Curran Associates, Inc.; 2017. https://papers.nips.cc/paper/2017/hash/8a20a8621978632d76c43dfd28b67767-Abstract.html. Accessed 30 Sept 2021.

Steyerberg EW, Vickers AJ, Cook NR, Gerds T, Gonen M, Obuchowski N, et al. Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology. 2010;21:128–38.

Steyerberg EW, Harrell FE Jr, Borsboom GJ, Eijkemans MJ, Vergouwe Y, Habbema JD. Internal validation of predictive models: efficiency of some procedures for logistic regression analysis. J Clin Epidemiol. 2001;54:774–81.

Chen T, Guestrin C. XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining. New York: Association for Computing Machinery; 2016. p. 785–94. https://doi.org/10.1145/2939672.2939785 Accessed 30 Sept 2021.

Gutiérrez-Gutiérrez B, Del Toro MD, Borobia AM, Carcas A, Jarrín I, Yllescas M, et al. Identification and validation of clinical phenotypes with prognostic implications in patients admitted to hospital with COVID-19: a multicentre cohort study. Lancet Infect Dis. 2021;21(6):783–92.

Gupta S, Hayek SS, Wang W, Chan L, Mathews KS, Melamed ML, et al. Factors associated with death in critically ill patients with coronavirus disease 2019 in the US. JAMA Intern Med. 2020;180:1436–47.

Grasselli G, Greco M, Zanella A, Albano G, Antonelli M, Bellani G, et al. Risk factors associated with mortality among patients with COVID-19 in Intensive Care Units in Lombardy, Italy. JAMA Intern Med. 2020;180(10):1345–55.

Jung C, Flaatten H, Fjølner J, Bruno RR, Wernly B, Artigas A, et al. The impact of frailty on survival in elderly intensive care patients with COVID-19: the COVIP study. Crit Care. 2021;25:149.

Azoulay E, Fartoukh M, Darmon M, Géri G, Voiriot G, Dupont T, et al. Increased mortality in patients with severe SARS-CoV-2 infection admitted within seven days of disease onset. Intensive Care Med. 2020;46(9):1714–22.

Vandenbunder B, Ehrmann S, Piagnerelli M, Sauneuf B, Serck N, Soumagne T, et al. Static compliance of the respiratory system in COVID-19 related ARDS: an international multicenter study. Crit Care. 2021;25:52.

Doyen D, Dupland P, Morand L, Fourrier E, Saccheri C, Buscot M, et al. Characteristics of cardiac injury in critically ill patients with coronavirus disease 2019. Chest. 2021;159:1974–85.

Raschke RA, Agarwal S, Rangan P, Heise CW, Curry SC. Discriminant accuracy of the SOFA score for determining the probable mortality of patients with COVID-19 pneumonia requiring mechanical ventilation. JAMA. 2021;325:1469–70.

Yadaw AS, Li Y-C, Bose S, Iyengar R, Bunyavanich S, Pandey G. Clinical features of COVID-19 mortality: development and validation of a clinical prediction model. Lancet Digit Health. 2020;2:e516–25.

Kalil AC, Patterson TF, Mehta AK, Tomashek KM, Wolfe CR, Ghazaryan V, et al. Baricitinib plus remdesivir for hospitalized adults with Covid-19. N Engl J Med. 2021;384(9):795–807.

Auld SC, Caridi-Scheible M, Robichaux C, Coopersmith CM, Murphy DJ, Emory COVID-19 Quality and Clinical Research Collaborative. Declines in mortality over time for critically ill adults with coronavirus disease 2019. Crit Care Med. 2020;48(12):e1382–4.

Ursino M, Dupuis C, Buetti N, de Montmollin E, Bouadma L, Golgran-Toledano D, et al. Multistate modeling of COVID-19 patients using a large multicentric prospective cohort of critically ill patients. J Clin Med. 2021;10:544.

Demoule A, Vieillard Baron A, Darmon M, Beurton A, Géri G, Voiriot G, et al. High-flow nasal cannula in critically ill patients with severe COVID-19. Am J Respir Crit Care Med. 2020;202:1039–42.

Dres M, Hajage D, Lebbah S, Kimmoun A, Pham T, Béduneau G, et al. Characteristics, management, and prognosis of elderly patients with COVID-19 admitted in the ICU during the first wave: insights from the COVID-ICU study: prognosis of COVID-19 elderly critically ill patients in the ICU. Ann Intensive Care. 2021;11:77.

Paul E, Bailey M, Van Lint A, Pilcher V. Performance of APACHE III over time in Australia and New Zealand: a retrospective cohort study. Anaesth Intensive Care. 2012;40:980–94.

Grasselli G, Tonetti T, Protti A, Langer T, Girardis M, Bellani G, et al. Pathophysiology of COVID-19-associated acute respiratory distress syndrome: a multicentre prospective observational study. Lancet Respir Med. 2020;8(12):1201–8.

Le Morvan M, Josse J, Scornet E, Varoquaux G. What’s a good imputation to predict with missing values? 2021. https://hal.archives-ouvertes.fr/hal-03243931 Accessed 8 Nov 2021.

Acknowledgements

We gratefully acknowledge all the French, Belgian, and Swiss clinical research centers, COVID-ICU investigators, the medical students, Polytechnic University students, and patients involved in the study.

Participating Sites and COVID–ICU Investigators: CHU Angers, Angers, France (Alain Mercat, Pierre Asfar, François Beloncle, Julien Demiselle), APHP-Hôpital Bicêtre, Le Kremlin-Bicêtre, France (Tài Pham, Arthur Pavot, Xavier Monnet, Christian Richard), APHP-Hôpital Pitié Salpêtrière, Paris, France (Alexandre Demoule, Martin Dres, Julien Mayaux, Alexandra Beurton), CHU Caen Normandie-Hôpital Côte de Nacre, Caen, France (Cédric Daubin, Richard Descamps, Aurélie Joret, Damien Du Cheyron), APHP-Hôpital Cochin, Paris, France (Frédéric Pene, Jean-Daniel Chiche, Mathieu Jozwiak, Paul Jaubert), APHP-Hôpital Tenon, Paris, France (Guillaume Voiriot, Muriel Fartoukh, Marion Teulier, Clarisse Blayau), CHRU de Brest–La Cavale Blanche, Brest, France (Erwen L'Her, Cécile Aubron, Laetitia Bodenes, Nicolas Ferriere), Centre Hospitalier de Cholet, Cholet, France (Johann Auchabie, Anthony Le Meur, Sylvain Pignal, Thierry Mazzoni), CHU Dijon Bourgogne, Dijon, France (Jean-Pierre Quenot, Pascal Andreu, Jean-Baptiste Roudau, Marie Labruyère), CHU Lille-Hôpital Roger Salengro, Lille, France (Saad Nseir, Sébastien Preau, Julien Poissy, Daniel Mathieu), Groupe Hospitalier Nord Essonne, Longjumeau, France (Sarah Benhamida, Rémi Paulet, Nicolas Roucaud, Martial Thyrault), APHM-Hôpital Nord, Marseille, France (Florence Daviet, Sami Hraiech, Gabriel Parzy, Aude Sylvestre), Hôpital de Melun-Sénart, Melun, France (Sébastien Jochmans, Anne-Laure Bouilland, Mehran Monchi), Élément Militaire de Réanimation du SSA, Mulhouse, France (Marc Danguy des Déserts, Quentin Mathais, Gwendoline Rager, Pierre Pasquier), CHU Nantes-Hôpital Hotel Dieu, Nantes, France (Jean Reignier, Amélie Seguin, Charlotte Garret, Emmanuel Canet), CHU Nice-Hôpital Archet, Nice, France (Jean Dellamonica, Clément Saccheri, Romain Lombardi, Yanis Kouchit), Centre Hospitalier d'Orléans, Orléans, France (Sophie Jacquier, Armelle Mathonnet, Mai-Ahn Nay, Isabelle Runge), Centre Hospitalier Universitaire de la Guadeloupe, Pointe-à-Pitre, France (Frédéric Martino, Laure Flurin, Amélie Rolle, Michel Carles), Hôpital de la Milétrie, Poitiers, France (Rémi Coudroy, Arnaud W Thille, Jean-Pierre Frat, Maeva Rodriguez), Centre Hospitalier Roanne, Roanne, France (Pascal Beuret, Audrey Tientcheu, Arthur Vincent, Florian Michelin), CHU Rouen-Hôpital Charles Nicolle, Rouen, France (Fabienne Tamion, Dorothée Carpentier, Déborah Boyer, Christophe Girault), CHRU Tours-Hôpital Bretonneau, Tours, France (Valérie Gissot, Stéphan Ehrmann, Charlotte Salmon Gandonniere, Djlali Elaroussi), Centre Hospitalier Bretagne Atlantique, Vannes, France (Agathe Delbove, Yannick Fedun, Julien Huntzinger, Eddy Lebas), CHU Liège, Liège, Belgium (Grâce Kisoka, Céline Grégoire, Stella Marchetta, Bernard Lambermont), Hospices Civils de Lyon-Hôpital Edouard Herriot, Lyon, France (Laurent Argaud, Thomas Baudry, Pierre-Jean Bertrand, Auguste Dargent), Centre Hospitalier du Mans, Le Mans, France (Christophe Guitton, Nicolas Chudeau, Mickaël Landais, Cédric Darreau), Centre Hospitalier de Versailles, Le Chesnay, France (Alexis Ferre, Antoine Gros, Guillaume Lacave, Fabrice Bruneel), Hôpital Foch, Suresnes, France (Mathilde Neuville, Jérôme Devaquet, Guillaume Tachon, Richard Gallot), Hôpital Claude Galien, Quincy sous Senart, France (Riad Chelha, Arnaud Galbois, Anne Jallot, Ludivine Chalumeau Lemoine), GHR Mulhouse Sud-Alsace, Mulhouse, France (Khaldoun Kuteifan, Valentin Pointurier, Louise-Marie Jandeaux, Joy Mootien), APHP-Hôpital Antoine Béclère, Clamart, France (Charles Damoisel, Benjamin Sztrymf), APHP-Hôpital Pitié-Salpêtrière, Paris, France (Matthieu Schmidt, Alain Combes, Juliette Chommeloux, Charles-Edouard Luyt), Hôpital Intercommunal de Créteil, Créteil, France (Frédérique Schortgen, Leon Rusel, Camille Jung), Hospices Civils de Lyon-Hôpital Neurologique, Lyon, France (Florent Gobert), APHP-Hôpital Necker, Paris, France (Damien Vimpere, Lionel Lamhaut), Centre Hospitalier Public du Cotentin-Hôpital Pasteur, Cherbourg-en-Cotentin, France (Bertrand Sauneuf, Liliane Charrier, Julien Calus, Isabelle Desmeules), CHU Rennes-Hôpital du Pontchaillou, Rennes, France (Benoît Painvin, Jean-Marc Tadie), CHU Strasbourg-Hôpital Hautepierre, Strasbourg, France (Vincent Castelain, Baptiste Michard, Jean-Etienne Herbrecht, Mathieu Baldacini), APHP-Hôpital Pitié Salpêtrière, Paris, France (Nicolas Weiss, Sophie Demeret, Clémence Marois, Benjamin Rohaut), Centre Hospitalier Territorial Gaston-Bourret, Nouméa, France (Pierre-Henri Moury, Anne-Charlotte Savida, Emmanuel Couadau, Mathieu Série), Centre Hospitalier Compiègne-Noyon, Compiègne, France (Nica Alexandru), Groupe Hospitalier Saint-Joseph, Paris, France (Cédric Bruel, Candice Fontaine, Sonia Garrigou, Juliette Courtiade Mahler), Centre hospitalier mémorial de Saint-Lô, Saint-Lô, France (Maxime Leclerc, Michel Ramakers), Grand Hôpital de l'Est Francilien, Jossigny, France (Pierre Garçon, Nicole Massou, Ly Van Vong, Juliane Sen), Gustave Roussy, Villejuif, France (Nolwenn Lucas, Franck Chemouni, Annabelle Stoclin), Centre Hospitalier Intercommunal Robert Ballanger, Aulnay-sous-Bois, France (Alexandre Avenel, Henri Faure, Angélie Gentilhomme, Sylvie Ricome), Hospices Civils de Lyon-Hôpital Edouard Herriot, Lyon, France (Paul Abraham, Céline Monard, Julien Textoris, Thomas Rimmele), Centre Hospitalier d'Avignon, Avignon, France (Florent Montini), Groupe Hospitalier Diaconesses-Croix Saint Simon, Paris, France (Gabriel Lejour, Thierry Lazard, Isabelle Etienney, Younes Kerroumi), CHU Clermont-Ferrand-Hôpital Gabriel Montpied, Clermont Ferrand, France (Claire Dupuis, Marine Bereiziat, Elisabeth Coupez, François Thouy), Hôpital d'Instruction des Armées Percy, Clamart, France (Clément Hoffmann, Nicolas Donat, Anne Chrisment, Rose-Marie Blot), CHU Nancy-Hôpital Brabois, Vandoeuvre-les-Nancy, France (Antoine Kimmoun, Audrey Jacquot, Matthieu Mattei, Bruno Levy), Centre Hospitalier de Vichy, Vichy, France (Ramin Ravan, Loïc Dopeux, Jean-Mathias Liteaudon, Delphine Roux), Hôpital Pierre Bérégovoy, Nevers, France (Brice Rey, Radu Anghel, Deborah Schenesse, Vincent Gevrey), Centre Hospitalier de Tarbes, Tarbes, France (Jermy Castanera, Philippe Petua, Benjamin Madeux), Hôpitaux Civils de Colmar-Hôpital Louis Pasteur, Colmar, France (Otto Hartman), CHU Charleroi-Hôpital Marie Curie, Brussels, Belgium (Michael Piagnerelli, Anne Joosten, Cinderella Noel, Patrick Biston), Centre hospitalier de Verdun Saint Mihiel, Saint Mihiel, France (Thibaut Noel), CH Eure-Seine-Hôpital d'Evreux-Vernon, Evreux, France (Gurvan Bouar, Messabi Boukhanza, Elsa Demarest, Marie-France Bajolet), Hôpital René Dubos, Pontoise, France (Nathanaël Charrier, Audrey Quenet, Cécile Zylberfajn, Nicolas Dufour), APHP-Hôpital Lariboisière, Paris, France (Bruno Mégarbane, Sebastian Voicu, Nicolas Deye, Isabelle Malissin), Centre Hospitalier de Saint-Brieuc, Saint-Brieuc, France (François Legay, Matthieu Debarre, Nicolas Barbarot, Pierre Fillatre), Polyclinique Bordeaux Nord Aquitaine, Bordeaux, France (Bertrand Delord, Thomas Laterrade, Tahar Saghi, Wilfried Pujol), HIA Sainte Anne, Toulon, France (Pierre-Julien Cungi, Pierre Esnault, Mickael Cardinale), Grand Hôpital de l'Est Francilien, Meaux, France (Vivien Hong Tuan Ha, Grégory Fleury, Marie-Ange Brou, Daniel Zafimahazo), HIA Robert Picqué, Villenave d'Ornon, France (David Tran-Van, Patrick Avargues, Lisa Carenco), Centre Hospitalier Fontainebleau, Fontainebleau, France (Nicolas Robin, Alexandre Ouali, Lucie Houdou), Hôpital Universitaire de Genève, Geneva, Switzerland (Christophe Le Terrier, Noémie Suh, Steve Primmaz, Jérôme Pugin), APHP-Hôpital Beaujon, Clichy, France (Emmanuel Weiss, Tobias Gauss, Jean-Denis Moyer, Catherine Paugam Burtz), Groupe Hospitalier Bretagne Sud, Lorient, France (Béatrice La Combe, Rolland Smonig, Jade Violleau, Pauline Cailliez), Centre Hospitalier Intercommunal Toulon, La Seyne sur Mer, France (Jonathan Chelly), Centre Hospitalier de Dieppe, Dieppe, France (Antoine Marchalot, Cécile Saladin, Christelle Bigot), CHU de Martinique, Fort-de-France, France (Pierre-Marie Fayolle, Jules Fatséas, Amr Ibrahim, Dabor Resiere), Hôpital Fondation Adolphe de Rothschild, Paris, France (Rabih Hage, Clémentine Cholet, Marie Cantier, Pierre Trouiler), APHP-Bichat Claude Bernard, Paris, France (Philippe Montravers, Brice Lortat-Jacob, Sebastien Tanaka, Alexy Tran Dinh), APHP-Hôpital Universitaire Paris Sud, Bicêtre, France (Jacques Duranteau, Anatole Harrois, Guillaume Dubreuil, Marie Werner), APHP-Hôpital Européen Georges Pompidou, Paris, France (Anne Godier, Sophie Hamada, Diane Zlotnik, Hélène Nougue), APHP, GHU Henri Mondor, Créteil, France (Armand Mekontso-Dessap, Guillaume Carteaux, Keyvan Razazi, Nicolas De Prost), APHP-Hôpitaux Universitaires Henri Mondor, Créteil, France (Nicolas Mongardon, Olivier Langeron, Eric Levesque, Arié Attias), APHP-Hôpital Lariboisière, Paris, France (Charles de Roquetaillade, Benjamin G. Chousterman, Alexandre Mebazaa, Etienne Gayat), APHP-Hôpital Saint-Antoine, Paris, France (Marc Garnier, Emmanuel Pardo, Lea Satre-Buisson, Christophe Gutton), APHP-Hôpital Saint-Louis, Paris, France (Elise Yvin, Clémence Marcault, Elie Azoulay, Michael Darmon), APHP-Hôpital Saint-Antoine, Paris, France (Hafid Ait Oufella, Geoffroy Hariri, Tomas Urbina, Sandie Mazerand), APHP-Hôpital Raymond Poincaré, Garches, France (Nicholas Heming, Francesca Santi, Pierre Moine, Djillali Annane), APHP-Hôpital Pitié Salpêtrière, Paris, France (Adrien Bouglé, Edris Omar, Aymeric Lancelot, Emmanuelle Begot), Centre Hospitalier Victor Dupouy, Argenteuil, France (Gaétan Plantefeve, Damien Contou, Hervé Mentec, Olivier Pajot), CHU Toulouse-Hôpital Rangueil, Toulouse, France (Stanislas Faguer, Olivier Cointault, Laurence Lavayssiere, Marie-Béatrice Nogier), Centre Hospitalier de Poissy, Poissy, France (Matthieu Jamme, Claire Pichereau, Jan Hayon, Hervé Outin), APHP-Hôpital Saint-Louis, Paris, France (François Dépret, Maxime Coutrot, Maité Chaussard, Lucie Guillemet), Clinique du MontLégia, CHC Groupe-Santé, Liège, Belgium (Pierre Goffin, Romain Thouny, Julien Guntz, Laurent Jadot), CHU Saint-Denis, La Réunion, France (Romain Persichini), Centre Hospitalier de Tourcoing, Tourcoing, France (Vanessa Jean-Michel, Hugues Georges, Thomas Caulier), Centre Hospitalier Henri Mondor d'Aurillac, Aurillac, France (Gaël Pradel, Marie-Hélène Hausermann, Thi My Hue Nguyen-Valat, Michel Boudinaud), Centre Hospitalier Saint Joseph Saint Luc, Lyon, France (Emmanuel Vivier, Sylvène Rosseli, Gaël Bourdin, Christian Pommier), Centre Hospitalier de Polynésie Française, Polynésie, France (Marc Vinclair, Simon Poignant, Sandrine Mons), Ramsay Générale de Santé, Hôpital Privé Jacques Cartier, Massy, France (Wulfran Bougouin), Centre Hospitalier Alpes Léman, Contamine sur Arve, France (Franklin Bruna, Quentin Maestraggi, Christian Roth), Hospices Civils de Lyon-Hôpital de la Croix Rousse, Lyon, France (Laurent Bitker, François Dhelft, Justine Bonnet-Chateau, Mathilde Filippelli), Centre Cardiologique du Nord, Saint-Denis, France (Tristan Morichau-Beauchant, Stéphane Thierry, Charlotte Le Roy, Mélanie Saint Jouan), GHU-Hôpital Sainte-Anne, Paris, France (Bruno Goncalves, Aurélien Mazeraud, Matthieu Daniel, Tarek Sharshar), CHR Metz-Hôpital Mercy, Metz, France (Cyril Cadoz, Rostane Gaci, Sébastien Gette, Guillaume Louis), APHP-Hôpital Paul Brousse, Villejuif, France (Sophie-Caroline Sacleux, Marie-Amélie Ordan), CHRU Nancy-Hôpital Central, Nancy, France (Aurélie Cravoisy, Marie Conrad, Guilhem Courte, Sébastien Gibot), Centre Hospitalier d’Ajaccio, Ajaccio, France (Younès Benzidi, Claudia Casella, Laurent Serpin, Jean-Lou Setti), Centre Hospitalier de Bourges, Bourges, France (Marie-Catherine Besse, Anna Bourreau), Centre hospitalier de la Côte Basque, Bayonne, France (Jérôme Pillot, Caroline Rivera, Camille Vinclair, Marie-Aline Robaux), Hospices Civils de Lyon-Hôpital de la Croix Rousse, Lyon, France (Chloé Achino, Marie-Charlotte Delignette, Tessa Mazard, Frédéric Aubrun), CH Saint-Malo, Saint-Malo, France (Bruno Bouchet, Aurélien Frérou, Laura Muller, Charlotte Quentin), Centre Hospitalier de Mulhouse, Mulhouse, France (Samuel Degoul), Centre Hospitalier de Briançon, Briançon, France (Xavier Stihle, Claude Sumian, Nicoletta Bergero, Bernard Lanaspre), CHU Nice, Hôpital Pasteur 2, Nice, France (Hervé Quintard, Eve Marie Maiziere), Centre Hospitalier des Pays de Morlaix, Morlaix, France (Pierre-Yves Egreteau, Guillaume Leloup, Florin Berteau, Marjolaine Cottrel), Centre Hospitalier Valence, Valence, France (Marie Bouteloup, Matthieu Jeannot, Quentin Blanc, Julien Saison), Centre Hospitalier Niort, Niort, France (Isabelle Geneau, Romaric Grenot, Abdel Ouchike, Pascal Hazera), APHP-Hôpital Pitié Salpêtrière, Paris, France (Anne-Lyse Masse, Suela Demiri, Corinne Vezinet, Elodie Baron, Deborah Benchetrit, Antoine Monsel), Clinique du Val d'Or, Saint Cloud, France (Grégoire Trebbia, Emmanuelle Schaack, Raphaël Lepecq, Mathieu Bobet), Centre Hospitalier de Béthune, Béthune, France (Christophe Vinsonneau, Thibault Dekeyser, Quentin Delforge, Imen Rahmani), Groupe Hospitalier Intercommunal de la Haute-Saône, Vesoul, France (Bérengère Vivet, Jonathan Paillot, Lucie Hierle, Claire Chaignat, Sarah Valette), Clinique Saint-Martin, Caen, France (Benoît Her, Jennifier Brunet), Ramsay Générale de Santé, Clinique Convert, Bourg en Bresse, France (Mathieu Page, Fabienne Boiste, Anthony Collin), Hôpital Victor Jousselin, Dreux, France (Florent Bavozet, Aude Garin, Mohamed Dlala, Kais Mhamdi), Centre Hospitalier de Troyes, Troyes, France (Bassem Beilouny, Alexandra Lavalard, Severine Perez), CHU de Rouen-Hôpital Charles Nicolle, Rouen, France (Benoit Veber, Pierre-Gildas Guitard, Philippe Gouin, Anna Lamacz), Centre Hospitalier Agen-Nérac, Agen, France (Fabienne Plouvier, Bertrand P. Delaborde, Aïssa Kherchache, Amina Chaalal), APHP-Hôpital Louis Mourier, Colombes, France (Jean-Damien Ricard, Marc Amouretti, Santiago Freita-Ramos, Damien Roux), APHP-Hôpital Pitié-Salpêtrière, Paris, France (Jean-Michel Constantin, Mona Assefi, Marine Lecore, Agathe Selves), Institut Mutualiste Montsouris, Paris, France (Florian Prevost, Christian Lamer, Ruiying Shi, Lyes Knani), CHU Besançon–Hôpital Jean Minjoz, Besançon, France (Sébastien Pili Floury, Lucie Vettoretti), APHP-Hôpital Universitaire Robert-Debré, Paris, France (Michael Levy, Lucile Marsac, Stéphane Dauger, Sophie Guilmin-Crépon), CHU Besançon–Hôpital Jean Minjoz, Besançon, France (Hadrien Winiszewski, Gael Piton, Thibaud Soumagne, Gilles Capellier), Médipôle Lyon-Villeurbanne, Villeurbanne, France (Jean-Baptiste Putegnat, Frédérique Bayle, Maya Perrou, Ghyslaine Thao), APHP-Ambroise Paré, Boulogne-Billancourt, France (Guillaume Géri, Cyril Charron, Xavier Repessé, Antoine Vieillard-Baron), CHU Amiens Picardie, Amiens, France (Mathieu Guilbart, Pierre-Alexandre Roger, Sébastien Hinard, Pierre-Yves Macq), Hôpital Nord-Ouest, Villefranche-sur-Saône, France (Kevin Chaulier, Sylvie Goutte), CH de Châlons en Champagne, Châlons en Champagne, France (Patrick Chillet, Anaïs Pitta, Barbara Darjent, Amandine Bruneau), CHU Angers, Angers, France (Sigismond Lasocki, Maxime Leger, Soizic Gergaud, Pierre Lemarie), CHU Grenoble Alpes, Grenoble, France (Nicolas Terzi, Carole Schwebel, Anaïs Dartevel, Louis-Marie Galerneau), APHP-Hôpital Européen Georges Pompidou, Paris, France (Jean-Luc Diehl, Caroline Hauw-Berlemont, Nicolas Péron, Emmanuel Guérot), Hôpital Privé d'Antony, Antony, France (Abolfazl Mohebbi Amoli, Michel Benhamou, Jean-Pierre Deyme, Olivier Andremont), Institut Arnault Tzanck, Saint Laurent du Var, France (Diane Lena, Julien Cady, Arnaud Causeret, Arnaud De La Chapelle), Centre Hospitalier d’Angoulême, Angoulême, France (Christophe Cracco, Stéphane Rouleau, David Schnell), Centre Hospitalier de Cahors, Cahors, France (Camille Foucault), Centre hospitalier de Carcassonne, Carcassonne, France (Cécile Lory), CHU Nice–Hôpital L’Archet 2, Nice, France (Thibault Chapelle, Vincent Bruckert, Julie Garcia, Abdlazize Sahraoui), Hôpital Privé du Vert Galant, Tremblay-en-France, France (Nathalie Abbosh, Caroline Bornstain, Pierre Pernet), Centre Hospitalier de Rambouillet, Rambouillet, France (Florent Poirson, Ahmed Pasem, Philippe Karoubi), Hôpitaux du Léman, Thonon les Bains, France (Virginie Poupinel, Caroline Gauthier, François Bouniol, Philippe Feuchere), Centre Hospitalier Victor Jousselin, Dreux, France (Florent Bavozet, Anne Heron), Hôpital Saint Camille, Bry-sur-Marne, France (Serge Carreira, Malo Emery, Anne Sophie Le Floch, Luana Giovannangeli), Hôpital d’instruction des armées Clermont-Tonnerre, Brest, France (Nicolas Herzog, Christophe Giacardi, Thibaut Baudic, Chloé Thill), APHP-Hôpital Pitié Salpêtrière, Paris, France (Said Lebbah, Jessica Palmyre, Florence Tubach, David Hajage), APHP-Hôpital Avicenne, Bobigny, France (Nicolas Bonnet, Nathan Ebstein, Stéphane Gaudry, Yves Cohen), Groupement Hospitalier la Rochelle Ré Aunis, La Rochelle, France (Julie Noublanche, Olivier Lesieur), Centre Hospitalier Intercommunal de Mont de Marsan et du Pays des Sources, Mont de Marsan, France (Arnaud Sément, Isabel Roca-Cerezo, Michel Pascal, Nesrine Sma), Centre Hospitalier Départemental de Vendée, La-Roche-Sur-Yon, France (Gwenhaël Colin, Jean-Claude Lacherade, Gauthier Bionz, Natacha Maquigneau), Pôle Anesthésie-Réanimation, CHU Grenoble (Pierre Bouzat, Michel Durand, Marie-Christine Hérault, Jean-Francois Payen).

Funding

This study was funded by the Fondation APHP and its donors through the program “Alliance Tous Unis Contre le Virus”, the Direction de la Recherche Clinique et du Développement, and the French Ministry of Health. The Réseau Européen de recherche en Ventilation Artificielle (REVA) network received a €75,000 research grant from Air Liquide Healthcare. The funders had no role in the design and conduct of the study; the collection, management, analysis, and interpretation of the data; the preparation, review, or approval of the manuscript; or the decision to submit the manuscript for publication. The sponsor was Assistance Publique–Hôpitaux de Paris (APHP).

Author information

Contributions

MS designed the study, created the web calculator, analyzed and interpreted the data, drafted the manuscript, and obtained funding. BG, MF, LP, and AC designed the study, analyzed and interpreted the data, and revised the manuscript for important intellectual content. AD designed the study, analyzed and interpreted the data, revised the manuscript for important intellectual content, and obtained funding. MP created the web calculator, analyzed and interpreted the data, and drafted the manuscript. DH designed the study, created the web calculator, analyzed and interpreted the data, drafted the manuscript, and obtained funding. MS and DH are co-guarantors, had full access to all the data in the study, and take responsibility for the integrity of the data and the accuracy of the data analysis. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

COVID–ICU received approval from the French Intensive Care Society Ethics Committee (CE-SRLF 20–23) in accordance with local regulations. All patients, or close relatives, were informed that their data were included in the COVID–ICU cohort. This study was conducted in accordance with the amended Declaration of Helsinki.

Consent for publication

Not applicable.

Competing interests

Dr. Schmidt reports receiving personal fees from Getinge, Dräger, and Xenios, outside the submitted work. Dr. Demoule reports receiving personal fees from Medtronic; grants, personal fees, and non-financial support from Philips; personal fees from Baxter; personal fees from Hamilton; personal fees and non-financial support from Fisher & Paykel; grants from the French Ministry of Health; personal fees from Getinge; grants and personal fees from Respinor; and grants and non-financial support from Lungpacer, outside the submitted work. Dr. Combes reports grants and personal fees from Getinge and personal fees from Baxter and Xenios, outside the submitted work. No other disclosures were reported.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Number and percentage of missing variables in the SOSIC-1, SOSIC-7, and SOSIC-14 scores, respectively.

Additional file 2.

SHAP (SHapley Additive exPlanations) values to visualize the influence of each input variable on the final score.

Additional file 3.

Validation metrics of the SOSIC-1, SOSIC-7, and SOSIC-14 scores.

Additional file 4.

Correlation between SOSIC-1, SOSIC-7, and SOSIC-14.

Additional file 5.

Calibration and discrimination of the Survival of Severely Ill COVID (SOSIC)-1, SOSIC-7, and SOSIC-14 scores in centers from the greater Paris area and Grand Est vs. other regions.

Additional file 6.

Graphic representation of the performance of (A) SOSIC-7 and the SOFA score at day 7 and (B) SOSIC-14 and the SOFA score at day 14 in the development and test datasets.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schmidt, M., Guidet, B., Demoule, A. et al. Predicting 90-day survival of patients with COVID-19: Survival of Severely Ill COVID (SOSIC) scores. Ann. Intensive Care 11, 170 (2021). https://doi.org/10.1186/s13613-021-00956-9

DOI: https://doi.org/10.1186/s13613-021-00956-9