Abstract

The specificity of toxicant-target biomolecule interactions lends to the very imbalanced nature of many toxicity datasets, causing poor performance in Structure–Activity Relationship (SAR)-based chemical classification. Undersampling and oversampling are representative techniques for handling such an imbalance challenge. However, removing inactive chemical compound instances from the majority class using an undersampling technique can result in information loss, whereas increasing active toxicant instances in the minority class by interpolation tends to introduce artificial minority instances that often cross into the majority class space, giving rise to class overlapping and a higher false prediction rate. In this study, in order to improve the prediction accuracy of imbalanced learning, we employed SMOTEENN, a combination of Synthetic Minority Over-sampling Technique (SMOTE) and Edited Nearest Neighbor (ENN) algorithms, to oversample the minority class by creating synthetic samples, followed by cleaning the mislabeled instances. We chose the highly imbalanced Tox21 dataset, which consisted of 12 in vitro bioassays for > 10,000 chemicals that were distributed unevenly between binary classes. With Random Forest (RF) as the base classifier and bagging as the ensemble strategy, we applied four hybrid learning methods, i.e., RF without imbalance handling (RF), RF with Random Undersampling (RUS), RF with SMOTE (SMO), and RF with SMOTEENN (SMN). The performance of the four learning methods was compared using nine evaluation metrics, among which F1 score, Matthews correlation coefficient and Brier score provided a more consistent assessment of the overall performance across the 12 datasets. The Friedman’s aligned ranks test and the subsequent Bergmann-Hommel post hoc test showed that SMN significantly outperformed the other three methods. We also found that a strong negative correlation existed between the prediction accuracy and the imbalance ratio (IR), which is defined as the number of inactive compounds divided by the number of active compounds. SMN became less effective when IR exceeded a certain threshold (e.g., > 28). The ability to separate the few active compounds from the vast amounts of inactive ones is of great importance in computational toxicology. This work demonstrates that the performance of SAR-based, imbalanced chemical toxicity classification can be significantly improved through the use of data rebalancing.

Similar content being viewed by others

Introduction

Structure–activity relationship (SAR) has been frequently used to predict the biological activities of chemicals from their molecular structures. One of the major challenges in SAR-based chemical classification or drug discovery is the extreme imbalance between active and inactive chemicals [1]. Despite the existence of as many as 107 commercially available molecules [2], there is almost always a skew in the distribution of molecules across the bioactivity landscape or toxicity classes. Biomacromolecules such as proteins are often highly selective in their binding to small molecular ligands. Regardless of the huge chemical space, only a few compounds are likely to interact with a target biomacromolecule causing biological effects and are consequently labelled as active compounds, whereas the remaining majority are labelled as inactive compounds. This gives rise to a common problem of class imbalance for SAR-based predictive modeling, particularly in chemical classification and activity quantification using machine learning approaches [3,4,5].

In machine learning, classifiers are built on data statistics and require a balanced data distribution to achieve optimal performance. Classifiers trained from imbalanced data tend to have a bias towards the majority class. This leads to low sensitivity and precision for the minority class [6], even though the minority class is usually of greater importance than the majority class [7, 8]. In fields such as toxicology and disease diagnosis, bias towards the majority class may result in a higher rate of false negative predictions [1].

The problem of data imbalance has been studied in the context of machine learning-based SAR modeling for more than two decades [7, 9, 10]. As a result, a plethora of methods have been proposed to alleviate the skewness of class distribution. These methods can be grouped into three categories: data-level, algorithm-level, and hybrid [7, 11]. Data-level methods aim to rebalance the training dataset’s class distribution either by undersampling the majority class or oversampling the minority class [12, 13]. They also include methods that clean overlapping samples and remove noisy samples that may negatively affect classifiers [13, 14]. Algorithm-level methods attempt to alter a given learning algorithm by inducing cost sensitivity that biases a model towards the minority class. For example, this may be achieved by imposing a high misclassification cost for the minority class [7, 11]. Recently, Mondrian conformal prediction (MCP) has been applied to improve the performance of machine learning from imbalanced datasets by computing nonconformity scores to model the reliability of predictions. This allows for identifying reliable predications at user-defined significance and confidence levels [15,16,17,18,19]. The MCP approach does not require data rebalancing. Hybrid methods combine the use of resampling strategies with special-purpose learning algorithms [11]. Ensemble approaches (e.g., bagging and boosting), known to increase the accuracy of single classifiers, have also been hybridized with resampling strategies [6].

The selection of appropriate metrics plays a key role in evaluating the performance of imbalanced learning algorithms [11, 20]. In consideration of user preference (e.g., identifying rare active chemicals) and data distribution, a number of metrics have been proposed, including precision, recall, Area Under the Precision-Recall Curve (AUPRC) [21], Area Under the Receiver Operating Characteristics (AUROC) [22], F-measure, geometric mean (G-mean), balanced accuracy, etc. [23,24,25,26]. For instance, precision is not affected by a large number of negative samples because it measures the number of true positives out of the samples predicted as positives (i.e., true positive + false positive). A high AUPRC represents both high recall and high precision. High precision relates to a low false positive rate, and high recall relates to a low false negative rate [21, 27].

The present study was motivated by the scarcity of reported efforts in the application of the above-mentioned methods to the SAR-based chemical classification domain. We conducted a literature survey which only identified a few studies in this domain where cost-sensitive learning [28, 29], resampling [29, 30], conformal prediction [18] and extreme entropy machines [1, 31] were employed to specifically deal with data imbalance. Although predictive modeling was improved for certain datasets, a consistent performance enhancement was not observed as a result of resampling and algorithm modification. Apparently, more studies are warranted to further examine such questions as: (1) Does imbalance ratio (IR), i.e., inactive-to-active sample ratio, affect the effectiveness of data-level methods (particularly resampling methods)? (2) Would different data rebalancing techniques affect the performance of a classifier differentially, and does the combination of undersampling and oversampling techniques, such as SMOTEENN (SMOTE + ENN) [32], outperform an undersampling or oversampling technique alone? (3) What metrics can better evaluate the results of imbalanced learning in SAR-based chemical classification? This study attempted to address all three of these questions.

To address the first question, we selected twelve binary datasets of 10 K compounds with varying degrees of imbalance, which were generated within the Toxicology in the 21st century (Tox21) program [33] and used for the Tox21 Data Challenge 2014 [34, 35] (https://tripod.nih.gov/tox21/challenge/about.jsp). To address the other two questions, we chose nine evaluation metrics, compared three resampling algorithms integrated with the base classifier (random forest—RF), and performed statistical analysis to rank the metrics.

In this work, we selected RF as the base classifier and bagging as the ensemble learning algorithm to improve the stability and accuracy of model predictions. Then, we applied three representative resampling methods for data imbalance handling, i.e., random under-sampling (RUS), the synthetic minority over-sampling technique (SMOTE) and SMOTEENN (i.e., a combination of SMOTE and Edited Nearest Neighbor (ENN) algorithms). Consequently, four hybrid learning methods, i.e., RF without imbalance handling (RF), RF with RUS (RUS), RF with SMOTE (SMO), and RF with SMOTEENN (SMN) were tested. Here, we did not intend to conduct a comprehensive or exhaustive comparative investigation of all existing imbalance handling methods, but rather to use this case study to demonstrate that appropriate handling of imbalanced data and the choice of appropriate evaluation metrics could improve SAR-based classification modelling. We also investigated the performance of these existing approaches and highlighted their limitations regarding imbalance ratio. The rest of the paper is organized as follows: “Materials and methods” section covers the study design, data curation and preprocessing steps, imbalance handling methods, and performance metrics. “Results and discussion” section presents our classification performance results, statistical analysis, and a comparison with published results for the Tox21 datasets. Lastly, “Conclusions” section briefly summarizes the major findings from this study and concludes with some remarks on future research needs.

Materials and methods

Study design

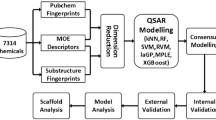

The workflow of our study design is outlined in Fig. 1. It consists of data preprocessing, feature generation and selection, resampling, model training (ensemble learning), model testing and performance evaluation. The data preprocessing and feature generation steps were applied to a total of 12,707 compounds in the raw dataset of 12 assays. However, feature selection, resampling and training of classifiers were conducted separately for each individual assay. For each assay, the preprocessed compounds in the training set were split into N stratified bootstrap samples with replacement (i.e., samples were randomly selected but retained the same imbalance ratio). This was followed by ensemble learning either without resampling (RF) or with the application of a resampling technique (RUS, SMOTE, or SMOTEENN). Optimal parameters for each base learner were obtained via grid search with fivefold cross validation. Optimized base learners were combined to form the final ensemble learner. Evaluation metrics were calculated using the prediction results of RF, RUS, SMO and SMN to statistically compare their performance. Details of the workflow are presented below.

Chemical in vitro toxicity data curation

The Tox21 Data Challenge dataset used in this study consisted of 12 quantitative high throughput screening (qHTS) assays for a collection of over 10 K compounds (with redundancy within and across assays). The 12 in vitro assays included a nuclear receptor (NR) signaling panel and a stress response (SR) panel. The NR panel comprised 7 qHTS assays for identifying compounds that either inhibited aromatase or activated androgen receptor (AR), aryl hydrocarbon receptor (AhR), estrogen receptor (ER), or peroxisome proliferator-activated receptor γ (PPAR-γ). The SR panel contained 5 qHTS assays for detecting agonists of antioxidant response element (ARE), heat shock factor response element (HSE) or p53 signaling pathways, disruptors of the mitochondrial membrane potential (MMP), or genotoxicity inducers in human embryonic kidney cells expressing luciferase-tagged ATAD5. There were three sets of chemicals: a training set of 11,764 chemicals, a leaderboard set of 296 chemicals and a test set of 647 chemicals [35]. For this study, we merged the leaderboard set with the original training set to form our “training set” and retained the original test set as our “test set”. The Tox21 dataset was downloaded in SDF format at https://tripod.nih.gov/tox21/challenge/data.jsp. There were four possible assay outcomes for each compound: active, inactive, inconclusive or not tested. Only those chemicals labeled as either active (1) or inactive (0) were retained for this study.

Compound preprocessing and chemical descriptor (feature) generation

Chemical structures were also downloaded at https://tripod.nih.gov/tox21/challenge/data.jsp as SMILES files. Data standardization/cleaning was carried out using MolVS [36], a publicly available tool built on RDKit [37]. Standardization involved a fragmentation step as described in [25] where compounds possessing distinct structures not linked by covalent bonds were split into separate “compound fragments”. Then, solvent fragments, salts and problematic molecules with inconsistent resonance structures and tautomers [38], which should not contribute to the biological effect of a compound [39], were removed. The resulting SMILES entries were canonicalized by standardizing chemotypes such as nitro groups and aromatic rings, and the largest uncharged fragments of the compound were retained. After standardization, the resulting fragments were merged based on their reported activity to exclude replicates and conflicting instances. Specifically, only one instance of a set of duplicates was retained with the most frequent activity label, while duplicates with ambiguous activity labels (i.e., an equal number of active and inactive outcomes for the same chemical) were removed. Three types of molecular features (> 2000 in total), i.e., RDKit descriptors, MACCS (Molecular ACCess System) keys and Extended-Connectivity Fingerprints (ECFPs) [40] with a radius of 2 and a fixed bit length of 1024, were generated using RDKit [37] to characterize the final set of compounds. All features with zero variance were dropped.

Sampling and classification methods

Here we briefly describe the three resampling techniques (i.e., RUS, SMOTE and SMOTEENN) that we used for handling imbalanced data with RF chosen as the base classifier.

RUS

RUS is a widely used undersampling technique which randomly removes samples from the majority class. In our study, RUS was used to randomly remove inactive compounds. While RUS alleviates imbalance in the dataset, it may potentially discard useful or important samples and increase the variance of the classifier. Recent studies have shown that the integration of RUS with ensemble learning can achieve better results [6, 41]. To overcome its drawbacks, we combined RUS with bagging (an ensemble learning algorithm) for SAR-based chemical classification.

SMOTE

SMOTE is an oversampling technique that creates synthetic samples based on feature space similarities between existing examples in the minority class [12]. It has shown a great deal of success in various applications [20]. To create a synthetic data sample, we first took a sample from the dataset of the minority class and considered its k-nearest neighbors based on Euclidian distance to form a vector between the current data point and one of those k neighbors. The new synthetic data sample was obtained by multiplying this vector by a random number α between 0 and 1 and adding the product to the current data point. More technical details on how to create synthetic samples are described in the Additional file 1: Figure S1 and in [12, 20]. Applying SMOTE to the minority class instances can balance class distributions [12] and augment the original dataset in a manner that generally significantly improves learning [20].

SMOTEENN

Despite many promising benefits, the SMOTE algorithm also has its drawbacks, including over generalization and variance [20]. In many cases, class boundaries are not well defined since some synthetic minority class instances may cross over to appear in the majority class space, especially for nonlinear data with a large feature space [42]. As a result, some new synthetic samples in the minority class may be mislabeled and attempting to learn from such datasets often results in a higher false prediction rate [43]. To remove the mislabeled samples created by the SMOTE technique, we applied SMOTEENN [32], a combination of SMOTE and the Edited Nearest Neighbor (ENN) [44] algorithm, to clean the synthetic data samples.

In the ENN algorithm, the label of every synthetic instance is compared with the vote of its k-nearest neighbors. The instance is removed if it is inconsistent with its k-nearest neighbors; otherwise, it remains in the dataset. The process of removing mislabeled samples and retaining the valid synthetic instances is illustrated in the Additional file 1: Figure S1c. A higher k value in the edited nearest neighbors algorithm leads to a more stringent cleaning rule that allows more synthetic instances to be eliminated. Applying SMOTEENN to an imbalanced dataset does not automatically result in a perfectly balanced set after resampling, but it creates more meaningful synthetic samples in the minority class and reduces the imbalance ratio to a more manageable level.

RF and ensemble learning

RF is a robust supervised learning algorithm that has been widely used for classification in many applications in data science [45]. An RF model consists of many individual decision trees that operate as an ensemble. The individual decision trees are generated using a random selection of features at each node to determine the split. During classification, each tree votes and the class with most votes becomes the model’s prediction.

RF can be built [46] and improved [47] using bagging (short for bootstrap aggregation). Bagging is a common ensemble method that uses bootstrap sampling in which several base classifiers are combined (usually by averaging) to form a more stable aggregate classifier [48]. Each base classifier (RF in this study) in the ensemble is trained on a different subset of the training dataset obtained by random selection with replacement, thus introducing some level of diversity and robustness. It is well known that the bagging classifier is more robust in overcoming the effects of noisy data and overfitting, and it often has greater accuracy than a single classifier because the ensemble model reduces the effect of the variance of individual classifiers [6, 48, 49].

In our case, the Tox21 dataset was both highly dimensional and highly imbalanced [6, 50]. For datasets with such a large feature space and a small number of minority class samples, classification often suffers from overfitting. Because bagging is less susceptible to model overfitting, we chose it as the ensemble method. Combining the base classifier RF with three sampling techniques (RUS, SMO and SMOTEENN) and bagging, we assembled four hybrid classification methods: (1) RF without resampling, (2) RF + RUS, (3) RF + SMO, and (4) RF + SMOTEENN. For more convenient result analysis, the four methods were simply denoted as RF, RUS, SMO and SMN, respectively.

Here we use SMN as an example to illustrate the algorithm that integrates resampling with ensemble learning (see Algorithm 1 and Fig. 1). First, a subset, \({S}_{i}\), was obtained by taking a stratified bootstrap sampling from the training set, \(X\). This sampling process was repeated N times, where i = 1 to N, with N ranging between 5 and 100 in steps of 5. Stratification was employed to ensure that each bootstrap had the same class distribution as the entire training set. Each subset is used to train a classifier in the ensemble, hence N is also equivalent to the number of classifiers. Then, the SMOTEENN algorithm was applied to \({S}_{i}\) to oversample the minority class and obtain an augmented training subset \(S^{\prime}_{i}\), which was used to train a random forest classifier \({f}_{i}\left(x\right)\). The parameters for each classifier in the ensemble were selected using a grid search with a fivefold cross-validation. This would give every individual classifier a chance to attain its best performance and contribute optimally to the ensemble. The final ensemble model was a bagged classifier that would count the votes of the N classifiers and assign the class with the most votes to a chemical in the test dataset. The other three methods RF, RUS and SMO also employed Algorithm 1 with the only difference being the resampling technique, i.e., no resampling, RUS and SMOTE, respectively. All classifiers were implemented using the Scikit-learn package [51] and Imbalanced-learn in a Python toolbox [52].

Performance evaluation metrics

The output of a binary classification model can be primarily represented by four terms: (1) true positive (TP) defined as the number of true active chemicals that are correctly predicted as active by the model; (2) false positive (FP) as the number of true inactive chemicals incorrectly predicted as active; (3) true negative (TN) as the number of true inactive chemicals correctly predicted as inactive; and (4) false negative (FN) as the number of true active chemicals incorrectly predicted as inactive. Most evaluation metrics are derived from these four terms. True positive rate (TPR), also referred to as sensitivity or recall, represents the fraction of correctly predicted active chemicals. In SAR modeling, recall is also considered as a measure of the accuracy of the active (minority) class. True negative rate (TNR) or specificity provides a similar measure (accuracy) for the inactive (majority) class. Precision estimates the probability of a model to make a correct active class prediction. F1 score is the harmonic mean of precision and recall. Similarly, balanced accuracy (BA) is the average of correct predictions for both classes. Matthews correlation coefficient (MCC) offers a good index for the performance of imbalanced classification tasks as it incorporates all the components of the confusion matrix [53]. MCC has been widely used to evaluate the performance of SAR-based chemical classification [34, 54]. The MCC value varies in the range of [− 1, 1] with − 1 implying disagreement, 1 complete agreement and 0 no correlation between the prediction and the known truth. The Brier score is a measure of the average squared difference between the predicted probabilities and the known value for a class, and it assesses the overall accuracy of a probability model. The formulas of these evaluation metrics are given as follows:

where N is the total number of chemicals in a dataset, \({p}_{i}\) (\(\in \left[\mathrm{0,1}\right])\) is the predicted probability, and \({o}_{i}\) is the ground truth for the ith chemical (equal to 1 for active and 0 for inactive).

In addition, the two widely used metrics AUROC and AUPRC were also calculated using Scikit-learn [51] to evaluate and compare the overall performance of a classifier against another. Finally, sensitivity–specificity gap (SSG), calculated as the absolute value of the difference between sensitivity and specificity, was introduced as a metric to evaluate how balanced a classifier was in terms of its performance on these two metrics [13].

We performed statistical analysis to assess if there existed significant differences among the four investigated classification methods in terms of their performance metrics across the twelve bioassays (Table 1). We adopted a nonparametric test for multiple comparisons as described in Garcia et al. [55]. Using the Statistical Comparison of Multiple Algorithms in Multiple Problems (scmamp) library in R [56], we conducted a Friedman’s aligned-rank test [57]. The Friedman test was chosen over other statistical tests such as ANOVA because it does not require the assumption of data normality. The Bergmann-Hommel post-hoc test was carried out for pairwise comparisons between SMN and the other three methods (RF, RUS and SMO) [54].

Results and discussion

In this section, we present (1) a summary of the curated and preprocessed Tox21 dataset, (2) the preliminary comparative results to justify the selection of RF as the base classifier, (3) parameter optimization for RF and ENN algorithms, (4) performance metrics of four classification methods for the twelve imbalanced Tox21 datasets, (5) the impact of IR and classification methods on prediction performance, and (6) a comparison between this study and published Tox21 studies.

Data curation and preprocessing

A summary of the preprocessed training and test datasets of chemicals and their activities measured by 12 qHTS in vitro assays is presented in Table 1. Although the original raw Tox21 datasets contained more than 12 K chemicals, approximately 50% of them or fewer were retained for each assay after preprocessing. This was primarily due to duplication and the absence of testing data for individual assays. The imbalanced ratio (IR), defined as the ratio of the number of the majority class (inactive compounds) to that of the minority class (active compounds) [42], varied widely between assays and between the training and the test sets. Such large disparities offered a great opportunity to investigate the performance of different ensemble-resampling approaches as a function of IR (see below for detailed results). In the training datasets, the highest IR of 41.7 appeared in the dataset of the NR-PPAR-γ assay, whereas the lowest IR of 5.7 was observed with the SR-MMP assay. The test datasets generally had IRs larger than or equivalent to those of their corresponding training datasets, e.g., measuring as high as ~ 70 for NR-AR-LBD (except for NR-Aromatase, NR-PPAR-γ, and SR-ATAD5).

Selecting RF as the base classifier

A comparison of six popular machine learning algorithms, i.e., RF, K-nearest neighbors (KNN), decision trees (CART), Naïve Bayes (NB), support vector machine (SVM) and multilayer perceptron (MLP), was performed using the training datasets of all twelve assays and a stratified fivefold cross validation. These algorithms were all implemented in Scikit-learn [51] with default parameter settings. The purpose of this preliminary study was to select a base classifier from these algorithms. F1 score was calculated and used as the metric to evaluate classification performance. As shown in Fig. 2, RF was the frontrunner for four of the 12 assay datasets, including NR-AR-LBD, SR-ARE, SR-HSE, and SR-MMP. RF was the second best performer for another five assays (i.e., NR-AR, NR-ER, NR-ER-LBD, NR-PPAR-γ, and SR-p53). The average F1 score of RF for all 12 assays was the highest (0.2783) among all six algorithms, and the runner-up was MLP with an average F1 score of 0.2487. Clearly, RF outperformed the other five algorithms on the Tox21 dataset, which informed our decision to proceed with choosing RF as the base classifier and to focus our study on imbalance handling methods.

Furthermore, the RF classifier was widely used by the participating teams in the Tox21 Data Challenge [28, 48]. Two of the winning teams developed RF models that achieved the best performance in predicting compound activities against AR, aromatase, and p53 [58] as well as ER-LBD [59]. Using the same RF classifier and the same dataset made it convenient to compare our results with those from the participating teams and allowed us to better investigate the impact of resampling methods on improving imbalanced learning and, consequently, improving classification performance (see “Comparison with Tox21 Data Challenge winners” section below for more info).

Parameter optimization for the RF classifier

It is generally accepted that the accuracy of a classifier ensemble is positively correlated with ensemble diversity [60]. Here, we adjusted the ensemble diversity by randomly selecting data instances to create the bootstrap samples (see Fig. 1) and by increasing the number of classifiers included in the ensemble. Figure 3 shows that the performance of classifier ensembles measured by the average F1 score, AUPRC, AUROC and MCC for all four methods changes with the varying number of classifiers in the ensemble. A plateau was encountered when the number of classifiers reached 30, which may have been the optimal number of classifiers in this situation. After this point, there was little improvement in performance as the number of classifiers increased. Even if minor improvements were noticed using 100 classifiers for some metrics (e.g., MCC), this dramatically increased the computational time and resources needed to train the model. The relationship between performance and the number of classifiers may be explained by the importance of diversity in ensemble learning. With every bootstrap sample being different from another in terms of chemical composition and fingerprint features, diversity in the bagging ensemble was inherent. However, as the number of classifiers increased, the number of times (frequency) that a sample was selected from the same population also increased. This would result in a decline in the variance between such bootstrap samples or a flat line in ensemble diversity. Consequently, a flat line was observed in performance metrics as the number of classifiers in an ensemble increased from 30 to 100 (Fig. 3). In the subsequent experiments, we adopted the optimal number of 30 classifiers for ensemble learning.

Optimal number of nearest neighbors (k) in the ENN algorithm of SMN models

Another parameter we optimized was the k value in the ENN algorithm. The choice of a synthetic instance to be removed from the training set is determined by the voting of its k neighbors. As shown in Fig. 4, we varied the number of nearest neighbors k from 1 to 5, and 3 appeared to be the optimal k value for most of the five measured performance metrics. F1 score and AUPRC peaked at k = 3, BA plateaued when k = 3 or 4, whereas MCC peaked earlier at k = 2. AUROC was the only metric not affected by the change in k value. Thus, the k value was set at 3 for SMN in this study.

By setting k at this optimal value, ENN may help increase the classifier’s generalizability by removing noisy (mislabeled) synthetic instances introduced in the SMOTE step. By reducing the amount of noise in the dataset while reducing imbalance, it is expected that the class boundaries between active and inactive compounds can be better defined. A reduction in noisy instances can also reduce the chance of over-fitting. This is essentially where the power of SMN lies. However, further increments in the k value beyond the optimum led to a decline in classifier performance.

Performance evaluation metrics

Table 2 reports nine performance metrics and their average values for four classification methods (RF, RUS, SMO and SMN) for 12 bioactivity assays, with the best performer highlighted in bold for each evaluation metric and assay. The derived specificity results are reported alone with sensitivity and SSG results in Additional file 1: Table S1. For each assay, the training dataset was employed to train a classifier using four different algorithms, and then the trained classifier was applied to the test dataset to determine performance metrics as described in the “Materials and methods” section (also see Fig. 1). The reported values varied greatly depending on metrics, assays and algorithms. For instance, AUROC has the highest values averaged at 0.8049, whereas MCC has the lowest mean value of 0.2945. This is not surprising as different metrics measure different aspects of learning algorithm performance and trained model quality [61].

We excluded accuracy (the ratio of correct predictions to the total number of chemicals) and specificity from the metrics panel presented in Table 2 because accuracy may be misleading in evaluating model performance for highly imbalanced classification [22]. Specifically, a high accuracy does not translate into a high capability of the prediction model to correctly predict the rare class, whereas specificity is less relevant since we are more interested in the positive class (active minority). However, the nine chosen metrics in the panel are not necessarily the ideal ones for evaluating the performance of classification with a skewed class distribution. For instance, both AUROC and AUPRC can provide a model-wide evaluation of binary classifiers [27]. Although AUROC, proposed as an alternative to accuracy [22], is unaffected by data skewness [62], it may provide an excessively optimistic view of an algorithm’s performance on highly imbalanced data [21]. AUPRC, on the other hand, is affected by data imbalance [62], but it is a more informative and more realistic measure than AUROC for imbalanced classification [27]. Another example is precision and recall, both of which depend on a threshold selected to determine if a chemical compound is active or inactive. A higher recall may be obtained by setting a lower threshold (increasing the number of TP predictions and decreasing the number of FN predictions), which results in a lower precision (more FP predictions). On the other hand, raising the threshold for labeling active chemicals may benefit precision but hurt recall. Optimizing both precision and recall occurs with a tradeoff, especially with imbalanced data. F1 score appears to be a balanced trade-off between precision and recall. Nevertheless, like AUPRC, F1 score is also attenuated by data skewness [62]. SSG, a good indicator of balance between sensitivity and specificity [13], may become an inefficient performance metric when both sensitivity and specificity are low. For such applications as predictive toxicology and drug discovery, one may be more interested in improving sensitivity instead of reducing SSG due to the rarity of positive instances. Given the pros and cons of these metrics, it is necessary to use a suite of metrics for performance evaluation. Hence, we calculated the “average” of the nine metrics (Table 2) which may serve as a comprehensive indicator of model performance. However, its formula (e.g., membership composition, weight of each component metric, and normalization method) and applicability still require further investigation.

Impact of imbalance ratio on performance metrics

The variation in the same performance metrics between different assay datasets is as high as 87% CV (Table 2), suggesting that dataset properties (IR in particular) have a significant impact. Nevertheless, systematic assessment of the impact of IR on prediction accuracy remains a challenging problem. The IRs in our assay datasets varied from 5 to 70 (Table 1). We calculated correlation coefficients (CCs) between log2(IR) and the score of five evaluation metrics (Table 3). Except for the CCs between AUROC and RF/RUS/SMO, there exists a significant negative correlation between IR (of the test datasets) and the performance evaluation metrics F1 score, MCC, BA, AUPRC, AUROC, and the average of all 9 chosen metrics. This is consistent with earlier reports on the adverse effects of IR on these metrics [62]. The statistically significant positive correlation between IR and SSG suggests that higher IRs would increase SSG, which is also undesirable.

To investigate how IR affects the extent of performance improvement obtained by different resampling techniques, the scores of four metrics (F1 score, MCC, SSG and the average of 9 metrics) of all twelve assays are plotted against their log2IR (see Fig. 5). For MCC, F1 score and the average of 9 metrics, the trend line of SMN is well above those of SMO, RUS and RF, indicating that SMN performed better than other classifiers. The trend lines of SMO and RUS intertwine with that of RF, suggesting that both SMO and RUS did not consistently improve the performance metrics over the base classifier RF. In addition, the SMN trend line intercepts with the other three at about log2IR = 4.8 (for average), 5.5 (for MCC) or 6.1 (for F1 score), suggesting that a metric-specific IR between 28 and 70 is likely the threshold at which SMN can outperform other classifiers. The lower the IR value is, the more improvements SMN can achieve, compared to the RF, RUS and SMO classifiers. When IR approaches the threshold, the improvements are insignificant. These results demonstrate the limitation of data rebalancing techniques and also provide useful feedback for data acquisition. If evaluated by the SSG metric (the smaller, the better), RUS outperformed SMN and the other two algorithms, suggesting that SMN had limited power in narrowing the gap between sensitivity and specificity. Whenever possible, we should increase the number of active compounds to reduce the imbalance ratio in order to obtain more accurate predictions in SAR-based chemical classification.

Impact of resampling techniques on classifier performance

The effect of algorithm choice is partially reflected by a change of 0.1263 in the average metrics score from RF (0.1854) to SMN (0.3116) (Table 2). We also calculated the average Friedman ranking of each classifier [55] by ranking the four algorithms from 1 to 4 based on their performance on each assay dataset. The best classifiers were assigned a rank of 1 and the worst classifiers were assigned a rank of 4. The algorithm with the lowest average rank is considered the best for a specific metric. As shown in Fig. 6, SMN outperformed the other algorithms (RF, RUS and SMO) in terms of four metrics (F1 score, AUPRC, AUROC and MCC) and was only slightly surpassed by the frontrunner RUS for the BA metric. Taking F1 score as an example, SMN performed better in seven of the 12 assay datasets, followed by RUS which was the best performer for three assays (Table 2). More interestingly, the magnitude of improvement offered by SMN from the next best method ranged from approximately 8% for the NR-ER-LBD dataset to as much as 27% for the SR-ARE and NR-Aromatase datasets. Understandably, the baseline classifier RF had the worst average performance even though its parameters were also optimized. SMN demonstrated a better F1 score in most cases because of its ability to improve recall without excessively lowering precision. A moderately higher recall value with comparable precision positively impacts the F1 score.

Average Friedman ranks of the four classification methods (RF, RUS, SMO and SMN) with respect to five metrics (F1 score, AUPRC, AUROC, MCC and BA). Error bars represent standard errors. See Table 4 for statistical significance in the difference between classifiers

The Friedman’s Aligned Rank Test for Multiple Comparisons [55] was performed to further examine the statistical significance of the algorithmic effects of resampling techniques. Our null hypothesis was that all four algorithms had similar capability in classification measured by nine metrics for 12 datasets. Results shown in Table 4 suggest that all metrics except AUPRC were significantly affected by the resampling algorithm (p < 0.05). The Bergmann-Hommel post hoc analysis was applied to compare pairwise performance metrics of SMN against the other three classifiers. SMN differed more from RF than from SMO and RUS because one, two, and five metrics were insignificantly different (p > 0.05) between SMN and RF, SMN and SMO, and SMN and RUS, respectively. F1 score, MCC and Brier score showed significant difference among the four classifiers in both multiple and pair-wise comparisons. For instance, SMN had the lowest average Brier score of 0.3312 ± 0.0509 (average ± standard error) in comparison with SMO (0.4109 ± 0.0627), RUS (0.3894 ± 0.0361), and the baseline classifier RF (0.3967 ± 0.0395). A lower Brier score indicates that the predictions of a classifier are more accurate because they are closer to the ground truth. MCC, a metric widely used to evaluate the performance of SAR-based chemical classification [63, 64], embodies all the components of the confusion matrix and hence presents a reliable summary of the performance of models trained on imbalanced data.

On the contrary, AUPRC was the sole metric that did not differ significantly in any of the comparisons. AUPRC computes the area under the precision-recall curve that is obtained by using the output of the precision function at different recall levels to assess the overall performance of a prediction model [51]. SMN showed improved AUPRC scores compared to the other algorithms. However, this improvement was not very substantial. Unlike F1 score, which benefits from a varied classification threshold, minor improvements in the probabilities for each class do not translate to a marked improvement in the AUPRC score. This is because, being a threshold-independent metric, AUPRC computes the entire area under the curve for the plot of precision versus recall at all possible thresholds. Nevertheless, SMN still showed the best performance in 33% (4/12) of cases tested, RF and SMO in 25% (3/12) each, and RUS in 16% (2/12).

The above results suggest that AUPRC is not sensitive to algorithmic effects, whereas F1 score, MCC and Brier score are sensitive metrics that can distinguish among the classifiers by their performance. These results also indicate that SMN was the best performer, followed by RUS, while SMO and RF had the poorest performance with the Tox21 datasets. When looking at the average of all 9 metrics (Table 2), SMN and RUS ranked the best for 6 and 5 assays, separately, whereas RF only had the best performance with the NR-AR assay and SMO always underperformed across all 12 assays. These results led us to speculate that the activity landscape of the majority class (inactive compounds) may be more continuous and smooth than that of the minority class (active compounds) [65]. Consequently, removing some instances from the majority class would not affect class boundaries. On the contrary, adding synthetic instances to the minority class (SMOTE) may introduce noise along the borderlines, leading to the loss of activity cliffs and mislabeling of the synthetic instances [66]. The ENN algorithm may effectively remove those synthetic outliers and restore the activity cliffs and class boundaries, leading to enhanced prediction performance for SMOTEENN (SMN) [67]

Comparison with Tox21 Data Challenge winners

In this section, we compared the prediction performance of the four classifiers in this study with those developed by the winning teams for each of the assays in the Tox21 Data Challenge [34]. The winning team for each sub-challenge was judged by AUROC (and BA if there was a tie in AUROC [35]). The AUROC and BA scores of the top ten ranked teams are posted at (https://tripod.nih.gov/tox21/challenge/leaderboard.jsp). The 12 assay sub-challenges were won by four teams: Bioinf@JKU, Amaziz, Dmlab and Microsomes. Bioinf@JKU developed DeepTox models using deep learning [25] and won six out of the 12 assay sub-challenges (NR-AhR, NR-AR-LBD, NR-ER, NR-PPAR-γ, SR-ARE, and SR-HSE) in addition to the Grand Challenge and two additional sub-challenges for the Nuclear Receptor Panel and the Stress Response Panel. Amaziz [68] employed associative neural networks to develop winning models for SR-ATAD5 and SR-MMP assays, and had the best overall BA score. Dmlab [58] used multi-tree ensemble methods, such as Random Forests and Extra Trees, to produce winning models for three assays (i.e., NR-AR, NR-aromatase and SR-p53). Microsomes [59] chose Random Forest for descriptor selection and model generation, and produced the best performing NR-ER-LBD model. For the purpose of comparison, we selected Dmlab and Microsomes because they used Random Forest. We also compared our best classifier with the winner of each assay sub-challenge. Given the over-optimistic nature of AUROC, the BA metric provides a more realistic and reliable measure for performance comparison. The titles of the best BA scores were shared by five teams: Kibutz (1 assay), Bioinf@JKU (2), Amaziz (2), T (3), and StructuralBioinformatics@Charite (4). The AUROC and BA scores of the winning teams are shown in Table 5 side by side with those of our best performing classifiers because they are the only metrics available for the Tox21 Data Challenge.

Although the AUROC and BA metrics are not ideal for evaluating imbalanced classification, we made the comparison to demonstrate that the improvement obtained from imbalance pre-processing enabled our classifiers to perform equally well or outperform the winning models of the Tox21 Data Challenge. This is primarily reflected by the following observations: (1) our best classifiers outperformed Dmlab and Microsomes in terms of both AUROC and BA by large margins with only four exceptions (NR-AR, NR-PPAR-γ, SR-ATAD5 and SR-MMP), where Dmlab exceeded our best classifiers in AUROC by less than 4%; (2) our best classifiers had the same or higher AUROC and a higher BA than challenge winners for six and three assays, respectively, with less than 8% (AUROC) or 17% (BA) difference for the remaining assays; and (3) on average, our best classifiers performed almost equally as well as the challenge winners as a whole (Table 5). The last two columns in Table 5 report the comparison between our best classifier and the winner of Tox21 Challenge in terms of BA and AUROC ratios, with a value greater than 1 indicating that our model performed better than the Challenge winning model. These results (particularly the BA scores) not only establish the validity, credibility and scientific soundness of the approach, methodology and algorithms implemented in this study, but also demonstrate that the excellence of our work reached levels comparable to that of the Tox21 Data Challenge winners.

It is also worth noting that Banerjee et al. [13] performed similar work on three Tox21 datasets (AhR, ER-LDB, and HSE). They employed RF as the base classifier (without ensemble learning) and applied eight different undersampling or oversampling techniques (including random undersampling and SMOTE). Similar to this study, their work also demonstrated that dataset and resampling techniques had significant impacts on classification outcome and that such impacts varied from one metric to another with sensitivity and F-measure being more sensitive than AUROC and accuracy.

Another study worth mentioning described how Norinder and Boyer [16] achieved balanced prediction performance with sensitivity and specificity (for the external test dataset) both attaining 0.70 − 0.75 when they applied MCP to the similar ToxCast and Tox21 datasets of estrogen receptor assays and used SVM as the classifier. These results are far superior to those obtained using SVM or RF alone without resampling or MCP [16, 69], but they are only slightly better than the performance of RUS with sensitivity at 0.69 or 0.55 (Table 2) and specificity at 0.61 or 0.84 (Additional file 1: Table S1) obtained in our study. Therefore, it warrants further in-depth investigations to compare side-by-side resampling with MCP and MCP + resampling using the same machine learning algorithms, the same raw datasets, and the same preprocessing procedure.

Conclusions

Due to the specificity of toxicant-target biomolecule interactions, SAR-based chemical classification studies are often impeded by the imbalanced nature of many toxicity datasets. Furthermore, class boundaries are often blurred since active toxicants often appear in the minority class. In order to address these issues, common resampling techniques can be applied. However, removing majority class instances using an undersampling technique can result in information loss, whereas increasing minority instances by interpolation tends to further obfuscate the majority class space, giving rise to over-fitting. In order to improve the prediction accuracy attained from imbalanced learning, SMOTEENN, a combination of SMOTE and ENN algorithms, is often employed to oversample the minority class by creating synthetic samples, followed by cleaning the mislabeled instances. Here, we integrated an ensemble approach (bagging) with a base classifier (RF) and various resampling techniques to form four learning algorithms (RF, RUS, SMO and SMN). Then, we applied them to the binary classification of 12 highly imbalanced Tox21 in vitro qHTS bioassay datasets.

We generated multiple sets of chemical descriptors or fingerprints and down-selected small groups of features for use in class prediction model generation. After data preprocessing, parameters were optimized for both resampling and classifier training. The performance of the four learning methods was compared using nine evaluation metrics, among which F1 score, MCC and Brier score provided more consistent assessment of the overall performance across the 12 datasets. The Friedman’s aligned ranks test and the subsequent Bergmann-Hommel post hoc test showed that SMN significantly outperformed the other three methods. It was also found that there was a strong negative correlation between prediction accuracy and IR. We observed that SMN became less effective when IR exceeded a certain threshold (e.g., > 28). Therefore, SAR-based imbalanced learning can be affected by the degree of dataset skewness, resampling algorithms, and evaluation metrics. We recommend assembling a panel of representative, diversified and imbalance-sensitive metrics, developing a comprehensive index from this panel, and using the index to evaluate the performance of classifiers for imbalanced datasets.

The ability to separate the small number of active compounds from the vast amounts of inactive ones is of great importance in computational toxicology. This work demonstrates that the performance of SAR-based, imbalanced chemical toxicity classification can be significantly improved through imbalance handling. Although the best classifiers of this study achieved the same level of performance as the winners of the Tox21 Data Challenge as a whole, we believe that there is still plenty of room for further improvement. Given the exceptionally outstanding performance of DeepTox [25] and our own experience with deep learning-based chemical toxicity classification [70], our future plan is to replace RF with a deep learning algorithm like deep neural networks as the base classifier and combine it with class rebalancing techniques to build novel deep learning models for SAR-based chemical toxicity prediction. We are also interested in pursuing a novel approach by integrating MCP, resampling and ensemble strategies to further improve the robustness and performance of imbalanced learning.

Availability of data and material

The dataset supporting the conclusions of this article is available at https://tripod.nih.gov/tox21/challenge/data.jsp in sdf and smi formats. The source code of this article is available at https://github.com/Idakwo/SAR_Imbalance_SMOTEENN.

Abbreviations

- ANOVA:

-

Analysis of variance

- AUPRC:

-

Area Under the Precision-Recall Curve

- AUROC:

-

Area Under the Receiver Operating Characteristics

- BA:

-

Balanced accuracy

- CART:

-

Classification and Regression Trees (Decision trees)

- CC:

-

Correlation coefficient

- CV:

-

Coefficient of variation

- df:

-

Degree of freedom

- ENN:

-

Edited Nearest Neighbor (ENN) algorithm

- FN:

-

False negative

- FP:

-

False positive

- IR:

-

Imbalance ratio

- KNN:

-

K-nearest neighbors

- MCC:

-

Matthews correlation coefficient

- MLP:

-

Multilayer perceptron

- NB:

-

Naïve Bayes

- RF:

-

Random Forest classification method

- RUS:

-

Random Undersampling

- SAR:

-

Structure–Activity Relationship

- SMN:

-

RF classification method with SMOTEENN technique

- SMO:

-

RF classification method with SMOTE technique

- SMOTE:

-

Synthetic Minority Over-sampling Technique (SMOTE)

- SMOTEENN:

-

Combined the SMOTE technique with the ENN algorithm

- SVM:

-

Support vector machine

- TN:

-

True negative

- TNR:

-

True negative rate

- Tox21:

-

Toxicology in the 21st Century program

- TP:

-

True positive

- TPR:

-

True positive rate

References

Czarnecki WM, Rataj K (2015) Compounds activity prediction in large imbalanced datasets with substructural relations fingerprint and EEM. 2015 IEEE Trustcom/BigDataSE/ISPA. IEEE, Helsinki, pp 192–192

Irwin JJ, Sterling T, Mysinger MM et al (2012) ZINC: a free tool to discover chemistry for biology. J ChemInf Model 52:1757–1768. https://doi.org/10.1021/ci3001277

Dahl GE, Jaitly N, Salakhutdinov R (2014) Multi-task neural networks for QSAR predictions. https://arxiv.org/abs/1406.1231. Accessed 6 Oct 2017

Darnag R, Mostapha Mazouz EL, Schmitzer A et al (2010) Support vector machines: development of QSAR models for predicting anti-HIV-1 activity of TIBO derivatives. Eur J Med Chem 45:1590–1597. https://doi.org/10.1016/j.ejmech.2010.01.002

Polishchuk PG, Muratov EN, Artemenko AG et al (2009) Application of random forest approach to QSAR prediction of aquatic toxicity. J ChemInf Model 49:2481–2488. https://doi.org/10.1021/ci900203n

Galar M, Fernández A, Barrenechea E et al (2012) A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans Syst Man Cybern Part C 42:463–484. https://doi.org/10.1109/TSMCC.2011.2161285

Krawczyk B, Krawczyk BB (2016) Learning from imbalanced data: open challenges and future directions. Prog Artif Intell 5:221–232. https://doi.org/10.1007/s13748-016-0094-0

Hido S, Kashima H, Takahashi Y (2009) Roughly balanced bagging for imbalanced data. Stat Anal Data Min 2:412–426. https://doi.org/10.1002/sam.10061

Chawla NV (2005) Data mining for imbalanced datasets: an overview. In: Maimon O, Rokach L (eds) Data Mining and Knowledge Discovery Handbook. Springer-Verlag, New York, pp 853–867

He H, Ma Y (2013) Imbalanced learning: foundations, algorithms, and applications. John Wiley & Sons Inc, New York

Branco P, Torgo L, Ribeiro R (2015) A survey of predictive modelling under imbalanced distributions. https://arxiv.org/abs/1505.01658. Accessed 8 Aug 2017

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) SMOTE: Synthetic Minority Over-sampling Technique. J Artif Intell Res 16:321–357. https://doi.org/10.1613/jair.953

Banerjee P, Dehnbostel FO, Preissner R (2018) Prediction is a balancing act: importance of sampling methods to balance sensitivity and specificity of predictive models based on imbalanced chemical data sets. Front Chem 6:362. https://doi.org/10.3389/fchem.2018.00362

Stefanowski J (2016) Dealing with Data Difficulty Factors While Learning from Imbalanced Data. Challenges in computational statistics and data mining. Springer, Cham, Switzerland, pp 333–363

Bosc N, Atkinson F, Felix E et al (2019) Large scale comparison of QSAR and conformal prediction methods and their applications in drug discovery. J Cheminform 11:4. https://doi.org/10.1186/s13321-018-0325-4

Norinder U, Boyer S (2016) Conformal Prediction Classification of a Large Data Set of EnRvironmental Chemicals from ToxCast and Tox21 Estrogen Receptor Assays. Chem Res Toxicol 29:1003–1010. https://doi.org/10.1021/acs.chemrestox.6b00037

Sun J, Carlsson L, Ahlberg E et al (2017) Applying mondrian cross-conformal prediction to estimate prediction confidence on large imbalanced bioactivity data sets. J ChemInf Model 57:1591–1598. https://doi.org/10.1021/acs.jcim.7b00159

Cortés-Ciriano I, Bender A (2019) Concepts and applications of conformal prediction in computational drug discovery

Norinder U, Boyer S (2017) Binary classification of imbalanced datasets using conformal prediction. J Mol Graph Model 72:256–265. https://doi.org/10.1016/j.jmgm.2017.01.008

He H, Garcia EA (2009) Learning from Imbalanced Data. IEEE Trans Knowl Data Eng 21:1263–1284. https://doi.org/10.1109/TKDE.2008.239

Davis J, Goadrich M (2006) The relationship between precision-recall and ROC curves. In: Proceedings of the 23rd International Conference on Machine Learning. ACM, Pittsburgh, pp 233–240

Provost F, Fawcett T, Kohavi R (1998) The case against accuracy estimation for comparing induction algorithms. In: Proceedings of the Fifteenth International Conference on Machine Learning. Morgan Kaufmann Publishers Inc, San Francisco, pp 445–453

Capuzzi SJ, Politi R, Isayev O et al (2016) QSAR modeling of Tox21 challenge stress response and nuclear receptor signaling toxicity assays. Front Environ Sci 4:3. https://doi.org/10.3389/fenvs.2016.00003

Ribay K, Kim MT, Wang W et al (2016) Predictive modeling of estrogen receptor binding agents using advanced cheminformatics tools and massive public data. Front Environ Sci 4:12. https://doi.org/10.3389/fenvs.2016.00012

Mayr A, Klambauer G, Unterthiner T et al (2016) DeepTox: toxicity prediction using deep learning. Front Environ Sci 3:1–15. https://doi.org/10.3389/fenvs.2015.00080

Drwal MN, Siramshetty VB, Banerjee P et al (2015) Molecular similarity-based predictions of the Tox21 screening outcome. Front Environ Sci 3:54. https://doi.org/10.3389/fenvs.2015.00054

Saito T, Rehmsmeier M, Hood L et al (2015) The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 10:e0118432. https://doi.org/10.1371/journal.pone.0118432

Chen J, Tang YY, Fang B, Guo C (2012) In silico prediction of toxic action mechanisms of phenols for imbalanced data with Random Forest learner. J Mol Graph Model 35:21–27. https://doi.org/10.1016/J.JMGM.2012.01.002

Pham-The H, Casañola-Martin G, Garrigues T et al (2016) Exploring different strategies for imbalanced ADME data problem: case study on Caco-2 permeability modeling. Mol Divers 20:93–109. https://doi.org/10.1007/s11030-015-9649-4

Lei T, Sun H, Kang Y et al (2017) ADMET evaluation in drug discovery. 18. Reliable prediction of chemical-induced urinary tract toxicity by boosting machine learning approaches. Mol Pharm 14:3935–3953. https://doi.org/10.1021/acs.molpharmaceut.7b00631

Czarnecki WM, Tabor J (2017) Extreme entropy machines: robust information theoretic classification. Pattern Anal Appl 20:383–400. https://doi.org/10.1007/s10044-015-0497-8

Batista GEAPA, Prati RC, Monard MC (2004) A study of the behavior of several methods for balancing machine learning training data. ACM SIGKDDExplorNewsl 6:20–29. https://doi.org/10.1145/1007730.1007735

NCATS Toxicology in the 21st Century (Tox21). https://ncats.nih.gov/tox21. Accessed 11 May 2017

Huang R, Xia M, Nguyen D-T et al (2016) Editorial: Tox21 challenge to build predictive models of nuclear receptor and stress response pathways as mediated by exposure to environmental toxicants and drugs. Front Environ Sci 5:3. https://doi.org/10.3389/fenvs.2017.00003

Huang R, Xia M, Nguyen D-T et al (2017) Tox21Challenge to build predictive models of nuclear receptor and stress response pathways as mediated by exposure to environmental chemicals and drugs. Frontiers Media, Lausanne

MolVS: Molecule Validation and Standardization—MolVS 0.0.9 documentation. https://molvs.readthedocs.io/en/latest/. Accessed 6 Feb 2018

Greg L RDKit: Open-source cheminformatics Software

Tropsha A, Gramatica P, Gombar V (2003) The importance of being Earnest: validation is the absolute essential for successful application and interpretation of QSPR models. QSAR Comb Sci 22:69–77. https://doi.org/10.1002/qsar.200390007

Stefaniak F (2015) Prediction of compounds activity in nuclear receptor signaling and stress pathway assays using machine learning algorithms and low-dimensional molecular descriptors. Front Environ Sci 3:77. https://doi.org/10.3389/fenvs.2015.00077

Rogers D, Hahn M (2010) Extended-connectivity fingerprints. J ChemInf Model 50:742–754. https://doi.org/10.1021/ci100050t

Seiffert C, Khoshgoftaar TM, Van Hulse J, Napolitano A (2010) RUSBoost: a hybrid approach to alleviating class imbalance. IEEE Trans Syst Man, Cybern Part ASyst Humans 40:185–197. https://doi.org/10.1109/TSMCA.2009.2029559

García V, Sánchez JS, Mollineda RA (2012) On the effectiveness of preprocessing methods when dealing with different levels of class imbalance. Knowl Based Syst. https://doi.org/10.1016/j.knosys.2011.06.013

Galar M, Fernández A, Barrenechea E, Herrera F (2013) EUSBoost: enhancing ensembles for highly imbalanced data-sets by evolutionary undersampling. Pattern Recognit 46:3460–3471. https://doi.org/10.1016/J.PATCOG.2013.05.006

Wilson DL (1972) Asymptotic Properties of Nearest Neighbor Rules Using Edited Data. IEEE Trans Syst Man Cybern 3:408–421. doi.:https://doi.org/10.1109/TSMC.1972.4309137

Breiman L (2001) Random forests. Mach Learn 45:5–32. https://doi.org/10.1023/A:1010933404324

Han J, Kamber M, Pei J (2011) Data mining : concepts and techniques, 3rd edn. Elsevier Science, Amsterdam

Altman N, Krzywinski M (2017) Ensemble methods: bagging and random forests. Nat Methods 14:933–934. https://doi.org/10.1038/nmeth.4438

Khoshgoftaar TM, Van Hulse J, Napolitano A (2011) Comparing boosting and bagging techniques with noisy and imbalanced data. IEEE Trans Syst Man Cybern Part A Syst Humans 41:552–568. https://doi.org/10.1109/TSMCA.2010.2084081

Laszczyski J, Stefanowski J, Idkowiak L (2013) Extending bagging for imbalanced data. In: Burduk R., Jackowski K., Kurzynski M., Wozniak M., Zolnierek A. (eds) Proceedings of the 8th International Conference on Computer Recognition Systems CORES 2013. Advances in Intelligent Systems and Computing. Springer, Heidelberg, pp 269–278

Chawla NV, Lazarevic A, Hall LO, Bowyer KW (2003) SMOTEBoost: improving prediction of the minority class in boosting. Springer, Berlin, Heidelberg, pp 107–119

Pedregosa F, Varoquaux G, Gramfort A et al (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Lemaˆıtre G, Nogueira F, Aridas CK (2017) Imbalanced-learn: a python toolbox to tackle the curse of imbalanced datasets in machine learning. J Mach Learn Res 18:1–5

Boughorbel S, Jarray F, El-Anbari M (2017) Optimal classifier for imbalanced data using Matthews Correlation Coefficient metric. PLoS ONE 12:e0177678. https://doi.org/10.1371/journal.pone.0177678

Bergmann B, Hommel G (1988) Improvements of general multiple test procedures for redundant systems of hypotheses. Springer, Berlin, Heidelberg, pp 100–115

García S, Fernández A, Luengo J, Herrera F (2010) Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power. InfSci (Ny) 180:2044–2064. https://doi.org/10.1016/J.INS.2009.12.010

Calvo B, Santafé G (2016) scmamp: Statistical comparison of multiple algorithms in multiple problems. R J 8:248–256. https://doi.org/10.32614/rj-2016-017

Hodges JL, Lehmann EL (2012) Rank methods for combination of independent experiments in analysis of variance. In: Rojo J (ed) Selected works of E L. Lehmann. Springer US, Boston, MA, pp 403–418

Barta G (2016) Identifying biological pathway interrupting toxins using multi-tree ensembles. Front Environ Sci. https://doi.org/10.3389/fenvs.2016.00052

Uesawa Y (2016) Rigorous selection of random forest models for identifying compounds that activate toxicity-related pathways. Front Environ Sci 4:9. https://doi.org/10.3389/fenvs.2016.00009

Kuncheva LI, Whitaker CJ (2003) Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach Learn 51:181–207

Ferri C, Hernández-Orallo J, Modroiu R (2009) An experimental comparison of performance measures for classification. Pattern Recognit Lett 30:27–38. https://doi.org/10.1016/J.PATREC.2008.08.010

Jeni LA, Cohn JF, De La Torre F (2013) Facing imbalanced data—recommendations for the use of performance metrics. In: 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction. IEEE, New York, pp 245–251

Tong W, Hong H, Fang H et al (2003) Decision forest: combining the predictions of multiple independent decision tree models. J ChemInfComputSci 43:525–531. https://doi.org/10.1021/ci020058s

Sakkiah S, Selvaraj C, Gong P et al (2017) Development of estrogen receptor beta binding prediction model using large sets of chemicals. Oncotarget 8:92989–93000. https://doi.org/10.18632/oncotarget.21723

Cruz-Monteagudo M, Medina-Franco JL, Pé Rez-Castillo Y et al (2014) Activity cliffs in drug discovery: Dr Jekyll or Mr Hyde? Drug Discov Today 19:1069–1080. https://doi.org/10.1016/j.drudis.2014.02.003

Stumpfe D, Hu H, Bajorath J (2019) Evolving concept of activity cliffs. ACS Omega 4:14360

Yang Z, Gao D (2013) Classification for imbalanced and overlapping classes using outlier detection and sampling techniques. NSP Natural Sciences Publishing, New York

Abdelaziz A, Spahn-Langguth H, Schramm K-W, Tetko IV (2016) Consensus modeling for HTS assays using in silico descriptors calculates the best balanced accuracy in Tox21 challenge. Front Environ Sci 4:2. https://doi.org/10.3389/fenvs.2016.00002

Zang Q, Rotroff DM, Judson RS (2013) Binary classification of a large collection of environmental chemicals from estrogen receptor assays by quantitative structure-activity relationship and machine learning methods. J Chem Inf Model 53:3244–3261. https://doi.org/10.1021/ci400527b

Idakwo G, Thangapandian S, Luttrell J et al (2019) Deep learning-based structure-activity relationship modeling for multi-category toxicity classification: a case study of 10KTox21 chemicals with high-throughput cell-based androgen receptor bioassay data. Front Physiol 10:1044. https://doi.org/10.3389/fphys.2019.01044

Acknowledgements

Permission was granted by the Chief of Engineers, U.S. Army Corps of Engineers to publish this paper. This research was supported in part by an appointment to the Research Participation Program at the U.S. Army Corps of Engineers-Engineer Research and Development Center-Environmental Laboratory (USACE-ERDC-EL), administered by the Oak Ridge Institute for Science and Education (ORISE) through an interagency agreement between the U.S. Department of Energy and USACE-ERDC-EL.

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of U.S. Army Corps of Engineers or U.S. Food and Drug Administration.

Funding

PG, ST and YL were supported by the U.S. Army Environmental Quality and Installations Research Program.

Author information

Authors and Affiliations

Contributions

PG and CZ conceived and supervised the study. GI, CZ and PG designed the study plan. GI was also responsible for implementing the algorithms. ST and YL undertook chemical descriptors generation and bioassay data curation. NW, ZZ, HH and BY provided useful insights for machine learning implementation. GI, PG and CZ drafted the manuscript. All authors revised the manuscript. All authors read and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential competing interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Text S1.

SMOTEENN algorithm. Figure S1. Illustration of SMOTE and ENN techniques. (a) The original imbalanced data; (b) Synthetic samples are generated for the minority class using SMOTE. (c) Using ENN, those mislabeled synthetic samples were removed from the minority class. (d) The rebalanced data after the application of SMOTEENN. Table S1. Evaluation metrics derived for four classification methods (RF, RUS, SMO and SMN) with twelve Tox21 qHTS assay datasets. Specificity and two other metrics (sensitivity and SSG, both appearing in Table 2) are shown.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Idakwo, G., Thangapandian, S., Luttrell, J. et al. Structure–activity relationship-based chemical classification of highly imbalanced Tox21 datasets. J Cheminform 12, 66 (2020). https://doi.org/10.1186/s13321-020-00468-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13321-020-00468-x