Abstract

There is a high rate of failure in Alzheimer’s disease (AD) drug development with 99% of trials showing no drug-placebo difference. This low rate of success delays new treatments for patients and discourages investment in AD drug development. Studies across drug development programs in multiple disorders have identified important strategies for decreasing the risk and increasing the likelihood of success in drug development programs. These experiences provide guidance for the optimization of AD drug development. The “rights” of AD drug development include the right target, right drug, right biomarker, right participant, and right trial. The right target identifies the appropriate biologic process for an AD therapeutic intervention. The right drug must have well-understood pharmacokinetic and pharmacodynamic features, ability to penetrate the blood-brain barrier, efficacy demonstrated in animals, maximum tolerated dose established in phase I, and acceptable toxicity. The right biomarkers include participant selection biomarkers, target engagement biomarkers, biomarkers supportive of disease modification, and biomarkers for side effect monitoring. The right participant hinges on the identification of the phase of AD (preclinical, prodromal, dementia). Severity of disease and drug mechanism both have a role in defining the right participant. The right trial is a well-conducted trial with appropriate clinical and biomarker outcomes collected over an appropriate period of time, powered to detect a clinically meaningful drug-placebo difference, and anticipating variability introduced by globalization. We lack understanding of some critical aspects of disease biology and drug action that may affect the success of development programs even when the “rights” are adhered to. 
Attention to disciplined drug development will increase the likelihood of success, decrease the risks associated with AD drug development, enhance the ability to attract investment, and make it more likely that new therapies will become available to those with or vulnerable to the emergence of AD.


Introduction

Alzheimer’s disease (AD) is rapidly increasing in frequency as the world’s population ages. In the USA, there are currently an estimated 5.3 million individuals with AD dementia, and this number is expected to increase to more than 13 million by 2050 [1, 2]. Approximately 15% of the US population over age 60 has prodromal AD and nearly 40% has preclinical AD [3]. Similar trends are seen globally, with the worldwide population of AD dementia patients anticipated to exceed 100 million by 2050 unless means of delaying, preventing, or treating AD are found [4]. The financial burden of AD in the USA will increase from its current 259 billion US dollars (USD) annually to more than USD 1 trillion by 2050 [5]. The cost of AD to the US economy currently exceeds that of cancer or cardiovascular disease [6].

Amplifying the demographic challenge of the rising numbers of AD victims is the low rate of success in developing AD therapies. Across all types of AD therapies, the failure rate is more than 99%, and for disease-modifying therapies (DMTs), the failure rate is 100% [7, 8]. These numbers demand a re-examination of the drug development process. Success in other fields such as cancer therapeutics can help guide better discovery and development practices for AD treatments. For example, 12 of 42 (29%) drugs approved by the US Food and Drug Administration (FDA) in 2017 were oncology therapies (www.fda.gov); no AD therapy was approved that year. There are currently 112 new molecular entities in clinical trials in AD, whereas there are 3558 in cancer trials [9, 10]. Success in cancer drug development attracts funding and leads to more clinical trials, accelerating the emergence of new therapies. This model can assist in improving AD drug development.

Patient care increasingly demands precision medicine with the right drug, in the right dose, administered to the right patient, at the right time [11,12,13]. Precision medicine requires precision drug development. Delivering an effective medication, at the correct dose, to a patient in a stage of illness that can be impacted by therapy requires that these precision treatment characteristics be determined in a disciplined drug development program [14]. Drug development sponsors have developed systematic approaches to drug testing including the “rights” of drug development [15, 16], the “pillars” of drug development [17], model-based drug development [18, 19], and a translational medicine guide [20]. These approaches are appropriate across therapeutic areas, but none has been applied specifically to AD drug development. Building on these foundations, we describe a set of “rights” for AD drug development that are aligned with precision drug development. We consider lessons derived from drug development across several fields as well as learnings from recent negative AD treatment trials [14, 17, 21, 22], and we note the areas in which each “right” principle is being pursued. These “rights” are not all new innovations, but recent reviews of the AD drug pipeline show that they are often not implemented [16, 23, 24]. We consider how the “rights” will strengthen the AD drug discovery and development process, increase the likelihood of success, de-risk investment in AD therapeutic research, and spur interest in meeting the treatment challenges posed by the coming tsunami of patients.

Figure 1 provides an overview of the “rights of AD drug development.”

The right target

AD biology is complex, and only one target—the cholinergic system—has been fully validated through multiple successful therapies. Four cholinesterase inhibitors have been found to improve the dual outcomes of cognition plus function or cognition plus global status in patients with AD dementia [25, 26]. The successful development of memantine supports the validity of the N-methyl-d-aspartate (NMDA) receptor as a viable target, although only one agent has been shown to exert a therapeutic effect when modulating this receptor [27, 28]. A combination agent (Namzaric) addressing these two targets has been approved, establishing a precedent for combination therapy of two approved agents in AD [29]. Cholinesterase inhibitors have shown benefit in mild, moderate, and severe AD dementia [26]; memantine is effective in moderate and severe AD dementia [30]. No agent has shown benefit in prodromal AD (pAD), mild cognitive impairment (MCI), or preclinical AD [31].

No other target has been validated by successful therapy; all agents currently in development are unvalidated at the level of human benefit. Several targets are partially supported by biological and behavioral effects in animal models, and some agents have shown beneficial effects in preliminary clinical trials [32]. The failure of a specific trial to validate a target does not disprove the target's worthiness for drug development, because validation also depends on whether the other “rights” were adhered to in the development program.

For an agent to be a DMT, the candidate drug treatment must meaningfully intervene in disease processes leading to nerve cell death [33] and be druggable (e.g., modifiable by a small molecule agent or immunotherapy [34, 35]). Viable targets must represent critical non-redundant pathways necessary for neuronal survival. Ideal targets have a proven function in disease pathophysiology, are genetically linked to the disease, have greater representation in disease than in normal function, can be assayed using high-throughput screening, are not uniformly distributed throughout the body, have an associated biomarker, and have a favorable side effect prediction profile [36]. Druggability relates to proteins, peptides, or nucleic acids with an activity that can be modified by a treatment [35].
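One way to make the ideal-target criteria above operational during target nomination is to record each as a yes/no item and list the gaps. The sketch below is hypothetical: the field names are ours, the example target is invented, and treating the features as an unweighted checklist is an assumption (in practice they are not equally important, and druggability is assessed separately).

```python
from dataclasses import dataclass, fields


@dataclass
class TargetProfile:
    """Yes/no record of the 'ideal target' features listed in the text."""
    proven_role_in_pathophysiology: bool
    genetically_linked: bool
    enriched_in_disease: bool          # greater representation in disease than in normal function
    hts_assayable: bool                # can be assayed with high-throughput screening
    not_uniformly_distributed: bool    # not uniformly distributed throughout the body
    has_associated_biomarker: bool
    favorable_side_effect_prediction: bool


def missing_features(target: TargetProfile) -> list[str]:
    """Names of the ideal-target features that the candidate target lacks."""
    return [f.name for f in fields(target) if not getattr(target, f.name)]


# Hypothetical candidate target, for illustration only.
candidate = TargetProfile(
    proven_role_in_pathophysiology=True,
    genetically_linked=True,
    enriched_in_disease=False,
    hts_assayable=True,
    not_uniformly_distributed=True,
    has_associated_biomarker=False,
    favorable_side_effect_prediction=True,
)
print(missing_features(candidate))
# prints ['enriched_in_disease', 'has_associated_biomarker']
```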

A current National Institutes of Health (NIH) ontology of candidate targets in AD includes amyloid-related mechanisms, tau pathways, apolipoprotein E ε4 (ApoE-4), lipid metabolism, neuroinflammation, autophagy/proteasome/unfolded protein response, hormones/growth factors, dysregulation of calcium homeostasis, heavy metals, mitochondrial cascade/mitochondrial uncoupling/antioxidants, disease risk genes and related pathways, epigenetics, and glucose metabolism [37, 38]. Other mechanisms may emerge; highly influential nodes in networks may be identified through systems pharmacology approaches; and opportunities or requirements for combination therapies may be discovered. Genetic editing techniques are increasingly used in experimental treatment paradigms, and RNA interference approaches show promise in non-AD neurodegenerative disorders [39]. With the recognition that late-life sporadic AD frequently has multiple contributing pathologies, identifying a single molecular therapeutic target whose manipulation is efficacious in all affected individuals may not be forthcoming [40,41,42,43].

Analysis of predictors of success in drug development programs shows that agents linked to genetically defined targets have a greater chance of being advanced from one phase to the next than drugs that address targets having no genetic links to the underlying disease [15, 21]. Transgenic (tg) animal models and knockout and knockin models of disease can add to the genetic evidence for a target. Genes can help prioritize drug candidates as well as support target validation [44]. Genes implicate potentially druggable pathways and networks involved in AD pathogenesis [45, 46]. Genetic linkages to amyloid precursor protein (APP), beta-site amyloid precursor protein cleavage enzyme (BACE), gamma-secretase, ApoE, tau metabolism, and immune function are elements within the pathophysiology of AD with identified genetic influences [47]. A coding mutation in the APP gene, for example, results in a 40% reduction in amyloid beta protein (Aβ) formation and a substantial reduction in the risk of AD [48]. This observation supports exploring the use of APP-modifying agents for the treatment and prevention of AD.
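Because a candidate must clear every phase, modest gains in each phase-to-phase transition compound. The sketch below illustrates this arithmetic with invented transition probabilities and an assumed 10% relative improvement per phase for genetically supported targets; neither figure is taken from the cited analyses, and treating phases as independent is a simplification.

```python
from math import prod


def cumulative_success(transition_probs: list[float]) -> float:
    """Probability of passing every phase, assuming independent phase transitions."""
    return prod(transition_probs)


# Hypothetical phase-transition probabilities (phase 1->2, 2->3, 3->filing, filing->approval).
baseline = [0.60, 0.30, 0.50, 0.90]
# Hypothetical: genetic support raises each transition probability by 10% (relative).
genetically_supported = [p * 1.10 for p in baseline]

print(f"{cumulative_success(baseline):.3f}")               # 0.081
print(f"{cumulative_success(genetically_supported):.3f}")  # 0.119
```

With these assumed numbers, a 10% relative gain at each of four steps raises the overall probability of success by a factor of 1.1^4, or about 46%.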

Defining the “right target” (or combination of targets) is currently the weakest aspect of AD drug discovery and development. The absence of a deep understanding of AD biology or focus on inappropriate targets will result in drug development failures regardless of how well the drug development program is conducted. This emphasizes the importance of investment by the National Institutes of Health (NIH), non-US basic biology initiatives, foundations, philanthropists, and others in the fundamental understanding of AD biology and identifying druggable targets and pathways [49].

The right drug

Clinical drug development is guided by defining a target product profile (TPP) describing the desirable and necessary features of the candidate therapy. The TPP establishes the goals of the development program, and each phase of a program is a step toward fulfilling the TPP [50, 51]. Drugs with TPP-driven development plans have a higher rate of regulatory success than those without [50].
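A TPP is often written as a table of attributes, each with a minimally acceptable and an ideal value. That two-level layout, the attribute names, and the thresholds below are assumptions for illustration, not a regulatory template; the sketch grades observed development data against each entry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TPPCriterion:
    """One TPP attribute with a minimally acceptable and an ideal value."""
    name: str
    minimum: float
    ideal: float
    higher_is_better: bool = True

    def grade(self, observed: float) -> str:
        # Flip signs so that "larger is better" holds for every comparison.
        sign = 1 if self.higher_is_better else -1
        if sign * observed >= sign * self.ideal:
            return "ideal"
        if sign * observed >= sign * self.minimum:
            return "acceptable"
        return "not met"


# Hypothetical TPP entries; attribute names and thresholds are illustrative only.
tpp = [
    TPPCriterion("CSF/plasma ratio", minimum=0.05, ideal=0.3),
    TPPCriterion("doses per day", minimum=2, ideal=1, higher_is_better=False),
]
observed = {"CSF/plasma ratio": 0.1, "doses per day": 1}
for criterion in tpp:
    print(criterion.name, "->", criterion.grade(observed[criterion.name]))
```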

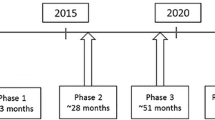

Characterizing a candidate therapy begins with screening assays of the identified target in preclinical discovery campaigns, identifies a lead candidate or limited set of related candidates, continues through establishing the pharmacokinetic (PK) and pharmacodynamic (PD) features in non-clinical animal models, gains refined PK and safety information with first-in-human (FIH) exposure in phase 1 clinical trials, and accrues greater PD and dose-response information in phase 2 trials. Finally, fully powered trials for clinical efficacy are undertaken in phase 3 with efficacy confirmation [52]. Safety data are collected throughout the process.

Preliminary characterization of the molecule as a treatment candidate showing the desired effect in the screening assay starts by determining that it has drug-like properties including molecular weight of ≤ 500 Da, bond features that support membrane penetration including the blood-brain barrier (BBB), no “alerts” that predict toxicity [53, 54], and chemical properties that suggest scalable manufacture and formulation [55, 56]. If the molecule has these encouraging properties, its absorption, distribution, metabolism, excretion, and toxicity (ADMET) are determined in non-clinical models [57].
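The property screen described above can be expressed as a simple filter over precomputed descriptors. Only the 500 Da molecular weight limit comes from the text; the cLogP and hydrogen-bond donor/acceptor limits (from Lipinski's rule of five) and the 90 Å² polar-surface-area cap often used as a CNS heuristic are assumptions added for illustration, and the example molecules are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MoleculeProperties:
    """Properties assumed to be computed upstream (e.g., by a cheminformatics toolkit)."""
    mol_weight: float       # daltons
    clogp: float            # calculated octanol/water partition coefficient
    h_bond_donors: int
    h_bond_acceptors: int
    tpsa: float             # topological polar surface area, square angstroms
    toxicity_alerts: int    # structural alerts flagged by a separate screen


def drug_likeness_violations(m: MoleculeProperties, tpsa_max: float = 90.0) -> list[str]:
    """Return the heuristic rules the molecule violates; an empty list means it passes.

    Only the 500 Da cut-off comes from the text; the other thresholds are
    assumed rule-of-five and CNS heuristics.
    """
    violations = []
    if m.mol_weight > 500:
        violations.append("molecular_weight")
    if m.clogp > 5:
        violations.append("clogp")
    if m.h_bond_donors > 5:
        violations.append("h_bond_donors")
    if m.h_bond_acceptors > 10:
        violations.append("h_bond_acceptors")
    if m.tpsa > tpsa_max:
        violations.append("tpsa")
    if m.toxicity_alerts > 0:
        violations.append("toxicity_alerts")
    return violations


# Two hypothetical molecules.
lead = MoleculeProperties(420.5, 3.2, 1, 5, 70.0, 0)
backup = MoleculeProperties(612.0, 5.8, 2, 8, 110.0, 1)
print(drug_likeness_violations(lead))    # []
print(drug_likeness_violations(backup))  # ['molecular_weight', 'clogp', 'tpsa', 'toxicity_alerts']
```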

BBB penetration must be shown in humans during phase 1 of the drug development program [53]. The human BBB has p-glycoprotein transporters and other mechanisms that may not be present in rodents, and central nervous system (CNS) penetration in animal models of AD is not a sufficient guide to human CNS entry [58]. Measurement of CNS levels in non-human primates more closely reflects the human physiology, but direct measures of cerebrospinal fluid (CSF) levels in phase 1 human studies are required in a disciplined drug development program. CSF levels allow the determination of plasma/CSF ratios and help establish whether peripheral levels predict CNS exposures and whether CSF levels are compatible with those showing therapeutic effects in animal models of AD [59, 60]. CSF levels are an acceptable proxy for brain levels but leave some aspects of brain entry, neuronal penetration, and target exposure unassessed [61]. Understanding the PK/PD principles at the site of exposure of the agent to the target is one of the three pillars of drug development proposed by Morgan et al. [17]. Challenges in achieving target exposure are one reason for drug development failures in otherwise well-conducted programs. Tarenflurbil, for example, was shown to have poor BBB penetration after the development program was completed [62].
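The plasma/CSF comparison described above reduces to simple ratios. This sketch uses invented phase 1 concentrations and an invented animal-model efficacious level; it compares total concentrations and assumes CSF scales linearly with plasma, whereas a real analysis would also consider protein binding and the time course of sampling.

```python
from statistics import fmean

# Hypothetical paired phase 1 samples at three dose levels (same units, e.g., ng/mL).
plasma = [200.0, 400.0, 800.0]
csf = [4.0, 8.0, 16.0]
# Hypothetical CSF concentration associated with efficacy in AD animal models.
efficacious_csf = 10.0

ratios = [c / p for c, p in zip(csf, plasma)]
mean_ratio = fmean(ratios)
# Assumes CSF scales linearly with plasma, which the phase 1 data are meant to test.
required_plasma = efficacious_csf / mean_ratio
margins = [c / efficacious_csf for c in csf]

print(f"mean CSF/plasma ratio: {mean_ratio:.3f}")
print(f"plasma level predicted to reach the efficacious CSF level: {required_plasma:.0f}")
for p, m in zip(plasma, margins):
    verdict = "reaches" if m >= 1 else "falls short of"
    print(f"plasma {p:.0f}: CSF {verdict} the animal-model level (x{m:.2f})")
```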

The “right drug” has shown efficacy in non-clinical models of AD. These models have not predicted success in human AD, but advancing an agent to human testing without efficacy in animal models would add risk to the development program. A common strategy involves using genetic technologies to establish tg species bearing one or more human mutations leading to the overproduction of Aβ [63, 64]. These animals develop amyloid plaques similar to those of human AD but lack neurofibrillary tangles or cell death and are only partial simulacra of human AD [65]. They more closely resemble autosomal dominant AD with mutation-related overproduction of Aβ than typical late-onset AD where clearance of Aβ is the principal underlying problem [66, 67]. Activity in several AD models should be demonstrated to increase confidence in the robustness of the mechanism of the candidate agent [68]. There are recent efforts to more closely model human systems biology using human induced pluripotent stem cell (IPSC) disease models for drug screening [69,70,71].

Demonstration that the agent has neuroprotective effects is critical to the definition of DMT [33, 52], and interference in the processes leading to cell death should be established prior to human exposure. Many programs have shown effects on Aβ without documenting an impact on neuroprotection; more thorough exploration and demonstration of neuroprotection in non-clinical models may result in agents that exert greater disease modification in human trials.

Phase 1 establishes the PK features and ADMET characteristics of the candidate compound in humans. Several drug doses are assessed, first in single ascending dose (SAD) studies and then in multiple ascending dose (MAD) studies. A maximum tolerated dose (MTD) should be established in phase 1; without this, failure to show efficacy in later stages of development will invariably raise the question of whether the candidate agent was administered at too low a dose. In some cases, receptor occupancy studies with positron emission tomography (PET), saturation of active transport mechanisms, physical limits on the amount of drug that can be administered, or dose-response curves that remain flat above specific doses remove the need for, or the possibility of, demonstrating an MTD. In all other circumstances, an MTD should be established during phase 1 [72]. MTDs have been difficult to establish for monoclonal antibodies (mAbs), and decisions are often based on feasibility rather than established PK/PD relationships [5]. The decision to increase mAb doses several-fold in recent trials after phase 2 or 3 trials showed no drug-placebo difference (e.g., solanezumab, crenezumab, gantenerumab, aducanumab) demonstrates the difficulty of establishing dose and PK/PD relationships for mAbs; limited understanding of mAb PK/PD may have contributed to the failure of development programs for these agents. Formulation issues should be resolved prior to evaluating the MTD to ensure that formulation challenges do not prevent the assessment of a full range of doses.
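The text does not prescribe an escalation rule. As one concrete, swapped-in illustration of how a tolerability limit is located, the sketch below implements a simplified version of the classic 3+3 rule, which comes from oncology; SAD/MAD studies in AD often use other cohort designs and stopping criteria. The dose levels and dose-limiting toxicity (DLT) outcomes are hypothetical.

```python
def three_plus_three(doses, dlt_outcomes):
    """Simplified 3+3 dose escalation.

    doses: ascending dose levels.
    dlt_outcomes: maps each dose to 0/1 dose-limiting-toxicity outcomes in
        enrollment order (3 participants, or 6 if the cohort was expanded).
    Returns (highest tolerated dose, whether a toxicity limit was reached).
    """
    highest_tolerated = None
    for dose in doses:
        outcomes = dlt_outcomes[dose]
        first_cohort = sum(outcomes[:3])
        if first_cohort == 0:
            highest_tolerated = dose  # 0/3 with DLT: escalate
            continue
        if first_cohort == 1:
            if len(outcomes) < 6:
                raise ValueError(f"dose {dose}: 1/3 with DLT requires an expanded cohort of 6")
            if sum(outcomes[:6]) == 1:
                highest_tolerated = dose  # 1/6 with DLT: escalate
                continue
        # 2 or more participants with DLT among 3 or 6: this dose exceeds the MTD.
        return highest_tolerated, True
    # Every tested dose was tolerated: no toxicity limit, so no MTD was identified.
    return highest_tolerated, False


doses = [10, 30, 100, 300]
cohorts = {10: [0, 0, 0], 30: [0, 1, 0, 0, 0, 0], 100: [1, 0, 1]}
print(three_plus_three(doses, cohorts))    # (30, True)
all_clear = {d: [0, 0, 0] for d in doses}
print(three_plus_three(doses, all_clear))  # (300, False)
```

The second case reflects the concern raised above: when every tested dose is tolerated, no MTD has been located, and a later efficacy failure leaves open whether the dose was too low.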

Phase 2 studies establish dose and dose-response relationships. Showing a dose-response association increases confidence in the biological effects of an agent and de-risks further development. The response may be a clinical outcome or a target engagement biomarker linked to the mechanism of action (MOA) of the agent [73,74,75]. An acceptable dose-response approach includes a low dose with little or no effect, a middle dose with an acceptable biological or clinical outcome, and a high dose that is not well tolerated or raises safety concerns. After the dose-response range has been explored in phase 2, one or two doses are advanced to phase 3; these will include the final dose(s) stated in the package insert for prescribers and patients. The use of a Bayesian dose-finding approach to decide which of 5 BAN2401 doses to advance to phase 3 is an example of dose-finding in phase 2 of a development program [76].
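A dose-response relationship of the kind described above is often summarized with an Emax model, E = E0 + Emax * D / (ED50 + D). Choosing that model, and fitting it by a grid search over ED50 with ordinary least squares for E0 and Emax, are assumptions for this sketch; it is not the Bayesian approach used for BAN2401, and the data are noise-free and hypothetical.

```python
def emax_model(dose, e0, emax, ed50):
    """Hyperbolic Emax dose-response model."""
    return e0 + emax * dose / (ed50 + dose)


def fit_emax(doses, responses, ed50_grid):
    """Profile least squares: for each candidate ED50, E0 and Emax enter linearly
    and are solved by ordinary least squares; the ED50 with the lowest SSE wins."""
    best = None
    n = len(doses)
    mean_y = sum(responses) / n
    for ed50 in ed50_grid:
        x = [d / (ed50 + d) for d in doses]
        mean_x = sum(x) / n
        sxx = sum((xi - mean_x) ** 2 for xi in x)
        sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, responses))
        emax = sxy / sxx
        e0 = mean_y - emax * mean_x
        sse = sum((yi - (e0 + emax * xi)) ** 2 for xi, yi in zip(x, responses))
        if best is None or sse < best[3]:
            best = (e0, emax, ed50, sse)
    return best


# Hypothetical noise-free phase 2 data generated from E0=1, Emax=10, ED50=20.
doses = [0, 5, 10, 20, 40, 80]
responses = [emax_model(d, 1.0, 10.0, 20.0) for d in doses]
e0, emax, ed50, sse = fit_emax(doses, responses, ed50_grid=[5, 10, 20, 40, 80])
print(f"E0={e0:.2f}, Emax={emax:.2f}, ED50={ed50}")  # E0=1.00, Emax=10.00, ED50=20
```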

The “right drug” has acceptable toxicity. Safety assessment begins with a review of structural alerts of the molecule predictive of toxicity such as hepatic injury assessed as part of lead candidate nomination and proceeds through evaluations of target organ toxicity in several animal species—typically a rodent species and a dog species [77, 78]. Given an acceptable non-clinical safety profile, the agent is advanced to phase 1 for a FIH assessment of safety in the clinical setting with the determination of the MTD. Safety and tolerability data continue to accrue in phase 2 and phase 3 trials. The number of human exposures remains relatively low until phase 3, and important toxicity observations may be delayed until the late phases of drug development. Semagecestat, avagecestat, and verubecestat were all in phase 3 before cognitive toxicity was identified as an adverse event [79,80,81]. Some toxicities may not be identified until after approval and widespread human use. Vigilance for toxic effects of agents does not stop with drug approval and continues through the post-approval and marketing period [82]. AD is a fatal illness and—like life-extending cancer therapies—side effects of treatment may be an acceptable trade-off for slowing cognitive decline and maintaining quality of life [83].
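The delayed detection of toxicity follows from simple probability: if an adverse event occurs independently in a fraction p of treated participants, the chance of seeing at least one case among n exposures is 1 - (1 - p)^n. The sketch below uses a hypothetical incidence of 1 in 1000; it ignores events whose risk grows with treatment duration, which exposure counts alone do not capture.

```python
from math import ceil, log


def prob_at_least_one(incidence: float, exposures: int) -> float:
    """Chance of observing one or more events, assuming independent participants
    and a constant per-participant incidence."""
    return 1.0 - (1.0 - incidence) ** exposures


def exposures_needed(incidence: float, confidence: float = 0.95) -> int:
    """Smallest number of exposures giving at least `confidence` probability of one event."""
    return ceil(log(1.0 - confidence) / log(1.0 - incidence))


# Hypothetical adverse event occurring in 1 of every 1000 treated participants.
incidence = 1 / 1000
for n in (100, 300, 1000, 3000):
    print(n, round(prob_at_least_one(incidence, n), 3))
print(exposures_needed(incidence))  # 2995, close to the "rule of three" estimate 3/p
```

Roughly 3000 exposures are needed for a 95% chance of observing even one such event, which is why uncommon toxicities often surface only in phase 3 or after approval.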

The “right drug” at the end of phase 3 has demonstrated the specified features of the TPP, including efficacy and safety, and meets all the requirements for approval by the FDA, the European Medicines Agency (EMA), and other regulatory authorities as an AD therapy [50]. From an industry perspective, the “right” drug has substantial remaining patent life, is competitive with other agents with similar mechanisms, and will be acceptable to payers with reimbursement rates that make the development of the agent commercially attractive [15, 21]. The “right” features of the candidate agent can be scored with a translatability score that allows comparison and prioritization of agents for their readiness to proceed along the translational pathway to human testing and through the phases of clinical trials [84, 85]. Greater use of translational metrics may enhance the likelihood of drug development success [86].

The right biomarker

Biomarkers play many roles in drug development and are critical to the success of development programs (Table 1) [48]. Including biomarkers in development plans has been associated with greater success rates across therapeutic areas [15, 21, 87]. The use of several types of biomarkers (predictive, prognostic) in development programs is associated with higher success rates in trials compared to trials with no or few biomarkers [88]. The “right” biomarker varies by the type of information needed to inform a development program and the specific phase of drug development. Despite their importance, no biomarker has been qualified by the FDA for use across development programs [89].

The amyloid (A), tau (T), and neurodegeneration (N) framework provides an approach to diagnosis and monitoring of AD and helps guide the choice of biomarkers for drug development [90, 91]. “A” biomarkers (amyloid positron emission tomography [PET], CSF Aβ) support the diagnosis of AD; “A” and “T” (tau PET; CSF phospho-tau) biomarkers are pharmacodynamic biomarkers that can be used to demonstrate target engagement with Aβ or tau species; and “N” (magnetic resonance imaging [MRI], fluorodeoxyglucose PET, CSF total tau) biomarkers are pharmacodynamic markers of neurodegeneration that can provide evidence of neuroprotection and disease modification [33]. Additional markers for “N” are evolving, including neurofilament light (NfL) chain, which has shown promise in multiple sclerosis (MS) trials and preliminary AD trials [92]. Markers of synaptic degeneration such as neurogranin may also contribute to the understanding of therapeutic impact on “N” in AD. Emerging biomarkers are gaining credibility and will add to or amplify the ATN framework applicable to drug development [93].
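The ATN scheme assigns each person a biomarker profile from dichotomized A, T, and N results. The mapping below is intended to follow the profile categories of the 2018 NIA-AA research framework and should be checked against it; the cut points that turn PET or CSF values into positive/negative calls are assumed to be applied upstream.

```python
def atn_category(a_pos: bool, t_pos: bool, n_pos: bool) -> str:
    """Map dichotomized A/T/N results to biomarker profile categories."""
    if not a_pos:
        # Amyloid-negative with tau or neurodegeneration abnormalities
        # (groups overlapping what is called SNAP in this review).
        if t_pos or n_pos:
            return "non-Alzheimer's pathologic change"
        return "normal AD biomarkers"
    if t_pos:
        return "Alzheimer's disease"
    if n_pos:
        return "Alzheimer's and concomitant suspected non-Alzheimer's pathologic change"
    return "Alzheimer's pathologic change"


for profile in [(True, False, False), (True, True, True), (False, False, True)]:
    label = "".join(f"{k}{'+' if v else '-'}" for k, v in zip("ATN", profile))
    print(label, "->", atn_category(*profile))
```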

In AD trials, biomarkers are needed to support the diagnosis. In prevention trials involving cognitively normal individuals, genetic trait biomarkers are used to establish the risk state of the individual, or state biomarkers are employed to demonstrate the presence of AD pathology. In trials of treatments for autosomal dominant AD, demonstration of the presenilin 1, presenilin 2, or APP mutation is required in the trial participants [94, 95]. Similarly, in trials involving ApoE-4 homozygotes or heterozygotes or AD in Down syndrome, appropriate testing of chromosome 19 polymorphisms or chromosome 21 triplication is required [96]. A combination of ApoE-4 and TOMM-40 has been used in an attempt to estimate the risk and age of onset of AD [97]. State biomarkers useful in preclinical diagnosis include amyloid PET and the CSF Aβ/tau signature of AD [98, 99]. Tau PET may be useful in identifying individuals appropriate for tau-targeted interventions or for measuring success in reducing the propagation of tau pathology [100].

A substantial number of individuals with a clinical diagnosis of AD have been shown to lack amyloid plaque deposition when studied with amyloid imaging. Forty percent of patients diagnosed clinically with prodromal AD and 25% of those diagnosed with mild AD dementia lack evidence of amyloid pathology when studied with amyloid PET [52, 101]. Individuals with suspected non-Alzheimer pathophysiology (SNAP) have undetermined underlying pathology and may not respond to proposed AD therapies. Participants with SNAP may not decline in the expected manner in the placebo group, compromising the ability to demonstrate a drug-placebo difference [102]. Individuals with SNAP should be excluded from AD trials; the “right” biomarker for this purpose includes amyloid imaging, the CSF AD signature, or tau imaging in patients with the AD dementia phenotype. In the idalopirdine development program, no enrichment strategies were used, and power calculations showed that more than 1600 participants per arm would be needed to show a drug-placebo difference. With enrichment based on amyloid abnormalities, the decline was more rapid and the predicted sample size per arm to show a drug-placebo difference was 148 [103].
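The effect of enrichment on sample size can be seen in the standard normal-approximation formula for comparing two means, n per arm = 2(z_{1-α/2} + z_{1-β})²σ²/δ². In the hypothetical sketch below, a faster placebo decline in an amyloid-enriched population doubles the absolute drug-placebo difference δ for the same relative slowing, which cuts the required sample size about fourfold. The decline rates, SD, and 25% slowing are invented, holding the SD fixed is an assumption, and the sketch does not attempt to reproduce the idalopirdine figures.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants per arm for a two-sided, two-sample comparison of means
    (normal approximation): n = 2 * (z_{1-alpha/2} + z_{power})^2 * sd^2 / delta^2."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return ceil(2 * z_sum ** 2 * sd ** 2 / delta ** 2)


# Hypothetical: the drug slows decline by 25%; outcome SD is 6 points in both scenarios.
sd = 6.0
for label, placebo_decline in [("unenriched", 2.0), ("amyloid-enriched", 4.0)]:
    delta = 0.25 * placebo_decline
    print(label, n_per_arm(delta, sd))
# unenriched 2261
# amyloid-enriched 566
```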

Target engagement biomarkers are the “missing link” in many development programs. Having shown that the candidate agent affects the target pathology in preclinical models and is safe in phase 1, sponsors have sometimes advanced through minimal phase 2 studies or directly to phase 3 [22] without showing that the drug treatment has meaningfully engaged the target in humans. Well-conducted phase 2 studies are a critical element of principled drug development and will provide two key pieces of information: target engagement and doses to be assessed in phase 3 [73, 74]. Phase 2 provides the platform for deciding if the candidate agent is viable for further development [75]. Target engagement may be shown directly, for example, with PET receptor occupancy studies or indirectly through proof-of-pharmacology [104, 105]. Examples of proof-of-pharmacology in AD drug development include the demonstration of reduced Aβ production using stable isotope-labeled kinetics (SILK) [106], reduced CSF Aβ with BACE inhibitors [107], reduced glutaminyl cyclase enzyme activity with glutaminyl cyclase inhibitors [108], and increased Aβ fragments in the plasma and CSF with gamma-secretase inhibitors and modulators [109]. Candidate target engagement/proof-of-pharmacology biomarkers include peripheral indicators of inflammation and oxidation for use in trials of anti-inflammatory and antioxidant compounds. Sponsors of drug development should advance markers of target engagement in concert with the candidate therapy; these may be used after regulatory approval as companion or complementary biomarkers [110, 111]. Demonstration of target engagement does not guarantee efficacy in later stages of development, but target engagement shown by the “right” biomarker provides important de-risking of a candidate treatment by showing biological activity that may translate into clinical efficacy.
Semagecestat’s effect on Aβ production in the CSF and aducanumab’s plaque-lowering effect are examples where target engagement was demonstrated in phase 2 or phase 1B, and the agents still failed to show a beneficial drug-placebo difference in later-stage trials [32, 109]. Target engagement and proof-of-pharmacology are “pillars” of successful drug development [17].
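For PET receptor-occupancy studies, occupancy is commonly derived from the reduction in specific binding between a baseline scan and a scan on drug, and a concentration-occupancy relationship is then used to choose exposures. The sketch below assumes a one-site model with 100% maximal occupancy and estimates EC50 from a single hypothetical scan pair; real studies fit several scans across doses and time points.

```python
def occupancy_from_pet(bp_baseline: float, bp_on_drug: float) -> float:
    """Fractional receptor occupancy from PET binding potentials before and on drug."""
    return 1.0 - bp_on_drug / bp_baseline


def ec50_from_single_point(concentration: float, occupancy: float) -> float:
    """EC50 implied by one (concentration, occupancy) pair under the one-site model
    occupancy = C / (C + EC50), i.e., maximal occupancy assumed to be 100%."""
    return concentration * (1.0 - occupancy) / occupancy


def concentration_for(target_occupancy: float, ec50: float) -> float:
    """Concentration predicted to reach a target occupancy under the same model."""
    return ec50 * target_occupancy / (1.0 - target_occupancy)


# Hypothetical PET scan pair and plasma level at the time of the on-drug scan.
occ = occupancy_from_pet(bp_baseline=2.0, bp_on_drug=0.6)
ec50 = ec50_from_single_point(concentration=35.0, occupancy=occ)
target_c = concentration_for(0.8, ec50)
print(f"occupancy {occ:.0%}, EC50 {ec50:.1f}, C for 80% occupancy {target_c:.1f}")
```

Under these assumptions, a plasma concentration of about 60 (in the same units as the input) would be needed for 80% occupancy.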

Changes in the basic biology of AD (amyloid generation, tau aggregation, inflammation, oxidation, mitochondrial dysfunction, neurodegeneration, etc.) are linked to human cognition through neural circuits whose integrity is critical to normal memory and intellectual function [112]. Two techniques for assessing neural networks are electroencephalography (EEG) and functional magnetic resonance imaging (fMRI). In cognitively normal individuals with positive amyloid PET and low levels of tau as shown by tau PET, fMRI measures of the default mode network (DMN) reveal hyperactive circuit function. In those with elevated amyloid and elevated tau levels, the circuits become hypoactive compared to age-matched controls [113, 114]. Decline in circuit function predicts progressive cognitive impairment [115]. Disrupted DMN function is present in prodromal AD and in AD dementia [116, 117]. Assessment of DMN integrity may be an important biomarker with predictive value for the impact of the intervention on clinical outcomes [112]. EEG depends on intact network function and may have applications in AD drug development similar to, and possibly more robust than, those of fMRI [108, 118, 119]. Both EEG and fMRI require procedural and interpretative standardization to be implemented in multi-site trials. A recent alternative for assessing circuit integrity in AD is SV2A PET, which targets and visualizes the synaptic network and is currently under study as a possible measure of target engagement for drugs aiming to influence synaptic function [120].

Amyloid imaging is a target engagement biomarker establishing reduction of plaque amyloid [111]. Several monoclonal antibodies have shown a dose- and time-dependent plaque reduction. In a phase 1B trial, aducanumab achieved both significant plaque reduction and benefit on some clinical measures with evidence of a dose-response relationship [32]. The beneficial effect was not recapitulated in a phase 3 trial. Bapineuzumab and gantenerumab decreased plaque Aβ but had no corresponding impact on cognition or function at the doses studied [121, 122]. Removal of plaque amyloid may be necessary but not sufficient for a therapeutic benefit of anti-amyloid agents, or it may be a coincidental marker of engagement of a broad range of amyloid species including those required for a therapeutic response. Tau PET assesses target engagement by anti-tau therapeutics; reduced tau burden or reduced tau spread would indicate a therapeutic response [123]. Aβ and tau signals do not measure neuroprotection and are not necessarily evidence of disease modification (DM).

Biomarkers play a critical role in demonstrating DM in DMT development programs. Evidence of neuroprotection is essential to support DM, and structural magnetic resonance imaging (MRI) is the current biomarker of choice for this purpose. Hippocampal atrophy has been linked to progressive disease and to nerve cell loss [124,125,126]. In clinical trials, MRI has often not fulfilled expectations, and atrophy has sometimes been greater in the treatment groups than in the placebo controls [127, 128]. Recent studies have shown drug-placebo differences on MRI in the anticipated direction, suggesting that MRI may be an important DM marker depending on the underlying MOA of the agent. As noted, serum and CSF biomarkers of neurodegeneration such as NfL and synaptic markers show promise for assessing DMTs but have been incorporated into relatively few AD trials [129]. CSF measures of total tau may be closely related to neurodegeneration and provide useful evidence of the impact on cell death [130, 131].

Biomarkers could eventually have a role as surrogate outcomes for AD trials if they are shown to be predictive of clinical outcomes. Currently, no AD biomarker has achieved surrogate status, and biomarkers are used in concert with clinical outcomes as measures of treatment effects.

Biomarkers have a role in monitoring side effects in the course of clinical trials. Liver, hematologic, and cardiac effects are monitored with liver function tests, complete blood counts, and electrocardiography, respectively. Atabecestat, for example, is a BACE inhibitor whose development was interrupted by the emergence of liver toxicity [132]. Amyloid-related imaging abnormalities (ARIA) of the effusion (ARIA-E) or hemorrhagic (ARIA-H) type may occur with mAbs and are monitored in trials with serial MRI [133]. ARIA has been observed with bapineuzumab, gantenerumab, aducanumab, and BAN2401 [32, 134, 135].

The right participant

AD progresses through a spectrum of severity from cognitively normal amyloid-bearing preclinical individuals, to those with prodromal AD or prodromal/mild AD dementia and, finally, to those with more severe AD dementia [136, 137] (Fig. 2). Based on this model, trials can target primary prevention in cognitively normal individuals with risk factors for AD but no state biomarkers indicative of AD pathology, secondary prevention in preclinical AD participants who are cognitively normal but have positive state biomarkers (positive amyloid PET, low CSF Aβ), and treatment trials aimed at slowing disease progression in prodromal or prodromal/mild AD dementia or mild, moderate, and severe AD dementia (Fig. 2). Although AD represents a seamless progression from unaffected to severely compromised individuals, participants can be assigned to the progressive phases based on genetic markers, cognitive and functional assessments, amyloid imaging or CSF Aβ and tau measures, tau imaging, and MRI [52, 136, 137]. The ATN framework is designed to guide the identification of the “right” participant for clinical trials [90, 91]. Early intervention has proven to be associated with better outcomes in other disorders such as heart failure [138], suggesting that early intervention in the “brain failure” of AD may have superior outcomes compared to later-phase interventions. However, available cognitive-enhancing agents have been approved for mild, moderate, and severe AD and have failed in trials with predementia participants; some DMT mechanisms may require use earlier in the disease process before pathologic changes are extensive [139,140,141].
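The assignment of participants to trial types described above can be written as a small decision rule. This is a hypothetical sketch: the stage labels and the single "state biomarker positive" flag (amyloid PET or the CSF AD signature) are simplifications, and excluding symptomatic, biomarker-negative individuals follows the recommendation above to exclude individuals with SNAP.

```python
from enum import Enum


class Stage(Enum):
    COGNITIVELY_NORMAL = "cognitively normal"
    PRODROMAL = "prodromal AD"
    MILD_DEMENTIA = "mild AD dementia"
    MODERATE_DEMENTIA = "moderate AD dementia"
    SEVERE_DEMENTIA = "severe AD dementia"


def trial_type(stage: Stage, has_risk_factors: bool, state_biomarker_positive: bool) -> str:
    """Hypothetical decision rule mapping a participant to a trial category."""
    if stage is Stage.COGNITIVELY_NORMAL:
        if state_biomarker_positive:
            return "secondary prevention"
        if has_risk_factors:
            return "primary prevention"
        return "not eligible"
    # Symptomatic but without biomarker evidence of AD pathology (e.g., SNAP): exclude.
    if not state_biomarker_positive:
        return "exclude"
    return "treatment"


print(trial_type(Stage.COGNITIVELY_NORMAL, True, False))  # primary prevention
print(trial_type(Stage.COGNITIVELY_NORMAL, False, True))  # secondary prevention
print(trial_type(Stage.PRODROMAL, False, True))           # treatment
print(trial_type(Stage.MILD_DEMENTIA, True, False))       # exclude
```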

The right participant also depends on the MOA of the agent being assessed. Cognitive-enhancing agents will be examined in patients with cognitive abnormalities; agents reducing amyloid production may have the best chance of success in primary or secondary prevention; tau prevention trials may focus on preclinical participants; tau removal agents might be appropriate for prodromal AD or AD dementia; and combinations of agents may be assessed in participants with the corresponding biomarker changes. Experience with a greater array of agents across disease phases will help inform the match between the “right” participant and specific agent MOAs. Additional biomarkers, such as those indicating CNS inflammation, excessive oxidation, or concurrent pathologies such as TDP-43 or alpha-synuclein, may help match the treatment MOA to the pathological form of AD.

The right trial

The “right trial” is a well-conducted clinical experiment that answers the central question of whether the drug is superior to placebo at the specified dose, within the observation period, in the defined population. Poorly conducted or underpowered trials do not resolve the central question of drug efficacy and should not be conducted, since they expose participants to the drug and its potential toxicity without the ability to provide valid, informative scientific data. Trial sponsors have a responsibility to report the results of their trials so that the field can progress by learning from the outcome of each experiment. Participants have accepted the risks of unknown drug effects and placebo exposure, and honoring this commitment requires that the learnings from the trial be made publicly available [142].

A key element is a sample size based on a thoroughly vetted estimate of the anticipated effect size. Trial simulations can model the results of varying effect sizes and the corresponding required sample sizes [143].
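A minimal Monte Carlo version of such a trial simulation is sketched below. It assumes a two-arm trial with normally distributed change scores, a common SD in both arms, no dropout, and a large-sample z-test; the function name and the example numbers are hypothetical, and dedicated trial simulators model far more (disease progression curves, attrition, rater error).

```python
import random
import statistics
from statistics import NormalDist


def simulate_power(effect: float, sd: float, n_per_arm: int,
                   n_trials: int = 1000, alpha: float = 0.05,
                   seed: int = 0) -> float:
    """Estimate power as the fraction of simulated trials that reject H0.

    effect: assumed true drug-placebo difference in mean change from baseline.
    sd: assumed between-participant SD of the change score (same in both arms).
    Each simulated trial is analysed with a two-sided large-sample z-test.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(n_trials):
        placebo = [rng.gauss(0.0, sd) for _ in range(n_per_arm)]
        drug = [rng.gauss(effect, sd) for _ in range(n_per_arm)]
        diff = statistics.fmean(drug) - statistics.fmean(placebo)
        se = ((statistics.variance(drug) + statistics.variance(placebo)) / n_per_arm) ** 0.5
        if abs(diff) / se > z_crit:
            rejections += 1
    return rejections / n_trials


if __name__ == "__main__":
    # Hypothetical scenario: outcome SD of 1 unit and 150 participants per arm.
    for effect in (0.1, 0.2, 0.3, 0.4):
        print(f"effect={effect}: simulated power ~ {simulate_power(effect, 1.0, 150):.2f}")
```

Repeating the loop over a grid of sample sizes gives the effect-size versus sample-size trade-off the text refers to.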

Participation criteria critical to trial success include defining an appropriate population of participants with preclinical AD, prodromal AD, or AD dementia using biomarkers, as noted above [136, 137]. Other key participation criteria include the absence of non-AD neurologic diagnoses, of physical illness incompatible with trial requirements, and of medications that may interact with the test agent. Fewer exclusions from trials lead to more generalizable results. Inclusion of diverse participants representative of the populations to which the agent will be marketed further enhances the generalizability of trial results.

Clinical outcomes are chosen based on the specific population included in the trial. For example, the Preclinical Alzheimer Cognitive Composite (PACC) and the Alzheimer Preclinical Cognitive Composite (APCC) used in the Alzheimer’s Prevention Initiative serve as outcomes in studies of preclinical AD [137, 144, 145]. The Clinical Dementia Rating-Sum of Boxes (CDR-sb) is commonly used as an outcome in prodromal AD [146]. In trials of mild-to-moderate AD dementia, common dual outcomes pair the AD Assessment Scale-Cognitive subscale (ADAS-cog) [147] or the neuropsychological test battery (NTB) [148] with the CDR-sb or the Clinician’s Interview-Based Impression of Change Plus Caregiver Input (CIBIC+) [40, 146]. The AD Composite Score (ADCOMS) is an analytic approach combining items from the CDR-sb, ADAS-cog, and Mini-Mental State Examination (MMSE) that is sensitive to change and to drug effects in prodromal AD and mild AD dementia [149]. The severe impairment battery (SIB) is the outcome most commonly used in severe AD [150]. Developing tools sensitive enough to detect drug-placebo differences in the predementia phases of AD is challenging. Commonly used tools such as the ADAS-cog were developed for later stages of the disease. Newer instruments such as the PACC and APCC detect change over time in natural history studies, but their performance in trials is unknown.
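One common way to quantify an instrument's "sensitivity to change" is the mean-to-standard-deviation ratio (MSDR) of change scores in an untreated or placebo group: the larger its magnitude, the more clearly decline stands out from noise. The text does not specify this metric, so it is an assumption here; the sketch below computes it for hypothetical change scores and ranks candidate instruments. The function names and data are illustrative only.

```python
import statistics


def mean_to_sd_ratio(change_scores: list) -> float:
    """Mean change from baseline divided by the SD of change (MSDR)."""
    return statistics.fmean(change_scores) / statistics.stdev(change_scores)


def rank_by_sensitivity(instruments: dict) -> list:
    """Instrument names ordered from most to least sensitive (|MSDR|)."""
    return sorted(instruments,
                  key=lambda name: abs(mean_to_sd_ratio(instruments[name])),
                  reverse=True)


if __name__ == "__main__":
    # Hypothetical change scores (higher = more decline) from a placebo group.
    candidates = {
        "tool A": [1.0, 2.0, 3.0, 2.5, 1.5],   # same mean decline, less noise
        "tool B": [0.0, 4.0, 2.0, 3.5, 0.5],   # same mean decline, more noise
    }
    for name in rank_by_sensitivity(candidates):
        print(name, round(mean_to_sd_ratio(candidates[name]), 2))
```

Both hypothetical tools show the same mean decline; the less noisy one has the larger MSDR and would need fewer participants to detect a given slowing of decline.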

The Alzheimer’s Disease Cooperative Study (ADCS) Activities of Daily Living (ADL) scale is commonly used to assess daily function in patients with MCI and mild to severe AD dementia [151]. The Amsterdam Instrumental Activities of Daily Living (IADL) Questionnaire is increasingly employed for this purpose in MCI/prodromal AD and mild AD dementia [152, 153]. Table 2 summarizes the instruments currently used in trials of each major phase of AD.

Trial duration may range from 12 months to 8 years for DMTs, or 3–6 months for symptomatic agents, depending on the anticipated duration of exposure needed to demonstrate a drug-placebo difference. Preclinical trials may observe participants for up to 5 years to allow sufficient decline in the placebo group to demonstrate a drug-placebo difference. These choices of trial duration are arbitrary; a basic biological understanding linking changes in the pathology to the duration of drug exposure is lacking. With an adaptive design, trial duration can be adjusted based on emerging patterns of efficacy [76, 154]. Adaptive designs may be used to optimize sample size, trial duration, and dose selection, and they have been successful in chemotherapy trials and in trials of diabetes treatments [155]. Adaptive designs are currently in use in the European Prevention of Alzheimer’s Dementia (EPAD) initiative, the Dominantly Inherited Alzheimer Network-Treatment Unit (DIAN-TU), and a study of oxytocin in frontotemporal dementia [156]; broad exploration of the approach is warranted [157, 158].
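One building block of many adaptive designs is an interim futility check. The sketch below computes conditional power under the current trend using the standard B-value (Brownian motion) formulation for a one-sided z-test, then applies a hypothetical rule that stops the trial if conditional power falls below 10%. The threshold and function names are assumptions for illustration; efficacy boundaries and alpha spending, which real group-sequential designs require, are omitted.

```python
from statistics import NormalDist


def conditional_power(z_interim: float, info_fraction: float,
                      alpha: float = 0.025) -> float:
    """Conditional power under the current trend for a one-sided z-test.

    z_interim: interim z-statistic (positive favours the drug).
    info_fraction: fraction t of total statistical information observed.
    """
    if not 0.0 < info_fraction < 1.0:
        raise ValueError("info_fraction must lie strictly between 0 and 1")
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha)      # final-analysis boundary (no spending)
    b_value = z_interim * info_fraction ** 0.5    # B(t) = Z(t) * sqrt(t)
    drift = b_value / info_fraction               # drift estimated from the current trend
    remaining = 1.0 - info_fraction
    # Given B(t), B(1) - B(t) ~ Normal(drift * remaining, remaining).
    z = (critical - b_value - drift * remaining) / remaining ** 0.5
    return 1.0 - std_normal.cdf(z)


def interim_decision(z_interim: float, info_fraction: float,
                     futility_threshold: float = 0.10) -> str:
    """Hypothetical futility rule: stop if conditional power is below threshold."""
    cp = conditional_power(z_interim, info_fraction)
    return "stop for futility" if cp < futility_threshold else "continue"


if __name__ == "__main__":
    # Hypothetical interim look at half of the planned information.
    for z in (0.0, 1.0, 2.0):
        print(z, round(conditional_power(z, 0.5), 3), interim_decision(z, 0.5))
```

The same machinery, evaluated at several planned looks, is what allows sample size or duration to be adjusted as efficacy data accumulate.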

Globalization of clinical trials, with trial sites in many countries, is a common response to slow recruitment of trial participants. Adding trial sites can accelerate recruitment and allow drug efficacy to be demonstrated more promptly. Globalization, however, increases the number of languages and cultures represented among participants, as well as the heterogeneity of background experience among trial sites and investigators. These factors may increase measurement variability and make it more difficult to demonstrate a drug-placebo difference [159,160,161]. The “right trial” will limit these factors by minimizing the number of regions, languages, and trial sites involved. Within diverse countries such as the USA, the inclusion of minority participants is key to ensuring the generalizability of trial findings [162].
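The cost of added measurement variability can be made concrete with the normal-approximation power formula for two equal arms. The sketch assumes, for illustration only, that site- and rater-related noise is independent of true participant variability so the two variances add; the function names and all numbers are hypothetical.

```python
from statistics import NormalDist


def power_two_arm(effect: float, sd: float, n_per_arm: int,
                  alpha: float = 0.05) -> float:
    """Approximate power of a two-sided z-test comparing two equal arms."""
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    se = sd * (2 / n_per_arm) ** 0.5
    # Rejection in the wrong direction contributes negligibly and is ignored.
    return nd.cdf(abs(effect) / se - z_crit)


def total_sd(sd_participant: float, sd_site_rater: float) -> float:
    """Combine participant variability with extra site/rater measurement noise,
    assuming the two sources are independent so their variances add."""
    return (sd_participant ** 2 + sd_site_rater ** 2) ** 0.5


if __name__ == "__main__":
    # Hypothetical: same drug effect and sample size, increasingly noisy sites.
    effect, n = 1.0, 200
    for extra in (0.0, 1.0, 1.5, 2.0):
        sd = total_sd(3.0, extra)
        print(f"site/rater SD={extra}: power={power_two_arm(effect, sd, n):.2f}")
```

Holding the drug effect and sample size fixed, power falls as site and rater noise grows, which is the mechanism by which heterogeneous global trials can obscure a real drug-placebo difference.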

The right trial will include the right doses, selected in phase 2, and the right biomarkers, as noted above. Biomarkers are chosen to match the questions to be answered in each trial phase: target engagement biomarkers are critical in phase 2, and DM biomarkers are critical in phase 3 of DMT trials.

The right trial is also efficiently conducted, with rapid start-up, certified raters, a central institutional review board (IRB), and timely recruitment of appropriate participants. Programs such as the Trial-Ready Cohort for Prodromal and Preclinical AD (TRC-PAD), Global Alzheimer Platform (GAP), and the EPAD initiative aim to enhance the efficiency with which trials are conducted [157, 163]. Online registries and trial-ready cohorts may accelerate trial recruitment and treatment evaluation [164,165,166]. Registries have aided trial recruitment for non-AD disorders [167].

Enrolling the right number of the right participants is key to advancing AD therapeutics. Compared with other fields, patients and physicians are reluctant to engage in clinical trials for a disease that some consider part of normal aging. Advocacy groups throughout the world strive to overcome this attitude; success in engaging participants will become more pressing as more preclinical trials involving cognitively normal individuals are initiated. Sample size depends on the magnitude of the detectable effect, which in turn depends on the effect size of the agent and the sensitivity of the measurement tool (clinical instrument or biomarker); both must be optimized to keep trial sample sizes feasible.
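The dependence of sample size on effect size and measurement sensitivity can be made explicit with the textbook normal-approximation formula for comparing mean change between two equal arms. It is offered as an illustration under simplifying assumptions (no dropout, a common SD in both arms); δ denotes the drug-placebo difference and σ the SD of change on the chosen instrument, split here into true between-participant variability and measurement error. These symbols are assumptions introduced for the example.

```latex
n_{\text{per arm}} = \frac{2\,\left(z_{1-\alpha/2} + z_{1-\beta}\right)^{2}\,\sigma^{2}}{\delta^{2}},
\qquad
\sigma^{2} = \sigma_{\text{true}}^{2} + \sigma_{\text{error}}^{2}
```

With α = 0.05 (two-sided) and 80% power, (1.96 + 0.84)² ≈ 7.85, so n ≈ 15.7 σ²/δ² per arm. A drug producing δ/σ = 0.25 would need about 252 participants per arm after rounding up; halving δ quadruples n, whereas reducing σ²_error through a more sensitive instrument or better-trained raters lowers it.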

Hallmarks of poorly designed or conducted trials include failure of the placebo group to decline during the trial (assuming an adequate observation period), failure of an active treatment arm such as donepezil to separate from placebo, excessive measurement variability, and low levels of biological indicators of AD, such as a low proportion of APOE ε4 carriers or of participants with fibrillar amyloid on amyloid imaging [22]. Trials with these features would not be expected to detect drug-placebo differences or to inform the drug development agenda.
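These hallmarks lend themselves to a simple quality checklist applied to a trial's summary data. The sketch below is hypothetical throughout: the field names, the sign convention (positive change means worsening), and every threshold are illustrative assumptions rather than accepted standards, and "separation" is reduced to a z-statistic above the two-sided 5% critical value.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TrialSummary:
    placebo_change: float                     # mean change; positive = worsening (assumed)
    comparator_vs_placebo_z: Optional[float]  # z for active-comparator benefit; None if no arm
    observed_sd: float                        # SD of change observed in the trial
    planned_sd: float                         # SD assumed in the power calculation
    apoe4_fraction: float                     # proportion of APOE e4 carriers
    amyloid_positive_fraction: float          # proportion amyloid-positive on PET


def quality_flags(s: TrialSummary,
                  sd_inflation_limit: float = 1.25,  # hypothetical threshold
                  min_apoe4: float = 0.5,            # hypothetical threshold
                  min_amyloid: float = 0.9) -> List[str]:  # hypothetical threshold
    """Return the hallmarks of a poorly designed or conducted trial that apply."""
    flags = []
    if s.placebo_change <= 0:
        flags.append("placebo group did not decline")
    if s.comparator_vs_placebo_z is not None and s.comparator_vs_placebo_z < 1.96:
        flags.append("active comparator did not separate from placebo")
    if s.observed_sd > sd_inflation_limit * s.planned_sd:
        flags.append("measurement variability exceeds plan")
    if s.apoe4_fraction < min_apoe4:
        flags.append("low proportion of APOE e4 carriers")
    if s.amyloid_positive_fraction < min_amyloid:
        flags.append("low proportion of amyloid-positive participants")
    return flags


if __name__ == "__main__":
    # Hypothetical summary showing several warning signs at once.
    print(quality_flags(TrialSummary(-0.1, 1.0, 7.0, 5.0, 0.3, 0.7)))
```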

A well-designed phase 3 trial builds on observations made in phase 2. Drugs have often been advanced to phase 3 on the basis of apparent effects in phase 2 subgroup analyses that were not prespecified; such analyses are derived from small, non-randomized samples, and their findings are rarely if ever reproduced in phase 3 [22].
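Part of the reason such subgroup findings fail to replicate is multiplicity. The sketch below, assuming k independent subgroups in a trial where the drug truly has no effect, computes the chance that at least one subgroup reaches p < 0.05, and checks it by simulation (under the null, each p-value is uniform on 0 to 1). Real subgroups overlap, so the exact rate differs, but the qualitative point holds; the function names are illustrative.

```python
import random


def prob_any_false_positive(k_subgroups: int, alpha: float = 0.05) -> float:
    """Chance that at least one of k independent null subgroups has p < alpha."""
    return 1.0 - (1.0 - alpha) ** k_subgroups


def simulate_null_subgroups(k_subgroups: int, n_trials: int = 10_000,
                            alpha: float = 0.05, seed: int = 1) -> float:
    """Monte Carlo check: under H0 each subgroup p-value is Uniform(0, 1)."""
    rng = random.Random(seed)
    trials_with_hit = sum(
        any(rng.random() < alpha for _ in range(k_subgroups))
        for _ in range(n_trials)
    )
    return trials_with_hit / n_trials


if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        print(k, round(prob_any_false_positive(k), 3), simulate_null_subgroups(k))
```

With 10 subgroups, roughly 40% of trials of an ineffective drug would show at least one nominally significant subgroup.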

Summary and conclusions

AD drug development has had a high rate of failure [7]. In many cases, BBB penetration, dose, and target engagement have not been adequately established, and early-stage data have not been rigorously interrogated. Agents have been advanced to phase 3 with little or no evidence of efficacy in phase 2. Better designed and conducted phase 2 studies will inform further development, allow unpromising programs to be stopped earlier, preserve resources that can be reassigned to testing more drugs at earlier stages (preclinical and FIH), and promote better drugs with a greater chance of success to phase 3 [168]. Deep insight into the biology of AD is currently lacking, and predicting drug success will remain challenging; optimizing drug development and clinical trial conduct will reduce the inherent risk of AD treatment development. Table 3 summarizes the integration of the “rights” of AD drug development across the phases of the development cycle.

This “rights” approach to drug development will enable the precision medicine objective of the right drug, at the right dose, for the right patient, at the right time, tested in the right trial [11,12,13, 16]. Similar approaches have improved the success rate of drug development in other therapeutic areas [15, 21]. Adhering to the “rights of AD drug development” will reduce many of the risks of drug development and increase the likelihood of successful trials of critically needed new treatments for AD.

Availability of data and materials

Not applicable.

Abbreviations

- A: Amyloid
- Aβ: Amyloid beta protein
- AD: Alzheimer’s disease
- ADAS-cog: AD Assessment Scale-Cognitive subscale
- ADCOMS: AD Composite Score
- ADCS: Alzheimer’s Disease Cooperative Study
- ADL: Activities of daily living
- ADMET: Absorption, distribution, metabolism, excretion, and toxicity
- APOE: Apolipoprotein E
- APP: Amyloid precursor protein
- ARIA: Amyloid-related imaging abnormalities
- ARIA-E: Amyloid-related imaging abnormalities effusion
- ARIA-H: Amyloid-related imaging abnormalities hemorrhagic
- BACE: Beta-site amyloid precursor protein cleavage enzyme
- BBB: Blood-brain barrier
- CDR-sb: Clinical Dementia Rating-Sum of Boxes
- CIBIC+: Clinician’s Interview-Based Impression of Change Plus Caregiver Input
- CNS: Central nervous system
- CSF: Cerebrospinal fluid
- DIAN-TU: Dominantly Inherited Alzheimer Network-Treatment Unit
- DM: Disease modification
- DMN: Default mode network
- DMT: Disease-modifying therapy
- EEG: Electroencephalography
- EMA: European Medicines Agency
- EPAD: European Prevention of Alzheimer’s Dementia
- fMRI: Functional magnetic resonance imaging
- FDA: US Food and Drug Administration
- FIH: First-in-human
- GAP: Global Alzheimer Platform
- IADL: Instrumental Activities of Daily Living Questionnaire
- IPSC: Induced pluripotent stem cell
- IRB: Institutional review board
- MAbs: Monoclonal antibodies
- MAD: Multiple ascending dose
- MCI: Mild cognitive impairment
- MMSE: Mini-Mental State Examination
- MOA: Mechanism of action
- MTD: Maximum tolerated dose
- MRI: Magnetic resonance imaging
- MS: Multiple sclerosis
- N: Neurodegeneration
- NfL: Neurofilament light
- NIH: National Institutes of Health
- NMDA: N-methyl-D-aspartate
- NPI: Neuropsychiatric Inventory
- NTB: Neuropsychological test battery
- PACC: Preclinical Alzheimer Cognitive Composite
- pAD: Prodromal Alzheimer’s disease
- PD: Pharmacodynamic
- PET: Positron emission tomography
- PK: Pharmacokinetic
- SAD: Single ascending dose
- SIB: Severe impairment battery
- SILK: Stable isotope-labeled kinetics
- SNAP: Suspected non-Alzheimer disease pathophysiology
- T: Tau
- tg: Transgenic
- TOMM-40: Translocase of outer mitochondrial membrane 40
- TPP: Target product profile
- TRC-PAD: Trial-Ready Cohort for Prodromal and Preclinical AD
- USD: US dollars

References

Alzheimer’s Association. 2015 Alzheimer’s disease facts and figures. Alzheimers Dement. 2015;11(3):332–84.

Alzheimer’s Association. 2016 Alzheimer’s disease facts and figures. Alzheimers Dement. 2016;12(4):459–509.

Mar J, Soto-Gordoa M, Arrospide A, Moreno-Izco F, Martinez-Lage P. Fitting the epidemiology and neuropathology of the early stages of Alzheimer’s disease to prevent dementia. Alzheimers Res Ther. 2015;7(1):2.

Brookmeyer R, Johnson E, Ziegler-Graham K, Arrighi HM. Forecasting the global burden of Alzheimer’s disease. Alzheimers Dement. 2007;3(3):186–91.

Alzheimer’s Association. 2017 Alzheimer’s disease facts and figures. Alzheimers Dement. 2017;13(4):325–73.

Hurd MD, Martorell P, Langa KM. Monetary costs of dementia in the United States. N Engl J Med. 2013;369(5):489–90.

Cummings JL, Morstorf T, Zhong K. Alzheimer’s disease drug-development pipeline: few candidates, frequent failures. Alzheimers Res Ther. 2014;6(4):37–43.

Cummings J, Lee G, Mortsdorf T, Ritter A, Zhong K. Alzheimer’s disease drug development pipeline: 2017. Alzheimers Dement (N Y). 2017;3(3):367–84.

Cummings J, Lee G, Ritter A, Zhong K. Alzheimer’s disease drug development pipeline: 2018. Alzheimers Dement (N Y). 2018;4:195–214.

Moser J, Verdin P. Trial watch: burgeoning oncology pipeline raises questions about sustainability. Nat Rev Drug Discov. 2018;17(10):698–9.

Hampel H, O’Bryant SE, Castrillo JI, Ritchie C, Rojkova K, Broich K, et al. Precision medicine - the golden gate for detection, treatment and prevention of Alzheimer’s disease. J Prev Alzheimers Dis. 2016;3(4):243–59.

Hampel H, O’Bryant SE, Durrleman S, Younesi E, Rojkova K, Escott-Price V, et al. A precision medicine initiative for Alzheimer’s disease: the road ahead to biomarker-guided integrative disease modeling. Climacteric. 2017;20(2):107–18.

Peng X, Xing P, Li X, Qian Y, Song F, Bai Z, et al. Towards personalized intervention for Alzheimer’s disease. Genomics Proteomics Bioinformatics. 2016;14(5):289–97.

Plenge RM. Disciplined approach to drug discovery and early development. Sci Transl Med. 2016;8(349):349ps15.

Morgan P, Brown DG, Lennard S, Anderton MJ, Barrett JC, Eriksson U, et al. Impact of a five-dimensional framework on R&D productivity at AstraZeneca. Nat Rev Drug Discov. 2018;17(3):167–81.

Golde TE, DeKosky ST, Galasko D. Alzheimer’s disease: the right drug, the right time. Science. 2018;362(6420):1250–1.

Morgan P, Van Der Graaf PH, Arrowsmith J, Feltner DE, Drummond KS, Wegner CD, et al. Can the flow of medicines be improved? Fundamental pharmacokinetic and pharmacological principles toward improving phase II survival. Drug Discov Today. 2012;17(9–10):419–24.

Milligan PA, Brown MJ, Marchant B, Martin SW, van der Graaf PH, Benson N, et al. Model-based drug development: a rational approach to efficiently accelerate drug development. Clin Pharmacol Ther. 2013;93(6):502–14.

Visser SA, Aurell M, Jones RD, Schuck VJ, Egnell AC, Peters SA, et al. Model-based drug discovery: implementation and impact. Drug Discov Today. 2013;18(15–16):764–75.

Dolgos H, Trusheim M, Gross D, Halle JP, Ogden J, Osterwalder B, et al. Translational medicine guide transforms drug development processes: the recent Merck experience. Drug Discov Today. 2016;21(3):517–26.

Cook D, Brown D, Alexander R, March R, Morgan P, Satterthwaite G, et al. Lessons learned from the fate of AstraZeneca’s drug pipeline: a five-dimensional framework. Nat Rev Drug Discov. 2014;13(6):419–31.

Cummings J. Lessons learned from Alzheimer disease: clinical trials with negative outcomes. Clin Transl Sci. 2017;11:147–52.

Becker RE, Greig NH. Increasing the success rate for Alzheimer’s disease drug discovery and development. Expert Opin Drug Discov. 2012;7(4):367–70.

Karran E, Hardy J. A critique of the drug discovery and phase 3 clinical programs targeting the amyloid hypothesis for Alzheimer disease. Ann Neurol. 2014;76(2):185–205.

Kobayashi H, Ohnishi T, Nakagawa R, Yoshizawa K. The comparative efficacy and safety of cholinesterase inhibitors in patients with mild-to-moderate Alzheimer’s disease: a Bayesian network meta-analysis. Int J Geriatr Psychiatry. 2016;31(8):892–904.

Tan CC, Yu JT, Wang HF, Tan MS, Meng XF, Wang C, et al. Efficacy and safety of donepezil, galantamine, rivastigmine, and memantine for the treatment of Alzheimer’s disease: a systematic review and meta-analysis. J Alzheimers Dis. 2014;41(2):615–31.

Tariot PN, Farlow MR, Grossberg GT, Graham SM, McDonald S, Gergel I. Memantine treatment in patients with moderate to severe Alzheimer disease already receiving donepezil: a randomized controlled trial. JAMA. 2004;291(3):317–24.

Doody RS, Tariot PN, Pfeiffer E, Olin JT, Graham SM. Meta-analysis of six-month memantine trials in Alzheimer’s disease. Alzheimers Dement. 2007;3(1):7–17.

Greig SL. Memantine ER/donepezil: a review in Alzheimer’s disease. CNS Drugs. 2015;29(11):963–70.

Reisberg B, Doody R, Stoffler A, Schmitt F, Ferris S, Mobius HJ, et al. Memantine in moderate-to-severe Alzheimer’s disease. N Engl J Med. 2003;348(14):1333–41.

Strohle A, Schmidt DK, Schultz F, Fricke N, Staden T, Hellweg R, et al. Drug and exercise treatment of Alzheimer disease and mild cognitive impairment: a systematic review and meta-analysis of effects on cognition in randomized controlled trials. Am J Geriatr Psychiatry. 2015;23(12):1234–49.

Sevigny J, Chiao P, Bussiere T, Weinreb PH, Williams L, Maier M, et al. The antibody aducanumab reduces Aβ plaques in Alzheimer’s disease. Nature. 2016;537(7618):50–6.

Cummings JL, Fox N. Defining disease modification for Alzheimer’s disease clinical trials. J Prev Alzheimers Dis. 2017;4:109–15.

Fauman EB, Rai BK, Huang ES. Structure-based druggability assessment--identifying suitable targets for small molecule therapeutics. Curr Opin Chem Biol. 2011;15(4):463–8.

Gashaw I, Ellinghaus P, Sommer A, Asadullah K. What makes a good drug target? Drug Discov Today. 2011;16(23–24):1037–43.

Gashaw I, Ellinghaus P, Sommer A, Asadullah K. What makes a good drug target? Drug Discov Today. 2012;17(Suppl):S24–30.

Lo AW, Ho C, Cummings J, Kosik KS. Parallel discovery of Alzheimer’s therapeutics. Sci Transl Med. 2014;6(241):241cm5.

Refolo LM, Snyder H, Liggins C, Ryan L, Silverberg N, Petanceska S, et al. Common Alzheimer’s disease research ontology: National Institute on Aging and Alzheimer’s Association collaborative project. Alzheimers Dement. 2012;8(4):372–5.

Tabrizi SJ, Leavitt BR, Landwehrmeyer GB, Wild EJ, Saft C, Barker RA, et al. Targeting huntingtin expression in patients with Huntington’s disease. N Engl J Med. 2019;380(24):2307–16.

Schneider LS, Raman R, Schmitt FA, Doody RS, Insel P, Clark CM, et al. Characteristics and performance of a modified version of the ADCS-CGIC CIBIC+ for mild cognitive impairment clinical trials. Alzheimer Dis Assoc Disord. 2009;23(3):260–7.

Schneider JA, Arvanitakis Z, Leurgans SE, Bennett DA. The neuropathology of probable Alzheimer disease and mild cognitive impairment. Ann Neurol. 2009;66(2):200–8.

Woodward M, Mackenzie IRA, Hsiung GY, Jacova C, Feldman H. Multiple brain pathologies in dementia are common. Eur Geriatr Med. 2010;1:259–65.

Devi G, Scheltens P. Heterogeneity of Alzheimer’s disease: consequence for drug trials? Alzheimers Res Ther. 2018;10(1):122.

Plenge RM, Scolnick EM, Altshuler D. Validating therapeutic targets through human genetics. Nat Rev Drug Discov. 2013;12(8):581–94.

Li P, Nie Y, Yu J. An effective method to identify shared pathways and common factors among neurodegenerative diseases. PLoS One. 2015;10(11):e0143045.

International Genomics of Alzheimer’s Disease Consortium. Convergent genetic and expression data implicate immunity in Alzheimer’s disease. Alzheimers Dement. 2015;11(6):658–71.

Guerreiro R, Hardy J. Genetics of Alzheimer’s disease. Neurotherapeutics. 2014;11(4):732–7.

Townsend MJ, Arron JR. Reducing the risk of failure: biomarker-guided trial design. Nat Rev Drug Discov. 2016;15(8):517–8.

Kosik KS, Sejnowski TJ, Raichle ME, Ciechanover A, Baltimore D. A path toward understanding neurodegeneration. Science. 2016;353(6302):872–3.

Breder CD, Du W, Tyndall A. What’s the regulatory value of a target product profile? Trends Biotechnol. 2017;35(7):576–9.

Wyatt PG, Gilbert IH, Read KD, Fairlamb AH. Target validation: linking target and chemical properties to desired product profile. Curr Top Med Chem. 2011;11(10):1275–83.

Cummings J, Ritter A, Zhong K. Clinical trials for disease-modifying therapies in Alzheimer’s disease: a primer, lessons learned, and a blueprint for the future. J Alzheimers Dis. 2017; In press.

Banks WA. Developing drugs that can cross the blood-brain barrier: applications to Alzheimer’s disease. BMC Neurosci. 2008;9(Suppl 3):S2.

Walters WP. Going further than Lipinski’s rule in drug design. Expert Opin Drug Discov. 2012;7(2):99–107.

Ticehurst MD, Marziano I. Integration of active pharmaceutical ingredient solid form selection and particle engineering into drug product design. J Pharm Pharmacol. 2015;67(6):782–802.

Mignani S, Huber S, Tomas H, Rodrigues J, Majoral JP. Compound high-quality criteria: a new vision to guide the development of drugs, current situation. Drug Discov Today. 2016;21(4):573–84.

Steinmetz KL, Spack EG. The basics of preclinical drug development for neurodegenerative disease indications. BMC Neurol. 2009;9(Suppl 1):S2.

Lacombe O, Videau O, Chevillon D, Guyot AC, Contreras C, Blondel S, et al. In vitro primary human and animal cell-based blood-brain barrier models as a screening tool in drug discovery. Mol Pharm. 2011;8(3):651–63.

Lin JH. CSF as a surrogate for assessing CNS exposure: an industrial perspective. Curr Drug Metab. 2008;9(1):46–59.

Shen DD, Artru AA, Adkison KK. Principles and applicability of CSF sampling for the assessment of CNS drug delivery and pharmacodynamics. Adv Drug Deliv Rev. 2004;56(12):1825–57.

Rizk ML, Zou L, Savic RM, Dooley KE. Importance of drug pharmacokinetics at the site of action. Clin Transl Sci. 2017;10(3):133–42.

Wan HI, Jacobsen JS, Rutkowski JL, Feuerstein GZ. Translational medicine lessons from flurizan’s failure in Alzheimer’s disease (AD) trial: implication for future drug discovery and development for AD. Clin Transl Sci. 2009;2(3):242–7.

Ameen-Ali KE, Wharton SB, Simpson JE, Heath PR, Sharp P, Berwick J. Review: Neuropathology and behavioural features of transgenic murine models of Alzheimer's disease. Neuropathol Appl Neurobiol. 2017;43(7):553–70.

Sabbagh JJ, Kinney JW, Cummings JL. Animal systems in the development of treatments for Alzheimer’s disease: challenges, methods, and implications. Neurobiol Aging. 2013;34(1):169–83.

Laurijssens B, Aujard F, Rahman A. Animal models of Alzheimer’s disease and drug development. Drug Discov Today Technol. 2013;10(3):e319–27.

Potter R, Patterson BW, Elbert DL, Ovod V, Kasten T, Sigurdson W, et al. Increased in vivo amyloid-β42 production, exchange, and loss in presenilin mutation carriers. Sci Transl Med. 2013;5(189):189ra77.

Xu G, Ran Y, Fromholt SE, Fu C, Yachnis AT, Golde TE, et al. Murine Aβ over-production produces diffuse and compact Alzheimer-type amyloid deposits. Acta Neuropathol Commun. 2015;3:72.

Sabbagh JJ, Kinney JW, Cummings JL. Alzheimer’s disease biomarkers in animal models: closing the translational gap. Am J Neurodegener Dis. 2013;2(2):108–20.

Yang J, Li S, He XB, Cheng C, Le W. Induced pluripotent stem cells in Alzheimer’s disease: applications for disease modeling and cell-replacement therapy. Mol Neurodegener. 2016;11(1):39.

Liu Q, Waltz S, Woodruff G, Ouyang J, Israel MA, Herrera C, et al. Effect of potent gamma-secretase modulator in human neurons derived from multiple presenilin 1-induced pluripotent stem cell mutant carriers. JAMA Neurol. 2014;71(12):1481–9.

Choi SH, Kim YH, Quinti L, Tanzi RE, Kim DY. 3D culture models of Alzheimer’s disease: a road map to a “cure-in-a-dish”. Mol Neurodegener. 2016;11(1):75.

Pangalos MN, Schechter LE, Hurko O. Drug development for CNS disorders: strategies for balancing risk and reducing attrition. Nat Rev Drug Discov. 2007;6(7):521–32.

Greenberg BD, Carrillo MC, Ryan JM, Gold M, Gallagher K, Grundman M, et al. Improving Alzheimer’s disease phase II clinical trials. Alzheimers Dement. 2013;9(1):39–49.

Gray JA, Fleet D, Winblad B. The need for thorough phase II studies in medicines development for Alzheimer’s disease. Alzheimers Res Ther. 2015;7(1):67.

Cartwright ME, Cohen S, Fleishaker JC, Madani S, McLeod JF, Musser B, et al. Proof of concept: a PhRMA position paper with recommendations for best practice. Clin Pharmacol Ther. 2010;87(3):278–85.

Satlin A, Wang J, Logovinsky V, Berry S, Swanson C, Dhadda S, et al. Design of a Bayesian adaptive phase 2 proof-of-concept trial for BAN2401, a putative disease-modifying monoclonal antibody for the treatment of Alzheimer’s disease. Alzheimers Dement (N Y). 2016;2(1):1–12.

Dalgaard L. Comparison of minipig, dog, monkey and human drug metabolism and disposition. J Pharmacol Toxicol Methods. 2015;74:80–92.

Galijatovic-Idrizbegovic A, Miller JE, Cornell WD, Butler JA, Wollenberg GK, Sistare FD, et al. Role of chronic toxicology studies in revealing new toxicities. Regul Toxicol Pharmacol. 2016;82:94–8.

Coric V, Salloway S, van Dyck CH, Dubois B, Andreasen N, Brody M, et al. Targeting prodromal Alzheimer disease with avagacestat: a randomized clinical trial. JAMA Neurol. 2015;72(11):1324–33.

Egan MF, Kost J, Voss T, Mukai Y, Aisen PS, Cummings JL, et al. Randomized trial of verubecestat for prodromal Alzheimer’s disease. N Engl J Med. 2019;380(15):1408–20.

Doody RS, Raman R, Sperling RA, Siemers E, Sethuraman G, Mohs R, et al. Peripheral and central effects of gamma-secretase inhibition by semagacestat in Alzheimer’s disease. Alzheimers Res Ther. 2015;7(1):36.

Hoffman KB, Dimbil M, Tatonetti NP, Kyle RF. A pharmacovigilance signaling system based on FDA regulatory action and post-marketing adverse event reports. Drug Saf. 2016;39(6):561–75.

Hauber AB, Johnson FR, Fillit H, Mohamed AF, Leibman C, Arrighi HM, et al. Older Americans’ risk-benefit preferences for modifying the course of Alzheimer disease. Alzheimer Dis Assoc Disord. 2009;23(1):23–32.

Wehling M. Assessing the translatability of drug projects: what needs to be scored to predict success? Nat Rev Drug Discov. 2009;8(7):541–6.

Wendler A, Wehling M. Translatability score revisited: differentiation for distinct disease areas. J Transl Med. 2017;15(1):226.

Wendler A, Wehling M. Translatability scoring in drug development: eight case studies. J Transl Med. 2012;10:39.

Figueroa-Magalhaes MC, Jelovac D, Connolly R, Wolff AC. Treatment of HER2-positive breast cancer. Breast. 2014;23(2):128–36.

Green DJ, Liu XI, Hua T, Burnham JM, Schuck R, Pacanowski M, et al. Enrichment strategies in pediatric drug development: an analysis of trials submitted to the US Food and Drug Administration. Clin Pharmacol Ther. 2018;104(5):983–8.

Arneric SP, Kern VD, Stephenson DT. Regulatory-accepted drug development tools are needed to accelerate innovative CNS disease treatments. Biochem Pharmacol. 2018;151:291–306.

Cummings J. The National Institute on Aging-Alzheimer’s Association framework on Alzheimer’s disease: application to clinical trials. Alzheimers Dement. 2018; Epub ahead of print. https://doi.org/10.1016/j.jalz.2018.05.006.

Jack CR Jr, Bennett DA, Blennow K, Carrillo MC, Dunn B, Haeberlein SB, et al. NIA-AA research framework: toward a biological definition of Alzheimer’s disease. Alzheimers Dement. 2018;14(4):535–62.

Kuhle J, Disanto G, Lorscheider J, Stites T, Chen Y, Dahlke F, et al. Fingolimod and CSF neurofilament light chain levels in relapsing-remitting multiple sclerosis. Neurology. 2015;84(16):1639–43.

Molinuevo JL, Ayton S, Batrla R, Bednar MM, Bittner T, Cummings J, et al. Current state of Alzheimer’s fluid biomarkers. Acta Neuropathol. 2018;136(6):821–53.

Mills SM, Mallmann J, Santacruz AM, Fuqua A, Carril M, Aisen PS, et al. Preclinical trials in autosomal dominant AD: implementation of the DIAN-TU trial. Rev Neurol (Paris). 2013;169(10):737–43.

Reiman EM, Langbaum JB, Tariot PN. Alzheimer’s prevention initiative: a proposal to evaluate presymptomatic treatments as quickly as possible. Biomark Med. 2010;4(1):3–14.

Qian J, Wolters FJ, Beiser A, Haan M, Ikram MA, Karlawish J, et al. APOE-related risk of mild cognitive impairment and dementia for prevention trials: an analysis of four cohorts. PLoS Med. 2017;14(3):e1002254.

Zeitlow K, Charlambous L, Ng I, Gagrani S, Mihovilovic M, Luo S, et al. The biological foundation of the genetic association of TOMM40 with late-onset Alzheimer’s disease. Biochim Biophys Acta. 2017.

Boluda S, Toledo JB, Irwin DJ, Raible KM, Byrne MD, Lee EB, et al. A comparison of Abeta amyloid pathology staging systems and correlation with clinical diagnosis. Acta Neuropathol. 2014;128(4):543–50.

de Souza LC, Sarazin M, Teixeira-Junior AL, Caramelli P, Santos AE, Dubois B. Biological markers of Alzheimer’s disease. Arq Neuropsiquiatr. 2014;72(3):227–31.

Scholl M, Lockhart SN, Schonhaut DR, O’Neil JP, Janabi M, Ossenkoppele R, et al. PET imaging of tau deposition in the aging human brain. Neuron. 2016;89(5):971–82.

Sevigny J, Suhy J, Chiao P, Chen T, Klein G, Purcell D, et al. Amyloid PET screening for enrichment of early-stage Alzheimer disease clinical trials: experience in a phase 1b clinical trial. Alzheimer Dis Assoc Disord. 2016;30(1):1–7.

Jack CR Jr, Knopman DS, Chetelat G, Dickson D, Fagan AM, Frisoni GB, et al. Suspected non-Alzheimer disease pathophysiology--concept and controversy. Nat Rev Neurol. 2016;12(2):117–24.

Ballard C, Atri A, Boneva N, Cummings JL, Frolich L, Molinuevo JL, et al. Enrichment factors for clinical trials in mild-to-moderate Alzheimer’s disease. Alzheimers Dement (N Y). 2019;5:164–74.

Soares HD. The use of mechanistic biomarkers for evaluating investigational CNS compounds in early drug development. Curr Opin Investig Drugs. 2010;11(7):795–801.

Wagner JA. Strategic approach to fit-for-purpose biomarkers in drug development. Annu Rev Pharmacol Toxicol. 2008;48:631–51.

Bateman RJ, Munsell LY, Morris JC, Swarm R, Yarasheski KE, Holtzman DM. Human amyloid-beta synthesis and clearance rates as measured in cerebrospinal fluid in vivo. Nat Med. 2006;12:856–61.

Kennedy ME, Stamford AW, Chen X, Cox K, Cumming JN, Dockendorf MF, et al. The BACE1 inhibitor verubecestat (MK-8931) reduces CNS beta-amyloid in animal models and in Alzheimer’s disease patients. Sci Transl Med. 2016;8(363):363ra150.

Scheltens P, Hallikainen M, Grimmer T, Duning T, Gouw AA, Teunissen CE, et al. Safety, tolerability and efficacy of the glutaminyl cyclase inhibitor PQ912 in Alzheimer’s disease: results of a randomized, double-blind, placebo-controlled phase 2a study. Alzheimers Res Ther. 2018;10(1):107.

Portelius E, Zetterberg H, Dean RA, Marcil A, Bourgeois P, Nutu M, et al. Amyloid-beta (1-15/16) as a marker for gamma-secretase inhibition in Alzheimer’s disease. J Alzheimers Dis. 2012;31(2):335–41.

Luo D, Smith JA, Meadows NA, Schuh A, Manescu KE, Bure K, et al. A quantitative assessment of factors affecting the technological development and adoption of companion diagnostics. Front Genet. 2015;6:357.

Cummings J. The role of biomarkers in Alzheimer’s disease drug development. Adv Exp Med Biol. In Press.

Cummings J, Zhong K, Cordes D. Drug development in Alzheimer’s disease: the role of default mode network assessment in phase II. US Neurol. 2017;13(2):67–9.

Schultz AP, Chhatwal JP, Hedden T, Mormino EC, Hanseeuw BJ, Sepulcre J, et al. Phases of hyperconnectivity and hypoconnectivity in the default mode and salience networks track with amyloid and tau in clinically normal individuals. J Neurosci. 2017;37(16):4323–31.

Sepulcre J, Sabuncu MR, Li Q, El Fakhri G, Sperling R, Johnson KA. Tau and amyloid beta proteins distinctively associate to functional network changes in the aging brain. Alzheimers Dement. 2017;13(11):1261–9.

Buckley RF, Schultz AP, Hedden T, Papp KV, Hanseeuw BJ, Marshall G, et al. Functional network integrity presages cognitive decline in preclinical Alzheimer disease. Neurology. 2017;89(1):29–37.

Koch K, Myers NE, Gottler J, Pasquini L, Grimmer T, Forster S, et al. Disrupted intrinsic networks link amyloid-beta pathology and impaired cognition in prodromal Alzheimer’s disease. Cereb Cortex. 2015;25(12):4678–88.

Weiler M, de Campos BM, Nogueira MH, Pereira Damasceno B, Cendes F, Balthazar ML. Structural connectivity of the default mode network and cognition in Alzheimer’s disease. Psychiatry Res. 2014;223(1):15–22.

Wilson FJ, Danjou P. Early decision-making in drug development: the potential role of pharmaco-EEG and pharmaco-sleep. Neuropsychobiology. 2015;72(3–4):188–94.

Wilson FJ, Leiser SC, Ivarsson M, Christensen SR, Bastlund JF. Can pharmaco-electroencephalography help improve survival of central nervous system drugs in early clinical development? Drug Discov Today. 2014;19(3):282–8.

Chen MK, Mecca AP, Naganawa M, Finnema SJ, Toyonaga T, Lin SF, et al. Assessing synaptic density in Alzheimer disease with synaptic vesicle glycoprotein 2A positron emission tomographic imaging. JAMA Neurol. 2018;75(10):1215–24.

Liu E, Schmidt ME, Margolin R, Sperling R, Koeppe R, Mason NS, et al. Amyloid-beta 11C-PiB-PET imaging results from 2 randomized bapineuzumab phase 3 AD trials. Neurology. 2015;85(8):692–700.

Panza F, Solfrizzi V, Imbimbo BP, Giannini M, Santamato A, Seripa D, et al. Efficacy and safety studies of gantenerumab in patients with Alzheimer’s disease. Expert Rev Neurother. 2014;14(9):973–86.

Cummings J, Blennow K, Johnson K, Keeley M, Bateman RJ, Molinuevo JL, et al. Anti-tau trials for Alzheimer’s disease: a report from the EU/US/CTAD Task Force. J Prev Alzheimers Dis. 2019;6(3):157–63.

Apostolova LG, Zarow C, Biado K, Hurtz S, Boccardi M, Somme J, et al. Relationship between hippocampal atrophy and neuropathology markers: a 7T MRI validation study of the EADC-ADNI Harmonized Hippocampal Segmentation Protocol. Alzheimers Dement. 2015;11(2):139–50.

Csernansky JG, Hamstra J, Wang L, McKeel D, Price JL, Gado M, et al. Correlations between antemortem hippocampal volume and postmortem neuropathology in AD subjects. Alzheimer Dis Assoc Disord. 2004;18(4):190–5.

Whitwell JL, Jack CR Jr, Pankratz VS, Parisi JE, Knopman DS, Boeve BF, et al. Rates of brain atrophy over time in autopsy-proven frontotemporal dementia and Alzheimer disease. Neuroimage. 2008;39(3):1034–40.

Fox NC, Black RS, Gilman S, Rossor MN, Griffith SG, Jenkins L, et al. Effects of Abeta immunization (AN1792) on MRI measures of cerebral volume in Alzheimer disease. Neurology. 2005;64(9):1563–72.

Novak G, Fox N, Clegg S, Nielsen C, Einstein S, Lu Y, et al. Changes in brain volume with bapineuzumab in mild to moderate Alzheimer’s disease. J Alzheimers Dis. 2015;49(4):1123–34.

Mattsson N, Andreasson U, Zetterberg H, Blennow K, Alzheimer’s Disease Neuroimaging Initiative. Association of plasma neurofilament light with neurodegeneration in patients with Alzheimer disease. JAMA Neurol. 2017;74(5):557–66.

Johnson KA, Schultz A, Betensky RA, Becker JA, Sepulcre J, Rentz D, et al. Tau positron emission tomographic imaging in aging and early Alzheimer disease. Ann Neurol. 2016;79(1):110–9.

Tarawneh R, Head D, Allison S, Buckles V, Fagan AM, Ladenson JH, et al. Cerebrospinal fluid markers of neurodegeneration and rates of brain atrophy in early Alzheimer disease. JAMA Neurol. 2015;72(6):656–65.

Henley D, Raghavan N, Sperling R, Aisen P, Raman R, Romano G. Preliminary results of a trial of atabecestat in preclinical Alzheimer’s disease. N Engl J Med. 2019;380(15):1483–5.

Sperling RA, Jack CR Jr, Black SE, Frosch MP, Greenberg SM, Hyman BT, et al. Amyloid-related imaging abnormalities in amyloid-modifying therapeutic trials: recommendations from the Alzheimer’s Association Research Roundtable Workgroup. Alzheimers Dement. 2011;7(4):367–85.

Ketter N, Brashear HR, Bogert J, Di J, Miaux Y, Gass A, et al. Central review of amyloid-related imaging abnormalities in two phase III clinical trials of bapineuzumab in mild-to-moderate Alzheimer’s disease patients. J Alzheimers Dis. 2017;57(2):557–73.

Ostrowitzki S, Lasser RA, Dorflinger E, Scheltens P, Barkhof F, Nikolcheva T, et al. A phase III randomized trial of gantenerumab in prodromal Alzheimer’s disease. Alzheimers Res Ther. 2017;9(1):95.

Dubois B, Feldman HH, Jacova C, Cummings JL, Dekosky ST, Barberger-Gateau P, et al. Revising the definition of Alzheimer’s disease: a new lexicon. Lancet Neurol. 2010;9(11):1118–27.

Dubois B, Feldman HH, Jacova C, Hampel H, Molinuevo JL, Blennow K, et al. Advancing research diagnostic criteria for Alzheimer’s disease: the IWG-2 criteria. Lancet Neurol. 2014;13(6):614–29.

Herscovici R, Kutyifa V, Barsheshet A, Solomon S, McNitt S, Polonsky B, et al. Early intervention and long-term outcome with cardiac resynchronization therapy in patients without a history of advanced heart failure symptoms. Eur J Heart Fail. 2015;17(9):964–70.

Reiman EM, Langbaum JB, Tariot PN, Lopera F, Bateman RJ, Morris JC, et al. CAP--advancing the evaluation of preclinical Alzheimer disease treatments. Nat Rev Neurol. 2016;12(1):56–61.

Sperling RA, Jack CR Jr, Aisen PS. Testing the right target and right drug at the right stage. Sci Transl Med. 2011;3(111):111cm33.

Sperling R, Mormino E, Johnson K. The evolution of preclinical Alzheimer’s disease: implications for prevention trials. Neuron. 2014;84(3):608–22.

Cox CG, Ryan BAM, Gillen DL, Grill JD. A preliminary study of clinical trial enrollment decisions among people with mild cognitive impairment and their study partners. Am J Geriatr Psychiatry. 2019;27(3):322–32.

Romero K, Ito K, Rogers JA, Polhamus D, Qiu R, Stephenson D, et al. The future is now: model-based clinical trial design for Alzheimer’s disease. Clin Pharmacol Ther. 2015;97(3):210–4.

Weintraub S, Carrillo MC, Farias ST, Goldberg TE, Hendrix JA, Jaeger J, et al. Measuring cognition and function in the preclinical stage of Alzheimer’s disease. Alzheimers Dement (N Y). 2018;4:64–75.

Langbaum JB, Hendrix SB, Ayutyanont N, Chen K, Fleisher AS, Shah RC, et al. An empirically derived composite cognitive test score with improved power to track and evaluate treatments for preclinical Alzheimer’s disease. Alzheimers Dement. 2014;10(6):666–74.

Morris JC. The Clinical Dementia Rating (CDR): current version and scoring rules. Neurology. 1993;43(11):2412–4.

Rosen WG, Mohs RC, Davis KL. A new rating scale for Alzheimer’s disease. Am J Psychiatry. 1984;141(11):1356–64.

Harrison J, Minassian SL, Jenkins L, Black RS, Koller M, Grundman M. A neuropsychological test battery for use in Alzheimer disease clinical trials. Arch Neurol. 2007;64(9):1323–9.

Wang J, Logovinsky V, Hendrix SB, Stanworth SH, Perdomo C, Xu L, et al. ADCOMS: a composite clinical outcome for prodromal Alzheimer’s disease trials. J Neurol Neurosurg Psychiatry. 2016;87:993–9.

Schmitt FA, Ashford W, Ernesto C, Saxton J, Schneider LS, Clark CM, et al. The severe impairment battery: concurrent validity and the assessment of longitudinal change in Alzheimer’s disease. The Alzheimer’s Disease Cooperative Study. Alzheimer Dis Assoc Disord. 1997;11(Suppl 2):S51–6.

Galasko D, Bennett D, Sano M, Ernesto C, Thomas R, Grundman M, et al. An inventory to assess activities of daily living for clinical trials in Alzheimer’s disease. The Alzheimer’s Disease Cooperative Study. Alzheimer Dis Assoc Disord. 1997;11(Suppl 2):S33–9.

Jutten RJ, Peeters CFW, Leijdesdorff SMJ, Visser PJ, Maier AB, Terwee CB, et al. Detecting functional decline from normal aging to dementia: development and validation of a short version of the Amsterdam IADL Questionnaire. Alzheimers Dement (Amst). 2017;8:26–35.

Sikkes SA, Pijnenburg YA, Knol DL, de Lange-de Klerk ES, Scheltens P, Uitdehaag BM. Assessment of instrumental activities of daily living in dementia: diagnostic value of the Amsterdam Instrumental Activities of Daily Living Questionnaire. J Geriatr Psychiatry Neurol. 2013;26(4):244–50.

Vellas B, Carrillo MC, Sampaio C, Brashear HR, Siemers E, Hampel H, et al. Designing drug trials for Alzheimer’s disease: what we have learned from the release of the phase III antibody trials: a report from the EU/US/CTAD Task Force. Alzheimers Dement. 2013;9(4):438–44.

Das S, Lo AW. Re-inventing drug development: a case study of the I-SPY 2 breast cancer clinical trials program. Contemp Clin Trials. 2017;62:168–74.

Finger EC, Berry S, Cummings J, Coleman K, Hsiung R, Feldman HH, et al. Adaptive cross-over designs for assessment of symptomatic treatments targeting behavior in neurodegenerative disease: a phase 2 clinical trial of intranasal oxytocin for frontotemporal dementia (FOXY). Alzheimers Res Ther. 2018;10:102–9.

Ritchie CW, Molinuevo JL, Truyen L, Satlin A, Van der Geyten S, Lovestone S. Development of interventions for the secondary prevention of Alzheimer’s dementia: the European Prevention of Alzheimer’s Dementia (EPAD) project. Lancet Psychiatry. 2016;3(2):179–86.

Bateman RJ, Benzinger TL, Berry S, Clifford DB, Duggan C, Fagan AM, et al. The DIAN-TU Next Generation Alzheimer’s prevention trial: adaptive design and disease progression model. Alzheimers Dement. 2017;13(1):8–19.

Grill JD, Raman R, Ernstrom K, Aisen P, Dowsett SA, Chen YF, et al. Comparing recruitment, retention, and safety reporting among geographic regions in multinational Alzheimer’s disease clinical trials. Alzheimers Res Ther. 2015;7(1):39–53.

Henley DB, Dowsett SA, Chen YF, Liu-Seifert H, Grill JD, Doody RS, et al. Alzheimer’s disease progression by geographical region in a clinical trial setting. Alzheimers Res Ther. 2015;7(1):43–52.

Cummings JL, Atri A, Ballard C, Boneva N, Frolich L, Molinuevo JL, et al. Insights into globalization: comparison of patient characteristics and disease progression among geographic regions in a multinational Alzheimer’s disease clinical program. Alzheimers Res Ther. 2018;10:116–28.

Kennedy RE, Cutter GR, Wang G, Schneider LS. Challenging assumptions about African American participation in Alzheimer disease trials. Am J Geriatr Psychiatry. 2017;25(10):1150–9.

Cummings JL, Aisen P, Barton R, Bork J, Doody R, Dwyer J, et al. Re-engineering Alzheimer clinical trials: Global Alzheimer Platform Network. J Prev Alzheimers Dis. 2016;3:114–20.

Mackin RS, Insel PS, Truran D, Finley S, Flenniken D, Nosheny R, et al. Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: results from the Brain Health Registry. Alzheimers Dement (Amst). 2018;10:573–82.

Nosheny RL, Camacho MR, Insel PS, Flenniken D, Fockler J, Truran D, et al. Online study partner-reported cognitive decline in the Brain Health Registry. Alzheimers Dement (N Y). 2018;4:565–74.

Zhong K, Cummings J. Healthybrains.org: from registry to randomization. J Prev Alzheimers Dis. 2016;3(3):123–6.

Tan MH, Thomas M, MacEachern MP. Using registries to recruit subjects for clinical trials. Contemp Clin Trials. 2015;41:31–8.

Paul SM, Mytelka DS, Dunwiddie CT, Persinger CC, Munos BH, Lindborg SR, et al. How to improve R&D productivity: the pharmaceutical industry’s grand challenge. Nat Rev Drug Discov. 2010;9(3):203–14.

Acknowledgements

Not applicable.

Funding

The authors acknowledge the support of a COBRE grant from the NIH/NIGMS (P20GM109025) and Keep Memory Alive.

Author information


Contributions

All authors contributed to the writing and revising of the manuscript, and all authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

JC has provided consultation to Acadia, Accera, Actinogen, Alkahest, Allergan, Alzheon, Avanir, Axsome, BiOasis Technologies, Biogen, Bracket, Cassava, Denali, Diadem, EIP Pharma, Eisai, Genentech, Green Valley, Grifols, Hisun, Idorsia, Kyowa Kirin, Lilly, Lundbeck, Merck, Otsuka, Proclara, QR, Resverlogix, Roche, Samumed, Samus, Sunovion, Suven, Takeda, Teva, Toyama, and United Neuroscience pharmaceutical and assessment companies. JC is supported by Keep Memory Alive (KMA) and a COBRE award from the NIGMS (P20GM109025).