Abstract

Background

Providing health professionals with quantitative summaries of their clinical performance when treating specific groups of patients (“feedback”) is a widely used quality improvement strategy, yet systematic reviews show it has varying success. Theory could help explain what factors influence feedback success, and guide approaches to enhance effectiveness. However, existing theories lack comprehensiveness and specificity to health care. To address this problem, we conducted the first systematic review and synthesis of qualitative evaluations of feedback interventions, using findings to develop a comprehensive new health care-specific feedback theory.

Methods

We searched MEDLINE, EMBASE, CINAHL, Web of Science, and Google Scholar from inception until 2016 inclusive. Data were synthesised by coding individual papers, building on pre-existing theories to formulate hypotheses, iteratively testing and improving hypotheses, assessing confidence in hypotheses using the GRADE-CERQual method, and summarising high-confidence hypotheses into a set of propositions.

Results

We synthesised 65 papers evaluating 73 feedback interventions from countries spanning five continents. From our synthesis we developed Clinical Performance Feedback Intervention Theory (CP-FIT), which builds on 30 pre-existing theories and has 42 high-confidence hypotheses. CP-FIT states that effective feedback works in a cycle of sequential processes; it becomes less effective if any individual process fails, thus halting progress round the cycle. Feedback’s success is influenced by several factors (the feedback method used, the health professional receiving feedback, and the context in which feedback takes place), which operate via a set of common explanatory mechanisms. CP-FIT summarises these effects in three propositions: (1) health care professionals and organisations have a finite capacity to engage with feedback, (2) these parties have strong beliefs regarding how patient care should be provided that influence their interactions with feedback, and (3) feedback that directly supports clinical behaviours is most effective.

Conclusions

This is the first qualitative meta-synthesis of feedback interventions, and the first comprehensive theory of feedback designed specifically for health care. Our findings contribute new knowledge about how feedback works and factors that influence its effectiveness. Internationally, practitioners, researchers, and policy-makers can use CP-FIT to design, implement, and evaluate feedback. Doing so could improve care for large numbers of patients, reduce opportunity costs, and improve returns on financial investments.

Trial registration

PROSPERO, CRD42015017541

Background

Providing health professionals with quantitative summaries of their clinical performance when treating specific groups of patients has been used for decades as a quality improvement strategy (Table 1) [1]. Such approaches may be called “audit and feedback”, “clinical performance feedback”, “performance measurement”, “quality measurement”, “key performance indicators”, “quality indicators”, “quality dashboards”, “scorecards”, “report cards”, or “population health analytics” [2,3,4]. In this paper, we use the term “feedback” intervention to encompass all these approaches and to refer to the entire process of selecting a clinical topic on which to improve, collecting and analysing population-level data, producing and delivering a quantitative summary of clinical performance, and making subsequent changes to clinical practice.

Feedback has been extensively researched in numerous quantitative and qualitative studies [5]. However, despite its popularity, the mechanisms by which it operates are poorly understood [5]. In this paper, we define mechanisms as underlying explanations of how and why an intervention works [6]. Three consecutive Cochrane reviews have found feedback produces “small but potentially important improvements in professional practice” [7] with wide variations in its impact: the most recent demonstrated a median clinical practice improvement of 4.3%, ranging from a 9% decrease to a 70% increase [8]. When feedback interventions target suboptimally performed high-volume and clinically impactful practices, such as hypertension management or antimicrobial stewardship, this variation can translate to thousands of quality-adjusted life years [9, 10].

Policymakers and practitioners only have a tentative set of best practices regarding how feedback could be optimally conducted [5, 11]; thus there is a need to better understand how and why feedback works in order to maximise its impact [5, 7]. One approach is to consider the underlying theory of feedback, which has often been overlooked [5, 12]. In this paper, we define theory as a “coherent description of a process that is arrived at by inference, provides an explanation for observed phenomena, and generates predictions” [13]. In the 140 randomised controlled trials in the most recent Cochrane review, 18 different theories were used in only 20 (14%) of the studies, suggesting a lack of consensus as to which is most appropriate for feedback [12]. More recently, three theories have gained popularity in the feedback literature [5]: Control Theory [14], Goal Setting Theory [15], and Feedback Intervention Theory [16]. However, these theories address only part of the feedback process, and even if used in conjunction may still miss potentially important factors specific to health care (Table 2).

Qualitative evaluations of quality improvement interventions can generate hypotheses regarding their effect modifiers (i.e. variables that influence success) and mechanisms of action [17], for example by helping explain why a particular intervention was ineffective (e.g. [18]) or by developing a logic model for success (e.g. [19]). Synthesising findings from different qualitative studies can help build theories of how interventions may be optimally designed and implemented [20]. Such approaches have been used to improve interventions in tuberculosis therapy [21], smoking cessation [22], skin cancer prevention [23], and telephone counselling [24]. A similar approach may therefore be useful for feedback and, to the best of our knowledge, has not yet been attempted.

Aims and objectives

We aimed to synthesise findings from qualitative research on feedback interventions to inform the development of a comprehensive new health care-specific feedback theory. Informed by our definition of theory [13], our objectives were to (1) describe the processes by which feedback interventions effect change in clinical practice, (2) identify variables that may predict the success of these processes, (3) formulate explanatory mechanisms of how these variables may operate, and (4) distil these findings into parsimonious propositions.

Methods

We published our protocol on the International Prospective Register of Systematic Reviews (PROSPERO; registration number CRD42015017541 [25]).

Search strategy

We replicated the latest Cochrane review’s search strategy [8], adding qualitative research filters [26,27,28] (Additional file 1). MEDLINE (Ovid), EMBASE (Ovid), and CINAHL (Ebsco) were searched without time limits on 25 March 2015. Citation, related article, and reference list searches were undertaken up to 31 December 2016 for all included studies, relevant reviews, and essays (e.g. [5, 11, 12, 29,30,31,32,33,34,35,36,37]) [38]. Further studies were found through international experts and Google Scholar alerts.

Study selection and data extraction

Table 3 describes our inclusion criteria. Two reviewers independently screened titles and abstracts. Full manuscripts of potentially relevant citations were obtained and the criteria re-applied. Data from included articles were extracted independently by BB and WG regarding the study [39] and feedback intervention details [40, 41] (Additional file 2; e.g. study setting, who provided the feedback, and what information the feedback contained). Critical appraisal was conducted concurrently using 12 technical and theoretical criteria including the appropriateness of data collection and analysis methods, adequacy of context description, and transferability of findings [42]. Any disagreements were resolved through discussion, with the wider team consulted as necessary.

Data synthesis

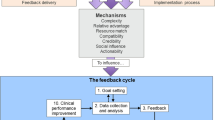

Study findings were extracted as direct quotations from participants and author interpretations [143, 43] found in the abstract, results, and discussion sections. Data were synthesised in five stages (Fig. 1; please see Additional file 3 for details): coding excerpts from individual papers in batches using framework analysis [44] and realistic evaluation [6], generalising findings across papers [45] and building on pre-existing theories to formulate hypotheses [36], iteratively testing and improving these hypotheses on new batches of papers using Analytic Induction [46], assessing confidence in our hypotheses using the GRADE-CERQual method [47], and summarising high-confidence hypotheses into a core set of propositions.

Results

Study characteristics

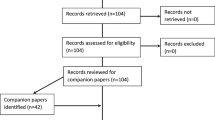

We screened 15,413 papers (Fig. 2). Sixty-five were ultimately included, reporting 61 studies of 73 feedback interventions involving 1791 participants, from which we synthesised 1369 excerpts. Table 4 summarises their main characteristics, full details of which are in Additional file 4.

Meta-synthesis: Clinical Performance Feedback Intervention Theory (CP-FIT)

From our synthesis, we developed Clinical Performance Feedback Intervention Theory (CP-FIT). CP-FIT argues that effective feedback works in a cycle, the success of progressing round which is influenced by variables operating through a set of common explanatory mechanisms related to the feedback itself, the recipient, and wider context (Fig. 3). How these variables and mechanisms influence the feedback cycle is illustrated by 42 high-confidence hypotheses (Table 5), which are in turn summarised by three propositions (Table 6). CP-FIT draws on concepts from 30 pre-existing behaviour change theories (Table 7) and has over 200 lower confidence hypotheses (Additional file 5).

We describe CP-FIT in detail below. To maintain readability, we focus on its high-confidence hypotheses and provide only key example references to supporting studies and theories. CP-FIT’s constructs are in italics. Table 8 provides example illustrative quotes and Additional file 5 contains the full descriptions of constructs, with references to supporting papers and theories. Additional file 6 provides case studies demonstrating how CP-FIT can explain the success of different feedback interventions included in the synthesis.

The feedback cycle (research objective 1)

Similar to existing feedback [14, 16, 48], goal setting [15], and information value [49] theories, we found that successful feedback exerts its effects through a series of sequential processes, each of which required a non-trivial work commitment from health professionals (Fig. 3). This started with choosing standards of clinical performance against which care would be measured (Goal setting), followed by collection and analysis of clinical performance data (Data collection and analysis); communication of the measured clinical performance to health professionals (Feedback); reception, comprehension, and acceptance of this by the recipient (Interaction, Perception, and Acceptance respectively); a planned behavioural response based on the feedback (Intention and Behaviour); and ultimately positive changes to patient care (Clinical performance improvement). A further step of Verification could occur between Perception and Acceptance where recipients interrogated the data underlying their feedback (e.g. [50]). The cycle then repeated, usually starting with further Data collection and analysis. Feedback interventions became less effective if any of the above processes failed, halting progress round the cycle. For example, if Data collection was not conducted (e.g. [51]), or a recipient did not Accept the feedback they were given (e.g. [52]; Table 8, quote 1).
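The sequential nature of the cycle can be sketched in a few lines of Python. This is an illustrative reading of the description above rather than part of CP-FIT itself: the function `run_cycle` and its `succeeds` input are hypothetical names, and the optional Verification step is left out for brevity.

```python
from typing import Dict, List, Optional

# Process names follow the feedback cycle as described in the text.
# Verification (an optional step between Perception and Acceptance) is omitted.
CYCLE: List[str] = [
    "Goal setting",
    "Data collection and analysis",
    "Feedback",
    "Interaction",
    "Perception",
    "Acceptance",
    "Intention",
    "Behaviour",
    "Clinical performance improvement",
]


def run_cycle(succeeds: Dict[str, bool]) -> Optional[str]:
    """Walk the cycle in order; return the first process that fails, or None.

    `succeeds` is a hypothetical input mapping a process name to whether it
    succeeded; processes not listed are assumed to succeed.
    """
    for process in CYCLE:
        if not succeeds.get(process, True):
            return process  # progress round the cycle halts here
    return None


# Example: a recipient does not accept the feedback they were given.
print(run_cycle({"Acceptance": False}))  # Acceptance
```

Because the processes are checked in order, an early failure (for example in Data collection and analysis) masks any later one, which mirrors the idea that later processes are never reached once the cycle halts.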

In addition to potentially improving clinical performance, we found both positive and negative unintended outcomes associated with feedback. Health care organisations often noted improved record-keeping (e.g. [53]), and recipient knowledge and awareness of the feedback topic (e.g. [54]). However, feedback could also result in Gaming, where health professionals manipulated clinical data or changed the patient population being measured to artificially improve their performance (e.g. [55]), or Tunnel vision, where health professionals excessively focused on the feedback topic to the detriment of other clinical areas [56, 57] (Table 8, quote 2).

Feedback variables (research objective 2)

We found four feedback variables that influenced progress round the feedback cycle: (1) the goal, (2) data collection and analysis methods, (3) feedback display, and (4) feedback delivery.

Goal

This variable refers to the clinical topic and the associated clinical behaviours or patient outcomes measured by the feedback intervention. For example, the proportion of diabetic patients with controlled cholesterol in primary care [58], or whether nutritional advice is provided to nursing home residents [59]. Similar to feedback-specific [15] and general behaviour change theories [60, 61], we found Acceptance and Intention more likely when feedback measured aspects of care recipients thought were clinically meaningful (Importance; Table 8, quote 3). Acceptance and Intention were also more likely when feedback targeted goals within the control of recipients (Controllability e.g. [62]) [48, 63] and that were relevant to their job (Relevance e.g. [64]) [65, 66].

Data collection and analysis method

We found the Data collection and analysis process was inhibited when it was undertaken by feedback recipients themselves (Conducted by recipients e.g. [67]) or performed manually (Automation e.g. [68]), often due to a lack of time or skills. In extreme cases, the Goal setting process was re-visited in order to find more suitable methods (e.g. [69]).

We found Acceptance was more likely when recipients believed the data collection and analysis process produced a true representation of their clinical performance (Accuracy) [48], which often related to the positive predictive value of the feedback (i.e. its ability to correctly highlight areas of clinical performance requiring improvement). If perceived Accuracy was low, recipients were also more likely to undertake Verification (Table 8, quote 4).
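For reference, the standard definition of positive predictive value is given below. Reading a “positive” as an area of clinical performance that the feedback flags as requiring improvement is our illustrative interpretation of the text, not a formula reported by the included studies.

```latex
\[
\mathrm{PPV} = \frac{\text{true positives}}{\text{true positives} + \text{false positives}}
\]
```

Under this reading, a true positive is a flagged area that genuinely requires improvement, and a false positive is a flagged area that does not.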

Likewise, Acceptance was facilitated when feedback recipients could exception report patients they felt were inappropriate to include in feedback (Exclusions e.g. [70]) [56]. Potential reasons for exception reporting are discussed in the “Patient population” section.
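A minimal sketch of how exception reporting changes a performance score is shown below. The `Patient` class, the `performance` function, and the five-patient data set are hypothetical and chosen only for illustration; real feedback interventions define their numerators and denominators in their own ways.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Patient:
    met_goal: bool          # care met the measured standard
    excluded: bool = False  # exception reported by the feedback recipient


def performance(patients: List[Patient], apply_exclusions: bool) -> float:
    """Proportion of counted patients whose care met the goal."""
    counted = [p for p in patients if not (apply_exclusions and p.excluded)]
    if not counted:
        raise ValueError("no patients left in the denominator")
    return sum(p.met_goal for p in counted) / len(counted)


# Hypothetical data: three patients met the goal, two did not,
# and one of those two was exception reported.
patients = [
    Patient(met_goal=True),
    Patient(met_goal=True),
    Patient(met_goal=True),
    Patient(met_goal=False),
    Patient(met_goal=False, excluded=True),
]
print(performance(patients, apply_exclusions=False))  # 0.6
print(performance(patients, apply_exclusions=True))   # 0.75
```

Removing the exception-reported patient from the denominator raises the score from 0.6 to 0.75 in this toy example, which is one way a recipient might come to regard the resulting figure as a fairer representation of their care.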

Feedback display

We found Intention and Behaviour were more likely when feedback communicated recipients’ performance level had room for improvement (Performance level). This violated their perception they delivered high-quality care, thus providing motivation and opportunity to change (e.g. [64]) [16, 56, 61]. It also encouraged Verification as recipients often wanted to clarify this alternative view of their achievements themselves (e.g. [50]). We found some support for theories that suggested the feedback process could be inhibited if performance was so extreme that improvement was unlikely [16, 56]: for example, non-Acceptance if current performance was too low (e.g. [71]), or Goal setting re-visited if performance was too high (e.g. [53]); though these findings were inconsistent.

Feedback that detailed the patients included in the clinical performance calculation (Patient lists) facilitated Verification, Perception, Intention, and Behaviour by enabling recipients to understand how suboptimal care may have occurred, helping them take corrective action (where possible) for those patients and learn lessons for the future (e.g. [50]). It also facilitated Acceptance by increasing transparency and trustworthiness of the feedback methodology [48] (Table 8, quote 5).

Feedback focusing on the performance of individual health professionals rather than their wider team or organisation increased Acceptance, Intention, and Behaviour because, similar to Controllability and Relevance (see “Goal” section), it was more likely to highlight situations for which they had responsibility (Specificity e.g. [72]) [48]. Using recent data to calculate recipients’ current performance (Timeliness) had a similar effect because it was based on what recipients could change currently, rather than events that had long passed (e.g. [50]).

Feedback often compared recipients’ current performance to other scores, such as their past performance (Trend e.g. [73]), others’ performance (Benchmarking e.g. [74]), or an expected standard (usually determined by experts; Target e.g. [75]). We found that Trend facilitated Perception by helping recipients interpret their current performance in a historical context [16, 76]. Benchmarking worked in a similar fashion by helping recipients understand how they performed relative to other health professionals or organisations, stimulating Intention and Behaviour because they wanted to do better than their colleagues and neighbours [77]. Benchmarking also worked by motivating recipients to maintain their social status when they saw others in their peer group behaving differently [78, 79]. These findings contradicted Feedback Intervention Theory, which predicts that drawing attention to others’ performance reduces the impact of feedback [16]. It was unclear whether Benchmarking was more effective when the identities of the health professionals were visible to each other, or against which health professionals recipients’ performance should be compared. We found only minimal evidence that Targets influenced feedback effectiveness despite their prominence in existing feedback theories [14,15,16].
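The three comparators can be illustrated with a short Python sketch. Everything here is an assumption made for illustration: the function name `compare`, the use of the most recent past score for Trend, the use of the peer median for Benchmarking, and the example scores (expressed as whole percentages) are not taken from the included studies.

```python
from statistics import median
from typing import Dict, List


def compare(current: int, past: List[int], peers: List[int], target: int) -> Dict[str, int]:
    """Express current performance relative to three reference scores.

    All scores are whole percentages. Positive values mean current
    performance is above the reference.
    """
    return {
        "trend": current - past[-1],           # versus most recent past score
        "benchmark": current - median(peers),  # versus peer median (assumed)
        "target": current - target,            # versus expected standard
    }


# Hypothetical scores: improving over time, but below peers and target.
print(compare(current=72, past=[60, 65], peers=[70, 80, 90], target=75))
# {'trend': 7, 'benchmark': -8, 'target': -3}
```

In this toy case the same score reads as an improvement against Trend but as a shortfall against Benchmarking and Target, which shows why the choice of comparator can shape how recipients perceive their performance.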

Feedback was more effective when it communicated the relative importance of its contents (Prioritisation) and employed user-friendly designs (Usability) [80, 81], because it reduced cognitive load by helping recipients decide what aspects of their performance required attention (e.g. [55, 82]) [83]. Studies provided little detail on how this could be practically achieved, though strategies may include limiting the number of clinical topics in the feedback (Number of metrics e.g. [55]) or using charts (Graphical elements e.g. [84]) [76]. We found insufficient evidence that feedback’s effectiveness was influenced by whether it was presented positively or negatively (Framing) [16, 48].

Feedback delivery

Recipients often rejected feedback whose purpose they believed was to punish rather than support positive change because it did not align with their inherent motivation to improve care (Function e.g. [85]) [60, 61]. Similarly, when feedback was reported to external organisations or the public, it often drew negative reactions, such as anxiety and anger, with little evidence of impact on clinical performance (External reporting e.g. [75]; Table 8, quote 6).

Acceptance was also less likely when feedback was delivered by a person or organisation perceived to have an inappropriate level of knowledge or skill (Source knowledge and skill). This could relate to the clinical topic on which feedback was provided (e.g. [86]) or quality improvement methodology (e.g. [85]) [48]. We found inconsistent evidence that the location of feedback delivery, for example whether internal or external to the recipients’ organisation, influenced effectiveness (Source location).

Feedback that was “pushed” to recipients facilitated Interaction more than feedback requiring them to “pull” it (Active delivery). For example, feedback sent by email (e.g. [87]) was received more frequently than when published in a document that was not distributed (e.g. [75]). An exception was feedback solely delivered in face-to-face meetings, as the significant time commitments often meant health professionals could not attend (e.g. [88]).

Feedback delivered to groups of health professionals improved Teamwork (see “Organisation or team characteristics” section) by promoting engagement and facilitating discussion (Delivery to a group e.g. [89]) [60, 78]. There was inconsistent evidence on the effects of how often feedback was delivered (Frequency) [5], and little insight into whether it was best delivered electronically or on paper (Medium) [5, 16].

Recipient variables (research objective 2)

We found two recipient variables that influenced progress round the feedback cycle: (1) health professional characteristics and (2) their behavioural response.

Health professional characteristics

Often health professionals did not possess the knowledge and skills to effectively engage with and respond to feedback. This included technical quality improvement skills such as interpreting data or formulating and implementing action plans, rather than the clinical topic in question (e.g. [90]). We found interventions targeting those with greater capability (both technical and clinical) were more effective because recipients were more likely to successfully proceed through the Perception, Intention, and Behaviour feedback processes (Knowledge and skills in quality improvement and the clinical topic, respectively; e.g. [91, 92]) [61, 93]. This seemed to undermine the rationale of interventions predicated on addressing health professionals’ presumed lack of clinical knowledge (e.g. [94]; Table 8, quote 7).

Understandably, health professionals with positive views on the potential benefits of feedback were more likely to engage with it (Feedback attitude e.g. [64]) [66, 93]. And although health professionals often had profound emotional reactions to feedback, both positive and negative (e.g. [85]), we found no reliable evidence that these directly influenced the feedback cycle.

Behavioural response

We found two main types of action taken by recipients (if any) in response to feedback: those relating to the care of individual patients one-at-a-time (Patient-level) or those aimed at the wider health care system (Organisation-level). Patient-level behaviours included retrospectively “correcting” suboptimal care given in the past, or prospectively providing “better” care to patients in the future. For example, resolving medication safety errors by withdrawing previously prescribed medications [86] versus optimising treatment when a patient with uncontrolled diabetes is next encountered [90]. In contrast, Organisation-level behaviours focused on changing care delivery systems. For example, changing how medications are stored in hospital [87], or introducing computerised decision support software to support clinician-patient interactions [95]. We found Organisation-level behaviours often led to greater Clinical performance improvement because they enabled multiple Patient-level behaviours by augmenting the clinical environment in which they occurred [96]. For example, changing how medications were stored reduced the likelihood of delayed administration to all patients [87], and decision support software could remind clinicians how to optimally treat diabetic patients [95]. Conversely, by definition, Patient-level behaviours only ever affected one patient (Table 8, quote 8). We found no clear evidence that feedback success was affected if it required an increase, decrease, change, or maintenance of recipients’ current clinical behaviours to improve their performance (Direction) [5, 7].

Context variables (research objective 2)

We found four context variables that influenced progress round the feedback cycle: (1) organisation or team characteristics, (2) patient population, (3) co-interventions, and (4) implementation process.

Organisation or team characteristics

We found all organisations and teams had a finite supply of staff, time, finances, and equipment (e.g. [90]), stretched by the complexity of modern health care, such as serving increasing numbers of elderly multimorbid patients and dealing with wider organisational activities such as existing quality improvement initiatives and re-structures (e.g. [97]). Consequently, if an organisation had less capacity (Resource) or significant other responsibilities (Competing priorities), they were less able to interact with and respond to feedback (e.g. [90, 98]) [65, 99]. However, if senior managers advocated for the feedback intervention or individuals were present who were dedicated to ensuring it was a success, they often influenced others and provided additional resource to enable more meaningful engagement with feedback (Leadership support e.g. [87] and Champions e.g. [68], respectively; Table 8, quote 9) [78, 100].

Increased Resource and Leadership support also increased the likelihood that Organisation-level behaviours were undertaken (see “Behavioural response” section), because they often required process redesign and change management (e.g. [82]). In turn, Organisation-level behaviours also had the potential to further increase Resource, for example by recruiting new staff (e.g. [101]) or purchasing new equipment (e.g. [75]), which further increased organisations’ capacity to engage with and respond to feedback.

Feedback was more successful when members of organisations and teams worked effectively towards a common goal (Teamwork; e.g. [72]), had strong internal communication channels (Intra-organisational networks; e.g. [51]), and actively communicated with other organisations and teams (Extra-organisational networks; e.g. [86]) [65, 99]. These characteristics often co-existed and provided practical support for feedback recipients during Interaction, Perception, Intention, and Behaviour (Table 8, quote 10).

Organisations and teams also commonly had long-established systems and processes that were often difficult to change, such as methods of care delivery and technical infrastructure (e.g. [102]). Therefore, if the feedback intervention fitted alongside their existing ways of working (Workflow fit) [65, 80], it required less effort to implement (e.g. [64]).

Patient population

Health professionals felt it was inappropriate to include certain patients in their clinical performance calculation [103, 104]. For example, patients that refused the aspects of care measured by the feedback (Choice alignment; e.g. [105]), or those who already received maximal therapy or had relevant clinical contraindications (e.g. medication allergies; Clinical appropriateness; Table 8, quote 11). Including such patients in their clinical performance calculation inhibited Acceptance and Intention, with some evidence it may have also led to Gaming (see “The feedback cycle (research objective 1)” section; e.g. [101]).

Co-interventions

Synthesised papers used eight different quality improvement interventions alongside feedback (Table 4). However, only four appeared to impact feedback success because they addressed specific barriers. The provision of support for health professionals to discuss their feedback with peers (Peer discussion) and to identify reasons for and develop solutions to suboptimal performance (Problem solving and Action planning) facilitated Perception, Intention, and Behaviour. These co-interventions addressed shortcomings in health professionals’ quality improvement skills (see “Health professional characteristics” section). Peer discussion had the added benefit of improving Teamwork (see “Organisation or team characteristics” section) [60]. Such approaches often co-existed, and could be delivered in different ways, for example as didactic workshops (e.g. [89]) or led by recipients themselves (e.g. [90]), though it was unclear which was most effective (Table 8, quote 12).

Co-interventions that provided additional staff to explicitly support the implementation of feedback helped overcome time and staffing issues (see “Organisation or team characteristics” section; External change agents) [65, 99]. These personnel could either be directly involved in feedback processes (e.g. carrying out improvement actions [86]), or indirectly support recipients (e.g. facilitating Perception and Intention [94]) [106].

We found little support for education (Clinical education) or financial incentives (Financial rewards). There was some evidence that Financial rewards could negatively impact feedback success by conflicting with recipients’ motivation and sense of professionalism (e.g. [107]) [60, 61].

Implementation process

How feedback was introduced into clinical practice impacted all feedback cycle processes. Feedback tailored to the specific requirements of the health care organisation and its staff appeared more successful because it aligned with their needs and improved Workflow fit (see “Organisation or team characteristics” section; Adaptability) [65, 99]. For example, if quality indicator definitions could be amended to fit existing data sources [69] or focus on local clinical problems [91].

When training and support were provided on how to use an intervention (not the clinical topic under scrutiny; Training and support), it improved recipients’ Knowledge and skills in quality improvement (see “Health professional characteristics” section; e.g. [91]) [80, 106]. Further, if the training demonstrated the intervention’s potential benefits (Observability), recipients were also more likely to engage with it [65, 108]. These benefits could be to recipients themselves, such as improved feedback user-friendliness (Usability, the “Feedback display” section; e.g. [98]), or to patient care (e.g. [88]). Trend (see “Feedback display” section) could also increase Observability if its trajectory was positive (Table 8, quote 13) [109].

Interventions considered “expensive” to deploy, in terms of time, human, or financial resources, were generally less effective because they required more resource or effort (Cost) [80, 99]. Examples of expensive interventions included when data collection was Conducted by recipients (see “Data collection and analysis method” section; e.g. [67]) or when feedback was delivered solely face-to-face (see “Feedback delivery” section; e.g. [88]).

We found more consistent evidence to support interventions that made recipients feel like they “owned” the feedback intervention rather than those imposed via external policies or directives [65, 99] because they harnessed their autonomy and internal motivation to improve patient care (Ownership) [60, 61]. Despite this, we found little support for seeking input from recipients into the design and implementation of feedback (Linkage at the development stage) [65].

Mechanisms (research objective 3)

We found seven explanatory mechanisms through which the above variables operated. Many mirrored constructs from existing theories of context and implementation [65, 99, 108], and variables often effected change through multiple mechanisms (Table 5).

Complexity

Complexity refers to how straightforward it was to undertake each feedback cycle process. This could relate to the number of steps required or how difficult they were to complete. Simple feedback facilitated all feedback cycle processes.

Relative advantage

Relative advantage refers to whether health professionals perceived the feedback to have an advantage over alternative ways of working, including other feedback interventions. Understandably, variables operating via this mechanism depended on the specific circumstances into which they were implemented. Relative advantage facilitated all feedback cycle processes.

Resource match

Resource match details whether health professionals, organisations, and teams had adequate resources to meet those required to engage with and respond to the feedback intervention. It included time, staff capacity and skills, equipment, physical space, and finances. When Resource match was achieved, all feedback cycle processes were facilitated.

Compatibility

Compatibility characterises the degree to which the feedback interventions aligned with the beliefs, values, needs, systems, and processes of the health care organisations and their staff. Compatibility facilitated all feedback cycle processes.

Credibility

Credibility was how health professionals perceived the trustworthiness and reliability of the feedback. Recipients were more likely to believe and engage with credible feedback [48], which facilitated Interaction, Verification, Acceptance, Intention, and Behaviour.

Social influence

Social influence specifies how much the feedback harnessed the social dynamics of health care organisations and teams. Exploiting Social influence could facilitate all feedback cycle processes.

Actionability

Actionability describes how easily health professionals could take action in response to feedback and in turn how directly that action influenced patient care. Actionability facilitated Intention, Behaviour, and Clinical performance improvement.
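The relationships stated in this section can be restated as a small lookup structure. The following Python sketch is illustrative only: the names `PROCESSES`, `FACILITATES`, and `mechanisms_for` are hypothetical, and the position of Verification in the process list is an assumption (this section names it alongside the other processes without giving its place in the cycle). It encodes only what the paragraphs above state and is not a substitute for Table 5.

```python
# Feedback cycle processes named in the text. "Verification" is named in the
# Credibility paragraph; its position in this tuple is an assumption.
PROCESSES = (
    "Goal setting",
    "Data collection and analysis",
    "Feedback",
    "Interaction",
    "Perception",
    "Verification",
    "Acceptance",
    "Intention",
    "Behaviour",
    "Clinical performance improvement",
)

ALL = frozenset(PROCESSES)

# Which processes each mechanism is said to facilitate in the section above.
FACILITATES = {
    "Complexity": ALL,  # more precisely: *simple* feedback facilitates all processes
    "Relative advantage": ALL,
    "Resource match": ALL,
    "Compatibility": ALL,
    "Credibility": frozenset(
        {"Interaction", "Verification", "Acceptance", "Intention", "Behaviour"}
    ),
    "Social influence": ALL,
    "Actionability": frozenset(
        {"Intention", "Behaviour", "Clinical performance improvement"}
    ),
}


def mechanisms_for(process):
    """Return, in alphabetical order, the mechanisms said to facilitate a process."""
    if process not in ALL:
        raise ValueError(f"Unknown feedback cycle process: {process!r}")
    return sorted(m for m, procs in FACILITATES.items() if process in procs)


if __name__ == "__main__":
    for name in PROCESSES:
        print(f"{name}: {', '.join(mechanisms_for(name))}")
```

Read this way, Behaviour and Intention are the only processes facilitated by all seven mechanisms, whereas early processes such as Goal setting are facilitated only by the five mechanisms described as acting on the whole cycle.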

Propositions (research objective 4)

We distilled the above hypotheses of how context and intervention variables influenced feedback cycle processes (Table 5) into three propositions that govern the effects of feedback interventions (Table 6). Each proposition summarised multiple variable hypotheses, but related only to its own set of explanatory mechanisms, with no mechanism shared between propositions.

Discussion

Summary of findings

CP-FIT describes causal pathways of feedback effectiveness synthesised from 65 qualitative studies of 73 interventions (Table 4), and 30 pre-existing theories (Table 7). It states that effective feedback is a cyclical process of Goal setting, Data collection and analysis, Feedback, recipient Interaction, Perception, and Acceptance of the feedback, followed by Intention, Behaviour, and Clinical performance improvement (the feedback cycle; Fig. 3). Feedback becomes less effective if any individual process fails, causing progress round the cycle to stop, and is influenced by variables relating to the feedback itself (its Goal, Data collection and analysis methods, Feedback display, and Feedback delivery), the recipient (Health professional characteristics, and Behavioural response), and context (Organisation or team characteristics, Patient population, Co-interventions, and Implementation process). These variables exert their effects via explanatory mechanisms of Complexity, Relative advantage, Resource match, Compatibility, Credibility, Social influence, and Actionability (Table 5) and are summarised by three propositions (Table 6).
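The idea that a single failed process halts progress round the cycle can be illustrated with a minimal Python sketch. It is a simplification under stated assumptions: each process is treated as simply succeeding or failing, processes without a recorded outcome are assumed to succeed, and the names `CYCLE`, `first_failed_process`, and `processes_reached` are hypothetical rather than part of CP-FIT.

```python
# Feedback cycle processes in the order given in the summary above.
CYCLE = (
    "Goal setting",
    "Data collection and analysis",
    "Feedback",
    "Interaction",
    "Perception",
    "Acceptance",
    "Intention",
    "Behaviour",
    "Clinical performance improvement",
)


def first_failed_process(outcomes):
    """Return the first process (in cycle order) recorded as failed, else None.

    `outcomes` maps process names to True (succeeded) or False (failed).
    Processes missing from `outcomes` are assumed to have succeeded.
    """
    for process in CYCLE:
        if not outcomes.get(process, True):
            return process
    return None


def processes_reached(outcomes):
    """Return the processes reached before the cycle halts, including the failed one."""
    reached = []
    for process in CYCLE:
        reached.append(process)
        if not outcomes.get(process, True):
            break
    return reached


if __name__ == "__main__":
    # Hypothetical example: recipients receive and perceive the feedback but do not accept it.
    example = {"Acceptance": False}
    print("Cycle halts at:", first_failed_process(example))
    print("Processes reached:", processes_reached(example))
```

In a process evaluation, a structure like this could help locate the weak point in an intervention's logic model: everything downstream of the first failed process is never reached, however well it was designed.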

Applying CP-FIT in practice and research

Each of Table 5’s 42 high-confidence hypotheses can be viewed as a specific design recommendation to increase feedback effectiveness. For example, hypothesis 12 (Trend) recommends feedback should display recipients’ current performance in relation to their past performance; hypothesis 17 (Source knowledge and skill) recommends feedback should be delivered by a person or organisation perceived as having an appropriate level of knowledge or skill by recipients; and hypothesis 26 (Leadership support) recommends that feedback interventions should seek the support of senior managers in health care organisations when implemented. For practitioners and policy-makers, CP-FIT therefore provides guidance they should consider when developing and deploying feedback interventions. This includes national clinical audits (e.g. [110, 111]), pay-for-performance programmes (e.g. [112, 113]), and learning health systems (where routinely collected health care data is analysed to drive continuous improvement [114]). Such programmes are large-scale, address impactful clinical problems (e.g. cardiovascular mortality or antimicrobial resistance) [9, 10], and require substantial expenditure to develop and maintain (e.g. data collection and analysis infrastructure) [4, 115]. Using CP-FIT thus has the potential to improve care for large numbers of patients, in addition to reducing the opportunity cost from unsuccessful feedback initiatives and improving returns on health care systems’ financial investments.

Table 5’s hypotheses can also be translated into explanations of why feedback may or may not have been effective. Additional file 6 provides examples of how to do this by presenting three case studies of different feedback interventions included in our meta-synthesis [74, 86, 116], and using CP-FIT to explain their successes and failures. CP-FIT can therefore help researchers and feedback evaluators assess and explain feedback’s observed or predicted effects. Specifically for qualitative methodologists, Additional file 5 provides a comprehensive codebook that can be used to analyse data and discover causal pathways. For quantitative investigators, both Table 5 and Additional file 5 provide over 200 potentially falsifiable hypotheses to test. As illustrated in Additional file 6, CP-FIT may be particularly useful in process evaluations to identify weak points in a feedback intervention’s logic model (i.e. the feedback cycle; Fig. 3) [17, 117] and barriers and facilitators to its use (i.e. its variables) [11].

Although developed specifically for feedback, CP-FIT may also have relevance to other quality improvement strategies that analyse patient data and communicate those analyses to health professionals in order to effect change. Examples include computerised clinical decision support and educational outreach [118], where CP-FIT concepts such as Accuracy (see “Data collection and analysis method” section), Timeliness (see “Feedback display” section), Credibility (see “Credibility” section), and Actionability (see “Actionability” section) may all be important. CP-FIT concepts related to population-level feedback (e.g. Benchmarking and Trend; the “Feedback display” section) may be less relevant when the focus of the intervention is on individual patient-level care, such as in clinical decision support [18].

Comparison to existing literature

Table 9 shows how CP-FIT may explain the variation in feedback effectiveness found in the latest Cochrane review [8]. CP-FIT also suggests further sources of variation, not identified in that review, that could be operationalised in a future update: for example, whether feedback allows Exclusions or provides Patient lists (see “Data collection and analysis method” and “Feedback display” sections, respectively).

CP-FIT aligns well with tentative best practices for effective feedback interventions [5, 11] and provides potential evidence-based explanations as to why they may work (Table 10). It also provides additional potential recommendations such as automating data collection and analysis (Automation; see “Data collection and analysis method” section) and gaining leadership support (Leadership support; see “Organisation or team characteristics” section). An advantage of CP-FIT over these existing best practice recommendations is that it provides parsimonious generalisable principles (in the form of its explanatory mechanisms and propositions; see “Mechanisms (research objective 3)” and “Propositions (research objective 4)” sections, respectively). Consequently, CP-FIT’s hypotheses can be extended beyond those in Table 5 if they conform to these constructs. For example, the Complexity (see “Complexity” section) of a feedback intervention’s targeted clinical behaviour (Goal; see “Goal” section) may be reasonably expected to influence its effectiveness [80, 119], despite not being a consistent finding in our synthesis.

Table 7 demonstrates how pre-existing theories contribute to, and overlap with, CP-FIT. In comparison to other theories used to model clinical performance feedback [14, 15, 16, 48], CP-FIT adds value for health care settings by specifying potential unintended consequences (see “The feedback cycle (research objective 1)” section); detailing new context-related constructs, for example in relation to the organisation or team (see “Organisation or team characteristics” section); and elaborating on specific aspects of the feedback process, for example data collection and analysis (see “Data collection and analysis method” section). This wider and more detailed view may explain why CP-FIT occasionally provides different predictions [14, 16, 48]: for example, Feedback Intervention Theory predicts the presentation of others’ performance (Normative information) decreases effectiveness by diverting attention away from the task at hand [16], whereas CP-FIT states it does the opposite by harnessing the social dynamics between recipients (Benchmarking; see “Feedback display” section).

To our knowledge, a systematic search and synthesis of qualitative evaluations of feedback interventions has not been previously undertaken. However, two reviews exploring the use of patient-reported outcome measure (PROM) feedback in improving patient care have been recently published [120, 121]. Although neither explicitly attempted to develop theory, their main findings can be mapped to CP-FIT constructs. Boyce et al. [120] found there were practical difficulties in collecting and managing PROMs data related to an organisation’s resources and compatibility with existing workflows (cf. CP-FIT Propositions 1 and 2, respectively; Table 6), whereas Greenhalgh et al. [121] noted “actionability” as a key characteristic in the effective use of PROM data (cf. CP-FIT Proposition 3; Table 6). Both noted that the “credibility” of the data, and of the source from which it was fed back, was essential to securing health professionals’ acceptance (cf. CP-FIT’s Credibility; see “Credibility” section).

Colquhoun et al. generated 313 theory-informed hypotheses about feedback interventions by interviewing subject experts [122]. Many of the hypotheses appear in CP-FIT (e.g. feedback will be more effective if patient-specific information is provided; cf. CP-FIT’s Patient lists; see “Feedback display” section), though some are contradictory (e.g. feedback will be less effective when presented to those with greater expertise; cf. CP-FIT’s Knowledge and skills in quality improvement and clinical topic; see “Health professional characteristics” section) [122]. A possible explanation is that Colquhoun et al.’s hypotheses were informed by disparate research paradigms (including those outside health care), whereas CP-FIT attempts to develop a unifying theory based on empirical evaluations of feedback interventions. Work is ongoing to prioritise these hypotheses for empirical testing [122], which will also help further validate CP-FIT.

Limitations

Like all literature syntheses, our findings reflect only what has been reported by their constituent studies. Consequently, CP-FIT may not include features of feedback interventions or contexts associated with effectiveness that have been under-reported. This may manifest by such findings being absent, having “low” or “moderate” GRADE-CERQual ratings (Additional file 5), or having unclear effects (e.g. Frequency [see “Feedback delivery” section] or Direction [see “Behavioural response” section]). For similar reasons CP-FIT’s current form may also lack detail regarding certain construct definitions (e.g. how is good Usability [see “Feedback display” section] or effective Action planning [see “Co-interventions” section] best achieved?), how particular variables may be manipulated in practice (e.g. how can we persuade health professionals of a feedback topic’s Importance [see “Goal” section] or to undertake Organisation-level as well as Patient-level behaviour [see “Behavioural response” section]?), and inherent tensions within the theory (e.g. how do we ensure Compatibility [see “Compatibility” section] whilst also attempting to change health professional behaviour?). Future research should address these evidence gaps by evaluating innovative new feedback designs delivered in different contexts, employing both robust qualitative and quantitative approaches, using CP-FIT as a framework.

Finally, CP-FIT does not currently quantify the relative effect sizes of its variables and mechanisms. It is possible that variables appearing to influence feedback effectiveness with “high” GRADE-CERQual confidence may in fact have negligible effects on patient care. Consequently, future work should aim to quantitatively test CP-FIT’s hypotheses and refine its assumptions.

Conclusions

The advent of electronic health records and web-based technologies has resulted in widespread use and expenditure on feedback interventions [4, 115]. Whilst there is pressure to provide higher quality with lower costs, the messy reality of health care means feedback initiatives have varying success [8]. This results in missed opportunities to improve care for large populations of patients (e.g. [9, 10]) and see returns on financial investments. Feedback interventions are often as complex as the health care environments in which they operate, with multiple opportunities and reasons for failure (Fig. 3 and Table 5). To address these challenges, we have presented the first reported qualitative meta-synthesis of real-world feedback interventions and used the results to develop the first comprehensive theory of feedback designed specifically for health care. CP-FIT contributes new knowledge on how feedback works in practice (research objective 1), and on the variables that influence its effects and the mechanisms through which they operate (research objectives 2 and 3, respectively), in a parsimonious and usable way (research objective 4). CP-FIT meets the definition of a theory provided in the “Background” section [13] because it (1) coherently describes the processes of clinical performance feedback (see “The feedback cycle (research objective 1)” section and Fig. 3), (2) was arrived at by inferring causal pathways of effectiveness and ineffectiveness from 65 studies of 73 feedback interventions (as detailed in Additional file 3), (3) can provide explanations as to why feedback interventions were effective or ineffective (as demonstrated by the case studies in Additional file 6), and (4) generates predictions about what factors make feedback interventions more or less effective (see hypotheses in Table 5 and Additional file 5). We hope our findings can help feedback designers and practitioners build more effective interventions, in addition to helping evaluators discern why a particular initiative may (not) have been successful. We strongly encourage further research to test CP-FIT whilst exploring its applicability to other quality improvement strategies, refining and extending it where appropriate.

Abbreviations

- CP-FIT: Clinical Performance Feedback Intervention Theory

- PROM: Patient-reported outcome measure

- PROSPERO: Prospective Register of Systematic Reviews

References

Boaden R, Harvey G, Moxham C, Proudlove N. Quality improvement: theory and practice in healthcare. London: NHS Institute for Innovation and Improvement; 2008.

Scrivener R, Morrell C, Baker R, Redsell S, Shaw E, Stevenson K, et al. Principles for best practice in clinical audit. Oxon: Radcliffe Medical Press; 2002.

Freeman T. Using performance indicators to improve health care quality in the public sector: a review of the literature. Health Serv Manag Res. 2002;15(May):126–37.

Dowding D, Randell R, Gardner P, Fitzpatrick G, Dykes P, Favela J, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform. 2015;84(2):87–100.

Ivers NM, Sales A, Colquhoun H, Michie S, Foy R, Francis JJ, et al. No more ‘business as usual’ with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9(1):14.

Pawson R, Tilley N. Realistic evaluation. London: Sage Publications; 1997. p. 256.

Ivers NM, Grimshaw JM, Jamtvedt G, Flottorp S, O’Brien MA, French SD, et al. Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29:1534 (Table 1).

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. In: Ivers N, editor. Cochrane database of systematic reviews. Chichester: Wiley; 2012. p. 1–227.

Farley TA, Dalal MA, Mostashari F, Frieden TR. Deaths preventable in the U.S. by improvements in use of clinical preventive services. Am J Prev Med. 2010;38(6):600–9.

O’Neill J. Antimicrobial resistance: tackling a crisis for the health and wealth of nations. London: Review on Antimicrobial Resistance; 2014.

Brehaut JC, Colquhoun HL, Eva KW, Carroll K, Sales A, Michie S, et al. Practice feedback interventions: 15 suggestions for optimizing effectiveness. Ann Intern Med. 2016;164:435–41.

Colquhoun HL, Brehaut JC, Sales A, Ivers N, Grimshaw J, Michie S, et al. A systematic review of the use of theory in randomized controlled trials of audit and feedback. Implement Sci. 2013;8(1):66.

Michie S, West R, Campbell R, Brown J, Gainforth H. ABC of behaviour change theories. London: Silverback Publishing; 2014.

Carver CS, Scheier MF. Control theory: a useful conceptual framework for personality-social, clinical, and health psychology. Psychol Bull. 1982;92:111–35.

Locke EA, Latham GP. Building a practically useful theory of goal setting and task motivation. A 35-year odyssey. Am Psychol. 2002;57:705–17.

Kluger A, DeNisi A. The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119:254–84.

Moore G, Audrey S, Barker M, Bonell C, Hardeman W, Moore L, et al. Process evaluation of complex interventions: UK Medical Research Council (MRC) guidance. 2014.

Goud R, van Engen-Verheul M, de Keizer NF, Bal R, Hasman A, Hellemans IM, et al. The effect of computerized decision support on barriers to guideline implementation: a qualitative study in outpatient cardiac rehabilitation. Int J Med Inform. 2010;79(6):430–7.

Millery M, Shelley D, Wu D, Ferrari P, Tseng T-Y, Kopal H. Qualitative evaluation to explain success of multifaceted technology-driven hypertension intervention. Am J Manag Care. 2011;17(12):SP95–102.

Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Med Res Methodol. 2009;9:59.

Noyes J, Popay J. Directly observed therapy and tuberculosis: how can a systematic review of qualitative research contribute to improving services? A qualitative meta-synthesis. J Adv Nurs. 2007;57(3):227–43.

Fishwick D, McGregor M, Drury M, Webster J, Rick J, Carroll C. BOHRF smoking cessation review. Buxton: Health and Safety Laboratory; 2012.

Lorenc T, Pearson M, Jamal F, Cooper C, Garside R. The role of systematic reviews of qualitative evidence in evaluating interventions: a case study. Res Synth Methods. 2012;3(1):1–10.

Lins S, Hayder-Beichel D, Rucker G, Motschall E, Antes G, Meyer G, et al. Efficacy and experiences of telephone counselling for informal carers of people with dementia. Cochrane Database Syst Rev. 2014;9:CD009126.

Brown B, Jameson D, Daker-White G, Buchan I, Ivers N, Peek N, et al. A meta-synthesis of findings from qualitative studies of audit and feedback interventions (CRD42015017541). PROSPERO International prospective register of systematic reviews 2015.

Walters LA, Wilczynski NL, Haynes RB. Developing optimal search strategies for retrieving clinically relevant qualitative studies in EMBASE. Qual Health Res. 2006;16(1):162–8.

Wilczynski NL, Marks S, Haynes RB. Search strategies for identifying qualitative studies in CINAHL. Qual Health Res. 2007;17(5):705–10.

UTHealth. Search filters for various databases: Ovid Medline [Internet]: The University of Texas. University of Texas School of Public Health Library; 2014. Available from: http://libguides.sph.uth.tmc.edu/ovid_medline_filters. [Cited 8 Jan 2015]

Boyce MB, Browne JP. Does providing feedback on patient-reported outcomes to healthcare professionals result in better outcomes for patients? A systematic review. Qual Life Res. 2013;22(9):2265–78.

Landis-Lewis Z, Brehaut JC, Hochheiser H, Douglas GP, Jacobson RS. Computer-supported feedback message tailoring: theory-informed adaptation of clinical audit and feedback for learning and behavior change. Implement Sci. 2015;10(1):1–12.

Foy R, Eccles MP, Jamtvedt G, Young J, Grimshaw JM, Baker R. What do we know about how to do audit and feedback? Pitfalls in applying evidence from a systematic review. BMC Health Serv Res. 2005;5:50.

Foy R, MacLennan G, Grimshaw J, Penney G, Campbell M, Grol R. Attributes of clinical recommendations that influence change in practice following audit and feedback. J Clin Epidemiol. 2002;55(7):717–22.

Gardner B, Whittington C, McAteer J, Eccles MP, Michie S. Using theory to synthesise evidence from behaviour change interventions: the example of audit and feedback. Soc Sci Med. 2010;70(10):1618–25.

Hysong SJ. Meta-analysis: audit and feedback features impact effectiveness on care quality. Med Care. 2009;47(3):356–63.

van der Veer SN, de Keizer NF, Ravelli ACJ, Tenkink S, Jager KJ. Improving quality of care. A systematic review on how medical registries provide information feedback to health care providers. Int J Med Inform. 2010;79(5):305–23.

Brehaut JC, Eva KW. Building theories of knowledge translation interventions: use the entire menu of constructs. Implement Sci. 2012;7(1):114.

Lipworth W, Taylor N, Braithwaite J. Can the theoretical domains framework account for the implementation of clinical quality interventions? BMC Health Serv Res. 2013;13:530.

Booth A. Chapter 3: searching for studies. In: Booth A, Hannes K, Harden A, Harris J, Lewin S, Lockwood C, editors. Supplementary guidance for inclusion of qualitative research in Cochrane Systematic Reviews of Interventions. Adelaide: Cochrane Collaboration Qualitative Methods Group; 2011.

Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95.

Colquhoun H, Michie S, Sales A, Ivers N, Grimshaw JM, Carroll K, et al. Reporting and design elements of audit and feedback interventions: a secondary review. BMJ Qual Saf. 2016;(January):1–7.

Walsh D, Downe S. Appraising the quality of qualitative research. Midwifery. 2006;22(2):108–19.

Campbell R, Pound P, Morgan M, Daker-White G, Britten N, Pill R, et al. Evaluating meta-ethnography: systematic analysis and synthesis of qualitative research. Health Technol Assess. 2011;15:1–164.

Ritchie J, Spencer L. Qualitative data analysis for applied policy research. In: Bryman A, Burgess R, editors. Analysing Qualitative Data. London: Routledge; 1994. p. 173–94.

Pawson R. Evidence-based policy: a realist perspective. London: SAGE Publications; 2006.

Byng R, Norman I, Redfern S. Using realistic evaluation to evaluate a practice-level intervention to improve primary healthcare for patients with long-term mental illness. Evaluation. 2005;11(1):69–93.

Lewin S, Glenton C, Munthe-Kaas H, Carlsen B, Colvin CJ, Gülmezoglu M, et al. Using qualitative evidence in decision making for health and social interventions: an approach to assess confidence in findings from qualitative evidence syntheses (GRADE-CERQual). PLoS Med. 2015;12(10):e1001895.

Ilgen DR, Fisher CD, Taylor MS. Consequences of individual feedback on behavior in organizations. J Appl Psychol. 1979;64(4):349–71.

Coiera E. Designing and evaluating information and communication systems. Guide to health informatics. 3rd ed. Boca Raton: CRC Press; 2015. p. 151–4.

Payne VL, Hysong SJ. Model depicting aspects of audit and feedback that impact physicians’ acceptance of clinical performance feedback. BMC Health Serv Res. 2016;16(1):260.

Nouwens E, van Lieshout J, Wensing M. Determinants of impact of a practice accreditation program in primary care: a qualitative study. BMC Fam Pract. 2015;16:78.

Boyce MB, Browne JP, Greenhalgh J. Surgeon’s experiences of receiving peer benchmarked feedback using patient-reported outcome measures: a qualitative study. Implement Sci. 2014;9(1):84.

Siddiqi K, Newell J. What were the lessons learned from implementing clinical audit in Latin America? Clin Gov An Int J. 2009;14(3):215–25.

Ramsay AIG, Turner S, Cavell G, Oborne CA, Thomas RE, Cookson G, et al. Governing patient safety: lessons learned from a mixed methods evaluation of implementing a ward-level medication safety scorecard in two English NHS hospitals. BMJ Qual Saf. 2014;23(2):136–46.

Yi SG, Wray NP, Jones SL, Bass BL, Nishioka J, Brann S, et al. Surgeon-specific performance reports in general surgery: an observational study of initial implementation and adoption. J Am Coll Surg. 2013;217(4):636–647.e1.

Festinger L. A theory of cognitive dissonance. Evanston: Row Peterson; 1957.

Steele C. The psychology of self-affirmation: sustaining the integrity of the self. Adv Exp Soc Psychol. 1988;21:261–302.

Damschroder LJ, Robinson CH, Francis J, Bentley DR, Krein SL, Rosland AM, et al. Effects of performance measure implementation on clinical manager and provider motivation. J Gen Intern Med. 2014;29(4):877–84.

Meijers JMM, Halfens RJG, Mijnarends DM, Mostert H, Schols JMGA. A feedback system to improve the quality of nutritional care. Nutrition. 2013;29(7–8):1037–41.

Deci EL, Ryan RM. Intrinsic motivation and self-determination in human behaviour. New York: Plenum Publishing Co.; 1985.

Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6(1):42.

Gort M, Broekhuis M, Regts G. How teams use indicators for quality improvement - a multiple-case study on the use of multiple indicators in multidisciplinary breast cancer teams. Soc Sci Med. 2013;96:69–77.

Rotter JB. Internal versus external control of reinforcement: a case history of a variable; 1990. p. 489–93.

Wilkinson EK, McColl A, Exworthy M, Roderick P, Smith H, Moore M, et al. Reactions to the use of evidence-based performance indicators in primary care: a qualitative study. Qual Health Care. 2000;9(3):166–74.

Greenhalgh T, Robert G, Bate P, Kyriakidou O, Macfarlane F, Peacock R. How to spread good ideas: a systematic review of the literature on diffusion, dissemination and sustainability of innovations in health service delivery and organisation. London: National Coordinating Centre for the Service Delivery and Organisation; 2004.

Venkatesh V, Bala H. Technology acceptance model 3 and a research agenda on interventions. Decis Sci. 2008;39(2):273–315.

Cameron M, Penney G, Maclennan G, McLeer S, Walker A. Impact on maternity professionals of novel approaches to clinical audit feedback. Eval Health Prof. 2007;30(1):75–95.

Palmer C, Bycroft J, Healey K, Field A, Ghafel M. Can formal collaborative methodologies improve quality in primary health care in New Zealand? Insights from the EQUIPPED Auckland Collaborative. J Prim Health Care. 2012;4(4):328–36.

Groene O, Klazinga N, Kazandjian V, Lombrail P, Bartels P. The World Health Organization Performance Assessment Tool for Quality Improvement in Hospitals (PATH): an analysis of the pilot implementation in 37 hospitals. Int J Qual Health Care. 2008;20(3):155–61.

Chadwick LM, Macphail A, Ibrahim JE, Mcauliffe L, Koch S, Wells Y. Senior staff perspectives of a quality indicator program in public sector residential aged care services: a qualitative cross-sectional study in Victoria, Australia. Aust Health Rev. 2016;40(1):54–62.

Kristensen H, Hounsgaard L. Evaluating the impact of audits and feedback as methods for implementation of evidence in stroke rehabilitation. Br J Occup Ther. 2014;77(5):251–9.

Nessim C, Bensimon CM, Hales B, Laflamme C, Fenech D, Smith A. Surgical site infection prevention: a qualitative analysis of an individualized audit and feedback model. J Am Coll Surg. 2012;215(6):850–7.

Jeffs L, Beswick S, Lo J, Lai Y, Chhun A, Campbell H. Insights from staff nurses and managers on unit-specific nursing performance dashboards: a qualitative study. BMJ Qual Saf. 2014;23(September):1–6.

Mannion R, Goddard M. Impact of published clinical outcomes data: case study in NHS hospital trusts. BMJ. 2001;323(7307):260–3.

Kirschner K, Braspenning J, Jacobs JEA, Grol R. Experiences of general practices with a participatory pay-for-performance program: a qualitative study in primary care. Aust J Prim Health. 2013;19(2):102–6.

Vessey I. Cognitive fit: a theory-based analysis of the graphs versus tables literature. Decis Sci. 1991;22(2):219–40.

Festinger L. A theory of social comparison processes. Hum Relat. 1954;7:117–40.

Cialdini RB. Influence: the psychology of persuasion. Cambridge: Collins; 2007. p. 1–30.

Merton RK. Continuities in the theory of reference groups and social structure. In: Merton RK, editor. Social theory and social structure. Glencoe: Free Press; 1957.

Ammenwerth E, Iller C, Mahler C. IT-adoption and the interaction of task, technology and individuals: a fit framework and a case study. BMC Med Inform Decis Mak. 2006;6:3.

DeLone WH, McLean E. The DeLone and McLean model of information systems success: a ten-year update. J Manag Inf Syst. 2003;19(4):9–30.

Guldberg TL, Vedsted P, Lauritzen T, Zoffmann V. Suboptimal quality of type 2 diabetes care discovered through electronic feedback led to increased nurse-GP cooperation. A qualitative study. Prim Care Diabetes. 2010;4(1):33–9.

Sweller J, van Merrienboer JJG, Paas FGWC. Cognitive architecture and instructional design. Educ Psychol Rev. 1998;10(3):251–96.

Shepherd N, Meehan TJ, Davidson F, Stedman T. An evaluation of a benchmarking initiative in extended treatment mental health services. Aust Health Rev. 2010;34(3):328–33.

Exworthy M, Wilkinson EK, McColl A, Moore M, Roderick P, Smith H, et al. The role of performance indicators in changing the autonomy of the general practice profession in the UK. Soc Sci Med. 2003;56(7):1493–504.

Cresswell KM, Sadler S, Rodgers S, Avery A, Cantrill J, Murray SA, et al. An embedded longitudinal multi-faceted qualitative evaluation of a complex cluster randomized controlled trial aiming to reduce clinically important errors in medicines management in general practice. Trials. 2012;13(1):78.

Dixon-Woods M, Redwood S, Leslie M, Minion J, Martin GP, Coleman JJ. Improving quality and safety of care using “technovigilance”: an ethnographic case study of secondary use of data from an electronic prescribing and decision support system. Milbank Q. 2013;91(3):424–54.

Turner S, Higginson J, Oborne CA, Thomas RE, Ramsay AIG, Fulop NJ. Codifying knowledge to improve patient safety: a qualitative study of practice-based interventions. Soc Sci Med. 2014;113:169–76.

Vachon B, Desorcy B, Camirand M, Rodrigue J, Quesnel L, Guimond C, et al. Engaging primary care practitioners in quality improvement: making explicit the program theory of an interprofessional education intervention. BMC Health Serv Res. 2013;13:106.

Ivers N, Barnsley J, Upshur R, Tu K, Shah B, Grimshaw J, et al. My approach to this job is... one person at a time. Can Fam Physician. 2014;60:258–66.

Morrell C, Harvey G, Kitson A. Practitioner based quality improvement: a review of the Royal College of Nursing’s dynamic standard setting system. Qual Health Care. 1997;6(1):29–34.

Grant AM, Guthrie B, Dreischulte T. Developing a complex intervention to improve prescribing safety in primary care: mixed methods feasibility and optimisation pilot study. BMJ Open. 2014;4(1):e004153.

Ölander F, Thøgersen J. Understanding of consumer behaviour as a prerequisite for environmental protection. J Consum Policy. 1995;18(4):345–85.

Tierney S, Kislov R, Deaton C. A qualitative study of a primary-care based intervention to improve the management of patients with heart failure: the dynamic relationship between facilitation and context. BMC Fam Pract. 2014;15(153):1–10.

Powell AA, White KM, Partin MR, Halek K, Hysong SJ, Zarling E, et al. More than a score: a qualitative study of ancillary benefits of performance measurement. BMJ Qual Saf. 2014;23(February):1–8.

Ferlie E, Shortell S. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q. 2000;79(2):281–315.

Siddiqi K, Volz A, Armas L, Otero L, Ugaz R, Ochoa E, et al. Could clinical audit improve the diagnosis of pulmonary tuberculosis in Cuba, Peru and Bolivia? Tropical Med Int Health. 2008;13(4):566–78.

Hysong SJ, Knox MK, Haidet P. Examining clinical performance feedback in patient-aligned care teams. J Gen Intern Med. 2014;29(Suppl 2):S667–74.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Asch SE. Studies of independence and conformity: I. A minority of one against a unanimous majority. Psychol Monogr Gen Appl. 1956;70(9):1–70.

Wright FC, Fitch M, Coates AJ, Simunovic M. A qualitative assessment of a provincial quality improvement strategy for pancreatic cancer surgery. Ann Surg Oncol. 2011;18(3):629–35.

Dixon-Woods M, Leslie M, Bion J, Tarrant C. What counts? An ethnographic study of infection data reported to a patient safety program. Milbank Q. 2012;90(3):548–91.

Cabana MD, Rand CS, Powe NR, Wu AW, Wilson MH. Why don’t physicians follow clinical practice guidelines? JAMA. 1999;282:1458–65.

Pawson R, Greenhalgh J, Brennan C. Demand management for planned care: a realist synthesis. 2016;4(2).

Powell AA, White KM, Partin MR, Halek K, Christianson JB, Neil B, et al. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27(4):405–12.

Miller NE, Dollard J. Social learning and imitation. London: Kegan Paul; 1945.

Beckman H, Suchman AL, Curtin K, Greene RA. Physician reactions to quantitative individual performance reports. Am J Med Qual. 2006;21:192–9.

Rogers EM. Diffusion of innovations. Illinois: Free Press of Glencoe; 1962. p. 367.

Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84(2):191–215.

Healthcare Quality Improvement Partnership. The National Clinical Audit Programme. 2018.

Nelson EC, Dixon-Woods M, Batalden PB, Homa K, Van Citters AD, Morgan TS, et al. Patient focused registries can improve health, care, and science. BMJ. 2016;354(July):1–6.

Foskett-Tharby R, Hex N, Chuter A, Gill P. Challenges of incentivising patient centred care. BMJ. 2017;359:6–11.

Roland M, Guthrie B. Quality and outcomes framework: what have we learnt? BMJ. 2016;354:i4060.

Committee on the Learning Health Care System in America. Best care at lower cost: the path to continuously learning health care in America. Washington DC: The National Academies Press; 2013. p. 27–36.

Tuti T, Nzinga J, Njoroge M, Brown B, Peek N, English M, et al. A systematic review of use of behaviour change theory in electronic audit and feedback interventions: intervention effectiveness and use of behaviour change theory. Implement Sci. 2017;12:61.

Redwood S, Ngwenya NB, Hodson J, Ferner RE, Coleman JJ. Effects of a computerized feedback intervention on safety performance by junior doctors: results from a randomized mixed method study. BMC Med Inform Decis Mak. 2013;13(1):63.

Gude W, van der Veer S, de Keizer N, Coiera E, Peek N. Optimising digital health informatics through non-obtrusive quantitative process evaluation. Stud Health Technol Inform. 2016;228:594.

Brown B, Peek N, Buchan I. The case for conceptual and computable cross-fertilization between audit and feedback and clinical decision support. Stud Health Technol Inform. 2015;216:419–23.

Goodhue DL, Thompson RL. Task-technology fit and individual performance. MIS Q. 1995;19(2):213–36.

Boyce MB, Browne JP, Greenhalgh J. The experiences of professionals with using information from patient-reported outcome measures to improve the quality of healthcare: a systematic review of qualitative research. BMJ Qual Saf. 2014;23(6):508–18.

Greenhalgh J, Dalkin S, Gooding K, Gibbons E, Wright J, Meads D, et al. Functionality and feedback: a realist synthesis of the collation, interpretation and utilisation of patient-reported outcome measures data to improve patient care. Heal Serv. 2017;5(2):1.

Colquhoun HL, Carroll K, Eva KW, Grimshaw JM, Ivers N, Michie S, et al. Advancing the literature on designing audit and feedback interventions: identifying theory-informed hypotheses. Implement Sci. 2017;12(1):1–10.

Harvey G, Jas P, Walshe K. Analysing organisational context: case studies on the contribution of absorptive capacity theory to understanding inter-organisational variation in performance improvement. BMJ Qual Saf. 2015;24:48–55. https://doi.org/10.1136/bmjqs-2014-002928.

Dolan JG, Veazie PJ, Russ AJ. Development and initial evaluation of a treatment decision dashboard. BMC Med Inform Decis Mak. 2013;13:51.

Mangera A, Parys B. BAUS Section of Endourology national Ureteroscopy audit: Setting the standards for revalidation. J Clin Urol. 2013;6:45–9.

Lester HE, Hannon KL, Campbell SM. Identifying unintended consequences of quality indicators: a qualitative study. BMJ Qual Saf. 2011;20(12):1057–61.

Bowles EJA, Geller BM. Best ways to provide feedback to radiologists on mammography performance. AJR Am J Roentgenol. 2009;193(1):157–64.

Harrison CJ, Könings KD, Schuwirth L, Wass V, van der Vleuten C. Barriers to the uptake and use of feedback in the context of summative assessment. Adv Health Sci Educ. 2015;20:229–45. https://doi.org/10.1007/s10459-014-9524-6.

de Korne DF, van Wijngaarden JDH, Sol KJCA, Betz R, Thomas RC, Schein OD, et al. Hospital benchmarking: are U.S. eye hospitals ready? Health Care Manag Rev. 2012;37(2):187–98.

Friedberg MW, SteelFisher GK, Karp M, Schneider EC. Physician groups’ use of data from patient experience surveys. J Gen Intern Med. 2011;26(5):498–504.

Robinson AC, Roth RE, MacEachren AM. Designing a web-based learning portal for geographic visualization and analysis in public health. Health Informatics J. 2011;17(3):191–208.

EPOC. Data collection checklist. Oxford: Cochrane Effective Practice and Organisation of Care Review Group; 2002.

Sargeant J, Lockyer J, Mann K, Holmboe E, Silver I, Armson H, et al. Facilitated reflective performance feedback: developing an evidence- and theory-based model that builds relationship, explores reactions and content, and coaches for performance change (R2C2). Acad Med. 2015;90(12):1698.

HQIP. Criteria and indicators of best practice in clinical audit. London: Healthcare Quality Improvement Partnership; 2009.

Ahearn MD, Kerr SJ. General practitioners’ perceptions of the pharmaceutical decision-support tools in their prescribing software. Med J Aust. 2003;179(1):34–7.

Lucock M, Halstead J, Leach C, Barkham M, Tucker S, Randal C, et al. A mixed-method investigation of patient monitoring and enhanced feedback in routine practice: barriers and facilitators. Psychother Res. 2015;25(6):633–46.

Andersen RS, Hansen RP, Sondergaard J, Bro F. Learning based on patient case reviews: an interview study. BMC Med Educ. 2008;8:43.

Zaydfudim V, Dossett LA, Starmer JM, Arbogast PG, Feurer ID, Ray WA, et al. Implementation of a real-time compliance dashboard to help reduce SICU ventilator-associated pneumonia with the ventilator bundle. Arch Surg. 2009;144(7):656–62.

Carter P, Ozieranski P, McNicol S, Power M, Dixon-Woods M. How collaborative are quality improvement collaboratives: a qualitative study in stroke care. Implement Sci. 2014;9(1):32.

Hysong SJ, Best RG, Pugh JA. Audit and feedback and clinical practice guideline adherence: making feedback actionable. Implement Sci. 2006;1(1):9.

Noyes J, Booth A, Hannes K, Harden A, Harris J, Lewin S, et al. Supplementary guidance for inclusion of qualitative research in Cochrane Systematic Reviews of Interventions: Cochrane Collaboration Qualitative Methods Group; 2011.

Rodriguez HP, Von Glahn T, Elliott MN, Rogers WH, Safran DG. The effect of performance-based financial incentives on improving patient care experiences: a statewide evaluation. J Gen Intern Med. 2009;24(12):1281–8.

Prior M, Elouafkaoui P, Elders A, Young L, Duncan EM, Newlands R, et al. Evaluating an audit and feedback intervention for reducing antibiotic prescribing behaviour in general dental practice (the RAPiD trial): a partial factorial cluster randomised trial protocol. Implement Sci. 2014;9:50.

Hebron B. Audits: pitfalls and good practice. Pharmaceutical Journal. Pharmaceutical Press; 2008. p. 250.

Brand C, Lam SKL, Roberts C, Gorelik A, Amatya B, Smallwood D, et al. Measuring performance to drive improvement: development of a clinical indicator set for general medicine. Intern Med J. 2009;39(6):361–9.

Bachlani AM, Gibson J. Why not patient feedback on psychiatric services? Psychiatrist. 2011;35:117.

Schoonover-Shoffner KL. The usefulness of formal objective performance feedback: an exploration of meaning. Lawrence: University of Kansas; 1995.

Grando VT, Rantz MJ, Maas M. Nursing home staff’s views on quality improvement interventions: a follow-up study. J Gerontol Nurs. 2007;33(1):40–7.

McDonald R, Roland M. Pay for performance in primary care in England and California: comparison of unintended consequences; 2009. p. 121–7.

Ajzen I. The theory of planned behavior. Organ Behav Hum Decis Process. 1991;50(2):179–211.

Milgram S. Obedience to authority: an experimental view. New York: Harper & Row; 1974.

Berkowitz AD. The social norms approach: theory, research, and annotated bibliography; 2004.

McDonald R, White J, Marmor TR. Paying for performance in primary medical care: learning about and learning from “success” and “failure” in England and California. J Health Polit Policy Law. 2009;34(5):747–76.

Sondergaard J, Andersen M, Kragstrup J, Hansen P, Freng GL. Why has postal prescriber feedback no substantial impact on general practitioners’ prescribing practice? A qualitative study. Eur J Clin Pharmacol. 2002;58(2):133–6.

Ross JS, Williams L, Damush TM, Matthias M. Physician and other healthcare personnel responses to hospital stroke quality of care performance feedback: a qualitative study. BMJ Qual Saf. 2015. https://doi.org/10.1136/bmjqs-2015-004197.

Acknowledgments