Abstract

Background

The gap between what is known and what is practiced results in health service users not benefitting from advances in healthcare, and in unnecessary costs. A supportive context is considered a key element for successful implementation of evidence-based practices (EBP). There were no tools available for the systematic mapping of aspects of organizational context influencing the implementation of EBPs in low- and middle-income countries (LMICs). Thus, this project aimed to develop and psychometrically validate a tool for this purpose.

Methods

The development of the Context Assessment for Community Health (COACH) tool was premised on the context dimension in the Promoting Action on Research Implementation in Health Services framework, and is a derivative product of the Alberta Context Tool. Its development was undertaken in Bangladesh, Vietnam, Uganda, South Africa and Nicaragua in six phases: (1) defining dimensions and draft tool development, (2) content validity amongst in-country expert panels, (3) content validity amongst international experts, (4) response process validity, (5) translation and (6) evaluation of psychometric properties amongst 690 health workers in the five countries.

Results

The tool was validated for use amongst physicians, nurse/midwives and community health workers. The six phases of development resulted in a good fit between the theoretical dimensions of the COACH tool and its psychometric properties. The tool has 49 items measuring eight aspects of context: Resources, Community engagement, Commitment to work, Informal payment, Leadership, Work culture, Monitoring services for action and Sources of knowledge.

Conclusions

Aspects of organizational context that were identified as influencing the implementation of EBPs in high-income settings were also found to be relevant in LMICs. However, there were additional aspects of context of relevance in LMICs specifically Resources, Community engagement, Commitment to work and Informal payment. Use of the COACH tool will allow for systematic description of the local healthcare context prior implementing healthcare interventions to allow for tailoring implementation strategies or as part of the evaluation of implementing healthcare interventions and thus allow for deeper insights into the process of implementing EBPs in LMICs.

Similar content being viewed by others

Background

The 2012 World Health Report No Health Without Research emphasized the importance of implementing research into policy and practice as a means of achieving universal and equitable access to healthcare [1]. This highlights the challenges to determine the most effective implementation strategies for interventions, how to understand which strategies work where and why, and in doing so, promoting the better use of research [2–4].

In order to move from ‘what works’ to ‘what works where and why’, there is a need to generate evidence of what facilitates successful implementation. The Promoting Action on Research Implementation in Health Services (PARIHS) framework was developed to provide a framework to understand implementation as a multifaceted process [5]. The framework emphasizes the strength of and interplay between the following: (a) the nature of the evidence being used, (b) the quality of the context in terms of coping with change and (c) the facilitation relevant for a successful change process [6, 7]. Thus, in addition to the availability of evidence for a certain innovation or practice and facilitation as a strategy used to implement this evidence, the context in which the evidence is implemented matters. Hence, there is a need to go beyond measuring the ‘hardware’ of the health system to capturing the ‘software’, i.e. contextual issues, including the ideas, values, norms and power relations that determine health system performance [8]. Context, in relation to implementing EBPs in healthcare settings, has been defined as ‘the environment or setting in which the proposed change is to be implemented’ [5]. Understanding the socio-political nature of health systems, the organization’s readiness to change and the role of tailored implementation is regarded as a priority field in implementation science, including the need to systematically study the attributes of context influencing this process [9–16].

The importance of understanding context prior to and during the evaluation of the implementation of EBPs has led to the development of three quantitative tools aimed at assessing healthcare context, all of which have been developed based on the PARIHS framework [17–19]. Out of the three tools, the Alberta Context Tool (ACT) is the one that has been most widely used and has been subjected to the most rigorous evaluation of validity and reliability [13, 20–24]. The tool was developed in Canada, has been psychometrically tested also in other countries and is presently used in several large studies in high-income settings [20, 25–27]. The ACT contains eight dimensions measuring (1) leadership, (2) culture, (3) feedback, (4) connection amongst people, (5) formal interactions, (6) informal interactions, (7) structural and electronic resources and (8) organizational slack (sub-divided into staffing, space and time) [19].

The three available tools were developed for, and validated in, high-income settings [17–19, 25, 26]. There has been no tool readily available for use in low- and middle-income countries (LMICs), where contextual issues influencing efforts to implement EBPs might include other aspects than those in high-income settings [28, 29]. The objective of the Context Assessment for Community Health (COACH) project was to develop and psychometrically validate a tool for LMICs to assess aspects of context influencing the implementation of evidence-based practices (EBP) [30] that could be used to achieve better insights into the process of implementing EBPs. The name of the tool was chosen to reflect the focus of the project in terms of understanding how health systems context relates to the provision of care to community members. The purpose of the tool is to (1) enhance the opportunities to act on locally identified shortcomings of the health system to increase effectiveness, (2) guide planning and promote adaptation of implementation strategies to the local context and (3) link contextual characteristics to outcome indicators of healthcare interventions. Out of the three developed tools developed for high-income settings, the Organizational Readiness to Change Assessment [17] assesses all three components of the PARIHS model, i.e. evidence, facilitation and context, and the Context Assessment Index [18] has a stronger focus on the individual health worker. Thus, the ACT, which has a stronger focus on assessing organizational aspects of context were perceived to be a suitable tool to depart from. Also, similarly to the ACT, we aimed to develop a tool that focused on modifiable aspects of context, i.e. that could be intervened upon [19].

Methods

This project was developed within an informal network, which had a focus on implementation research and the ‘know-do-gap’ in relation to the millennium development goals 4–6 [31]. In a network meeting in 2010, a member of the network identified the need for a tool to assess local organizational context in LMICs as context was seen as an important variable influencing the implementation of health interventions. Thus, the network formed a core group to carry out the COACH project including health services researchers from Bangladesh, Vietnam, Uganda, South Africa, Nicaragua and Sweden having extensive experience from working in LMICs and implementing EBPs in these settings. Early in the development, we initiated collaboration with two Canadian researchers who lead the development of the ACT. The rationale for forming this multi-country team was our common interest in context as an explanatory factor influencing the implementation of health interventions.

Study settings and design

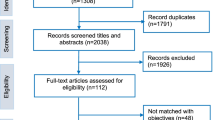

The COACH tool development has gone through six different phases resulting in five different versions of the COACH tool (see Fig. 1). Findings from one phase fed into the development of the next version of the tool including deletion of items, revisions of items and development of new items. Our approach was guided by the Standards for Educational and Psychological Testing [32] considered best practice in the field of psychometrics. Study sites were involved at similar stages of the project: Bangladesh (phases II, V, VI); Vietnam (phases II, V, VI); Uganda (phases II, V, VI); South Africa (phases IV, V, VI); and Nicaragua (phases II, V, VI).

Ethical approvals

Ethical approval was obtained from the Ethical Review Committee of the International Centre for Diarrhoeal Disease Research, Bangladesh (icddr,b), the Ethical Scientific Committee at Ministry of Health in Vietnam, León Medical Faculty Ethical Board in Nicaragua, Health Research Ethics Committee at Stellenbosch University in South Africa and Uppsala Regional Ethical Review Board in Sweden. In Uganda, ethical approval was obtained from the Makerere University School of Public Health Institutional Review Board and Uganda National Council of Science and Technology.

Phase I: defining dimensions and developing a draft version of the COACH tool

Defining the main dimensions (constructs) to be measured by the tool and developing items under each dimension was a key first step in the development of the COACH tool [33]. The process was iterative in that it was initiated during phase I, but further informed and revised by the findings from latter phases.

Dimensions

Initially, we reviewed studies focusing on how context influences the implementation of EBPs in healthcare [28, 29, 34–42], and the interconnected health system building blocks as presented by the WHO [43]. Furthermore, we considered and concluded that the ACT was a suitable starting point. Based on an agreement with the developers of the ACT, we thereinafter initiated the process of identifying constructs to be included in the new tool by reviewing dimensions in the ACT, which were found to have good psychometric properties in different settings [20–22]. Following that, we identified aspects of context that were not explicitly stated in the ACT dimensions, but perceived to be of relevance for a tool for LMICs.

Items

We agreed that all dimensions in the ACT were relevant for testing in LMIC settings, and thus, all ACT items were included in the first version of the COACH tool. In order to capture re-defined and newly developed dimensions, we went through a process of developing items based on these new dimensions through iterative discussions.

Phase I resulted in the development of COACH version I tool.

Phase II: testing content validity in country panels

Content validity allows for an identified panel of 8–12 individuals to assess the perceived relevance of each item in a tool [44]. Experts rated the relevance of each item (n = 94 items) in the COACH tool as 1 = not relevant, 2 = somewhat relevant, 3 = quite relevant and 4 = highly relevant [44]. Based on panels’ assessments, the item-content validity index (I-CVI), a measure of the proportion of raters in agreement of relevance per item and two types of scale-content validity indices (S-CVI), measuring the relevance of each dimension [44, 45] were calculated.

In phase II, content validity assessment was undertaken using the English version of the COACH version I tool amongst identified panel subjects in Bangladesh (n = 11), Vietnam (n = 8), Uganda (n = 11) and Nicaragua (n = 11). Panels included participants with contextual expertise and with the following characteristics: (1) researchers/managers or programmers with experience of large-scale implementation of healthcare interventions and (2) health workers in decision-making positions with experience of leading implementation of healthcare interventions. To explore additional factors that might influence implementation of EBPs in the current settings, we conducted focus group discussions with each of the panels on the content of the tool (see Table 1 for the semi-structured guide).

A COACH version II tool was developed based on findings from phase II.

Phase III: testing content validity in an international panel

Using a similar method to the content validity procedure in Phase II, we undertook a second set of content validity assessments with this version with eight international experts with extensive experience in undertaking research on the implementation of health interventions in LMICs. Content validity was assessed using an online console where experts could enter additional comments about each dimension in addition to rating the perceived relevance of items.

A COACH version III tool was developed based on findings from phase III.

Phase IV: investigating and analysing the response process validity

To understand how target group respondents perceived and understood the COACH version III tool, we investigated the response process with community health workers (CHWs) (n = 6), nurse/midwives (n = 3) and physicians (n = 2) in South Africa. Response process interviews using the ‘think aloud’ method [46] were conducted in English in order to achieve a solid English version of the tool ahead of translations. The method implies that respondents verbally report their thinking whilst answering survey questions [47]. Response process data were analysed using Conrad and Blair’s taxonomy [47] addressing the following: lexical problems, inclusion/exclusion problems, temporal problems, logical problems and computational problems.

A COACH version IV tool was developed based on findings from phase IV.

Phase V: translation of the draft COACH IV tool

As the tool aims to be utilized in settings where the majority of respondents do not understand English, the COACH version IV tool was translated from English into Bangla (Bangladesh), Vietnamese (Vietnam), Lusoga (Uganda), isiXhosa (South Africa) and Spanish (Nicaragua) ahead of field-testing. The process of translation followed Brislin’s model which has been summarized by Yu et al. [48] and included forward translation by bilingual individual, review of the translated tool by monolingual reviewer, backward translation by bilingual individual and comparison of the original version and the backward translated version focusing on conceptual clarity. Hence, the English COACH version IV tool was carefully compared with each of the backward-translated versions in several rounds to identify where and why the versions did not conform. In all settings, the country-specific researcher(s) undertook the forward translation, whereas professional translators undertook the backward translation.

Phase VI: investigating internal structure and reliability

To investigate internal structure, it is advised to have a sample of 100–200 eligible respondents [49]. In the assessment of internal structure and reliability, we strived to include an equal number of respondents across the three healthcare professional groups (CHWs, nurse/midwives and physicians) and the five countries. Hence, the translated version of COACH version IV was administered to eligible respondents in Bangladesh (n = 71), Vietnam (n = 195), Uganda (n = 134), South Africa (n = 162) and Nicaragua (n = 150) (see Table 2). Information about the project was given and informed consent obtained prior to participation. In addition to the 68 tested COACH version IV items, we included seven demographic questions. Participants filled in the questionnaire on paper. The exception to this was the CHW group in Nicaragua who requested that a data collector interview them and then fill in the form on their behalf.

Data were entered manually in Excel or by using an online data capturing form. Cases that had ≥20 missing values on the 68 context items were deleted [50]. A principal component analysis (PCA), using listwise deletion, was used to extract the major contributing factors and a Varimax rotation (orthogonal) was performed using SPSS (version 20) to identify the common factors. Factors with eigenvalues greater than 1 were also extracted. A factor loading greater than 0.40 was regarded as ‘practically significant’ in accordance with Hair et al. [51]. The rationale for choosing PCA was that the assessment was of exploratory nature. To render partial drop-out, k-nearest neighbour imputation was undertaken [52]. Following exclusion (see Table 2), the total number of respondents was 690: Bangladesh (n = 71), Vietnam (n = 183), Uganda (n = 134), South Africa (n = 161) and Nicaragua (n = 141). The PCA was undertaken on the pooled dataset, per country and per health worker category. Factors were identified using the 1.0 eigenvalue cut-off rule and Scree test [33]. We agreed that the following criteria for acceptable level of internal structure and reliability should be met:

-

1.

The item should have a factor loading >0.4 on the pooled dataset, and items belonging to the same theoretical dimension should load on the same factor.

-

2.

The item should have a factor loading of >0.4, and items belonging to the same theoretical dimension should load on the same factor in the majority of settings (i.e. at least in three out of five settings).

-

3.

The item should have a factor loading of >0.4, and items belonging to the same theoretical dimension should load on the same factor in the majority of health professional groups (i.e. at least in two of three health professional groups).

-

4.

The factor should have a Cronbach’s Alpha coefficient of ≥0.7 [53].

The criteria assisted in the retention of items and thus established an acceptable internal structure of the tool. Following retention of items, we examined corrected total item correlation and average inter-item correlation [53, 54].

Results

Findings from the six phases of development are presented below. Findings from each phase resulted in revisions on the tested COACH tool draft version that was then assessed in the next phase (see Fig. 1).

Phase I: defining dimensions and developing a draft version of the COACH tool

The definition of the dimensions and development of corresponding items was an iterative process whereby we initially included all eight dimensions in the ACT (n = 58 items) as well as additional dimensions thought to be relevant for LMIC settings. Our initial discussions resulted in definitions of some of the original ACT dimensions remaining ‘intact’ whilst others were adapted and included (see Additional file 1). In some cases, for existing ACT dimensions, new items were developed that were relevant for LMIC settings. Finally, three new dimensions, Organizational resources, Community engagement and Commitment, believed to be of importance for LMICs were developed specifically for the COACH tool, and new items were also developed for these dimensions. For example, to capture the proposed new dimension Commitment, we reviewed literature and opted to use parts of the Organizational Commitment Questionnaire (OCQ) and the Affective Commitment Scale (ACS) [55, 56] to cover the given definition of the dimension (see Additional file 1). In this paper, items that were originally developed by us are referred to as the COACH items, whilst the items from established tools such as the ACT, OCQ and ACS are referred to using their original instrument abbreviation.

Each item on all dimensions measured the extent to which a respondent agreed or disagreed with the statement on a 5-point Likert-type scale (Strongly disagree, Disagree, Neither agree nor disagree, Agree or Strongly agree). The one exception to this was the Sources of knowledge dimension, which measured how often respondents used different sources of knowledge in a typical month which was rated using another Likert-type scale (Not available; Never, 0 times; Rarely, 1–5 times; Occasionally, 6–10 times; Frequently, 11–15 times; and Almost always, 16 times or more). In terms of general terminology used in the COACH tool, the ACT term ‘organization/unit’ was replaced by the term ‘unit’ as it was identified as the level of organizational context of interest.

Phase II: testing content validity in country panels

For the content validity exercise with the English version of the COACH version I tool, none of the dimensions reached the generally accepted threshold scale-content validity index/average (S-CVI/Ave) ≥0.9 or scale-content validity index/universal agreement (S-CVI/UA) ≥0.8 in all of the four settings [45]. However, several dimensions reached S-CVI/Ave of 0.9 in one or more of the settings. Panel participants from all settings were in agreement regarding relevance of 31/94 items across all dimensions (I-CVI >0.78) (see Additional file 2).

In spite of the mixed results in the CVI assessment, panellists in all settings considered that all dimensions were relevant in the qualitative component of this phase. In particular, Leadership, Resources and Work culture were identified as important contextual influences that affect the implementation of EBPs in health settings. In addition to the dimensions presented to panellists in COACH version I tool, informal payment and nepotism were thought to be issues that influence the process of implementing EBPs in these settings. Examples of informal payment included the sale of drugs and services to patients that should be available free of charge. Participants also brought up the existence of ‘informal systems’ whereby health workers, primarily physicians, made decisions concerning healthcare delivery on the basis of payments into their own pocket, e.g. allowing one’s private patient to bypass the queue in a government healthcare facility.

The development of the COACH version II tool was based on findings from the content validity assessment from country panels. Some examples of revisions included the following. (1) Items relating to technological resources under Sources of knowledge were retained although they had not been found to be relevant as these resources are quickly becoming common in many parts of the world. (2) Under Organizational resources, items relating to Financing were added to assess an organization’s ability to autonomously manage their funds, as this had been identified as likely to impact upon the implementation of EBPs. (3) Items belonging to the dimension of Feedback were well understood and perceived as important but it was felt that the dimension had a broader focus and was more directed towards the continuous monitoring of services in order to inform implementation activities—thus, the dimension was renamed to Monitoring services for action. (4) As Culture and Community engagement were perceived as two important aspects of context, we developed additional items for these two dimensions. (5) Formal and Informal interactions were merged into one dimension of context, namely Interaction between members of the unit.

There was consensus that the language in the tool needed to be simplified for ease of understanding for all types of healthcare providers. Hence, during the development of COACH version II, we consulted the Language Centre at Stellenbosch University who converted the English version of the tool into plain language, which was deemed to be appropriate for the target audience’s average language proficiency and grasp of terminology.

Phase III: testing content validity in an international panel

For the second set of content validity assessments with COACH version II, none of the concepts reached S-CVI/Ave ≥0.9 or S-CVI/UA ≥0.8. In total, 44 of the tested 78 items reached I-CVI of 0.78 (see Additional file 3). In order to keep the dimension of Sources of knowledge, where only one item reached the desired I-CVI of 0.78, we decided to keep all items with an I-CVI above 0.67 although this decision implied a diversion from the generally acceptable I-CVI score. The rationale for this decision was that several of the experts stated that some of the electronic sources of knowledge were currently not available in all the LMICs they had experiences from and thus not perceived as relevant. They did, however, also comment that these resources are very quickly becoming more common. Furthermore, we concluded that a change in I-CVI threshold should be consistent throughout the tool. Hence, in total, 63/78 items reached I-CVI of 0.67 (see Additional file 3). Based on comments made by the participating experts, some linguistic revisions were undertaken to single items [57]. Further development based on findings from phase III resulted in the COACH version III tool.

Phase IV: investigating and analysing response process data

The response process investigation in South Africa revealed that most items were understandable without difficulty. Most items did not need any revisions. Minor revisions included changing an item such as My unit has adequate space to provide services to My unit has enough space to provide healthcare services. It was however noted that about 10 of the items were challenging for respondents to understand. For example, respondent reacted to items using words that were considered ambiguous. One such example was an item under the Informal payment dimension: It is possible for staff to earn extra income from other work or engagements during ordinary working hours. The concept of ‘other work engagements’ was perceived as too complicated, and respondents needed further explanation in order to respond to the item. In order to simplify the final version of that item, it became Health workers are sometimes absent from work earning money at other places [57].

Based on findings from the response process, further linguistic adaptations were made in collaboration with the Language Centre at Stellenbosch University. These adjustments resulted in the COACH version IV tool that was then considered ready for field-testing.

Phase V: translation of the draft COACH IV tool

The COACH version IV tool in English and the five backward translated tools were carefully compared focusing on conceptual clarity in order to identify where and why the tools did not conform. One dimension that was challenging to translate was the one of Informal payment. As an example, the phenomenon in the introduction to the dimension was translated to money and gift (‘envelope’ payments) in Vietnam. Reaching to contextually adapted translations that reflected the intended construct needed thorough discussions.

Phase VI: investigating internal structure and reliability

Following the administration of the translated version of COACH version IV in the five country settings, we investigated the internal structure and reliability of the tool. In conclusion, the analysis and parallel refining work resulted in the COACH version V having a good fit between the theoretical constructs and results from factor analysis. Cleaned data were merged from all settings into one file where descriptive statistics were examined and found satisfactory. The factor analysis revealed that an 11-factor structure accounted for 63.6 % of the variance in the pooled dataset. These 11 factors did relatively well represent the theoretical dimensions that the development of the instrument departed from (Table 3). None of the items cross-loaded >0.4 on two factors in the pooled dataset, but a few items cross-loaded >0.4 on two factors in the analysis made per country and healthcare professional group. Table 3 provides the result from the factor analysis and Cronbach’s alpha coefficient per theoretical dimension in the pooled data set. Table 4 provides a detailed description of the proportion of items reaching the set criteria for factor loadings per country and per professional group and summarizes the extent to which items in theoretical dimension loaded on the same factor in the different sub-analyses.

Reliability of the COACH version V tool was examined, and all dimensions but one reached acceptable Cronbach’s alpha levels of ≥0.70 (ranging between 0.76 and 0.89) [53], whilst Sources of knowledge reached a Cronbach’s alpha of 0.69. Corrected total item correlation and average inter-item correlation >0.3 was judged as good [53, 54]. All items in the dimensions had a corrected total item correlation of >0.3, and all dimensions had an average inter-item correlation of >0.3 (Table 3).

To summarize the field test, the COACH tool was investigated for internal structure in (1) the five different settings, (2) in three professional groups (pooled from the different settings) and (3) on the pooled dataset. The investigation of validity and reliability reduced the number of items from 67 to 49 measuring eight hypothesized contextual dimensions: Resources, Community engagement, Monitoring services for action, Sources of knowledge, Commitment to work, Work culture, Leadership and Informal payment. In applying our criteria for acceptable internal structure, the dimension of Interaction among people was excluded. Cronbach’s alpha, corrected total item correlation and average inter-item correlation provided evidence of reliability for the proposed eight-factor structure. As a consequence of the development process, the definitions of the dimensions were carefully scrutinized in the end of the project. The final definitions of dimensions are found in Table 5, which constitutes the final COACH (version V) tool (see Tables 3 and 4). The complete tool is available (see http://www.kbh.uu.se/IMCH/COACH).

Discussion

We have developed a new tool for assessing the context of healthcare organizations in LMIC settings with promising psychometric characteristics that provides insight into factors influencing the implementation of EBPs. The development of the COACH tool resulted in a tool covering eight dimensions of context and comprising 49 items. Currently, the tool is available in six languages. Whilst many of the dimensions of context that are central in high-income settings are also relevant in LMIC, also other dimensions (such as Informal payment, Commitment to work and Community engagement) were found to have particular resonance in LMICs and which are the focus of this discussion.

Informal payment is an influential factor in the implementation and provision of EBPs. The shifting of priorities in healthcare delivery, such as prioritizing the provision of healthcare to clients who can offer payment as opposed to those in greatest need has been reported [58–61]. It is important to get a better understanding of how much of a barrier informal payment is, especially in settings where policies of free provision of health services for vulnerable groups exist [62]. Informal payment may lead to limited availability of health services for those in need but may also be an important aspect of health workers’ motivation and retention in settings with widespread demoralization and demotivation due to low wages and poor human resource management [62, 63].

Previously, we have described that some health workers in Uganda had to provide informal payments in order to obtain employment, and then again to get on the payroll once having acquired a position [29]. In that case, informal payment is imposed from above and there is a risk that such behaviour will continue down the health system’s hierarchy [64]. In the settings where the COACH tool was developed, the Global Corruption Barometer 2013 has found that 9–43 % of respondents had paid bribes to medical and health services within the last 12 months, and 33–58 % perceived that medical and health services are corrupt (data for Nicaragua was not available) [65]. The effects of informal payments and nepotism remain silent and provide an ongoing threat to achieving continued progress in global health [66].

A further aspect of context that is included in the COACH tool but that has not been a part of other tools is Commitment to work. A recent review focusing on mechanisms for the successful implementation of support strategies for healthcare practitioners in rural and remote contexts found that strong organizational commitment is linked to greater participation levels, change in organizational culture, sustainable programmes and improved patient outcomes and quality of provided services [35]. From an equity perspective, poor clients in high-mortality countries often experience neglect, abuse, and marginalization by the health system by overworked and demotivated and uncommitted health workers [63, 67]. Motivation and staff satisfaction have been shown to be critical elements in improving the efficiency and effectiveness of health system performance, amongst mid-level providers in Malawi [68]. Commonly, competencies are assessed ahead of implementing EBPs. Mapping of individual health workers organizational commitment, motivation and barriers to good performance would also benefit our understanding of performance.

Another characteristic of context that was important in study countries was Community engagement. Central to the WHO health system building blocks model is the role of people not only as beneficiaries, but also as active drivers of the health system [43]. For example, strengthening the linkage between primary health centres and the community and reinforcing the power and involvement of community members in health service delivery appear to have contributed to a 33 % reduction in under-five mortality in Uganda [69], a 50 % reduction in neonatal mortality in Vietnam [70] and a significant reduction in child mortality in Nicaragua [71].

The impact of Work culture on the implementation of EBPs has led to calls to understand the culture in which a particular innovation will be implemented prior to its implementation [72–74]. In the case of the COACH tool, two separate aspects of culture were found to influence the implementation of EBPs: (1) working within a culture valuing learning and (2) harbouring a sense of responsibility to improve healthcare. Similar to other studies [74–77], we found that workplace culture in general, and teamwork in particular, was perceived to be an important contributing factor for health worker motivation, with poor teamwork being associated with difficulties in implementing change.

Leadership and the ability of the leader to create an environment of teamwork was emphasized by panellists in phase II–III. In addition, trust was described as a factor influencing leaders’ ability to form a culture where the implementation of EBPs would occur more effectively. Individuals’ trust in supervisors was related to personal behaviour, and supervisors’ actions might affirm or undermine the trust in the organization [78]. Panellists believed that lack of trust, either personal or professional, affected the organization’s readiness to adapt to changes. The importance of leadership and teamwork for the implementation of EBPs to occur has been described in other studies from low-income settings [77, 79–81].

Methodological considerations

Challenges in the development of a generic instrument to assess context in LMIC included whether the instrument was broad enough to include common aspects of context, but also specific with regard to including contextual elements of importance for implementation. Throughout the project, the research team has strived not to be too radical and delete items too quickly, but rather keep items and reduce the number of items with caution. One reflection of this is the lowering of cut-off for I-CVI from 0.78 to 0.67 during phase III in order to keep several of the items under the Sources of knowledge dimension. Different from the other dimensions asking about agreement, this dimension asks for how often different sources of knowledge are used in a normal month. It should however be noted that the experts were not seeing the answering options the way that they are presented in the tool—instead, they were subjected to a scale where they could rate their level of agreement with the relevance of the item. As some experts noted that these sources of knowledge were not currently available, without knowing that Resource not available is one of the answering options in the tool, we judged that the experts might have rated the relevance of these items different had they known that the scale had that option.

With regard to the findings for the two phases of content validity assessment (phase II–III), it was not surprising that none of the dimensions reached S-CVI/UA ≥0.8 across all settings since it requires unanimity amongst all raters. Furthermore, S-CVI/UA has been criticized to be ‘too stringent’, especially when used with larger groups [44].

Careful translations and backward translation was of uttermost importance. In translating the English version of the tool, we strived to be loyal to the meaning of the text (semantics) and to enable adaption for the translation to fit (context) [48]. In order to do this, it is essential that the parties working with the translation have a common language and that the communication allows for semantic discussions throughout the process. Although the translation process was time consuming, its importance was confirmed by the factor analysis which showed that the items within each dimension fitted well together across all countries, suggesting that the translated items measure the same concepts.

The Sources of knowledge dimension was not rated as relevant by international experts and lead us to lower the level of acceptable I-CVI score. One reason for this was low scoring of e-health and m-health items due to the unavailability of this type of technical devices. We, however, opted to keep these items as the development and utilization of technology is rapidly changing. We aimed to include dimensions reaching a Cronbach’s alpha coefficients of ≥0.7. Although the dimension of Sources of knowledge did not meet this criterion, the availability and usage of information were considered important, and as there is no cut-off that exactly determines the reliability of instrument dimensions, we decided to remain this dimension with its five items for future evaluations of its reliability.

Although there were many similarities in the psychometric evaluation of the tool across settings and occupational groups, some differences were also present on country/professional level. Similarly, some items cross-loaded on analysis per country and health professional group. The items that were cross-loading were, however, not consistent between groups (countries and health professional groups), and we therefore decided to retain the cross-loading items based on results from the analysis made on the pooled dataset. Further evaluation of the tool will show if keeping these cross-loading items was an appropriate decision.

Conclusion

The newly developed COACH tool specifically aims to assess the context of healthcare organizations in LMIC settings, thereby providing increased potential for insight into factors influencing the implementation of EBPs in these settings. Whilst we departed from the ACT in developing a tool that would be suitable in LMICs, the COACH tool shares some content with the ACT and is a derivative product of the ACT. Although many of the organizational context concepts recognized in high-income healthcare settings were found to be relevant also in LMICs, we identified additional aspects of context significant for the implementation of EBPs in LMICs. We foresee alternative ways of applying the COACH tool as means of characterizing context ahead of implementing health interventions, as a method for tailoring an implementation strategy to suit a certain context and for deepening the understanding of the outcomes of implementation efforts. All these applications have the potential to generate better understanding of the process of implementing EBPs.

Abbreviations

- ACS:

-

Affective Commitment Scale

- ACT:

-

Alberta Context Tool

- CHW:

-

community health worker

- COACH:

-

Context Assessment for Community Health

- EBP:

-

evidence-based practice

- iccdr,b:

-

International Centre for Diarrhoeal Disease Research, Bangladesh

- I-CVI:

-

item-content validity index

- OCQ:

-

Organizational Commitment Questionnaire

- PARIHS:

-

Promoting Action on Research Implementation in Health Services

- PCA:

-

principal component analysis

- S-CVI/Ave:

-

scale-content validity index/average

- S-CVI/UA:

-

scale-content validity index/universal agreement

- WHO:

-

World Health Organization

References

WHO. The World Health report 2012: no health without research. 2011. Available from: http://www.who.int/rpc/whr2012/en/.

Santesso N, Tugwell P. Knowledge translation in developing countries. J Contin Educ Health Prof. 2006;26(1):87–96.

Sanders D, Haines A. Implementation research is needed to achieve international health goals. PLoS Med. 2006;3(6):e186.

Wallin L. Knowledge translation and implementation research in nursing. Int J Nurs Stud. 2009;46(4):576–87.

Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998;7(3):149–58.

Kitson AL et al. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008;3:1.

Rycroft-Malone J et al. Ingredients for change: revisiting a conceptual framework. Qual Saf Health Care. 2002;11(2):174–80.

Sheikh K et al. Building the field of health policy and systems research: framing the questions. PLoS Med. 2011;8(8):e1001073.

McCoy D et al. Maternal, neonatal and child health interventions and services: moving from knowledge of what works to systems that deliver. Int Health. 2010;2(2):87–98.

Siddiqi K, Newell J, Robinson M. Getting evidence into practice: what works in developing countries? Int J Qual Health Care. 2005;17(5):447–54.

Dieleman M, Gerretsen B, van der Wilt GJ. Human resource management interventions to improve health workers’ performance in low and middle income countries: a realist review. Health Res Policy Syst. 2009;7:7.

English M et al. Health systems research in a low-income country: easier said than done. Arch Dis Child. 2008;93(6):540–4.

Cummings GG et al. The relationship between characteristics of context and research utilization in a pediatric setting. BMC Health Serv Res. 2010;10:168.

Attieh R et al. Organizational readiness for knowledge translation in chronic care: a review of theoretical components. Implement Sci. 2013;8:138.

Moat KA, Lavis JN, Abelson J. How contexts and issues influence the use of policy-relevant research syntheses: a critical interpretive synthesis. Milbank Q. 2013;91(3):604–48.

Shaw B et al. Tailored interventions to overcome identified barriers to change: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2005;3:CD005470.

Helfrich CD et al. Organizational readiness to change assessment (ORCA): development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38.

McCormack B et al. Development and testing of the Context Assessment Index (CAI). Worldviews Evid Based Nurs. 2009;6(1):27–35.

Estabrooks CA et al. Development and assessment of the Alberta Context Tool. BMC Health Serv Res. 2009;9:234.

Eldh AC et al. Translating and testing the Alberta context tool for use among nurses in Swedish elder care. BMC Health Serv Res. 2013;13:68.

Estabrooks CA et al. Advancing the argument for validity of the Alberta Context Tool with healthcare aides in residential long-term care. BMC Med Res Methodol. 2011;11:107.

Estabrooks CA et al. Assessment of variation in the Alberta Context Tool: the contribution of unit level contextual factors and specialty in Canadian pediatric acute care settings. BMC Health Serv Res. 2011;11:251.

Hoben M et al. Linguistic validation of the Alberta Context Tool and two measures of research use, for German residential long term care. BMC Res Notes. 2014;7:67.

Mallidou AA et al. Staff, space, and time as dimensions of organizational slack: a psychometric assessment. Health Care Manage Rev. 2011;36(3):252–64.

Estabrooks CA et al. Study protocol for the translating research in elder care (TREC): building context—an organizational monitoring program in long-term care project (project one). Implement Sci. 2009;4:52.

Seers K et al. FIRE (Facilitating Implementation of Research Evidence): a study protocol. Implement Sci. 2012;7(1):25.

Cummings GG et al. Influence of organizational characteristics and context on research utilization. Nurs Res. 2007;56(4 Suppl):S24–39.

Duc, D. et al. Exploring the influence of context in a community-based facilitation intervention on neonatal mortality in Vietnam. PLoS Medicine. 2013;10(5):e1001445. doi:10.1371/journal.pmed.1001445

Bergström A et al. Knowledge translation in Uganda: a qualitative study of perceptions among midwives and managers of the influence of contextual factors. Implement Sci. 2012;7(1):117.

DiCenso A, Guyatt G, Ciliska D. Evidence-based nursing: a guide to clinical practice. London: Mosby Inc; 2005.

United Nations. Millenium Development Goals. 2004; Available from: [http://www.un.org/millenniumgoals/].

American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. Standards for educational and psychological testing. Washington, D.C: American Educational Research Association; 1999.

Furr, M.R, B. V.R. Psychometrics: An introduction. 2008, California: Sage Publications, Inc

Moran AC et al. Benchmarks to measure readiness to integrate and scale up newborn survival interventions. Health Policy Plan. 2012;27 Suppl 3:iii29–39.

Moran AM et al. Supervision, support and mentoring interventions for health practitioners in rural and remote contexts: an integrative review and thematic synthesis of the literature to identify mechanisms for successful outcomes. Hum Resour Health. 2014;12:10.

The CDI Study Group. Community-directed interventions for priority health problems in Africa: results of a multicountry study. Bull World Health Organ, 2010. 88(7): 509-18.

English M. Designing a theory-informed, contextually appropriate intervention strategy to improve delivery of paediatric services in Kenyan hospitals. Implement Sci. 2013;8:39.

Kamuzora P et al. Promoting community participation in priority setting in district health systems: experiences from Mbarali district. Glob Health Action. 2013;6:22669.

Briggs CJ, Garner P. Strategies for integrating primary health services in middle- and low-income countries at the point of delivery. Cochrane Database Syst Rev. 2006;2:CD003318.

Jamtvedt G et al. Audit and feedback: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2006;2:CD000259.

Forsetlund L et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2009;2:CD003030.

O’Brien MA et al. Educational outreach visits: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2007;4:CD000409.

Savigny D, Adam T. Systems thinking for health systems strengthening. Geneva: WHO; 2009.

Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–67.

Polit DF, Beck CT. The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res Nurs Health. 2006;29(5):489–97.

Sudman S, Bradburn NM, Schwarz N. Thinking about answers: the application of cognitive processes to survey methodology. San Francisco: Jossey-Bass; 1996.

Conrad F, Blair J. From impression to data: increasing the objectivity of cognitive interviews. In: Survey research methods section. Alexandria: American Statistical Association; 1996.

Yu DS, Lee DT, Woo J. Issues and challenges of instrument translation. West J Nurs Res. 2004;26(3):307–20.

Spector, P. ed. Summated rating scale construction: an introduction. Quantitative Applications in Social Science ed. Lewis-Beck M. 1992, McCune Miller S,: Iowa City.

Fox-Wasylyshyn SM, El-Masri MM. Handling missing data in self-report measures. Res Nurs Health. 2005;28(6):488–95.

Hair JF et al. Multivariate data analysis. 7th ed. London: Prentice Hall; 2009.

Jönsson P, Wohlin C. An evaluation of k-nearest neighbour imputation using Likert data. in Proceedings of the 10th International Symposium on Software Metrics. 2004:108–18. doi10.1109/METRIC.2004.1357895.

Nunnally J, Bernstein I. Psychometric theory. New York: McGraw-Hill; 1994.

DeVon HA et al. A psychometric toolbox for testing validity and reliability. J Nurs Scholarsh. 2007;39(2):155–64.

Mowday RT, Steers RM, Porter LW. Measurement of organizational commitment. J Vocat Behav. 1979;14(2):224–47.

Allen NJ, Meyer JP. The measurement and antecedents of affective, continuance and normative commitment to the organization. Br Psych Soc. 1990;63:1–18.

Bergström A et al. Context Assessment for Community Health (COACH)—technical report. Uppsala: Department of Women’s and Children’s Health; 2014.

Saba Arbache J, editor. Silent and lethal: how quiet corruption undermines Africa’s development efforts. Africa development indicators 2010, International Bank for Reconstruction and Development/The World Bank. Washington DC: The World Bank; 2010.

Gauthier B, Montreal HEC. PETS-QSDS in Sub-Saharan Africa: a stocktaking study. Washington, DC: World Bank. 2010. Available from: http://documents.worldbank.org/curated/en/2010/07/16505739/pets-qsds-subsaharan-africa-stocktaking-study.

McPake B et al. Informal economic activities of public health workers in Uganda: implications for quality and accessibility of care. Soc Sci Med. 1999;49(7):849–65.

Dieleman M, Shaw DM, Zwanikken P. Improving the implementation of health workforce policies through governance: a review of case studies. Hum Resour Health. 2011;9:10.

Parkhurst JO et al. Health systems factors influencing maternal health services: a four-country comparison. Health Policy. 2005;73(2):127–38.

Freedman LP et al. Transforming health systems to improve the lives of women and children. Lancet. 2005;365(9463):997–1000.

Schaaf, M., Freedman, L.P. Unmasking the open secret of posting and transfer practices in the health sector. Health Policy Plan, 2013

International T. Global corruption barometer 2013. 2013.

Mackey TK, Liang BA. Combating healthcare corruption and fraud with improved global health governance. BMC Int Health Hum Rights. 2012;12:23.

Jewkes R, Abrahams N, Mvo Z. Why do nurses abuse patients? Reflections from South African obstetric services. Soc Sci Med. 1998;47(11):1781–95.

McAuliffe E et al. Measuring and managing the work environment of the mid-level provider—the neglected human resource. Hum Resour Health. 2009;7:13.

Björkman M, Svensson J. Power to the people: evidence from a randomized field experiment on community-based monitoring in Uganda. Q J Econ. 2009;124(2):735–69.

Persson L et al. Effect of facilitation of local maternal-and-newborn stakeholder groups on neonatal mortality: cluster-randomized controlled trial. PLoS Med. 2013;10(5):e1001445.

Sandiford P et al. Why do child mortality rates fall? An analysis of the Nicaraguan experience. Am J Public Health. 1991;81(1):30–7.

Wallin L et al. Understanding work contextual factors: a short-cut to evidence-based practice? Worldviews on evidence-based nursing/Sigma Theta Tau International. Honor Soc Nursing. 2006;3(4):153–64.

Austin MJ, Claassen J. Impact of organizational change on organizational culture: implications for introducing evidence-based practice. J Evidence Based Social Work. 2008;5(1-2):321–59.

Wilson VJ, McCormack BG, Ives G. Understanding the workplace culture of a special care nursery. J Adv Nurs. 2005;50(1):27–38.

King TM, Muzaffar S, George M. The role of clinic culture in implementation of primary care interventions: the case of reach out and read. Acad Pediatr. 2009;9(1):40–6.

Mbindyo P et al. Contextual influences on health worker motivation in district hospitals in Kenya. Implement Sci. 2009;4:43.

Puoane T et al. Why do some hospitals achieve better care of severely malnourished children than others? Five-year follow-up of rural hospitals in Eastern Cape. South Africa Health Policy Plan. 2008;23(6):428–37.

Goold SD. Trust and the ethics of health care institutions. Hast Cent Rep. 2001;31(6):26–33.

Lawn J, McCarthy BJ, Ross SR. The Healthy Newborn: A Reference Guide for Program Managers. Centers for Disease Control and Prevention, 2001: Atlanta.

WHO. In: Simmons R, Fajans P, Ghiron L, editors. Scaling up health service delivery: from pilot innovations to policies and programmes. Geneva: World Health Organization; 2008.

Mathauer I, Imhoff I. Health worker motivation in Africa: the role of non-financial incentives and human resource management tools. Hum Resour Health. 2006;4:24.

Acknowledgements

We wish to thank the Swedish International Development Cooperation Agency (Sida) for funding this project. Also, we wish to acknowledge Dr Estabrooks, Principal Investigator of the Knowledge Utilization Studies Program at University of Alberta, for the permission to use the Alberta Context Tool (Professional Nurse—Long-term Care Version) ©Estabrooks (2007) as a point of departure in the development of a context assessment tool for LMICs.

We also wish to thank Debra Jackson (University of Western Cape), Alexander K. Rowe (Centers for Disease Control and Prevention), Nana Twum-Danso (Bill & Melinda Gates Foundation), Tanya Doherty (University of the Western Cape), Abbas Bhuiya (iccdr,b), Mike English (University of Oxford), Kate Gerrish (University of Sheffield), Lars Lindholm (Umeå University) and David Sanders (University of the Western Cape) for contributing phase III of the development.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

LÅP and LW conceptualized the study. AB was responsible for coordinating the project. AB, PG, KS and LW partook in designing the analytic plan for the analyses presented in this paper. EZB, DMD, DTPH, NTN, JP, AR, SS, MT and PW were responsible for the data collection and translation of the tool in the five study settings. AB and KS conducted the statistical analysis. AB, EZB, DMD, DTPH, CK, MM, NTN, LÅP, JP, SP, AR, KS, SS, MT and LW partook in interpreting the findings from the different phases of the development. The manuscript was drafted by AB, DMD, LW and SS. All researchers involved in the development of the tool and the data collection, together with CE and JES provided comments on the manuscript and approved the final version.

Sarah Skeen and Duong M. Duc contributed equally to this work.

Additional files

Additional file 1:

Definitions of dimensions of COACH tool draft version I, phase I. (PDF 446 bytes)

Additional file 2:

Outcomes of content validity assessment in Bangladesh, Vietnam, Uganda and Nicaragua, phase II. (PDF 153 kb)

Additional file 3:

Outcomes of content validity assessment amongst international experts, phase III. (PDF 6.55 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Bergström, A., Skeen, S., Duc, D.M. et al. Health system context and implementation of evidence-based practices—development and validation of the Context Assessment for Community Health (COACH) tool for low- and middle-income settings. Implementation Sci 10, 120 (2015). https://doi.org/10.1186/s13012-015-0305-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13012-015-0305-2