Abstract

Background

Evaluations of school-based activity behaviour interventions suggest limited effectiveness on students’ device-measured outcomes. Teacher-led implementation is common but the training provided is poorly understood and may affect implementation and student outcomes. We systematically reviewed staff training delivered within interventions and explored if specific features are associated with intervention fidelity and student activity behaviour outcomes.

Methods

We searched seven databases (January 2015–May 2020) for randomised controlled trials of teacher-led school-based activity behaviour interventions reporting on teacher fidelity and/or students’ device-measured activity behaviour. Pilot, feasibility and small-scale trials were excluded. Study authors were contacted if staff training was not described using all items from the Template for Intervention Description and Replication reporting guideline. Training programmes were coded using the Behaviour Change Technique (BCT) Taxonomy v1. The Effective Public Health Practice Project tool was used for quality assessment. Promise ratios were used to explore associations between BCTs and fidelity outcomes (e.g. % of intended sessions delivered). Differences between fidelity outcomes and other training features were explored using chi-square and Wilcoxon rank-sum tests. Random-effects meta-regressions were performed to explore associations between training features and changes in students’ activity behaviour.

Results

We identified 68 articles reporting on 53 eligible training programmes and found evidence that 37 unique teacher-targeted BCTs have been used (mean per programme = 5.1 BCTs; standard deviation = 3.2). The only frequently identified BCTs were ‘Instruction on how to perform the behaviour’ (identified in 98.1% of programmes) and ‘Social support (unspecified)’ (50.9%). We found moderate/high fidelity studies were significantly more likely to include shorter (≤6 months) and theory-informed programmes than low fidelity studies, and 19 BCTs were independently associated with moderate/high fidelity outcomes. Programmes that used more BCTs (estimated increase per additional BCT, d: 0.18; 95% CI: 0.05, 0.31) and BCTs ‘Action planning’ (1.40; 0.70, 2.10) and ‘Feedback on behaviour’ (1.19; 0.36, 2.02) were independently associated with positive physical activity outcomes (N = 15). No training features associated with sedentary behaviour were identified (N = 11).

Conclusions

Few evidence-based BCTs have been used to promote sustained behaviour change amongst teachers in school-based activity behaviour interventions. Our findings provide insights into why interventions may be failing to affect student outcomes.

Trial registration

PROSPERO registration number: CRD42020180624

Background

Many school-based interventions have been delivered worldwide to promote physical activity and reduce sedentary behaviour (e.g. [1, 2]). Review-level evidence shows these interventions have largely failed to change students’ device-measured activity behaviour [3,4,5]. Research to date has largely focused on assessing students’ activity behaviour outcomes. Equal efforts have not been applied to determine how interventions have been implemented. Consequently, reasons for outcomes remain largely unknown and existing guidance for schools on how to promote physical activity or reduce sedentary behaviour is vague and underpinned by weak evidence (e.g. [6]).

Medical Research Council (MRC) guidance highlights the need to focus on the most important areas of uncertainty to interpret observed outcomes arising from interventions delivered within complex systems (e.g. educational systems) [7, 8]. In school-based interventions, successful implementation is often dependent on teachers, who are selected to deliver new instructional programmes (e.g. a new sports programme or ‘active’ lesson) (e.g. [9,10,11]). To facilitate this process, teachers are frequently enrolled onto training programmes, the broad aim of which is to change their teaching practice(s). However, little is known about the training they receive [12], and how this affects their professional practice and student outcomes.

The most recent review to examine staff training within school-based activity behaviour interventions was conducted in 2015 [12]. Lander and colleagues evaluated features of training associated with significant changes in self-reported fundamental movement skills and/or physical activity within a physical education lesson. They found that training which is one day or more in length, delivered using multiple formats, and comprised of both subject and pedagogical content was associated with positive student outcomes. However, due to the prevalence of poor reporting across studies, the authors could not determine more specific training features that were causally related to desired outcomes. Hence, little is known about how to design training programmes to optimise intervention implementation (e.g. fidelity) and outcomes (e.g. activity behaviour).

To support the development of evidence-based teacher professional development, effective features of training programmes must be identified. This requires training features to be adequately described. ‘Behaviour change techniques’ (BCTs) offer a means of breaking down variable training programmes into observable, replicable, and irreducible features [13]. Specifying training programmes in terms of BCTs alongside features such as duration enables nuanced but rigorous evidence synthesis, and comparison with the wider professional development literature (e.g. [14,15,16]).

Many school-based intervention studies have been published since Lander and colleagues conducted the search for their review in 2015 [12]. The quality of reporting and underlying evidence may have improved since this time, given the greater availability of reporting guidelines (e.g. [17]) and use of device-based activity monitors (e.g. [18]). We therefore aimed to build on their review and, in line with Cochrane guidance [19], reconsidered all elements of the review questions and scope. We aimed to determine, more specifically, which teacher-targeted BCTs have been used within school-based activity behaviour interventions that included staff training, and how their use and other training features are associated with intervention fidelity and students’ device-measured outcomes. Operational definitions are outlined in Table 1.

Review questions (RQs)

1. What BCTs have been used in staff training programmes to change student activity behaviour?

2. Is there an association between staff training features, including BCTs, and intervention fidelity?

3. Is there an association between staff training features, including BCTs, and changes in students’ device-measured activity behaviours?

Methods

This review is reported in accordance with the 2020 Preferred Reporting Items for Systematic reviews and Meta-Analyses [24]. The review protocol was prospectively registered on PROSPERO (CRD42020180624).

Literature search

The search strategy and terms were based on the inclusion and exclusion criteria (Table 2), and developed in collaboration with an experienced librarian. The sensitivity and specificity of combinations of free-text terms and database subject headings were tested using MEDLINE (via Ovid). Search terms and operators were subsequently translated and iteratively tested on additional databases identified as relevant (Education Resources Information Center, Applied Social Sciences Index and Abstracts, Embase (via Ovid), Scopus, Web of Science, SPORTDiscus). Searches were run on 15 May 2020 and limited to articles published since 1 January 2015 to avoid inclusion of studies assessed in the Lander review [12] and to focus resources on the highest quality data available to address the review’s aims. No language or geographic limitations were applied. Additional file 1 outlines search terms used and numbers of records identified.

Screening

Search results were imported into EndNote X7 for deduplication (Clarivate, Philadelphia, PA). Remaining records were imported into Covidence (Veritas Health Innovation, Melbourne, Australia) for screening. Title and abstract screening was conducted by one reviewer. A random sample (10%) of excluded records was checked to minimise screening errors (Cohen’s Kappa = 0.48). All full texts were independently screened for eligibility by two reviewers (Cohen’s Kappa = 0.60). If eligibility could not be determined based on an article, we searched for other publications reporting on that same study to obtain further information. Eligibility disagreements were resolved by discussion. After the original criteria were applied, the number of eligible articles (n = 166) was deemed too large for the review team’s resources. A second round of full-text screening was conducted with updated inclusion/exclusion criteria; studies had to report on randomised controlled trials, and pilot, feasibility, and small-scale trials (≤100 students at baseline) were excluded (Cohen’s Kappa = 0.98) (Table 2). Following screening, we conducted forward and backward citation tracking using Google Scholar, and searched through articles and their supplementary materials for peer-reviewed publications and other outputs relevant to studies eligible for inclusion.
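
The agreement statistic quoted above can be illustrated with a minimal sketch. It computes Cohen's kappa for two reviewers' include/exclude decisions using the standard definition (observed agreement corrected for chance agreement). The function name and the example decisions are hypothetical and are not taken from the review's screening data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must rate the same non-empty set of items.")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical full-text screening decisions for six records.
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include", "exclude", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2))
```

In this made-up example the reviewers agree on five of six records, and half of that agreement would be expected by chance, which is why kappa is lower than the raw agreement rate.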

Data extraction

All data extraction was performed by one reviewer using a pre-piloted form. Articles not published in English (n = 2) were translated using DeepL Translator (available at www.deepl.com/translator). Details on staff training were extracted based on items in the Template for Intervention Description and Replication (TIDieR) checklist [17], a reporting guideline outlining the minimum set of items considered essential for intervention description and replication (e.g. use of theory, duration, mode of delivery). Where multiple training programmes were delivered within a study (e.g. in the form of content, dose, material etc. beyond local adaptation or personalisation), and outcome data were reported for each arm, data were sought and extracted for each arm. Information reported across study publications and outputs was pooled for data extraction. Where discrepancies were identified between study publications/outputs and data were mutually exclusive (e.g. training duration), data reported in the most recent outcome paper were selected. Where data differed but were mutually inclusive (e.g. BCTs), data were treated as cumulative and extracted as such.

Most studies (50/51; 98.0%) failed to report all TIDieR items about the staff training. Lead authors of included articles were contacted. They were requested to check and complete a partially filled TIDieR-based form, and to add any relevant study publications not listed. Authors were given three weeks to respond with a reminder email. Most authors responded (41/50; 82.0%) and 95.1% (39/41) provided additional information.

Data coding, outcome classification and selection

BCT coding

All training content extracted from peer-reviewed publications was compiled for coding, including any information about interventions delivered to staff in control groups. Other study outputs (e.g. websites) were not coded as access was variable between studies. Content was independently coded in duplicate by two reviewers for the presence and absence of BCTs using the BCT Taxonomy Version 1 (BCTTv1) [13]. Coders completed certified training in advance (available at www.bct-taxonomy.com). Only content that aimed to change staff behaviour within school hours and that specifically related to student activity behaviour was coded. Disagreements were resolved through discussion and by referring back to the BCTTv1 guidance (Cohen’s kappa = 0.70).

Assessing and classifying fidelity outcome(s)

To account for differences in fidelity measurement and reporting across studies, we established a structured process (see Additional file 2) to assess, calculate, and classify fidelity outcomes as high (80–100%), medium (50–79%), or low (0–49%) fidelity [25]. All fidelity data were classified by one reviewer. A second reviewer checked all fidelity classifications (low, medium, high); conflicts were resolved by discussion.
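
The three fidelity bands can be expressed as a short sketch. The cut-offs come from the text above. The function name is hypothetical, and treating 50 and 80 as lower bounds (so that a value such as 79.5% falls in the medium band) is an assumption, because the stated ranges do not say how fractional percentages were handled. The fuller process in Additional file 2 is not reproduced here.

```python
def classify_fidelity(percent_delivered):
    """Map a fidelity percentage (0-100) onto the review's three bands."""
    if not 0 <= percent_delivered <= 100:
        raise ValueError("Fidelity must be a percentage between 0 and 100.")
    if percent_delivered >= 80:
        return "high"
    if percent_delivered >= 50:  # assumption: 50 and 80 act as lower bounds
        return "medium"
    return "low"


# Hypothetical example: a teacher delivered 14 of 20 intended sessions.
band = classify_fidelity(100 * 14 / 20)
print(band)
```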

Selecting activity behaviour outcomes

A single reviewer extracted one physical activity and one sedentary behaviour outcome per study. Where more than one of either outcome was reported, we applied a hierarchy (see Additional file 3) to focus on outcomes closest to the review’s exposure of interest. Activity behaviours measured during periods in which teachers were present for the greatest proportion of that time were prioritised as follows: i) teacher period, ii) school hours, iii) weekdays, and iv) whole of week. Where multiple physical activity outcomes within one of these periods were reported, outcomes were prioritised as follows: i) time spent in moderate-to-vigorous physical activity, ii) total physical activity, iii) vigorous physical activity, iv) moderate physical activity, and v) light physical activity, based on evidence of their respective associations with health outcomes [26, 27]. Where multiple sedentary behaviour outcomes within one of these periods were reported, we prioritised time spent in any sedentary behaviour above other outcomes (e.g. number of breaks in sedentary time). Where multiple follow-up measures were reported, outcomes measured closest to the end of the student-targeted intervention were extracted.
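
As a rough illustration of the hierarchy described above, the sketch below picks one physical activity outcome per study by ranking first on measurement period and then on intensity category. The labels, dictionary keys and function name are hypothetical. Additional file 3 remains the authoritative description of the hierarchy.

```python
# Priority orders taken from the text; earlier entries are preferred.
PERIOD_PRIORITY = ["teacher period", "school hours", "weekdays", "whole week"]
PA_PRIORITY = ["MVPA", "total PA", "vigorous PA", "moderate PA", "light PA"]


def select_pa_outcome(outcomes):
    """Return the highest-priority outcome from a list of dicts.

    Each dict needs a 'period' and a 'measure' key using the labels above.
    Period is compared first; intensity only breaks ties within a period.
    """
    return min(
        outcomes,
        key=lambda o: (
            PERIOD_PRIORITY.index(o["period"]),
            PA_PRIORITY.index(o["measure"]),
        ),
    )


# Hypothetical study reporting three device-measured outcomes.
reported = [
    {"period": "weekdays", "measure": "MVPA"},
    {"period": "school hours", "measure": "light PA"},
    {"period": "school hours", "measure": "total PA"},
]
chosen = select_pa_outcome(reported)
print(chosen)
```

Here the school-hours outcomes outrank the weekday outcome even though the latter is MVPA, because the period closest to teacher contact takes precedence.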

Quality assessment

Quality assessment ratings of fidelity and activity behaviour outcomes were conducted independently by two reviewers using the Effective Public Health Practice Project (EPHPP) tool and dictionary [28, 29]. The EPHPP tool rates six individual domains: selection bias, study design, confounder bias, blinding, data collection methods, and withdrawals and drop out. Domain-specific ratings were used to calculate the global rating (‘strong’, ‘moderate’ or ‘weak’) according to the EPHPP dictionary. We piloted the EPHPP using a subsample of studies (n = 11 studies) to ensure consistency in interpretation of signalling questions between reviewers before starting the full set. Conflicts regarding global ratings were resolved through discussion (inter-rater agreement = 76.2 and 80.6% for fidelity and activity behaviour outcomes, respectively).

Data synthesis

All statistical analyses were conducted using Stata (version 16.1). To assess the relative effectiveness of BCTs on fidelity, promise ratios were calculated as the frequency of a BCT appearing in a promising intervention (defined as high/moderate fidelity) divided by its frequency of appearance in a non-promising intervention (low fidelity) [30]. BCTs had to be identified in at least two interventions reporting eligible fidelity data to be assessed. Where BCTs were only identified in promising interventions, the promise ratio was calculated as the frequency of a BCT appearing in a promising intervention divided by one [30]. BCTs were considered promising if their calculated promise ratio was ≥2. Chi-square and Wilcoxon rank-sum tests were performed to assess differences in other training features (total training time, use of theory, session number, training period, number of BCTs) between moderate/high and low fidelity studies. The level of statistical significance and confidence were set at 5 and 95%, respectively. Results are reported in accordance with the Synthesis Without Meta-analysis guidelines [31].
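
The promise ratio rules described above can be sketched as follows. The function names and example counts are hypothetical. The logic follows the text: a BCT needs to appear in at least two interventions with fidelity data, the denominator defaults to one when the BCT appears only in promising interventions, and a ratio of 2 or more is treated as promising.

```python
def promise_ratio(n_promising, n_non_promising):
    """Promise ratio for one BCT, or None if it is too rare to assess.

    n_promising: interventions with high/moderate fidelity that used the BCT.
    n_non_promising: interventions with low fidelity that used the BCT.
    """
    if n_promising + n_non_promising < 2:
        return None  # identified in fewer than two interventions
    # A BCT seen only in promising interventions is divided by one.
    denominator = n_non_promising if n_non_promising > 0 else 1
    return n_promising / denominator


def is_promising(ratio, threshold=2.0):
    """A BCT counts as promising when its ratio meets the threshold."""
    return ratio is not None and ratio >= threshold


# Hypothetical counts for three BCTs.
print(promise_ratio(6, 2), is_promising(promise_ratio(6, 2)))
print(promise_ratio(3, 0), is_promising(promise_ratio(3, 0)))
print(promise_ratio(3, 2), is_promising(promise_ratio(3, 2)))
```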

Meta-analysis

Intervention effects on physical activity and sedentary behaviour outcomes were analysed separately. Standardised mean differences (SMDs) were used to estimate effect sizes, and calculated based on the number, mean, and standard deviations (SDs) of treatment and control groups at baseline and follow-up. Additional file 4 outlines all formulae used to calculate SMDs and their standard errors (SEs) to perform random-effects meta-analyses. Where means and SDs were reported at a subgroup level (e.g. by sex), formulae outlined in the Cochrane handbook [32] were used to estimate outcomes at the unit of interest. Missing SDs were calculated from SEs, 95% confidence intervals (CIs), and t-distributions using formulae from the Cochrane handbook [32]. Where both SDs and means were missing, these were calculated using medians and interquartile ranges (IQRs) using Wan’s formulae [33, 34]. Studies that did not report on the mean and SD values of the same sample size at baseline and follow-up were excluded from analyses. Cohen thresholds were used to interpret SMDs as trivial (<0.2), small (≥0.2 to <0.5), moderate (≥0.5 to <0.8), and large (≥0.8) [35]. Random-effects meta-regressions were performed to explore variations in effect estimates for outcomes as a function of BCTs, total number of BCTs, total training time, number of training sessions, and training period. In line with previous reviews [36], only BCTs unique to treatment groups and those identified in at least four interventions were included in analyses. Statistical heterogeneity was assessed using forest plots, the tau-squared (τ²) value and its 95% prediction interval [37]. Publication bias was assessed by visual inspection of funnel plots and Egger’s test.
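
Two of the steps above lend themselves to a small sketch: recovering a missing SD for a single group mean, and labelling an SMD with the Cohen thresholds. The conversions use the general relations SD = SE × √n and, for a 95% CI under a large-sample normal approximation, SD = √n × (upper − lower) / 3.92. The exact formulae the authors applied are in Additional file 4 and are not reproduced here, small samples would need a t-distribution multiplier instead of 1.96, and interpreting the magnitude irrespective of sign is an assumption. All function names are hypothetical.

```python
import math


def sd_from_se(se, n):
    """SD of a single group from the standard error of its mean."""
    return se * math.sqrt(n)


def sd_from_ci(lower, upper, n, z=1.96):
    """SD of a single group from a CI around its mean.

    z=1.96 assumes a 95% CI and a large sample; for small samples a
    t-distribution value would replace it.
    """
    return math.sqrt(n) * (upper - lower) / (2 * z)


def interpret_smd(smd):
    """Label the magnitude of an SMD using the thresholds in the text."""
    size = abs(smd)  # assumption: thresholds apply to the absolute value
    if size < 0.2:
        return "trivial"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "moderate"
    return "large"


# Hypothetical group of 100 students with a mean CI of 8.04 to 11.96.
print(round(sd_from_ci(8.04, 11.96, 100), 2))
print(interpret_smd(0.44), interpret_smd(0.17))
```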

Results

Overview of studies included

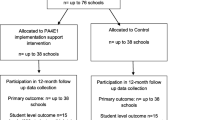

Figure 1 outlines the screening process, resulting in the inclusion of 51 individual studies. Further information about articles excluded during full-text screening is available in Additional file 5.

Studies originated from 19 countries, although 51% were from three countries (Australia: 19.6% [38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54], the United States: 15.7% [55,56,57,58,59,60,61,62,63] and the United Kingdom: 15.7% [64,65,66,67,68,69,70,71,72,73,74,75]). Most were conducted in primary school settings (n = 32, 62.8%). At baseline, the median number of schools and students per study was 14 (IQR: 9–24) and 779 (IQR: 361–1397), respectively. Fifty-three eligible training programmes were identified across 51 studies. Based on the percentage of studies with data reported, most programmes were delivered face-to-face (88.2%), in a group setting (60.5%), by research team members (65.3%) and underpinned by some theory or rationale (74.4%). The median training time was 7 hours (IQR: 2–14 hours). The median session count was 2 (IQR: 1–3). Full study details, including any theory or rationale used to inform training, are outlined in Additional file 6.

Use of BCTs in training programmes (RQ1)

Thirty-seven out of 93 possible unique BCTs were identified across 53 training programmes (see Table 3). The mean number of BCTs identified per treatment group was 5.1 (SD = 3.2; range = 1–15). Two BCTs were identified in at least 50% of treatment groups: ‘Instruction on how to perform the behaviour’ (98.1%) and ‘Social support (unspecified)’ (50.9%). We also identified BCTs in two control staff training programmes [61, 76]; ‘Instruction on how to perform the behaviour’ was coded in each of these.

Association with intervention fidelity (RQ2)

Thirty-five studies reported eligible fidelity data. Most (32/35; 91.4%) achieved a ‘weak’ overall quality assessment rating. Ten interventions (28.6%) were delivered with high fidelity, 18 with medium fidelity (51.4%) and seven with low fidelity (20.0%) (see Additional file 7 for domain ratings and fidelity classifications). Nineteen BCTs were associated with promising fidelity outcomes. The BCTs that held the highest promise ratio were ‘Adding objects to the environment’, ‘Feedback on behaviour’, ‘Demonstration of the behaviour’, ‘Behavioural practice/rehearsal’, and ‘Goal setting (behaviour)’. Eleven BCTs were unique to promising interventions (see Table 4).

Moderate/high fidelity studies were significantly more likely to include theory-informed and shorter training programmes than low fidelity studies (see Table 5). All other differences between training features and fidelity outcomes were non-significant.

Impact on student activity behaviour (RQ3)

Fifteen studies reported eligible physical activity data for inclusion in meta-analysis and 11 reported eligible sedentary behaviour data. Six studies (6/16 studies; 37.5%) achieved a ‘weak’ overall quality assessment rating, eight studies (50.0%) achieved a ‘moderate’ rating and two studies (12.5%) achieved a ‘strong’ rating (see Additional file 8 for domain ratings).

Physical activity

The median follow-up period for physical activity outcomes was 3 months (IQR: 6 weeks–8 months). The pooled effect size estimate was 0.44 (95% CI: 0.18, 0.71), indicating a significant positive intervention effect on students’ physical activity at follow-up (see Additional file 9). Heterogeneity between studies was substantial (τ² = 0.25; 95% prediction interval: −0.67, 1.56). Egger’s test indicated evidence of publication bias (p < 0.01) (see Additional file 9). Heterogeneity was largely driven by two studies [77, 78] that reported large effects and large adjusted SEs. When they were excluded from analyses, the pooled effect size estimate remained significant, 0.17 (95% CI: 0.02, 0.32), and Egger’s test did not indicate publication bias (p > 0.05) (see Additional file 9).

Meta-regressions were performed between BCTs eligible for analysis (n = 9), total number of BCTs, total training time, number of training sessions, and training period, and changes in physical activity outcomes from baseline to follow-up (Table 6). We found significant associations for the BCTs ‘Action planning’ and ‘Feedback on behaviour’, and total number of BCTs used (see Table 6). No other significant associations were identified.

Sedentary behaviour

The median follow-up period for sedentary behaviour outcomes was 4 months (IQR: 6 weeks–10 months). The pooled effect size estimate was 0.06 (95% CI: −0.40, 0.53), indicating no effect on students’ sedentary behaviour at follow-up (see Additional file 10). Heterogeneity between studies was substantial (τ² = 0.59; 95% prediction interval: −0.20, 0.36). Inspection of the funnel plot and Egger’s test did not indicate publication bias (p > 0.05; see Additional file 10). Meta-regressions between training features and changes in sedentary behaviour outcomes from baseline to follow-up showed no significant associations (see Table 7).

Discussion

This is the first systematic review to identify BCTs used in staff training programmes delivered within school-based intervention studies aimed at changing student activity behaviour. We identified 53 eligible training programmes and found evidence that 37 unique BCTs have been used to change teacher behaviour. We found evidence that 19 BCTs are positively associated with promising fidelity outcomes, and that moderate/high fidelity studies are more likely to include theory-based and shorter training programmes (≤6 months) than low fidelity studies. We also found training programmes that use more BCTs and those that use ‘Action planning’ and ‘Feedback on behaviour’ are associated with significant changes to students’ device-measured physical activity. We found no associations between training features and sedentary behaviour outcomes.

The mean number of BCTs identified per training programme suggests that few teacher-targeted BCTs have been used within school-based teacher-led activity behaviour interventions. The only frequently identified BCTs were ‘Instruction on how to perform the behaviour’ and ‘Social support (unspecified)’. The literature suggests that the use of these BCTs alone is unlikely to achieve or sustain professional change [14]. Certain well-evidenced BCTs were absent across studies. For example, a large body of research has highlighted the importance of providing teachers with tools to notice change in their students to promote professional change (e.g. [79]). Yet we identified ‘Feedback on outcome of the behaviour’ in just one training programme [50].

Many study authors reported that the training was underpinned by some rationale or theory, but the theory underpinning the intervention aimed at the student was often conflated with the theory underpinning the staff training (e.g. [38, 80]). In such instances, it was often unclear how the theory was used to inform the training. Few authors drew on relevant teacher professional development literature or theory to inform the design of programmes; this may help to explain the limited number of evidence-based BCTs identified across training programmes. Further, many authors provided no information (e.g. [59, 62]) or confirmed that the training was not informed by any theory or rationale (e.g. [63, 64, 81, 82]).

We found evidence to support an association between 19 BCTs and teacher fidelity. The most promising BCTs we identified were ‘Feedback on behaviour’, ‘Demonstration of the behaviour’, ‘Behavioural practice/rehearsal’, and ‘Goal setting (behaviour)’. Their use in future training programmes is supported by reviews examining causal components of effective teacher professional development for other school subjects (e.g. [14, 15, 83]). ‘Adding objects to the environment’ is less frequently cited within the literature. The objects provided (e.g. maths bingo tiles, sports equipment, signage, standing desks [53, 59, 84,85,86]) may have prompted teachers to implement the intervention on an ongoing basis. Further research is needed to determine how teaching resources and their placement within school settings may promote implementation. Consistent with findings from recent reviews (e.g. [15, 16, 87]), we found that training quality (i.e. theory-based training and use of evidence-based BCTs) rather than a longer training duration was associated with intervention fidelity.

We also found evidence to support the use of more BCTs and the use of ‘Action planning’ and ‘Feedback on behaviour’ in staff training to increase students’ physical activity. Conversely, we found no evidence to support an association between training features and sedentary behaviour outcomes. These findings may be explained by the small number of studies that observed significant intervention effects, that measured sedentary behaviour during teacher periods and that specifically targeted students’ sedentary behaviour. Interventions must not just be effective but also feasible for teachers to implement and sustain within their workload. Recent research has found that participants often receive more implementation support in pilot interventions than those participating in larger-scale trials of the same or similar interventions [88]. Hence, it is also possible that the interventions were not feasible for teachers to deliver. Finally, quality teaching indicators (e.g. [89]) have yet to be identified within the context of student physical activity and sedentary behaviour. The techniques teachers were requested to implement, even when delivered with fidelity, may have been ineffective in changing students’ activity behaviour.

Strengths and limitations of the review

We employed a comprehensive search to identify and extract data about staff training by using a standardised reporting checklist, searching across study publications and outputs, and contacting authors to overcome limitations of existing reviews that observed poor reporting practices [12]. We achieved a high response rate from study authors and few changes were made to our partially completed forms, suggesting that data about the teacher training programmes were reliably extracted. We overcame limitations associated with recent teacher professional development reviews for other subjects (e.g. [14, 15, 90]), by exploring training effects on both professional practice and student outcomes [90], and by examining data from largely pre-registered [14, 90] and medium-to-large-scale studies [15].

Eligible studies and outputs may have been missed. To reduce the likelihood of missing outputs, all authors were contacted and requested to add study publications not listed. Due to resource limitations, all data extraction was conducted by a single reviewer, which may have resulted in extraction errors. Further, while a structured process was used to classify fidelity data into outcomes, this was conducted by a single reviewer and solely checked by a second. Studies conducted in low and middle-income countries and not published in English are likely disproportionately excluded due to eligibility criteria and databases used. Researchers and practitioners should be cautious about applying the findings to settings and populations underrepresented in this review. Where authors reported fidelity outcomes at multiple time points (e.g. [45, 54, 56, 77]), we selected outcomes measured closest to the training start time. BCTs identified may hence promote short-term fidelity, and should be used alongside evidence-based BCTs that promote sustained professional change (e.g. ‘Habit formation’ [14]). Finally, effective training features that are beyond the scope of the BCTTv1 and TIDieR checklist may exist but were not explored in the current review.

Limitations of the underlying evidence

Most of the limitations associated with our findings relate to the quality of the evidence we reviewed. Consistent with previous reviews [12, 91, 92], we observed poor reporting on staff training across studies. Consequently, it is difficult to discern whether the BCTs identified reflect what was delivered in practice. In line with previous reviews [93], fidelity measures used across studies were methodologically weak. Many studies did not report on fidelity to all intervention components or at the individual level. The BCTs identified may therefore overestimate the extent to which their use can promote overall fidelity, and warrant testing across intervention components, teacher populations and school climates. We sought to include all quantitative fidelity data in our analyses to make the best use of available data [94], but had to exclude 30% of studies as outcomes were reported in isolation of any identifiable target with which we could interpret the data (e.g. [78, 95]). This reduced the number of studies on which we could base our findings.

Implications

In line with existing guidance [7], we recommend that researchers engage with discipline-specific experts and literature when designing and evaluating all intervention components. In order for the field to progress, complete and consistent reporting is needed to determine what interventions have been delivered to the various actors within activity behaviour intervention studies. Consistent and effective implementation of reporting guidelines is important for this, but at the time of paper submission, we found that out of 33 journals that published articles included here just one explicitly requested submission of reporting checklists for all intervention components. We have therefore written to journal editors to update their submission policies to require study authors to submit relevant reporting checklists (e.g. [17, 96]) that describe each of the interventions being implemented and/or assessed [97]. We also advise that study authors use machine-readable tools (e.g. [98]) from the protocol stage to avoid inconsistent reporting within and across study outputs. Finally, valid, reliable and acceptable fidelity measures are needed to determine how school-based interventions are being implemented in practice. Progress is needed to understand the level of support teachers require for effective implementation, components teachers are most likely to deliver, and practices causally related to student activity behaviour change.

Conclusion

This review advances our understanding of how school-based interventions have been implemented, and identifies specific, replicable techniques that can be incorporated into future programmes to promote intervention fidelity and increase student physical activity. Our findings suggest training programmes should be informed by relevant theory and literature and include a combination of BCTs that provide teachers with i) a demonstration of the desired behaviour, ii) an opportunity to practise the behaviour, iii) feedback on their performance of the behaviour, iv) a behavioural goal (self-defined or otherwise) and v) objects that facilitate and cue performance of the behaviour. Our findings also suggest teachers should be prompted to make a detailed action plan regarding their performance of the behaviour. We encourage researchers to incorporate BCTs that have been shown to promote sustained professional change for other school subjects, so that their effectiveness can be assessed within the context of physical activity and sedentary behaviour. Changes to reporting practices in the field will enable researchers in time to determine BCT combinations and features (e.g. frequency, sequence) that best predict desired outcomes for defined teacher and student populations.

Availability of data and materials

A summary of reviewed studies and their outputs is available in Additional file 6.

Abbreviations

- MRC: Medical Research Council
- BCT: Behaviour Change Technique
- SMD: Standardised Mean Difference
- TIDieR: Template for Intervention Description and Replication
- EPHPP: Effective Public Health Practice Project
- SD: Standard deviation
- SE: Standard error
- CI: Confidence interval
- IQR: Interquartile range

References

van de Kop JH, van Kernebeek WG, Otten RHJ, Toussaint HM, Verhoeff AP. School-based physical activity interventions in prevocational adolescents: a systematic review and meta-analyses. J Adolesc Health. 2019;65(2):185–94.

Owen MB, Curry WB, Kerner C, Newson L, Fairclough SJ. The effectiveness of school-based physical activity interventions for adolescent girls: a systematic review and meta-analysis. Prev Med. 2017;105:237–49.

Love R, Adams J, van Sluijs EMF. Are school-based physical activity interventions effective and equitable? A meta-analysis of cluster randomized controlled trials with accelerometer-assessed activity. Obes Rev. 2019;20(6):859–70.

Jones M, Defever E, Letsinger A, Steele J, Mackintosh KA. A mixed-studies systematic review and meta-analysis of school-based interventions to promote physical activity and/or reduce sedentary time in children. J Sport Health Sci. 2020;9(1):3–17.

Neil-Sztramko SE, Caldwell H, Dobbins M. School‐based physical activity programs for promoting physical activity and fitness in children and adolescents aged 6 to 18. Cochrane Database Syst Rev. 2021;(9). Art. No.: CD007651. https://doi.org/10.1002/14651858.CD007651.pub3.

PHE. What works in schools and colleges to increase physical activity? A resource for head teachers, college principals, staff working in education settings, school nurses, directors of public health, active partnerships and wider partners. 2020.

Skivington K, Matthews L, Simpson SA, Craig P, Baird J, Blazeby JM, et al. A new framework for developing and evaluating complex interventions: update of Medical Research Council guidance. BMJ. 2021;374:n2061.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Resaland GK, Aadland E, Nilsen AKO, Bartholomew JB, Andersen LB, Anderssen SA. The effect of a two-year school-based daily physical activity intervention on a clustered CVD risk factor score—the Sogndal school-intervention study. Scand J Med Sci Sports. 2018;28(3):1027–35.

Sutherland R, Reeves P, Campbell E, Lubans DR, Morgan PJ, Nathan N, et al. Cost effectiveness of a multi-component school-based physical activity intervention targeting adolescents: the ‘Physical Activity 4 Everyone’ cluster randomized trial. Int J Behav Nutr Phys Act. 2016;13(1):94.

Habib-Mourad C, Ghandour LA, Maliha C, Awada N, Dagher M, Hwalla N. Impact of a one-year school-based teacher-implemented nutrition and physical activity intervention: main findings and future recommendations. BMC Public Health. 2020;20(1):256.

Lander N, Eather N, Morgan PJ, Salmon J, Barnett LM. Characteristics of teacher training in school-based physical education interventions to improve fundamental movement skills and/or physical activity: a systematic review. Sports Med. 2017;47(1):135–61.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95.

Sims S, Fletcher-Wood H, O’Mara-Eves A, Cottingham S, Stansfield C, Van Herwegen J, Anders J. What are the Characteristics of Teacher Professional Development that Increase Pupil Achievement? A systematic review and meta-analysis. London: Education Endowment Foundation; 2021.

Basma B, Savage R. Teacher professional development and student literacy growth: a systematic review and meta-analysis. Educ Psychol Rev. 2018;30(2):457–81.

Kraft MA, Blazar D, Hogan D. The effect of teacher coaching on instruction and achievement: a meta-analysis of the causal evidence. Rev Educ Res. 2018;88(4):547–88.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Romanzini CLP, Romanzini M, Batista MB, Barbosa CCL, Shigaki GB, Dunton G, et al. Methodology used in ecological momentary assessment studies about sedentary behavior in children, adolescents, and adults: systematic review using the checklist for reporting ecological momentary assessment studies. J Med Internet Res. 2019;21(5):e11967.

Cumpston M, Chandler J. Chapter IV: Updating a review. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane. 2022. Available from www.training.cochrane.org/handbook.

van Sluijs EM, Ekelund U, Crochemore-Silva I, Guthold R, Ha A, Lubans D, et al. Physical activity behaviours in adolescence: current evidence and opportunities for intervention. Lancet. 2021;398(10298):429–42.

Caspersen CJ, Powell KE, Christenson GM. Physical activity, exercise, and physical fitness: definitions and distinctions for health-related research. Public Health Rep. 1985;100(2):126.

Tremblay MS, Aubert S, Barnes JD, Saunders TJ, Carson V, Latimer-Cheung AE, et al. Sedentary behavior research network (SBRN)–terminology consensus project process and outcome. Int J Behav Nutr Phys Act. 2017;14(1):1–17.

Page MJ, Moher D, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews. BMJ. 2021;372:n160.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Borrelli B. The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. J Public Health Dent. 2011;71:S52–63.

Fridolfsson J, Buck C, Hunsberger M, Baran J, Lauria F, Molnar D, et al. High-intensity activity is more strongly associated with metabolic health in children compared to sedentary time: a cross-sectional study of the I.Family cohort. Int J Behav Nutr Phys Act. 2021;18(1):1–13.

Kwon S, Andersen LB, Grøntved A, Kolle E, Cardon G, Davey R, et al. A closer look at the relationship among accelerometer-based physical activity metrics: ICAD pooled data. Int J Behav Nutr Phys Act. 2019;16(1):1–9.

Thomas B, Ciliska D, Dobbins M, Micucci S. A process for systematically reviewing the literature: providing the research evidence for public health nursing interventions. Worldviews Evid-Based Nurs. 2004;1(3):176–84.

Effective Public Health Practice Project. Quality assessment tool for quantitative studies. Hamilton: National Collaborating Centre for Methods and Tools, McMaster University; 1998.

Gardner B, Smith L, Lorencatto F, Hamer M, Biddle SJ. How to reduce sitting time? A review of behaviour change strategies used in sedentary behaviour reduction interventions among adults. Health Psychol Rev. 2016;10(1):89–112.

Campbell M, McKenzie JE, Sowden A, Katikireddi SV, Brennan SE, Ellis S, et al. Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. BMJ. 2020;368:l6890.

Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions. 2019. https://doi.org/10.1002/9781119536604.

Wan X, Wang W, Liu J, Tong T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med Res Methodol. 2014;14(1):135.

Weir CJ, Butcher I, Assi V, Lewis SC, Murray GD, Langhorne P, et al. Dealing with missing standard deviation and mean values in meta-analysis of continuous outcomes: a systematic review. BMC Med Res Methodol. 2018;18(1):1–14.

Cohen J. Statistical Power Analysis for the Behavioral Sciences (2nd ed.). Routledge. 1988. https://doi.org/10.4324/9780203771587.

Michie S, Abraham C, Whittington C, McAteer J, Gupta S. Effective techniques in healthy eating and physical activity interventions: a meta-regression. Health Psychol. 2009;28(6):690.

Borenstein M, Higgins JP, Hedges LV, Rothstein HR. Basics of meta-analysis: I2 is not an absolute measure of heterogeneity. Res Synth Methods. 2017;8(1):5–18.

Bundy A, Engelen L, Wyver S, Tranter P, Ragen J, Bauman A, et al. Sydney playground project: a cluster-randomized trial to increase physical activity, play, and social skills. J Sch Health. 2017;87(10):751–9.

Sutherland RL, Nathan NK, Lubans DR, Cohen K, Davies LJ, Desmet C, et al. An RCT to facilitate implementation of school practices known to increase physical activity. Am J Prev Med. 2017;53(6):818–28.

Riley N, Lubans DR, Holmes K, Morgan PJ. Findings from the EASY minds cluster randomized controlled trial: evaluation of a physical activity integration program for mathematics in primary schools. J Phys Act Health. 2016;13(2):198–206.

Okely AD, Lubans DR, Morgan PJ, Cotton W, Peralta L, Miller J, et al. Promoting physical activity among adolescent girls: the girls in sport group randomized trial. Int J Behav Nutr Phys Act. 2017;14(1):81.

Miller A, Christensen EM, Eather N, Sproule J, Annis-Brown L, Lubans DR. The PLUNGE randomized controlled trial: evaluation of a games-based physical activity professional learning program in primary school physical education. Prev Med. 2015;74:1–8.

Miller A, Eather N, Gray S, Sproule J, Williams C, Gore J, et al. Can continuing professional development utilizing a game-centred approach improve the quality of physical education teaching delivered by generalist primary school teachers? Eur Phys Educ Rev. 2017;23(2):171–95.

Lubans DR, Beauchamp MR, Diallo TMO, Peralta LR, Bennie A, White RL, et al. School physical activity intervention effect on adolescents’ performance in mathematics. Med Sci Sports Exerc. 2018;50(12):2442–50.

Lubans DR, Smith JJ, Plotnikoff RC, Dally KA, Okely AD, Salmon J, et al. Assessing the sustained impact of a school-based obesity prevention program for adolescent boys: the ATLAS cluster randomized controlled trial. Int J Behav Nutr Phys Act. 2016;13:92.

Lonsdale C, Lester A, Owen KB, White RL, Peralta L, Kirwan M, et al. An internet-supported school physical activity intervention in low socioeconomic status communities: results from the Activity and Motivation in Physical Education (AMPED) cluster randomised controlled trial. Br J Sports Med. 2019;53(6):341–7.

Kennedy SG, Peralta LR, Lubans DR, Foweather L, Smith JJ. Implementing a school-based physical activity program: process evaluation and impact on teachers’ confidence, perceived barriers and self-perceptions. Phys Educ Sport Pedagogy. 2019;24(3):233–48.

Kennedy SG, Smith JJ, Morgan PJ, Peralta LR, Hilland TA, Eather N, et al. Implementing resistance training in secondary schools: a cluster randomized controlled trial. Med Sci Sports Exerc. 2018;50(1):62–72.

Kelly SA, Oswalt K, Melnyk BM, Jacobson D. Comparison of intervention fidelity between COPE TEEN and an attention-control program in a randomized controlled trial. Health Educ Res. 2015;30(2):233–47.

Hollis JL, Sutherland R, Campbell L, Morgan PJ, Lubans DR, Nathan N, et al. Effects of a ‘school-based’ physical activity intervention on adiposity in adolescents from economically disadvantaged communities: secondary outcomes of the ‘Physical Activity 4 Everyone’ RCT. Int J Obes. 2016;40(10):1486–93.

Sutherland R, Campbell E, Lubans DR, Morgan PJ, Okely AD, Nathan N, et al. ‘Physical Activity 4 Everyone’ school-based intervention to prevent decline in adolescent physical activity levels: 12 month (mid-intervention) report on a cluster randomised trial. Br J Sports Med. 2016;50(8):488–95.

Sutherland RL, Campbell EM, Lubans DR, Morgan PJ, Nathan NK, Wolfenden L, et al. The physical activity 4 everyone cluster randomized trial: 2-year outcomes of a school physical activity intervention among adolescents. Am J Prev Med. 2016;51(2):195–205.

Cohen KE, Morgan PJ, Plotnikoff RC, Callister R, Lubans DR. Physical activity and skills intervention: SCORES cluster randomized controlled trial. Med Sci Sports Exerc. 2015;47(4):765–74.

Cohen KE, Morgan PJ, Plotnikoff RC, Hulteen RM, Lubans DR. Psychological, social and physical environmental mediators of the SCORES intervention on physical activity among children living in low-income communities. Psychol Sport Exerc. 2017;32:1–11.

Gray HL, Contento IR, Koch PA. Linking implementation process to intervention outcomes in a middle school obesity prevention curriculum, ‘choice, control and change’. Health Educ Res. 2015;30(2):248–61.

Hillman CH, Greene JL, Hansen DM, Gibson CA, Sullivan DK, Poggio J, et al. Physical activity and academic achievement across the curriculum: results from a 3-year cluster-randomized trial. Prev Med. 2017;99:140–5.

Szabo-Reed AN, Willis EA, Lee J, Hillman CH, Washburn RA, Donnelly JE. Impact of three years of classroom physical activity bouts on time-on-task behavior. Med Sci Sports Exerc. 2017;49(11):2343–50.

Szabo-Reed AN, Willis EA, Lee J, Hillman CH, Washburn RA, Donnelly JE. The influence of classroom physical activity participation and time on task on academic achievement. Transl J Am Coll Sports Med. 2019;4(12):84–95.

Hodges MG, Hodges Kulinna P, van der Mars H, Chong L. Knowledge in action: fitness lesson segments that teach health-related fitness in elementary physical education. J Teach Phys Educ. 2016;35(1):16–26.

O'Neill JM, Clark JK, Jones JA. Promoting fitness and safety in elementary students: a randomized control study of the Michigan model for health. J Sch Health. 2016;86(7):516–25.

Patrick Abi N, Hilberg E, Schuna JM Jr, John DH, Gunter KB. Association of teacher-level factors with implementation of classroom-based physical activity breaks. J Sch Health. 2019;89(6):435–43.

Seibert T, Allen DB, Eickhoff JC, Carrel AL. US Centers for Disease Control and Prevention-based physical activity recommendations do not improve fitness in real-world settings. J Sch Health. 2019;89(3):159–64.

Wright CM, Chomitz VR, Duquesnay PJ, Amin SA, Economos CD, Sacheck JM. The FLEX study school-based physical activity programs - measurement and evaluation of implementation. BMC Public Health. 2019;19(1):73.

Adab P, Barrett T, Bhopal R, Cade JE, Canaway A, Cheng KK, et al. The west midlands active lifestyle and healthy eating in school children (Waves) study: a cluster randomised controlled trial testing the clinical effectiveness and cost-effectiveness of a multifaceted obesity prevention intervention programme targeted at children aged 6-7 years. Health Technol Assess. 2018;22(8):1–644.

Adab P, Pallan MJ, Lancashire ER, Hemming K, Frew E, Barrett T, et al. Effectiveness of a childhood obesity prevention programme delivered through schools, targeting 6 and 7 year olds: cluster randomised controlled trial (WAVES study). BMJ. 2018;360:k211.

Griffin TL, Clarke JL, Lancashire ER, Pallan MJ, Adab P. Process evaluation results of a cluster randomised controlled childhood obesity prevention trial: the WAVES study. BMC Public Health. 2017;17(1):681.

Drummy C, Murtagh EM, McKee DP, Breslin G, Davison GW, Murphy MH. The effect of a classroom activity break on physical activity levels and adiposity in primary school children. J Paediatr Child Health. 2016;52(7):745–9.

Harrington DM, Davies MJ, Bodicoat DH, Charles JM, Chudasama YV, Gorely T, et al. Effectiveness of the ‘Girls Active’ school-based physical activity programme: a cluster randomised controlled trial. Int J Behav Nutr Phys Act. 2018;15(1):40.

Morris JL, Daly-Smith A, Defeyter MA, McKenna J, Zwolinsky S, Lloyd S, et al. A pedometer-based physically active learning intervention: the importance of using preintervention physical activity categories to assess effectiveness. Pediatr Exerc Sci. 2019;31(3):356–62.

Norris E, Dunsmuir S, Duke-Williams O, Stamatakis E, Shelton N. Physically active lessons improve lesson activity and on-task behavior: a cluster-randomized controlled trial of the “Virtual Traveller” intervention. Health Educ Behav. 2018;45(6):945–56.

Norris E, Dunsmuir S, Duke-Williams O, Stamatakis E, Shelton N. Mixed method evaluation of the Virtual Traveller physically active lesson intervention: an analysis using the RE-AIM framework. Eval Program Plann. 2018;70:107–14.

Robertson J, Macvean A, Fawkner S, Baker G, Jepson RG. Savouring our mistakes: learning from the FitQuest project. Int J Child-Comput Interact. 2018;16:55–67.

Tymms PB, Curtis SE, Routen AC, Thomson KH, Bolden DS, Bock S, et al. Clustered randomised controlled trial of two education interventions designed to increase physical activity and well-being of secondary school students: the MOVE project. BMJ Open. 2016;6(1):e009318.

Anderson EL, Howe LD, Kipping RR, Campbell R, Jago R, Noble SM, et al. Long-term effects of the Active for Life Year 5 (AFLY5) school-based cluster-randomised controlled trial. BMJ Open. 2016;6(11):e010957.

Campbell R, Rawlins E, Wells S, Kipping RR, Chittleborough CR, Peters TJ, et al. Intervention fidelity in a school-based diet and physical activity intervention in the UK: Active for Life Year 5. Int J Behav Nutr Phys Act. 2015;12(1):141.

Escriva-Boulley G, Tessier D, Ntoumanis N, Sarrazin P. Need-supportive professional development in elementary school physical education: effects of a cluster-randomized control trial on teachers’ motivating style and student physical activity. Sport Exerc Perform Psychol. 2018;7(2):218–34.

Janssen M, Twisk JW, Toussaint HM, van Mechelen W, Verhagen EA. Effectiveness of the PLAYgrounds programme on PA levels during recess in 6-year-old to 12-year-old children. Br J Sports Med. 2015;49(4):259–64.

Zhou Z, Li S, Yin J, Fu Q, Ren H, Jin T, et al. Impact on physical fitness of the Chinese CHAMPS: a clustered randomized controlled trial. Int J Environ Res Public Health. 2019;16(22):4412.

Gaudin C, Chaliès S. Video viewing in teacher education and professional development: a literature review. Educ Res Rev. 2015;16:41–67.

Belton S, McCarren A, McGrane B, Powell D, Issartel J. The youth-physical activity towards health (Y-PATH) intervention: results of a 24 month cluster randomised controlled trial. PLoS One. 2019;14(9):e0221684.

Tarp J, Domazet SL, Froberg K, Hillman CH, Andersen LB, Bugge A. Effectiveness of a school-based physical activity intervention on cognitive performance in Danish adolescents: LCoMotion-learning, cognition and motion - a cluster randomized controlled trial. PLoS One. 2016;11(6):e0158087.

Aittasalo M, Jussila AM, Tokola K, Sievanen H, Vaha-Ypya H, Vasankari T. Kids Out; evaluation of a brief multimodal cluster randomized intervention integrated in health education lessons to increase physical activity and reduce sedentary behavior among eighth graders. BMC Public Health. 2019;19(1):415.

Cordingley P, Higgins S, Greany T, Buckler N, Coles-Jordan D, Crisp B, et al. Developing great teaching: lessons from the international reviews into effective professional development; 2015.

Aadland KN, Ommundsen Y, Anderssen SA, Bronnick KS, Moe VF, Resaland GK, et al. Effects of the Active Smarter Kids (ASK) physical activity school-based intervention on executive functions: a cluster-randomized controlled trial. Scand J Educ Res. 2019;63(2):214–28.

Ha AS, Lonsdale C, Lubans DR, Ng JYY. Increasing students’ activity in physical education: results of the self-determined exercise and learning for FITness trial. Med Sci Sports Exerc. 2020;52(3):696–704.

Koykka K, Absetz P, Araujo-Soares V, Knittle K, Sniehotta FF, Hankonen N. Combining the reasoned action approach and habit formation to reduce sitting time in classrooms: outcome and process evaluation of the let’s move it teacher intervention. J Exp Soc Psychol. 2019;81:27–38.

Lynch K, Hill HC, Gonzalez KE, Pollard C. Strengthening the research base that informs STEM instructional improvement efforts: a meta-analysis. Educ Eval Policy Anal. 2019;41(3):260–93.

von Klinggraeff L, Dugger R, Okely AD, Lubans D, Jago R, Burkart S, et al. Early-stage studies to larger-scale trials: investigators’ perspectives on scaling-up childhood obesity interventions. Pilot Feasibility Stud. 2022;8(1):31.

Gore JM, Miller A, Fray L, Harris J, Prieto E. Improving student achievement through professional development: results from a randomised controlled trial of quality teaching rounds. Teach Teach Educ. 2021;101:103297.

Darling-Hammond L, Hyler ME, Gardner M. Effective teacher professional development; 2017.

Jacob CM, Hardy-Johnson PL, Inskip HM, Morris T, Parsons CM, Barrett M, et al. A systematic review and meta-analysis of school-based interventions with health education to reduce body mass index in adolescents aged 10 to 19 years. Int J Behav Nutr Phys Act. 2021;18(1):1–22.

Peralta LR, Werkhoven T, Cotton WG, Dudley DA. Professional development for elementary school teachers in nutrition education: a content synthesis of 23 initiatives. Health Behav Policy Rev. 2020;7(5):374–96.

Schaap R, Bessems K, Otten R, Kremers S, van Nassau F. Measuring implementation fidelity of school-based obesity prevention programmes: a systematic review. Int J Behav Nutr Phys Act. 2018;15(1):1–14.

Ogilvie D, Bauman A, Foley L, Guell C, Humphreys D, Panter J. Making sense of the evidence in population health intervention research: building a dry stone wall. BMJ Glob Health. 2020;5(12):e004017.

Verloigne M, Ridgers ND, De Bourdeaudhuij I, Cardon G. Effect and process evaluation of implementing standing desks in primary and secondary schools in Belgium: a cluster-randomised controlled trial. Int J Behav Nutr Phys Act. 2018;15(1):94.

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. 2017;356:i6795.

Ryan M, Hoffmann T, Hofmann R, van Sluijs E. Incomplete reporting of interventions in school-based research: a call to journal editors to review their submission guidelines. Under review; 2022.

West R. An online Paper Authoring Tool (PAT) to improve reporting of, and synthesis of evidence from, trials in behavioral sciences. Health Psychol. 2020;39(9):846.

Acknowledgements

The authors would like to thank Dr. Veronica Phillips (School of Clinical Medicine, University of Cambridge) for her assistance in developing the search strategy and Stephen Sharp (MRC Epidemiology Unit, University of Cambridge) for his statistical advice. We would also like to thank all corresponding authors of articles that provided additional information.

Funding

This work was supported by the Economic and Social Research Council [grant number ES/P000738/1], the NIHR School of Public Health Research [grant number: SJAI/126 RG88936], the University of Cambridge, and the Medical Research Council [grant number: MC_UU_00006/5]. The funders had no role in designing the review, analysing the data or in writing the manuscript.

Author information

Contributions

MR, RH and EvS designed and planned the review. MR, OA and EvS screened all articles. MR extracted all data, and MR and OA conducted all BCT coding. MR and EI conducted quality assessment ratings, and assessed and classified fidelity outcomes. MR conducted analyses, with input from JL and EvS. MR drafted the manuscript and all authors contributed to the review and revision of the final paper. All authors have approved the manuscript and provided consent for publication.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Search terms and records identified.

Additional file 2. Structured process to classify fidelity outcomes.

Additional file 3. Hierarchies used to select activity behaviour outcomes.

Additional file 4. Formulae used for meta-analyses of physical activity and sedentary behaviour outcomes.

Additional file 5. Publications excluded with reasons at stages 1 and 2 of full-text screening.

Additional file 6. Table of descriptive characteristics of studies (n = 51) included in systematic review of school-based activity behaviour interventions.

Additional file 7. Quality assessment ratings and classification results for fidelity outcomes.

Additional file 8. Quality assessment ratings for activity behaviour outcomes (physical activity and sedentary behaviour combined).

Additional file 9. Forest and funnel plots for physical activity outcomes.

Additional file 10. Forest plots and funnel plots for sedentary behaviour outcomes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Ryan, M., Alliott, O., Ikeda, E. et al. Features of effective staff training programmes within school-based interventions targeting student activity behaviour: a systematic review and meta-analysis. Int J Behav Nutr Phys Act 19, 125 (2022). https://doi.org/10.1186/s12966-022-01361-6
