Abstract

Aim

The COVID-19 pandemic has led to the extensive practice of online learning. Our main objective was to compare different online synchronous interactive learning activities by evaluating students’ perceptions of them. We also aimed to identify factors influencing their perceptions in these classes.

Methods

A cross-sectional, questionnaire-based study focusing on clinical year medical students’ perceptions and feedback was conducted between February 2021 and June 2021 at the University of Hong Kong. Online learning activities were divided into bedside teaching, practical skill sessions, problem-based learning (PBL) or tutorials, and lectures. A questionnaire based on the Dundee Ready Education Environment Measure (DREEM) was distributed to 716 clinical year students to document their perceptions.

Results

A total of 110 responses were received, giving a response rate of 15.4% (110/716). These included 96 responses on bedside teaching, 67 on practical skill sessions, 104 on PBL/tutorial, and 101 on lectures.

For the mean score of the DREEM-extracted questionnaire, online PBL/tutorial scored the highest (2.72 ± 0.54) and online bedside teaching the lowest (2.38 ± 0.68, p = 0.001). There was no significant difference between school years (p = 0.39), ages (p = 0.37), genders (p = 1.00), years of internet experience (<17 vs ≥17 years, p = 0.59), or prior online class experience (p = 0.62).

When asked to compare online with face-to-face classes, students showed a higher preference for online PBL/tutorial (2.06 ± 0.75) and lectures (2.27 ± 0.81). Distraction remained a significant problem across all four learning activities.

A multivariate analysis compared students’ reported behavior with their perception scores on the DREEM-extracted questionnaire. Good audio and video quality was significantly and positively correlated with perception of online bedside teaching, practical skill sessions, and PBL/tutorial, and switching on the video camera was correlated with higher perception scores for lectures.

Conclusion

The present analysis has demonstrated that students’ perception of different online synchronous interactive learning activities varies. Further investigations are required on minimizing distraction during online classes.


Introduction

The spread of the SARS-CoV-2 virus worldwide led to a global pandemic in 2020 [1]. Restricted mobility and social distancing were widely advocated to minimize the spread of the disease [2]. Clinical areas carry the highest risk of infection: the 2003 severe acute respiratory syndrome (SARS) outbreak taught us a hard lesson when a group of medical students acquired the disease after a clinical visit to a patient who was later confirmed to be infected [3].

However, the clinical attachment years are among the most crucial periods for medical students. Under normal circumstances, the hospital provides a comprehensive learning environment: by following ward rounds and various work routines with their tutors, students learn through role-modeling; by shouldering some work in the ward, they learn through apprenticeship; by participating in various operations, they learn through deliberate practice; and through case discussions with clinicians, they learn through scaffolding [4].

In the previous decade, e-learning has been advocated to eliminate geographical constraints and allow multimedia use. With technological advances and improved internet setup, synchronous high-quality live stream teaching has become feasible.

Several recently published short reports have described new teaching tools [5], modifications to the curriculum [6, 7], and unique arrangements in teaching format [8] during this pandemic. However, understanding of their effectiveness, drawbacks, and student feedback remains limited. Moreover, this is the first time online teaching has been applied to all learning activities, and a comparison between these different modes of education is crucial but lacking.

Students may perceive the various online synchronous interactive activities differently, so the strengths, weaknesses, and limitations of each module are best addressed individually to provide a more in-depth understanding. After around a year of adoption, most online learning activities had been optimized, making this a suitable time to evaluate students’ perceptions and satisfaction in order to improve the current teaching system.

The main objective of the current study was to assess medical students’ perceptions of online synchronous interactive teaching in the clinical years during the COVID-19 pandemic. We also aimed to identify factors influencing their perception of these activities. This information is intended to guide recommendations for future online classes.

Methods

The current study is a cross-sectional, questionnaire-based study focusing on clinical year medical students’ perceptions and feedback among various online synchronous interactive learning activities.

Learning activities

We divided the online synchronous learning activities into four major types – bedside teaching, where authentic patients were involved; practical skill sessions, where clinical skills, such as suturing, catheter insertion, and clinical examinations, were taught; small group tutorials or problem-based learning; and lectures. Different modes of online synchronous learning activities were evaluated separately.

Various adaptations were implemented at the University of Hong Kong. “Webside teaching”, coined by Tsang et al. [9], uses interactive video-conferencing with patients through high-definition video cameras and microphones. Co et al. [10] used a multi-camera setup, including one camera focused on the tutor’s hand movements, for basic surgical skill classes. In ophthalmology, Shih et al. [11] introduced video-based and written materials to precede and complement small group tutorials held on the Zoom platform.

Questionnaire design

The first part explored students’ backgrounds, including their experience of internet use and previous online learning experience (Additional file 1: Appendix 1).

The second to fourth parts of the questionnaire were answered separately for each of the four modes of online teaching. The second part documented the situation and circumstances during class, followed by questions on the student’s behavior during class rated on a Likert scale ranging from “strongly disagree” to “strongly agree”. Ratings were converted into marks, from zero for “strongly disagree” to four for “strongly agree”.
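The conversion of Likert ratings into marks can be illustrated with a minimal Python sketch. It is not the study’s actual procedure (data were processed in Excel and SPSS). Only the end points (“strongly disagree” = 0, “strongly agree” = 4) come from the text; the intermediate labels “disagree”, “unsure”, and “agree”, their marks, and the helper names `to_mark` and `item_mean` are hypothetical.

```python
# Hypothetical sketch of Likert-to-mark conversion.
# Only the end points (0 and 4) are stated in the study; the intermediate
# labels and marks below are assumptions.
LIKERT_MARKS = {
    "strongly disagree": 0,
    "disagree": 1,       # assumed
    "unsure": 2,         # assumed
    "agree": 3,          # assumed
    "strongly agree": 4,
}


def to_mark(response: str) -> int:
    """Map one Likert label to its mark, ignoring case and surrounding spaces."""
    return LIKERT_MARKS[response.strip().lower()]


def item_mean(responses: list[str]) -> float:
    """Mean mark of a single questionnaire item across respondents."""
    marks = [to_mark(r) for r in responses]
    return sum(marks) / len(marks)


if __name__ == "__main__":
    # Toy responses for one item; not study data.
    example = ["agree", "Strongly agree", "unsure", "disagree"]
    print(item_mean(example))  # (3 + 4 + 2 + 1) / 4 = 2.5
```

Keeping the mapping in a single dictionary makes the assumed intermediate marks easy to inspect and change.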

In the third part, the focus was on students’ perceptions of their learning, which have been shown to correlate with academic performance, learning pleasure, and propensity to achieve learning outcomes [12]. This part of the questionnaire was extracted from the well-validated Dundee Ready Education Environment Measure (DREEM) for evaluating medical education environments [13, 14]. The original DREEM questionnaire consists of 50 questions divided into five aspects: Students’ perceptions of learning (POL) (12 items); Students’ perceptions of teachers (POT) (11 items); Students’ academic self-perceptions (ASP) (8 items); Students’ perceptions of atmosphere (POA) (12 items); and Students’ social self-perceptions (SSP) (7 items) [13]. After reviewing the original questionnaire, our research team agreed on 17 of the most relevant questions to keep the questionnaire concise yet representative: five questions in the POL category, three in the POT category, three in the ASP category, and seven in the POA category. No question from the SSP category was chosen, as this area is less relevant to the learning process we were focusing on. This arrangement provides a broader range of feedback from our students and offers more insight than a previous study by Dost et al. [15]. The mean score was calculated to compare individual items. Items with a mean score of 3.5 or above are regarded as exceptionally strong areas, items with a mean score of 2.0 or below need particular attention, and items with mean scores between 2.0 and 3.0 are areas that could be improved [16].
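The interpretation bands for item mean scores can be expressed as a small classification function. This is a hypothetical sketch: the function name and the treatment of the boundaries (3.5 and 2.0 inclusive, 3.0 counted as “could be improved”) are assumptions, and means between 3.0 and 3.5 are returned as unclassified because no band is stated for them. The example values are item means reported in the Results of this article.

```python
def interpret_item(mean_score: float) -> str:
    """Classify a DREEM item mean score into the bands described in the text.

    Hypothetical helper; boundary inclusiveness is an assumption.
    """
    if mean_score >= 3.5:
        return "exceptionally strong"
    if mean_score <= 2.0:
        return "needs particular attention"
    if mean_score <= 3.0:
        return "could be improved"
    # 3.0 < mean < 3.5: the text assigns no band to this range.
    return "unclassified"


if __name__ == "__main__":
    # Item means reported in the Results (Table 1).
    for score in (1.61, 1.87, 2.06, 2.97, 3.05):
        print(score, interpret_item(score))
```

Returning an explicit “unclassified” label avoids silently forcing scores such as 3.05 into a band the cited guidance does not define.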

Because the study had no control group, the fourth part asked students to compare online and face-to-face activities. The last part consisted of two open-ended questions about their online experience and recommendations.

Study period

The study period began approximately one year after the first confirmed COVID-19 case in Hong Kong [17]. The study was conducted prospectively between February 2021 and June 2021 at the Li Ka Shing Faculty of Medicine, University of Hong Kong.

Participants and ethical considerations

The subjects were medical students in their clinical years (716 students in years 4–6). A comprehensive list of their official email addresses was obtained from the faculty office, and an open invitation was sent to these addresses. The survey was created using Jotform (https://form.jotform.com/210532006871446), an online survey software (2021, San Francisco, US [18]). All data collected were non-identifiable and used for research purposes only. A mandatory consent checkbox was provided at the beginning of the survey, on the first page of both the online and paper formats, ensuring a 100% consent rate among respondents. Students were also invited to participate after class to increase the response rate. This was done only after performance rating to ensure genuinely voluntary participation, and teachers confirmed that students had not already participated in the study before distributing the invitation link. The current study was approved by the Faculty Research Ethics Committee of the Faculty of Education.

Data analysis

Data were extracted into Excel (Excel V.16.29, 2019) from Jotform questionnaires. Then they were re-coded and transferred into the statistical package for social sciences SPSS version 22.0.0 (IBM SPSS, IBM Corporation, Armonk, NY). The demographic data and their perceptions were described.

To facilitate subsequent comparison, students were further categorized into two groups based on their answers (yes, neutral, or no) about their behavior and experience during class. Descriptive statistics (percentages, means, and standard errors of the mean) were used to describe the quantitative variables, and analysis of variance (ANOVA) was used to compare the mean scores between the four principal learning activities. Within each learning activity, Student’s t-test was used to test for differences in perception between groups defined by factors potentially influencing it. Multivariate logistic regression analysis was then performed to identify correlations between these factors and the scores and to calculate odds ratios. Results were considered statistically significant when p < 0.05.
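To make the ANOVA comparison of mean scores across activities concrete, the following minimal sketch computes the one-way ANOVA F statistic in plain Python. It is an illustration only: the authors used SPSS, and the function name `one_way_anova_f` and the toy scores are hypothetical, not study data. The p-value is obtained from the F distribution with (df_between, df_within) degrees of freedom, which the Python standard library does not provide.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Hypothetical helper; each inner list holds the scores of one group
    (for example, one learning activity).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Toy scores for three hypothetical groups; not study data.
    toy = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
    print(one_way_anova_f(toy))  # F = 3.0 with (2, 6) degrees of freedom
```

With SciPy installed, `scipy.stats.f_oneway(*groups)` returns the same F statistic together with its p-value.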

For the open-ended questions, results were summarized to reflect their general impression. Insightful comments, concerns, or suggestions were highlighted.

Results

Cohort demographics

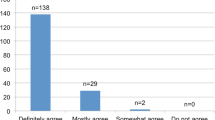

During the study period, 110 replies were received from the 716 clinical year medical students, a response rate of 15.4% (110/716). Of the 110 respondents, 45.5% (n = 50) were women and 54.5% (n = 60) were men. 41.8% (n = 46) were in the fourth year (the first year of their three-year clinical clerkship), 30.0% (n = 33) in the fifth year, and 28.2% (n = 31) in the sixth year (Fig. 1). The mean age of respondents was 23.0 years (range 21–30), and the mean duration of internet use was 15.9 years (range 10–23). Eighty-nine of them (80.9%) had online learning experience before the COVID-19 pandemic.

Regarding the types of lesson they experienced, most had had online bedside teaching (89.1%, n = 98). Fewer students had online practical skill lessons during this period (61.8%, 68/110), and almost all had online PBL and tutorials (96.4%, n = 106) and lectures (98.2%, n = 108) (Fig. 2). This makes up a total of 368 valid responses across all four learning activities combined.

Students’ behavior and perception during class, the DREEM-extracted questionnaire, and their preference toward online classes

Learning circumstances and students’ behavior during class

Regarding their learning circumstances, 44.8% (165/368) reported spending less than two hours on online classes in the previous four weeks. The vast majority (89.1%, 328/368) attended the lessons at home, and 89.9% (331/368) used a computer for the class. Regarding behavior, most were muted (79.6%, 293/368), but 63.6% (234/368) switched on their video during class. The technical aspects appeared satisfactory: 76.9% (283/368) agreed that the audio and video were good, and 83.4% (307/368) agreed that the material was clearly shown. The software was reported to be easy to use by 87.5% (332/368).

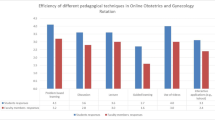

Overall mean score from the DREEM-extracted questionnaire

The overall mean scores of the DREEM-extracted questionnaire for the four learning activities are summarised in Fig. 3. The mean score was highest for the PBL/tutorial group and lowest for the bedside group (p = 0.001). There was no significant difference between school years (p = 0.39), ages (p = 0.37), genders (p = 1.00), years of internet experience (<17 vs ≥17 years, p = 0.59), or prior online class experience (p = 0.62).

The detailed results for the DREEM-extracted questionnaire are laid out in Table 1. Two items had a mean score below 2.0: question 1 in the online bedside teaching group (1.61 ± 0.81, in bold), which was also the lowest-scoring item, and question 14 in the practical skills session group (1.87 ± 0.92, in bold). For the overall mean score, question 14 was the lowest-scoring item among the 14 questions (2.06 ± 0.99, row No. 7). We could not identify any modifiable factors contributing to the low score. On the other hand, question 7 scored the highest (2.97 ± 0.75, row No. 14), with two subgroups scoring above 3 (bedside teaching group, mean score 3.02 ± 0.78; PBL/tutorial group, mean score 3.05 ± 0.73, both underlined).

Comparison between online and face-to-face classes

Students were asked to compare online classes with their face-to-face counterparts (Table 2). In all four aspects of the comparison, online bedside teaching was rated inferior to face-to-face teaching, with every item scoring below 2.0, indicating that students preferred face-to-face classes for this activity. In contrast, online PBL/tutorial and lectures were the most preferred, both in Table 2, row No. 4, and in the overall mean score for this section (Table 2, row No. 5). Students indicated that they were prone to distraction in all four learning activities (Table 2, in bold), most severely in the bedside teaching group. None of the items scored above 3.0, and the best mean score was achieved by the online lecture group, which was significantly higher than the others (2.27 ± 0.81, p < 0.001).

Evaluation of individual learning activity

We evaluated students’ behavior in each learning activity and compared it with the overall mean of the DREEM-extracted questionnaire scores and with their preference for online classes. Demographic factors showed no correlation with scores in any of the four activities. For the online practical skills session, students who used smartphones/tablets for the class and students who spent more than two hours on online classes in the past four weeks gave higher scores in this part of the questionnaire (Table 3). Such differences were not demonstrated in the other learning activities.

Regarding students’ behavior and their DREEM-extracted questionnaire scores, the multivariate analysis showed that good audio and video quality was significantly and positively correlated with perception of online bedside teaching, practical skill sessions, and PBL/tutorial, and that switching on the video camera was correlated with higher perception scores for lectures (Table 4). In the comparison with face-to-face classes, being muted most of the time was negatively correlated with preference for online PBL/tutorial, whereas having the video switched on most of the time was positively correlated with preference for online learning (Table 5).

Response to the open-ended questions

Online bedside teaching, which is new in our faculty, attracted the most feedback of the four activities, with 42 of the 96 respondents (43.8%) leaving comments. Many left only a brief comment, and positive and negative comments were equally common (12/42, 28.6% each). Some students expressed frustration with online bedside teaching, especially regarding student-patient interaction and the lack of physical contact. Student A: “… It lacks the human touch and interactions, making learning less fun….” Student B: “…I still feel incompetent in detecting signs….” On the positive side, some students expressed support and appreciation for their teachers’ efforts, especially during the pandemic with tight social restrictions. Respondents provided twenty-three suggestions. Thirteen (13/23, 56.5%) preferred face-to-face over online teaching, two (2/23, 8.7%) preferred online teaching and described it as “time-efficient”, and three (3/23, 13.0%) preferred a hybrid approach.

There were notably fewer responses for the online practical skill session: only eleven of the 68 respondents (16.2%) provided comments. Three (3/11, 27.3%) mentioned that online classes could not replace face-to-face classes in this category because of the need for practice and feedback. Only two detailed comments were positive. One mentioned that the format was suitable for learning about investigations and for discussions, such as of electrocardiograms and chest X-rays. The other said that it provided a better view than face-to-face teaching: “I can see better (not standing too distant away from the tutor, and the tutor does not have to shout for us to hear).”

Twenty-eight responses were received for the online PBL/tutorial (28/106, 26.4%). Twenty (20/28, 71.4%) described it as “good”, “great”, or “suitable”, and four thought it was “okay”. There were no negative comments in this open section. Many comments praised online PBL/tutorial for its efficiency and the time saved, and some even expressed a preference for online classes. Student C: “It is efficient. It is easier to hear everyone clearly than in a room.” Student D: “More chance to voice out my opinions and answer the questions in PBL.” On the other hand, a few preferred face-to-face sessions because of better interaction. Student E: “…clash of voice and internet connection hinder interaction between students and tutors.” Student E: “…People tend to mute in tutorial / PBL, hence less discussion….”

For the online lecture, twenty-nine comments were obtained from the 108 respondents (26.9%). Nineteen (19/29, 65.5%) left positive comments, and another two (6.9%) described lectures as “okay”. Among the positive reviews, the most frequently mentioned benefits were time efficiency and flexibility. Student F: “Watching the online lectures at a higher speed is efficient for my study….” Student G: “This is the best way to learn from lectures. I can pause the recorded video and type notes….” One respondent divided lectures into two types, interactive and unidirectional, and suggested face-to-face teaching for interactive lectures, especially when case scenarios involved a joint discussion.

Discussion

Overall

The current study provides a detailed assessment of students’ perceptions of various online synchronous interactive learning activities during the COVID-19 pandemic. It is encouraging that our students were satisfied with their teachers’ preparation for classes, especially in online bedside teaching and problem-based learning (Table 1). However, there is also room for improvement in many areas.

One item that scored particularly low across all four learning activities is the disappointment regarding the online learning experience (2.06 ± 0.99, Table 1). Although students commonly used online resources before the pandemic, these were primarily additional components supporting traditional face-to-face teaching rather than primary learning activities [19]. The faculty had never anticipated a sudden and complete switch to the online environment, which is understandably prone to shortcomings. An earlier survey of medical students in the UK in May 2020 found that they were generally neither enjoying nor engaged in online learning [15]. On the bright side, as in this UK study, our students acknowledged that online education is time-efficient and flexible.

When online and face-to-face classes were compared, distraction was the main issue raised by our respondents (Table 2). However, it was not associated with any modifiable factor in our study, and there was no significant correlation between their perception and any demographic factor, learning experience, or situation during class.

Online bedside

Online bedside teaching is a new learning activity for our students, developed and evolved primarily since the pandemic. It scored the lowest in both the DREEM-extracted questionnaire and the preference section (Tables 1 and 2). The lowest score was seen in the item “I was encouraged to participate in class”, with a mean score of 1.61 ± 0.81. A large-scale qualitative analysis of obstacles during online learning showed that social interaction correlates with enjoyment, effectiveness, and willingness to continue online learning [20]. As suggested by some students, a straightforward strategy is to divide the class into smaller groups so that students receive more attention from their teacher.

The DREEM-extracted questionnaire score was associated with audio and video quality (Table 4). This result indicates the importance of high-speed internet infrastructure and adequate training for educators to ensure quality. The current software and hardware can provide clear video and audio; however, when they are not correctly set up or used, the learning experience can be disrupted. This result also conveys an important message to educators: they should monitor quality during class and seek help if a poor internet signal is encountered, as this can affect students’ perception and potentially the learning outcome. No factor was identified that was associated with the low preference for online bedside teaching. However, the open comments revealed a few potential barriers, including difficulty building rapport with the patient, lack of human touch, and lack of tactile feedback for physical signs.

Earlier studies have shown that inadequate clinical examination carries the potential for subsequent medical error [21] and that physical examination has a substantial effect on patient management [22, 23]. As a substitute for physical examination on actual patients, simulation training has been applied to teaching physical examination [24, 25]. A meta-analysis of simulation training for breast and pelvic physical examinations concluded that it was associated with positive effects on skill outcomes compared with no intervention. Unfortunately, simulation training is associated with a lack of authenticity, possibly higher cost, and limited availability of specific models, as most simulation models are task-specific rather than comprehensive [26]. Moreover, bedside teaching is not only a skill-training process. Because the class is commonly taught in clinical areas with an actual patient under active management, it creates a comprehensive learning experience that draws on multiple learning theories, including social learning, behaviorism, constructivism, and the cognitive apprenticeship model [27]. Again, this may not be easily reproduced on an online platform, since students stay in a remote location facing a screen instead of being immersed in a clinical environment.

Online practical skill session

Online practical skill teaching is another new form of teaching developed during the current pandemic. Both surgical skills and clinical examination skills not involving patients were taught online during this period. Multiple modifications have been suggested to facilitate remote skill teaching while students stay safely at home during classes [10, 28,29,30,31,32,33]. Some of these modifications take a creative approach to resource limitations: some online courses require students to use household items and other readily available resources to mimic surgical tools [30, 32], while others distribute mini practical kits [10, 33]. One study compared its online cohort with a 2019 face-to-face cohort and reported superior results, with earlier proficiency in suturing and knot-tying and less coaching [31].

In our results, online practical skills sessions scored second-highest in the DREEM-extracted questionnaire, just below online PBL (Table 1), reflecting a relatively positive perception among the four online learning activities. As with bedside teaching, clear video and audio were associated with a higher score. One possible explanation is that students need to observe demonstrations, practice, and perform return demonstrations in front of the camera, so clear video and audio allow more detailed observation and better assessment and guidance.

However, students’ low preference for online practical skill sessions compared with face-to-face sessions should not be overlooked (Table 2). Despite the positive results reported for online practical skills sessions [31, 32], most other studies only compared pre- and post-intervention skill proficiency rather than comparing with face-to-face teaching [28, 30, 33]. In addition, online class materials are not standardized, so the external validity of these studies may be limited.

Online PBL and tutorial

Online PBL achieved the highest mean score in the DREEM-extracted questionnaire (2.72 ± 0.54, Table 4). Studies comparing online and face-to-face PBL have been performed for more than a decade with reliable results [34,35,36,37]. They indicated that PBL can feasibly be conducted online, enhancing students’ critical thinking and fulfilling the intended learning objectives. In agreement with these results, online PBL could enhance metacognitive skills, problem-solving ability, and teamwork. Consistently, students in our study were positive about online PBL, praising its efficiency, and some even became more involved.

In our results, clear video and audio were associated with a higher DREEM-extracted score, and students who were muted most of the time had a lower preference for online classes. As with online bedside teaching, educators should ensure signal quality during class. In addition, educators should encourage students to unmute themselves, given that PBL is highly interactive: students learn through discussion and become active members, contributing to solving a clinical scenario through joint effort [38].

Although online PBL scored highest on the DREEM-extracted questionnaire in our study, Foo et al. found that scores from online PBL groups were lower than those of the previous face-to-face cohort [39]. They suggested that this lower score, which persisted in subsequent tutorials, was potentially due to more than transitional issues. In our study, students were fairly neutral about whether online or face-to-face PBL should be used in future arrangements and expressed concerns about distraction (Table 2).

Online lecture

Both synchronous and asynchronous lectures were conducted in our faculty during this period. Despite a relatively low mean score in the DREEM-extracted questionnaire (2.51 ± 0.59, Table 1), online lectures ranked highest among the four modes of learning in the comparison with face-to-face learning (2.27 ± 0.81, Table 2). Most of our respondents gave positive feedback, and a number of them suggested continuing online lectures even after the pandemic. The most valued advantages were time efficiency and the playback function, which allows flexible revisiting of content. Some reports, including a letter to the Editor from Motie et al. [40], indicate a preference for purely synchronous online teaching over recorded videos because of concerns about a negative influence on engagement. One major concern is a potential decrease in real-time engagement during synchronous teaching, as reduced interaction and attention carry no consequence. This argument is supported by an earlier qualitative study by Dommett et al. [41], who found that students might substitute recorded videos for attendance at live lectures and ask fewer questions. They also expressed concern that students might miss essential concepts in pre-recorded videos because they tended to skip through them. However, there is also support for asynchronous videos. Hsin et al. [42] described the use of video mini-lectures to improve students’ satisfaction and increase average grades, with a significantly higher percentage of students and with much less instructor intervention. The ability of learners to control their learning pace with video also indicates a shift from the educator-centered approach of the traditional face-to-face lecture towards a learner-centered approach [43], which is potentially beneficial because it increases learners’ autonomy.

A more detailed analysis focusing on learners’ satisfaction and learning outcomes found that videos compliant with multimedia learning principles are highly satisfying [44]. Despite this conflicting evidence, asynchronous videos may be helpful, provided they are properly constructed and students’ attendance and engagement in class can be maintained. Currently, most lectures are delivered as pre-recorded videos followed by synchronous online question-and-answer sessions, which may maintain engagement because the two components do not overlap. To further improve satisfaction during online synchronous lectures, we also recommend, based on our regression analysis, that students switch on their video cameras.

Limitation

Despite the interesting findings of our study, the results should be interpreted with caution. The study was limited to a single institution, which limits its external validity to a certain extent. Because only part of the DREEM questionnaire was selected, the perception assessment may be incomplete and may not reflect the whole picture. In addition, behaviors were only retrospectively self-reported by our students, with no objective verification such as in-class observation or recording, potentially introducing reporting bias. Lastly, there could be selection bias, as students who felt satisfied after class might have been more willing to complete the questionnaire.

Conclusion

The present analysis has demonstrated that students’ perceptions of different online synchronous interactive learning activities vary. Continuous effort should be made to maximize patient exposure for our clinical year medical students, particularly in bedside teaching. Given the positive perception of online PBL/tutorials and the strong preference for online lectures, these classes are likely to remain online. Good audio and video quality should be ensured. Online practical skill classes should be implemented cautiously, as students do not prefer them to face-to-face classes despite relatively high scores in the DREEM-extracted questionnaire. Moreover, further investigations are required to minimize distraction during online classes.

Availability of data and materials

Please contact Dr. Billy HH Cheung via email at bhhcheung@gmail.com if further data and materials are required.

References

World Health Organization (WHO). WHO announces COVID-19 outbreak a pandemic. 2020 [cited 2021 Apr 27]. Available from: http://www.euro.who.int/en/health-topics/health-emergencies/coronaviruscovid-19/news/news/2020/3/who-announces-covid-19-outbreak-apandemic.

news.gov.hk. Work From Home Plan Advised. Hong Kong: Hong Kong SAR Government; 2020.

Wong TW, et al. Cluster of SARS among medical students exposed to single patient, Hong Kong. Emerg Infect Dis. 2004;10(2):269–76.

Fischer F, Hmelo-Silver CE, Goldman SR, Reimann P. International Handbook of the Learning Sciences. New York: Taylor & Francis Group; 2018.

Reinholz M, French LE. Medical education and care in dermatology during the SARS-CoV2 pandemia: challenges and chances. J Eur Acad Dermatol Venereol. 2020;34(5):e214–6.

Geha R, Dhaliwal G. Pilot virtual clerkship curriculum during the COVID-19 pandemic: Podcasts, peers and problem-solving. Med Educ. 2020;54(9):855–6.

Gami M, et al. Perspective of a Teaching Fellow: Innovation in Medical Education: The Changing Face of Clinical Placements During COVID-19. J Med Educ Curric Dev. 2022;9:23821205221084935.

Murdock HM, Penner JC, Le S, Nematollahi S. Virtual Morning Report during COVID-19: A novel model for case-based teaching conferences. Med Educ. 2020;54(9):851–2.

Tsang ACO, et al. From bedside to webside: A neurological clinical teaching experience. Med Educ. 2020;54(7):660.

Co M, Chu K-M. Distant surgical teaching during COVID-19 - A pilot study on final year medical students. Surg Pract. 2020;24(3):105–9.

Shih KC, et al. Ophthalmic clinical skills teaching in the time of COVID-19: A crisis and opportunity. Med Educ. 2020;54(7):663–4.

Lizzio A, Wilson K, Simons R. University Students' Perceptions of the Learning Environment and Academic Outcomes: Implications for theory and practice. Stud High Educ. 2002;27(1):27–52.

Roff S, et al. Development and validation of the Dundee Ready Education Environment Measure (DREEM). Med Teach. 1997;19(4):295–9.

Chan CYW, et al. Adoption and correlates of the Dundee Ready Educational Environment Measure (DREEM) in the evaluation of undergraduate learning environments - a systematic review. Med Teach. 2018;40(12):1240–7.

Dost S, et al. Perceptions of medical students towards online teaching during the COVID-19 pandemic: a national cross-sectional survey of 2721 UK medical students. BMJ Open. 2020;10(11):e042378.

McAleer S, Roff S. A practical guide to using the Dundee Ready Education Environment Measure (DREEM). AMEE Educ Guide. 2001;23:29–33.

Cheung E. China coronavirus: death toll almost doubles in one day as Hong Kong reports its first two cases, in South China Morning Post. Hong Kong: South China Morning Post Publishers Limited; 2020.

JotForm Inc. JotForm - About Us. 2021 [cited 2021 May 23]. Available from: https://www.jotform.com/about/.

Ellman MS, Schwartz ML. Online Learning Tools as Supplements for Basic and Clinical Science Education. J Med Educ Curric Dev. 2016;3:JMECD.S18933.

Muilenburg L, Berge Z. Student Barriers to Online Learning: A Factor Analytic Study. Dist Educ. 2005;26:29–48.

Schiff GD, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169(20):1881–7.

Reilly BM. Physical examination in the care of medical inpatients: an observational study. Lancet. 2003;362(9390):1100–5.

Paley L, et al. Utility of clinical examination in the diagnosis of emergency department patients admitted to the department of medicine of an academic hospital. Arch Intern Med. 2011;171(15):1394–6.

Karnath B, Thornton W, Frye AW. Teaching and testing physical examination skills without the use of patients. Acad Med. 2002;77(7):753.

Salud LH, et al. Clinical examination simulation: getting to real. Stud Health Technol Inform. 2012;173:424–9.

Flanagan JL, De Souza N. Simulation in Ophthalmic Training. Asia Pac J Ophthalmol (Phila). 2018;7(6):427–35.

Collins A. Cognitive apprenticeship. In: Sawyer RK, editor. The Cambridge handbook of the learning sciences. Cambridge: Cambridge University Press; 2006. p. 47–60.

Quaranto BR, et al. Development of an Interactive Remote Basic Surgical Skills Mini-Curriculum for Medical Students During the COVID-19 Pandemic. Surg Innov. 2021;28(2):220–5.

Bachmann C, et al. Digital teaching and learning of surgical skills (not only) during the pandemic: a report on a blended learning project. GMS J Med Educ. 2020;37(7):Doc68.

Gallardo FC, et al. Home Program for Acquisition and Maintenance of Microsurgical Skills During the Coronavirus Disease 2019 Outbreak. World Neurosurg. 2020;143:557–563.e1.

Tellez J, Abdelfattah K, Farr D. In-person versus virtual suturing and knot-tying curricula: Skills training during the COVID-19 era. Surgery. 2021;170(6):1665–9.

Schlégl Á, et al. Teaching Basic Surgical Skills Using Homemade Tools in Response to COVID-19. Acad Med. 2020;95(11):e7.

Wallace D, Sturrock A, Gishen F. 'You've got mail!': Clinical and practical skills teaching re-imagined during COVID-19. Future Healthc J. 2021;8(1):e50–3.

Valaitis RK, et al. Problem-based learning online: perceptions of health science students. Adv Health Sci Educ Theory Pract. 2005;10(3):231–52.

Nathoo AN, Goldhoff P, Quattrochi JJ. Evaluation of an Interactive Case-based Online Network (ICON) in a problem based learning environment. Adv Health Sci Educ Theory Pract. 2005;10(3):215–30.

Broudo M, Walsh C. MEDICOL: online learning in medicine and dentistry. Acad Med. 2002;77(9):926–7.

Jin J, Bridges SM. Educational technologies in problem-based learning in health sciences education: a systematic review. J Med Internet Res. 2014;16(12):e251.

Distlehorst LH, et al. Problem-based learning outcomes: the glass half-full. Acad Med. 2005;80(3):294–9.

Foo C-C, Cheung B, Chu K-M. A comparative study regarding distance learning and the conventional face-to-face approach conducted problem-based learning tutorial during the COVID-19 pandemic. BMC Med Educ. 2021;21(1):141.

Motie LZ, Kaini S, Bhandari A. Synchronous teaching should be exclusively synchronous: Medical students' perspective on increasing student engagement online. Med Teach. 2022;44(4):456.

Dommett EJ, Gardner B, van Tilburg W. Staff and student views of lecture capture: a qualitative study. Int J Educ Technol High Educ. 2019;16(1):23.

Hsin W-J, Cigas J. Short videos improve student learning in online education. J Comput Sci Coll. 2013;28(5):253–9.

Hartsell T, Yuen SC-Y. Video Streaming in Online Learning. AACE Review (formerly AACE Journal). 2006;14(1):31–43.

Choe RC, et al. Student Satisfaction and Learning Outcomes in Asynchronous Online Lecture Videos. CBE Life Sci Educ. 2019;18(4):ar55.

Acknowledgments

We would like to thank all the participants and helpers for improving our understanding during this difficult time.

Funding

None reported.

This manuscript has not been previously published and is not under consideration in the same or substantially similar form in any other journal.

Author information

Contributions

Dr. Cheung had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. Concept and design: Dr. Cheung and Dr. Foo. Acquisition, analysis, or interpretation of data: Dr. Cheung. Drafting of the manuscript: Dr. Cheung. Critical revision of the manuscript for important intellectual content: Dr. Foo, Prof Chu, Dr. Co. Statistical analysis: Dr. Cheung. Administrative, technical, or material support: Dr. Cheung and Miss Lee. Supervision: Dr. Foo. All authors read and approved the final manuscript.

Author’s information

1. Billy Ho Hung Cheung

https://orcid.org/0000-0002-8843-7893

Dr. Cheung is currently a resident specialist in the Division of Breast Surgery at Queen Mary Hospital and Tung Wah Hospital. He is also an Honorary Clinical Tutor of the University of Hong Kong LKS Faculty of Medicine. He has a strong interest in medical education and completed a Master of Education (Healthcare Professional) in 2021.

2. Dominic Chi Chun Foo

http://orcid.org/0000-0001-8849-6597

Dr. Foo is a Clinical Assistant Professor in the Division of Colorectal Surgery of The University of Hong Kong LKS Faculty of Medicine. Dr. Foo is heavily involved in medical education. He was involved in the medical curriculum development of both the preclinical and clinical years. He has been Deputy Director of the Centre for Education and Training in the Department of Surgery of the University since 2022.

3. Kent Man Chu

http://orcid.org/0000-0002-0332-4321

Prof Chu is a Clinical Professor of Surgery, Honorary Consultant Surgeon, Director of the Surgical Endoscopy Service, and Director of the Centre for Education and Training in the Department of Surgery. He is the Censor-in-Chief, Chairman of the Education & Examination Committee, and Chairman of the Examination Committee of The College of Surgeons of Hong Kong. He was the Director of the Department of Education, the Chairman of the Scientific Committee, and the Chairman of the General Surgery Board of The College of Surgeons of Hong Kong. He was the Chief Examiner for the Joint Specialty Fellowship Examination in General Surgery of the Royal College of Surgeons of Edinburgh and The College of Surgeons of Hong Kong until 2019. He is a member of the Board of Examiners, Research Committee, Mainland Centre Accreditation Subcommittee, and the Task Force for Subspecialisation in General Surgery of The College of Surgeons of Hong Kong. He is a council member of The College of Surgeons of Hong Kong and the Hong Kong Society of Minimal Access Surgery.

He is a Medical Assessor for The Medical Council of Hong Kong. He was Deputy Chairman of the Institutional Review Board of The University of Hong Kong and Hospital Authority Hong Kong West Cluster. He is an Expert Reviewer for the International Union Against Cancer (UICC), Geneva, Switzerland. He is a Sentinel Reader for McMaster Online Rating of Evidence (MORE), McMaster University, Canada. He is or has been a member of the Editorial Board of 9 international journals. He was a member of the International Advisory Board for the textbook Schwartz’s Principles of Surgery. He has published one doctoral thesis, four book chapters, over 175 journal articles, and over 150 conference proceedings. He has also been an invited speaker or faculty on more than 285 occasions. His current h-index is 58.

4. Michael Co

https://orcid.org/0000-0002-1705-051X

Dr. Co joined The University of Hong Kong as a Clinical Assistant Professor in the Division of Breast Surgery. His main research interests are premalignant breast disorders and breast cancer epidemiology. He was awarded the Korean Breast Cancer Foundation Scholarship. Dr. Co has also published numerous articles in the field of medical education addressing the challenges brought by the pandemic.

5. Lok Sze Lee

https://orcid.org/0000-0003-1051-4198

Miss Lee is a final-year medical student who aspires to become a surgeon. She has performed well in both classes and examinations and has shown a deep interest in surgical research.

Ethics declarations

Ethics approval and consent to participate

All methods were carried out in accordance with relevant guidelines and regulations.

All experimental protocols were approved by the Ethical Committee, the Faculty of Education, University of Hong Kong.

Informed consent was obtained from all participants.

Consent for publication

Not applicable.

Competing interests

None reported.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Questionnaire.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Cheung, B.H.H., Foo, D.C.C., Chu, K.M. et al. Perception from students regarding online synchronous interactive teaching in the clinical year during COVID-19 pandemic. BMC Med Educ 23, 5 (2023). https://doi.org/10.1186/s12909-022-03958-8

DOI: https://doi.org/10.1186/s12909-022-03958-8