Abstract

Background

It is well recognised that medical students need to acquire certain procedural skills during their medical training; however, agreement on the level of competency to be achieved in these skills, and on how it is acquired, remains under debate. Further, the maintenance of competency in procedural skills across medical curricula is often not considered. The purpose of this study was to identify core procedural skills competencies for Australian medical students and to establish the importance of the maintenance of such skills.

Methods

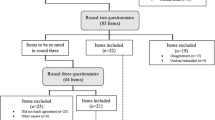

A three-round, online Delphi method was used to identify consensus on competencies of procedural skills for graduating medical students in Australia. In Round 1, an initial structured questionnaire was developed using content identified from the literature. Respondents were thirty-six experts representing medical education and multidisciplinary clinicians involved with medical students undertaking procedural skills, invited to rate their agreement on the inclusion of teaching 74 procedural skills and 11 suggested additional procedures. In Round 2, experts re-appraised the importance of 85 skills and rated the importance of maintenance of competency (i.e., Not at all important to Extremely important). In Round 3, experts rated the level of maintenance of competence (i.e., Observer, Novice, Competent, Proficient) in 46 procedures achieving consensus.

Results

Consensus, defined as > 80% agreement, was established with 46 procedural skills across ten categories: cardiovascular, diagnostic/measurement, gastrointestinal, injections/intravenous, ophthalmic/ENT, respiratory, surgical, trauma, women’s health and urogenital procedures. The procedural skills that established consensus with the highest level of agreement included cardiopulmonary resuscitation, airway management, asepsis and surgical scrub, gown and gloving. The importance for medical students to demonstrate maintenance of competency in all procedural skills was assessed on the 6-point Likert scale with a mean of 5.03.

Conclusions

The findings from the Delphi study provide critical information about procedural skills for the Clinical Practice domain of Australian medical curricula. The inclusion of experts from medical faculty and clinicians enabled opportunities to capture a range of experience independent of medical speciality. These findings demonstrate the importance of maintenance of competency of procedural skills and provide the groundwork for further investigations into monitoring medical students’ skills prior to graduation.


Background

Training in clinical procedures (i.e., procedural skills) is a fundamental component of medical curricula, but the selection of skills included in medical programs in Australia differs between medical schools. Procedural skills can range from simple tasks, such as taking vital signs, to complex tasks, such as the insertion of an endotracheal tube for intubation. In Australia, the accreditation requirements for medical programs are set by the Australian Medical Council (AMC). The AMC provides guidance for graduate outcome requirements in the Clinical Practice domain and stipulates that medical students are taught ‘a range of common procedural skills’ ([1], p. 9). The current prerequisite is for medical students to develop competency in a range of procedures by graduation to enable them to work safely in complex, dynamic and unpredictable clinical environments [1, 2].

Competency in medicine can be defined as having the knowledge, skills and experience to be able to fulfil the requirements of the role of the medical professional [3]. Universities are tasked with preparing medical students for clinical work by providing the training and experiences, including procedural skills training, required to practise as a graduate doctor [1]. Students are required to achieve competency in a range of skills by graduation to enable them to work safely in complex, dynamic and unpredictable clinical environments [3,4,5]. Acquiring competency in procedural skills, therefore, is an essential goal of medical education, with the expectation that a graduate should be proficient in basic procedural and clinical skills and able to assume responsibility for safe patient care at entry to the profession. However, it is for individual medical programs to decide which procedures are taught and to determine the competency level for each skill.

Although there is no national undergraduate medical curriculum in Australia, most medical programs teach procedural skills in the pre-clinical or early stages of the degree in preparation for clinical practice in the latter years of training [1]. The clinical skills curriculum in Australian medical schools is often aligned with the Australian Curriculum Framework for Junior Doctors [6]. This framework outlines the knowledge, skills, and behaviours, inclusive of procedural skills, required by interns (i.e., after graduation). Given that the suggested skills are for post- rather than pre-graduation, it is possible that they may not align with the level of ability or requirements of medical students to perform these procedures. Nearly a decade ago, the Medical Deans of Australia and New Zealand Inc (MDANZ), comprising the Deans of Australia’s 21 medical schools and the two New Zealand medical schools, reviewed and suggested 58 procedures plus diagnostic and therapeutic skills [7]. There are, however, no published data to support the rigor of the methods used to determine such findings.

Several reviews of clinical curricula have been undertaken internationally. In the UK, medical students are required to demonstrate competency in 23 practical skills and procedures by graduation [8]. In Canada, a review of the medical curriculum was developed with a competency focus designed to identify and describe the abilities required to effectively meet the health care needs of patients, and is being adopted in the undergraduate medical schools to aid graduates to transition into the clinical area more easily [9, 10]. In the US, using a competency-based model, 13 clinical tasks are specified as entrustable professional activities (EPAs), i.e., procedural skills that medical graduates should attain [11, 12]. An EPA is defined as comprising discrete tasks relevant to the clinical area, with competency acquired in those activities [13]. In Germany, a consensus review identified 289 practical skills [14] to inform the German National Competency-based Catalog of Learning Objectives for Medicine [15]. In Switzerland and the Netherlands, similar catalogues have been developed [16, 17]. Nonetheless, the procedural skills curricula developed in other countries may lack applicability in many aspects of an Australian context [6, 18,19,20,21].

An increasing number of studies have reported the difficulty in determining when a medical student is competent to undertake a procedural skill, and as such, many new graduates feel inadequately prepared for clinical practice [22, 23]. Recent research reported the lack of competency in procedural skills among graduates and has implicated shortcomings associated with traditional approaches to teaching clinical and procedural skills and the challenges in maintaining competence during medical school [24]. Another study described downstream consequences of such ill-preparedness in clinical and procedural skills for preventable medical errors, infection rates and patient morbidity, all known to be greater in newly graduated doctors [25].

As the paradigm shifts from the long-established, time-based medical education model, grounded in apprentice-type practice with patients in primary care, towards a competency-based model involving mastery learning and competency standards, the importance of acquisition of procedural skills along with the maintenance of competence is becoming increasingly relevant [5, 26]. Competency standards serve several developmental functions (i.e., stages of competency) that promote the minimum requirements for fitness to practise [27]. The standards also direct students towards undertaking responsibility for their own professional development and practice [28]. However, there is a decline in opportunities for attaining clinical experience, partly due to patients not spending as much time in hospital pre- or post-operatively, and partly due to the acuity of inpatients not always being conducive to allowing medical students to practise procedural skills [29]. Further, many procedures once performed by interns and practised by medical students are now performed by other health practitioners; for example, nurses routinely undertake peripheral intravenous cannulation on wards and suture wounds in the Emergency Department [24], and midwives suture vaginal lacerations and episiotomies in the birthing suite [30].

Sawyer et al. [26] proposed an evidence-based framework for teaching and learning of procedural skills in six steps of ‘Learn, See, Practice, Prove, Do and Maintain’. Sawyer et al. highlight the importance of maintenance, arguing that competency in procedural skills ‘degrades’ if practice is not undertaken and/or refreshed. In the undergraduate years of medical curricula, however, individual procedural skills are often only formally assessed in OSCE examinations and not re-assessed. As such, many medical students neither recognise that their level of skill has declined nor realise the importance of maintaining skills. The importance of maintaining competence has long been recognised in other areas of health (e.g., basic and specialised resuscitation skills) and in the post-graduate speciality colleges, to ensure skills are maintained and professional development requirements are met [31,32,33].

Currently, the level of achievement in the learning of procedural skills focuses on assessment of psychomotor skills and a multidimensional nature of competence, but the translation of the procedure to a range of attributes required for professional practice does not appear to be well considered [34]. The need to review a set of procedural skills competencies that will enable medical students to function more efficiently in the clinical setting is evident. Importantly, it is likely that once standardised strategies for ensuring student competence in procedural skills are determined, monitoring student outcomes prior to graduation may be required [35,36,37].

Method

Design

The present Australian-based study utilised a three-round modified Delphi technique [38, 39] to explore consensus from a panel of key medical education academics and healthcare clinicians. The purpose of this Delphi study was to identify core procedural skills competencies for Australian medical students and to establish the importance of the maintenance of such skills. The Delphi technique is a well-established hybrid research method that combines both quantitative and qualitative approaches. This method has been used to arrive at group consensus across a range of subject areas, including the field of competencies in clinical education when knowledge of the subject is not well defined or has not been recently addressed [40,41,42].

Traditionally, the first round of the Delphi technique asks the selected expert panel to consider questions to establish the content required and then to establish consensus [39] whereas the Delphi method used here is considered ‘modified’ as the method used a prepared set of items. Consensus in the Delphi method is developed through successive survey rounds as participants identify their level of agreement and reassess their previous level of agreement [41, 43] or a criterion for stopping is reached [44]. To achieve an acceptable range of consensus, a definition of consensus for this study was set (a priori) at 80% agreement and the number of rounds at three, although there is no uniformity about how to conduct a Delphi, as the number of rounds and the panel size varies [44,45,46,47].

Core competency was defined as the essential minimum set of a combination of attributes, such as applied knowledge, skills, and attitudes that enable an individual to perform a set of tasks to an appropriate standard, efficiently, effectively and competently in the profession at the specified level [48]. Core competencies offer a shared language for health professions for defining the expectations of procedural skills competency. In this study, participants were also asked to consider the priorities of the AMC Graduate Outcomes Statement [1] and the definition of competency in making their decisions. Maintenance of competency is defined as the ongoing ability to integrate and apply the knowledge and skills to practise the set of tasks safely in a designated role and setting. Medical professionals are responsible for ensuring they stay up to date on a continuing basis with lifelong learning to meet the requirements of the regulatory body and these standards protect the public as well as advance practice.

Delphi expert panel

A total of 75 medical academics, clinical educators and clinicians from Australia were invited to participate in this study by email in March 2020. A purposive sampling strategy was used to identify a representative sample of potential experts. Recruitment was by emailing an invitation to experts identified from websites of Australian medical schools, medical students’ placement affiliations (e.g., hospitals), Australian authors of published papers, and snowballing using recommendations by third parties. The email provided the inclusion criteria and information about the Delphi study. Literature has suggested panels with 10 to 50 individuals are appropriate [38]. Based on similar studies, we anticipated that between 25 and 40 experts would agree to take part [49]. To capture the collective opinion of experts in this area, the inclusion criteria for the study were: a medical or health qualification, and involvement with medical students in clinical and/or educational settings in Australia where procedural skills are undertaken [39]. These settings were selected to access individuals who would have the pre-requisite knowledge and experience with medical students and procedural skills. Equal weight was given to the opinions of each participant. Ethical approval (reference # PG03205) for this study was obtained through the Human Research Ethics Committee of the university. All participants provided informed consent to take part at the beginning of each survey round.

Data collection

Invited experts accessed survey rounds via a link hosted on the web-based platform Qualtrics (www.Qualtrics.com). The research questions and information about the Delphi process were provided on the first screen of each survey. Demographic characteristics were collected, and the survey was open for three weeks. Non-responders received a follow-up email reminder at two weeks.

Pilot of Delphi survey

Each round of the Delphi survey was piloted with a selected group of eight faculty educators and healthcare clinicians who met the Delphi panel inclusion criteria. Given that they were a convenience sample known to the researcher, their responses were not included in the data [39]. Piloting the survey instrument was done to ensure the relevance of the competencies selected for medical students, to identify incongruent and vague statements and suggest corrections and to ensure the usability and acceptability to participants. The pilot panel were not included as participants in the Delphi study.

Round 1

A comprehensive list of procedural skills competencies was developed from a review of practice standards of existing curricula, guidelines, and frameworks from national and international published studies e.g., MDANZ [7], the GMC UK Practical skills and procedures [8], and a literature search on procedural skills competencies. Key words and phrases included competency, medical students, procedural skills, curricula and Boolean combinations. Databases searched included PubMed, MEDLINE, Web of Science and Scopus. In Round 1, using the description of core competency, experts were asked to consider the question: ‘Should medical students be able to perform these skills?’ Specifically, they were asked to rate a total of 74 procedural skills across ten categories using a three-point scale (yes, no or unsure) according to whether they considered medical students should achieve a level of competence for each procedure by the end of their medical degree. The categories were: cardiovascular, diagnostic/measurement, gastrointestinal, injections/intravenous, ophthalmic/ENT, respiratory, surgical, trauma, women’s health, and urogenital sections. The option ‘unsure’ was included following pilot testing that indicated some participants were unaware of how essential some of the procedures were and preferred to leave the question unanswered. To capture skills that might be considered essential but were not included, the experts were asked to use a free-text box to propose any missing procedures.

Round 2

In Round 2, experts were provided with findings from Round 1 and invited to clarify and re-rate the relevance of items to determine the level of consensus by answering the question: ‘Should medical students be able to provide safe treatment to patients through performing these procedures by graduation?’ They used a six-point Likert scale (Not at all Important through to Extremely Important) to indicate their level of agreement for inclusion of each procedure as a requirement for medical students to achieve by graduation. In May 2020, the MDANZ identified a set of core competencies for final year students which aligned with the AMC’s graduate outcomes [50]. Irrespective of consensus, the eighteen procedural skills from the MDANZ guidance statement were included and presented to the experts in Round 2. Round 2 aimed to establish stability for procedural skills that did not achieve > 90% consensus in Round 1; these were re-submitted in Round 2. Skills from Round 1 that achieved > 90% were considered to have reached consensus and it was assumed that agreement was unlikely to alter; therefore, they were not re-presented in Round 2. Additionally, the importance for students to demonstrate maintenance of competency was assessed on the 6-point Likert scale. The type of maintenance program, and the timing that would be appropriate for such a program of procedural skills, were also investigated.
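The routing rule described in this paragraph can be sketched in a few lines. This is only one reading of the text, not the authors' implementation: the function name, the parameter names and the three example skills with their percentages are hypothetical and are not study data.

```python
def goes_to_round_2(agreement_pct, in_mdanz_statement=False, cutoff=90.0):
    """Return True if a Round 1 skill is presented again in Round 2.

    Assumed reading of the text: skills from the MDANZ guidance statement
    are always presented; otherwise a skill is carried forward as settled
    only when its Round 1 agreement is strictly above the cutoff.
    """
    if in_mdanz_statement:
        return True
    return not (agreement_pct > cutoff)


# Hypothetical Round 1 results: (percent agreement, listed by MDANZ?)
round_1 = {
    "Skill A": (100.0, False),
    "Skill B": (85.0, False),
    "Skill C": (95.0, True),
}

round_2_items = [
    name
    for name, (pct, in_mdanz) in round_1.items()
    if goes_to_round_2(pct, in_mdanz)
]
print(round_2_items)
```

In this sketch a skill sitting exactly at 90% is treated as not settled, because the text specifies "> 90%".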

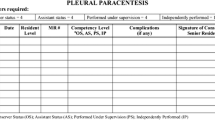

Round 3

In Round 3, experts were invited to re-evaluate the procedures that achieved consensus in the previous rounds for a level of maintenance. As part of a two-part question, experts were invited to establish ‘If maintenance would be required for the procedure?’, and ‘If yes, at what level should maintenance be at?’ The scale for the level of maintenance was rated in four levels based on the Dreyfus model of skill acquisition: Observer – understands and observes the procedure in the clinical environment, Novice – performs the procedure under direct supervision in a simulated environment, Competent – performs with supervision nearby in the clinical environment and Proficient – performs proficiently under limited supervision in the clinical environment [51]. A Not Applicable category was available. A free text box was available for any comments regarding maintenance of competency following each section.

Data analysis

Descriptive statistics were used to describe experts’ demographic characteristics and group responses to each item in all rounds, with frequency statistics calculated for each item during the rounds. The median and interquartile range were calculated to determine the indicators for selection in the next round and to present quantitative feedback. Percentage of agreement, range of ratings (interquartile range), mean and median were analysed using IBM SPSS version 26 (IBM, 2016). The appropriate level of consensus is inadequately defined within the literature, with measurements ranging from 51 to 100% [52]. Consistent with previous work, an a priori decision was made in the current study that consensus was established if 80% or more of experts agreed on an item rating [53, 54].
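As an illustration of the statistics named above, the following minimal sketch computes percentage agreement, the consensus check and the median with interquartile range for one item. It uses the Python standard library rather than SPSS, so quartile values may differ slightly from SPSS output. The ratings are invented, and treating a rating of 5 or 6 as agreement is an assumption made for the example, not a rule stated in the study.

```python
from statistics import median, quantiles

CONSENSUS_THRESHOLD = 0.80  # a priori threshold stated in the text


def percent_agreement(ratings, agree_values):
    """Proportion of ratings that fall within the set of 'agree' values."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return sum(r in agree_values for r in ratings) / len(ratings)


def reached_consensus(ratings, agree_values, threshold=CONSENSUS_THRESHOLD):
    """True when the proportion agreeing is at or above the threshold."""
    return percent_agreement(ratings, agree_values) >= threshold


def median_and_iqr(ratings):
    """Median and interquartile range (inclusive quartile method)."""
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return median(ratings), q3 - q1


# Hypothetical ratings from ten experts on a 6-point scale (not study data)
example = [6, 5, 5, 6, 4, 5, 6, 5, 3, 5]
agree = {5, 6}  # assumption: the top two scale points count as agreement

print(percent_agreement(example, agree))
print(reached_consensus(example, agree))
print(median_and_iqr(example))
```

The threshold comparison uses "at or above" to match the "80% or more" wording of the a priori definition.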

Results

Delphi expert panel

The Delphi rounds were conducted between March 2020 and July 2020. Table 1 displays the characteristics of panel experts at each round. Of the 75 experts contacted, 40 agreed to participate, and 36 completed Round 1. This equated to a response rate of 48%. Those who participated in Round 1 were sent the Round 2 survey, and 33 completed surveys were received, representing a response rate of 92%. Those who participated in Rounds 1 and 2 were sent the Round 3 survey, with a 75% response rate. The consensus opinions represent an expert group of Australian faculty educators from nine medical schools and healthcare clinicians, 83% of whom had more than five years’ experience in clinical education.
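The reported response rates for the first two rounds follow directly from the counts given above, as this short worked check shows. The helper name is hypothetical. Round 3 is omitted because the number of completed Round 3 surveys is not stated in the text.

```python
def response_rate(completed, invited):
    """Completed surveys as a percentage of those invited."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100 * completed / invited


round_1_rate = response_rate(36, 75)  # 36 completed of 75 contacted
round_2_rate = response_rate(33, 36)  # 33 completed of 36 Round 1 participants

print(round(round_1_rate), round(round_2_rate))  # prints: 48 92
```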

Round 1

Round 1 comprised a list of 74 procedural skills. Table 2 shows the consensus of importance of procedural skill competency level rated by the experts. Eleven procedural skills were rated 100% agreement and were not re-submitted in Round 2. Twenty-eight skills achieved 90 – 100% agreement and nine skills scored 80 – 90% and were re-presented in Round 2 for stability of scoring. The specialist categories of ophthalmoscopy, women’s health and urogenital scored the lowest agreement (see Table 2 for the procedural skills not re-submitted in Round 2). Following analysis of experts’ suggestions and reconciliation with the MDANZ guidance statement [50], 21 skills were added, and one (prescription of intravenous fluids) was removed as it did not meet the definition of a procedural skill. Additionally, six skills were combined into three: male and female catheterisation were combined into one skill, performing and interpreting an ECG were combined into one skill, and maintaining an airway and basic airway management were combined into one skill.

Round 2

In Round 2, 54 procedures were considered by the experts who identified 25 procedures as being very or extremely important for medical students’ competency (see Table 2). Fourteen procedures did not establish consensus in importance, 12 were identified as having slight or low importance and one (cystoscopy) was ranked as not at all important. One procedure in the newly published MDANZ guidance [50], arterial blood gas, was included in Rounds 2 and 3 although not considered important in Round 1 but was deemed to require consideration. No further procedures were recommended after the first round. The importance of medical students demonstrating maintenance of competency of all procedures was rated on the 6-point Likert scale with a mean of 5.03. Some form of maintenance program was identified by 55% of the experts, with the majority creating a pre-intern program of between 3–5 procedures. The question about the intervals of a maintenance programme of procedural skills to be assessed/reviewed was variably reported as between every 6 months to annually and prior to graduation as shown in Table 3.

Round 3

An individual summary of the Round 2 median scores for each procedural competency plus the median group results were provided separately to each participant prior to the Round 3 survey. Round 3 explored the level of importance of maintenance for the final set of core procedural skills and the level of maintenance (i.e., observer, novice, competent, proficient). Table 4 shows that 41 procedural skills were considered to require maintenance at a proficient or competent level, 14 with 100% agreement. Four procedures achieved between 70 and 77% agreement but did not reach the threshold for consensus in maintenance of competency. The levels of maintenance showed variability in the selections.

Discussion

The purpose of this Delphi study was to identify core procedural competencies for Australian medical students and to establish the level of importance of maintenance of such skills. To our knowledge, this is the first study to explore and achieve consensus on the requirement to maintain competency in identified procedural skills and to what level, in the Australian context. We deployed a three-round Delphi technique resulting in a final list of 46 procedural skills representing the consensus opinion of an expert group of Australian faculty educators and healthcare clinicians. Importantly, experts agreed on the importance of competence, acknowledged that skills decay and that continued practice is required to maintain competency.

Our findings provide critical information about the essential procedural skills integral to the Clinical Practice domain of Australian medical curricula. Importantly, they reveal agreement to ensure graduates are able to: select and perform safely a range of common procedural skills as required by the AMC [1]. Reconciling our findings with other guidelines/catalogues reveals general agreement. All procedures on the MDANZ guidance statement of clinical practice [50] achieved consensus in our study (i.e., they are within the listed 46 skills). We also established consensus for other skills e.g., vaginal birth, otoscopy, breast examination, and insertion of a Guedel airway. Such differences may reflect changes in the roles of medical students and interns since the Australian Junior Doctor Framework was published in 2009 [6]. There was agreement that procedures such as intravenous drug administration, diagnosis of pregnancy, corneal and foreign body removal and skin lesion excision should remain at an intern role level [6].

Our findings concur with all recommended practical skills and procedures from the UK’s GMC revised 2019 list [8] and the majority of the clinical-practical skills in the German National competency-based learning objective catalog medicine [15] and the Dutch nested EPAs [55]. Surprisingly, the GMC graduate outcomes list of practical skills does not feature basic life support or cardiorespiratory procedures, although most UK medical schools do provide some form of compulsory life support training [56]. The number of practical skills and procedures that a UK graduate must know and be able to do has reduced from 32 in 2014 to 23 in 2019 [8].

In Australia, upon successful completion of an accredited medical program, graduates complete a mandatory internship year. Interns may demonstrate procedural lapses and/or practice areas of risk which must be remediated prior to full registration [57]. There are, however, no Australian national requirements to demonstrate procedural competency for registration. By comparison, in other countries regulatory bodies provide a clear catalogue of practical skills and procedures accompanied by minimum levels of proficiency for safe practice [58]. Significantly, our findings highlight that experts view maintenance of competency as essential for professional growth and confidence, and for the safety of patients [22]. We hope the findings from the present study become the catalyst for further research exploring factors that benefit students’ understanding of maintenance of procedural skills.

The present study has some limitations. Thirty-six skills did not reach consensus from our expert panel. For example, lumbar puncture, proctoscopy and sigmoidoscopy, central line insertion and endotracheal placement fell short of reaching 80% consensus, which does concur with previous studies [24, 59]. There are several reasons why this might be the case. We were specifically interested in preparation of students for learning on a continuum to achieve and maintain competency in the Clinical Practice domain of Australian medical curricula. Barr and Graffeo’s [24] study was conducted in the US, and Monrouxe et al.’s [59] study was conducted in the UK; therefore, it may be due to differences across countries. Given that many hospitals have staff to perform dedicated services (e.g., intravenous cannulation and PICC line insertion), it may be that some specialist procedures were not perceived as a competency requirement for medical students. We drew on a national network of academics and clinicians and gathered views from a range of disciplines; however, it is possible that the size and composition of the expert panel may not have been representative of all medical schools and states. Further, our panel comprised faculty and practising health care clinicians, which may have contributed to unknown expectations of medical students’ role in specialty areas such as ophthalmology, urology and women’s health and a disproportionate involvement in conveying practical skills in cardiovascular, respiratory and trauma areas.

Medical educators engaged with interest in our consensus study, with a high retention of participants across the three rounds, and importantly there exists agreement about the core features of procedural skills training (i.e., skills being taught, level of competency, importance of maintenance). It is not known whether the lack of preparedness reported during internship is due to a decline in practical skills teaching or in the maintenance of competency in the curricula of medical students [60]. Our findings highlighted areas where there is less certainty in the requirements for medical students’ competency related to procedural skills, which may require further exploration. Furthermore, our findings support Sawyer’s evidence-based framework suggesting the importance of maintaining skill levels [26]. An area of future research is to use semi-structured interviews to explore how students are currently maintaining their competence. We are currently undertaking this work.

Conclusions

The present study used a modified Delphi method to establish consensus on 46 procedural skills to underpin the core competencies required for Australian medical students by graduation. Our findings support the importance of teaching and maintenance of competency in these procedures within the pre-clinical years of medical curricula and beyond, aligning with the change to an outcomes model of competency-based medical education. Our findings highlight the importance of maintenance to alleviate decay in procedural skills reported in the literature. We suggest that valuing the importance of maintaining skills competency improves patient care and demonstrates attributes of twenty-first century sustainable medical professionals who work as safe, functional practitioners.

Availability of data and materials

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

The authors wish to thank Dr Suzanne Gough for her invaluable assistance, and Dr David Waynforth, Dr Gary Hamlin and Professor Peter Jones for early discussions. We also thank the Delphi participants for their time, effort, and expertise, without which this project would not have been possible.

Funding

None.

Author information

Contributions

PG developed, planned and carried out the Delphi survey, completed the analyses and drafted the manuscript. EE contributed to the development and planning stages of the Delphi survey and participated in the analyses and the writing of the manuscript. EE and MT contributed extensively to the drafting and critical revision of the manuscript. All authors read and approved the final manuscript.

Authors’ information

PG is a PhD candidate, School of Education, Faculty of Humanities and Social Sciences, The University of Queensland, Brisbane, Australia. EE is a Senior Lecturer, School of Education, Faculty of Humanities and Social Sciences, The University of Queensland, Brisbane, Australia. MT is a Senior Lecturer, School of Nursing, Midwifery and Social Work, Faculty of Health and Behavioural Sciences, The University of Queensland, Brisbane, Australia.

Ethics declarations

Ethics approval and consent to participate

This project was approved by Bond University’s Human Research Ethics Committee (protocol no. PG03205). All methods were carried out in accordance with relevant guidelines and regulations. All participants provided informed consent prior to participation and consent to publication.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Green, P., Edwards, E.J. & Tower, M. Core procedural skills competencies and the maintenance of procedural skills for medical students: a Delphi study. BMC Med Educ 22, 259 (2022). https://doi.org/10.1186/s12909-022-03323-9