Abstract

Background

Imperial College London launched a new, spiral undergraduate medical curriculum in September 2019. Clinical & Scientific Integrative cases (CSI) is an innovative, flagship module, which uses pioneering methodology to provide early-years learning that [1] is patient-centred, [2] integrates clinical and scientific curriculum content, [3] develops advanced team-work skills and [4] provides engaging, student-driven learning. These aims are designed to produce medical graduates equipped to excel in a modern healthcare environment.

Methods

CSI has adopted a novel educational approach which utilises contemporary digital resources to deliver a collaborative case-based learning (CBL) component, paired with a team-based learning (TBL) component that incorporates both learning and programmatic assessment. This paper serves to explore how first-year students experienced CSI in relation to its key aims, drawing upon quantitative and qualitative data from feedback surveys from CSI’s inaugural year. It provides a description and analysis of the module’s design, delivery, successes and challenges.

Results

Our findings indicate that CSI has been extremely well-received and that the majority of students agree that it met its aims. Survey outputs indicate success in integrating multiple elements of the curriculum, developing an early holistic approach towards patients, expediting the development of important team-working skills, and delivering authentic and challenging clinical problems, which our students found highly relevant. Challenges have included supporting students to adapt to a student-driven, deep learning approach.

Conclusions

First-year students appear to have developed a patient-centred outlook, the ability to integrate knowledge from across the curriculum, an appreciation for other team members and the self-efficacy to collaboratively tackle challenging, authentic clinical problems. CSI’s innovative design is attractive and pertinent to the needs of modern medical students and, ultimately, future doctors.

Background

Imperial College London’s School of Medicine launched a new, spiral undergraduate curriculum in 2019 with an emphasis on patient-orientated integration of content, digital innovation, and student-driven, active learning. Medical schools are increasingly recognising the importance of integrating curriculum content both vertically (across the years of the degree) and horizontally (between contemporaneous modules), to represent the integrated nature of a doctor’s role and to develop students’ abilities to apply knowledge in basic, clinical and social sciences to build a holistic understanding of patients [1, 2]. Additionally, the body of available medical knowledge continues to expand, with resultant saturation of curricula [3]. Developing the ability to find, evaluate and utilise relevant information [4, 5] is therefore increasingly important. In response to these needs, a pioneering flagship module called Clinical & Scientific Integrative cases (CSI) was created. It creatively combines collaborative case-based learning (CBL) with programmatic assessment that uses a team-based learning (TBL) structure, an approach which has not been documented previously. This novel methodology provides potential for great benefit in the advancement of team-working skills, problem-solving capabilities and clinical application of knowledge in early-years students. CSI aims to encompass four key principles: [1] to be patient-focused, [2] to integrate clinical and scientific learning, [3] to develop advanced team-work and collaboration skills and [4] to provide student-driven learning that is motivating and engaging. In delivering such learning from the beginning of an undergraduate curriculum, we hope to cultivate graduates with the capacity to integrate multi-level aspects of health (and thus deliver patient-focused care), with good skills in working with colleagues and with the confidence to solve the clinical problems they will face as newly qualified doctors.

CBL “prepares students for clinical practice. It links theory to practice, through the application of knowledge to authentic cases, using inquiry-based learning” [6]. TBL combines team-work with investigation, use of resources and application of knowledge and is a valuable tool for improving conceptual understanding [7]. A recent increase in TBL usage reflects a shift towards developing skills that will equip graduates to thrive, rather than memorization of facts [8]. CBL and TBL are therefore both useful tools for contemporary curricula. In combination, we believe they have potential to profoundly impact the learning of early-years students. Particular features of our combined approach include a focus on collaborative work (shown to increase engagement and enhance student-driven learning [9]), professionally-produced patient videos that make learning feel authentic [10] and enhance student immersion, and a persisting central theme of a patient, serving as a focus to integrate learning. Perhaps most novel is our use of TBL in its full structure as a means of programmatic assessment. Whilst individual readiness assurance test (iRAT) and team readiness assurance test (tRAT) components (composed of single-best answer questions (SBAQs)) have been used for assessment previously [11,12,13,14], team application components have not. Collaborative testing may expedite the development of reliable team-working, critical thinking and problem-solving skills [15], support the development of clinical initiative and expertise, and provide impetus to engage in formative learning events.

The main aim of this study is to present the design and delivery of a pioneering and innovative module underpinned by four key principles (stated above) relevant to the needs of a modern medical curriculum. We will present students’ perspectives (collected via systematic surveys) about the different facets of the module, and analyse whether, from their experiences, the module can achieve these core principles. We will discuss these perspectives in conjunction with aspects of module design, programmatic assessment results and our own experience in order to critically review our novel approach. A secondary aim is to specifically explore the role of TBL-based programmatic assessment in student engagement and the development of key skills, namely the ability to integrate knowledge to create patient-centred management plans and the ability to work effectively in teams.

Methods

Module Design

CSI was developed by a curriculum reform group, based on significant collective experience in higher education and review of the available literature on relevant teaching practices. Year one content was designed to reflect common presentations likely to be encountered during students’ first clinical placements and on this basis, fictional but authentic patient cases were created. A core module team was established, comprising module leads (one clinical, one scientific), teaching fellows (one clinical, one scientific) and an instructional designer. Case content was discussed with experts and other module leads, which was important for optimising an integrated, spiral curriculum design.

The core structure of CSI is shown in Fig. 1. Initially, ten CSI ‘cases’ were planned for year one. However, the 2020 COVID-19 pandemic necessitated remote delivery methods during term 3. This paper therefore focuses solely on the first 6 cases (terms 1-2), which were delivered in the format initially intended.

Case-based learning component

Asynchronous guided online material was released one week prior to each face-to-face session. The ‘patient’ was introduced to students using an illustrated character profile and a bespoke videographic representation of their consultation (Fig. 1). The profile required digital interaction with a virtual collection of objects, which provided important information about the patient (often referenced during sessions), helping to build a holistic understanding. The bespoke videos utilised original scripts, influenced by relevant clinical/communication specialists and created by a specialist digital team using professional actors. These introduced the clinical problem, whilst maintaining a degree of uncertainty, essential for exploration within the upcoming session. Additional pre-session material included a case introduction video from module leads and preparatory reading (bespoke content or reputable online resources) to ensure sufficient basic understanding.

Each 2-hour face-to-face CBL session was facilitated by a scientific and clinical tutor pair. Approximately 50 students per session worked in pre-defined groups of 5-6. Groups remained constant throughout the year to facilitate the development of team dynamics. CBL sessions explored three broad themes relevant to the case, each comprising 2-4 tasks. For example, ‘Mrs Wilkins’ (Fig. 1) is an elderly lady who falls and breaks her hip. Themes/tasks explored [1] risk factors for falls and fracture (including the mechanostat theory and osteoporosis), [2] radiological identification, management and outcomes of hip fractures and [3] recognising and managing delirium. The diverse content of each case built to produce an integrated and holistic understanding of the subject, featuring a balance of clinical and scientific content and regular representation of other aspects of the curriculum.

Tasks required groups to collaboratively produce answers and usually to submit these to a digital ‘whiteboard’ using an online audience response tool. Task format varied; groups might be required to submit short answers to a word cloud, complete a matching exercise, cast votes, or rank responses in order of preference. Tasks also varied in complexity, requiring and developing a range of cognitive skills and spanning Bloom’s taxonomy levels [16]. Each task was consolidated with an inclusive, enriching classroom discussion facilitated by tutors, drawing upon submitted responses and engaging the whole class simultaneously. These scaffolded the introduction of new and challenging concepts, distributed learning and ensured objectives were met.

Following a session, further resources were released for independent study, including a de-briefing video from module leads, a task recap and further reading to build upon session content.

Team-Based Learning component

Each face-to-face session was paired with a TBL-based assessment (TBL-A), as a means of programmatic assessment (summarised in Table 1). Each TBL-A contained an iRAT, a tRAT and a team application exercise (tAPP). Each component contributed to the case mark, each case mark contributed to the end-of-module mark, and the end-of-module mark was weighted to 16.5% of each student’s end-of-year mark.
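The nested weighting described above can be sketched in a few lines of Python. This is an illustration only: the equal component weights within a case, the equal weighting of cases, the example scores and the function names are all assumptions made for the sketch (the actual scheme is the one summarised in Table 1). Only the 16.5% end-of-year weighting is taken from the text.

```python
# Illustrative sketch of the nested weighting; not the module's actual mark scheme.
# ASSUMPTION: the component weights below are placeholders, not the values in Table 1.
ASSUMED_COMPONENT_WEIGHTS = {"iRAT": 1 / 3, "tRAT": 1 / 3, "tAPP": 1 / 3}
END_OF_YEAR_WEIGHT = 0.165  # from the text: CSI is weighted to 16.5% of the end-of-year mark


def case_mark(scores, weights=ASSUMED_COMPONENT_WEIGHTS):
    """Weighted mean of the component percentages for one case."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def module_mark(case_marks):
    """ASSUMPTION: every case contributes equally to the end-of-module mark."""
    return sum(case_marks) / len(case_marks)


def end_of_year_contribution(module_percentage):
    """Percentage points that the module adds to the end-of-year mark."""
    return module_percentage * END_OF_YEAR_WEIGHT


# HYPOTHETICAL component scores (percentages) for two cases.
cases = [
    {"iRAT": 60.0, "tRAT": 75.0, "tAPP": 90.0},
    {"iRAT": 70.0, "tRAT": 85.0, "tAPP": 85.0},
]
marks = [case_mark(c) for c in cases]
csi_mark = module_mark(marks)
contribution = end_of_year_contribution(csi_mark)
print(f"module mark: {csi_mark:.1f}%, end-of-year contribution: {contribution:.2f} points")
```

Passing a different `weights` dictionary to `case_mark` is enough to model an unequal split between individual and team components.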

TBL-As were carried out under exam conditions, with students synchronously present in an exam hall, accessing a dedicated online platform from university-issued electronic devices. Students worked in their pre-defined teams, with a group leader submitting consensus responses. tAPPs were related to the index case content and their formats varied, including: data interpretation to guide selection of appropriate antibiotics, production of an infographic for healthcare workers on patient-centred care in sickle cell anaemia, and making/explaining clinical decisions around short case studies. A number of clinical specialists contributed to tAPP design to ensure relevance and accuracy. Whereas iRATs/tRATs were closed-book, tAPPs were either open-book (allowing access to notes and web browsers), or provided specific online resources for reference. iRAT/tRAT scores were calculated automatically by the software and tAPPs were double-marked by faculty according to robust mark schemes. Following each TBL-A, students were provided with their iRAT/tRAT marks and either group-specific or cohort-level feedback on the tAPP. Students were encouraged to submit challenges for SBAQs that remained unclear after the tRAT process, via email. These were addressed in correspondence distributed to all students.

Evaluation Methodology

This is an exploratory and descriptive case study [17]. Its objectives are two-fold: to understand how learners experienced the CSI module in relation to its key principles, and to analyse whether programmatic TBL-based assessment contributed to an integrated and lived experience of those principles. This is a single case study of the CSI module, delivered at Imperial College London School of Medicine to first-year students starting their MBBS programme in 2019. It relies on multiple sources of evidence, which will be brought together for an integrated discussion and understanding of the innovative nature of CSI.

Learners’ perspectives

We delivered a number of optional, online surveys to students. These were developed over several weeks by members of the CSI team in collaboration with a designated evaluation and research team. Surveys A and B were developed to capture information on student experience alongside more logistical information to aid adjustment and improvement of the module in its early stages. Survey C was designed with a greater focus on understanding student development, and is part of a longitudinal research project that will look at the development of self-efficacy traits across students’ first three academic years (the duration of CSI). Survey C therefore incorporates modified elements of validated scales around teamwork and empathy [18, 19] and where an appropriate validated scale was not available, elements of a pilot study around integration of knowledge [20]. The use of Survey A for research was deemed exempt from requiring ethical approval by the Faculty’s Medical Education Ethics Committee (MEEC). The use of Surveys B and C and exam results was granted ethical approval (MEEC1920-181).

Survey A was piloted with a ‘warm-up’ case, which students undertook to become acquainted with the module structure prior to the first programmatic case. Surveys B and C, which were offered as one-offs, were not piloted. Many survey items sought practical feedback (for example on material, timings and constructive alignment) or feedback on other aspects not relevant to this paper (including remote delivery during the pandemic). For this paper we have drawn upon survey items relevant to CSI’s four key aims. The full surveys are included in Additional file 1.

Survey A was offered to students after each of the cases 1-6, as a feedback link at the end of the TBL-A (on the final page of the digital session content). Students who chose to participate accessed the link and undertook the survey before leaving the examination room. A series of statements (15 closed items, of which 4 are used in this analysis) invited responses on a six-point Likert scale of: ‘strongly agree’, ‘agree’, ‘somewhat agree’, ‘somewhat disagree’, ‘disagree’ and ‘strongly disagree’.
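Summary figures such as the proportion who ‘agreed/strongly agreed’ or who ‘at least somewhat agreed’ are collapsed from this six-point scale. A minimal Python sketch of that collapse follows. The response list is hypothetical example data, not survey data, and the function name is illustrative.

```python
from collections import Counter

# The six response options listed in the text, ordered from most to least agreement.
LIKERT = [
    "strongly agree", "agree", "somewhat agree",
    "somewhat disagree", "disagree", "strongly disagree",
]


def percent_selecting(responses, options):
    """Percentage of answered items whose response falls within `options`."""
    valid = [r for r in responses if r in LIKERT]  # unanswered items are skipped
    if not valid:
        raise ValueError("no valid responses")
    counts = Counter(valid)
    return 100.0 * sum(counts[o] for o in options) / len(valid)


# HYPOTHETICAL responses to a single statement (None marks an unanswered item).
responses = (
    ["strongly agree"] * 4 + ["agree"] * 8 + ["somewhat agree"] * 5
    + ["somewhat disagree"] * 2 + ["disagree"] * 1 + [None]
)

agree_or_strongly = percent_selecting(responses, LIKERT[:2])
at_least_somewhat = percent_selecting(responses, LIKERT[:3])
disagree_or_strongly = percent_selecting(responses, LIKERT[4:])
print(agree_or_strongly, at_least_somewhat, disagree_or_strongly)
```

Excluding unanswered items from the denominator is one possible convention; it matters whenever not every respondent answers every question.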

Survey B was offered to students once, after the final year one case (delivered remotely during term 3), as a feedback link on the final page of TBL-A content. It asked about overall experiences of the cases in both original and remote formats, using a series of statements (16 closed items, of which 7 are used in this analysis) and the same Likert scale.

Survey C was offered to students once via email, after completion of case 6 (the final case delivered in its intended format). Students were invited to score statements (16 closed items, of which 7 are used in this analysis) from 0 to 100, where 0 meant “I cannot do this at all” and 100 meant “I am highly certain I can do this”, indicating their perceived capability.

In addition to the above scales, all three surveys featured a small number of additional, optional open questions allowing free-text responses.

Results

There were 361 year one students. The number of respondents per survey varied from 57 to 275 per case for survey A (median respondents 119). Survey B yielded 74 respondents and survey C yielded 97 respondents.
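The respondent counts above can be expressed as a proportion of the year group. The short Python sketch below does this, assuming the full cohort of 361 is the denominator (an assumption; a different denominator or rounding convention can shift a figure by about one percentage point).

```python
COHORT_SIZE = 361  # year one students, as reported in the text

# Respondent counts as reported in the text.
respondents = {
    "Survey A (fewest per case)": 57,
    "Survey A (most per case)": 275,
    "Survey A (median per case)": 119,
    "Survey B": 74,
    "Survey C": 97,
}


def response_rate(n_respondents, cohort=COHORT_SIZE):
    """Respondents as a percentage of the cohort."""
    return 100.0 * n_respondents / cohort


rates = {name: response_rate(n) for name, n in respondents.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
```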

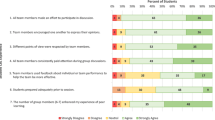

Surveys A and B: Experiences of CSI

Responses from survey A (repeated after each case) and survey B (distributed after the final case) are combined in Table 2 in order to categorise feedback by the key principles of CSI. The table shows the percentage of respondents selecting each Likert response. For survey A, data are presented as median percentages and the median number of respondents per case. The number of survey A respondents for each case varied from 57 to 275. Data for survey B are shown as absolute percentages of respondents per Likert option, as the survey was only collected once. There were 74 responses, although not all respondents answered every question. For all statements in surveys A and B pertaining positively to the core principles of CSI, at least 50% of respondents selected agree/strongly agree, whereas the percentage selecting disagree/strongly disagree never exceeded 9%.

Survey C: Development of self-efficacy

Responses are presented in the format of median student score per item. 97 students completed the survey, although only 89 students answered all questions. After six CSI cases, students’ responses indicated a high level of self-efficacy in relation to the key principles of CSI, as detailed in Table 3. A selection of relevant free text comments from this survey is included in Additional file 2.

Programmatic assessment performance

TBL-A results are summarised in Table 4. Of 361 first-year students, 6 were excluded from analysis due to interruption of studies. Mean scores per case were calculated from students in attendance. Although the iRAT and tRAT have different scoring systems, an increment from one to the other is typical; we observed increases ranging from 10.6 to 16.1 percentage points across the six cases, with a median increment of 14.1 percentage points per case (IQR 2.1).

Of six TBL-As, 88.7% of students attended six, 11.0% attended five and 0.3% attended four or fewer.
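As a rough consistency check, these percentages can be converted back into approximate headcounts. The Python sketch below assumes the denominator is the 355 students remaining after the 6 exclusions; that denominator is an assumption, and the resulting counts are back-calculated estimates rather than reported data.

```python
ENROLLED = 361  # first-year students, from the text
EXCLUDED = 6    # excluded due to interruption of studies, from the text
# ASSUMPTION: attendance percentages use the analysed students as the denominator.
ANALYSED = ENROLLED - EXCLUDED

# Percentage of students by number of TBL-As attended, from the text.
attendance_pct = {"six": 88.7, "five": 11.0, "four or fewer": 0.3}

# Back-calculated, approximate headcounts (rounded to whole students).
approx_headcount = {
    label: round(pct / 100 * ANALYSED) for label, pct in attendance_pct.items()
}
total = sum(approx_headcount.values())
print(approx_headcount, total)
```

If the rounded headcounts sum to the assumed denominator, the reported percentages are at least internally consistent with it.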

Discussion

Patient-focused learning

Patients want medical care that explores their concerns, seeks an integrated understanding of their world and provides management options that are mutually agreeable and enhance a continuing relationship with their doctor [21]. Such care is emphasised within the General Medical Council’s outcomes for graduates [22], indicating that medical education should develop the adoption of a flexible and empathetic approach towards patients. For this to become habitual, medical education must develop a drive to manage health in partnership with patients from the very beginning of training. Patient-centred medical education can therefore be described as being “about the patients, with the patients, and for the patients” [23].

CSI sessions were designed to maintain focus on the index ‘case’. For example, in a task on falls risk factors in Mrs Wilkins’ session, students were asked to highlight the risk factors most relevant to her. Other tasks were more transparent in building a patient-centred approach; for example, asking students to consider the ideas, concerns and expectations of a patient with sickle cell anaemia. The utility of such tasks in engendering a holistic approach has been evident not only from the thoughtful responses that we observed but also from feedback. A median of 89% of students (across six cases) at least somewhat agreed that the cases encouraged them to relate to the patient at hand (survey A, Table 2) and after six cases, the students gave a median confidence score of 74/100 for being able to focus on a patient in a holistic manner (survey C, Table 3). We received numerous comments across surveys about how CSI has taught students to appreciate different experiences of disease and to adopt a personalised approach. Collectively, this suggests that our first-year students have developed an understanding of the centrality of the patient to providing good medical care. We only received positive feedback on the use of patient cases to enhance learning, which indicates that these were both accessible and helpful.

CBL is integral to our patient-centred format. The video resources were specifically highlighted by students as helping to show how patients can be affected by disease. When delivered to qualified doctors, CBL has improved patient outcomes [24]; after taking part in CBL around diabetes management, physicians saw improved glycaemic parameters in their diabetic patients [25]. One of our early CSI case videos featured a doctor asking the patient to score their pain severity. We later obtained feedback from faculty that students had asked similar questions to patients during clinical placements (reporting learning this in CSI). Our students put patient-centred approaches into practice not only during formative tasks, but within TBL-As. One tAPP required students to read fictional case studies of patients attending a falls clinic, and using a number of resources, propose personalised management plans. Groups produced a huge variety of holistic suggestions, including home alterations, family support, medication changes and exploring the patients’ wishes. We have been consistently impressed by the insightful application of knowledge to patient-orientated tasks from these relatively inexperienced students.

Integration of clinical and scientific content

Integration in medical education is thought to be highly beneficial. It supports students to draw connections between scientific, social, clinical, professional and personal parameters and in this way can be considered crucial in preparing students for the complex nature of their future roles [1]. It is also a key aspect of deep learning, the approach broadly understood to be most valuable in medical graduates [26] and encouraged by CSI. Integration may also result in enjoyable learning and increased student satisfaction [27].

We were interested in whether students felt [a] that CSI was successful in integrating clinical and scientific concepts, and [b] that this was beneficial to their learning. Almost all students (98.4%) at least ‘somewhat agreed’ that CSI “encouraged [them] to integrate knowledge and skills from different areas”, with 79% agreeing/strongly agreeing (survey B, Table 2). After six cases, students gave median confidence scores of 76/100 for being able to apply understanding of basic science to clinical problems in order to contribute to better patient care, and 85/100 for being able to explain why integrated teaching is important to their development (survey C, Table 3). This indicates that students feel integration will improve their clinical proficiency and that, after six cases, they have self-efficacy in their ability to draw upon it. Throughout the surveys, students provided comments on the perceived benefits of integration; they expressed that CSI connected their scientific learning with clinical application and helped them to understand the importance of other aspects of their curriculum. Although we did not receive any negative feedback about the integration of clinical and scientific content, not all students found this aspect easy, with one commenting that “it is hard to combine the two, however the more we do it the more we understand how to do so”. Another student said they felt more able to draw such connections independently as the year progressed (Additional file 2), indicating that CSI helped them to develop their own deep, integrative learning approach.

Motivating and engaging learning

Active learning methodologies require individuals to participate and take responsibility for their learning. They allow learners to engage with material in a way that encourages discussion and critical thinking, and to build on pre-existing knowledge [28]. In this way, such methodologies lend themselves to integration of content and better understanding. Active learning can also develop communication skills by allowing students to practise reasoning and debating. A student-centred approach is thought to improve engagement [29] and it follows that active learning has the potential to develop the self-motivation that is crucial for a career that requires lifelong learning [28].

We observed a high level of engagement with CSI and believe that the reasons for this are two-fold. The first is its patient-orientated, integrative, active-learning format, described by students as interesting, engaging, relevant and fun (Additional file 2). This student-centred focus is tangible; 92% of respondents at least somewhat agreed that the cases required them to take responsibility for their own learning (survey B, Table 2). Although we did not enquire how students felt about this, we also observed that 82% of respondents per case at least somewhat agreed that they had been motivated to explore more about the topic (survey A, Table 2), from which one might interpret some enthusiasm. 84% of students at least somewhat agreed that the cases helped them to build knowledge they will remember (survey B, Table 2). We interpret these as rewarding figures given the volume of material that early students must cover and that a student-driven approach may be new to many first-year students [30].

The second contributor may lie in CSI’s assessment, an extrinsic driver to engage with formative sessions. It has previously been found that iRAT scores correlate with final examination scores when they contribute to grades, but are lower and do not correlate when they do not [12, 14], suggesting that a summative test improves motivation to learn. We have experienced this directly, with attendance rates of ≥90% at face-to-face sessions and ≥96% at TBL-As. Because CSI is a new module, our first-year students have not been passed down informal resources from more senior students, a key element of a medical school’s hidden curriculum [31]. It will be interesting to observe what role this will play in future years. We intend to make small yearly changes to content in order to retain uncertainty, and we hope that the assessment element will continue to be a protective factor in maintaining engagement.

Team-work and collaboration

The ability to work effectively in a team is a universally-accepted skill required of a medical graduate. Doctors must know how to build teams and maintain effective teamwork, identify the impact of their behaviour on others, work effectively with colleagues in ways that best serve patients, apply adaptability and a problem-solving approach to shared decision-making and recognise and respect the roles of others [22].

CSI has teamwork at its core and this clearly benefits learning. 81% of respondents agreed/strongly agreed that the cases resulted in in-depth discussions with colleagues (survey B, Table 2) and 75% of students agreed/strongly agreed that discussing an answer aided their learning, with 95% at least ‘somewhat agreeing’ (survey A, Table 2). A particularly novel aspect of CSI is the contribution of a tAPP to grades. There is little research into collaborative testing for high-stakes exams but there are clear benefits to be obtained, which our survey results support. Firstly, collaborative testing may improve academic performance and knowledge retention [32]. Secondly, it may improve communication and team-work [33]. Feedback from the earliest cases suggests some challenges with team dynamics (“we need to work on time management, and try and share out the tasks equally” and “all my efforts in trying to contribute have been dismissed”). However, in later surveys, students provided numerous comments on the benefits of CSI in developing teamwork skills. We posit that this learning curve may not have been as remarkable had there not been the extrinsic motivation of an assessment, a view supported by comments such as “time pressure means we’re all willing to communicate effectively, so has greatly increase[d] this skill” (Additional file 2). Thirdly, collaborative testing may result in learning about oneself, others and interpersonal dynamics [34]. Many of the students’ comments around CSI improving understanding of others’ perspectives are relevant not only to patients but to team-members. As such, CSI may help to develop empathy for colleagues, an important characteristic in interprofessional collaboration [35]. Several students commented on how CSI has improved relationships within the group, increased respect for others’ opinions and allowed students to realise when others need support. Finally, TBL may help to develop an understanding of the value of teamwork; many students commented on learning to appreciate/utilise the individual strengths of colleagues. Although early comments indicated some students did not feel able to contribute, later surveys featured more positive feedback. Taking an overview, 84% of respondents per case agreed/strongly agreed that they felt able to make their voice heard (survey A, Table 2) and after six cases, students gave a median score of 80/100 for how able they felt to work with their team to achieve the required goals (survey C, Table 3), indicating a good degree of perceived ability to work effectively with others.

Teamwork as a support structure for challenging tasks

The scaffolding that comes from a group structure and provision of resources allowed us to deliver complex tasks and tAPPs (featuring challenges authentic to those that a junior doctor might face) to first-year students. These provided experiential learning around team decision-making and problem-solving. A median of 77% of students per case at least ‘somewhat agreed’ that the tAPPs were stimulating and interesting (survey A, Table 2), a pleasing outcome given that assessments are not always enjoyed by students [36]. This value reached as high as 95% for individual tAPPs, supported by comments such as: “the tAPP was really interesting; the combination of the medical history with the use of the British National Formulary made it feel very similar to how I perceive clinical practice to be”, and “I like that we get to test our knowledge in ways that we will actually use in the future” (Additional file 2). CSI aims to capitalise upon the benefits in learning that can come from assessment [37]. These benefits relate not only to conceptual knowledge but also to the skills and experiences that the TBL-As involve, and they appear to have been felt by students.

Challenges and future steps

As a new module, CSI has experienced challenges. One has been in supporting students to adjust to a self-driven, deep learning approach. First-year students arrive with differing previous educational experiences, some of which will have favoured surface or strategic learning [26]; indeed, medical students may typically not shift to a deep learning approach until clinical years [38]. CSI favours a deep learning approach, and places emphasis on experiential learning through tasks that are semi-authentic mimics of future experiences in clinical teams, supported by consolidative tutor-facilitated discussions. However, it quickly became clear that more support was needed, as early sessions yielded requests for slides/recordings, with feedback such as “no slides were provided and didn’t know what I was supposed to make notes on”. Students also demonstrated pre-occupation with knowing what content might feature in the TBL-As and concern at the discrepancy of discussion points between different classrooms (“more guidelines would be helpful as different groups were taught different things”) (Additional file 2), in keeping with the strategic approach that is common in medical students [26]. Student groups were permitted to submit ‘question challenges’ if they felt iRAT questions were unfair. A panel of academics from both the CSI team and the Year 1 assessments team met to discuss each challenge, approved or rejected it and provided justification for the decision. This information was subsequently released to the students.

In response to feedback, we made key resource slides available after sessions and increased the detail in the post-session summaries, with positive feedback from students, who felt more able to immerse themselves and less worried about taking notes. We also provided tutors with clear learning points for each discussion. These measures aimed to provide a better framework for revision whilst maintaining a focus on student-driven learning. Although we have no earlier data for comparison, after all cases, 79% of students at least somewhat agreed that the face-to-face sessions provided clarity around key learning points (survey B, Table 2). This issue could perhaps be tackled further with improved communications to students around the concepts of the module, for example ‘selling’ the advantages of experiential group-learning. It may also benefit students to understand that the focus of the TBL-As is on the associated learning, rather than to fail students. In our inaugural year, no student scored <50% in CSI, which may reflect the support that a team-structure can provide. We also note the early feedback indicating difficulties experienced around team-working and collaborative assessment. We plan to discuss these issues pre-emptively in future introductory sessions, to normalise them as part of a learning curve, and encourage students to talk about any problems constructively within their team. Other possibilities include providing resources and escalation pathways for common team-work issues.

Obtaining a broad representation of our student cohort through survey responses was a challenge and is a possible limitation of this study. We observed a wide range of response rates to Survey A, from 16% (case 3) to 76% (case 2) of the year group. This may be for a variety of reasons: the verbal encouragement of the facilitator to provide feedback, whether the session had run late, students following others’ examples, or logistical aspects; for example, case 3 TBL-A was the students’ final activity prior to their Christmas break and students were therefore impatient to leave for the holidays. Surveys B and C captured 20% and 26% of the year group, respectively. Survey B was offered as an online link at the end of a remotely-delivered online session, making it easy for students to leave anonymously prior to completing it. Survey C was offered via email and it can be notoriously difficult to obtain responses this way without verbal encouragement. Obtaining high yield from online surveys is challenging across student and medical cohorts [39] for a number of reasons, and response rates may be lower for online surveys than other formats [40]. It is not uncommon for web-surveys conducted among students to yield response rates of <20% and it has been suggested that even response rates of 10% or less may be trusted provided the quality is checked [41] (although we acknowledge that low response rates may increase bias). A major contributor to low survey yield may have been ‘survey fatigue’; our students were faced with numerous, regular surveys from many modules due to the new curriculum changes. Although it is possible to improve uptake for online surveys with reminders, this was not considered appropriate given the volume of surveys being administered. Therefore, we had only brief windows to obtain responses. We took careful steps to maximise responses [42]: limiting the number of items, making surveys clear and user-friendly, offering optional free-text spaces, and ensuring through regular bi-directional communication that students could see their feedback being addressed. Whilst we acknowledge that the survey response rates could be higher, we feel the responses do overall reflect the experiences of students that we witnessed in leading the sessions. We also obtained verbal feedback during meetings with student representatives and feel that the survey responses reflect the feelings of the cohort that were communicated to us.

The composition of regular SBAQs based on new content was challenging. With content now established, it will be possible to write SBAQs further in advance, allowing for standard setting. Our six iRATs produced a wide range of mean scores (50.6-82.4%), demonstrating that it is feasible to write challenging SBAQs but also that providing sufficient challenge can be difficult. A difficult iRAT allows scope for substantial learning from the tRAT process (provided there is sufficient combined knowledge within the group to yield meaningful discussion). Conversely, if questions are too easy and understanding is universal, then tRAT discussion adds little. Interestingly, the iRAT to tRAT increment was reasonably stable across our TBL-As (10.6-16.1 percentage points, Table 4). Even our poorest-scoring iRAT incremented by 15.1 percentage points to tRAT, indicating that it was not so difficult that it limited the benefits to be had from discussion. However, this must be balanced against the fact that regular iRAT scores as low as this would result in a significant proportion of students failing the module. Therefore, we propose that aiming for a mean iRAT score of 60-80% allows both learning benefit and fair assessment.
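
The arithmetic behind this proposal can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the module's actual analysis: the function name `rat_summary` and its parameters are hypothetical, the 60-80% band is the target proposed above, and the tRAT mean of 65.7% is derived by assuming that the poorest-scoring iRAT is the one with the 50.6% mean and adding its reported 15.1-point increment. The second pair of scores is invented purely for illustration.

```python
def rat_summary(irat_mean, trat_mean, target=(60.0, 80.0)):
    """Summarise one TBL-A from its mean iRAT and tRAT scores (both in %).

    Hypothetical helper: returns the iRAT-to-tRAT increment in percentage
    points and whether the iRAT mean falls inside the proposed target band.
    """
    low, high = target
    increment = round(trat_mean - irat_mean, 1)  # percentage points
    return {
        "increment_points": increment,
        "irat_in_target": low <= irat_mean <= high,
    }


# Poorest-scoring iRAT: 50.6% mean; tRAT of 65.7% derived as 50.6 + 15.1
# (assumes the 15.1-point increment belongs to the 50.6% iRAT).
poorest = rat_summary(50.6, 65.7)

# Invented values for illustration only (not data from the study).
illustrative = rat_summary(70.0, 82.0)

print(poorest)
print(illustrative)
```

Under these assumptions the poorest-scoring iRAT shows a large increment but sits below the proposed band, which is the trade-off between learning benefit and fair assessment described above.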

Conclusions

In conclusion, CSI has successfully incorporated a number of highly important and relevant educational objectives (both skills-based and knowledge-based) into one module by means of a novel structure that draws upon the most valuable elements of CBL and TBL. This structure appears to have helped first-year medical students to develop a patient-centred, holistic approach to care, to integrate knowledge and skills from across the curriculum, to develop an inquisitive and self-driven approach to learning and to build important skills in communicating and working with colleagues, as well as a sense of appreciation for what others in a team have to offer. These are all skills that may not previously have been addressed until much later in a medical curriculum. The design of CSI has also supported the delivery of authentic clinical problems, which our early-years students have embraced, successfully tackled and found both enjoyable and relevant. By featuring a patient as a central focus and placing students in teams, CSI begins to develop the advanced skills required of medical graduates from the very first day of medical school.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CSI: Clinical & Scientific Integrative Cases
- CBL: Case-based learning
- TBL: Team-based learning
- TBL-A: TBL-based assessment
- iRAT: Individual readiness assurance test
- tRAT: Team readiness assurance test
- tAPP: Team application exercise
- SBAQ: Single best answer question

References

Eisenstein A, Vaisman L, Johnston-Cox H, Gallan A, Shaffer K, Vaughan D, et al. Integration of basic science and clinical medicine: the innovative approach of the cadaver biopsy project at the Boston University School of Medicine. Acad Med. 2014;89(1):50–3.

Irby DM, Cooke M, O’Brien BC. Calls for reform of medical education by the Carnegie Foundation for the Advancement of Teaching: 1910 and 2010. Acad Med. 2010;85(2):220–7.

Teodorczuk A, Fraser J, Rogers GD. Open book exams: A potential solution to the “full curriculum”? Med Teach. 2018;40(5):529–30.

DiCarlo SE. Too much content, not enough thinking, and too little fun! Adv Physiol Educ. 2009;33(4):257–64.

Wartman SA, Combs CD. Reimagining Medical Education in the Age of AI. AMA J Ethics. 2019;21(2):E146-52.

Thistlethwaite JE, Davies D, Ekeocha S, Kidd JM, MacDougall C, Matthews P, et al. The effectiveness of case-based learning in health professional education. A BEME systematic review: BEME Guide No. 23. Med Teach. 2012;34(6):e421-44.

Smith MK, Wood WB, Adams WK, Wieman C, Knight JK, Guild N, et al. Why peer discussion improves student performance on in-class concept questions. Science. 2009;323(5910):122–4.

Reimschisel T, Herring AL, Huang J, Minor TJ. A systematic review of the published literature on team-based learning in health professions education. Med Teach. 2017;39(12):1227–37.

Krupat E, Richards JB, Sullivan AM, Fleenor TJ, Schwartzstein RM. Assessing the Effectiveness of Case-Based Collaborative Learning via Randomized Controlled Trial. Acad Med. 2016;91(5):723–9.

Saltan F, Ozden M, Kiraz E. Design and Development of an Online Video Enhanced Case-Based Learning Environment for Teacher Education. J Educ Pract. 2016;7(11):14–23.

Wang XR, Hillier T, Oswald A, Lai H. Patterns of performance in students with frequent low stakes team based learning assessments: Do students change behavior? Med Teach. 2020;42(1):111–3.

Behling KC, Gentile MM, Lopez OJ. The Effect of Graded Assessment on Medical Student Performance in TBL Exercises. Med Sci Educ. 2017;27(3):451–55.

Bauler T, Sheakley M, Ho A. Use of the Team-Based Learning Readiness Assessment Test as a Low-Stakes Weekly Summative Assessment to Promote Spaced and Retrieval-Based Learning. Med Sci Educ. 2019;30:605–8.

Carrasco GA, Behling KC, Lopez OJ. Evaluation of the role of incentive structure on student participation and performance in active learning strategies: A comparison of case-based and team-based learning. Med Teach. 2018;40(4):379–86.

Eastwood JL, Kleinberg KA, Rodenbaugh DW. Collaborative Testing in Medical Education: Student Perceptions and Long-Term Knowledge Retention. Med Sci Educ. 2020;30:737–47.

Bloom BS, editor. Taxonomy of Educational Objectives, Book 1: Cognitive Domain. New York: David McKay Co.; 1956.

Yin RK. Case study research and applications: design and methods. 6th ed. Los Angeles: SAGE; 2018.

Di Giunta L, Eisenberg N, Kupfer A, Steca P, Tramontano C, Caprara GV. Assessing Perceived Empathic and Social Self-Efficacy Across Countries. Eur J Psychol Assess. 2010;26(2):77–86.

Lurie SJ, Schultz SH, Lamanna G. Assessing teamwork: a reliable five-question survey. Fam Med. 2011;43(10):731–4.

Sakles JC, Maldonado RJ, Kumari VG. Integration of Basic Sciences and Clinical Sciences in a Clerkship: A Pilot Study. Med Sci Educ. 2006;16(1):4–9.

Little P, Everitt H, Williamson I, Warner G, Moore M, Gould C, et al. Preferences of patients for patient centred approach to consultation in primary care: observational study. Br Med J. 2001;322(7284):468–72.

General Medical Council. Outcomes for Graduates (Tomorrow’s Doctors). 2018.

Hearn J, Dewji M, Stocker C, Simons G. Patient-centered medical education: A proposed definition. Med Teach. 2019;41(8):934–8.

McLean SF. Case-Based Learning and its Application in Medical and Health-Care Fields: A Review of Worldwide Literature. J Med Educ Curric Dev. 2016;3:39–49.

O’Connor PJ, Sperl-Hillen JM, Johnson PE, Rush WA, Asche SE, Dutta P, et al. Simulated physician learning intervention to improve safety and quality of diabetes care: a randomized trial. Diabetes Care. 2009;32(4):585–90.

Chonkar SP, Ha TC, Chu SSH, Ng AX, Lim MLS, Ee TX, et al. The predominant learning approaches of medical students. BMC Med Educ. 2018;18(1):17.

Quintero GA, Vergel J, Arredondo M, Ariza MC, Gómez P, Pinzon-Barrios AM. Integrated Medical Curriculum: Advantages and Disadvantages. J Med Educ Curric Dev. 2016;3:133–37.

Torralba KD, Doo L. Active Learning Strategies to Improve Progression from Knowledge to Action. Rheum Dis Clin North Am. 2020;46(1):1–19.

Severiens S, Meeuwisse M, Born M. Student experience and academic success: comparing a student-centred and a lecture-based course programme. Higher Education. 2015;70:1–17.

Richardson JT. Mature students in higher education: Academic performance and intellectual ability. Higher Education. 1994;28:373–86.

Ozolins I, Hall H, Peterson R. The student voice: recognising the hidden and informal curriculum in medicine. Med Teach. 2008;30(6):606–11.

Cortright RN, Collins HL, Rodenbaugh DW, DiCarlo SE. Student retention of course content is improved by collaborative-group testing. Adv Physiol Educ. 2003;27(1-4):102–8.

LoGiudice AB, Pachai AA, Kim JA. Testing together: When do students learn more through collaborative tests? Scholarsh Teach Learn Psychol. 2015;1(4):377–89.

Levine RE, Borges NJ, Roman BJB, Carchedi LR, Townsend MH, Cluver JS, et al. High-Stakes Collaborative Testing: Why Not? Teach Learn Med. 2018;30(2):133–40.

Calabrese LH, Bianco JA, Mann D, Massello D, Hojat M. Correlates and changes in empathy and attitudes toward interprofessional collaboration in osteopathic medical students. J Am Osteopath Assoc. 2013;113(12):898–907.

Kivunja C. Why Students Don’t Like Assessment and How to Change Their Perceptions in 21st Century Pedagogies. Creative Education. 2015;6(20):2117–26.

Schuwirth LW, Van der Vleuten CP. Programmatic assessment: From assessment of learning to assessment for learning. Med Teach. 2011;33(6):478–85.

Jhala M, Mathur J. The association between deep learning approach and case based learning. BMC Med Educ. 2019;19(1):106.

Cunningham CT, Quan H, Hemmelgarn B, Noseworthy T, Beck CA, Dixon E, et al. Exploring physician specialist response rates to web-based surveys. BMC Med Res Methodol. 2015;15:32.

Nair CS, Adams P, Mertova P. Student engagement: The key to improving survey response rates. Quality in Higher Education. 2008;14:225–32.

Van Mol C. Improving web survey efficiency: the impact of an extra reminder and reminder content on web survey response. International Journal of Social Research Methodology. 2017;20(4):317–27.

Park K, Park N, Heo W, Gustafson K. What Prompts College Students to Participate in Online Surveys? Int Educ Stud. 2019;12(1):69–79.

Acknowledgements

The team would like to thank the phase 1 curriculum review group at Imperial College School of Medicine, particularly Professor Mary Morrell (Director of Phase 1 MBBS), for their contributions towards the development of CSI.

Authors’ information

Chris John and Omar Usmani are the scientific and clinical CSI co-leads (respectively). Deepak Barnabas and Mariel James are/were the scientific and clinical teaching fellows (respectively). Agata Sadza is a Learning Design Lead at Imperial College London. Ana Madeira Teixeira Baptista is a Strategic Lead for Medical Education Transformation and Susan Smith directs the Medical Education Research Unit at Imperial College London.

Funding

Imperial College London’s Learning and Teaching Strategy funding supported the development and delivery of the CSI module.

Author information

Contributions

OU and CJ devised the concept of CSI. MJ, DB, AS, OU and CJ were involved in the design, delivery and evaluation of the module. AB and SS contributed to the evaluation of the module. MJ performed statistical analysis, and MJ/CJ interpreted the student feedback data. MJ wrote the main manuscript text and prepared the figures/tables. All authors contributed to amendments of manuscript drafts and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study protocol was carried out in accordance with relevant guidelines and regulations laid out by the Declaration of Helsinki. Surveys were optional and informed consent for participation was obtained from participants. Students were informed of the possible uses of their anonymous data ahead of filling the surveys, and were informed that submission of the surveys would indicate demonstration of consent for the use of their data for research/publication. The use of Survey A for research publication was deemed exempt from requiring ethical approval by the Faculty of Medicine’s Medical Education Ethics Committee (MEEC). The uses of Surveys B and C and exam results were granted ethical approval from MEEC (MEEC1920-181).

Consent for publication

Surveys were optional and informed consent for publication was obtained from participants. Students were informed of the possible uses of their anonymous data ahead of filling the surveys, and were informed that submission of the surveys would indicate demonstration of consent for the use of their data for research/publication.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

James, M., Baptista, A.M.T., Barnabas, D. et al. Collaborative case-based learning with programmatic team-based assessment: a novel methodology for developing advanced skills in early-years medical students. BMC Med Educ 22, 81 (2022). https://doi.org/10.1186/s12909-022-03111-5
