Abstract

Background

Previous studies have assessed note quality and the use of electronic medical records (EMRs) as part of medical training. However, a generalized and user-friendly note quality assessment tool is required for quick clinical assessment. We held a medical record writing competition and developed a checklist for assessing the note quality of participants’ medical records. Using the checklist, this study aims to compare note quality among residents of different specialties and offer pedagogical implications.

Methods

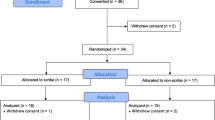

The authors created an inpatient checklist that examined fundamental EMR requirements through six note types and twenty items. A total of 149 records created by residents from 32 departments/stations were randomly selected. Seven senior physicians rated the EMRs using the checklist. Medical records were grouped as general medicine, surgery, paediatrics, obstetrics and gynaecology, and other departments. The overall and group performances were analysed using analysis of variance (ANOVA).

Results

Overall performance was rated as fair to good. Regarding the six note types, discharge notes (0.81) gained the highest scores, followed by admission notes (0.79), problem list (0.73), overall performance (0.73), progress notes (0.71), and weekly summaries (0.66). Among the five groups, other departments (80.20) had the highest total score, followed by obstetrics and gynaecology (78.02), paediatrics (77.47), general medicine (75.58), and surgery (73.92).

Conclusions

This study suggested that duplication in medical notes and the documentation abilities of residents affect the quality of medical records in different departments. Further research is required to apply the insights obtained in this study to improve the quality of notes and, thereby, the effectiveness of resident training.

Introduction

Effective documentation is a key component of medical training. At teaching hospitals, residents are responsible for most of the medical documentation, including inpatient records. They must be able to produce compendious documentation as a core competency for entering residency [1]. An electronic medical record (EMR) includes admission notes, progress notes, weekly summaries, and discharge notes. EMRs form a critical part of e-health systems and are highly instrumental in improving the quality and efficiency of healthcare services; they also serve as legal records [2]. Residents need to learn ways to conduct documentation using the EMR system efficiently while minimising errors.

We consider that the weaknesses in residents’ medical record writing include the following. (1) The problem list affects patient care and health insurance declaration. It is the most important part of medical record writing and the part most in need of improvement. Residents usually write about only one disease, which results in treatment problems. (2) The diagnostic plan should follow the rules of confirm, complete, and course follow-up, but most residents write it incompletely. (3) The therapeutic plan should include the content of decision-making. (4) The educational plan should include the preventive measures plan, with the current situation informing the treatment plan. (5) The content of the progress note is always “copied and pasted” from the previous course of the disease without modification and is thus unable to present the authentic course of the disease.

We reviewed previous studies that assessed note quality and the use of EMRs as part of medical training [3,4,5,6,7,8,9,10]. However, we found that these studies had limitations for assessing our residents’ medical record writing. For example, Baker et al [3] developed the IDEA (interpretive summary, differential diagnosis, explanation of reasoning, and alternatives) assessment tool to assess comprehensive new patient admission notes. Liang et al [8] created a checklist for neurology residents’ documentation. These tools are used for a specific section in medical records or a neurology department, which may not be practical for other specialties. Thammasitboon et al [4] created the Assessment of Reasoning Tool, which allows teachers to assess clinical reasoning and structure feedback conversations but is an incomplete fit for assessing writing. Lee et al [5] investigated the use of medical record templates in an EMR system, and the result showed that residents appreciate templates, but the overall documentation quality remained unknown. Other studies have developed curricula for better understanding of medical documentation [6, 7, 9, 10]. However, these curricula and study results are insufficient for use with medical record writing assessment.

In this regard, a clinically-applicable generalized note quality assessment tool needs to be developed. Our residents faced challenges in using the EMR systems for documentation in daily clinical practice. For example, the transition from clinical observation to medical writing and then to the EMR system requires time and effort. In the current EMR system, information is often presented in segments, splitting data from multiple screens and modules in various formats. EMR writing could overwhelm residents with massive volumes of data; it may stunt critical clinical thinking and restrict doctor-patient communication, weakening interprofessional collaboration [11,12,13,14,15]. Consequently, using note templates in the EMR system and copy-and-paste from previous notes are frequently used strategies; however, these dramatically decrease the quality of medical records [16, 17]. Although some departments have created medical record templates based on their daily routine, the effectiveness of these templates lacks proper evaluation and investigation.

From 2018 to 2019, the Medical Quality Review Committee (MQRC) in our hospital held a competition to improve the note quality of EMRs. The competition aimed to raise healthcare providers’ awareness of medical records and investigate medical record training in departments/stations so that clinical teachers could construct a systematic medical record training programme. Hospital staff, including attending physicians, residents, interns, nurse practitioners, and medical students, participated. The competition comprised two stages: the first stage contained (1) medical record quality review (50%) and (2) self-report (20%); the second stage was an oral presentation (30%). The winners received awards and certificates. This competition was funded by our hospital and the MQRC.

This study reports the assessment of note quality obtained by reviewing medical records. It aims to identify the critical issues in the context of EMRs written by residents from various departments. The research questions are as follows: (1) What is the overall note quality among residents of different specialties? (2) Does note quality vary between residents of different departments/specialties? This study was reviewed and approved by the Institutional Review Board of the National Cheng Kung University Hospital.

Method

Data collection

Out of 37 departments in our hospital, 32 participated in the competition; the participation rate was 87% (Table 1). The administrative staff of each station, who had no prior knowledge of the competition, were asked to randomly select five inpatient records from the station by using a computer-generated list. Ultimately, 149 inpatient records were collected.

Rating process

To examine the quality of inpatient records, the MQRC first produced a draft checklist based on our hospital’s clinical needs and a literature review [3,4,5,6,7,8,9,10]. Considering our needs in assessing overall documentation quality in clinical environments, the MQRC then held interactive workshops involving multiple departments at our hospital. In the workshops, doctors and medical experts considered the unique needs of each specialty. Medical records committee members and senior doctors in the MQRC revised the checklist based on workshop feedback.

The final version of the inpatient record checklist contained six note types and twenty items (Table 2). The six note types were as follows: (1) admission notes (25%); (2) problem list (8%); (3) progress notes (25%); (4) weekly summaries (4%); (5) discharge notes (25%); and (6) overall performance (13%). The total score was 100. Furthermore, an ‘outstanding performance’ earned up to nine additional points (Table 2, Items 21–23). Each note type carried a different percentage of points based on its function in an inpatient record. Each note type and item was rated as excellent, good, fair, or poor.
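The weighting scheme can be sketched in a few lines of Python. This is a minimal illustration, not the MQRC's actual scoring procedure: the weights and the nine bonus points come from the text, whereas the mapping from ratings to fractions of the available points and the name `total_score` are assumptions made for the example.

```python
# Note-type weights (points out of 100) as stated in the text.
WEIGHTS = {
    "admission notes": 25,
    "problem list": 8,
    "progress notes": 25,
    "weekly summaries": 4,
    "discharge notes": 25,
    "overall performance": 13,
}

# ASSUMPTION: the text does not say how ratings convert to points;
# these fractions are hypothetical placeholders.
RATING_FRACTION = {"excellent": 1.0, "good": 0.75, "fair": 0.5, "poor": 0.25}

MAX_BONUS = 9  # additional points for 'outstanding performance' (Items 21-23)


def total_score(ratings, bonus=0):
    """Return the weighted total for one inpatient record.

    ratings maps each note type to 'excellent', 'good', 'fair', or 'poor'.
    bonus is the number of extra points awarded, from 0 to MAX_BONUS.
    """
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must cover exactly the six note types")
    if not 0 <= bonus <= MAX_BONUS:
        raise ValueError("bonus must be between 0 and MAX_BONUS")
    base = sum(WEIGHTS[note] * RATING_FRACTION[ratings[note]] for note in WEIGHTS)
    return base + bonus


# The six weights account for the full 100 points.
assert sum(WEIGHTS.values()) == 100
```

Under these assumed fractions, a record rated 'good' on every note type would receive 75 points before any bonus.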

The seven raters were from the departments of Nephrology (1), Neurology (1), Internal Medicine (1), Obstetrics and Gynaecology (1), Paediatrics (1), and Cardiovascular Surgery (2). To avoid discrepancies caused by multiple authors, the rating process only used notes written by a single resident. The raters independently scored the 149 medical records based on the checklist, and the final scores were averaged.

Data analysis

This study used the scores obtained for six note types and twenty items. Data were categorised into five groups: general medicine, surgery, paediatrics, obstetrics and gynaecology, and other departments. Scores were analysed using descriptive statistics, and ANOVA was used to examine the average differences between and within groups. Owing to the unequal variance of the five groups, we used generalized least squares instead of ordinary least squares to calculate the differences.
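The between-group and within-group comparison can be illustrated with a classical one-way ANOVA F statistic. The sketch below uses only the Python standard library and hypothetical scores. It does not reproduce the generalized least squares adjustment for unequal variances described above, and the function name `one_way_anova_f` is an assumption made for the example.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more scores than groups")
    grand_mean = mean(score for g in groups for score in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for three groups; NOT data from this study.
example = [[70, 72, 74], [75, 77, 79], [80, 82, 84]]
f_stat, df_b, df_w = one_way_anova_f(example)
```

A large F value indicates that the group means differ by more than the within-group spread would suggest. With unequal group variances, as in this study, the classical F test can be unreliable, which is why an adjusted estimator is needed.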

Results

Overall performance

The overall quality of medical records was rated from ‘fair’ to ‘good’ (Table 3).

Inter-comparison among note types and the five groups

To investigate each note type’s performance, we calculated the standardised scores (group mean/total score). As Table 4 shows, the weekly summary needed the most improvement (0.66), followed by progress notes (0.71), problem list (0.73), overall performance (0.73), admission notes (0.79), and discharge notes (0.81). The format of progress notes was subjective-objective-assessment-plan (SOAP), but we found that duplication often occurred in the ‘subjective’ section, which led to low scores in this note type. This finding showed that residents used a copy-and-paste strategy rather than updating the notes with patients’ daily changes and summarising daily information when writing progress notes.
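The standardisation step can be sketched as follows. The standardised scores are those reported above, and the maximum points are the checklist weights from the Method section. Treating the "total score" in the formula as the maximum points available for each note type is an assumption, as are the function names. The implied mean points are approximate because the reported values are rounded.

```python
# Maximum points per note type (checklist weights from the Method section).
MAX_POINTS = {
    "admission notes": 25,
    "problem list": 8,
    "progress notes": 25,
    "weekly summaries": 4,
    "discharge notes": 25,
    "overall performance": 13,
}

# Standardised scores as reported in the text.
REPORTED_STD = {
    "admission notes": 0.79,
    "problem list": 0.73,
    "progress notes": 0.71,
    "weekly summaries": 0.66,
    "discharge notes": 0.81,
    "overall performance": 0.73,
}


def standardised_score(group_mean, max_points):
    """Standardised score = mean points / maximum points (ASSUMED reading)."""
    if max_points <= 0:
        raise ValueError("max_points must be positive")
    return group_mean / max_points


def implied_mean_points(std_score, max_points):
    """Invert the standardisation to recover the approximate mean points."""
    return std_score * max_points


# Rank note types from weakest to strongest; ties keep dictionary order.
weakest_first = sorted(REPORTED_STD, key=REPORTED_STD.get)
```

Dividing by the maximum puts note types with very different weights, such as weekly summaries (4 points) and discharge notes (25 points), on a common 0 to 1 scale so that they can be compared directly.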

Additionally, we compared the total score differences between the five groups. The EMRs written by residents of other departments gained the highest total score (80.20), followed by obstetrics and gynaecology (78.02), paediatrics (77.47), general medicine (75.58), and surgery (73.92).

To understand the performance of each item among the five groups, ANOVA analysis was conducted with grand means as a reference group. As Table 5 shows, residents of other departments gained significantly higher scores than the grand mean in the following note types:

(1) Admission notes: Item 3 (0.76) and Item 4 (0.36)

(2) Problem list: Item 5 (0.24) and Item 6 (0.30)

(3) Progress notes: Item 8 (0.17) and Item 12 (0.40)

(4) Weekly summary: none

(5) Discharge notes: Item 14 (0.40) and Item 15 (0.18)

(6) Overall performance: Items 18 (0.15), 19 (0.32), and 20 (0.26)

Among the twenty items, surgical residents gained only one item score higher than the grand mean (Item 12 in progress note, 0.17). In addition, surgical residents showed significantly low scores on the following seven items:

(1) Admission notes: Items 1 (−0.18), 3 (−0.26), and 4 (−0.21)

(2) Problem list: Item 5 (−0.13)

(3) Weekly summary: Item 13 (−0.21)

(4) Discharge notes: Item 14 (−0.32)

(5) Overall performance: Item 19 (−0.15)

For general medicine, only the Item 12 (− 0.18) score was significantly lower than the grand mean. Paediatric residents received scores significantly higher than the grand mean for Items 2 (0.23), 3 (0.28), 7 (0.29), 9 (0.16), and 19 (0.14). Obstetrics and gynaecology residents earned scores significantly higher than the grand mean for Items 1 (0.24), 12 (0.22), 13 (0.29), 18 (0.15), 19 (0.22), and 20 (0.18).
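Comparing each group with the grand mean, rather than with one chosen reference group, can be sketched as follows. For illustration, the sketch uses the five group total scores reported above instead of the item-level scores in Table 5, so it does not reproduce the coefficients listed there. Taking the grand mean as the unweighted mean of the group means is an assumption, since the text does not state whether group sizes were taken into account.

```python
# Group total scores as reported in the text.
GROUP_TOTALS = {
    "other departments": 80.20,
    "obstetrics and gynaecology": 78.02,
    "paediatrics": 77.47,
    "general medicine": 75.58,
    "surgery": 73.92,
}


def deviations_from_grand_mean(group_means):
    """Return (grand_mean, {group: group_mean - grand_mean}).

    ASSUMPTION: the grand mean is the unweighted mean of the group means.
    """
    if not group_means:
        raise ValueError("group_means must not be empty")
    grand = sum(group_means.values()) / len(group_means)
    return grand, {group: m - grand for group, m in group_means.items()}


grand_mean, deviations = deviations_from_grand_mean(GROUP_TOTALS)
```

A positive deviation marks a group that scored above the grand mean and a negative deviation one that scored below it. Whether a deviation is statistically significant depends on its standard error, which this sketch does not estimate.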

Discussion

This study contributes to the assessment of note quality in residency in the EMR era. Based on previous studies that assessed note quality in a particular format or a specific department [3, 4, 8], we developed an overall clinically usable checklist and assessed its effectiveness by examining our residents’ medical record writing. The results indicated that residents from different departments demonstrated diverse levels of performance. This finding expanded previous studies indicating that residents demonstrated inconsistencies in note quality [5, 15, 18, 19]. This finding gives new insight into note quality and can be useful for creating medical record templates. The results also suggest that duplications and incorrect documentation processes obscure the use of the EMR system in complex clinical scenarios.

First, of the six note types, the weakest was the weekly summary, followed by progress notes. We also found that some residents copied data from admission notes to create progress notes instead of updating daily examination results. As EMR systems comprise large volumes of data, residents need to record everyday changes and select useful data to complete precise and concise documentation. Unlike discharge notes, which show a summary with written orders, weekly summaries and progress notes require careful clinical reasoning skills, including collecting the patient’s background details and screening data to assess relevant and critical information. The massive amount of data in the EMR system may hinder the process of constructing the notes de novo or receiving essential feedback from residents’ advisers or colleagues. Residents might ignore the inter-connection among note types within EMRs, which leads to a long but pointless record. In addition, when residents relied solely on copy-and-paste from previous records or templates in the EMR system, the feedback from senior doctors was likely not meaningful because the records were generated by the computer system rather than through critical thinking. In the United States, more than 60% of residents duplicate data without confirming their accuracy, which leads to 2–3% of inappropriate diagnoses [20]. Hence, the competencies of medical knowledge and system-based practice could be particularly vital for improving note quality in the EMR system [11, 20].

Second, we found differences among the groups. Performance varied depending on the unique affordances and workloads of each department. Surgery had the lowest score among the five groups. They performed well solely on Item 12, which was the record for the operation. This could be a result of the specific culture of surgery, for example, heavy workload, time pressure, insufficient information from interviews, lack of feedback, and the focus on surgical skills training. As such, we propose that surgical residents might require extra opportunities to learn clinical reasoning processes and chronological descriptions of the EMR system, rather than relying overly on templates and the copy-and-paste strategy.

In conclusion, meaningful experiences in using the EMR system should be consistently implemented in clinical training. The integration of the EMR system and core competencies should be rigorously designed and assessed. Enhancing EMR as a useful tool could benefit not only the level of clinical skills of residents but also their attitudes and professionalism in clinical practice [12, 21].

Pedagogical suggestion

A previous study suggested that EMRs can influence the ways in which residents develop clinical reasoning skills and documentation strategies [21]. We further suggest that eliminating duplication should be a key factor in resident education. The findings of this study show that our residents may lack a comprehensive review of the systems and patients’ clinical information. In the era of specialised divisions in medicine, a comprehensive review is necessary because it may affect more than 10% of final clinical diagnoses [22]. Some suggestions are provided to mitigate the negative educational impact of EMRs. First, meetings for residents, attending physicians, and other healthcare providers can be held for effective interprofessional interactions. Second, residents can be trained to interact with patients before directly reviewing EMRs [17] to decrease their reliance on computer data. Third, the introduction of a systematic coaching programme that integrates important elements and strategies as a comprehensive course can help clinical teachers train residents effectively.

Limitations

This study had some limitations. First, an inpatient record checklist was created for the clinical assessment. The development process largely relied on the discussions of medical experts. Lack of validity is a major limitation, and the checklist needs further revision. Second, although the sample comprised residents from various departments, it only included 7–10% of the residents in our hospital. In future studies, we will expand the sample size to other hospitals and examine the checklist’s reliability and validity to promote generalization. Despite these limitations, this study provides new insights into the directions for future studies on residents’ note quality and the use of EMRs.

Conclusion

This study adds to the existing body of literature and demonstrates the need to improve note quality in residents’ EMRs. In addition, the various results of note types and groups offer potential insights into the diverse cultures and needs of EMR training for residents in different specialties. From the assessment of authentic medical records in the EMR system, we provided pedagogical suggestions that thoroughly encompassed the spectrum of resident education.

Availability of data and materials

Not applicable.

Abbreviations

- ANOVA: Analysis of variance

- EMR: Electronic Medical Record

- MQRC: Medical Quality Review Committee

- SD: Standard Deviation

- SE: Standard Error

- Std.: Standardised Scores

References

Core Entrustable Professional Activities for Entering Residency. Publication of the AAMC. Available at: aamc.org. Accessed 20 Jul 2021.

Enaizan O, Zaidan AA, Alwi NHM, Zaidan BB, Alsalem MA, Albahri OS, et al. Electronic medical record systems: decision support examination framework for individual, security and privacy concerns using multi-perspective analysis. Heal Technol. 2020;10:795–822. https://doi.org/10.1007/s12553-018-0278-7.

Baker EA, Ledford CH, Fogg L, Way DP, Park YS. The IDEA assessment tool: assessing the reporting, diagnostic reasoning, and decision-making skills demonstrated in medical students’ hospital admission notes. Teach Learn Med. 2015;27(2):163–73. https://doi.org/10.1080/10401334.2015.1011654.

Thammasitboon S, Rencic J, Trowbridge R, Olson A, Sur M, Dhaliwal G. The assessment of reasoning tool (ART): structuring the conversation between teachers and learners. Diagnosis. 2018;5(4):197–203. https://doi.org/10.1515/dx-2018-0052.

Lee WW, Alkureishi ML, Wroblewski KE, Farnan JM, Arora VM. Incorporating the human touch: piloting a curriculum for patient-centered electronic health record use. Med Educ Online. 2017;22(1):1396171. https://doi.org/10.1080/10872981.2017.1396171.

Aylor M, Campbell EM, Winter C, Phillipi CA. Resident notes in an electronic health record: a mixed-methods study using a standardized intervention with qualitative analysis. Clin Pediatr. 2017;56(3):257–62. https://doi.org/10.1177/0009922816658651.

Otokiti A, Sideeg A, Ward P, Dongol M, Osman M, Rahaman O, et al. A quality improvement intervention to enhance performance and perceived confidence of new internal medicine residents. J Community Hosp Intern Med Perspect. 2018;8(4):182–6. https://doi.org/10.1080/20009666.2018.1487244.

Liang JW, Shanker VL. Education in neurology resident documentation using payroll simulation. J Grad Med Educ. 2017;9(2):231–6. https://doi.org/10.4300/JGME-D-16-00235.1.

As-Sanie S, Zolnoun D, Wechter ME, Lamvu G, Tu F, Steege J. Teaching residents coding and documentation: effectiveness of a problem-oriented approach. Am J Obstet Gynecol. 2005;193(5):1790–3. https://doi.org/10.1016/j.ajog.2005.08.004.

Patel A, Ali A, Lutfi F, Nwosu-Lheme A, Markham MJ. An interactive multimodality curriculum teaching medicine residents about oncologic documentation and billing. MedEdPORTAL. 2018;14:10746. https://doi.org/10.15766/mep_2374-8265.10746.

Tierney MJ, Pageler NM, Kahana M, Pantaleoni JL, Longhurst CA. Medical education in the electronic medical record (EMR) era: benefits, challenges, and future directions. Acad Med. 2013;88(6):748–52. https://doi.org/10.1097/ACM.0b013e3182905ceb.

Welcher CM, Hersh W, Takesue B, Stagg Elliott V, Hawkins RE. Barriers to medical students’ electronic health record access can impede their preparedness for practice. Acad Med. 2018;93(1):48–53. https://doi.org/10.1097/ACM.0000000000001829.

Gagliardi JP, Rudd MJ. Sometimes determination and compromise thwart success: lessons learned from an effort to study copying and pasting in the electronic medical record. Perspect Med Educ. 2018;7:4–7. https://doi.org/10.1007/s40037-018-0427-8.

Hammoud MM, Margo K, Christner JG, Fisher J, Fischer SH, Pangaro LN. Opportunities and challenges in integrating electronic health records into undergraduate medical education: a national survey of clerkship directors. Teach Learn Med. 2012;24(3):219–24. https://doi.org/10.1080/10401334.2012.692267.

Stewart WF, Shah NR, Selna MJ, Paulus RA, Walker JM. Bridging the inferential gap: the electronic health record and clinical evidence: emerging tools can help physicians bridge the gap between knowledge they possess and knowledge they do not. Health Aff. 2007;26(Suppl1):w181–91. https://doi.org/10.1377/hlthaff.26.2.w181.

Hirschtick RE. Copy-and-paste. JAMA. 2006;295(20):2335–6. https://doi.org/10.1001/jama.295.20.2335.

Peled JU, Sagher O, Morrow JB, Dobbie AE. Do electronic health records help or hinder medical education? PLoS Med. 2009;6(5):e1000069. https://doi.org/10.1371/journal.pmed.1000069.

Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med. 2006;355(21):2217–25. https://doi.org/10.1056/NEJMra054782.

McBee E, Ratcliffe T, Goldszmidt M, Schuwirth L, Picho K, Artino AR, et al. Clinical reasoning tasks and resident physicians: what do they reason about? Acad Med. 2016;91(7):1022–8. https://doi.org/10.1097/ACM.0000000000001024.

Tsou AY, Lehmann CU, Michel J, Solomon R, Possanza L, Gandhi T. Safe practices for copy and paste in the EHR. Appl Clin Inform. 2017;26(01):12–34. https://doi.org/10.4338/ACI-2016-09-R-0150.

Stephens MB, Gimbel RW, Pangaro L. Commentary: the RIME/EMR scheme: an educational approach to clinical documentation in electronic medical records. Acad Med. 2011;86(1):11–4. https://doi.org/10.1097/ACM.0b013e3181ff7271.

Hendrickson MA, Melton GB, Pitt MB. The review of systems, the electronic health record, and billing. JAMA. 2019;322(2):115–6. https://doi.org/10.1001/jama.2019.5667.

Acknowledgements

None.

Funding

This work was supported by grants from the National Science Council of Taiwan (MOST 108–2314-B–006–097 to JNR).

Author information

Contributions

HH and JNR led the conception, design, interpretation of data, writing of the article, and critical appraisal of the content, and are the guarantors of the paper. LLK contributed to the literature review and data analysis. CCL contributed to organizing the competition. CCT, HWH, SYW, YNH, PYL, JLW, and PFC were responsible for data collection. All authors have approved the final version of the article submitted.

Ethics declarations

Ethics approval and consent to participate

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. The institutional review board at National Cheng Kung University Hospital approved a waiver of the requirement for obtaining informed consent (Approval No. B-EX-108–048).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplemental file

A. Note template 1. For intensive care unit patients. B. Note template 2. For intensive care unit patients. C. Note template for endocrinology patients.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hung, H., Kueh, LL., Tseng, CC. et al. Assessing the quality of electronic medical records as a platform for resident education. BMC Med Educ 21, 577 (2021). https://doi.org/10.1186/s12909-021-03011-0

DOI: https://doi.org/10.1186/s12909-021-03011-0