Abstract

Introduction

Open Online Courses (OOCs) are increasingly presented as a possible solution to the many challenges of higher education. However, there is currently little evidence available to support decisions around the use of OOCs in health professions education. The aim of this systematic review was to summarise the available evidence describing the features of OOCs in health professions education and to analyse their utility for decision-making using a self-developed framework consisting of point scores around effectiveness, learner experiences, feasibility, pedagogy and economics.

Methods

Electronic searches of PubMed, Medline, Embase, PsycINFO and CINAHL were made up to April 2019 using keywords related to OOC variants and health professions. We accepted any type of full-text, English-language publication, with no exclusions made on the basis of study quality. Data were extracted using a custom-developed, a priori critical analysis framework comprising themes relating to effectiveness, economics, pedagogy, acceptability and learner experience.

Results

Fifty-four articles were included in the review, of which 46 were of the lowest levels of evidence. Most courses were offered by institutions based in the United States (n = 11) and the United Kingdom (n = 6). Most studies provided insufficient course detail to make any confident claims about participant learning, although studies published from 2016 were more likely to include information around course aims and participant evaluation. In terms of the five categories identified for analysis, few studies provided sufficiently robust evidence to be used in formal decision-making in undergraduate or postgraduate curricula.

Conclusion

This review highlights a poor state of evidence to support or refute claims regarding the effectiveness of OOCs in health professions education. Health professions educators interested in developing courses of this nature should adopt a critical and cautious position regarding their adoption.

Background

Open Online Courses (OOCs), including Massive Open Online Courses (MOOCs), have been characterised as “the next evolution of networked learning” [1] and identified as a platform that may expand access to higher education and support innovative teaching practices. The term MOOC, coined in 2008, refers to online courses offered by institutions that attract thousands of participants, partly because they are “open”, which usually means that they are not credit-bearing and are therefore free to anyone with an internet connection. While formal research in this emerging field is limited, many supporters of the format have embraced its implementation with enthusiasm [2]. There has been a dramatic increase in the development and implementation of MOOCs across many aspects of higher education and, more recently, within health professions education [3].

Few studies have demonstrated significant benefits of OOCs for student learning, professional workforce shortages, or the need to disrupt more “traditional” approaches to teaching and learning. The lack of evidence in the field of health professions education has not, however, diminished the enthusiasm with which they are discussed [4, 5]. Mehta and colleagues (2013) [5] suggest that “no longer will a limited number of medical schools or faculty constrain our ability to educate medical students” and that “learning communities will form naturally, and students will need to take ownership of their education”. However, this also articulates a divide between pedagogical vision and professions founded upon evidence-based principles.

To date, the most comprehensive review of MOOCs in health professions education has been by Liyanagunawardena and colleagues (2014) [3]. This review provided detailed overviews of the courses themselves but, importantly, did not appraise and synthesise the evidence regarding their effectiveness. Their conclusion, that MOOCs have the potential to make an important contribution to health professions education, was therefore not founded upon evidence. This lack of evidence is not limited to studies of OOCs in health professions and medical education. Critically reviewed literature is also scarce in the domain of OOCs in the more general higher education literature [6]. This weak foundation poses significant issues for academic institutions responsible for the design and implementation of evidence-based models of health professions education, and who are considering the large-scale adoption of MOOCs in their curricula.

This does not mean OOCs lack the potential to disrupt health professions education. There is evidence that they may introduce broader social connections, opportunities for enhanced collaboration, and exposure to many different perspectives, all of which change the educational space in ways that may improve student learning. The original MOOCs were informed by emergent theories of knowledge and learning, such as connectivism, and supported the development of socially-negotiated and relationally-constructed knowledge, as well as moving the teacher towards the periphery of the learning interaction [7]. These environments may facilitate a type of learning that is self-organised, collaborative, and open, where the learner is at the centre of the process. The networked nature of the course leads to a high number of interactions between people and resources, where learners organise and determine the process and to some extent the outcomes, making the course relatively unpredictable [7]. It may be that this disruptive innovation has the potential to significantly change how we think about learning in the twenty-first century [8] or it may simply be a “good thing to think with” [9]. It is presently difficult to say with confidence whether MOOCs in health professions education enhance student learning or not.

This systematic review therefore aimed to 1) summarise the available evidence describing the use of OOCs in health professions education; 2) describe the features of these courses; and 3) determine their effectiveness against performance outcomes of relevance to health professions education providers.

Methods

The protocol for this review was registered on PROSPERO in July 2016 (#CRD42016042421). Ethics approval was deemed unnecessary for this study as it was a systematic review of the literature. Electronic searches of PubMed, Medline, Embase, PsycINFO and CINAHL databases were conducted from inception to April 2019 to identify relevant publications in the field of OOCs in health professions education. Each database was searched using the following terms: ‘massive open online course’ OR ‘MOOC’ OR ‘open online course’ OR ‘OOC’ OR ‘distributed online collaborative course’ OR ‘DOCC’ OR ‘small private online course’ OR ‘SPOC’, without any restrictions. The last two terms were included due to their relatively broad context and potential to identify relevant studies (despite not being truly ‘open’ in nature). The intervention was defined as any OOC that was designed to address an aspect of health considered relevant to the scope of practice of health professional students. Courses targeting undergraduate or postgraduate training were deemed appropriate for inclusion.
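The search string described above can be assembled programmatically so that the same terms are applied consistently across databases. The following is a minimal sketch only: the term list comes from the text, but the function name `build_query` and the use of double quotes for phrase matching are assumptions, and each database has its own syntax that would need checking before use.

```python
# Search terms as listed in the Methods section of this review.
SEARCH_TERMS = [
    "massive open online course",
    "MOOC",
    "open online course",
    "OOC",
    "distributed online collaborative course",
    "DOCC",
    "small private online course",
    "SPOC",
]


def build_query(terms):
    """Join terms with OR, quoting each so multi-word phrases stay intact.

    Hypothetical helper: the quoting style is an assumption and may need
    adapting to the syntax of each individual database.
    """
    return " OR ".join(f'"{term}"' for term in terms)


query = build_query(SEARCH_TERMS)
print(query)
```

Keeping the terms in a single list makes it easier to document and reproduce the search when a review is updated.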

As we expected the search to yield a wide variety of studies, no exclusions were made on the basis of study type. Studies must have been published in full text, in the English language, and targeted towards any of the following health professions: medicine, physiotherapy, occupational therapy, nursing, radiology, speech and language therapy, dietetics, public health, dentistry and psychology. Grey literature was identified via Google Scholar using the same search terms as the database searches, with any literature included if it was identified from the first three pages of the Google search. Reference lists of included studies were hand-searched.

Study selection and data extraction were undertaken by two members of the research team, with random accuracy checks provided by another team member. Discrepancies were resolved by a third member of the team (when relevant) to derive consensus. We developed and piloted a standardised data extraction form to identify the key study characteristics (year and location of publication), study type (methodology), participant characteristics, key outcomes using a self-developed framework (described further), and quality appraisal. Assessment of risk of bias of included studies was undertaken using instruments specific to individual study designs. This approach limits the ability to pool judgments across studies but enables greater depth of evaluation within studies, in keeping with the focus of this review. Randomised controlled trials were evaluated using the Cochrane Risk of Bias tool; reviews were evaluated via the AMSTAR checklist; other study types were evaluated using the suite of The Joanna Briggs Institute quality appraisal instruments for cohort studies, pre/post test studies and commentaries/expert opinion. The ‘level of evidence’ was defined for all studies according to the extended version of the Australian National Health and Medical Research Council (NHMRC) hierarchy for intervention studies [10]. This hierarchy is the reference standard for appraising levels of evidence for health technology assessment in Australia and was developed following an extensive four-year pilot process involving a combination of evidence, theory and consultation, informed by existing tools such as those used by the National Institute for Clinical Excellence (adapted from the Scottish Intercollegiate Guidelines Network) [11], the National Health Service Centre for Reviews and Dissemination [12] and the Centre for Evidence Based Medicine (CEBM) hierarchy [13].

Individual studies are rated on a scale ranging from I (systematic reviews of randomised controlled trials) to IV (case series with either post-test or pre-test/post-test outcomes), with lower numerals equating to higher levels of evidence. Commentary or expert opinion papers do not feature on this scale, so were attributed a score of ‘V’ (lowest form of evidence). No studies were excluded from the review on the basis of study quality.

Given the relative infancy of research in this field, data were not anticipated to be suitable for inclusion in a meta-analysis of primary and secondary outcomes. Data were therefore analysed using a mixed-methods approach of quantitative synthesis (incorporating descriptive summary statistics) and narrative summary of relevant data regarding the impact of OOCs in health professions education. In order for data to be permissible, findings needed to be clearly interpretable via either quantitative (e.g. summary statistics, count data) or qualitative means (e.g. user experience statements). In order to optimise the relevance of OOC research in the field of health professions education, data needed to be evaluated against metrics of importance to education administrators and performance outcomes. We reviewed the available literature to identify suitable tools for the purpose of such directed reporting but failed to identify any that contained the requisite detail for this study. Review findings were therefore summarised using a user-defined OOC evaluation framework, defined a priori for this review, that comprised five key outcome ‘pillars’, as follows:

1) Effectiveness (primary outcome): i.e. did the OOC increase learner knowledge?

2) Learner perceptions (opinions / attitudes): i.e. was the OOC enjoyable or rewarding?

3) Acceptability (feasibility / usability): i.e. how well could learners engage with the OOC?

4) Pedagogy: i.e. was the OOC based upon a stated educational framework or theory?

5) Economics: i.e. was the OOC evaluated against a measure of cost and/or value?

Data from each study were mapped against each pillar to derive five quantitative point estimates that reflected the total number of studies providing admissible data. These data were summarised as a percentage of the total number of included studies and represented visually via radar graph using Microsoft Excel. Qualitative data such as participant testimonies or user feedback was considered requisite evidence to satisfy the meeting of any OOC pillar.
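The mapping of studies to pillars described above reduces to a simple count-to-percentage calculation. The sketch below illustrates that arithmetic under stated assumptions: the total of 54 is the number of included articles reported in this review, but the per-pillar counts in `example_counts` are hypothetical placeholders for illustration only and are not the review's results. The function name `pillar_percentages` is likewise an assumption.

```python
TOTAL_STUDIES = 54  # number of included articles reported in this review

# Hypothetical counts of studies providing admissible data per pillar.
# These values are illustrative placeholders, NOT the review's findings.
example_counts = {
    "Effectiveness": 27,
    "Learner perceptions": 9,
    "Acceptability": 6,
    "Pedagogy": 3,
    "Economics": 0,
}


def pillar_percentages(counts, total):
    """Express each pillar count as a percentage of all included studies."""
    if total <= 0:
        raise ValueError("total must be a positive number of studies")
    return {
        pillar: round(100 * count / total, 1)
        for pillar, count in counts.items()
    }


percentages = pillar_percentages(example_counts, TOTAL_STUDIES)
for pillar, pct in percentages.items():
    print(f"{pillar}: {pct}%")
```

The resulting five percentages are the values that would be plotted on the axes of a radar graph, one axis per pillar.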

Results

Aim 1 (overview of included studies of OOCs in health professions education)

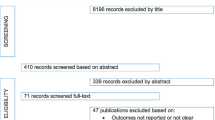

The electronic database search yielded 2417 records and hand-searching retrieved an additional 15 studies. After de-duplication and removal of records based on titles and abstracts, we screened 128 full-text articles against the inclusion criteria, resulting in 54 articles being included in the review (Fig. 1).

Detailed information regarding the characteristics of included articles is presented in Table 1. Most included papers were of a narrative / opinion (n = 24) or descriptive / case series (n = 22) design, meaning 46 of the 54 included articles were deemed to be of the lowest levels of evidence (levels IV / V) according to the NHMRC hierarchy. One randomised controlled trial (RCT) and two cohort/case control studies were included. Four review articles were included, however none were systematic reviews of RCTs (level I evidence). The RCT was deemed to be at high risk of bias due to lack of blinding of participants to knowledge of group allocation, which may have affected self-reported outcome data. Complete details regarding quality appraisals of individual studies are provided in the Additional file 1: Table S1, Additional file 2: Table S2, Additional file 3: Table S3, Additional file 4: Table S4, Additional file 5: Table S5, Additional file 6: Table S6.

Aim 2 (features of OOCs in this study)

No single health profession was overtly over- or under-represented, with a spread of courses offered across medical, nursing and the allied health professions. Most courses were delivered by academic centres from either the United States of America (n = 11), the United Kingdom (n = 6) or Australia and China (n = 2). The number of participants enrolled in OOCs ranged from as low as 8 (who were participating in a qualitative study) to as high as 35,968. OOCs were reported to have been offered for durations ranging from a single session of one hour to 18 weeks. Some uncertainty existed regarding the precise course duration for some studies (see Table 1 for additional detail).

Of the 36 studies that provided sufficient detail to describe the online course, 32 defined the aim(s) of the OOC. Most were developed with the intent of improving participants’ knowledge and 15 studies reported outcome data related to this aim.

Sixteen studies defined the methods of assessment for evaluating the OOC. Many articles incorporated online quizzes to assess the extent of knowledge acquisition, either after an individual module or upon conclusion of the OOC. Two of these studies reported the use of baseline testing. Two studies required the submission of a written essay to evaluate the impact of the course [15, 57], one of which was peer reviewed [57].

Most OOCs involved at least one element of participant ‘interaction’ although more recent articles included 3–5 different interactive elements. These included embedded video lectures with interactive revision questions, online lessons, discussion forums for peer engagement, or formative quizzes (e.g. multiple choice questions) that were either mandatory or voluntary. Most OOCs presented course materials using existing platforms such as Coursera, Udemy, EdX, and Canvas.

Aim 3 (evaluation of the effectiveness of OOCs for health professions education)

As anticipated, data were not suitable for formal meta-analysis. The very low percentage of studies that reported against any of the core outcomes (indicated by the small area of shading relative to the total graph region in Fig. 2 below) demonstrates that the evaluation of OOCs against outcomes of importance to health professions educators was rare. This was particularly evident across the ‘economic’ and ‘pedagogical’ pillars of our outcome framework.

Effectiveness

Twenty-three studies presented participant self-reported data concerning changes in the knowledge and/or behaviours of learners after completion of the MOOC. The following descriptions are presented as examples of the ways in which articles reported on the effectiveness of the courses with respect to achieving the stated aims. One paper provided comparative data with self-directed learning, revealing no differences between groups for either knowledge or perceived confidence in patient management. Another reported that 85% of its health professional learner participants believed that it changed the care of their patients (n = 300). Another reported that 93% of its participants believed the course had changed their lives (n = 516). Two studies [20, 34] attempted to use controls to determine differences in outcomes between respondents who had used MOOCs in isolation and respondents who had used MOOCs in addition to “traditional” courses. One qualitative study [54] attempted to map students’ responses from focus group discussions to Herrington’s authentic learning framework [66] as a way to demonstrate the achievement of learning outcomes related to the development of graduate attributes.

Only one included study was a randomised controlled trial that directly compared the effect of a MOOC to an alternative model of education. This study by Hossain et al. (2015) [30] compared the delivery of a 5-week online learning module on spinal cord injuries via either a weekly guided MOOC with interactive Facebook discussions or a conventional self-paced module, in a small sample of undergraduate physiotherapy students from Bangladesh, and evaluated its effectiveness in improving knowledge, confidence and/or satisfaction. The study failed to demonstrate any significant favourable effects of the MOOC model of education on these outcomes. Students did, however, report some positive aspects of the MOOC relating to the unique opportunities it afforded for interacting with students from other countries. While this study does offer some insight into the use of MOOCs in health professions education in general, the findings should be interpreted with caution, especially considering the high risk of bias as a result of the lack of blinding.

Learner experience (attitudes of health professionals toward their learning)

Seven studies reported on outcome measures relating to the learner experience of participating in the MOOC. The most common measure was participant satisfaction, with twelve studies reporting overwhelmingly positive experiences of participation in MOOCs. However, one study [30] reported that participants were neutral in their assessment of satisfaction (Likert scale score of 0.0; 95% CI −1.1 to 1.2), and another [21] reported that only 56% of learners were satisfied with the quality of the course discussion forums.

One study [39] reported strong agreement from participants regarding the helpfulness of a virtual patient experience. One study [21] included qualitative comments from participants, reporting that the course helped with self-discovery and expanded their view of the world, whereas another [25] reported that participants found the course provided an opportunity to engage with other health professionals and health professional students from around the globe.

Acceptability (feasibility / usability)

Few studies reported participant feedback on the acceptability (feasibility or usability) of the OOC format. This item was focused on the self-reported ability of the learner to effectively engage with the course learning materials and methods. Findings included studies reporting the course being ‘too technical’ (n = 1), trying to be too many things to too many people (n = 1), an excess of interactive screens (n = 1), technical problems for approximately 16% of participants such as broken sessions and issues concerning internet connectivity (n = 1), taking too much time (n = 1) and an excessive number of discussion posts and threads (n = 1). In addition, one study [60] found participants believed the course was a valuable supplement to the existing “traditional” course but that it should not be used as a replacement.

Pedagogy

While three studies [25, 30, 39] specifically described the included courses as xMOOCs, most of the descriptive studies included in this review described course features that would fit into an xMOOC-type design. These were characterised by features such as embedded video lectures, assigned reading texts, answering multiple choice questions, and participating in forum discussions. Another study [54] reported on the course design as being informed by cMOOCs and described the use of authentic learning as a pedagogical framework for the course structure. Finally, one study [64] reported on the use of the ADDIE model of instructional design (Analysis, Design, Development, Implementation, and Evaluation) in order to develop the course. No other articles reported on the development process of any courses.

Economics

While two articles included information related to the expense of course development (50,000 Euros and 10,000–50,000 dollars) [28, 46], no studies reported outcome measures relating to either a simple cost or value analysis, or comparative costs in the form of cost-benefit or cost-effectiveness analysis. We looked for evidence across the full spectrum of cost and value analyses, including cost-analyses (where outcomes are not considered), and breakeven analyses, and comparative approaches such as cost-minimisation analyses (where the outcomes are assumed equal), cost-benefit analyses (where costs and effects are considered in monetary units), and cost-effectiveness analyses (where outcomes are retained in natural units, such as measures of learning) [67, 68].

Discussion

This is the first review to systematically investigate the published literature regarding the use and efficacy of OOCs in the field of health professions education. The most prominent outcome from this review is the striking imbalance between the buoyant, anecdotal enthusiasm for their use in education practice and the robustness of the evidence regarding their effectiveness: only 54 papers were deemed eligible for inclusion, with 46 of these defined as low-level evidence according to the NHMRC hierarchy we used. This is a significant concern. While some may argue that progress need not always occur in response to evidence of benefit and that it could act as the driver to produce such evidence, we feel this represents an unacceptably high-risk approach to take in the field of health professions education, where the acquisition of core disciplinary principles underpins the development of clinical professional competencies. Academic education providers must be mindful of this when deciding on the best ways to achieve educational outcomes in an ecosystem that is expanding to include the field of OOCs.

The high prevalence of MOOCs from the USA and UK may be a result of the exclusion of articles in languages other than English, but this is not unusual in the literature [2, 69]. This skew towards developed, Western countries being the implementers and evaluators of MOOCs may impact upon participant perceptions and management of global health needs. This dominance of courses from developed countries is concerning, particularly when MOOCs are presented as educational alternatives for health care professionals in resource-constrained environments and developing countries [70].

While OOCs may be used to facilitate qualitative changes in teaching and learning practice, they require an approach to design that is quite different to the predominant form of MOOC [54]. Five studies in this review reported on the pedagogical framework used to design the course. In three cases the framework described was an xMOOC, the most common form of MOOC currently being implemented by the major providers. Institutions that choose technology platforms like Coursera and Udemy may do so in an attempt to focus on developing content rather than technology, but this means that educators may not have much choice in the kinds of activities their students complete. In about half of the articles the specific activities that participants were required to complete in the courses were not reported and, when they were, included watching videos and answering questions in forum discussions. While there is strong evidence in support of the notion that learning is socially constructed and that interaction is especially important in online learning, few studies in this review included elements that could be described as truly interactive. For example, the use of ‘embedded videos’ or ‘online lessons / modules’ is not interactive, despite author claims. Even in cases where articles in this study demonstrated an innovation in the MOOC space by, for example, including virtual patient cases in the traditional MOOC infrastructure, they still analysed outcomes using server logs and participant satisfaction surveys [39]. xMOOCs are arguably the least pedagogically sound variant if the outcome of interest is a qualitative change in teaching and learning behaviour, and they have been criticised for adopting a knowledge transmission mode of learning. In essence, they are considered to be technology-enriched, traditional, teacher-centred modes of instruction [8].

As this area of practice continues to evolve, clear distinctions between different kinds of MOOCs are becoming increasingly problematic. Future courses will need to integrate approaches across both formats [2]. Such MOOCs may be more likely to enhance innovative teaching and learning practices to inform the established ‘traditional’ method of health professions education. With this in mind, we feel the findings of the present review do not so much represent ‘evidence of a lack of effect’ as they depict ‘a lack of evidence of effect’. The distinction between the two positions is quite overt. The magnitude of interest in this field suggests OOCs may well be a model of education worthy of our attention. The precise nature of its suitability within academic healthcare education providers to address specific learning needs, however, is less clear. The tailoring of different types of OOCs to specific applications within this context will likely be an area of intense interest for future research.

The aim of using economic analyses for educational innovations is to provide low cost and high value approaches to teaching and learning, allowing evidence-based decision-making about the most appropriate allocation of what are often limited resources in an educational context [71, 72]. No such evidence for OOCs emerged from this review. While some economic analyses of MOOCs have previously been conducted, results have been difficult to interpret. For example, Hollands and Tirthali (2014) [70] found that, while the cost per learner of some MOOCs may be lower than for traditional online courses, they may only be cost-effective for the most motivated of learners. While the course itself may be costed less than equivalent campus-based courses, such simplistic modelling fails to acknowledge the costs associated with student services such as academic counselling, library services, tutoring, and proctoring for assessment [73, 74]. Inclusion of such factors in MOOC modelling has high potential to render the courses prohibitively expensive [70]. This does not mean that OOCs are unable to offer innovative, low cost, high value avenues for health professions education. However, until economic evaluations of theoretically and pedagogically sound OOCs are conducted, any claims toward these aspirations lack credibility. The combination of making open courses available to vulnerable learner populations, such as those in low income countries, along with fees for certification in the absence of high quality educational evidence of student outcomes and learning experience, further raises concerns of moral and professional accountability [75].

A crucial issue emerging from this review is the lack of strong evidence to support student learning via OOCs. One of the challenges facing research in this field is the question of how institutes should use the high volume of data generated from mass participant interactions within a learning environment [76]. Advanced automated analytic processes (e.g. data mining) may assist with such challenges but are scarcely accessible within health professions education. Furthermore, the availability of large data sets of user interactions within online platforms does little to inform health professions educators about the impact of their intervention upon learning and behaviour. Inherent challenges with OOC research, such as incomplete databases and distribution across multiple platforms and academic institutions, further highlight the need to critically examine the way we conduct research in this space to ensure ‘future proofing’ against the replication of previous pitfalls [6]. In order to improve the quality of data acquisition, it appears essential to develop a collaborative culture among researchers and educators operating within this field. In order for health professions educators to optimise the value of data arising from such courses for their disciplines, it would be prudent to establish a minimum standard of research robustness at the course design phase. Based on the stark lack of such high-quality data, it would be reasonable to expect further such studies to significantly impact upon future review conclusions in this area.

Limitations

An important factor limiting the applicability of our findings to health professions education is the very low level of evidence included within this review, with the largest volume of information coming from descriptive and commentary articles (n = 46). Findings should thus be interpreted with due caution in light of this fact. We also added one additional outcome pillar related to the ‘learner experience (opinions / attitudes)’ to the proposed method outlined in our published review registration protocol. This was in response to the nature and amount of data that emerged from several included papers that we felt warranted inclusion. While our framework encapsulated domains we felt to be of principal interest for critical evaluation related to this field of research, this was based upon consensus within our team rather than upon published critical literature. For example, we did not evaluate OOC completion rates, despite these being commonly reported, as they were felt to have minimal relevance to the impact of OOCs on health professions education. Future critical analyses in this field may adopt alternate approaches to ours.

Conclusion

This review found minimal high-quality evidence that could be used to support decision-making around the inclusion of MOOCs in the field of health professions education. From 2016 to 2019 there has been an increase in the volume of published studies in this domain of practice, albeit with only a small increase in rigour. The majority of articles prior to 2016 were commentary and opinion pieces, while those after 2016 have tended towards descriptive studies that captured simplistic data from participants. While OOCs may turn out to be a disruptive innovation with the potential to influence the nature of the teaching and learning interactions in health professions education, there is currently very limited robust evidence to support the claim. The ability of MOOCs to increase access to education through overcoming geographic boundaries and administrative processes is of significant appeal; however, close attention needs to be directed towards comprehensive, multifactorial evaluation of such courses from the perspectives of professionally accountable education institutes. There is an overt need for a vast increase in high quality research in this field. It is our belief that the implementation of MOOCs in health professions education cannot be upheld as sound, evidence-based pedagogical practice until future research demonstrates their precise role and effect on outcomes that are of critical importance to health professions education institutions.

Availability of data and materials

All relevant data collected during this study will be uploaded and shared on the publicly available repository at the University of the Western Cape (http://repository.uwc.ac.za/) when the final article is published.

Abbreviations

- AMSTAR: A MeaSurement Tool to Assess systematic Reviews
- CEBM: Centre for Evidence Based Medicine
- CINAHL: Cumulative Index to Nursing and Allied Health Literature
- DOCC: Distributed Online Collaborative Course
- MOOC: Massive Open Online Course
- NHMRC: National Health and Medical Research Council
- OOC: Open Online Course
- PROSPERO: Prospective Register of Systematic Reviews
- RCT: Randomised Controlled Trial
- SPOC: Small Private Online Course

References

Johnson L, Adams Becker S, Cummins M, Freeman A, Ifenthaler D, Vardaxis N. Technology Outlook for Australian Tertiary Education 2013–2018: An NMC Horizon Project Regional Analysis. Austin: ERIC: The New Media Consortium; 2013.

Veletsianos G, Shepherdson P. A Systematic Analysis and Synthesis of the Empirical MOOC Literature Published in 2013–2015. Int Rev Res Open Distributed Learn. 2016;17(2).

Liyanagunawardena TR, Williams SA. Massive open online courses on health and medicine: review. J Med Internet Res. 2014;16(8):e191.

Harder B. Are MOOCs the future of medical education? BMJ. 2013;346(Apr 26/2):f2666.

Mehta NB, Hull AL, Young JB, Stoller JK. Just imagine. Acad Med. 2013;88(10):1418–23.

Fournier H, Kop R, Durand G. Challenges to research in MOOCs. J Online Learn Teach. 2014;10(1):1.

Anders A. Theories and Applications of Massive Online Open Courses (MOOCs): The Case for Hybrid Design. Int Rev Res Open Distributed Learn. 2015;16(6).

Yuan L, Powell S. MOOCs and open education: Implications for higher education. In: University of Bolton, United Kingdom: JISC Centre for Educational Technology & Interoperability Standards; 2013.

Ross J, Gallagher MS, Macleod H. Making distance visible: Assembling nearness in an online distance learning programme. Int Rev Res Open Distributed Learn. 2013;14(4).

Merlin T, Weston A, Tooher R. Extending an evidence hierarchy to include topics other than treatment: revising the Australian 'levels of evidence'. BMC Med Res Methodol. 2009;9:34.

National Institute for Health and Clinical Excellence. National Institute for Health and Clinical Excellence. London: The guidelines manual; 2007.

Khan KS, Ter Riet G, Glanville JM, Sowden AJ, Kleijnen J. Undertaking systematic reviews of research on effectiveness. In: CRD's guidance for those carrying out or commissioning reviews. York: NHS Centre for Reviews and Dissemination: University of York; 2001.

Phillips B, Ball C, Sackett D, Badenoch D, Straus S, Haynes B, Dawes M. Oxford Centre for Evidence-Based Medicine Levels of evidence (may 2001). In. Oxford: Centre for Evidence-Based Medicine; 2001.

Bellack J. MOOCs: the future is here. J Nurs Educ. 2013;52(1):3–4.

Billings DM. Understanding massively open online courses. J Contin Educ Nurs. 2014;45(2):58–9.

Coughlan T, Perryman L-A. Learning from the innovative open practices of three international health projects: IACAPAP, VCPH and Physiopedia. Open Praxis. 2015;7(2):173–89.

Davies E. Will MOOCs transform medicine? BMJ. 2013;346(May 03/1):f2877.

DeSilets LD. No longer a passing fad. J Contin Educ Nurs. 2013;44(4):149–50.

Evans DP, Luffy SM, Parisi S, del Rio C. The development of a massive open online course during the 2014–15 Ebola virus disease epidemic. Ann Epidemiol. 2017;27(9):611–5.

Frank E, Tairyan K, Everton M, Chu J, Goolsby C, Hayes A, Hulton A. A test of the first course (emergency medicine) that is globally available for credit and for free. Healthc (Amst). 2016;4(4):317–20.

Fricton J, Anderson K, Clavel A, Fricton R, Hathaway K, Kang W, Jaeger B, Maixner W, Pesut D, Russell J, et al. Preventing chronic pain: a human systems approach - results from a massive open online course. Global Adv Health Med. 2015;4(5):23–32.

Geissler C. Capacity building in public health nutrition. Proc Nutr Soc. 2015;74(04):430–6.

Goldberg LR, Crocombe LA. Advances in medical education and practice: role of massive open online courses. Adv Med Educ Pract. 2017;8:603–9.

Gooding I, Klaas B, Yager JD, Kanchanaraksa S. Massive open online courses in public health. Front Public Health. 2013;1(59). https://doi.org/10.3389/fpubh.2013.00059.

Harvey LA, Glinsky JV, Lowe R, Lowe T. A massive open online course for teaching physiotherapy students and physiotherapists about spinal cord injuries. Spinal Cord. 2014;52(12):911–8.

Harvey LA, Glinsky JV, Muldoon S, Chhabra HS. Massive open online courses for educating physiotherapists about spinal cord injuries: a descriptive study. Spinal Cord Ser Cases. 2017;3:17005.

Heller RF. Learning by MOOC or by crook. Med J Aust. 2014;200(4):192–3.

Henningsohn L, Dastaviz N, Stathakarou N, McGrath C. KIUrologyX: urology as you like it-a massive open online course for medical students, professionals, patients, and laypeople alike. Eur Urol. 2017;72(3):321–2.

Hoedebecke K, Mahmoud M, Yakubu K, Kendir C, D'Addosio R, Maria B, Borhany T, Oladunni O, Kareli A, Gokdemir O, et al. Collaborative global health E-learning: a massive open online course experience of young family doctors. J Family Med Prim Care. 2018;7(5):884–7.

Hossain MS, Shofiqul Islam M, Glinsky JV, Lowe R, Lowe T, Harvey LA. A massive open online course (MOOC) can be used to teach physiotherapy students about spinal cord injuries: a randomised trial. J Physiother. 2015;61(1):21–7.

Hoy MB. MOOCs 101: an introduction to massive open online courses. Med Ref Serv Q. 2014;33(1):85–91.

Inácio P, Cavaco A. Massive Open Online Courses (MOOC): A Tool to Complement Pharmacy Education? Dosis. 2015;31(2):28–36.

Jacquet GA, Umoren RA, Hayward AS, Myers JG, Modi P, Dunlop SJ, Sarfaty S, Hauswald M, Tupesis JP. The Practitioner's guide to Global Health: an interactive, online, open-access curriculum preparing medical learners for global health experiences. Med Educ Online. 2018;23(1):1503914.

Jia M, Gong, Luo J, Zhao J, Zheng J, Li K. Who can benefit more from massive open online courses? A prospective cohort study. Nurse Educ Today. 2019;76:96–102.

Juanes JA, Ruisoto P. Computer Applications in Health Science Education. J Med Syst. 2015;39(9).

Kearney RC, Premaraj S, Smith BM, Olson GW, Williamson AE, Romanos G. Massive open online courses in dental education: two viewpoints viewpoint 1: massive open online courses offer transformative Technology for Dental Education and Viewpoint 2: massive open online courses are not ready for primetime. J Dent Educ. 2016;80(2):121–7.

King C, Robinson A, Vickers J. Online education: targeted MOOC captivates students. Nature. 2014;505(7481):26.

King C, Kelder J-A, Doherty K, Phillips R, McInerney F, Walls J, Robinson A, Vickers J. Designing for quality: the understanding dementia MOOC. Lead Issues elearning. 2015;2:1.

Kononowicz AA, Berman AH, Stathakarou N, McGrath C, Bartyński T, Nowakowski P, Malawski M, Zary N. Virtual Patients in a Behavioral Medicine Massive Open Online Course (MOOC): A Case-Based Analysis of Technical Capacity and User Navigation Pathways. JMIR Med Educ. 2015;1(2):e8.

Lan M, Hou X, Qi X, Mattheos N. Self-regulated learning strategies in world's first MOOC in implant dentistry. Eur J Dent Educ. 2019;23(3):278–85.

Liyanagunawardena TR, Aboshady OA. Massive open online courses: a resource for health education in developing countries. Glob Health Promot. 2018;25(3):74–6.

Lunde L, Moen A, Rosvold EO. Learning Clinical Assessment and Interdisciplinary Team Collaboration in Primary Care. MOOC for Healthcare Practitioners and Students. Stud Health Technol Inform. 2018;250:68.

Magana-Valladares L, Rosas-Magallanes C, Montoya-Rodriguez A, Calvillo-Jacobo G, Alpuche-Arande CM, Garcia-Saiso S. A MOOC as an immediate strategy to train health personnel in the cholera outbreak in Mexico. BMC Med Educ. 2018;18(1):111.

Magana-Valladares L, Gonzalez-Robledo MC, Rosas-Magallanes C, Mejia-Arias MA, Arreola-Ornelas H, Knaul FM. Training primary health professionals in breast Cancer prevention: evidence and experience from Mexico. J Cancer Educ. 2018;33(1):160–6.

Masters K. A Brief Guide To Understanding MOOCs. Internet J Med Educ. 2011;1(2):2.

Maxwell WD, Fabel PH, Diaz V, Walkow JC, Kwiek NC, Kanchanaraksa S, Wamsley M, Chen A, Bookstaver PB. Massive open online courses in U.S. healthcare education: practical considerations and lessons learned from implementation. Curr Pharm Teach Learn. 2018;10(6):736–43.

McCartney PR. Exploring Massive Open Online Courses for Nurses. MCN Am J Matern Child Nurs. 2015;40(4):265.

Medina-Presentado JC, Margolis A, Teixeira L, Lorier L, Gales AC, Perez-Sartori G, Oliveira MS, Seija V, Paciel D, Vignoli R, et al. Online continuing interprofessional education on hospital-acquired infections for Latin America. Braz J Infect Dis. 2017;21(2):140–7.

Milligan C, Littlejohn A, Ukadike O. Professional learning in massive open online courses. In: Proceedings of the 9th international conference on networked learning: 2014; 2014. p. 368–3710.

Pérez-Moreno MA, Peñalva-Moreno G, Praena J, González-González A, Martínez-Cañavate MT, Rodríguez-Baño J, Cisneros JM, Pérez-Moreno MA, Peñalva-Moreno G, González-González A, et al. Evaluation of the impact of a nationwide massive online open course on the appropriate use of antimicrobials. J Antimicrob Chemother. 2018;73(8):2231–5.

Power A, Coulson K. What are OERs and MOOCs and what have they got to do with prep? Br J Midwifery. 2015;23(4):282–4.

Roberts DH, Schwartzstein RM, Weinberger SE. Career development for the clinician-educator. Optimizing impact and maximizing success. Ann Am Thorac Soc. 2014;11(2):254–9.

Robinson R. Delivering a medical school elective with massive open online course (MOOC) technology. PeerJ. 2016;2016(8):e2343 (no pagination).

Rowe M. Developing graduate attributes in an open online course. Br J Educ Technol. 2016;47(5):873–82.

Sitzman KL, Jensen A, Chan S. Creating a Global Community of Learners in Nursing and Beyond: Caring Science, Mindful Practice MOOC. Nurs Educ Perspect. 2016;37(5):269–74.

Skiba D. MOOCs and the future of nursing. Nurs Educ Perspect. 2013;34(3):202–4.

Sneddon J, Barlow G, Bradley S, Brink A, Chandy SJ, Nathwani D. Development and impact of a massive open online course (MOOC) for antimicrobial stewardship. J Antimicrob Chemother. 2018;73(4):1091–7.

Stokes CW, Towers AC, Jinks PV, Symington A. Discover dentistry: encouraging wider participation in dentistry using a massive open online course (MOOC). Br Dent J. 2015;219(2):81–5.

Subhi Y, Andresen K, Bojsen SR, Nilsson PM, Konge L. Massive open online courses are relevant for postgraduate medical training. Dan Med J. 2014;61(10):A4923.

Swinnerton BJ, Morris NP, Hotchkiss S, Pickering JD. The integration of an anatomy massive open online course (MOOC) into a medical anatomy curriculum. Anat Sci Educ. 2017;10(1):53–67.

Szpunar KK, Moulton ST, Schacter DL. Mind wandering and education: from the classroom to online learning. Front Psychol. 2013;4:495.

Takooshian H, Gielen UP, Plous S, Rich GJ, Velayo RS. Internationalizing undergraduate psychology education: trends, techniques, and technologies. Am Psychol. 2016;71(2):136–47.

Online Course Brings Dementia Care Home. 2016. [http://nursing.jhu.edu/news-events/news/archives/news/dementia-mooc2].

Wan HT, Hsu KY. An innovative approach for pharmacists' continue education: massive open online courses, a lesson learnt. Indian J Pharm Educ Res. 2016;50(1):103–8.

Zhao F, Fu Y, Zhang QJ, Zhou Y, Ge PF, Huang HX, He Y. The comparison of teaching efficiency between massive open online courses and traditional courses in medicine education: a systematic review and meta-analysis. Ann Transl Med. 2018;6(23):458.

Herrington J. Authentic e-learning in higher education: Design principles for authentic learning environments and tasks. In: E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education: 2006. Waynesville: Association for the Advancement of Computing in Education (AACE); 2006. p. 3164–73.

Maloney S, Cook DA, Golub R, Foo J, Cleland J, Rivers G, Tolsgaard MG, Evans D, Abdalla ME, Walsh K. AMEE guide no. 123 - how to read studies of educational costs. Med Teach. 2019;41(5):497–504.

Walsh K, Levin H, Jaye P, Gazzard J. Cost analyses approaches in medical education: there are no simple solutions. Med Educ. 2013;47(10):962–8.

Liyanagunawardena TR, Adams AA, Williams SA. MOOCs: a systematic study of the published literature 2008-2012. Int Rev Res Open Distributed Learn. 2013;14(3):202.

Hollands FM, Tirthali D. Resource requirements and costs of developing and delivering MOOCs. Int Rev Res Open Distributed Learn. 2014;15(5).

Maloney S, Haines T. Issues of cost-benefit and cost-effectiveness for simulation in health professions education. Adv Simul. 2016;1(1):13.

Maloney S, Reeves S, Rivers G, Ilic D, Foo J, Walsh K. The Prato statement on cost and value in professional and interprofessional education. J Interprof Care. 2017;31(1):1–4.

Maloney S, Haas R, Keating JL, Molloy E, Jolly B, Sims J, Morgan P, Haines T. Breakeven, cost benefit, cost effectiveness, and willingness to pay for web-based versus face-to-face education delivery for health professionals. J Med Internet Res. 2012;14(2):e47.

Maloney S, Nicklen P, Rivers G, Foo J, Ooi YY, Reeves S, Walsh K, Ilic D. A cost-effectiveness analysis of blended versus face-to-face delivery of evidence-based medicine to medical students. J Med Internet Res. 2015;17(7):e182.

Nicklen P, Rivers G, Ooi C, Ilic D, Reeves S, Walsh K, Maloney S. An approach for calculating student-centered value in education - a link between quality, efficiency, and the learning experience in the health professions. PLoS One. 2016;11(9):e0162941.

Reich J. Rebooting MOOC research. Science. 2015;347:34–5.

Acknowledgements

The authors wish to thank Cassandra Neylon and Jordan Rutherford for their assistance with the data extraction and quality appraisal of studies included within this review.

Funding

Not applicable.

Author information

Contributions

MR conceptualised the study and drafted the initial manuscript. SM, CO and SP prepared the results, conducted the analysis, and interpreted the data. All authors contributed to writing the final article. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval and consent were not required for this systematic review of the literature.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional files

Additional file 1:

Table S1. Quality appraisal of included studies – reviews (AMSTAR checklist). (DOCX 22 kb)

Additional file 2:

Table S2. Quality appraisal of included studies – descriptive / case-series studies (The Joanna Briggs Institute). (DOCX 30 kb)

Additional file 3:

Table S3. Quality appraisal of included studies – randomised controlled trial (Cochrane Risk of Bias Tool). (DOCX 35 kb)

Additional file 4:

Table S4. Quality appraisal of included studies – narrative, expert opinion, text (The Joanna Briggs Institute). (DOCX 30 kb)

Additional file 5:

Table S5. Quality appraisal of included studies – cohort/case-control studies (The Joanna Briggs Institute). (DOCX 21 kb)

Additional file 6:

Table S6. Quality appraisal of included studies – qualitative studies (The Joanna Briggs Institute). (DOCX 21 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Rowe, M., Osadnik, C.R., Pritchard, S. et al. These may not be the courses you are seeking: a systematic review of open online courses in health professions education. BMC Med Educ 19, 356 (2019). https://doi.org/10.1186/s12909-019-1774-9
