Abstract

Background

Peer evaluation can provide valuable feedback to medical students and increase student confidence and quality of work. The objective of this systematic review was to examine the utilization, effectiveness, and quality of peer feedback during collaborative learning in medical education.

Methods

The PRISMA statement for reporting in systematic reviews and meta-analyses was used to guide the process of conducting the systematic review. Level of evidence was graded with the Colthart scale, and types of outcomes were classified with the Kirkpatrick model. Two main authors reviewed articles, with a third deciding on conflicting results.

Results

The final review included 31 studies. Problem-based learning and team-based learning were the most common collaborative learning settings. Eleven studies reported that students received instruction on how to provide appropriate peer feedback. No studies provided descriptions on whether or not the quality of feedback was evaluated by faculty. Seventeen studies evaluated the effect of peer feedback on professionalism; 12 of those studies evaluated its effectiveness for assessing professionalism and eight evaluated the use of peer feedback for professional behavior development. Ten studies examined the effect of peer feedback on student learning. Six studies examined the role of peer feedback on team dynamics.

Conclusions

This systematic review indicates that peer feedback in a collaborative learning environment may be a reliable assessment for professionalism and may aid in the development of professional behavior. The review suggests implications for further research on the impact of peer feedback, including the effectiveness of providing instruction on how to provide appropriate peer feedback.

Background

Medical curricula are increasingly integrating collaborative learning [1, 2]. When learning in groups and teams, in which individual students work together to achieve a common goal, such as in team-based learning (TBL) and problem-based learning (PBL), there is an expectation for students to be accountable to both their instructor and peers [3]. One way in which students are held accountable is through the utilization of peer feedback, also known as peer assessment or peer evaluation, which allows students to recognize areas of their strength and weakness as team members. Feedback is essential for learning; it can help students recognize their potential areas of deficiency in their knowledge, skills, or attitude. It is hoped that students use feedback to improve and become effective teammates [4].

There are many advantages to using peer feedback in the medical school curriculum. One advantage is that it can provide a valuable and unique perspective regarding the overall performance of students [5]. Compared to rare encounters with faculty, peers often work together for extended periods of time. Peer assessment is beneficial in assessing areas of proficiency based on multiple observations, rather than one encounter [6]. For this reason, compared to faculty, peers may have the ability to provide more accurate assessments of competencies such as teamwork, communication, and professionalism [7]. Additionally, according to Searby and Ewers, peer assessment may motivate students to produce high-quality work [8]. Overall, peer evaluation may help students improve metacognitive and reflection skills and develop a thorough understanding of coursework by identifying knowledge gaps and reinforcing positive behavior [6, 8, 9, 10, 11].

While the literature suggests that peer evaluation can lead to positive outcomes, there are also limitations that must be addressed prior to implementation. Improper or poorly timed peer feedback may impair student relationships and disrupt team function [11]. Poor implementation of peer assessment may create an undesirable class environment that includes distrust, increased competition, or a tendency for students to exert less effort than they would working alone [3, 12]. Additionally, many students may feel uncomfortable providing peer feedback because of lack of anonymity, potential bias in scores due to interpersonal relationships, and lack of expertise in making assessments [6, 13, 14, 15]. Moreover, some students believe peers may be overly nice and not provide honest feedback [13].

Overall, it seems that peer evaluation in a collaborative learning environment has the ability to provide valuable feedback to medical students. It may provide skills necessary to effectively work on inter- and multidisciplinary teams as physicians. It may also increase student confidence and quality of work. The goal of this systematic review was to examine the utilization, effectiveness, and quality of peer feedback in a collaborative learning environment, specifically in undergraduate medical education. The objectives were to determine the role peer feedback plays in student learning and professional development, ascertain the impact peer feedback might have on team dynamics and success, and learn if and how the quality of peer feedback is assessed.

Methods

Data sources

A comprehensive literature search was conducted by one of the authors (M.M.). Databases searched included: PubMed, PsycINFO, Embase, Cochrane Library, CINAHL, ERIC, Scopus, and Web of Science. Search terms included index terms (MeSH terms or subject headings) and free text words (see Appendix for complete search strategies for PubMed): ((education, medical, undergraduate [mh] OR students, medical [mh] OR schools, medical [mh] OR “undergraduate medical education” OR “medical student” OR “medical students” OR “medical schools” OR “medical school”) AND (TBL OR “team-based learning” OR “team based learning” OR “collaborative learning” OR “problem-based learning” OR “problem based learning”) AND ((“peer evaluation” OR “peer feedback” OR “peer assessment”) OR ((peer OR peers OR team OR teams) AND (measur* OR assess* OR evaluat*)))). The searches were limited to peer-reviewed English-language journal articles published between 1997 and 2017. Overall, the authors felt a 20-year limit was appropriate to ensure the data being evaluated were applicable in undergraduate medical education today.
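The nesting of the Boolean search string is easier to follow when it is assembled from its three concept groups. The following is a minimal sketch, not the authors' tooling: the helper `or_group` and the variable names are hypothetical, straight quotation marks stand in for the typographic ones, and only the terms listed above are used.

```python
def or_group(terms):
    """Join terms with OR and wrap the whole group in parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Concept 1: population (undergraduate medical education)
population = or_group([
    "education, medical, undergraduate [mh]",
    "students, medical [mh]",
    "schools, medical [mh]",
    '"undergraduate medical education"',
    '"medical student"',
    '"medical students"',
    '"medical schools"',
    '"medical school"',
])

# Concept 2: collaborative learning setting
setting = or_group([
    "TBL",
    '"team-based learning"',
    '"team based learning"',
    '"collaborative learning"',
    '"problem-based learning"',
    '"problem based learning"',
])

# Concept 3: peer feedback, either as an exact phrase or as
# (peer/team word) AND (measurement word stem)
phrases = or_group(['"peer evaluation"', '"peer feedback"', '"peer assessment"'])
actors = or_group(["peer", "peers", "team", "teams"])
actions = or_group(["measur*", "assess*", "evaluat*"])
peer_feedback = "(" + phrases + " OR (" + actors + " AND " + actions + "))"

# The three concepts are combined with AND inside one outer pair of parentheses
query = "(" + " AND ".join([population, setting, peer_feedback]) + ")"
print(query)
```

Building the string this way makes it easy to confirm that every opening parenthesis has a matching close before the query is pasted into a database interface.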

Study selection

Duplicates among the retrieved articles were removed. The remaining articles were selected for full-text review if they were original research articles that assessed the use of peer feedback by medical students in a collaborative learning environment during medical school. Editorials, comments, general opinion pieces, letters, survey research studies, and reviews were excluded. The reference lists of all articles selected for full-text screening were hand searched for additional relevant articles not discovered in the initial database searches.

Paired reviewers (S.L. and M.E.) screened titles and abstracts of retrieved articles independently. Citations with abstracts that seemed potentially relevant to the selection criteria were included as candidates for full-text screening. The same two reviewers screened these full-text articles independently and in duplicate based on the selection criteria. When a discrepancy arose in article selection between the two reviewers at the full-text article screening stage, the disagreement went to arbitration by a third reviewer (M.M.) who served as a tiebreaker.
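The arbitration rule described above can be expressed as a small decision function. This is an illustrative sketch only; the function name `resolve_inclusion` and its parameters are hypothetical and do not come from the review.

```python
def resolve_inclusion(reviewer_a, reviewer_b, tiebreaker=None):
    """Return the final include/exclude decision for one full-text article.

    reviewer_a, reviewer_b: independent decisions (True = include).
    tiebreaker: decision of the third reviewer, consulted only on disagreement.
    """
    if reviewer_a == reviewer_b:
        # Both reviewers agree, so no arbitration is needed
        return reviewer_a
    if tiebreaker is None:
        raise ValueError("Reviewers disagree; a third reviewer's decision is required")
    return tiebreaker


# Agreement: the shared decision stands
print(resolve_inclusion(True, True))
# Disagreement: the third reviewer decides
print(resolve_inclusion(True, False, tiebreaker=False))
```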

Data extraction and quality assessment

The systematic review was guided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [16] and the Best Evidence Medical Education (BEME) Guide No. 10, “The Effectiveness of Self-Assessment on the Identification of Learner Needs, Learner Activity, and Impact on Clinical Practice” [16, 17]. A standard extraction framework was developed and piloted with a small sample of included studies to abstract and code data from the selected studies. The two authors (S.L. and M.E.) read each article independently and used the extraction framework to extract data. Data were extracted on the country where the study took place, type of course, type of participants, sample size, type of collaborative learning environment (team-based learning, problem-based learning, etc.), and impact and outcomes of peer feedback being evaluated. This information can be found in Table 1. In addition, the types of studies, data sources for peer evaluation, peer evaluation grading criteria, and assessment methods for the quality of peer feedback were also extracted. A qualitative systematic review was conducted due to the heterogeneity of the selected studies in terms of research designs, types of peer feedback, types of student participants, settings, and outcome measures of the impact of peer feedback.

We used an adapted Kirkpatrick evaluation model [45] to classify the effectiveness/impact of peer feedback in this review. There are six levels ranging from level 1 (Reaction - participants’ views on the learning experience) to level 4B (Results – improvement in student learning as a direct result of their educational intervention) [45].

We used the Gradings of Strength of Findings of the Paper by Colthart et al. to score the strength of study findings on a scale of 1 to 5 [17]. Articles scoring 4 or above were considered to be higher quality papers. Articles scoring 3 had conclusions that could probably be based on the results. Articles graded 2 were regarded as “results ambiguous, but there appears to be a trend,” and articles graded 1 as “no clear conclusions can be drawn (not significant).” Two authors (S.L. and M.E.) independently carried out data extraction and quality appraisal. Three authors (S.L., M.E., and M.M.) reviewed and discussed discrepancies and came to a consensus.
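The way the 1 to 5 strength-of-findings grades were interpreted can be summarized as a lookup. The sketch below mirrors only the thresholds stated in this section; the function name `describe_strength` is hypothetical and the labels paraphrase the text above rather than reproduce the full wording of the original scale.

```python
def describe_strength(grade):
    """Map a strength-of-findings grade (1 to 5) to its interpretation in this review."""
    if grade not in (1, 2, 3, 4, 5):
        raise ValueError("grade must be an integer from 1 to 5")
    if grade >= 4:
        return "higher quality paper"
    if grade == 3:
        return "conclusions can probably be based on the results"
    if grade == 2:
        return "results ambiguous, but there appears to be a trend"
    return "no clear conclusions can be drawn (not significant)"


for g in range(1, 6):
    print(g, describe_strength(g))
```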

Results

Retrieved studies

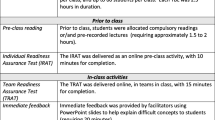

A total of 1301 articles were returned from the literature search. After removal of duplicates, 948 remained. A further 905 articles were excluded after title and/or abstract screening, leaving 43 for full-text review. Of these, 26 articles were included in this review, in addition to 5 further articles identified through hand searching of references (31 in total). Further details are shown in the PRISMA flowchart (Fig. 1).
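The study-flow counts can be checked with simple arithmetic. The sketch below uses only the numbers reported in this paragraph; the variable names are illustrative, and the two derived quantities (duplicates removed and articles excluded at full-text review) are computed here rather than reported by the review.

```python
records_identified = 1301      # returned by the database searches
after_duplicates = 948         # remaining once duplicates were removed
excluded_on_screening = 905    # excluded on title and/or abstract
included_from_search = 26      # included after full-text review
included_from_hand_search = 5  # found by hand searching reference lists

# Derived quantities (computed, not reported in the text)
duplicates_removed = records_identified - after_duplicates
full_text_reviewed = after_duplicates - excluded_on_screening
excluded_at_full_text = full_text_reviewed - included_from_search
total_included = included_from_search + included_from_hand_search

print("duplicates removed:", duplicates_removed)
print("full-text articles reviewed:", full_text_reviewed)
print("excluded at full-text review:", excluded_at_full_text)
print("total studies included:", total_included)
```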

Study characteristics

Of the included studies, the largest number were completed in the United States (n = 14), followed by the Netherlands (n = 4), Australia (n = 4), Canada (n = 3), and the United Kingdom (n = 3). Other countries included Bahrain, Brazil, Finland, and Lebanon.

The sample size for the studies ranged from 30 to 633 students. Most studies included first-year (n = 18) and second-year (n = 9) medical students. Peer feedback was evaluated through collaborative learning activities integrated into preclinical courses (n = 7) and clerkships (n = 2), although many studies did not clearly report where in the curriculum peer feedback was evaluated. PBL (n = 18) and TBL (n = 6) were the most common collaborative learning settings.

The research methodology of selected studies included 15 quantitative, 3 qualitative, and 13 mixed methods (defined as including both quantitative and qualitative data). Most studies utilized quantitative questionnaires to obtain data (n = 26). Other data sources included narrative comments (n = 14), focus groups (n = 5), open-discussions (n = 1) and individual interviews (n = 1). Many studies did not describe the grading criteria for the peer feedback (n = 17); of those that did, most were formative (ungraded) in nature (n = 8), while some courses included formative and summative (graded) peer feedback (n = 2) and some only included summative peer feedback (n = 4). Eleven studies reported that students received instruction on how to provide appropriate peer feedback, but no studies provided descriptions on whether or not the quality of feedback was evaluated by faculty.

A total of 17 studies evaluated the effect of peer feedback on professionalism in some manner. There were 12 studies that evaluated the effectiveness of peer feedback for the assessment of professionalism. Of those 12 studies, eight had positive results, two had mixed results, one had negative outcomes, and one was inconclusive. Eight studies evaluated the use of peer feedback for the development of professional behavior, in which seven had positive results and one had mixed results. Ten studies examined the effect of peer feedback on student learning, in which four had positive results, three had mixed results, one had negative outcomes, and three were inconclusive. In addition, there were six studies that examined the role of peer feedback on team dynamics. Of those studies, four had positive outcomes, whereas two had negative results. Table 1 contains more details about the selected articles, including sample size, participants, and setting.

Discussion

This systematic review examined the role of peer feedback in a collaborative learning environment in undergraduate medical education. It revealed a large number of variations in research design, approaches to peer feedback, and definitions of outcome measures. Due to the heterogeneity of these studies, it was difficult to assess the overall effectiveness and utility of peer feedback in collaborative learning for medical students. Despite these differences, the overall outcomes for most of the studies were positive.

Assessment of professionalism

The professional development of medical students is an essential aim of the medical school curriculum [20]. Peer feedback may provide reliable and valid assessment of professionalism. In the systematic review, several studies reported positive outcomes for the assessment of several aspects of professionalism. Chen and colleagues reported peers were able to accurately evaluate respect, communication and assertiveness of student leaders [1]. Dannefer and colleagues found that peer feedback was consistently specific and related to the professionalism behaviors identified by faculty as fundamental to practice. In addition, they found that peers often gave advice on how to improve performance, which is often considered a type of feedback that can result in positive changes in behavior [23, 46]. Emke and colleagues found that the use of peer evaluation in TBL may help identify students who may be at risk for professionalism concerns [25].

Although many studies had encouraging results with regard to the use of peer feedback for the assessment of professionalism, some studies did not show positive results. For example, Roberts and colleagues found that peer assessment of professional learning behavior was highly reliable for within-group comparisons, but poor for across-group comparisons, stating that peer assessment of professional learning behaviors may be unreliable for decision making outside a PBL group [37].

Development of professionalism

According to Emke and colleagues, professional behavior is a cornerstone of the physician-patient relationship, as well as the relationship between colleagues working together on a multidisciplinary team [25]. Many studies reported positive outcomes when assessing the development of professionalism. Nofziger and colleagues found that 65% of students reported important transformations in awareness, attitudes, and behaviors due to high-quality peer assessment [31]. Papinczak and colleagues found that peer assessment strengthened the sense of responsibility that group members had for each other, and several students were enthusiastic and committed to providing helpful and valid feedback to support the learning of their peers [33]. In addition, studies by Tayem and colleagues and Zgheib and colleagues reported improvements in communication skills, professionalism, and ability to work on a team [40, 44].

Student learning

Peer assessment may also be beneficial for student learning. Tayem and colleagues reported that a large percentage of students who participated in peer assessment in PBL felt that peer assessment helped increase their analytical skills as well as their ability to achieve their learning objectives and fulfill tasks related to the analysis of problems [40]. Zgheib and colleagues noted that students learned how to provide peer evaluations that were specific and descriptive, and expressed in terms that were relevant to the recipient’s needs, preparing them for their role in providing feedback as future physicians [44]. While peer assessment may be an important tool to improve student learning, some outcomes were mixed. For example, Bryan and colleagues stated that students were capable of commenting on professional values, but may lack the insight to make accurate evaluations. They recommended that peer assessment be used as a training tool to help students learn how to provide appropriate feedback to others [19].

Team dynamics

Students must learn how to effectively work on a team to become successful physicians [47]. Peer feedback may be valuable in the development of medical students in becoming effective team members. For example, Tayem and colleagues reported that a large proportion of participants agreed or strongly agreed that their respect towards the other group members and desire to share information with them had improved. The students also agreed that they had become more dependable as a result of peer assessment [40].

Grading criteria

In many cases, the grading criteria were not clearly described. Of the studies that described the grading criteria, the majority used peer feedback in a formative, or ungraded, manner. Although most studies did not describe the details of their grading criteria, the literature supports the use of formative and summative assessments for peer feedback evaluations. According to Cestone and colleagues, peer assessment in TBL can provide formative information to help individual students improve team performance over time. Formative peer feedback can also aid in the development of interpersonal and team skills that are very important for success in future endeavors [3]. According to Cottrell and colleagues, a single evaluation is not adequate; implementing peer assessment multiple times and across a variety of learning contexts gives students opportunities to make formative changes for improvement [20].

Instruction on how to provide high-quality peer feedback

Providing effective feedback for peers is a skill that should be developed early in medical and health professions education [1]. According to Burgess and colleagues, peer review is a common requirement among medical staff, but since formal training in providing quality feedback is not common in the medical school curriculum, physicians are often not well prepared for this task [48].

Out of 31 studies, 11 described the instruction that was provided to students on how to provide effective peer feedback. These studies stressed the importance of training students to provide feedback on strengths as well as areas needing improvement. Without any guidance or training on how to provide peer feedback, students may feel confused or not know how to assess peers properly. Garner and colleagues found that students were unclear about the purpose of peer assessment and felt the exercise was imposed upon them with little preparation or training, creating anxiety for individual students [26]. Nofziger and colleagues stated that students should receive training to provide specific, constructive feedback and that the institutional culture should emphasize safety around feedback, while committing to rewarding excellence and addressing concerning behaviors [31]. Better instruction on how to provide appropriate feedback may make the goals of peer feedback clearer as well as decrease student anxiety when performing assessments of their peers.

Evaluation of feedback quality

This systematic review also evaluated whether or not the quality of peer feedback was assessed by students or faculty. Most included studies did not address the evaluation of the peer feedback that students provided, including the potential importance of educators reviewing feedback quality. Faculty should be trained on how to evaluate the quality of feedback to make sure students are effectively assessing their peers, as well as to help remediate students who are not performing to the best of their abilities.

Limitations

Our systematic review was limited to published studies in English. As a result, publication bias and language bias may have been introduced to the review. Other reporting biases in the selected studies may also have biased the conclusions of the study reports. These biases could threaten the validity of any type of review, including this systematic review. The presence of negative and inconclusive studies and of small effects does not suggest publication bias; however, a full analysis for publication bias was not feasible, and thus publication bias cannot be ruled out. An advantage is that this review is one of the first to apply standard methods of evaluating both study outcomes and quality of the existing literature related to peer feedback during collaborative learning in undergraduate medical education. These standard methods proved challenging to apply because the majority of the studies were descriptive in nature, and descriptive methods inherently limit the conclusions that may be drawn from reported results.

With regard to strength of methods, an overwhelming majority of studies received lower ratings. This pattern was often due to outcomes not specified a priori, no power discussion, an unclear primary study objective, or conclusions discordant with the originally stated study aim. A few studies seemed to use validated surveys to measure student perceptions but then reported outcome measures without interpretation of results.

The strongest studies were conducted by Chen, Cottrell, Kamp, Parikh, and Roberts [1, 4, 20, 28, 37]. The strength of Cottrell 2006 was the report of internal consistency of the rubric and generalizability of the results [20]. Kamp 2014 had a sound pre-post design. However, they did not report a power calculation [28].

Finally, the most common study outcomes were evaluated at levels 1 and 2 according to the modified Kirkpatrick Evaluation Model [45]. These corresponded mostly to the feasibility of doing peer feedback in a variety of learning contexts. The second most common outcome was student perceptions of peer feedback. Overall, students had favorable perceptions. Concordance between self and others’ feedback was considered level 1. Lastly, professionalism was the most common level 3 outcome reported.

Conclusions

The objectives of this systematic review were to determine the role peer feedback plays in student learning and professional development, ascertain the impact peer feedback might have on team dynamics and success, and learn if and how the quality of peer feedback is assessed. Our review highlights the heterogeneity of the current literature regarding the use of peer feedback in undergraduate medical education. Overall, peer feedback in a collaborative learning environment may be a reliable assessment for professionalism and aid in the development of professional behavior. Many studies used peer feedback in a formative manner. Most studies do not address the importance of the quality of peer feedback provided by students. Due to the wide variations in the outcomes defined by these studies, it may be beneficial to have more standardized definitions for student learning, team dynamics, and professionalism. Despite the large variety of contexts and outcomes studied, there seems to be a consistent message: peer feedback in collaborative learning is feasible and may be useful. Steps to ensure success include training faculty and students on peer feedback methods and purpose. Because developing and implementing peer feedback systems takes significant energy and resources, further studies should report their methods more rigorously and seek to expand outcomes to include, but not be limited to, quality of peer feedback (including the effectiveness of providing faculty and student training), the effect on academic performance, institutional culture, and benefits to future employers and patients.

Availability of data and materials

Not applicable

Abbreviations

- M.E.: Mary Eng (author)
- M.M.: Misa Mi (author)
- PBL: Problem-based learning
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- S.L.: Sarah Lerchenfeldt (author)
- TBL: Team-based learning

References

Chen LP, Gregory JK, Camp CL, Juskewitch JE, Pawlina W, Lachman N. Learning to lead: self- and peer evaluation of team leaders in the human structure didactic block. Anat Sci Educ. 2009;2:210–7.

Souba WW, Day DV. Leadership values in academic medicine. Acad Med. 2006;81:20–6.

Cestone CM, Levine RE, Lane DR. Team-based learning: small group learning’s next big step. In: Michaelsen LK, Fink LD, editors. New directions for teaching and learning. San Francisco: Wiley Periodicals, Inc.; 2008. p. 69–78.

Parikh A, McReelis K, Hodges B. Student feedback in problem based learning: a survey of 103 final year students across five Ontario medical schools. Med Educ. 2001;35:632–6.

Sullivan ME, Hitchcock MA, Dunnington GL. Peer and self assessment during problem-based tutorials. Am J Surg. 1999;177(3):266–9.

Papinczak T, Young L, Groves M. Peer assessment in problem-based learning: a qualitative study. Adv Heal Sci Educ. 2007;12:169–86.

Epstein RM. Assessment in medical education. N Engl J Med. 2007;356:387–96.

Searby M, Ewers T. An evaluation of the use of peer assessment in higher education: a case study in the school of music, Kingston university. Assess Eval High Educ. 1997;22(4):371–83.

Ballantyne R, Hughes K, Mylonas A. Developing procedures for implementing peer assessment in large classes using an action research process. Assess Eval High Educ. 2002;27(5):427–41.

McDowell L. The impact of innovative assessment on student learning. Innov Educ Train Int. 1995;32(4):302–13.

Michaelsen LK, Schultheiss EE. Making feedback helpful. Organ Behav Teach Rev. 1989;13(1):109–13.

Levine RE. Team-Based Learning for Health Professions Education. In: Michaelsen LK, Parmelee DX, McMahon KK, Levine RE, editors. Team-Based Learning for Health Professions Education. 1st ed. Sterling: Stylus; 2008. p. 103–116.

Gukas ID, Miles S, Heylings DJ, Leinster SJ. Medical students’ perceptions of peer feedback on an anatomy student-selected study module. Med Teach. 2008;30(8):812–4.

Sluijsmans DMA, Moerkerke G, van Merrienboer JJG, Dochy FJR. Peer assessment in problem based learning. Stud Educ Eval. 2001;27:153–73.

Orsmond P, Merry S. The importance of marking criteria in the use of peer assessment. Assess Eval High Educ. 1996;21(3):240–50.

Moher D, Liberati A, Tetzlaff J, Altman D, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:7.

Colthart I, Bagnall G, Evans A, Allbutt H, Haig A, Illing J, et al. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME guide no. 10. Med Teach. 2008;30(2):124–45.

Abercrombie S, Parkes J, McCarty T. Motivational influences of using peer evaluation in problem-based learning in medical education. Interdiscip J Probl Learn. 2015;9(1):33–43.

Bryan RE, Krych AJ, Carmichael SW, Viggiano TR, Pawlina W. Assessing professionalism in early medical education: experience with peer evaluation and self-evaluation in the gross anatomy course. Ann Acad Med Singap. 2005;34:486–91.

Cottrell S, Diaz S, Cather A, Shumway J. Assessing medical student professionalism: an analysis of a peer assessment. Med Educ Online. 2006;11(8):1–8.

Cushing A, Abbott S, Lothian D, Hall A, Westwood OMR. Peer feedback as an aid to learning – what do we want? Feedback. When do we want it? Now! Med Teach. 2011;33:e105–12.

Dannefer EF, Henson LC, Bierer SB, Grady-Weliky TA, Meldrum S, Nofziger AC, et al. Peer assessment of professional competence. Med Educ. 2005;39:713–22.

Dannefer EF, Prayson RA. Supporting students in self-regulation: use of formative feedback and portfolios in a problem-based learning setting. Med Teach. 2013;35(8):655–60.

Emke AR, Cheng S, Dufault C, Cianciolo AT, Musick D, Richards B, et al. Developing professionalism via multisource feedback in team-based learning. Teach Learn Med. 2015;27(4):362–5.

Emke AR, Cheng S, Chen L, Tian D, Dufault C. A novel approach to assessing professionalism in preclinical medical students using multisource feedback through paired self- and peer evaluations. Teach Learn Med. 2017;0(0):1–9.

Garner J, McKendree J, O’Sullivan H, Taylor D. Undergraduate medical student attitudes to the peer assessment of professional behaviours in two medical schools. Educ Prim Care. 2010;21:32–7.

Kamp RJA, Dolmans DHJM, Van Berkel HJM, Schmidt HG. The effect of midterm peer feedback on student functioning in problem-based tutorials. Adv Heal Sci Educ. 2013;18:199–213.

Kamp RJA, van Berkel HJM, Popeijus HE, Leppink J, Schmidt HG, Dolmans DHJM. Midterm peer feedback in problem-based learning groups: the effect on individual contributions and achievement. Adv Heal Sci Educ. 2014;19:53–69.

Machado JLM, Machado VMP, Grec W, Bollela VR, Vieira JE. Self- and peer assessment may not be an accurate measure of PBL tutorial process. BMC Med Educ. 2008;8:55.

Nieder GL, Parmelee DX, Stolfi A, Hudes PD. Team-based learning in a medical gross anatomy and embryology course. Clin Anat. 2005;18:56–63.

Nofziger AC, Naumburg EH, Davis BJ, Mooney CJ, Epstein RM. Impact of peer assessment on the professional development of medical students: a qualitative study. Acad Med. 2010;85:140–7.

Parmelee DX, DeStephen D, Borges NJ. Medical students’ attitudes about team-based learning in a pre-clinical curriculum. Med Educ Online. 2009;14:1.

Papinczak T, Young L, Groves M, Haynes M. An analysis of peer, self, and tutor assessment in problem-based learning tutorials. Med Teach. 2007;29(5):e122–32.

Pocock TM, Sanders T, Bundy C. The impact of teamwork in peer assessment: a qualitative analysis of a group exercise at a UK medical school. Biosci Educ. 2010;15(1):1–12.

Reiter HI, Eva KW, Hatala RM, Norman GR. Self and peer assessment in tutorials: application of a relative-ranking model. Acad Med. 2002;77:1134–9.

Renko M, Uhari M, Soini H, Tensing M. Peer consultation as a method for promoting problem-based learning during a paediatrics course. Med Teach. 2002;24(4):408–11.

Roberts C, Jorm C, Gentilcore S, Crossley J. Peer assessment of professional behaviours in problem-based learning groups. Med Educ. 2017;51(4):390–400.

Rudy DW, Fejfar MC, Charles H, Griffith I, Wilson JF. Self- and peer assessment in a first-year communication and interviewing course. Eval Heal Prof. 2001;24(4):436–45.

Schönrock-Adema J, Heijne-Penninga M, Van Duijn MAJ, Geertsma J, Cohen-Schotanus J. Assessment of professional behaviour in undergraduate medical education: peer assessment enhances performance. Med Educ. 2007;41:836–42.

Tayem YI, James H, Al-Khaja KAJ, Razzak RLA, Potu BK, Sequeira RP. Medical students’ perceptions of peer assessment in a problem-based learning curriculum. Sultan Qaboos Univ Med J. 2015;15(3):e376–81.

van Mook WNKA, Muijtjens AMM, Gorter SL, Zwaveling JH, Schuwirth LW, van der Vleuten CPM. Web-assisted assessment of professional behaviour in problem-based learning: more feedback, yet no qualitative improvement? Adv Heal Sci Educ. 2012;17:81–93.

Vasan NS, DeFouw DO, Compton S. A survey of student perceptions of team-based learning in anatomy curriculum: favorable views unrelated to grades. Anat Sci Educ. 2009;2:150–5.

White JS, Sharma N. Who writes what? Using written comments in team-based assessment to better understand medical student performance: a mixed-methods study. BMC Med Educ. 2012;12:123.

Zgheib NK, Dimassi Z, Bou Akl I, Badr KF, Sabra R. The long-term impact of team-based learning on medical students’ team performance scores and on their peer evaluation scores. Med Teach. 2016;38(10):1017–24.

Steinert Y, Mann K, Centeno A, Dolmans D, Spencer J, Gelula M, et al. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME guide no. 8. Med Teach. 2006;28(6):497–526.

Clark I. Formative assessment: assessment is for self-regulated learning. Educ Psychol Rev. 2012;24(2):205–49.

World Health Organization. To err is human: Being an effective team player; 2012. p. 1–5. Available from: https://www.who.int/patientsafety/education/curriculum/course4_handout.pdf

Burgess AW, Roberts C, Black KI, Mellis C. Senior medical student perceived ability and experience in giving peer feedback in formative long case examinations. BMC Med Educ. 2013;13:79.

Acknowledgements

Not applicable

Funding

Not applicable

Author information

Contributions

SL and ME independently screened titles and abstracts of retrieved articles, and screened the selected full-text articles based on the selection criteria. When a discrepancy arose in article selection between the two reviewers at the full-text article screening stage, the disagreement went to arbitration by the tiebreaker, MM. SL and ME independently carried out data extraction and quality appraisal. SL, ME, and MM reviewed and discussed discrepancies and came to a consensus. All authors were major contributors in writing the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

SL: The author declares that she has no competing interests.

ME: The author declares that he has no competing interests.

MM: The author declares that she has no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Search Strategies for PubMed Search

(education, medical, undergraduate [mh] OR students, medical [mh] OR schools, medical [mh] OR “undergraduate medical education” OR “medical students” OR “medical student” OR “medical schools” OR “medical school”) AND ((cooperative behavior[mh] AND educational measurement [mh] AND group processes [mh] AND learning [mh]) OR (“cooperative behavior” AND “educational measurement” AND “group processes” AND learning) OR “peer evaluation” OR “peer feedback” OR “peer assessment” OR ((peer OR peers OR team OR teams) AND (measur* OR assess* OR evaluat*))) AND (TBL OR “team-based learning” OR “team based learning” OR “collaborative learning” OR “problem-based learning” OR “problem based learning”)
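For readers who want to rerun or adapt the search, the strategy above can be read as three concept blocks joined with AND: population, peer feedback, and collaborative learning setting. The following is a minimal sketch of that structure, not the procedure the authors used. The helper names (`any_of`, `all_of`, `build_query`, `esearch_url`) are hypothetical, straight quotes replace the typographic quotes of the published string, and the use of the NCBI E-utilities ESearch endpoint is an assumption made only for illustration.

```python
from urllib.parse import urlencode

# Assumed endpoint for illustration: NCBI E-utilities ESearch.
ESEARCH_BASE = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def any_of(terms):
    """Join terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def all_of(terms):
    """Join terms with AND and wrap the group in parentheses."""
    return "(" + " AND ".join(terms) + ")"


def build_query():
    """Assemble the three concept blocks of the appendix search string."""
    # Block 1: population (undergraduate medical education).
    population = any_of([
        "education, medical, undergraduate [mh]",
        "students, medical [mh]",
        "schools, medical [mh]",
        '"undergraduate medical education"',
        '"medical students"',
        '"medical student"',
        '"medical schools"',
        '"medical school"',
    ])
    # Block 2: peer feedback, as MeSH terms, free text, or peer/team + verb.
    peer_feedback = any_of([
        all_of([
            "cooperative behavior[mh]",
            "educational measurement [mh]",
            "group processes [mh]",
            "learning [mh]",
        ]),
        all_of([
            '"cooperative behavior"',
            '"educational measurement"',
            '"group processes"',
            "learning",
        ]),
        '"peer evaluation"',
        '"peer feedback"',
        '"peer assessment"',
        all_of([
            any_of(["peer", "peers", "team", "teams"]),
            any_of(["measur*", "assess*", "evaluat*"]),
        ]),
    ])
    # Block 3: collaborative learning setting.
    setting = any_of([
        "TBL",
        '"team-based learning"',
        '"team based learning"',
        '"collaborative learning"',
        '"problem-based learning"',
        '"problem based learning"',
    ])
    return " AND ".join([population, peer_feedback, setting])


def esearch_url(query, retmax=20):
    """Build (but do not send) an ESearch request URL for PubMed."""
    params = {"db": "pubmed", "term": query, "retmode": "json", "retmax": retmax}
    return ESEARCH_BASE + "?" + urlencode(params)


if __name__ == "__main__":
    print(build_query())
    print(esearch_url(build_query()))
```

Keeping each concept block in its own list makes it easier to add a synonym or drop a setting without unbalancing the parentheses. Result counts from a rerun will differ from those reported in the review, since PubMed indexing changes over time.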

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Lerchenfeldt, S., Mi, M. & Eng, M. The utilization of peer feedback during collaborative learning in undergraduate medical education: a systematic review. BMC Med Educ 19, 321 (2019). https://doi.org/10.1186/s12909-019-1755-z

DOI: https://doi.org/10.1186/s12909-019-1755-z