Abstract

Background

Community behavioral health clinicians, supervisors, and administrators play an essential role in implementing new psychosocial evidence-based practices (EBP) for patients receiving psychiatric care; however, little is known about these stakeholders’ values and preferences for implementation strategies that support EBP use, nor how best to elicit, quantify, or segment their preferences. This study sought to quantify these stakeholders’ preferences for implementation strategies and to identify segments of stakeholders with distinct preferences using a rigorous choice experiment method called best-worst scaling.

Methods

A total of 240 clinicians, 74 clinical supervisors, and 29 administrators employed within clinics delivering publicly-funded behavioral health services in a large metropolitan behavioral health system participated in a best-worst scaling choice experiment. Participants evaluated 14 implementation strategies developed through extensive elicitation and pilot work within the target system. Preference weights were generated for each strategy using hierarchical Bayesian estimation. Latent class analysis identified segments of stakeholders with unique preference profiles.

Results

On average, stakeholders preferred two strategies significantly more than all others—compensation for use of EBP per session and compensation for preparation time to use the EBP (P < .05); two strategies were preferred significantly less than all others—performance feedback via email and performance feedback via leaderboard (P < .05). However, latent class analysis identified four distinct segments of stakeholders with unique preferences: Segment 1 (n = 121, 35%) strongly preferred financial incentives over all other approaches and included more administrators; Segment 2 (n = 80, 23%) preferred technology-based strategies and was younger, on average; Segment 3 (n = 52, 15%) preferred an improved waiting room to enhance client readiness, strongly disliked any type of clinical consultation, and had the lowest participation in local EBP training initiatives; Segment 4 (n = 90, 26%) strongly preferred clinical consultation strategies and included more clinicians in substance use clinics.

Conclusions

The presence of four heterogeneous subpopulations within this large group of clinicians, supervisors, and administrators suggests optimal implementation may be achieved through targeted strategies derived via elicitation of stakeholder preferences. Best-worst scaling is a feasible and rigorous method for eliciting stakeholders’ implementation preferences and identifying subpopulations with unique preferences in behavioral health settings.

Background

The need to improve the quality and outcomes of health and behavioral health services has led to increased emphasis on the implementation of evidence-based practices (EBPs) in community settings [1,2,3,4]. Effective implementation of EBPs requires the cooperation of clinicians, supervisors, and administrators who deliver clinical care. However, little is known about these stakeholders’ values and preferences for specific types of implementation strategies, defined as the active approaches used to improve adoption, implementation, and sustainment of EBPs [5]. It is also not clear how best to elicit, quantify, and segment stakeholders’ implementation preferences. Community stakeholder preferences should be considered when selecting implementation strategies for several reasons. First, the process of eliciting preferences is, in and of itself, a way to increase stakeholder engagement and buy-in, a key component of the implementation process [6,7,8]. Second, there is evidence that tailored implementation strategies (i.e., those that address localized barriers) are more effective than non-tailored strategies [9, 10] and stakeholder preferences may provide insights regarding how to tailor to local contexts [9]. Third, because stakeholder preferences may not align with evidence on what works, understanding preferences is an essential first step in determining where implementation efforts should start in terms of targeted mechanisms of change.

To date, efforts to elicit stakeholder implementation preferences using both qualitative and quantitative approaches have had several limitations. Qualitative interviews are useful for generating deep understanding among a small group; however, they are resource intensive and may have limited generalizability. Recent advances in quantitative measurement include pragmatic Likert-type scales that allow stakeholders to rate the acceptability, feasibility, and appropriateness of implementation strategies [11]. These approaches are relatively low-cost even for large samples; however, because they do not require respondents to consider trade-offs, they typically suffer from strong ceiling effects with many strategies ending up highly-ranked, thus undermining their utility.

Stated preference choice experiments are a promising set of methods for eliciting stakeholder preferences that may overcome these limitations by engaging stakeholders in an intuitive yet powerful set of choice tasks that closely mimic real-life decisions and that can be easily implemented in large samples [12]. By requiring respondents to consider trade-offs across a set of choices, choice experiments generate highly-accurate estimates of implicit preferences for a targeted set of objects (e.g., implementation strategies) in a time-efficient, cost-effective, and generalizable manner [12,13,14,15]. These methods are especially valuable when the set of objects is carefully derived through elicitation work within the target population and when information on actual behavior or decisions is unavailable (or unobtainable), as is typically the case in implementation [16].

Best-worst scaling (BWS) [16, 17] is a type of choice experiment uniquely suited to the task of eliciting implementation preferences, for two reasons. First, BWS is flexible enough to identify either (a) the most preferred strategy(s) from a list of irreducible and dissimilar strategies, or (b) the most preferred level (e.g., dollar amount) of an attribute (e.g., compensation) that multiple strategies have in common [17]. This flexibility is important because there are 73 discrete implementation strategies, which can be combined in many permutations [18]. Second, respondents’ BWS choices can be segmented using model-based clustering procedures such as latent class analysis to identify subpopulations that share similar preferences [19, 20]. Segmentation allows planners to target implementation strategies to subpopulations based on their preferences and thereby potentially optimize their impact.

The goals of this study were to apply BWS to (1) characterize and quantify the preferences of clinicians, supervisors, and administrators employed within clinics that deliver publicly-funded behavioral health services for a set of 14 implementation strategies, (2) empirically identify segments of stakeholders that exhibit distinct preferences, and (3) examine differences across segments in professional characteristics (e.g., age, education, primary role in organization).

Methods

Setting

Philadelphia, a city of over 1.5 million residents, is the poorest of the United States’ 10 largest cities (26% of residents live below the poverty level) [21, 22]. The city’s population is 41% African-American, 35% Non-Hispanic White, 15% Hispanic, 8% Asian, and 2% other race [22, 23]. Public behavioral health services (i.e., mental health and substance use treatment) in Philadelphia are financially supported by Medicaid and managed by Community Behavioral Health (CBH), a non-profit managed care organization (i.e., “carve-out”) established by the city that functions as a component of the Department of Behavioral Health and Intellectual disAbility Services (DBHIDS). In 2018, DBHIDS and CBH included 175 in-network provider organizations serving 118,011 unique members [24].

Since 2007, DBHIDS has supported EBP delivery in Philadelphia through a series of “EBP initiatives” that include training, expert consultation, and implementation supports (e.g., booster trainings, implementation meetings) for participating clinicians [25]. These initiatives have supported implementation of several cognitive behavioral therapy models including cognitive therapy, prolonged exposure, trauma-focused cognitive-behavioral therapy, dialectical behavior therapy, and parent-child interaction therapy for a range of psychiatric disorders. In 2013, DBHIDS created a centralized infrastructure called the Evidence-based Practice and Innovation Center (EPIC) to oversee EBP implementation efforts. EPIC supports EBP implementation by coordinating and consulting on EBP efforts across the clinics within the CBH network (the managed care organization), contracting with treatment experts to deliver EBP training, contracting with treatment providers to deliver EBP, providing EBP consultation and implementation support, hosting events to publicize EBP delivery, maintaining web-based resources (e.g., webinars), designating EBP programs within provider agencies, and providing financial incentives (e.g., enhanced rates) for delivery of EBPs.

Participants

The target population for this study was clinicians, supervisors, and administrators employed within clinics that deliver publicly-funded behavioral health services in the City of Philadelphia. The sample did not include members of EPIC (i.e., it did not include treatment experts or consultants). Because DBHIDS does not maintain a roster of email addresses to directly contact active clinicians, we used a two-pronged recruitment and sampling approach. We sent invitation emails to leaders of behavioral health organizations (n = 210), clinicians (n = 527), and other community stakeholders (e.g., directors of a clinician training organization; n = 6) in Philadelphia. We also e-mailed the invitation to four local electronic mailing lists known to reach large swaths of the CBH network (e.g., managed care organization listserv) and asked organization and network leaders to forward the email. From these contacts, the survey link was opened 654 times and 343 respondents completed the BWS choice experiment.

Study design and procedure

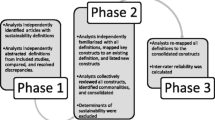

The BWS choice experiment was designed to quantify stakeholders’ preferences for 14 implementation strategies developed through iterative elicitation, pilot, and pre-testing work completed with members of each stakeholder group in the target population [17, 26]. Elicitation of strategies was completed via a system-wide innovation tournament, described elsewhere [27], through which clinicians submitted ideas for strategies to support EBP implementation in Philadelphia. Following the tournament, submitted ideas (N = 65) were analyzed and refined by a team of implementation scientists, behavioral scientists, and clinicians, in order to develop a set of distinct, clearly operationalized implementation strategies with ecological validity for the target system. The analysis process involved combining similar strategies, crafting definitions of each strategy, and ensuring that all strategies were adequately captured by the final set. This process resulted in a set of 14 implementation strategies (see Table 1: List of Implementation Strategies Included in the BWS Experiment), which were subsequently evaluated in pre-testing interviews with clinicians, supervisors, and administrators (n = 9) within the system to ensure that the strategies, as described, spanned the full range of approaches viewed as relevant by stakeholders and were clearly described. The 14 strategies fell into six categories: (1) financial incentives, (2) clinical consultation, (3) clinical support tools, (4) clinician social support and networking, (5) clinician performance feedback/social comparison, and (6) client supports [27]. 
Notably, the strategies developed through this process addressed 8 out of 9 categories of implementation strategies identified in the Expert Recommendations for Implementing Change (ERIC) project [18], including: use evaluative and iterative strategies, provide interactive assistance, develop stakeholder interrelationships, train and educate stakeholders, support clinicians, engage consumers, utilize financial incentives, and change infrastructure. Supplemental Table 1A in Additional File 2 shows how the strategies from the present study aligned with the discrete implementation strategies identified by the ERIC project.

Because each of the 14 strategies represented a qualitatively unique strategy, we used object case BWS (as opposed to profile case or multi-profile case BWS) [28]. The BWS experimental design was generated using the Sawtooth Discover algorithm which produces randomized choice sets with optimal frequency balance, orthogonality, positional balance, and connectivity for a given sample size [29,30,31,32,33]. Within the design, each participant was shown 11 sets of four randomly selected and randomly ordered strategies and, within each set, asked to choose which strategy was “Most useful” (i.e., best) for supporting clinicians’ implementation of psychosocial EBPs and which strategy was “Least useful” (i.e., worst). The Discover algorithm optimizes 1-way, 2-way, and positional balance within the randomization sequence such that (a) each strategy is presented an equal number of times, (b) each pair of strategies appears in a set an equal number of times, and (c) each strategy is shown in each position an equal number of times. For this study, each strategy was included in at least three sets. Participants were instructed to imagine that their organization had decided to adopt a new psychosocial EBP that exhibited excellent outcomes for their specific client population, and that this treatment was new to the respondent (or to clinicians working in the respondent’s setting; see Additional File 1 for the BWS prompt and an example set of strategies). The prompt explained that initial training in the EBP would be provided and would include active learning approaches, and their input was sought regarding the best implementation strategies that could be used to support clinicians’ implementation of the new practice following training.
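The structure of a single respondent's design (11 sets of four strategies drawn from 14, with every strategy shown at least three times) can be illustrated with a minimal sketch. This is not the Sawtooth Discover algorithm, whose internals are not described here; it is a simple "least-shown-first" heuristic that only enforces one-way frequency balance and random on-screen position. The function name `make_design` and its parameters are hypothetical.

```python
import random
from collections import Counter


def make_design(n_items=14, n_sets=11, set_size=4, seed=0):
    """Build one respondent's choice sets with near-equal item frequencies.

    Illustrative heuristic only: each set takes the items shown least often
    so far (ties broken at random). Pair balance is NOT optimized here.
    """
    rng = random.Random(seed)
    shown = Counter({item: 0 for item in range(n_items)})
    design = []
    for _ in range(n_sets):
        # Order items by how often they have appeared, with a random tie-break.
        order = sorted(range(n_items), key=lambda i: (shown[i], rng.random()))
        chosen = order[:set_size]
        rng.shuffle(chosen)  # randomize the position of each item in the set
        for item in chosen:
            shown[item] += 1
        design.append(chosen)
    return design, shown


design, shown = make_design()
# 11 sets x 4 items = 44 showings spread over 14 items, so every item
# appears 3 or 4 times (44 = 14 * 3 + 2).
print(min(shown.values()), max(shown.values()))
```

Because 44 showings cannot be divided evenly among 14 strategies, perfect one-way balance is only attainable across respondents, which is one reason a production design algorithm randomizes sets per participant.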

Sample size calculations assumed an alpha level of .05, margin of error of 0.1, and 14 implementation strategies to be rated with each strategy appearing in a minimum of 3 sets. Based on these assumptions, the required sample size was N = 244 participants rating 11 sets of 4 strategies each [28, 34].

The BWS experiment was implemented via a web-based computerized survey emailed to clinicians, supervisors, and administrators from March 2019 to April 2019. Consistent with best practices in survey administration, we utilized a process [35] in which participants received a pre-survey priming email, survey invitation email, and three follow-up reminders, delivered approximately 1 week apart. Surveys took approximately 30 min and participants received a $25 gift card.

Measures

In addition to completing the BWS questions, respondents reported on professional and workplace characteristics: primary role (administrator [those who were executive level administrators within the clinics], supervisor [those who supervise clinicians in clinical work], clinician [those who primarily offer direct services to clients]), education level, type of clinic in which they were employed (mental health, substance use, dual diagnosis), salary versus fee-for-service employment, tenure in current agency, years of experience as a clinician, extent to which their graduate training emphasized EBP (ranging from 1 = Never to 7 = Always), average hours worked per week, number of City-sponsored EBP training initiatives in which the respondent had participated (ranging from 0 to 6), number of BWS strategies currently in use by their employing agency (ranging from 0 to 14), age, sex, race, and ethnicity. Because of heterogeneity across roles, administrators and supervisors did not report on salary versus fee-for-service employment, hours worked per week, extent to which their graduate training emphasized EBP, years of experience as a clinician, or number of City-sponsored EBP training initiatives participated in.

Data analysis

Best and worst choice frequencies for each strategy were summarized at the sample level using count analysis which represents the proportion of times a strategy was chosen as most or least useful relative to the number of times it was displayed [17]. Preference weights for each strategy were calculated at the individual level using hierarchical Bayes estimation with a multinomial logit model implemented using CBC/HB software from Sawtooth (version 5) [36,37,38,39,40]. Latent class analysis (LCA) [19, 20, 41] was used to identify segments of the population with different preferences and to estimate preference weights (i.e., part worth utilities) for each segment using Sawtooth Software’s LCA program (version 4.7), which implements the estimation procedure described by DeSarbo and colleagues [19]. We estimated LCA models with 1 through 5 classes. Consistent with best practices, we selected the best-fitting model on the basis of the Bayesian information criterion [42], probabilities of correct classification [43], sufficiently populated classes, and interpretability of classes based on alignment with previous research and theoretical considerations [44]. Differences across segments on professional characteristics were tested using analyses of variance and chi-square tests (SPSS, Version 25). There were no missing data on participants’ preferences. Because very few participants (< 5%) had missing data on professional and sociodemographic variables, these were excluded from analyses on a pairwise basis.
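The count analysis described above can be sketched in a few lines. This is an illustration of the proportion calculation only, not the software used in the study; the function name `count_analysis`, the task tuple layout, and the strategy labels in the toy data are all hypothetical and do not come from the study data.

```python
from collections import defaultdict


def count_analysis(tasks):
    """Return {strategy: (best_proportion, worst_proportion)}.

    `tasks` is an iterable of (shown, best, worst) tuples, where `shown`
    lists the strategies displayed in one choice set. Each proportion is
    the number of times a strategy was picked divided by the number of
    times it was displayed.
    """
    shown_n = defaultdict(int)
    best_n = defaultdict(int)
    worst_n = defaultdict(int)
    for shown, best, worst in tasks:
        for strategy in shown:
            shown_n[strategy] += 1
        best_n[best] += 1
        worst_n[worst] += 1
    return {
        s: (best_n[s] / shown_n[s], worst_n[s] / shown_n[s]) for s in shown_n
    }


# Toy data with made-up labels, for illustration only.
toy_tasks = [
    (["A", "B", "C", "D"], "A", "D"),
    (["A", "B", "E", "F"], "A", "F"),
    (["B", "C", "D", "E"], "B", "D"),
]
for strategy, (best, worst) in sorted(count_analysis(toy_tasks).items()):
    print(strategy, round(best, 2), round(worst, 2))
```

Count proportions are a descriptive summary at the sample level; the individual-level preference weights reported in the paper come from the hierarchical Bayes model, which this sketch does not attempt to reproduce.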

Results

Participants were 76% female. With regard to ethnicity and race, participants endorsed the following categories: White (60%), Black and/or African American (20%), American Indian or Alaskan Native (1%), Asian (3%), Other (7%). The remainder were missing or preferred not to disclose. Participant demographics are largely consistent with previous work we have conducted in the city of Philadelphia [45] and broader national trends [46].

Table 2 shows the best and worst choice frequencies for each strategy. Fig. 1 shows the mean preference weights (i.e., part worth utilities) for each strategy with 95% confidence intervals. The preference weights are logit scaled and represent the average utility or value that this sample of respondents attached to each strategy; higher values indicate greater utility. When 95% confidence intervals do not overlap between two strategies, the strategy with the higher value is significantly more preferred at p < .05. The two strategies viewed as most useful were both within the financial incentives category and included (1) compensation for EBP use per session and (2) compensation for EBP preparation time. Both of these were preferred significantly more than all other strategies (see Fig. 1). Conversely, both performance feedback/social comparison strategies were viewed as significantly less useful than all others (see Fig. 1): (1) performance feedback via leaderboard was the least preferred, followed by (2) performance feedback via email. On average, financial incentive strategies were preferred 9.2 times more than performance feedback/social comparison strategies (Mean Best = .46 vs. .05) and performance feedback/social comparison strategies were disliked 5.1 times more than financial incentive strategies (Mean Worst = .56 vs. .11).
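The "times more" figures above are simple ratios of the mean best and mean worst proportions, and the significance rule is a check for non-overlapping intervals. A minimal sketch follows; the helper names are hypothetical, and the two intervals passed to `intervals_overlap` are invented values for illustration, not values read from Fig. 1.

```python
def times_more(a: float, b: float) -> float:
    """Ratio of two choice proportions."""
    return a / b


def intervals_overlap(ci_a, ci_b) -> bool:
    """True if two (lower, upper) confidence intervals share any point."""
    (lo_a, hi_a), (lo_b, hi_b) = ci_a, ci_b
    return lo_a <= hi_b and lo_b <= hi_a


# Mean best: financial incentives vs. performance feedback/social comparison.
best_ratio = times_more(0.46, 0.05)
# Mean worst: performance feedback/social comparison vs. financial incentives.
worst_ratio = times_more(0.56, 0.11)
print(round(best_ratio, 1), round(worst_ratio, 1))  # 9.2 5.1

# Hypothetical intervals (not taken from the paper): no overlap, so the
# strategy with the higher weight would be called significantly more preferred.
print(intervals_overlap((1.8, 2.4), (0.2, 0.9)))  # False
```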

Fig. 1. Average Preference Weights for each Strategy (N = 343). Note: Preference weights (i.e., part worth utilities) were estimated via hierarchical Bayes estimation incorporating a multinomial logit model. Values are logit scaled; strategies with higher preference weights are more preferred. Error bars indicate 95% confidence intervals. When 95% confidence intervals do not overlap between two strategies, the strategy with the higher value is significantly more preferred at p < .05.

Additional insight into stakeholders’ preferences can be obtained by examining their preferences grouped by the six categories of strategies. As is shown in Fig. 2, strategies in the financial incentives category were preferred significantly more on average than all others (p < .05), followed by clinical support tools, which were the second most preferred and rated significantly higher than all others except financial incentives (p < .05). The clinical consultation and social networking categories were statistically indistinguishable but rated significantly higher than client supports which, in turn, rated significantly higher than performance feedback/social comparison.

Fig. 2. Average Preference Weights for each Category. Note: Preference weights (i.e., part worth utilities) were estimated via hierarchical Bayes estimation incorporating a multinomial logit model. Categories with higher average preference weights are more preferred. Error bars indicate 95% confidence intervals. When 95% confidence intervals do not overlap between two categories, the category with the higher value is significantly more preferred at p < .05. See Table 1 for the specific strategies included in each category.

Figure 3 shows the preference weights (i.e., part worth utilities) and 95% confidence intervals for each strategy for each of the four segments identified in the optimally-fitting four-class LCA model. These preference weights are interpreted in the same manner as those shown in Fig. 1. Tables 3 and 4 (see Additional File 2) show the distribution of professional and sociodemographic characteristics by segment and for the full sample. Segment 1, labeled Support Therapists through Financial Incentives, included 35% of the sample (n = 121) and exhibited significantly higher preferences for compensation per session, compensation for preparation time, and compensation for certification compared to all other segments. Segment 1 had the highest proportion of administrators (17%, n = 20) relative to the other groups (3 to 5%, p = .006) (see Table 3 in Additional File 2).

Fig. 3. Preference Weights by Latent Class Segment. Note: N = 343. Segments and preference weights (i.e., part worth utilities) derived via latent class analysis. Values are logit scaled; strategies with higher preference weights are more preferred. Error bars indicate 95% confidence intervals. When 95% confidence intervals do not overlap between two strategies, the strategy with the higher value is significantly more preferred at p < .05. Segment labels reflect the type of implementation support prioritized by the segment relative to others. Segment 1, Support me through Financial Incentives (Compensation), included n = 121 participants; segment 2, Support me through Technology, included n = 80 participants; segment 3, Support me through Autonomy, included n = 52 participants; and segment 4, Support me through Consultation, included n = 90 participants.

Segment 2, labeled Support Therapists through Technology, included 23% of the sample (n = 80) and exhibited significantly higher preferences for the client mobile app/texting service and the web-based clinician resource center/mobile app compared to the other segments. This segment exhibited significantly less favorable preferences for the performance feedback email and performance leaderboard relative to other groups. Segment 2 tended to have fewer years of experience in their current agency (p = .061) and to be younger on average (p = .065).

Segment 3, labeled Support Therapists through Autonomy, included 15% of the sample (n = 52). This segment exhibited the only favorable rating of the improved waiting room strategy and these ratings were significantly higher than those of the other segments. This segment also exhibited significantly less preference for EBP consultation led by either experts or peers. Members of this segment exhibited lower than average participation in the EBP initiatives provided by the city (p = .021) and the fewest average hours worked per week (p = .009).

Segment 4, labeled Support Therapists through Consultation, included 26% of the sample (n = 90) and exhibited significantly higher preferences for expert-led monthly consultation, peer-led monthly consultation, and a community-based EBP mentoring program. This segment also exhibited significantly lower preferences than the other groups for compensation per session and compensation for preparation time. This segment had the highest proportion of clinicians (38%) who worked in clinics focused on the treatment of substance use disorders (p = .020), although similar to other segments, most in this group worked in clinics focused on the treatment of mental health disorders (62%).
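As a quick arithmetic check on the segment sizes reported above, the shares can be recomputed from the counts. The variable names are illustrative only.

```python
# Segment sizes as reported for the four-class solution.
segment_sizes = {1: 121, 2: 80, 3: 52, 4: 90}

total = sum(segment_sizes.values())
shares = {seg: round(100 * n / total) for seg, n in segment_sizes.items()}

print(total)   # 343
print(shares)  # {1: 35, 2: 23, 3: 15, 4: 26}
# The rounded shares sum to 99 rather than 100 because each is rounded
# independently.
print(sum(shares.values()))  # 99
```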

Discussion

This study provides valuable insights on clinician, supervisor, and administrator preferences for implementation planning in large public behavioral health systems and highlights important directions for future research. Results also illustrate the utility of BWS as a methodology for rigorously and efficiently eliciting stakeholder preferences for implementation strategies in large-scale behavioral health and health systems.

By identifying four distinct subpopulations of clinicians, supervisors, and administrators whose preferences reflected distinct foci for implementation strategies, these findings highlight the heterogeneity of stakeholder preferences and point to the need for a new research agenda that unpacks the relationships between preference, implementation effectiveness, and tailoring of implementation strategies. Even as Segment 1 (35% of the sample) strongly preferred all financial incentive strategies above any other strategy, another group, Segment 4 (26% of the sample), showed much less interest in financial incentives, preferring instead consultation with EBP experts, and yet another group, Segment 2 (23% of the sample), exhibited strong preferences for technology-based strategies. These groups were all distinct from Segment 3 (15% of the sample), which preferred an improved waiting room (to help relax patients and prepare them to engage in an EBP-focused session) and viewed any type of clinical consultation as least helpful. These distinct segments suggest that a one-size-fits-all implementation strategy may not be successful and certainly will not be preferred by the majority of stakeholders. Different implementation strategies may need to be matched with these distinct subpopulations. There is growing consensus in implementation science that strategies should be selected and tailored based on contextual factors with regard to the EBP, setting, and individual characteristics [47, 48]. Our results highlight stakeholder preference as a potentially important dimension for tailoring implementation strategies and point to the need for research to better understand how preferences influence EBP implementation.

A few prior studies have used related choice experiment methods such as discrete choice experiments to understand practitioners’ preferences for features of EBP training and their beliefs regarding variables that might influence their adoption of EBP [8, 10, 49]. The present study extends this prior work by focusing on stakeholders’ preferences for post-training implementation strategies drawn from a diverse set of categories that represent the majority of ERIC strategies (e.g., financial incentives vs. client supports vs. clinician social networking vs. performance feedback/social comparison). It is well-established that post-training support is typically necessary in order to generate sustained and meaningful change in practice behaviors [50]; our results provide insight into what types of post-training implementation strategies are viewed as most useful by stakeholders in community mental health as well as the heterogeneity in those preferences. In addition, by using BWS to directly compare multiple dissimilar types of strategies (e.g., financial incentives vs. client supports vs. clinician social networking, etc.), our results offer the first glimpse into stakeholders’ prioritization of these different categories. In some ways, our study provides a view of the forest (i.e., which categories of strategies do stakeholders most prefer?) which primes the field for future work, using discrete choice experiments, to identify stakeholders’ preferences for the design of specific strategies (i.e., trees). For example, discrete choice experiments could be fielded to determine stakeholders’ preferences for the specific features of any of the strategies included in our BWS choice experiment (e.g., the most preferred features of a system that compensates per session).

Targeting implementation strategies based on stakeholders’ preferences may result in more successful EBP implementation in at least three ways. First, if implementation strategies are differentially effective for different individuals and contexts, stakeholder preferences may signal which strategy will be most effective for a given situation. In this case, stakeholder preferences represent a valid signal indicating which strategy will be most effective in their specific circumstances, and strategy effectiveness would be optimized by matching strategies to specific individuals or organizations based on the insights of participants. This theory assumes that stakeholders’ preferences are valid indicators of which strategy will work best, an assumption that has not yet been empirically verified. This is similar to the idea of precision medicine, in which the most effective intervention (i.e., implementation strategy) depends on the characteristics of the specific individual in context.

Second, targeting strategies to stakeholders’ preferences may have a general accelerator effect that increases the effectiveness of any strategy compared to its baseline effectiveness due to increased engagement or buy-in. For example, if participants are more engaged or invested in a strategy because they chose it, they may be willing to exert more effort to ensure its success and this may increase the strategy’s effectiveness. In this case, the act of choosing a preferred strategy is in itself an intervention that might improve implementation success. Ideally, research could quantify the magnitude of this ‘preference effect’ to determine how much increase in effectiveness could be expected for any strategy simply by allowing stakeholders to choose it.

Third, assuming that some strategies are universally more effective than others, it may be beneficial to understand stakeholders’ preferences so that policymakers and other leaders can identify stakeholders who do not prefer effective strategies and use supplemental interventions (e.g., a readiness strategy) with these individuals prior to, or concurrent with, the launch of the effective strategy. In this scenario, individual preferences have no accelerator effect on strategies’ effectiveness, nor do they provide a valid guide to the choice of strategy; rather, assessment of preferences allows system leaders to identify subpopulations of stakeholders who may benefit from supplementary interventions (e.g., pre-implementation strategy) to support their engagement with a system-selected, effective strategy that is going to be rolled out.

In contrast to the hypotheses described above, it may be that preference has no effect on the outcome of implementation strategies whatsoever. The identification of four distinct preference subpopulations in this study points to the need for research to determine how preferences relate to implementation effectiveness so that resources devoted to implementing EBPs can be optimized.

Across the full sample, one consistent finding was the overall rejection of performance feedback/social comparison strategies, which were rated lower than all other strategies on average for the full sample and were the lowest rated strategies for 3 out of 4 subpopulations. These findings suggest stakeholders overwhelmingly viewed performance feedback/social comparison strategies as unhelpful for supporting EBP implementation. This is consistent with findings from primary care practices, in which primary care clinicians also disliked strategies using social comparison [51]. Future qualitative inquiry could provide valuable insights into why stakeholders view feedback/social comparison strategies as unhelpful. Potential mechanisms include discomfort with receiving information that is misaligned with one’s perception of one’s performance or feeling as though there will be negative consequences for poor performance.

The large preference gap between feedback/social comparison and other strategies, such as financial incentives, which emerged as the most preferred strategy on average in the full sample and the first or second choice strategy for 3 out of 4 subpopulations, raises an important question about the relative effectiveness of high-cost financial incentives compared to lower-cost performance feedback strategies, both of which have some evidence of effectiveness [52, 53]. Comparative effectiveness trials that include cost-effectiveness analyses would aid policymakers in selecting among strategies when there is a mismatch between stakeholders’ preferences and what is known to be effective [54]. The generally favorable view of financial incentives in this sample is not surprising against the backdrop of a publicly-funded behavioral health workforce that is poorly compensated and financially stressed, often employed as independent contractors, and working within organizations that are also struggling financially [55, 56].

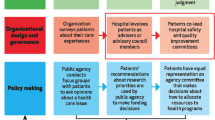

Another issue highlighted by these findings is the question of which stakeholder group’s preferences have the strongest implications for successful implementation. Many systems, such as Philadelphia’s, focus implementation policies primarily at the program level by designating EBP programs and providing financial incentives to agencies (versus clinicians). This raises the question of how much policymakers should focus on the preferences of administrators, who make agency-level decisions about EBP (e.g., whether to respond to agency incentives by implementing an EBP program), versus the preferences of clinicians, who are ultimately responsible for implementing EBPs in direct care. Preliminary evidence regarding pay-for-performance in behavioral healthcare suggests that organization-focused financial incentives have minimal impact on practice, whereas individual clinician-focused incentives can change provider behavior [53].

Our results are subject to limitations and qualifications. Stated preferences were elicited from a controlled experiment on hypothetical implementation options. Real-world implementation behaviors are complicated by numerous factors not accounted for in our controlled experiment; thus, actual implementation behavior could be different from that predicted by our data. However, several features of the study design were implemented following best practice methods and consequently limit the potential for bias. For example, the scenario and implementation strategies were presented as realistically as possible, and the number of questions each respondent answered was limited in consideration of the cognitive burden of choice questions. The use of object case BWS allowed us to estimate respondents’ preferences for a wide range of qualitatively distinct implementation strategies; however, this design choice sacrificed the opportunity to obtain finer-grained information about which features of specific strategies are most preferred (e.g., the design and amount of compensation for EBP use per session). Other types of choice experiments, such as discrete choice experiments and profile case BWS, generate fine-grained estimates of respondents’ preferences for specific levels of strategy features. Studies incorporating those approaches represent a potentially valuable extension of this work. The implementation preferences expressed by this sample of clinicians and administrators in this large public behavioral health system were limited to the strategies generated through the system-wide innovation tournament. In addition, this sample’s preferences may not generalize to clinicians in more rural areas or in cities or states that have not exhibited similar support for EBP. Further, the particular set of strategies is likely tied to the structure of the US behavioral healthcare system and likely would not generalize to other countries with different healthcare systems. The use of a motivated volunteer sample of stakeholders, while preserving internal validity, may also limit generalizability and affect the relative proportions in the latent class analysis. Finally, clinician preferences are but one factor among many that should guide the selection of implementation strategies to support EBP in a specific setting.

Conclusions

Effective implementation of EBP in health and behavioral health systems must include the active participation of stakeholders who receive, deliver, and/or oversee the delivery of clinical care. Numerous groups, including service participants, family members, clinicians, supervisors, administrators, funders, and policymakers, have a stake in implementation decisions, and understanding their values and preferences for implementation strategies may be one way to increase stakeholder engagement and implementation effectiveness. Results from this study demonstrate the presence of four distinct subpopulations of clinicians, supervisors, and administrators whose implementation preferences differ and who may not all respond positively to a one-size-fits-all implementation strategy. As such, these findings highlight the need for research on how stakeholder preferences intersect with implementation effectiveness and the tailoring of implementation strategies. Furthermore, this study demonstrates that BWS choice experiments are a highly feasible and rigorous method for eliciting stakeholders’ preferences regarding how to support their implementation of EBP.

Availability of data and materials

Data will be made available upon request. Requests for access to the data can be sent to the Penn ALACRITY Data Sharing Committee. This Committee comprises the following individuals: Rinad Beidas, PhD, David Mandell, ScD, Kevin Volpp, MD, PhD, Alison Buttenheim, PhD, MBA, Steven Marcus, PhD, and Nathaniel Williams, PhD. Requests can be sent to the Committee’s coordinator, Kelly Zentgraf at zentgraf@upenn.edu, 3535 Market Street, 3rd Floor, Philadelphia, PA 19107, 215-746-6038.

Abbreviations

- BWS: Best-Worst Scaling
- CBH: Community Behavioral Health
- DBHIDS: Philadelphia Department of Behavioral Health and Intellectual Disability Services
- EBP: Evidence-Based Practice
- EPIC: Philadelphia Evidence Based Practice and Innovation Center
- LCA: Latent Class Analysis

References

Insel TR. Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Arch Gen Psychiatry. 2009;66(2). https://doi.org/10.1001/archgenpsychiatry.2008.540.

Collins PY, Patel V, Joestl SS, March D, Insel TR, Daar AS, et al. Grand challenges in global mental health. Nature. 2011;475(7354). https://doi.org/10.1038/475027a.

Garland AF, Haine-Schlagel R, Brookman-Frazee L, Baker-Ericzen M, Trask E, Fawley-King K. Improving community-based mental health care for children: translating knowledge into action. Adm Policy Ment Health. 2013;40(1). https://doi.org/10.1007/s10488-012-0450-8.

Weisz JR. Agenda for child and adolescent psychotherapy research: on the need to put science into practice. Arch Gen Psychiatry. 2000;57(9). https://doi.org/10.1001/archpsyc.57.9.837.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1). https://doi.org/10.1186/1748-5908-8-139.

Salloum RG, Shenkman EA, Louviere JJ, Chambers DA. Application of discrete choice experiments to enhance stakeholder engagement as a strategy for advancing implementation: a systematic review. Implement Sci. 2017;12(1). https://doi.org/10.1186/s13012-017-0675-8.

Knoepke CE, Ingle MP, Matlock DD, Brownson RC, Glasgow RE. Dissemination and stakeholder engagement practices among dissemination & implementation scientists: results from an online survey. PLoS One. 2019;14(11). https://doi.org/10.1371/journal.pone.0216971.

Cunningham CE, Barwick M, Rimas H, Mielko S, Barac R. Modeling the decision of mental health providers to implement evidence-based children’s mental health services: a discrete choice conjoint experiment. Adm Policy Ment Health. 2018;45(2). https://doi.org/10.1007/s10488-017-0824-z.

Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, et al. Tailored interventions to address determinants of practice. Cochrane Database Syst Rev. 2015;4. https://doi.org/10.1002/14651858.CD005470.pub3.

Cunningham CE, Henderson J, Niccols A, Dobbins M, Sword W, Chen Y, et al. Preferences for evidence-based practice dissemination in addiction agencies serving women: a discrete-choice conjoint experiment. Addiction. 2012;107(8). https://doi.org/10.1111/j.1360-0443.2012.03832.x.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12. https://doi.org/10.1186/s13012-017-0635-3.

Whitty JA, Gonçalves ASO. A systematic review comparing the acceptability, validity and concordance of discrete choice experiments and best–worst scaling for eliciting preferences in healthcare. Patient. 2018;11(3). https://doi.org/10.1007/s40271-017-0288-y.

Starmer C. Developments in non-expected utility theory: the hunt for a descriptive theory of choice under risk. J Econ Lit. 2000;38(2). https://doi.org/10.1257/jel.38.2.332.

Van Houtven G, Johnson FR, Kilambi V, Hauber AB. Eliciting benefit–risk preferences and probability-weighted utility using choice-format conjoint analysis. Med Decis Making. 2011;31(3). https://doi.org/10.1177/0272989X10386116.

Cheung KL, Wijnen BF, Hollin IL, Janssen EM, Bridges JF, Evers SM, et al. Using best–worst scaling to investigate preferences in health care. Pharmacoeconomics. 2016;34(12). https://doi.org/10.1007/s40273-016-0429-5.

Flynn TN, Louviere JJ, Peters TJ, Coast J. Best–worst scaling: what it can do for health care research and how to do it. J Health Econ. 2007;26(1). https://doi.org/10.1016/j.jhealeco.2006.04.002.

Louviere JJ, Flynn TN, Marley AAJ. Best-worst scaling: theory, methods and applications. Cambridge: Cambridge University Press; 2015.

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2). https://doi.org/10.1177/1077558711430690.

DeSarbo WS, Ramaswamy V, Cohen SH. Market segmentation with choice-based conjoint analysis. Mark Lett. 1995;6(2). https://doi.org/10.1007/BF00994929.

Wedel M, Kamakura WA. Market segmentation: conceptual and methodological foundations. Dordrecht: Kluwer Academic Publishers; 1999.

Table 8: PENNSYLVANIA Offenses Known to Law Enforcement by City, 2017: FBI: Uniform Crime Reporting Program; 2017. Available from: https://ucr.fbi.gov/crime-in-the-u.s/2017/crime-in-the-u.s.-2017/tables/table-8/table-8-state-cuts/pennsylvania. Accessed 28 Jan 2021.

Philadelphia 2019: The state of the city. PEW. 2019 [cited April 11, 2019]. Available from: https://www.pewtrusts.org/en/research-and-analysis/reports/2019/04/11/philadelphia-2019.

Philadelphia 2017: The state of the city. PEW. 2017. Available from: https://www.pewtrusts.org/en/research-and-analysis/reports/2017/04/philadelphia-2017. Accessed 28 Jan 2021.

2018 Annual Report: Philadelphia Behavioral HealthChoices Program. Community Behavioral Health. 2018. Available from: https://cbhphilly.org/cbh-providers/annual-report/. Accessed 28 Jan 2021.

Powell BJ, Beidas RS, Rubin RM, Stewart RE, Wolk CB, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Adm Policy Ment Health. 2016;43(6). https://doi.org/10.1007/s10488-016-0733-6.

Bridges JF, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, et al. Conjoint analysis applications in health—a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14(4). https://doi.org/10.1016/j.jval.2010.11.013.

Stewart RE, Williams NJ, Byeon YV, Buttenheim A, Sridharan S, Zentgraf K, et al. The clinician crowdsourcing challenge: using participatory design to seed implementation strategies. Implement Sci. 2019. https://doi.org/10.1186/s13012-019-0914-2.

Louviere JJ, Hensher DA, Swait JD. Stated choice methods: analysis and applications. Cambridge: Cambridge University Press; 2000.

The MaxDiff System Technical Paper. Orem, Utah: Sawtooth Software, Inc.; 2013. Available from: https://www.sawtoothsoftware.com/download/techpap/maxdifftech.pdf. Accessed 28 Jan 2021.

Louviere J, Lings I, Islam T, Gudergan S, Flynn T. An introduction to the application of (case 1) best–worst scaling in marketing research. Int J Res Mark. 2013;30(3). https://doi.org/10.1016/j.ijresmar.2012.10.002.

Discover-MaxDiff: How and Why it Differs from Lighthouse Studio’s MaxDiff Software. Sawtooth Software, Inc.; 2018. Available from: https://www.sawtoothsoftware.com/support/technical-papers/167-support/technical-papers/sawtooth-software-products/1935-discover-maxdiff-how-and-why-it-differs-from-lighthouse-studio-s-maxdiff-software. Accessed 28 Jan 2021.

Orme B. MaxDiff Analysis: Simple Counting, Individual-Level Logit, and HB. Sequim: Sawtooth Software, Inc.; 2009. Available from: https://www.sawtoothsoftware.com/download/techpap/indivmaxdiff.pdf.

Orme BK. Getting started with conjoint analysis: strategies for product design and pricing research. 2nd ed. Madison: Research Publishers, LLC; 2010.

Lipovetsky S, Liakhovitski D, Conklin M. What's the right sample size for my MaxDiff study. Sawtooth Software Conference; 2015 March; Orlando, FL. Provo: Sawtooth Software; 2015.

Dillman DA, Smyth JD, Christian LM. Internet, mail, and mixed-mode surveys: the tailored design method. 3rd ed. Hoboken: Wiley; 2009.

Allenby GM, Ginter JL. Using extremes to design products and segment markets. J Market Res. 1995;32(4). https://doi.org/10.1177/002224379503200402.

Lenk PJ, DeSarbo WS, Green PE, Young MR. Hierarchical Bayes conjoint analysis: recovery of partworth heterogeneity from reduced experimental designs. Mark Sci. 1996;15(2). https://doi.org/10.1287/mksc.15.2.173.

Chib S, Greenberg E. Understanding the Metropolis-Hastings Algorithm. Am Stat. 1995;49(4). https://doi.org/10.1080/00031305.1995.10476177.

Orme B. Accuracy of HB Estimation in MaxDiff Experiments. Sequim: Sawtooth Software, Inc.; 2005. Available from: https://www.sawtoothsoftware.com/download/techpap/maxdacc.pdf.

CBC/HB 5: Software for Hierarchical Bayes Estimation for CBC Data (Updated December 8, 2016). Orem, Utah: Sawtooth Software, Inc.; 2016. Available from: http://www.sawtoothsoftware.com.

Latent Class v4.7: Software for Latent Class Estimation for CBC Data (Updated December 13, 2013). Orem, Utah: Sawtooth Software, Inc.; 2013. Available from: http://www.sawtoothsoftware.com.

Nylund KL, Asparouhov T, Muthén BO. Deciding on the number of classes in latent class analysis and growth mixture modeling: A Monte Carlo simulation study. Struct Equ Model Multidiscip J. 2007;14(4). https://doi.org/10.1080/10705510701575396.

Foti RJ, Thompson NJ, Allgood SF. The pattern-oriented approach: A framework for the experience of work. Ind Organ Psychol. 2011;4(1). https://doi.org/10.1111/j.1754-9434.2010.01309.x.

Morin AJ, Morizot J, Boudrias J-S, Madore I. A multifoci person-centered perspective on workplace affective commitment: A latent profile/factor mixture analysis. Organ Res Methods. 2011;14(1). https://doi.org/10.1177/1094428109356476.

Beidas RS, Williams NJ, Becker-Haimes E, Aarons G, Barg F, Evans A, et al. A repeated cross-sectional study of clinicians’ use of psychotherapy techniques during 5 years of a system-wide effort to implement evidence-based practices in Philadelphia. Implement Sci. 2019;14(67). https://doi.org/10.1186/s13012-019-0912-4.

Salsberg E, Quigley L, Mehfoud N, Acquaviva KD, Wyche K, Silwa S. Profile of the social work workforce. Washington, DC: The George Washington University Health Workforce Institute; 2017.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Ser R. 2017;44(2). https://doi.org/10.1007/s11414-015-9475-6.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Public Health Front. 2019;7. https://doi.org/10.3389/fpubh.2019.00003.

Cunningham CE, Barwick M, Short K, Chen Y, Rimas H, Ratcliffe J, et al. Modeling the mental health practice change preferences of educators: A discrete-choice conjoint experiment. School Mental Health. 2014;6(1). https://doi.org/10.1007/s12310-013-9110-8.

Beidas RS, Edmunds JM, Marcus SC, Kendall PC. Training and consultation to promote implementation of an empirically supported treatment: a randomized trial. Psychiatr Serv. 2012;63(7). https://doi.org/10.1176/appi.ps.201100401.

Gong CL, Hay JW, Meeker D, Doctor JN. Prescriber preferences for behavioural economics interventions to improve treatment of acute respiratory infections: a discrete choice experiment. BMJ Open. 2016;6(9). https://doi.org/10.1136/bmjopen-2016-012739.

Meeker D, Linder JA, Fox CR, Friedberg MW, Persell SD, Goldstein NJ, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(6). https://doi.org/10.1001/jama.2016.0275.

Garner BR, Godley SH, Dennis ML, Hunter BD, Bair CM, Godley MD. Using pay for performance to improve treatment implementation for adolescent substance use disorders: results from a cluster randomized trial. Arch Pediatr Adolesc Med. 2012;166(10). https://doi.org/10.1001/archpediatrics.2012.802.

Beidas RS, Becker-Haimes EM, Adams DR, Skriner L, Stewart RE, Wolk CB, et al. Feasibility and acceptability of two incentive-based implementation strategies for mental health therapists implementing cognitive-behavioral therapy: a pilot study to inform a randomized controlled trial. Implement Sci. 2017;12. https://doi.org/10.1186/s13012-017-0684-7.

Stewart RE, Adams DR, Mandell DS, Hadley TR, Evans AC, Rubin R, et al. The perfect storm: collision of the business of mental health and the implementation of evidence-based practices. Psychiatr Serv. 2016;67(2). https://doi.org/10.1176/appi.ps.201500392.

Adams DR, Williams NJ, Becker-Haimes EM, Skriner L, Shaffer L, DeWitt K, et al. Therapist financial strain and turnover: interactions with system-level implementation of evidence-based practices. Adm Policy Ment Health. 2019;46(6). https://doi.org/10.1007/s10488-019-00949-8.

Acknowledgements

The authors would like to thank David Mandell, ScD, Kevin Volpp, MD, PhD, and Reid Johnson, PhD for their support in developing and completing this project.

Funding

Research reported in this article was supported by the National Institute of Mental Health of the U.S. National Institutes of Health under award number P50MH113840 (MPIs: Mandell, Beidas, Buttenheim). The funder had no role in decisions regarding the scientific conduct or reporting of the study. The content is solely the responsibility of the authors and does not necessarily represent the official views of the U.S. National Institutes of Health.

Author information

Contributions

This paper has been developed with contributions from all authors. RB developed the study concept. All authors contributed to the study design. Testing and data collection were performed by YVB and KZ. Data analysis and interpretation were performed by NJW, MC, RES, MB, AMB, and RB. NJW drafted the manuscript, and RB provided critical revisions. The second draft was circulated to all authors for comment and endorsement of the consensus. Following further amendments, all authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval for this research was provided by the City of Philadelphia (2017–51) and University of Pennsylvania IRBs (827425). Since the research presented no more than minimal risk of harm to subjects and involved no procedures for which written consent is normally required outside of the research context, we were granted a waiver of documentation of consent. The required elements of informed consent were described on the first page of the electronic survey, and participants who agreed to participate consented by proceeding to the second page of the electronic survey.

Consent for publication

Not applicable.

Competing interests

Dr. Beidas receives royalties from Oxford University Press and has consulted for the Camden Coalition of Healthcare Providers. She currently consults for United Behavioral Health and serves on the Clinical and Scientific Advisory Board for Optum Behavioral Health. All other authors declare that they have no competing interests to report.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

The BWS prompt and an example set of strategies.

Additional file 2.

Participant Characteristics Overall and by Preference Segment. Shows the distribution of professional and sociodemographic characteristics by segment and for the full sample.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Williams, N.J., Candon, M., Stewart, R.E. et al. Community stakeholder preferences for evidence-based practice implementation strategies in behavioral health: a best-worst scaling choice experiment. BMC Psychiatry 21, 74 (2021). https://doi.org/10.1186/s12888-021-03072-x

DOI: https://doi.org/10.1186/s12888-021-03072-x