Abstract

Background

Dose-response meta-analysis (DRMA) is a useful tool for investigating a potential dose-response relationship between an exposure or intervention and an outcome of interest. A large number of DRMAs have been published in the past several years. However, the standard of reporting of such studies is not known.

Methods

Medline, Embase, and Wiley Library were searched for systematic reviews with DRMAs (SR-DRMAs) published from January 2011 to July 2017. We used a combination of the PRISMA and MOOSE statements, containing 33 items, to assess the reporting of included SR-DRMAs. Adherence was defined as the proportion of SR-DRMAs meeting the reporting requirement of an item. We explored the association between five pre-specified variables and the reporting score, both in total and for each domain of the checklist.

Results

In total, 529 SR-DRMAs were eligible. Ten of the 33 items were under-reported, mainly in the methods domain: only a small proportion of SR-DRMAs stated whether a review protocol existed (8.5%), clarified the qualifications of searchers (1.7%), presented the full electronic search strategy (25.9%), described any effort to include all available studies (22.9%), described methods for languages other than English (27.4%), or stated the process for selecting studies (20.2%). Multiple regression analysis suggested that a larger number of authors (regression coefficient = 0.78; 95% CI: 0.35 to 1.20; P < 0.001), more recent publication (regression coefficient = 0.38; 95% CI: 0.28 to 0.47; trend P < 0.001), use of a reporting guideline (regression coefficient = 0.98; 95% CI: 0.63 to 1.32; P < 0.001), and involvement of a methodologist (regression coefficient = 0.86; 95% CI: 0.42 to 1.32; P < 0.001) were associated with a higher reporting score. Further regression suggested that the improvement in quality was concentrated in the methods and results domains.

Conclusions

The reporting of SR-DRMAs needs further improvement, particularly for items in the methods domain. The quality of reporting may improve when more authors and methodologists are involved and a reporting guideline is used.

Introduction

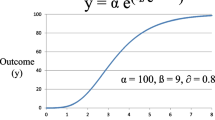

Dose-response meta-analysis (DRMA) is a specific type of meta-analysis that combines, from studies addressing the same question, dose-specific effect estimates of an exposure on the outcome of interest [1, 2]. One important merit of DRMA is its capacity to explore potentially differential effects according to varying levels of exposure [3, 4], which may better inform clinical decisions, particularly when a putative dose-response effect is itself of clinical interest.

An increasing number of systematic reviews (SRs) with DRMAs (SR-DRMAs) have been published over the past several years. Clinically meaningful SR-DRMAs rely largely on rigorous study design, conduct, and data analysis. Nevertheless, the optimal use of findings from such studies also depends on how they are reported. Previous studies have highlighted the importance of reporting for systematic reviews and meta-analyses, and insufficient reporting often results in less effective use of research evidence in clinical practice [5, 6]. In a survey of a random sample of systematic reviews, most were found to be poorly reported, particularly for some important aspects of the methods (e.g. literature search, quality assessment) [7]. A lack of such information hinders effective assessment of the quality of evidence.

To date, the standard of reporting of published SR-DRMAs is unclear. Although a few such studies have been used in the development of clinical practice guidelines [8, 9], concerns remain as to whether the information reported is adequate to support clinical decisions. This is particularly true for DRMAs because of the sophisticated methodologies they use [10]. To understand the reporting of published SR-DRMAs more fully, we conducted a cross-sectional study to examine the epidemiology of these SR-DRMAs, the quality of their reporting, and the characteristics influencing the reporting.

Methods

This study was based on a major systematic survey that examined the epidemiological characteristics, methodological and reporting quality, and associated characteristics of published SR-DRMAs of exclusively binary outcomes. Here, we report the findings on the quality of reporting and its associated characteristics.

Eligibility criteria

We included published SR-DRMAs (aggregate) of binary outcomes across all disease areas. DRMA is defined in the Introduction. The definition of systematic review was based on the Cochrane handbook (version 5.2) [11]. We defined an aggregate DRMA as a dose-response meta-analysis that uses aggregate data (study-level data). We did not consider pooled analyses, as they may have failed to employ a comprehensive literature search (at least 1 database). We excluded brief reports (i.e. short demonstrations of research results), letters, and conference abstracts, since these types of publication contain limited information on reporting items.

Literature search and screening

We searched MEDLINE, EMBASE, and Wiley Online Library for SR-DRMAs published between January 2011 and July 2017. No language restriction was applied. We used both MeSH terms and free-text words to develop the search strategy, which was drafted by one experienced author (CX) and finalized after discussion within a group of four investigators with expertise in literature searching. Details of the search strategy can be found in Additional file 1.

Two methods-trained authors (CX and YL), independently and in duplicate, screened titles and abstracts of the retrieved reports, as well as full texts of potentially eligible articles. Any disagreement was discussed by the two authors; if no consensus was reached, a third author (XS) was involved for the final judgment.

Data collection

Using pre-defined, pilot-tested data collection forms, two methods-trained authors (CX and PLJ), independently and in duplicate, extracted data from the eligible articles. They collected the following information from each eligible article: first author’s name, year of publication, journal, region of the first author, number of authors listed, affiliations of authors, funding information, databases searched, number of original studies included, primary outcome by disease area, category of subject (i.e. intervention, epidemiology, diagnosis, prognosis), reporting checklists employed, type of studies, and polynomial model used for the statistical analysis (e.g. quadratic polynomial, restricted cubic spline).

We used the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [6], with slight modifications, to assess the reporting quality of included SR-DRMAs (CX and YL). First, we removed the item “structured summary”, since the summaries of SR-DRMAs vary considerably across journals, and many journals that use unstructured summaries nonetheless require sufficient detail. Second, we slightly modified the wording of three items to make them appropriate for a DRMA: 1) “identify the report as a systematic review, meta-analysis, or both” became “identify the report as a systematic review, dose-response meta-analysis, or both”; 2) “present effect estimates and confidence intervals of each study” became “present dose-specific effects and confidence intervals of each study”; and 3) “present results of each meta-analysis done, with confidence intervals and measures of consistency (best with forest plot)” became “present results of each dose-response meta-analysis done, with confidence intervals and measures of consistency (best with pooled dose-response curve)”. Third, because SR-DRMAs were mostly based on observational studies, for which some quality aspects may not be covered by PRISMA, we added six items from the MOOSE (Meta-analyses Of Observational Studies in Epidemiology) checklist [12]: “qualifications of searchers”, “effort to include all available studies”, “use of hand searching”, “method of addressing articles published in languages other than English”, “assessment of confounding”, and “assessment of heterogeneity”. In addition, the PRISMA item “provide a general interpretation of the results in the context of other evidence, and implications for future research” is presented as two items in the MOOSE checklist, “alternative interpretation of the results” and “implication for future research”, and we followed the MOOSE approach. As a result, the modified checklist included 33 items (Additional file 1: Table S1).

To ensure the quality of the assessment, we required each assessor (CX and YL) to spend at least 30 min assessing each article and to complete no more than 20 reports each day. Of the two assessors, one (CX) is the co-primary author of the “one-stage” DRMA model, the robust error meta-regression (REMR) [4], and the other (YL) has 3 years’ experience of conducting dose-response meta-analyses.

Statistical analysis

We summarized the baseline characteristics (e.g. number of authors, year of publication) using descriptive statistics: the median (interquartile range) or mean (standard error) for continuous variables, and the count and percentage for dichotomous or categorical variables.

For each reporting item, we calculated the adherence rate, which was the percentage of all published SR-DRMAs adhering to the item. We judged an item to be well reported if it was reported by 80% or more of the SR-DRMAs, and under-reported if it was reported by less than 80% [13].
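The item-level rule can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the study; the function names `adherence_rate` and `classify_item` are hypothetical, and the counts in the usage lines are taken from the Results.

```python
def adherence_rate(n_adhering: int, n_total: int) -> float:
    """Percentage of SR-DRMAs that reported a given item."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return 100.0 * n_adhering / n_total


def classify_item(n_adhering: int, n_total: int, threshold: float = 80.0) -> str:
    """Label an item 'well reported' at or above the threshold, else 'under reported'."""
    rate = adherence_rate(n_adhering, n_total)
    return "well reported" if rate >= threshold else "under reported"


# Counts from the Results: 45 of 529 SR-DRMAs indicated a review protocol,
# and 440 of 529 described the method of data extraction.
print(round(adherence_rate(45, 529), 1), classify_item(45, 529))
print(round(adherence_rate(440, 529), 1), classify_item(440, 529))
```

An item reported by exactly 80% of studies counts as well reported, matching the "80% or more" wording of the rule.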

We also calculated the adherence of each SR-DRMA to the pre-specified reporting items. We considered an individual study to adhere to a reporting item if it reported the information required by that item, and assigned one point for the item. A study that met the reporting requirement of all items would receive a total of 33 points (i.e. the total reporting score); this approach is commonly employed in similar research [14, 15]. A higher score means better reporting quality.
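As a minimal sketch of this scoring scheme (equal weight per item, one point each), assuming the assessment of a study is stored as a mapping from item name to a yes/no judgment; the name `total_reporting_score` and the item labels are hypothetical:

```python
from typing import Dict

N_ITEMS = 33  # size of the modified PRISMA/MOOSE checklist


def total_reporting_score(items: Dict[str, bool]) -> int:
    """One point per item the study reported; the maximum is N_ITEMS."""
    if len(items) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items, got {len(items)}")
    return sum(1 for reported in items.values() if reported)


# Hypothetical study that fails the first seven items and reports the rest.
example = {f"item_{i}": i > 7 for i in range(1, N_ITEMS + 1)}
print(total_reporting_score(example))
```

Domain-level scores can be obtained the same way by summing only the items that belong to a given domain.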

We pre-specified five variables to explore their influence on reporting quality [15, 16]. These were region of first author (Asia-Pacific versus Europe versus America), number of authors (<=4, 5–6, 7–8, and > 8, according to quartiles), year of publication, involvement of any methodologist (yes versus no), and use of any reporting checklist (yes versus no). We judged that an SR-DRMA involved a methodologist if any of the authors, or anyone listed in the acknowledgement section, was affiliated with a department of epidemiology, statistics, mathematics, evidence-based medicine, or public health. The five variables were checked for multicollinearity. A correlation of less than 0.4 was considered weak [17].
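The multicollinearity screen can be illustrated as a pairwise correlation check against the 0.4 cut-off. The sketch below assumes Pearson's correlation coefficient, which the text does not specify; the helper names and the toy values are hypothetical and do not come from the study data.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have equal length of at least 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def flag_correlated_pairs(
    variables: Dict[str, List[float]], cutoff: float = 0.4
) -> List[Tuple[str, str, float]]:
    """Return variable pairs whose absolute correlation reaches the cutoff."""
    flagged = []
    for (name_a, xa), (name_b, xb) in combinations(variables.items(), 2):
        r = pearson_r(xa, xb)
        if abs(r) >= cutoff:
            flagged.append((name_a, name_b, r))
    return flagged


# Made-up values for three of the five variables, for illustration only.
toy = {
    "n_authors": [3, 5, 6, 8, 10, 4],
    "year": [2011, 2016, 2012, 2015, 2013, 2017],
    "methodologist": [0, 1, 0, 1, 1, 0],
}
for a, b, r in flag_correlated_pairs(toy):
    print(f"{a} vs {b}: r = {r:.2f}")
```

Categorical variables such as region would need numeric coding (or a different association measure) before entering a check like this.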

We used weighted least squares regression to investigate the association between reporting quality and the five pre-specified variables, with all variables entered simultaneously in the regression model [4]. Given the potential correlation in reporting quality among SR-DRMAs published in the same journal, each journal was treated as a cluster in the regression model. We used generalized estimating equation regression with robust variance as a sensitivity analysis to check whether the results were stable. To better understand the effects of the variables on quality, we further employed a post hoc multivariable regression to examine the association between the pre-specified variables and the quality score of each domain of the checklist (i.e. title and introduction, methods, results, discussion and funding information).
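For readers unfamiliar with clustered regression, the following is a sketch of what a weighted least squares fit with journal-level clustering typically computes. It is an illustration under assumed notation, not the authors' exact specification: the weights in $W$ are not stated in the text, and any finite-sample correction applied by the software is omitted. Here $y$ is the vector of total reporting scores, $X$ the design matrix of the five variables, $X_j$, $W_j$ and $\hat{e}_j = y_j - X_j\hat{\beta}$ the rows, weights and residuals belonging to journal $j$, and $J$ the number of journals.

```latex
\hat{\beta} = (X^{\top} W X)^{-1} X^{\top} W y,
\qquad
\widehat{\operatorname{Var}}(\hat{\beta})
  = (X^{\top} W X)^{-1}
    \Bigl( \sum_{j=1}^{J} X_j^{\top} W_j \, \hat{e}_j \hat{e}_j^{\top} \, W_j X_j \Bigr)
    (X^{\top} W X)^{-1}
```

The point estimates are those of ordinary weighted least squares; clustering changes only the variance, which allows residuals from the same journal to be correlated.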

All analyses were conducted in Stata 14.0/SE (STATA, College Station, TX, Serial number: 10699393), with alpha = 0.05 as the criterion for statistical significance.

Results

Our search identified 7061 records. After excluding duplicates and screening abstracts, 1306 reports were potentially eligible. After reading the full texts, we included 529 SR-DRMAs (Fig. 1). A full list of the included SR-DRMAs is presented in the supplementary file (Additional file 1: Table S2).

The 529 SR-DRMAs were published in 174 academic journals (number of publications per journal: 1 to 33). Among those, 410 (77.5%) were published in specialist journals and 119 (22.5%) in general journals; 353 (66.8%) were conducted by authors from the Asia-Pacific region, 129 (24.4%) from Europe, and 47 (8.9%) from North America. Most SR-DRMAs (75.0%) were published after 2014. The median number of authors was 6 [interquartile range (IQR): 4, 8]. The median number of databases searched was 2 (IQR: 2, 3), and 61 studies (11.5%) searched only 1 database. Regarding reporting guidelines, 204 (38.6%) SR-DRMAs used the MOOSE statement, 109 (20.6%) used the PRISMA statement, and 179 (33.8%) did not use any reporting guideline. In addition, of those 529 studies, 349 (66.0%) involved a methodologist, and 337 (66.0%) received financial support (Table 1). The majority of the SR-DRMAs focused on epidemiology (n = 525, 99.2%). The three most common disease outcomes were cancer (n = 260, 49.1%), cardiovascular diseases (n = 118, 22.3%), and diabetes (n = 45, 8.5%).

Reporting quality of included SR-DRMAs

Figure 2 presents the adherence of published SR-DRMAs to each reporting item. The overall score for reporting quality was 25.52 (standard error: 2.36; median 26, first to third quartile: 24 to 27). In summary, of the 33 items, 23 were highly adhered to by the SR-DRMAs. Ten items (10/33) were under-reported, 7 of which were in the methods domain. The details are as follows.

For the title and introduction, all three items were highly adhered to: identified the report as a systematic review (n = 521, adherence rate = 98.5%), described the rationale in the introduction (n = 519, 98.1%), and provided an explicit objective in the introduction (n = 526, 99.4%).

For the methods domain, 11 of the 18 items were highly adhered to by these SR-DRMAs, while 7 were under-reported. Highly adhered items: specified criteria for eligibility (n = 512, 96.8%), described database sources (n = 527, 99.6%), described the use of hand searching (n = 506, 95.7%), described method of data extraction (n = 440, 83.2%), described any variable definition and data assumption (n = 483, 91.3%), stated the principal summary measures (n = 443, 83.7%), stated the methods for confounding assessment (n = 498, 94.1%), described methods for combining results (n = 525, 99.2%), described methods for heterogeneity assessment (n = 517, 97.7%), stated the methods for publication bias (n = 506, 95.7%), described methods of additional analyses (n = 505, 95.5%). Under-reported items: indicated that a review protocol exists (n = 45, 8.5%), clarified the qualifications of searchers (n = 9, 1.7%), presented full electronic search strategy (n = 137, 25.9%), described any (or no) effort to include all available studies (n = 121, 22.9%), described methods for languages other than English (n = 145, 27.4%), stated the process for selecting studies (n = 107, 20.2%), described methods used for assessing risk of bias (n = 326, 61.6%).

For the results domain (7 items), all but 1 were highly adhered to. Highly adhered items: presented the screening process and eligible studies (n = 508, 96.0%), presented characteristics of included studies (n = 522, 98.7%), presented summarized dose-response relationship and confidence interval (n = 512, 96.8%), presented results of each study (n = 528, 99.8%), presented results of publication bias (n = 503, 95.1%), presented results of additional analysis (n = 514, 97.2%). Under-reported item: presented results of risk of bias (n = 295, 55.8%).

For discussion and funding information (5 items), 3 items were highly adhered to while 2 were under-reported. Highly adhered items: summarized the main findings (n = 519, 98.1%), discussed the general limitations (n = 503, 95.1%), provided general interpretation of the results (n = 460, 87.0%). Under-reported items: provided implications for future research (n = 329, 62.2%), and described sources of funding (n = 392, 74.1%).

Study characteristics associated with reporting quality

Figure 3 presents the distribution of overall reporting quality scores across the 529 studies. There was no obvious multicollinearity among the five variables. In the multivariable regression analysis, a larger number of authors [5 to 6 vs. 4 or fewer (regression coefficient = 0.78; 95% CI: 0.35, 1.20; P < 0.001)], more recent publication (regression coefficient = 0.38; 95% CI: 0.28, 0.47; P for trend < 0.001), use of a reporting guideline (regression coefficient = 0.98; 95% CI: 0.63, 1.32; P < 0.001), and involvement of a methodologist (regression coefficient = 0.86; 95% CI: 0.42, 1.32; P < 0.001) were statistically significantly associated with better reporting quality (Table 2). The sensitivity analysis showed similar results (Table 2).

The correlations of the quality scores among the four domains were small (range: 0.003 to 0.357). Therefore, the regression for each domain was conducted separately (Additional file 2). The results showed that number of authors (more authors), year of publication (more recent), use of a reporting guideline, and involvement of a methodologist were mainly associated with the reporting quality of the methods and results domains, and were not associated with the reporting of the title and introduction (Table 3).

Discussion

In this study, we found that adherence was generally good for about two-thirds of the reporting items of SR-DRMAs. Reporting on some items, however, needs to be improved, especially items in the methods domain. These include indication of a review protocol, clarification of the qualifications of searchers, statement of any effort to include all available studies, description of methods for languages other than English, presentation of the full electronic search strategy, statement of the process for selecting studies, description of methods used for assessing risk of bias, presentation of results of risk of bias, presentation of implications for future research, and statement of sources of funding. In particular, the study protocol and the qualifications of searchers were the two least reported items. The under-reporting of study protocols is partly because some journals have not required registration of systematic reviews. The failure to report the qualifications of searchers is probably because librarians were seldom involved in this type of meta-analysis. In addition, very few authors reported whether they had made any effort to obtain all available studies.

We also found that studies with more authors and with involvement of methodologists were associated with better reporting. Our further analysis suggested that this positive effect was mainly due to improvement in the methods domain. This highlights the importance of collaboration in the conduct and reporting of systematic reviews. In particular, SR-DRMAs are usually more sophisticated than traditional meta-analyses of pair-wise comparisons, and this methodological sophistication requires more careful planning and reporting of methodological details. Involving more authors with diverse backgrounds, particularly those with methodological expertise, would improve the quality of such studies. In the regression analysis, we also found that use of a reporting guideline was associated with better reporting quality, specifically in the methods, results, and conclusion domains. In addition, the trend test within the multiple regression showed that the quality of reporting has improved over the years. Here too, the improvement was mainly in the methods and results domains. This finding is supported by a previous survey of meta-analyses in vascular surgery [18]. These are encouraging signs for the scientific reporting of SR-DRMAs.

The adherence findings were somewhat similar to those of an earlier comprehensive survey of the reporting of SRs [19]. In that research, the authors found that less than 6% of the SRs provided a protocol, 38% specified the method for risk of bias assessment, and 30% presented the results of risk of bias. The adherence rates for these three items in the current study were 8.5%, 61.6%, and 55.8%, respectively. In addition, in both our survey of SR-DRMAs and the previous survey of all SRs, the items on title, introduction, eligibility criteria, database sources, summary measures, and limitations were generally well reported.

In a recent survey of meta-analyses, the authors reported that financial support was associated with better reporting [20]. Gagnier et al. [21] observed a positive association between reporting of funding source and overall reporting quality. In our study, we did not include funding information in the regression analysis, mainly because the item “reporting of financial information” was part of the PRISMA statement. Nevertheless, the reporting of financial information still needs to be improved, since about a quarter of the SR-DRMAs failed to provide this information; the issue was more serious (almost two-thirds) in the previous survey [19].

There were differences between our findings and those of earlier studies. One study [20] included all types of meta-analysis in the urological literature and categorized reporting quality as a binary outcome (superior vs. non-superior quality), but did not observe any association between number of authors and superior quality. Nagendrababu et al. [22], who included all types of meta-analysis in endodontics, also found no association between number of authors and reporting quality. Gagnier et al. focused on orthopaedic systematic reviews, and found no difference in reporting quality over publication years [21]. Adie et al. [23] summarized meta-analyses of surgical interventions, but did not find a significant difference in reporting quality with the involvement of a methodologist. In our study, we observed significant associations between reporting quality and number of authors, year of publication, and involvement of a methodologist. One possible explanation is the different “subject” of these studies: we focused only on dose-response meta-analysis, while the others considered different types of meta-analysis. Another reason may be that DRMA is more sophisticated than traditional meta-analysis, and researchers involved in this type of meta-analysis may have been more aware of systematic review methodology, including reporting.

Our study has several strengths. To the best of our knowledge, this is the first study to specifically assess the reporting of dose-response meta-analyses. We included nearly all published SR-DRMAs; thus, the findings are likely to be highly representative. We pre-specified a limited number of variables for exploring the association between study characteristics and reporting quality. In addition, we used a rigorous analytical approach to address the cluster effect in the regression analysis, and conducted sensitivity analyses, which showed that the findings were robust.

The study has a few limitations. First, the approach we used to measure quality of reporting did not consider the relative importance of items (all items were assumed to carry equal weight). Thus, the scoring scheme may not be optimal. However, there is no validated approach for assigning a weight to each item. The current approach may reflect the practical situation one faces when exploring the association between study characteristics and reporting. In addition, this approach has been widely used [15, 19, 20, 21, 22]. Second, our survey excluded SR-DRMAs that involved continuous outcomes. This decision was made mainly because very few SR-DRMAs used a continuous outcome. The findings may thus not be applicable to SR-DRMAs that reported continuous outcomes, and further investigation is warranted. Third, there was no existing reporting guideline specific to SR-DRMAs. The combination of PRISMA and MOOSE statements that we used to assess reporting quality may be insufficient to capture the “real” situation, especially for DRMAs without a systematic review.

Conclusions

In conclusion, based on the current evidence, the reporting of SR-DRMAs was generally good in some domains (introduction, results) but suboptimal in the methods domain. However, there is a risk that some aspects of reporting for DRMAs were not fully covered, and this requires further investigation. Further efforts are needed to improve reporting, particularly on several items such as the study protocol and the qualifications of searchers. SR-DRMAs that involved more authors and methodologists, used a reporting guideline, and were published more recently tended to have better reporting quality. Methodologists need to develop a reporting guideline specific to DRMA.

References

Berlin JA, Longnecker MP, Greenland S. Meta-analysis of epidemiologic dose-response data. Epidemiology. 1993;4:218–28.

Greenland S, Longnecker MP. Methods for trend estimation from summarized dose-response data, with applications to meta-analysis. Am J Epidemiol. 1992;135:1301–9.

Orsini N, Li R, Wolk A, Khudyakov P, Spiegelman D. Meta-analysis for linear and nonlinear dose-response relations: examples, an evaluation of approximations, and software. Am J Epidemiol. 2012;175:66–73.

Xu C, Doi SA. The robust-error meta-regression method for dose-response meta-analysis. Int J Evid Based Healthc. 2018;16(3):138–44.

Ioannidis JP, Greenland S, Hlatky MA, et al. Increasing value and reducing waste in research design, conduct, and analysis. Lancet. 2014;383(9912):166–75.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

Page MJ, Shamseer L, Altman DG, Tetzlaff J, Sampson M, et al. Epidemiology and reporting characteristics of systematic reviews of biomedical research: a cross-sectional study. PLoS Med. 2016;13(5):e1002028.

Liu TZ, Xu C, Rota M, Cai H, Zhang C, et al. Sleep duration and risk of all-cause mortality: a flexible, non-linear, meta-regression of 40 prospective cohort studies. Sleep Med Rev. 2017;32:28–36.

Cumberbatch MG, Rota M, Catto JW, La Vecchia C. The role of tobacco smoke in bladder and kidney carcinogenesis: a comparison of exposures and meta-analysis of incidence and mortality risks. Eur Urol. 2016;70(3):458–66.

Xu C, Doi SA, Zhang C, Sun X, Chen H, et al. Proposed reporting guidelines for dose– response meta-analysis (Chinese edition). Chin J Evid Based Med. 2016;16:1221–6.

Chandler J, Higgins JPT, Deeks JJ, Davenport C, Clarke MJ. Chapter 1: Introduction. In: Higgins JPT, Churchill R, Chandler J, Cumpston MS (editors), Cochrane Handbook for Systematic Reviews of Interventions version 5.2.0 (updated June 2017), Cochrane, 2017. Available from www.training.cochrane.org/handbook. Accessed 7 Oct 2018.

Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis of observational studies in epidemiology (MOOSE) group. JAMA. 2000;283(15):2008–12.

PL Jia L, Tang JJY, Lee AH, Zhou X, et al. Risk of bias and methodological issues in randomized controlled trials of acupuncture for knee osteoarthritis: a cross-sectional study. BMJ Open. 2018;8(3):e019847.

Pieper D, Jacobs A, Weikert B, Fishta A, Wegewitz U. Inter-rater reliability of AMSTAR is dependent on the pair of reviewers. BMC Med Res Methodol. 2017;17:98.

Moher D, Pham B, Jones AL, Cook DJ, Jadad AR, et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet. 1998;352(9128):609–13.

Ioannidis JP, Lau J. Completeness of safety reporting in randomized trials: an evaluation of 7 medical areas. JAMA. 2001;285(4):437–43.

Tuğran E, Kocak M, Mirtagioğlu H, Yiğit S, Mendes M. A simulation based comparison of correlation coefficients with regard to type I error rate and power. J Data Anal Inform Proc. 2015;3:87–101.

Tan WK, Wigley J, Shantikumar S. The reporting quality of systematic reviews and meta-analyses in vascular surgery needs improvement: a systematic review. Int J Surg. 2014;12(12):1262–5.

Pussegoda K, Turner L, Garritty C, Mayhew A, Skidmore B, Stevens A, et al. Systematic review adherence to methodological or reporting quality. Syst Rev. 2017;6(1):131.

Xia LL, Xu J, Guzzo TJ. Reporting and methodological quality of meta-analyses in urological literature. Peer J. 2017;5:e3129.

Gagnier JJ, Kellam PJ. Reporting and methodological quality of systematic reviews in the orthopaedic literature. J Bone Joint Surg Am. 2013;95(11):e771–7.

Nagendrababu V, Pulikkotil SJ, Sultan OS. Methodological and reporting quality of systematic reviews and meta-analyses in endodontics. J Endod. 2018. https://doi.org/10.1016/j.joen.2018.02.013.

Adie S, Ma D, Harris IA, Naylor JM, Craig JC. Quality of conduct and reporting of meta-analyses of surgical interventions. Ann Surg. 2015;261(4):685–94.

Acknowledgments

I (X.C) would like to express my deep appreciation to Prof. Suhail A.R Doi (Qatar University) for his guidance on synthesis methods for dose-response data.

Funding

S.X was supported by Natural Science Foundation of China (No.71573183) and X.C was supported by Doctoral Scholarship of Sichuan University.

Availability of data and materials

The dataset supporting the conclusions of this article is included within the article and its additional files.

Author information

Contributions

LTZ and XC conceived and designed the study; XC drafted the manuscript, conducted the literature search, and analyzed and interpreted the data; SX critically revised the draft manuscript; XC and LY contributed to the literature screening and the quality assessment; JP-L, XC, and LL conducted the data collection; CLL provided statistical suggestions and conducted part of the data analysis. All authors provided additional comments and revised the manuscript. All authors have read and approved the manuscript for publication.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interest.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Search strategy, modified checklist for quality assessment, and list of included DRMAs. (DOCX 68 kb)

Additional file 2:

The raw data we used for the regression analysis. This contains 6 variables (e.g. year of publication, journal), the overall total reporting score, and the total score of each domain. (XLSX 40 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Xu, C., Liu, TZ., Jia, PL. et al. Improving the quality of reporting of systematic reviews of dose-response meta-analyses: a cross-sectional survey. BMC Med Res Methodol 18, 157 (2018). https://doi.org/10.1186/s12874-018-0623-6

DOI: https://doi.org/10.1186/s12874-018-0623-6