Abstract

Background

Prospective study protocols and registrations can play a significant role in reducing incomplete or selective reporting of primary biomedical research, because they are pre-specified blueprints that are available for evaluation of, and comparison with, full reports. However, inconsistencies between protocols or registrations and full reports have been frequently documented. In this systematic review, which forms part of our series on the state of reporting of primary biomedical research, we aimed to survey the existing evidence of inconsistencies between protocols or registrations (i.e., what was planned to be done and/or what was actually done) and full reports (i.e., what was reported in the literature), based on findings from systematic reviews and surveys in the literature.

Methods

Electronic databases, including CINAHL, MEDLINE, Web of Science, and EMBASE, were searched to identify eligible surveys and systematic reviews. Our primary outcome was the level of inconsistency (expressed as a percentage, with higher percentages indicating greater inconsistency) between protocols or registrations and full reports. We summarized the findings from the included systematic reviews and surveys qualitatively.

Results

We included 37 studies (33 surveys and 4 systematic reviews) in our analyses. Most studies (n = 36) compared protocols or registrations with full reports of clinical trials, while a single survey covered both clinical trials and observational research. High levels of inconsistency were found in outcome reporting (ranging from 14% to 100%), subgroup reporting (from 12% to 100%), statistical analyses (from 9% to 47%), and other measure comparisons. Factors such as outcomes with significant results, sponsorship, type of outcome and disease speciality were reported to be significantly associated with inconsistent reporting.

Conclusions

We found that inconsistency between protocols or registrations and full reports of primary biomedical research is frequent and prevalent, and that reporting in this respect remains suboptimal. We also identified methodological issues such as the need for consensus on measuring inconsistency across sources for trial reports, and for more studies evaluating transparency and reproducibility in reporting all aspects of study design and analysis. A joint effort involving authors, journals, sponsors, regulators and research ethics committees is required to solve this problem.


Background

Incomplete or selective reporting in publications is a serious threat to the validity of findings from primary biomedical research, because inadequately reported findings may be biased, which in turn impairs evidence-based decision-making [1, 2]. Prospective study protocols and registrations can play a significant role in reducing incomplete or selective reporting, because they are pre-specified blueprints that are available for evaluation of, and comparison with, full reports [3, 4]. For instance, in 2004 the International Committee of Medical Journal Editors (ICMJE) stated that, as a condition of publication, all trials must be registered in a trial registry before participant enrollment [5], because registry records may include information on what was planned or what was done during a study. Moreover, a recent study reported that primary outcomes were more consistently reported when a trial had been prospectively registered [6]. With the wide acceptance of trial registration, many journals began adopting editorial policies for publishing protocols. However, when protocols or registrations were compared with full reports, inconsistencies were found to be strikingly frequent with respect to outcome reporting, subgroup selection, sample size, and statistical analysis, among others [7,8,9,10,11]. In this systematic review, which forms part of our series on the state of reporting of primary biomedical research, our objectives were to map the existing evidence of inconsistency between protocols or registrations (i.e., what was planned to be done and/or what was actually done) and full reports (i.e., what was reported in the literature), and to provide recommendations to mitigate such inconsistent reporting, based on findings from systematic reviews and surveys in the literature [12].

Methods

We followed the guidance of the Joanna Briggs Institute [13] and the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [14] to conduct and report our review. Details of the methods have been published in our protocol [12].

Eligibility and search strategy

In brief, we included systematic reviews or surveys that focused on inconsistent reporting when comparing protocols or registrations with full reports. A study protocol is defined as the original research plan with a comprehensive description of study participants or subjects, outcomes, objective(s), design, methodology, statistical considerations and other related information, which cannot be influenced by the subsequent study results. In this review, we defined full reports as publications that reported findings on any of the key study elements (participants or subjects, interventions or exposures, controls, outcomes, time frames, study designs, analyses, result interpretations and conclusions, and other study-related information) and that were published after completion of the studies. Full reports may therefore include full-length articles, research letters, or other published reports without peer review. An eligible systematic review was defined as a study that compared protocols or registrations with full reports and that had predefined objectives, specified eligibility criteria, at least one database searched, data extraction and analyses, and at least one included study. All surveys that included primary studies and compared protocols or registrations with full reports were eligible for inclusion.

Exclusion criteria were: 1) the study was not a systematic review or survey, 2) the study objective did not include comparison of protocols or registrations with full reports, 3) the study could not provide data on such comparisons, 4) the study did not focus on primary biomedical studies, or 5) the study was a duplicate. The search was conducted by one reviewer (GL) with the help of an experienced librarian. It covered CINAHL, EMBASE, Web of Science, and MEDLINE from 1996 to 30 September 2016 and was restricted to studies in English. Two reviewers (YJ and IN) independently screened the retrieved records. The same two reviewers also hand-searched the reference lists of the included studies in duplicate, to avoid omitting potentially eligible systematic reviews and surveys. Agreement between the two reviewers was assessed with the kappa statistic [15].
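
The agreement statistic mentioned above can be illustrated with a short sketch. It computes Cohen's kappa for two reviewers' include/exclude decisions on the same records, together with an approximate 95% confidence interval. The function name `cohens_kappa`, the toy screening decisions, and the simple large-sample standard-error approximation are assumptions for illustration only; the review does not state how its confidence interval was computed.

```python
import math
from collections import Counter


def cohens_kappa(rater_a, rater_b, z=1.96):
    """Cohen's kappa for two raters who labelled the same items.

    Returns (kappa, (lower, upper)). The interval uses a simple large-sample
    standard-error approximation and is clipped to [-1, 1]; this is an
    assumption, not necessarily the method used in the review.
    """
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters agree.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (p_o - p_e) / (1 - p_e)
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    return kappa, (max(-1.0, kappa - z * se), min(1.0, kappa + z * se))


# Hypothetical screening decisions for ten records (not data from the review).
reviewer_1 = ["include"] * 4 + ["exclude"] * 6
reviewer_2 = ["include"] * 3 + ["exclude", "include"] + ["exclude"] * 5
kappa, ci = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}, 95% CI {ci[0]:.2f} to {ci[1]:.2f}")
```

With only ten records the interval is very wide; for similar agreement, the standard error shrinks roughly in proportion to 1/√n as more records are screened.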

Outcome and data collection

Our primary outcome was the percentage of primary studies in the included systematic reviews and surveys for which an inconsistency was observed between the protocol or registration and the full report, with higher percentages indicating greater inconsistency [12]. Inconsistencies between protocols or registrations and full reports were recorded with respect to study participants or subjects, interventions or exposures, controls, outcomes, time frames, study designs, analyses, result interpretations and conclusions, and other study-related information. A secondary outcome was the set of factors reported to be significantly associated with inconsistency between protocols or registrations and full reports.
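
As a minimal sketch of the primary outcome defined above, the percentage for one included review or survey is the number of its primary studies with at least one inconsistency divided by the number of primary studies compared. Rounding half up to a whole percentage is an assumption, chosen because it reproduces the fractions reported in the Results (for example, 22/155 as 14% and 132/515 as 26%); the function name `inconsistency_percentage` is hypothetical.

```python
import math
from fractions import Fraction


def inconsistency_percentage(n_inconsistent, n_compared):
    """Percentage of primary studies with an inconsistency, rounded half up.

    Exact Fraction arithmetic avoids floating-point surprises at .5 boundaries.
    """
    if n_compared <= 0 or not 0 <= n_inconsistent <= n_compared:
        raise ValueError("need 0 <= n_inconsistent <= n_compared and n_compared > 0")
    return math.floor(Fraction(100 * n_inconsistent, n_compared) + Fraction(1, 2))


print(inconsistency_percentage(22, 155))  # → 14
```

Python's built-in `round()` rounds half to even, so it would report 12.5% as 12%; the explicit half-up rule avoids that.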

Two reviewers (IN and LA) independently extracted data from the included studies. Data collected included the general characteristics of the systematic reviews or surveys (author, year of publication, journal, study area, data sources, search frame, numbers and study designs of included primary studies for each systematic review or survey, measure of comparison, country and sample size of primary studies, and funding information), key findings on inconsistent reporting, authors’ conclusions, and the factors reported to be significantly related to inconsistent reporting. We also recorded the terms used in the included systematic reviews and surveys to describe the reporting problem, and how often each was used.

Quality assessment and data analyses

The quality of the included systematic reviews was assessed using AMSTAR (a measurement tool to assess systematic reviews) [16]; no comparable assessment tool was available for surveys. We excluded two AMSTAR items (item 9, “Were the methods used to combine the findings of studies appropriate?”, and item 10, “Was the likelihood of publication bias assessed?”) because they were not relevant to the included systematic reviews.
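
The modified AMSTAR score can be sketched as a simple count. AMSTAR has 11 items; dropping items 9 and 10 leaves a maximum of 9. The sketch assumes that only a "yes" answer earns a point and that "no", "can't answer" and "not applicable" earn none, a common convention that the review does not spell out; the function name and answer labels are hypothetical.

```python
AMSTAR_ITEMS = range(1, 12)          # AMSTAR (2007) has 11 items
EXCLUDED_ITEMS = frozenset({9, 10})  # not relevant to the included reviews


def modified_amstar_score(answers):
    """Return (score, maximum) for a dict mapping item number -> answer string.

    Assumption: one point per "yes"; any other answer, or a missing item, scores 0.
    """
    scored_items = [item for item in AMSTAR_ITEMS if item not in EXCLUDED_ITEMS]
    score = sum(1 for item in scored_items if answers.get(item) == "yes")
    return score, len(scored_items)


# Hypothetical review failing items 2, 5, 7 and 8 (the pattern described in the
# Results for one review); the "yes" answers to items 9 and 10 are ignored.
answers = {item: "yes" for item in AMSTAR_ITEMS}
answers.update({2: "no", 5: "no", 7: "no", 8: "no"})
print(modified_amstar_score(answers))  # → (5, 9)
```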

Inconsistency was analysed descriptively using medians and interquartile ranges (IQRs). The frequencies of the terms used to describe the inconsistent reporting problem, as extracted from the included systematic reviews and surveys, were calculated and displayed as word clouds. The word clouds were generated with the online program Wordle (www.wordle.net) from the terms and their frequencies; the relative size of each term corresponded to its frequency of use. We summarized findings from the included systematic reviews and surveys qualitatively. No pooled analyses were performed in this review.
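
The descriptive summary above (median and IQR of study-level inconsistency percentages) can be sketched with Python's standard `statistics` module. Quartiles can be computed in several ways and the review does not say which convention it used; the sketch assumes the "inclusive" method of `statistics.quantiles`. The function name and the input percentages are invented for illustration.

```python
import statistics


def median_iqr(percentages):
    """Median and interquartile range (Q1, Q3) of inconsistency percentages.

    Quartiles use statistics.quantiles(method="inclusive"), an assumed convention.
    """
    if len(percentages) < 2:
        raise ValueError("need at least two values to estimate quartiles")
    q1, _, q3 = statistics.quantiles(percentages, n=4, method="inclusive")
    return statistics.median(percentages), (q1, q3)


# Hypothetical study-level inconsistency levels (%), not taken from Table 3.
levels = [12, 25, 33, 40, 58, 71, 100]
median, (q1, q3) = median_iqr(levels)
print(f"median {median}% (IQR: {q1}%-{q3}%)")
```

The default `method="exclusive"` can give different quartiles for the same data, which is one reason IQRs reported by different studies are not always directly comparable.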

Results

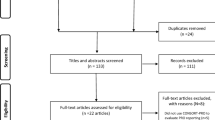

A total of 9123 records were retrieved. After removal of duplicates, 8080 records were screened by title and abstract, and 108 studies were retrieved for full-text evaluation (kappa = 0.81, 95% confidence interval: 0.75–0.86). We included 37 studies (33 surveys and 4 systematic reviews) in the analysis [7,8,9,10,11, 17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48]. Fig. 1 shows the study inclusion process.
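
The record counts above imply how many records left the flow at each stage. A minimal sketch of that bookkeeping follows, assuming no records were added between stages (for example from hand-searching reference lists); if some were, the differences are net figures rather than exclusion counts. It does not reproduce reasons for exclusion, and the function name and stage labels are hypothetical.

```python
def flow_counts(retrieved, after_dedup, full_text, included):
    """Records removed at each stage of a PRISMA-style selection flow.

    Assumes no records are added between stages, so each count is <= the previous one.
    """
    stages = [retrieved, after_dedup, full_text, included]
    if any(later > earlier for earlier, later in zip(stages, stages[1:])):
        raise ValueError("counts must not increase from one stage to the next")
    return {
        "duplicates removed": retrieved - after_dedup,
        "excluded at title/abstract screening": after_dedup - full_text,
        "excluded at full-text evaluation": full_text - included,
    }


print(flow_counts(retrieved=9123, after_dedup=8080, full_text=108, included=37))
```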

Table 1 displays the characteristics of the included studies, which were published between 2002 and 2016. About one third of the studies (n = 13) focused on composite areas of biomedicine, five on surgery or orthopaedics, four on oncology, and four on pharmacotherapy. Fifteen studies collected data from registry entries, the most commonly used registries being ClinicalTrials.gov (n = 13), ISRCTN (the International Standard Randomised Controlled Trial Number Registry; n = 8), and WHO ICTRP (World Health Organization International Clinical Trials Registry Platform; n = 7). Eight studies collected protocol data from grant or ethics applications, two from FDA (Food and Drug Administration) reviews, two from internal company documents, one from a journal’s website, and nine from other sources. Regarding the data sources for full reports, most studies (n = 35) searched databases and/or journal websites, while one study collected full reports by searching databases and contacting investigators [17] and another by contacting the lead investigators only [20]. Most systematic reviews or surveys (n = 36) compared protocols or registrations with full reports of clinical trials; only one included survey investigated inconsistent reporting in both clinical trials and observational research [18]. Measures of comparison between protocols or registrations and full reports included outcome reporting, subgroup reporting, statistical analyses, sample size, participant inclusion criteria, randomization, and funding, among others (Table 1). Most primary studies were conducted in North America and Europe. Among the included systematic reviews and surveys that reported the sample sizes of their primary studies, the median sample size ranged from 16 to 463.
Fifteen studies had received academic funding to conduct the systematic review or survey, and two had received litigation-related consultant fees.

We assessed the quality of the four systematic reviews using AMSTAR [23, 30, 42, 47]. None of them had assessed the quality of their included primary studies, so none received points for items 7 (“Was the scientific quality of the included studies assessed and documented?”) and 8 (“Was the scientific quality of the included studies used appropriately in formulating conclusions?”). One review scored 5 (out of 9) on AMSTAR because it did not provide information on duplicate data collection (item 2) or list the included and excluded primary studies (item 5) [23]. Another systematic review gave no indication of a grey literature search (item 4) [42], resulting in a score of 6 (out of 9).

Among all 37 included studies, the terms most frequently used to describe the reporting problem were selective reporting (n = 35, 95%), discrepancy (n = 31, 84%), inconsistency (n = 27, 73%), biased reporting (n = 15, 41%), and incomplete reporting (n = 13, 35%). Fig. 2 shows word clouds of all the terms used in the included systematic reviews and surveys.
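
The term counts and percentages above, and the word-cloud sizing described in the Methods, can be sketched as follows. Each study contributes at most once per term, and percentages are taken over the number of included studies (37 here), rounded half up. The linear font-size scaling is a hypothetical rule for illustration; Wordle's actual sizing algorithm is not described in the review. Function names and the toy studies are hypothetical.

```python
from collections import Counter


def percent(n, total):
    """Whole-number percentage, rounded half up."""
    return int(100 * n / total + 0.5)


def term_frequencies(terms_per_study):
    """Map each term to (number of studies using it, percentage of studies)."""
    total = len(terms_per_study)
    # set() ensures a term repeated within one study is counted once for that study.
    counts = Counter(term for terms in terms_per_study for term in set(terms))
    return {term: (n, percent(n, total)) for term, n in counts.most_common()}


def font_sizes(freqs, min_pt=10, max_pt=60):
    """Scale study counts linearly to font sizes (hypothetical sizing rule)."""
    counts = [n for n, _ in freqs.values()]
    low, high = min(counts), max(counts)
    span = (high - low) or 1  # avoid dividing by zero when all counts are equal
    return {term: min_pt + (max_pt - min_pt) * (n - low) / span
            for term, (n, _) in freqs.items()}


# Hypothetical terms extracted from four studies.
studies = [
    ["selective reporting", "discrepancy", "selective reporting"],
    ["selective reporting", "inconsistency"],
    ["selective reporting", "discrepancy"],
    ["selective reporting", "biased reporting"],
]
freqs = term_frequencies(studies)
print(freqs)
print(font_sizes(freqs))
print(percent(35, 37))  # → 95
```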

Table 2 presents the key findings and authors’ conclusions on inconsistency, grouped by the main measure of comparison between protocols or registrations and full reports. Table 3 presents detailed information on what the 37 included studies reported regarding inconsistency between protocols or registrations and full reports. Seventeen studies focused on outcome reporting problems, including changed, omitted (unreported), newly introduced, incompletely reported, and selectively reported outcomes. The median inconsistency in outcome reporting was 54% (IQR: 29%–72%), ranging from 14% (22/155) to 100% (1/1 and 69/69). Six studies found that most inconsistencies (median 71%, IQR: 57%–83%) favoured a statistically significant result in full reports [21, 24, 27, 38, 43, 45]. For subgroup reporting, inconsistency between protocols or registrations and full reports ranged from 38% (196/515) to 100% (6/6), with post hoc analyses introduced (from 26% (132/515) to 76% (143/189)) and pre-specified analyses omitted in full reports (from 12% (64/515) to 69% (103/149)). Inconsistencies in statistical analyses were also observed, including in the definition of non-inferiority margins, the choice of analysis principle (intention-to-treat, per-protocol, as-treated), and model adjustment, with inconsistency levels ranging from 9% (5/54) to 67% (2/3). The remaining 13 studies reported frequent inconsistencies in multiple measure comparisons, defined as at least two main measures used for comparison between protocols or registrations and full reports (Tables 2 and 3). For instance, inconsistencies were observed in sample sizes (from 27% (14/51) to 60% (34/56)), inclusion or exclusion criteria (from 12% (19/153) to 45% (9/20)), and conclusions (9%, 9/99).

As shown in Table 4, factors reported to be significantly related to inconsistent reporting included outcomes with statistically significant results, study sponsorship, type of outcome (efficacy or harm outcome) and disease specialty. Two studies reported that primary outcomes with significant results had higher odds of being completely reported in full reports (odds ratios (ORs) ranging from 2.5 to 4.7) [7, 19], whereas one study found that outcomes with significant results were associated with inconsistent reporting in full reports (OR = 1.38) [34]. Other factors related to inconsistent reporting included investigator-sponsored trials, efficacy outcomes, and the specialties of cardiology and infectious diseases (Table 4).
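
For readers less familiar with odds ratios, the sketch below computes an odds ratio and a Woolf (log-scale) 95% confidence interval from a single 2×2 table of outcomes classified by statistical significance and completeness of reporting. The counts and the function name are invented, and the included surveys may have used different or more elaborate estimators, so this is illustrative only.

```python
import math


def odds_ratio(a, b, c, d, z=1.96):
    """Odds ratio for a 2x2 table with a Woolf (log-scale) confidence interval.

    a: significant outcomes fully reported     b: significant outcomes not fully reported
    c: nonsignificant outcomes fully reported  d: nonsignificant outcomes not fully reported
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive for this simple estimator")
    or_ = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts (Woolf's method).
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, (lower, upper)


# Hypothetical counts, not from any included study.
or_, (lower, upper) = odds_ratio(a=40, b=10, c=20, d=20)
print(f"OR = {or_:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Here the odds of full reporting are 40/10 = 4 for significant outcomes and 20/20 = 1 for nonsignificant ones, so OR = 4; an OR above 1 corresponds to the direction reported in [7, 19].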

Discussion

We have mapped the evidence on inconsistent reporting between protocols or registrations (i.e., what was planned to be done and/or what was actually done) and full reports (i.e., what was reported in the literature) in primary biomedical research, based on findings from systematic reviews and surveys in the literature. High levels of inconsistency were found across various areas of biomedicine and in different study aspects, including outcome reporting, subgroup reporting, statistical analyses, and others. Factors such as outcomes with significant results, sponsorship, type of outcome and disease speciality were reported to be significantly related to inconsistent reporting.

The ICMJE statement requiring prospective registration of all trials was issued in 2004 [5]. Likewise, the SPIRIT (Standard Protocol Items: Recommendations for Interventional Trials) statement aims to promote transparent reporting and improve the quality of protocols [49]. However, inconsistent reporting between protocols or registrations and full reports remains a serious problem. In this review, all the included studies showed that inconsistency between protocols or registrations and full reports was common and highly prevalent, leaving reporting suboptimal. Inconsistent reporting may undermine the reliability and validity of the published evidence, potentially leading to biased evidence syntheses [29, 50, 51] and inaccurate decision-making, especially given that most inconsistencies were found to favour statistically significant results (Table 2). One study examined full reports in gastroenterology and hepatology journals published from 2009 to 2012 and concluded that the inconsistent reporting problem had improved; however, as the authors acknowledged, this conclusion may have been affected by sampling bias [26]. More evidence on trends in inconsistent reporting, and more efforts to mitigate it, are needed in the primary biomedical research community.

We found that most of the evidence for inconsistent reporting between protocols or registrations and full reports came from assessments of outcome reporting. It is not uncommon for authors to change, omit, incompletely or selectively report, or introduce new outcomes in full reports. The main reason suggested is that authors selectively report outcomes to show statistically significant findings, catering to journals' publication preferences [52,53,54]. Accordingly, two included surveys observed that outcomes with significant results were more likely to be fully reported than outcomes with nonsignificant results [7, 19]. By contrast, another study found that outcomes with significant results were associated with inconsistent reporting [34]. These conflicting findings may be due to different inclusion criteria (primary outcomes [7, 19] vs. all outcomes [34]) and different definitions of inconsistent reporting (primary outcomes changed, introduced, or omitted [7, 19] vs. addition, omission, non-specification, or reclassification of primary and secondary outcomes [34]). More research is therefore needed to clarify the relationship between outcomes with significant results and inconsistent reporting. Similarly, the other factors (study sponsorship, type of outcome and disease speciality) should be interpreted with caution, because their associations with inconsistent reporting were observed in only one survey (Table 4).

We identified several methodological issues in the included studies. Some studies used multiple sources to locate protocol or registration documents and full reports. However, we could not examine heterogeneity in the sources of protocols or registrations and full reports used for comparisons, because the sources varied substantially and some were not publicly accessible. Furthermore, although registrations are publicly available, they usually contain incomplete study information [55]. Protocols can provide more transparent and comprehensive details, but they are often not publicly accessible. This is a major limitation that makes it harder to reproduce the findings and conclusions of studies comparing protocols or registrations with full reports. Likewise, the definitions of inconsistency and the measures of its level were not fully and explicitly described in the included studies, which may affect the reproducibility and validation of their evaluations. This challenge is exacerbated by the lack of detailed and transparent reporting of data collection methods in some studies. For example, some surveys obtained full reports only by contacting authors, rather than by systematically searching databases. Such heterogeneity and disagreement across data sources could affect the statistical significance, effect sizes, interpretations, and conclusions of trial results and of subsequent meta-analyses [56]. In addition, no explanations were provided for the inconsistencies found between documents. One study conducted telephone interviews with trialists whose trials had been identified as inconsistently reported [57], and found that most trialists were not aware of the implications of inconsistent reporting in full trial reports for the evidence base.
Thus, it would be worthwhile to help researchers recognize the importance of consistent reporting, for example by making a list of trial modifications a journal submission requirement and by offering training sessions built around scenarios in which inconsistent reporting could lead to different conclusions. Taken together, these issues highlight the need for appropriate standards for, and consensus on, conducting studies that compare the reporting of key trial aspects across documents, so as to enhance the reproducibility of such comparison studies.

Several systematic reviews have assessed cohort studies that compared protocols or registrations with full reports [52, 53, 58, 59]. Three of them focused on either outcome reporting [52, 53] or statistical analysis reporting [58], and therefore did not summarize the full range of inconsistencies between protocols or registrations and full reports in the primary research literature. One Cochrane review published in 2011 included 16 studies and assessed all aspects of inconsistency in full reports [59]; our current review included more up-to-date studies and thus provides more information for the biomedical community. Moreover, whereas all these reviews restricted their inclusion to clinical trials [52, 53, 58, 59], our review aimed to include all biomedical areas and to map the existing evidence across primary biomedical research. Furthermore, our study identified several methodological issues in the included systematic reviews and surveys regarding design, conduct and reproducibility, which could help make future comparison studies on this topic more transparent and standardized.

Given the high prevalence of inconsistent reporting highlighted in this review, authors, journals, sponsors, regulators and research ethics committees need to work to reverse it. For instance, authors are expected to fully explain any necessary modifications made to the protocol or registration, while journal staff and reviewers should consult protocols or registrations for rigorous scrutiny during peer review. Moreover, investigators who make their protocols, full reports, and data publicly available should be rewarded, because this practice can mitigate inconsistent reporting and increase the scientific value of research [54]. For example, institutions and funders might consider using performance metrics that give credit or promotion to investigators who share and disseminate their research publicly [54]. The impact of the ICMJE and SPIRIT statements on inconsistent reporting remains largely unexplored because evidence is sparse. However, such standards for protocol and registration reporting should be strictly adopted across all biomedical areas, because they provide a basis for easy evaluation of, and comparison with, full reports. For example, some studies found that prospective trial registrations were inadequate and incomplete [24, 25, 32, 35, 43], making comparisons between registrations and full reports unreliable or impossible. The extent of inconsistent reporting therefore remains largely unknown for trials with inadequate or incomplete registrations, which has an unclear effect on our findings. On the other hand, two studies showed that the methodological quality described in full reports was poor and did not reflect the higher quality documented in the protocols [30, 48].
Therefore, to improve reporting quality and reduce inconsistent reporting, full reports should rigorously follow reporting guidelines such as CONSORT (Consolidated Standards of Reporting Trials) for clinical trials, ARRIVE (Animal Research: Reporting In Vivo Experiments) for animal studies, and STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) for observational studies. For instance, one study comparing the quality of trial reporting in 2006 between CONSORT-endorsing and non-endorsing journals found significantly better reporting quality for trials published in CONSORT-endorsing journals, especially for trial registration (risk ratio = 5.33; 95% confidence interval: 2.82 to 10.08) [60]. In addition, guidance and/or checklists are needed to help authors, editorial staff, reviewers, sponsors, regulators and research ethics committees assess inconsistency between protocols or registrations and full reports easily and promptly.
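
The risk ratio quoted above compares the proportion of trials meeting a reporting item in two groups of journals. A minimal sketch with invented counts, using the common log-scale (Katz) confidence interval, is shown below; the cited study [60] may have used a different estimator, and the function name `risk_ratio` is hypothetical.

```python
import math


def risk_ratio(events_1, total_1, events_2, total_2, z=1.96):
    """Risk ratio of group 1 vs group 2 with a log-scale (Katz) confidence interval."""
    if not (0 < events_1 <= total_1 and 0 < events_2 <= total_2):
        raise ValueError("need 0 < events <= total in both groups")
    rr = (events_1 / total_1) / (events_2 / total_2)
    # Standard error of log(RR) for two independent binomial proportions.
    se_log = math.sqrt(1 / events_1 - 1 / total_1 + 1 / events_2 - 1 / total_2)
    return rr, (math.exp(math.log(rr) - z * se_log), math.exp(math.log(rr) + z * se_log))


# Hypothetical: 30/60 trials in endorsing journals vs 10/60 in non-endorsing
# journals report a given item (invented numbers, not those of [60]).
rr, (lower, upper) = risk_ratio(30, 60, 10, 60)
print(f"RR = {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```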

This review has some limitations. We restricted our search to English-language publications, which may limit the generalizability of our findings to studies published in other languages. We did not search the grey literature for unpublished systematic reviews or surveys, and may therefore have missed data from studies that were in progress or not yet published. Only one included study explored non-trial research (Table 1); therefore, inconsistent reporting in non-trial research remains largely unknown. A possible explanation is that, compared with trials, non-trial or observational studies receive less scrutiny: their registration is not required, and there is less emphasis on publishing their protocols. Finally, we could not evaluate the quality of the surveys because no quality assessment guidance was available; since most included studies were surveys, this weakens the evidence presented in our review.

Conclusion

In this systematic review comparing protocols or registrations with full reports, we highlight that inconsistent reporting across different study aspects is frequent and prevalent in primary biomedical research, and that reporting remains suboptimal, based on findings from systematic reviews and surveys in the literature. We also identify methodological issues such as the need for consensus on measuring inconsistency across sources for trial reports, and for more studies evaluating transparency and reproducibility in reporting all aspects of study design and analysis. Efforts from authors, journals, sponsors, regulators and research ethics committees are urgently required to reverse the inconsistent reporting problem.

Abbreviations

- AMSTAR: a measurement tool to assess systematic reviews
- ARRIVE: Animal Research: Reporting In Vivo Experiments
- CONSORT: Consolidated Standards of Reporting Trials
- FDA: Food and Drug Administration
- ICMJE: International Committee of Medical Journal Editors
- IQR: Interquartile range
- ISRCTN: International Standard Randomised Controlled Trial Number
- OR: Odds ratio
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- SPIRIT: Standard Protocol Items: Recommendations for Interventional Trials
- STROBE: Strengthening the Reporting of Observational Studies in Epidemiology
- WHO ICTRP: World Health Organization International Clinical Trials Registry Platform

References

Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, Michie S, Moher D, Wager E. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–76.

Dickersin K, Chalmers I. Recognizing, investigating and dealing with incomplete and biased reporting of clinical research: from Francis Bacon to the WHO. J R Soc Med. 2011;104(12):532–8.

Chan AW. Bias, spin, and misreporting: time for full access to trial protocols and results. PLoS Med. 2008;5(11):e230.

Ghersi D, Pang T. From Mexico to Mali: four years in the history of clinical trial registration. J Evid Based Med. 2009;2(1):1–7.

De Angelis C, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, Kotzin S, Laine C, Marusic A, Overbeke AJ, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. CMAJ. 2004;171(6):606–7.

Chan AW, Pello A, Kitchen J, Axentiev A, Virtanen JI, Liu A, Hemminki E. Association of trial registration with reporting of primary outcomes in protocols and publications. JAMA. 2017;318(17):1709–11.

Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291(20):2457–65.

Chan AW, Hrobjartsson A, Jorgensen KJ, Gotzsche PC, Altman DG. Discrepancies in sample size calculations and data analyses reported in randomised trials: comparison of publications with protocols. BMJ. 2008;337:a2299.

Kasenda B, Schandelmaier S, Sun X, von Elm E, You J, Blumle A, Tomonaga Y, Saccilotto R, Amstutz A, Bengough T, et al. Subgroup analyses in randomised controlled trials: cohort study on trial protocols and journal publications. BMJ. 2014;349:g4539.

Vedula SS, Li T, Dickersin K. Differences in reporting of analyses in internal company documents versus published trial reports: comparisons in industry-sponsored trials in off-label uses of gabapentin. PLoS Med. 2013;10(1):e1001378.

Dekkers OM, Cevallos M, Buhrer J, Poncet A, Ackermann Rau S, Perneger TV, Egger M. Comparison of noninferiority margins reported in protocols and publications showed incomplete and inconsistent reporting. J Clin Epidemiol. 2015;68(5):510–7.

Li G, Mbuagbaw L, Samaan Z, Jin Y, Nwosu I, Levine M, Adachi JD, Thabane L. The state of reporting of primary biomedical research: a scoping review protocol. BMJ Open. 2017;7(3):e014749.

Peters MD, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Based Healthc. 2015;13(3):141–6.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535.

Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360–3.

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, Porter AC, Tugwell P, Moher D, Bouter LM. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10.

Al-Marzouki S, Roberts I, Evans S, Marshall T. Selective reporting in clinical trials: analysis of trial protocols accepted by The Lancet. Lancet. 2008;372(9634):201.

Boonacker CW, Hoes AW, van Liere-Visser K, Schilder AG, Rovers MM. A comparison of subgroup analyses in grant applications and publications. Am J Epidemiol. 2011;174(2):219–25.

Chan AW, Krleza-Jeric K, Schmid I, Altman DG. Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ. 2004;171(7):735–40.

Hahn S, Williamson PR, Hutton JL. Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract. 2002;8(3):353–9.

Hannink G, Gooszen HG, Rovers MM. Comparison of registered and published primary outcomes in randomized clinical trials of surgical interventions. Ann Surg. 2013;257(5):818–23.

Hartung DM, Zarin DA, Guise JM, McDonagh M, Paynter R, Helfand M. Reporting discrepancies between the ClinicalTrials.gov results database and peer-reviewed publications. Ann Intern Med. 2014;160(7):477–83.

Hernandez AV, Steyerberg EW, Taylor GS, Marmarou A, Habbema JD, Maas AI. Subgroup analysis and covariate adjustment in randomized clinical trials of traumatic brain injury: a systematic review. Neurosurgery. 2005;57(6):1244–53; discussion 1244–53.

Killeen S, Sourallous P, Hunter IA, Hartley JE, Grady HL. Registration rates, adequacy of registration, and a comparison of registered and published primary outcomes in randomized controlled trials published in surgery journals. Ann Surg. 2014;259(1):193–6.

Korevaar DA, Ochodo EA, Bossuyt PM, Hooft L. Publication and reporting of test accuracy studies registered in ClinicalTrials.gov. Clin Chem. 2014;60(4):651–9.

Li XQ, Yang GL, Tao KM, Zhang HQ, Zhou QH, Ling CQ. Comparison of registered and published primary outcomes in randomized controlled trials of gastroenterology and hepatology. Scand J Gastroenterol. 2013;48(12):1474–83.

Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302(9):977–84.

Maund E, Tendal B, Hrobjartsson A, Jorgensen KJ, Lundh A, Schroll J, Gotzsche PC. Benefits and harms in clinical trials of duloxetine for treatment of major depressive disorder: comparison of clinical study reports, trial registries, and publications. BMJ. 2014;348:g3510.

Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B. Evidence b(i)ased medicine--selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ. 2003;326(7400):1171–3.

Mhaskar R, Djulbegovic B, Magazin A, Soares HP, Kumar A. Published methodological quality of randomized controlled trials does not reflect the actual quality assessed in protocols. J Clin Epidemiol. 2012;65(6):602–9.

Milette K, Roseman M, Thombs BD. Transparency of outcome reporting and trial registration of randomized controlled trials in top psychosomatic and behavioral health journals: a systematic review. J Psychosom Res. 2011;70(3):205–17.

Nankervis H, Baibergenova A, Williams HC, Thomas KS. Prospective registration and outcome-reporting bias in randomized controlled trials of eczema treatments: a systematic review. J Invest Dermatol. 2012;132(12):2727–34.

Norris SL, Holmer HK, Fu R, Ogden LA, Viswanathan MS, Abou-Setta AM. Clinical trial registries are of minimal use for identifying selective outcome and analysis reporting. Res Synth Methods. 2014;5(3):273–84.

Redmond S, von Elm E, Blumle A, Gengler M, Gsponer T, Egger M. Cohort study of trials submitted to ethics committee identified discrepant reporting of outcomes in publications. J Clin Epidemiol. 2013;66(12):1367–75.

Riehm KE, Azar M, Thombs BD. Transparency of outcome reporting and trial registration of randomized controlled trials in top psychosomatic and behavioral health journals: a 5-year follow-up. J Psychosom Res. 2015;79(1):1–12.

Rising K, Bacchetti P, Bero L. Reporting bias in drug trials submitted to the Food and Drug Administration: review of publication and presentation. PLoS Med. 2008;5(11):e217; discussion e217.

Riveros C, Dechartres A, Perrodeau E, Haneef R, Boutron I, Ravaud P. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med. 2013;10(12):e1001566; discussion e1001566.

Rongen JJ, Hannink G. Comparison of registered and published primary outcomes in randomized controlled trials of orthopaedic surgical interventions. J Bone Joint Surg Am. 2016;98(5):403–9.

Rosati P, Porzsolt F, Ricciotti G, Testa G, Inglese R, Giustini F, Fiscarelli E, Zazza M, Carlino C, Balassone V, et al. Major discrepancies between what clinical trial registries record and paediatric randomised controlled trials publish. Trials. 2016;17(1):430.

Rosenthal R, Dwan K. Comparison of randomized controlled trial registry entries and content of reports in surgery journals. Ann Surg. 2013;257(6):1007–15.

Saquib N, Saquib J, Ioannidis JP. Practices and impact of primary outcome adjustment in randomized controlled trials: meta-epidemiologic study. BMJ. 2013;347:f4313.

Smith SM, Wang AT, Pereira A, Chang RD, McKeown A, Greene K, Rowbotham MC, Burke LB, Coplan P, Gilron I, et al. Discrepancies between registered and published primary outcome specifications in analgesic trials: ACTTION systematic review and recommendations. Pain. 2013;154(12):2769–74.

Su CX, Han M, Ren J, Li WY, Yue SJ, Hao YF, Liu JP. Empirical evidence for outcome reporting bias in randomized clinical trials of acupuncture: comparison of registered records and subsequent publications. Trials. 2015;16:28.

Turner EH, Knoepflmacher D, Shapley L. Publication bias in antipsychotic trials: an analysis of efficacy comparing the published literature to the US Food and Drug Administration database. PLoS Med. 2012;9(3):e1001189.

Vedula SS, Bero L, Scherer RW, Dickersin K. Outcome reporting in industry-sponsored trials of gabapentin for off-label use. N Engl J Med. 2009;361(20):1963–71.

Vera-Badillo FE, Shapiro R, Ocana A, Amir E, Tannock IF. Bias in reporting of end points of efficacy and toxicity in randomized, clinical trials for women with breast cancer. Ann Oncol. 2013;24(5):1238–44.

You B, Gan HK, Pond G, Chen EX. Consistency in the analysis and reporting of primary end points in oncology randomized controlled trials from registration to publication: a systematic review. J Clin Oncol. 2012;30(2):210–6.

Soares HP, Daniels S, Kumar A, Clarke M, Scott C, Swann S, Djulbegovic B. Bad reporting does not mean bad methods for randomised trials: observational study of randomised controlled trials performed by the radiation therapy oncology group. BMJ. 2004;328(7430):22–4.

Chan AW, Tetzlaff JM, Gotzsche PC, Altman DG, Mann H, Berlin JA, Dickersin K, Hrobjartsson A, Schulz KF, Parulekar WR, et al. SPIRIT 2013 explanation and elaboration: guidance for protocols of clinical trials. BMJ. 2013;346:e7586.

Shyam A. Bias and the evidence 'Biased' medicine. J Orthop Case Rep. 2015;5(3):1–2.

Evans JG. Evidence-based and evidence-biased medicine. Age Ageing. 1995;24(6):461–3.

Dwan K, Gamble C, Williamson PR, Kirkham JJ. Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One. 2013;8(7):e66844.

Jones CW, Keil LG, Holland WC, Caughey MC, Platts-Mills TF. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med. 2015;13:282.

Chan AW, Song F, Vickers A, Jefferson T, Dickersin K, Gotzsche PC, Krumholz HM, Ghersi D, van der Worp HB. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383(9913):257–66.

Moja LP, Moschetti I, Nurbhai M, Compagnoni A, Liberati A, Grimshaw JM, Chan AW, Dickersin K, Krleza-Jeric K, Moher D, et al. Compliance of clinical trial registries with the World Health Organization minimum data set: a survey. Trials. 2009;10:56.

Mayo-Wilson E, Li T, Fusco N, Bertizzolo L, Canner JK, Cowley T, Doshi P, Ehmsen J, Gresham G, Guo N, et al. Cherry-picking by trialists and meta-analysts can drive conclusions about intervention efficacy. J Clin Epidemiol. 2017;91:95–110.

Smyth RM, Kirkham JJ, Jacoby A, Altman DG, Gamble C, Williamson PR. Frequency and reasons for outcome reporting bias in clinical trials: interviews with trialists. BMJ. 2011;342:c7153.

Dwan K, Altman DG, Clarke M, Gamble C, Higgins JP, Sterne JA, Williamson PR, Kirkham JJ. Evidence for the selective reporting of analyses and discrepancies in clinical trials: a systematic review of cohort studies of clinical trials. PLoS Med. 2014;11(6):e1001666.

Dwan K, Altman DG, Cresswell L, Blundell M, Gamble CL, Williamson PR. Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev. 2011;(1):MR000031.

Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ. 2010;340:c723.

Acknowledgments

We acknowledge Dr. Stephen Walter for his professional editing of the manuscript.

Funding

This study received no funding from the public, commercial or not-for-profit sectors.

Availability of data and materials

The data used in this study are already publicly available in the literature.

Author information

Contributions

GL and LT contributed to study conception and design. GL, LPFA, YJ and IN contributed to searching, screening, data collection and analyses. GL was responsible for drafting the manuscript. AL, MM, MW, MB, LZ, NS, BB, CL, IS, HS, YC, GS, LM, ZS, MAHL, JDA, and LT provided comments and made several revisions to the manuscript. All authors read and approved the final version.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that no competing interests exist.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Li, G., Abbade, L.P.F., Nwosu, I. et al. A systematic review of comparisons between protocols or registrations and full reports in primary biomedical research. BMC Med Res Methodol 18, 9 (2018). https://doi.org/10.1186/s12874-017-0465-7
