Abstract

Background

Clinical trial registries can improve the validity of trial results by facilitating comparisons between prospectively planned and reported outcomes. Previous studies of the frequency of inconsistencies between planned and reported outcomes have reported widely discrepant results. It is unknown whether these discrepancies are due to differences between the included trials or to methodological differences between studies. We aimed to systematically review the prevalence and nature of discrepancies between registered and published outcomes among clinical trials.

Methods

We searched MEDLINE via PubMed, EMBASE, and CINAHL, and checked references of included publications to identify studies that compared trial outcomes as documented in a publicly accessible clinical trials registry with published trial outcomes. Two authors independently selected eligible studies and performed data extraction. We present summary data rather than pooled analyses owing to methodological heterogeneity among the included studies.

Results

Twenty-seven studies were eligible for inclusion. The overall risk of bias among included studies was moderate to high. These studies assessed outcome agreement for a median of 65 individual trials (interquartile range [IQR] 25–110). The median proportion of trials with an identified discrepancy between the registered and published primary outcome was 31 %; substantial variability in the prevalence of these primary outcome discrepancies was observed among the included studies (range 0 % (0/66) to 100 % (1/1), IQR 17–45 %). We found less variability within the subset of studies that assessed the agreement between prospectively registered outcomes and published outcomes, among which the median observed discrepancy rate was 41 % (range 30 % (13/43) to 100 % (1/1), IQR 33–48 %). The nature of observed primary outcome discrepancies also varied substantially between included studies. Among the studies providing detailed descriptions of these outcome discrepancies, a median of 13 % of trials introduced a new, unregistered outcome in the published manuscript (IQR 5–16 %).

Conclusions

Discrepancies between registered and published outcomes of clinical trials are common regardless of funding mechanism or the journals in which they are published. Consistent reporting of prospectively defined outcomes and consistent utilization of registry data during the peer review process may improve the validity of clinical trial publications.


Background

The designation of a clearly defined, pre-specified primary outcome is a critically important component of a clinical trial [1]. Inconsistencies between planned and reported outcomes threaten the validity of trials by increasing the likelihood that chance or selective reporting, rather than true differences between treatment and control groups, account for primary outcome differences as reported at the time of publication. These inconsistencies can have direct consequences for physician decision-making and policies that influence patient care [2].

ClinicalTrials.gov was established in 2000, in large part to encourage consistency and transparency in the reporting of clinical trial outcomes. Since 2005 the International Committee of Medical Journal Editors (ICMJE) has explicitly supported this goal by mandating the registration of clinical trials in a publicly available registry prior to beginning trial enrollment as a condition of publication in member journals [3]. Additionally, both the United States and the European Union have passed legislation requiring the prospective registration of most clinical trials involving drugs or devices [4, 5]. As a result of these policies, trial registration has dramatically increased [6], and registries are now important tools for assessing consistency between planned and published primary outcomes.

Reported discrepancy rates between registered clinical trial outcomes and published outcomes range from less than 10 % of trials to greater than 60 % [7–9]. It is unknown whether this wide range in results stems from differences in characteristics of the included trials or from differences in how investigators assessed outcome consistency. It is also unclear which estimates are most representative of clinical trials in general. A better understanding of the problem of outcome inconsistencies may help define the frequency and impact of inappropriate changes to trial outcomes and identify subgroups of clinical trials that warrant further attention. Additionally, this knowledge may help inform future regulations aimed at improving trial transparency. We conducted a systematic review of published comparisons of registered and published primary trial outcomes in order to provide a comprehensive assessment of the observed prevalence and nature of outcome inconsistencies.

Methods

Search strategy and study selection

We performed a search for studies that evaluated the agreement between primary trial outcomes as documented in clinical trials registries and as described in published manuscripts. Studies were eligible if they included data from clinical trials, utilized either ClinicalTrials.gov or any registry meeting World Health Organization (WHO) Registry Criteria (Version 2.1), and included comparisons to outcomes as defined in published manuscripts. We excluded studies published prior to 2005, because our objective was to characterize inconsistencies between trial registries and publications, and registries were used infrequently prior to the 2005 ICMJE statement requiring trial registration. Manuscripts were also excluded if they were secondary reports of studies for which the relevant findings had previously been published, or if they were published in a language other than English. Reports of individual trials, case reports, and editorials were excluded. We searched MEDLINE via PubMed and CINAHL in August 2014, and EMBASE in September 2014. Full details from each search are available in Additional file 1: Appendix A. Additionally, we reviewed the citation lists from each included manuscript for additional eligible studies.

Two investigators (LGK, WCH) independently screened the titles and abstracts of manuscripts identified through the database searches to identify potentially eligible studies for further assessment. Discrepancies were resolved by a third investigator (CWJ). Next, two investigators independently assessed each remaining full-length manuscript for inclusion in the study. A third investigator again reviewed all discrepancies, and the final list of included studies was determined by group consensus.

The primary outcome of interest for this systematic review was the proportion of trials in which the registered and published primary outcomes were different. Secondary outcomes were differences between registered and published secondary outcomes and the effect of differences between registered and published outcomes on the statistical significance of published outcomes.

Data extraction

Two investigators independently performed data extraction for each eligible study using a standardized data form. For each study, investigators recorded the total number of trials examined and the number of trials for which a discrepancy was present between the registered and published primary outcome. WHO-approved trial registries must retain a history of changes made to registry records, and we also recorded whether study authors reported reviewing these changes. When included studies used this feature to report registered outcomes at various time points, we used the primary outcome registered at the time enrollment started as the registered outcome [3]. Additional data fields included the nature of primary outcome discrepancies, categorized according to a modified version of the approach developed by Chan et al. [10]. These categories are: a registered primary outcome was omitted from the manuscript; an unregistered primary outcome was introduced in the manuscript; a registered primary outcome was described as a secondary outcome in the manuscript; a published primary outcome was described as a secondary outcome in the registry; and the time of primary outcome assessment differed between the registry and manuscript. When possible, the data abstractors also recorded information about the publishing journals or clinical specialties for trials included in each study, the publication year of included trials, the registry databases used by each study, the timing of trial registration with respect to participant enrollment, and the proportion of outcome discrepancies favoring statistical significance in a published manuscript.
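To illustrate how the five discrepancy categories could be applied to a single trial, the following Python sketch encodes them as an enumeration and assigns categories by comparing registered and published outcome lists. It is a hypothetical sketch, not the data form used in this review: `Outcome`, `OutcomeSpec`, `Discrepancy`, and `classify` are illustrative names, and the sketch assumes outcomes have already been normalized by human raters so that matching measures and time points can be compared as exact strings.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Discrepancy(Enum):
    """Modified Chan et al. categories (labels paraphrased; hypothetical encoding)."""
    REGISTERED_PRIMARY_OMITTED = "registered primary outcome omitted from manuscript"
    UNREGISTERED_PRIMARY_INTRODUCED = "unregistered primary outcome introduced in manuscript"
    REGISTERED_PRIMARY_REPORTED_AS_SECONDARY = "registered primary outcome reported as secondary"
    PUBLISHED_PRIMARY_REGISTERED_AS_SECONDARY = "published primary outcome registered as secondary"
    TIMING_CHANGED = "time of primary outcome assessment differs"


@dataclass(frozen=True)
class Outcome:
    measure: str    # hypothetical: outcome name, already normalized by a rater
    timepoint: str  # hypothetical: assessment time, e.g. "12 weeks"


@dataclass
class OutcomeSpec:
    primary: set[Outcome] = field(default_factory=set)
    secondary: set[Outcome] = field(default_factory=set)


def classify(registry: OutcomeSpec, manuscript: OutcomeSpec) -> set[Discrepancy]:
    """Return every category that applies; an empty set means the primary outcomes agree."""
    found: set[Discrepancy] = set()
    reg_primary = {o.measure for o in registry.primary}
    reg_secondary = {o.measure for o in registry.secondary}
    pub_primary = {o.measure for o in manuscript.primary}
    pub_secondary = {o.measure for o in manuscript.secondary}

    # Check each registered primary outcome against the manuscript.
    for outcome in registry.primary:
        if outcome in manuscript.primary:
            continue  # same measure at the same time point: consistent
        if outcome.measure in pub_primary:
            found.add(Discrepancy.TIMING_CHANGED)
        elif outcome.measure in pub_secondary:
            found.add(Discrepancy.REGISTERED_PRIMARY_REPORTED_AS_SECONDARY)
        else:
            found.add(Discrepancy.REGISTERED_PRIMARY_OMITTED)

    # Check each published primary outcome against the registry.
    for outcome in manuscript.primary:
        if outcome.measure in reg_primary:
            continue  # consistent, or already recorded as a timing change
        if outcome.measure in reg_secondary:
            found.add(Discrepancy.PUBLISHED_PRIMARY_REGISTERED_AS_SECONDARY)
        else:
            found.add(Discrepancy.UNREGISTERED_PRIMARY_INTRODUCED)
    return found
```

A single trial can fall into more than one category (for example, a swap of primary and secondary outcomes triggers two), which is one reason studies reported the most common category rather than mutually exclusive counts. Exact string matching is a simplification: deciding whether differently worded outcomes are the same measure requires judgment by independent raters.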

Data synthesis

Data were analyzed in accordance with the methodology recommended in the Cochrane Handbook for Systematic Reviews of Interventions, where relevant, and results are reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Additional file 2: Appendix B) [11, 12]. For each included study we calculated the proportion of trials with primary outcome discrepancies, along with 95 % confidence intervals. Substantial heterogeneity in the methods of the included studies, together with substantial statistical heterogeneity, prevented us from performing pooled analyses. Pre-specified analyses included the assessment of primary outcome discrepancies among prospectively registered trials, secondary outcome discrepancies, and the proportion of outcome discrepancies favoring statistical significance in a published manuscript. Following our initial data analysis, we decided to compare primary outcome discrepancy rates between studies in which authors generated a list of published trials and searched for corresponding registry entries and studies in which a list of registered trials was used as the basis for a publication search, because both strategies were common among the included studies.

We assessed the risk of bias of included studies using a modified version of the Newcastle-Ottawa Scale for assessing the quality of cohort studies, in which the included studies were assessed based on the following factors: representativeness of the included cohort; ascertainment of the link between trial registry and manuscript; method of assessing primary outcome agreement; enrollment window of included trials; and the analysis of prospectively registered outcomes (Additional file 3: Appendix C) [13]. We used the Wilson score method with continuity correction to calculate 95 % confidence intervals [14]. Analyses were conducted using Stata 14 (College Station, TX, USA).
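The Wilson score interval with continuity correction has a closed form, given by Newcombe [14] as his method 4. The sketch below is a Python illustration of those formulas, not the Stata code used for these analyses; `wilson_cc` is a hypothetical helper name. By definition the lower limit is 0 when no discrepancies are observed and the upper limit is 1 when every trial is discrepant.

```python
from __future__ import annotations

import math
from statistics import NormalDist


def wilson_cc(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval with continuity correction (Newcombe 1998, method 4)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # 1.96 for 95 %
    p = successes / n
    q = 1 - p
    denom = 2 * (n + z * z)

    # Lower limit is fixed at 0 when no events are observed.
    if successes == 0:
        lower = 0.0
    else:
        lower = (2 * n * p + z * z - 1
                 - z * math.sqrt(z * z - 2 - 1 / n + 4 * p * (n * q + 1))) / denom

    # Upper limit is fixed at 1 when every trial has the event.
    if successes == n:
        upper = 1.0
    else:
        upper = (2 * n * p + z * z + 1
                 + z * math.sqrt(z * z + 2 - 1 / n + 4 * p * (n * q - 1))) / denom

    # Guard against tiny floating-point excursions outside [0, 1].
    return max(0.0, lower), min(1.0, upper)
```

For example, `wilson_cc(15, 55)` gives roughly 0.165 to 0.412. The continuity correction widens the interval slightly relative to the plain Wilson interval, which is conservative for the small denominators (down to a single trial) seen in some included studies.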

Results

Description of included studies

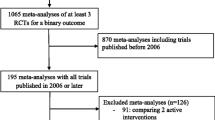

Database searches identified 5208 records. Following exclusions based on title and abstract review, we assessed 57 full manuscripts for eligibility. Twenty-five studies identified by the database search and another two studies identified through the citation review met inclusion criteria for a total of 27 eligible studies that compared registered and published primary outcomes (Fig. 1) [7–9, 15–38]. Characteristics of these studies, including publication dates, a description of included trials, and search methods, are reported in Table 1. Detailed ratings regarding risk of bias for the included studies are provided in Additional file 3: Appendix C. Risk of bias was moderate to high for most studies; five studies were judged to be at low risk of bias [7, 16, 20, 24, 30].

Among the included studies, the median number of trials assessed for outcome discrepancies was 65 (interquartile range [IQR] 25–110), and the median proportion of trials for which a discrepancy was identified between the registered and published primary outcome was 31 %, though this varied substantially across the included studies (range 0 % [0/66] to 100 % [1/1], IQR 17–45 %; Fig. 2).
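Because results were not pooled, summary figures such as these are medians and interquartile ranges of per-study percentages. A minimal sketch of that calculation follows, assuming each study is supplied as a (discrepant, total) pair; `summarize` is a hypothetical helper, and any inputs used with it here are toy values, not data from the included studies. Quartile definitions differ between software packages, so the `method="inclusive"` choice below may not reproduce Stata's percentiles exactly.

```python
from __future__ import annotations

from statistics import median, quantiles


def summarize(counts: list[tuple[int, int]]) -> dict[str, float]:
    """Median, IQR and range of per-study discrepancy percentages (no pooling)."""
    if len(counts) < 2:
        raise ValueError("need at least two studies to compute quartiles")
    # One percentage per study; each study counts once regardless of its size.
    pcts = [100 * discrepant / total for discrepant, total in counts]
    # "inclusive" treats the studies as the whole population of interest;
    # other software may use a different quartile definition.
    q1, _, q3 = quantiles(pcts, n=4, method="inclusive")
    return {"median": median(pcts), "q1": q1, "q3": q3,
            "min": min(pcts), "max": max(pcts)}
```

Weighting every study equally is why a study of a single trial (1/1) can set the upper end of the range alongside studies of more than 100 trials; a pooled estimate would instead weight by the number of trials.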

Sixteen studies provided detailed information about the nature of observed outcome discrepancies (Table 2). Among these studies, discrepancies fitting each of the five categories described by Chan et al. were common [10]: for five studies the most common discrepancy was omission of a registered primary outcome [15, 19, 20, 30, 34]; for four studies the most common discrepancy was publication of an unregistered primary outcome [7, 22, 31, 36]; one study reported equal numbers of these two types of discrepancies [26]; for three studies the most commonly observed discrepancy was reporting the registered primary outcome as a secondary outcome [18, 24, 27]; and for three studies the most common discrepancy was a change in the timing of primary outcome assessment [9, 32, 33].

Subgroup analyses within included studies

Nine studies compared registered and published primary outcomes for trials that received industry funding and those that did not. One study observed that industry-sponsored trials were significantly less likely to have outcome inconsistencies than were trials with other funding sources [17]. The remaining eight studies observed no significant relationship between funding source and outcome consistency [9, 15, 16, 24, 33–35, 38].

The authors of three studies compared primary outcome consistency between general medical journals and specialty journals. Of these, one study compared trials published in general medical journals to those published in specialty surgical journals, finding a higher rate of outcome inconsistencies among trials published in the surgical journals [20]. Another study compared trials published in general medical journals to those in gastroenterology journals, and the remaining study compared trials from general medical journals with those published in cardiology, rheumatology, or gastroenterology specialty journals. Neither of the latter two studies observed a difference in discrepancy rate according to journal type [7, 27].

Eight studies analyzed the impact that changes to registered primary outcomes had on the statistical significance of published primary outcomes for included trials (Fig. 3); an additional study reported the impact of an outcome change for a single trial, finding this change to favor statistical significance of the published outcome [30]. When it could be assessed, outcome changes frequently favored the publication of statistically significant results (median 50 %, IQR 28–64 %). However, in as many as half of the included trials, the impact of outcome changes on the favorability of the published results could not be assessed [7]. Consequently, these data likely underestimate the true impact of outcome changes on the reported favorability of published results.

Heterogeneity among studies

We performed several additional analyses in order to explore the substantial variability in reported outcome agreement between included studies. Of the five studies judged to be at low risk of bias, one included an outcome comparison from just a single trial, finding the registered and published outcomes to be discrepant [30]. The other four studies identified outcome discrepancies in between 27 % (15/55) and 49 % (75/152) of trials [7, 16, 20, 24]. The 22 studies determined to be at moderate to high risk of bias showed less consistency in their reported outcome discrepancies, ranging from 0 % (0/66) to 92 % (23/25), with a median discrepancy rate of 29 % (IQR 14–38 %).

Only one study explicitly limited inclusion to trials completed after the 2005 deadline for registration established by the ICMJE [16], and none limited inclusion to trials completed after the Food and Drug Administration Amendments Act of 2007 expanded registration requirements and authorized civil fines for unregistered trials. However, eight studies limited inclusion to trials published after 2007 [7, 24, 26, 27, 30, 32, 33, 36]. Among these eight studies, the prevalence of primary outcome changes between registry entries and manuscripts was similar to that observed among all included studies, with a median discrepancy rate of 38 % (IQR 30–50 %).

Eight of the included studies examined the history of changes made to each registry record in order to compare published outcomes with the primary outcome as stated in the registry at either the time of study initiation or prior to completion of enrollment [7, 16, 20, 22, 24, 30, 31, 36]. In six of these cases, outcome discrepancy data were available specifically for trials that had been prospectively registered. Among these six studies, the median proportion of prospectively registered trials with discrepancies between the registry and published manuscript was 41 % (range 30 % [13/43] to 100 % [1/1], IQR 33–48 %; Fig. 4). One study, published only in abstract form, used the ClinicalTrials.gov history of changes feature to assess outcome consistency across three time points (the registry entry at the time of initial registration, the publication, and the current registry entry as of July 2010) and found discrepancies in 23 of 25 randomized controlled trials (92 %) [23]. The remaining 18 studies compared the current registry entry with the published outcome and did not take into account registry changes over time; among these the median primary outcome discrepancy rate was 26 % (IQR 14–34 %). The higher discrepancy rate observed among studies that exclusively used prospectively defined outcomes suggests that registered outcomes are often retrospectively changed to match published outcomes. This observation also likely contributes to the heterogeneity in reported results among the studies included in this analysis.
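The effect of the comparison time point can be illustrated with a small sketch that looks up the primary outcome in effect on a given date from a record's history of changes. Assumptions: `RegistryVersion`, `outcome_in_effect`, and `compare` are hypothetical names; the history is supplied as a list of dated versions (this does not model the ClinicalTrials.gov interface); and outcomes are compared as exact normalized strings in place of rater judgment.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class RegistryVersion:
    posted: date          # hypothetical field: date this version of the record was posted
    primary_outcome: str  # hypothetical field: normalized primary outcome text


def outcome_in_effect(versions: Sequence[RegistryVersion], on: date) -> Optional[str]:
    """Primary outcome in the latest version posted on or before `on`, if any."""
    earlier = [v for v in versions if v.posted <= on]
    if not earlier:
        return None  # no record yet: the trial was not prospectively registered
    return max(earlier, key=lambda v: v.posted).primary_outcome


def compare(versions: Sequence[RegistryVersion], enrollment_start: date,
            published_outcome: str) -> dict[str, Optional[bool]]:
    at_start = outcome_in_effect(versions, enrollment_start)
    current = max(versions, key=lambda v: v.posted).primary_outcome
    return {
        "prospectively_registered": at_start is not None,
        # None when there is no prospectively registered outcome to compare against.
        "discrepant_vs_prospective": None if at_start is None else at_start != published_outcome,
        "discrepant_vs_current": current != published_outcome,
    }
```

If a record is edited after enrollment begins so that its primary outcome matches the eventual publication, `compare` reports a discrepancy against the prospectively registered outcome but none against the current entry. This is the mechanism proposed above for the lower discrepancy rates among studies that used only the current registry entry.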

In order to match registry data with published manuscripts, 17 studies began with a cohort of published trials and searched trial registries to identify registry entries corresponding to these published manuscripts. Among these studies, the rate of unregistered trials ranged from 1 % to 94 % (median 38 %, IQR 20–71 %). Among the six studies that reported detailed results on the timing of randomized controlled trial registration, compliance with the ICMJE policy requiring prospective trial registration was poor [16, 22, 24, 27, 32, 33]. The median observed rate of prospective registration in these six studies was 15 % (range 9–31 %). Nine studies began with a group of registered trials and searched for corresponding publications [8, 9, 17–19, 21, 28, 34, 37], and one study did not specify which method was used [23]. The median percentage of primary outcome discrepancies among the 17 studies in which authors generated a list of published trials and searched for corresponding registry entries was 32 % (IQR 25–45 %), as compared to 18 % (IQR 6–32 %) among the nine studies in which a list of registered trials was used as the basis for a publication search.

Additional analyses

The authors of the included studies observed high rates of ambiguity in the way primary outcomes were defined in registry entries. Eighteen of the 27 studies reported the number of included registry entries in which primary outcomes were undefined or unclear, with rates of ambiguously defined outcomes ranging from 4 % to 48 % (median 15 %, IQR 7–27 %) [7–9, 15, 17, 20, 22, 24, 26, 28–32, 34, 36–38]. In many cases this ambiguity was noted to limit assessments of outcome consistency. Inconsistencies between registered and published secondary outcomes were also common. Ten studies evaluated the consistency of secondary outcomes; the median rate of observed secondary outcome discrepancies among these studies was 54 % (IQR 33–68 %) [15, 18, 19, 21, 22, 28, 32–34, 37].

Discussion

We identified 27 studies that compared registered and published trial outcomes. Among these studies, the proportion of trials with discrepant primary outcomes between registration and publication was highly variable, with four studies observing outcome changes in more than 50 % of trials and eight studies observing outcome changes in fewer than 20 %. The frequency with which discrepancies were identified did not appear to vary based on funding source, journal type, or when trials were published, though our ability to detect potential differences was limited by relatively small sample sizes and unstandardized methods across the included studies. Most of the studies identified were similar with regard to the time frame analyzed and the journals and clinical topics studied. Additionally, nearly all of the included studies used a consistent method of categorizing outcome differences, based on the scheme established by Chan et al. [10]. One important source of the variability in reported results is that most studies compared published outcomes with the outcome listed in the registry at the time of the search, without attempting to identify the outcome registered at the inception of the study. For the six studies that compared published outcomes with the outcomes registered prior to the initiation of patient enrollment, discrepancy rates were more similar to one another and higher than for the remaining 21 studies. Because the comparison with the outcome registered prior to patient enrollment is the most relevant for those attempting to understand the impact of outcome switching on the validity of clinical trial results, the median value from these six studies (41 %, IQR 33–48 %) provides an informative estimate of the extent of this problem.

This finding supports previous observations that registry entries are often either initiated or modified after trial completion [7, 22]. Additionally, it suggests that for surveillance studies of registry practices to be meaningful and reproducible, authors should utilize best practices to improve study validity. These include assessing changes to registry entries over time, establishing clear definitions for discrepant outcomes, and utilizing multiple independent raters to compare registered and published outcomes.

While clinical trial registries and recent legislative efforts mandating their use have the potential to improve registry utilization by trial sponsors and investigators, these measures do little good unless reviewers, journal editors, and educators utilize them. Recent evidence suggests that reviewers do not routinely use trials registries when assessing trial manuscripts [39]. In some cases there are good reasons to change study outcomes or other methodological details while a trial is ongoing, though it is critical that published manuscripts disclose and explain these changes. Other times, outcome changes may represent efforts by investigators to spin favorable conclusions from their data. In either case, reviewers and editors can and should use registry data to ensure that appropriate outcome changes are transparent and that inappropriate changes are prevented.

All but one of the studies included clinical trials that were initiated before 2005, when the ICMJE policy mandating prospective trial registration as a condition of publication in member journals went into effect. Registry utilization has increased dramatically since that time, but the quality of registry data remains inconsistent [22, 24]. The single study we examined that was limited to trials initiated after the ICMJE statement still found primary outcome inconsistencies in 15 of 55 (27 %) included trials [16]. Importantly, we did not find evidence of a consistent difference in registered and published outcomes according to trial funding source. As a result, any measures taken to improve the consistency of outcome reporting should target both industry-funded and non-industry-funded trials. As registry utilization continues to evolve, it is unclear whether outcome consistency will improve or whether inconsistencies will remain common. This likely depends in part on the willingness of both journal editors and reviewers to demand that authors be held accountable to registered protocols when publishing results. Unfortunately, very few journals currently prioritize the assessment of registry entries during the peer review process [40].

Since 2008, in addition to facilitating the assessment of methodological consistency, ClinicalTrials.gov has included a results database that allows the registry to serve as a publicly available source of trial outcome data. While use of the results database by investigators has been mixed, improved compliance with this feature has the potential to streamline comparisons between planned and completed outcome analyses [41]. Future surveillance studies will be needed to assess improvements in outcome consistency that might result from new policies, greater familiarity of investigators and sponsors with the registry process, and greater scrutiny of registries by editors and reviewers.

Our findings are consistent with those reported in a 2011 Cochrane Review of studies comparing trial protocols with published trial manuscripts [42]. This Cochrane Review identified three studies, all also included in our analysis, that compared registered primary outcomes to published outcomes, with observed primary outcome discrepancy rates between 18 % and 31 %. Additionally, the Cochrane Review identified three studies that compared the consistency between outcomes identified in study protocols and those in published reports, finding discrepancies in 33–67 % of included trials. As with our review, the presence of heterogeneous trial populations and methods prevented the Cochrane authors from performing a meta-analysis, though they observed that discrepancies between study protocols and published manuscripts commonly involved other important aspects of trial design in addition to outcome definitions, including eligibility criteria, sample size goals, planned subgroup analyses, and methods of analysis.

This systematic review has several limitations that should be considered when interpreting these results. First, studies of outcome discrepancies are challenging to capture via a database search, because relevant studies are not limited to a single disease process or intervention, and many of the key words relevant to this topic are commonly included in unrelated abstracts (e.g., “bias,” “primary outcome,” “clinicaltrials.gov,” “randomized controlled trial”). We addressed this issue by using an intentionally broad search strategy and by reviewing the reference lists of all included studies. Despite this, we may have missed some relevant publications. Second, the studies identified were heterogeneous with respect to both the characteristics of their included trials and the approaches they used to assess outcome consistency. This limits our ability to pool the data to provide a summary description of discrepancies between registered and published outcomes across these studies. This heterogeneity results partly from the variable time points relative to trial initiation that authors used to define the registered outcome, and it indicates possible bias in these results at the level of individual studies. Third, we separately analyzed the subgroup of studies that reported reviewing the history of changes within each registry record in order to identify prospectively defined outcomes; this subgroup analysis may have been incomplete because additional studies may have performed this task without reporting it. Further, several of the included studies had overlapping inclusion criteria, so in some cases individual trials may have been included in more than one study. Additionally, the impact of publication bias on these results is unknown. Finally, many of the included studies noted that ambiguously defined outcomes in trial registries and manuscripts made it difficult to judge whether outcome differences were present.

Conclusions

Published randomized controlled trials commonly report primary outcomes that differ from the primary outcomes specified in clinical trials registries. These inconsistencies between registered and published outcomes are observed among trials across a broad range of clinical topics and a variety of funding sources. When assessing a trial’s methodological quality, editors, reviewers, and readers should routinely compare the published primary outcome to the primary outcome registered at the time of trial initiation. Further study, with attention to the outcome registered at the time of trial initiation, is needed to determine whether inconsistencies between registered and published outcomes remain common among recently initiated trials.

Abbreviations

- ICMJE: International Committee of Medical Journal Editors
- IQR: interquartile range
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- WHO: World Health Organization

References

Moher D, Hopewell S, Schulz KF, Montori V, Gotzsche PC, Devereaux PJ, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869. doi:10.1136/bmj.c869.

Newman DH, Shreves AE. Treatment of acute otitis media in children. N Engl J Med. 2011;364(18):1775; author reply 1777–8. doi:10.1056/NEJMc1102207.

DeAngelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. JAMA. 2004;292(11):1363–4. doi:10.1001/jama.292.11.1363.

Food and Drug Administration Amendments Act of 2007, Pub. L. No. 110–85, 121 Stat. 823 (2007).

Regulation (EU) No 536/2014 of the European Parliament and of the Council of 16 April 2014 on clinical trials on medicinal products for human use, and repealing Directive 2001/20/EC. 2014.

Califf RM, Zarin DA, Kramer JM, Sherman RE, Aberle LH, Tasneem A. Characteristics of clinical trials registered in ClinicalTrials.gov, 2007–2010. JAMA. 2012;307(17):1838–47. doi:10.1001/jama.2012.3424.

Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302(9):977–84. doi:10.1001/jama.2009.1242.

Ross JS, Mulvey GK, Hines EM, Nissen SE, Krumholz HM. Trial publication after registration in ClinicalTrials.Gov: a cross-sectional analysis. PLoS Med. 2009;6(9):e1000144. doi:10.1371/journal.pmed.1000144.

Smith SM, Wang AT, Pereira A, Chang RD, McKeown A, Greene K, et al. Discrepancies between registered and published primary outcome specifications in analgesic trials: ACTTION systematic review and recommendations. Pain. 2013;154(12):2769–74. doi:10.1016/j.pain.2013.08.011.

Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291(20):2457–65. doi:10.1001/jama.291.20.2457.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535. doi:10.1136/bmj.b2535.

Higgins J, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. London, UK: The Cochrane Collaboration; 2011.

Wells GA, Shea B, O’Connell D, Peterson J, Welch V, Losos M, et al. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Ottawa Hospital Research Institute. 2014. http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp. Accessed 24 September 2015.

Newcombe RG. Two-sided confidence intervals for the single proportion: comparison of seven methods. Stat Med. 1998;17(8):857–72.

Ewart R, Lausen H, Millian N. Undisclosed changes in outcomes in randomized controlled trials: an observational study. Ann Fam Med. 2009;7(6):542–6. doi:10.1370/afm.1017.

Anand V, Scales DC, Parshuram CS, Kavanagh BP. Registration and design alterations of clinical trials in critical care: a cross-sectional observational study. Intensive Care Med. 2014;40(5):700–22. doi:10.1007/s00134-014-3250-7.

Bourgeois FT, Murthy S, Mandl KD. Outcome reporting among drug trials registered in ClinicalTrials.gov. Ann Intern Med. 2010;153(3):158–66. doi:10.7326/0003-4819-153-3-201008030-00006.

Chahal J, Tomescu SS, Ravi B, Bach Jr BR, Ogilvie-Harris D, Mohamed NN, et al. Publication of sports medicine-related randomized controlled trials registered in ClinicalTrials.gov. Am J Sports Med. 2012;40(9):1970–7. doi:10.1177/0363546512448363.

Gandhi R, Jan M, Smith HN, Mahomed NN, Bhandari M. Comparison of published orthopaedic trauma trials following registration in Clinicaltrials.gov. BMC Musculoskelet Disord. 2011;12:278. doi:10.1186/1471-2474-12-278.

Hannink G, Gooszen HG, Rovers MM. Comparison of registered and published primary outcomes in randomized clinical trials of surgical interventions. Ann Surg. 2013;257(5):818–23. doi:10.1097/SLA.0b013e3182864fa3.

Hartung DM, Zarin DA, Guise JM, McDonagh M, Paynter R, Helfand M. Reporting discrepancies between the ClinicalTrials.gov results database and peer-reviewed publications. Ann Intern Med. 2014;160(7):477–83. doi:10.7326/M13-0480.

Huic M, Marusic M, Marusic A. Completeness and changes in registered data and reporting bias of randomized controlled trials in ICMJE journals after trial registration policy. PLoS One. 2011;6(9):e25258. doi:10.1371/journal.pone.0025258.

Hutfless S, Blair R, Berger Z, Wilson L, Bass EB, Lazarev M. Poor reporting of Crohn’s disease trials in ClinicalTrials.gov. Gastroenterology. 2013;144(5 Suppl 1):S232.

Jones CW, Platts-Mills TF. Quality of registration for clinical trials published in emergency medicine journals. Ann Emerg Med. 2012;60(4):458–64. doi:10.1016/j.annemergmed.2012.02.005.

Khan NA, Lombeida JI, Singh M, Spencer HJ, Torralba KD. Association of industry funding with the outcome and quality of randomized controlled trials of drug therapy for rheumatoid arthritis. Arthritis Rheum. 2012;64(7):2059–67. doi:10.1002/art.34393.

Killeen S, Sourallous P, Hunter IA, Hartley JE, Grady HL. Registration rates, adequacy of registration, and a comparison of registered and published primary outcomes in randomized controlled trials published in surgery journals. Ann Surg. 2014;259(1):193–6. doi:10.1097/SLA.0b013e318299d00b.

Li XQ, Yang GL, Tao KM, Zhang HQ, Zhou QH, Ling CQ. Comparison of registered and published primary outcomes in randomized controlled trials of gastroenterology and hepatology. Scand J Gastroenterol. 2013;48(12):1474–83. doi:10.3109/00365521.2013.845909.

Liu JP, Han M, Li XX, Mu YJ, Lewith G, Wang YY, et al. Prospective registration, bias risk and outcome-reporting bias in randomised clinical trials of traditional Chinese medicine: an empirical methodological study. BMJ Open. 2013;3(7):e002968. doi:10.1136/bmjopen-2013-002968.

Mathieu S, Giraudeau B, Soubrier M, Ravaud P. Misleading abstract conclusions in randomized controlled trials in rheumatology: comparison of the abstract conclusions and the results section. Joint Bone Spine. 2012;79(3):262–7. doi:10.1016/j.jbspin.2011.05.008.

Milette K, Roseman M, Thombs BD. Transparency of outcome reporting and trial registration of randomized controlled trials in top psychosomatic and behavioral health journals: a systematic review. J Psychosom Res. 2011;70(3):205–17. doi:10.1016/j.jpsychores.2010.09.015.

Nankervis H, Baibergenova A, Williams HC, Thomas KS. Prospective registration and outcome-reporting bias in randomized controlled trials of eczema treatments: a systematic review. J Invest Dermatol. 2012;132(12):2727–34. doi:10.1038/jid.2012.231.

Pinto RZ, Elkins MR, Moseley AM, Sherrington C, Herbert RD, Maher CG, et al. Many randomized trials of physical therapy interventions are not adequately registered: a survey of 200 published trials. Phys Ther. 2013;93(3):299–309. doi:10.2522/ptj.20120206.

Rosenthal R, Dwan K. Comparison of randomized controlled trial registry entries and content of reports in surgery journals. Ann Surg. 2013;257(6):1007–15. doi:10.1097/SLA.0b013e318283cf7f.

Smith HN, Bhandari M, Mahomed NN, Jan M, Gandhi R. Comparison of arthroplasty trial publications after registration in ClinicalTrials.gov. J Arthroplasty. 2012;27(7):1283–8. doi:10.1016/j.arth.2011.11.005.

Vera-Badillo FE, Shapiro R, Ocana A, Amir E, Tannock IF. Bias in reporting of end points of efficacy and toxicity in randomized, clinical trials for women with breast cancer. Ann Oncol. 2013;24(5):1238–44. doi:10.1093/annonc/mds636.

Walker KF, Stevenson G, Thornton JG. Discrepancies between registration and publication of randomised controlled trials: an observational study. JRSM Open. 2014;5(5):2042533313517688.

Wildt S, Krag A, Gluud L. Characteristics of randomised trials on diseases in the digestive system registered in ClinicalTrials.gov: a retrospective analysis. BMJ Open. 2011;1(2):e000309. doi:10.1136/bmjopen-2011-000309.

You B, Gan HK, Pond G, Chen EX. Consistency in the analysis and reporting of primary end points in oncology randomized controlled trials from registration to publication: a systematic review. J Clin Oncol. 2012;30(2):210–6. doi:10.1200/JCO.2011.37.0890.

Mathieu S, Chan AW, Ravaud P. Use of trial register information during the peer review process. PLoS One. 2013;8(4):e59910. doi:10.1371/journal.pone.0059910.

Chauvin A, Ravaud P, Baron G, Barnes C, Boutron I. The most important tasks for peer reviewers evaluating a randomized controlled trial are not congruent with the tasks most often requested by journal editors. BMC Med. 2015;13:158. doi:10.1186/s12916-015-0395-3.

Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database--update and key issues. N Engl J Med. 2011;364(9):852–60. doi:10.1056/NEJMsa1012065.

Dwan K, Altman DG, Cresswell L, Blundell M, Gamble CL, Williamson PR. Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev. 2011;1:MR000031. doi:10.1002/14651858.MR000031.pub2.

Acknowledgements

TPM is supported by the National Institute on Aging through Grant K23 AG038548. This sponsor had no role in the study design, data collection, data analysis, data interpretation, or manuscript preparation.

Additional information

Competing interests

CWJ has received research grants from Roche Diagnostics, Inc. and AstraZeneca. The remaining authors declare that they have no competing interests.

Authors’ contributions

CWJ designed the study, designed the data collection tool, collected the data, analyzed the data, and drafted and revised the manuscript. LGK and WCH both assisted with study design, design of the data collection tool, data collection, and manuscript revision. MCC performed data analyses and assisted with manuscript revision. TPM designed the study, analyzed the data, and drafted and revised the manuscript. All authors read and approved the final manuscript.

Additional files

Additional file 1:

Appendix A. Electronic search strategies. (DOCX 495 kb)

Additional file 2:

Appendix B. PRISMA 2009 checklist. (DOC 61 kb)

Additional file 3:

Appendix C. Assessment of risk of study bias. (DOCX 24 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Jones, C.W., Keil, L.G., Holland, W.C. et al. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med 13, 282 (2015). https://doi.org/10.1186/s12916-015-0520-3

DOI: https://doi.org/10.1186/s12916-015-0520-3