Abstract

Background

Attention makes a vital contribution to everyday functioning, and deficits in attention feature in many psychological disorders. A better understanding of attention may eventually be critical to the early identification and treatment of attentional deficits. One step in that direction is to understand genetic associations with performance on a task measuring reflexive (exogenous) visual attention. Reflexive attention is an important component of overall attention because (along with voluntary selective attention) it helps determine where attention is allocated and how susceptible the subject is to distractors. In our task, a target is preceded by one of several types of cues (none, double, or single, with a single cue appearing either ipsilateral or contralateral to where the target subsequently appears). We used several different outcome measures depending on the cue presented. We have previously studied the relationship between selected genes and mean response time (RT). Here we report on the contributions of genetic markers to RT increases or decreases over the course of the task (the linear trend in RT, or RT slope).

Results

Specifically, we find that RT slope for a variety of reflexive attention outcome measures depends on DAT1 genotype. DRD4 was nearly significant for one outcome measure in the final (best) model. APOE, COMT, and DBH were not significant in any models.

Conclusions

It is especially interesting that genotype predicts linear changes in RT across trials (and not just mean differences or moment-to-moment variability). DAT1 is a gene that produces a protein involved in the transport of dopamine from the synapse. To our knowledge, this is the first study that has associated neurotransmitter genotypes with RT slope on a reflexive attention experiment. The direction of these effects is consistent with genetic risk for attention deficit hyperactivity disorder (ADHD). That is, those with two risk alleles for ADHD (6R/6R on the DAT1 intron 8 VNTR) either got slower as the task progressed or had the least improvement. Those with no risk alleles (5R/5R) had the most improvement in RT as the task progressed.


Background

Attention is a broad concept that has often been divided in the literature along various dimensions. One such division involves reflexive (exogenous) versus sustained attention. Reflexive attention refers to a stimulus-driven reorienting of the brain’s resources, often to an external object that newly appears, has a relatively salient color, or involves motion. The cued-orienting task that we used in our first study [1] is an example of a reflexive task and is similar to that used by Posner et al. [2]. Stimuli flash briefly on the computer display and subjects automatically move their attention. It is generally assumed that attention has been captured reflexively if subjects are faster at responding to a target that was preceded by a brief pre-cue at the same location. Attention can be captured in this way even though the stimulus presentation is too brief to involve eye movements. Sustained attention, on the other hand, is measured with an effortful task designed to require vigilance over time. For example, the Continuous Performance Test (CPT) [3] and the Sustained Attention to Response Task (SART) [4] involve the presentation of a stream of stimuli, some of which require a response while others require that a response be withheld. Examining the slope of response time (RT) over trials (see Fig. 1) on a reflexive attention task involves some elements of reflexive attention and some elements of sustaining attention to the task over time (~20 min). Contributions of reflexive attention to attentional deficits are often overlooked [5–8], but studying them could yield a more complete understanding of disorders that have an attentional component, such as ADHD, autism, anxiety, and depression, by addressing questions about biological contributions to attention elicited by exogenous versus endogenous cues [9, 10].
For example, one question might be whether it is the nature of the task itself (i.e., being designed to induce effortful attention) that produces declining performance over time, or whether non-effortful (reflexive) attention tasks can produce the same decline in performance in some individuals. Several studies discuss genetic associations with RT changes across the course of a sustained attention task [11–14]; however, the literature contains almost no genetic-association studies on how RT changes across trials during the course of a reflexive task. Such studies might help determine whether declining performance over time has shared or distinct genetic influences in reflexive and sustained attention tasks. The genes we selected are related to the availability of neurotransmitters, such as acetylcholine and dopamine (see Table 1). We have previously found some of these genes to be associated with mean RT difference scores [1]. In this paper, we extend those findings with a novel look at the influence of these genes on the slope of RT over the course of the 20-min reflexive attention task. Looking at RT slope differs from looking at moment-to-moment RT variability because the former probably depends on alertness or learning [15, 16], whereas the latter is probably related to vigilance and the ability to detect a signal beyond neural noise [17, 18].

Hypothetical illustration of two types of variability. The top portion shows three individuals who differ in the spread (moment-to-moment variability) around a regression line after slope is covaried out. The bottom portion represents variability of RT slope between individuals. Some individuals become faster (or slower) over the 20 presentations of a given trial type. We are primarily interested in RT slope variability in this paper

Candidate gene studies that show associations between specific genes and various attentional measures provide evidence of genetic influences on attention. Such an association might occur because a given gene influences the availability of a neurotransmitter. That is, the biological pathway from gene to behavior could include neurotransmitters whose availability affects those behaviors. For example, attention [19, 20] and memory [21] are known to be influenced by the availability of neurotransmitters such as acetylcholine and dopamine. Genetically driven differences in the availability of these neurotransmitters could make behaviors like attending more or less efficient in some individuals, and this, in turn, could be captured by various measures of attention.

One component of ADHD is difficulty in maintaining attentional arousal. Individuals with ADHD are frequently identified as having this difficulty [11, 12, 22–29]. The link between maintaining arousal and dopamine is usually drawn through moment-to-moment RT variability. For example, Johnson et al. [30] argue that dopamine must be involved in moment-to-moment RT variability because methylphenidate (which increases dopamine availability) reduces RT variability. Exaggerated RT variability could arise from difficulty in sustaining attention, such that RTs rise and fall over the course of a task independently of the task requirements. RT variability may increase with less dopamine availability because it becomes harder to distinguish signal from noise in the nervous system. That is, reduced availability of dopamine could lead to weaker neural signals, giving random neural noise a larger impact on target detection and response [17, 18].

Another form of variability over trials would be monotonic increases or decreases in RT. That is, dopamine availability could also be related to RT slope over the course of a task. For example, Bioulac et al. [23] found that children with ADHD decline in performance over time on a Continuous Performance Test. This finding could apply to slight attentional deficits found in nonclinical populations as well. In such populations, there may be variations in neurotransmitters that have been associated with attention generally and these variations could contribute to an increase in RT over the course of a task.

This is interesting because our previous analysis of the data in the current study showed that individuals from the general population differed in their mean RTs depending on their neurotransmitter-related genotypes [1]. Risk alleles for each genetic marker were identified in previous studies as associated with ADHD, greater RT costs, and/or reduced cognitive functioning generally. Each genetic marker was coded such that zero indicates that neither parent contributed a risk allele for these cognitive outcomes, one indicates that one parent contributed a risk allele, and two indicates that each parent contributed a risk allele. Those previous studies that used an RT attention task generally associated genetic markers with mean RTs or RT difference scores. As mentioned previously, we could find no studies in the literature on genetic associations with RT slope in reflexive attention tasks.
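The additive 0/1/2 coding described above can be made concrete with a short helper. This is a minimal sketch, assuming genotypes are stored as strings such as "5R/6R"; the function name and string format are hypothetical, and only the DAT1 risk allele (6R) is taken from this paper.

```python
"""Additive risk-allele coding (0, 1, 2) for a two-allele genotype string.

Hypothetical sketch: the "5R/6R" string format and the helper name
count_risk_alleles are illustrative assumptions, not the authors' code.
"""


def count_risk_alleles(genotype: str, risk_allele: str) -> int:
    """Return how many of the two parental alleles match the risk allele.

    0 = neither parent contributed a risk allele,
    1 = one parent did, 2 = both parents did.
    """
    alleles = [a.strip() for a in genotype.split("/")]
    if len(alleles) != 2:
        raise ValueError(f"expected two alleles separated by '/', got {genotype!r}")
    return sum(1 for a in alleles if a == risk_allele)


# DAT1 intron 8 VNTR: the six-repeat (6R) allele is treated as the risk allele.
for g in ["5R/5R", "5R/6R", "6R/6R"]:
    print(g, count_risk_alleles(g, "6R"))
```

Because the count is additive, the same column can be entered either as a numeric predictor or, as in the multilevel models reported below, as a three-level factor.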

In the current study we asked a simple question: Are RT slopes on a reflexive attention task associated with specific genes? We have genotyping information for subjects on the dopamine-related genes COMT, DAT1, DBH and DRD4. We also have information on APOE, which carries risk for Alzheimer’s disease, is related to acetylcholine, and has been related to cued orienting, even in young adults and adolescents [31–33], and to attention generally [34, 35]. It is useful to examine genes that do not fit the dopamine hypothesis of attentional deficits and yet have a logical and historical association with attention. In a previous analysis of these data for some of these genes [1], we found significant mean RT differences between individuals with different genotypes. Our goal was to determine whether there are also genetic associations with RT slopes across trials in a reflexive task. Thus, we looked for gene-by-trial interactions by modeling logarithm-transformed RT as the dependent variable (the outcome in the multilevel model). To our knowledge, such results would be the first to demonstrate these associations. Our interest in RT slope is to give a more complete picture of what is happening in the minds of individuals who struggle with attentional deficits, which could potentially lead to better environmental supports and treatments.
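To make the gene-by-trial terms concrete, the sketch below builds the fixed-effect columns for one trial of one subject. It is an illustration under stated assumptions: the column names and helper are hypothetical, the two-risk-allele group is used as the reference level (as described for the No Cue model in the Results), and ethnicity dummies, the subject-level random effects, and the estimation step itself are deliberately left out.

```python
import math

GENES = ("APOE", "COMT", "DAT1", "DBH", "DRD4")


def design_row(risk_counts: dict[str, int], trial: int, rt_ms: float):
    """Return (outcome, predictors) for one trial of one subject.

    Hypothetical sketch: each gene is dummy coded with the two-risk-allele
    group as the reference level, so it contributes indicators for 0 and 1
    risk alleles plus their products with trial number (the gene-by-trial
    slope terms). Random effects and ethnicity covariates are not included.
    """
    y = math.log10(rt_ms)  # log-transformed RT is the dependent variable
    x = {"intercept": 1.0, "trial": float(trial)}
    for gene in GENES:
        for level in (0, 1):
            d = 1.0 if risk_counts[gene] == level else 0.0
            x[f"{gene}[{level}]"] = d
            x[f"{gene}[{level}]:trial"] = d * trial
    return y, x


y, x = design_row({"APOE": 0, "COMT": 1, "DAT1": 0, "DBH": 2, "DRD4": 1}, 10, 480.0)
print(round(y, 4), x["DAT1[0]:trial"])
```

With this coding, a nonzero coefficient on a column such as `DAT1[0]:trial` means that the zero-risk group's RT slope differs from the reference (two-risk) group's slope.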

Results

All markers were in Hardy–Weinberg equilibrium (Ps > 0.90). In the following models, we were specifically interested in gene-by-trial interactions because they signal differences in response time trends across trials depending on genotype (see Table 2 for correlations between covariates, genetic markers, and the dependent variable).
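The text does not state which Hardy–Weinberg test was used. One common choice, when a marker is reduced to risk versus non-risk alleles, is a Pearson chi-square test with 1 degree of freedom; the sketch below assumes that test (an exact test is often preferred for small genotype counts), and the function name is hypothetical.

```python
import math


def hwe_chi_square(n_aa: int, n_ab: int, n_bb: int) -> tuple[float, float]:
    """Pearson chi-square test of Hardy-Weinberg equilibrium (1 df).

    n_aa, n_ab, n_bb are counts of subjects with 0, 1 and 2 copies of
    allele b (e.g., 0, 1 or 2 risk alleles). Returns (statistic, p-value).
    """
    n = n_aa + n_ab + n_bb
    p = (2 * n_aa + n_ab) / (2 * n)  # frequency of allele a
    q = 1.0 - p
    expected = [n * p * p, 2 * n * p * q, n * q * q]
    observed = [n_aa, n_ab, n_bb]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected) if e > 0)
    # Upper tail of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


print(hwe_chi_square(25, 50, 25))
```

Multiallelic VNTRs such as DAT1 and DRD4 can also be tested across all observed alleles; collapsing to risk versus non-risk, as here, is a simplifying assumption.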

Multiple models were tested for each outcome measure (cue-target condition). We began with a full model (including all predictors and covariates) and compared subsequent models for improved model fit (as determined by a reduction in the Bayesian Information Criterion; BIC). Full models used logarithm-transformed RT as the dependent variable and included the predictors of interest (APOE, COMT, DAT1, DBH, DRD4, and trial number), covariates (the standard deviation of logarithm-transformed RT, error rate, dummy-coded ethnicity, side of target, sleepiness score, and age), and the interactions of interest (the slopes, which are the five gene-by-trial interactions). The final (reduced) model eliminated all covariates except ethnicity. We also tested models removing non-significant interactions, but these did not improve model fit for any cue-target condition. However, BIC did improve, and the same gene-by-trial interactions (the slopes) were significant or nearly significant, when we used a simpler model without the covariates (the standard deviation of the log-transformed RT, target side, error rate, sleepiness, and age). For parsimony, we settled on the simpler models, which showed substantially the same results and had significantly smaller BIC values (as determined by χ2 deviance statistics). For every cue-target condition, these were the best models, and the improvements over models with all predictors (the full models) were significant at p < 0.001. Reporting Akaike’s Information Criterion (AIC) instead of BIC does not change the model-building results in any instance.
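The model-comparison quantities named above follow standard formulas. The sketch below assumes the usual definitions (BIC = −2 lnL + k ln n, AIC = −2 lnL + 2k) and computes the upper-tail probability of a chi-square deviance statistic with integer degrees of freedom using only the Python standard library. The log-likelihoods themselves are not reported here, so the usage line plugs in the reported χ2(5) = 265.32 for the No Cue comparison; function names are ours.

```python
import math


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian Information Criterion: -2 lnL + k ln(n). Smaller is better."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike's Information Criterion: -2 lnL + 2k. Smaller is better."""
    return -2.0 * log_likelihood + 2.0 * n_params


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df.

    Uses the recurrence Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) e^(-x/2) / Gamma(k/2 + 1),
    starting from Q(x; 1) = erfc(sqrt(x/2)) or Q(x; 2) = e^(-x/2).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 1:
        q, k = math.erfc(math.sqrt(half)), 1
    else:
        q, k = math.exp(-half), 2
    while k < df:
        # Work on the log scale so large x does not overflow the power term.
        q += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return q


print(chi2_sf(265.32, 5) < 0.001)
```

The five degrees of freedom match the five covariates dropped between the full and reduced models (standard deviation of log RT, error rate, target side, sleepiness, and age).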

Estimates from tested models are presented in Tables 3, 4, 5, 6, 7, 8, 9, 10 and 11 (one table per cue-target condition). Note that statistical significance was determined using log10(RT) as the dependent variable, while the parameter estimates in Tables 3, 4, 5, 6, 7, 8, 9, 10 and 11 have been retransformed to the original RT scale to aid in conceptual clarity. Additionally, Table 12 shows slopes for the different genotypes from a simple regression of untransformed RT on trial for each genotype, also to aid in conceptual clarity. The net change in RT across 200 trials can be derived by multiplying a slope in Table 12 by 200 trials. For example, for the DAT1 5R/5R genotype in the Single Dim Valid condition: mean(RT change) = 200 trials × (−0.56) ms/trial = −112 ms across 200 trials (subjects with this genotype responded more than 100 ms faster on trials at the end of the experiment than at the beginning). Our primary interest is in whether different genotypes showed non-zero slopes (change in RT across trials). While an additional slope was significant in the full model (DAT1 on Single Bright Valid), the reduced models had a lower BIC, and so they are described here.
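The Table 12 calculation can be sketched as an ordinary least-squares slope of untransformed RT on trial number within each genotype, followed by the slope × 200 conversion. This is a minimal illustration assuming a hypothetical (genotype, trial, RT) record layout with trial numbers running over the whole task. It does not reproduce the multilevel model.

```python
from collections import defaultdict


def ols_slope(x: list[float], y: list[float]) -> float:
    """Least-squares slope of y on x (simple linear regression)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def slopes_by_genotype(rows):
    """rows: iterable of (genotype, trial_number, rt_ms). Returns {genotype: ms/trial}."""
    groups = defaultdict(lambda: ([], []))
    for genotype, trial, rt in rows:
        groups[genotype][0].append(trial)
        groups[genotype][1].append(rt)
    return {g: ols_slope(xs, ys) for g, (xs, ys) in groups.items()}


def net_change(slope_ms_per_trial: float, n_trials: int = 200) -> float:
    """Approximate RT change across the task: slope times number of trials."""
    return slope_ms_per_trial * n_trials


# The DAT1 5R/5R, Single Dim Valid slope from Table 12.
print(round(net_change(-0.56), 1))
```

A negative net change means faster responding at the end of the task than at the beginning.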

Three of the reduced (final) models had significant slopes. In each case, it was the DAT1-by-trial interaction that was significant. The model with slopes and without covariates for No Cue was significantly better than the model with all predictors (the full model), χ2 (5, N = 2559) = 265.32, p < 0.001. The DAT1 × Trial interaction (the RT slope) for No Cue was the only significant slope, F(2, 2137) = 5.15, p = 0.01. There were also main effects for DAT1 (F[2, 2137] = 6.93, p = 0.001) and DRD4 (F[2, 2137] = 3.48, p = 0.03), but not for Trial (p = 0.36). The zero risk allele group (who had no DAT1 intron 8 alleles associated with ADHD) had slopes that were significantly steeper (averaging 0.11 ms more of a decrease in RT per trial) than the one risk allele group, which in turn averaged 0.08 ms more of a decrease in RT per trial than the two risk allele group. The two risk allele group had an estimate set to zero in the multilevel model (see Table 7). The slope of the zero risk allele group was significantly different from a flat slope (t[2137] = −2.40, p = 0.02), as was that of the one risk allele group (t[2137] = −2.60, p = 0.01). The differences are easier to visualize in Table 12, where untransformed RT values show that subjects with zero risk alleles (5R/5R) got about 53 ms faster over the course of the task, while those with two risk alleles (6R/6R) slowed down by approximately 17 ms.
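The slope t statistics above have roughly 2,100 residual degrees of freedom, where the t distribution is practically indistinguishable from the standard normal. As a quick consistency check (not the authors' procedure), two-sided p-values can be approximated with the complementary error function; the function name is hypothetical.

```python
import math


def two_sided_p_large_df(t: float) -> float:
    """Two-sided p-value for a t statistic, using the normal approximation.

    With about 2,100 residual degrees of freedom the t distribution is very
    close to the standard normal, so p = P(|Z| > |t|) = erfc(|t| / sqrt(2)).
    """
    return math.erfc(abs(t) / math.sqrt(2.0))


for t in (-2.40, -2.60):
    print(f"t = {t:.2f}: p = {two_sided_p_large_df(t):.3f}")
```

Rounded to two decimals, these values agree with the p-values reported for the zero and one risk allele groups.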

The final model for Single Dim Valid was significantly better than the model with all predictors, χ2 (5, N = 2559) = 212.48, p < 0.001. The DAT1 × Trial interaction was significant, F(2, 2139) = 3.89, p = 0.02. The main effects were significant for DAT1 (F[2, 2139] = 4.65, p = 0.01), COMT (F[2, 2139] = 5.35, p = 0.01), and Trial (F[1, 2139] = 15.91, p < 0.001). The zero risk allele group had slopes that were significantly steeper (averaging 0.07 ms faster per trial; see Table 10) than those of the one risk allele group, who in turn averaged 0.08 ms faster per trial than those in the two risk allele group. Slopes in the zero risk allele group were marginally different from a flat slope, t(2139) = −1.75, p = 0.08. Slopes in the one risk allele group were significantly different from a flat slope, t(2139) = −2.50, p = 0.01. As can be seen in Table 12, subjects with zero risk alleles (5R/5R) responded approximately 112 ms faster over the course of the task, while those with two risk alleles (6R/6R) were responding only 19 ms faster by the end of the task.
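For F tests with 2 numerator degrees of freedom, such as the DAT1 and DAT1 × Trial tests, the upper-tail probability has a closed form, so reported p-values can be checked without a statistics library. The sketch below is a consistency check, not the authors' software; the function name is ours.

```python
import math


def f_sf_df1_2(f: float, df2: int) -> float:
    """Exact upper-tail probability P(F > f) for an F(2, df2) statistic.

    For numerator df = 2 the survival function has the closed form
    (1 + 2f / df2) ** (-df2 / 2), which tends to exp(-f) as df2 grows.
    """
    if f <= 0:
        return 1.0
    # log1p keeps the calculation accurate when 2f / df2 is small.
    return math.exp(-(df2 / 2.0) * math.log1p(2.0 * f / df2))


reported = [("DAT1 x Trial", 3.89, 2139), ("DAT1", 4.65, 2139)]
for label, f, df2 in reported:
    print(f"{label}: F(2, {df2}) = {f:.2f}, p = {f_sf_df1_2(f, df2):.3f}")
```

With df2 above 2,000, the result is essentially exp(−F), which makes it easy to read off the approximate p-value for any of the F(2, ·) tests reported in this section.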

The final model for Single Dim Invalid was significantly better than the model with all predictors, χ2 (5, N = 2513) = 352.66, p < 0.001. The main effects were significant for DAT1 (F[2, 2099] = 3.18, p = 0.04), COMT (F[2, 2099] = 5.78, p = 0.003), DBH (F[2, 2099] = 4.14, p = 0.02), and DRD4 (F[2, 2099] = 5.34, p = 0.01). The main effect for Trial was not significant (p = 0.20). The DAT1 zero risk allele group had slopes that were significantly steeper (decreasing on average 0.13 ms more per trial than those of the one risk allele group, whose slopes in turn decreased 0.06 ms more per trial than those of the two risk allele group). The zero risk allele group had slopes that were significantly different from zero (t[2099] = −2.36, p = 0.02), but the one risk allele group was only marginally different from a flat slope, t(2099) = −1.75, p = 0.08. Subjects with zero risk alleles (5R/5R) got about 87 ms faster over the course of the task, while those with two risk alleles (6R/6R) were responding about 17 ms more slowly by the end of the task.

Discussion

One can think of variability in RT in an attention task across repeated trials in two ways: (1) random variability from trial to trial that fluctuates around some mean RT, and (2) a systematic RT slope across trials that tends to make later RTs slower (or faster) on average than earlier RTs. Because it is common to study the moment-to-moment variability of behavioral or physiological measures between genotypes, it is especially interesting to find that genotype also predicts the latter kind of variability in RT in a cued orienting task: the linear component of changes in RT across trials (RT slope). This was true even after controlling for sleepiness, age, self-reported ethnicity, error rate, and moment-to-moment variability, although the statistical analysis led us to settle on the simpler models without these covariates. DAT1 was the only gene in the simpler models that interacted with trial number in predicting RT slopes. DAT1 produces a protein involved in the transport of dopamine from the synapse. We note that our task produced the typical costs and benefits of a reflexive attention task [1, 36]. Therefore, our finding is intriguing because it implies that what matters may not be the nature of the task per se (i.e., whether or not it is designed to involve effortful/sustained attention) but possibly the fact that the task extends over a long period of time.

For DAT1, the mean slope increased (subjects improved less or got slower) with increasing numbers of risk alleles in the three outcome measures that showed significant effects. The significant outcome measures were conditions in which there was no cue and in which the single cues were dim (both valid and invalid). The conditions that were not significantly associated with DAT1 are also interesting. These include the Dual Asymmetric Bright (the target appeared near the brighter of two simultaneous cues) and Dual Asymmetric Dim conditions (the target appeared near the dimmer of two simultaneous cues), and the Neutral Both Bright and Neutral Both Dim conditions (the target appeared near either of two identical cues). The Single Bright Valid and Invalid cues were also not significant. This all suggests that it is not validity that distinguishes genotype groups (because subjects with two risk alleles do not respond more quickly across trials with either valid or invalid pre-cues). It may be, however, that cue luminance matters (because those with two risk alleles do not respond more quickly across trials with single, dim cues) and that cue number matters (because dual cues did not distinguish between subjects in the different genotype groups). The risk allele for our genetic marker on DAT1 (six repeats of a VNTR) increases production of the dopamine transporter so that the dopaminergic signal is often terminated too soon [37].

One possible explanation for genetic influence in the no-cue and single, dim cue conditions is that bright cues are too salient to distinguish between genotype groups (i.e., all groups performed at ceiling) because the range of dopamine availability in the general population is sufficient for this performance. Another possibility is that adding a second cue makes subjects perform more similarly to each other across trials than a single cue does, again because dopamine availability is sufficient with two cues. In a follow-up analysis with DAT1, the number of cues showed a slight statistical trend toward association with RT slope (p = 0.15), with steeper improvements in RT across trials for the no risk allele (5R/5R) group on one-cue trials than on two-cue trials. This finding is worth investigating in a future study because it raises the possibility that RT slopes across trials are approximately equal across genotype groups when there are two cues (perhaps because attention is divided), and among subjects carrying any copy of the risk allele (6R), whereas subjects with no risk alleles respond increasingly quickly across trials when there is only one cue. In other words, subjects respond more similarly across the course of the task when there are two cues, but single cues reflexively capture attention and require a disengage step, which may become more effortful for some subjects over the course of the task.

Why would DAT1 genotypes be associated with systematic differences in the rate of responding across trials? There are three possible outcomes when examining the interaction between gene and trial in predicting logarithm-transformed RT: (1) subjects can respond more quickly across trials (negative RT slope as trial number increases), (2) subjects can respond equally quickly across trials, or (3) subjects can respond more slowly across trials (positive RT slope as trial number increases). Keep in mind also that a significant difference in RT slope between genotype groups does not always entail a negative RT slope in one group and a positive RT slope in another. It could simply mean that the RT slope was less negative in one group than in another, while both groups showed negative slopes. In all cases, the significant effects consisted of less negative or more positive RT slopes across trials associated with more risk alleles (as defined by association with ADHD). Recall that possession of more copies of the risk allele on DAT1 would tend to result in faster transport of dopamine from the synapses. The nature of the behavioral effect is that subjects tend to slow down (or improve less) across the course of the experiment, especially with the 5R/6R and 6R/6R genotypes. One possible explanation for this effect, then, is that an optimal level of dopamine is required to maintain alertness [22, 28, 38–42]. This is equivalent to saying that the association between DAT1 and RT slope may be related to vigilance or sustained attention, even though this is a reflexive attention task. There is a large literature on genetic effects of dopamine-related genes on sustained attention [22, 28, 41, 42].

In the case of the current results with the reflexive attention task, waning vigilance across the course of the experiment would tend to lead to gradually longer target detection times and longer RTs on average, despite the reflexive nature of the cue-target trials. This hypothesis would, of course, predict that possessing more DAT1 risk alleles would cause a general slowing across trials in all conditions. Instead, this effect was observed in only three of nine conditions. It is possible that limited statistical power prevented detection of the effect in the other conditions. Nevertheless, dopamine availability, cue luminance, and cue number could all be important. We have provided evidence that there are genetic influences on RT trends over the course of a reflexive attention task, and not simply on moment-to-moment RT variability.

Of course, chance could also explain our results. Indeed, the biggest limitation of this study is its sample size, which, all other things being equal, tends to make results less replicable. While some authors caution against using samples smaller than 500 to explore interaction effects [43], others have reported sufficient power and stability for exploring cross-level interactions in multilevel models with 30–50 level-2 units (individual subjects in this study) [44, 45] and at least 30 level-1 units (the trials in this study) per level-2 unit. Our study certainly met the latter criterion. The best way to address this question is to replicate this study.

Another limitation could be the heterogeneity of our sample. While we see advantages to including multiple ethnicities and ages, population stratification becomes a more likely artifact as the heterogeneity of the sample increases. This argues for a replication with a larger overall sample and more balanced ethnic subsamples, which could provide more certainty about the generalizability of the results. It would also be advantageous to explore associations with more genetic markers, including those associated with neurotransmitters other than dopamine.

Conclusions

Over the course of a reflexive visual attention experiment with repeated trials of the same conditions, some subjects fail to respond more quickly to identical stimuli, but others respond more quickly as the task progresses. This is interesting because it suggests the possibility of similar biological mechanisms for sustaining attention to exogenous and endogenous cues [9, 46, 47]. However, there are other possibilities. Responding more slowly to later presentations of the same stimulus could indicate, for example, fatigue, a build-up of trial-to-trial inhibition, or greater interference from immediately preceding trials. Responding more quickly to the same stimulus in later presentations could be considered a simple form of learning. The subject’s task was simply to make a choice response (right versus left) to a particular target that appeared across trials either unaccompanied by a temporally preceding cue, or accompanied by such a cue that could appear spatially near the target’s position or contralaterally across the visual field from the location of the target.

We asked whether individual differences in these RT slopes in response to the cue-target manipulation were associated with genetic markers for five genes: COMT, DAT1, DBH, DRD4, and APOE. These genes were chosen because they have been shown to be related to various aspects of visual attention in previous research, and because in some cases, there is a plausible biological pathway from the gene to the phenotypes that we used to study visual orienting. DAT1 genotype was associated with variations in these slopes in three different cue-target conditions. The mean slope increased with increasing numbers of risk alleles. A larger positive mean slope implies that the difference in RT between early trials with a given stimulus and later trials with that same stimulus was greater (greater RT slowing) for subjects carrying more of the DAT1 risk alleles. The risk allele was determined based primarily on previous research on related sustained attention tasks.

Previous work, including our own, has shown associations between mean RT in different conditions and various genes, but such associations are like snapshots of genotype/phenotype relations in the sense that the phenotype is not extended in time. It reflects a static aspect of visual attention. In contrast, the phenotypes that we examined were by definition extended in time because they were defined by the slope of RT across repeated presentations of the same stimulus. It was primarily DAT1 that was associated with these temporally extended phenotypes. The phenotypes reflect how speeded attentional choices change over a roughly 20-min period filled with repetitions of multiple trial types. This temporally extended phenotype could reflect the ability to maintain attentional arousal, the ability to learn to recognize the spatiotemporal configurations of the various trial types in order to respond more discriminatively when they appear again, or other processes that systematically alter RTs across an extended period of time. Our results suggest that the DAT1 gene is a promising candidate for understanding the underlying pathways involved in these more temporally extended aspects of either reflexive or sustained visual attention.

Methods

Subjects and data set

This study was approved by Rice University’s Institutional Review Board for Human Subjects in Research and conducted in accordance with ethical guidelines established by the Office for Human Research Protections at the US Department of Health and Human Services. We tested subjects aged 18 to 61 years from the general population. Most of the subjects (n = 107) were Rice University students, and the remaining subjects (n = 54) were recruited from the surrounding community by posting to neighborhood email groups (see Table 13 for a comparison of these two recruiting sources and Table 14 for demographics). Prior to completing the visual orienting task, subjects signed a consent form and completed an intake questionnaire that included questions on basic demographics, tobacco use, sleepiness (using the Epworth Sleepiness Scale) [48], and attentional disorders affecting the subject and their biological relatives. Subjects provided a saliva sample from which we extracted DNA to obtain information on genotypes for five genetic markers (see Table 1).

A subject’s data were removed from the data set if he or she had an error rate over 10 % on the behavioral task, could not be classified into a single ethnicity, or reported a history of a serious neurological disorder. Of the 161 subjects, eight were excluded for having an error rate over 10 %. This cutoff was based on the distribution of error rates (including catch trial errors). High error rates could indicate that subjects were not motivated or did not understand the task. The error rate for the remaining subjects averaged 2 % (SD = 0.02). One subject was excluded for not being classifiable to a single ethnicity. However, eleven individuals reporting dual ethnicity were classifiable based on the pattern of their genotypes. We calculated the proportion of each single-ethnicity group having each genotype. Then we looked at the genotypes of those individuals reporting two ethnicities. If there was a pattern (e.g., if a person’s genotype matched the most common genotype for Hispanics on 4 out of 5 genes) and one of the two ethnicities they reported was Hispanic, then they were coded as Hispanic. We cross-checked these assignments against the best distinguishing genotype for each ethnicity (e.g., if a person had 5R/5R on DAT1, then he/she was 83 % likely to be Black). No individuals reported more than two ethnicities. Three individuals were excluded for reporting prior strokes or seizures. In addition, four subjects were excluded for experimenter error. The final data set contained data from 145 individuals who had complete behavioral data.
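The dual-ethnicity procedure above can be sketched as a modal-genotype match. This is a hypothetical illustration, not the authors' code: the data layout and function names are ours, the 4-of-5 threshold comes from the example in the text, the rule that a tie between the two reported ethnicities leaves the subject unassigned is our assumption, and the cross-check against the single best distinguishing genotype is not included.

```python
from collections import Counter, defaultdict

# Hypothetical data layout: each subject is (reported_ethnicities, genotypes),
# where genotypes is a tuple with one genotype string per genetic marker.


def modal_genotypes(single_ethnicity_subjects):
    """For each ethnicity, find the most common genotype at every marker."""
    by_group = defaultdict(list)
    for ethnicities, genotypes in single_ethnicity_subjects:
        (group,) = ethnicities  # raises if more than one ethnicity is given
        by_group[group].append(genotypes)
    modes = {}
    for group, rows in by_group.items():
        n_markers = len(rows[0])
        modes[group] = tuple(
            Counter(row[m] for row in rows).most_common(1)[0][0] for m in range(n_markers)
        )
    return modes


def assign_dual_ethnicity(reported, genotypes, modes, min_matches=4):
    """Return the reported ethnicity whose modal genotypes match best, or None.

    Assumption: a subject is assigned only if one reported group matches on at
    least min_matches markers (e.g., 4 of 5) and the other reported group
    does not tie it.
    """
    scores = {
        group: sum(g == m for g, m in zip(genotypes, modes[group]))
        for group in reported
        if group in modes
    }
    if not scores:
        return None
    best = max(scores.values())
    winners = [g for g, s in scores.items() if s == best]
    if best >= min_matches and len(winners) == 1:
        return winners[0]
    return None
```

Subjects for whom the function returns None correspond to those who could not be classified into a single ethnicity.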

Subjects reporting ADHD were not automatically excluded because attention symptoms exist in a continuum across the general population [49–51]. However, models including and excluding these subjects were compared to verify that these subjects were not driving the results. We determined that the same statistical decisions would have been reached except for slight differences in p values obtained, so we retained the subjects reporting ADHD. Eight subjects in the final data set reported a diagnosis of ADHD, four of whom were medicated (one additional subject reporting ADHD was excluded for having fewer than 200 trials). However, fewer than half of these eight subjects had the high risk genotype on any given genetic marker so we reasoned that these subjects could not drive any results consistent with an analysis by risk allele. Ninety-four of 107 subjects in the Rice University sample had data that met inclusion criteria (45.74 % male) as did 51 of the 54 community subjects (41.18 % male). Overall, 90.06 % of the subjects had data that was not excluded. The mean age for the university sample was 20.22 years (range 18–38 years), and for the community sample the mean age was 35.45 years (range 18–61 years).
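The retention figures above can be checked directly from the reported counts. The sketch below uses only numbers stated in the Methods (recruitment by source, inclusion by source, and the four listed exclusion categories); the variable names are ours.

```python
# Sample accounting using counts reported in the Methods text.
recruited = {"university": 107, "community": 54}
included = {"university": 94, "community": 51}
exclusions = {
    "error rate over 10 %": 8,
    "not classifiable to a single ethnicity": 1,
    "prior stroke or seizure": 3,
    "experimenter error": 4,
}

n_recruited = sum(recruited.values())
n_included = sum(included.values())

retention = {site: 100 * included[site] / recruited[site] for site in recruited}
overall = 100 * n_included / n_recruited

print(n_recruited, n_included, n_recruited - sum(exclusions.values()))
print({site: round(pct, 2) for site, pct in retention.items()}, round(overall, 2))
```

The 16 listed exclusions account exactly for the difference between 161 recruited and 145 retained subjects, and 145/161 gives the 90.06 % retention rate.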

For behavioral data collection, subjects completed a choice response task (responding with a button press to indicate either a left or right target). Subjects viewed a 1024 × 768 pixel CRT monitor with a background luminance of 0.08 cd/m². A fixation cross, centered on the monitor, was always visible. Subjects were instructed to fixate the central cross and to maintain fixation throughout data collection. Before beginning data collection trials, subjects were dark adapted and completed 20 practice trials.

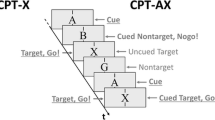

One or two pre-cues were flashed for 67 ms. On all trials except “catch” trials (on which no target appeared), there was an 83 ms gap between the offset of the cue(s) and the onset of the target. The target remained on display until the subject made a key press or for at most 1000 ms (see Fig. 2). Subjects were asked to respond as quickly as possible while maintaining accuracy, pressing ‘A’ (on the left side of the keyboard) to indicate a target to the left of fixation or ‘L’ (on the right side of the keyboard) to indicate a target to the right. On catch trials, subjects were instructed to withhold responding. After the subject responded (or the trial timed out), there was a variable delay (1.3–1.8 s) before the next trial began. The task did not provide feedback.

Representation of stimuli. The pre-cue stimulus flashed on for 67 ms and then off for 83 ms. Targets were on the same side as the single cue 50 % of the time. The target remained on display until a response was made, but for no longer than 1000 ms. Dual cues were identical except that two cues appeared simultaneously in the same locations in which the single cues appeared.
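The timing above implies a cue-to-target onset asynchrony of 67 + 83 = 150 ms. A minimal sketch of the event onsets for one target-present trial follows; the function name is hypothetical, and drawing the 1.3–1.8 s post-response delay from a uniform distribution is an assumption, since the text gives only its range.

```python
import random

CUE_MS = 67               # cue duration (from the Methods)
GAP_MS = 83               # cue offset to target onset (from the Methods)
MAX_TARGET_MS = 1000      # target stays up until a response, at most 1000 ms
ITI_RANGE_S = (1.3, 1.8)  # variable delay before the next trial


def trial_schedule(rt_ms=None, rng=random):
    """Event onsets in ms, measured from cue onset, for one target-present trial.

    rt_ms: response time measured from target onset, or None for no response.
    Assumption: the inter-trial delay is drawn uniformly from ITI_RANGE_S.
    """
    target_on = CUE_MS + GAP_MS                 # 150 ms cue-to-target asynchrony
    if rt_ms is None or rt_ms > MAX_TARGET_MS:
        target_off = target_on + MAX_TARGET_MS  # no response in time: trial times out
    else:
        target_off = target_on + rt_ms          # key press ends the target display
    iti_ms = rng.uniform(*ITI_RANGE_S) * 1000
    return {
        "cue_on": 0,
        "cue_off": CUE_MS,
        "target_on": target_on,
        "target_off": target_off,
        "next_trial": target_off + iti_ms,
    }
```

For example, a 400 ms response ends the target display 550 ms after cue onset, and the next trial starts between 1.85 s and 2.35 s after cue onset.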

In a traditional Posner-like reflexive orienting task, there are only single pre-cues (except for a neutral baseline condition, which uses two equiluminant cues). To these we added unequal-luminance (“asymmetric”) dual-cue trials [52] to examine the ability to benefit from either of two simultaneous pre-cues of possibly different salience. As in a traditional Posner-like task, the cues could be valid (i.e., the target subsequently appeared ipsilateral to the cue location) or invalid (i.e., contralateral). For dual asymmetric cues, the target could appear near either the brighter or the dimmer of the two cues. Only one target was ever presented, and we averaged the RTs for left and right target presentations. With two cue luminances, there were nine different pre-cue conditions preceding the appearance of the target. These nine conditions varied in cue number (0, 1, or 2) and luminance (bright or dim); in addition, dual cues could be equiluminant or asymmetric. In this task the pre-cues were uninformative because the probability of the target appearing near where the pre-cue (or brighter cue) had appeared was 50 %.

To refer to these cue-target conditions, we gave each a unique name. Single Dim Valid indicates a single cue of the dimmer luminance preceding an ipsilateral target; conversely, Single Dim Invalid indicates a dim cue followed by a contralateral target. There were corresponding valid and invalid configurations for the Single Bright cues. We also included Neutral Bright and Neutral Dim cues, on which identical bright or dim cues were presented simultaneously on both sides of the fixation cross. As mentioned previously, these spatially neutral cues provided baseline measures and were used to calculate alerting effects (that is, the reduction in RT that results from the cue signaling when the target is about to appear). When dual asymmetric cues were presented, the target could appear near either the brighter (Dual Asymmetric Bright) or the dimmer cue (Dual Asymmetric Dim). Targets could also appear uncued (the No Cue condition). RT was measured from the onset of the target. Finally, there was a tenth condition (Catch trials) for which there are no RT data, because no target appeared and subjects were instructed to withhold responding. Each of the 10 conditions (nine target-present plus one target-absent) was presented 20 times (10 times with a left target and 10 times with a right target), yielding 200 trials.
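The factorial structure of the 200-trial session can be sketched as follows. The condition labels come from the text; the fully randomized trial order and the `build_trial_list` helper are assumptions for illustration, since this section does not say how trials were ordered.

```python
import random

CONDITIONS = [
    "No Cue",
    "Single Bright Valid", "Single Bright Invalid",
    "Single Dim Valid", "Single Dim Invalid",
    "Neutral Bright", "Neutral Dim",
    "Dual Asymmetric Bright", "Dual Asymmetric Dim",
    "Catch",  # target absent: subjects withhold responding, so no RT is recorded
]


def build_trial_list(reps_per_side=10, seed=None):
    """Each condition x side (left/right) repeated reps_per_side times, then shuffled.

    For Catch trials, 'side' only mirrors the 10/10 left/right split stated in the text.
    The random ordering is an assumption, not a documented detail of the task.
    """
    trials = [
        {"condition": condition, "side": side}
        for condition in CONDITIONS
        for side in ("left", "right")
        for _ in range(reps_per_side)
    ]
    random.Random(seed).shuffle(trials)
    return trials
```

Because each single-cue luminance has equal numbers of Valid and Invalid trials (and each asymmetric pair has equal Bright and Dim trials), the cue predicts the target side only at chance, which is what makes the pre-cues uninformative.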

Subjects were told (1) that the cues did not predict the target’s location and (2) to ignore the cues as much as possible. Subjects completed all trials within one session with pauses as necessary.

The brighter and dimmer cue luminances were 11.7 and 2.0 cd/m², respectively. The target (a square) always had a luminance of 15.5 cd/m². The centermost edge of the target appeared 5.5° to either side of the fixation cross. The cues were shaped like the letter X, measured 0.8° (width) × 1.0° (height), and appeared 7.3° (innermost edges) to the left and right of the display’s center.
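The stimulus dimensions above are given in degrees of visual angle. Translating them into physical sizes on the screen uses the standard relations size = 2·d·tan(θ/2) for an extent centered on the line of sight (a close approximation for small stimuli off-axis) and offset = d·tan(θ) for an eccentricity from fixation. The viewing distance is not stated in this section, so the 57 cm in the usage note is purely a hypothetical value, as are the function names.

```python
import math


def deg_to_cm(theta_deg, distance_cm):
    """On-screen size of an extent subtending theta_deg, centered on the line of sight."""
    return 2 * distance_cm * math.tan(math.radians(theta_deg) / 2)


def eccentricity_to_cm(ecc_deg, distance_cm):
    """On-screen distance from fixation to a point at ecc_deg eccentricity."""
    return distance_cm * math.tan(math.radians(ecc_deg))
```

At a hypothetical 57 cm, `eccentricity_to_cm(5.5, 57)` is about 5.49 cm (the target's inner edge) and `deg_to_cm(1.0, 57)` is about 0.99 cm (the cue height).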

Genetic methods

Saliva was collected using Oragene-250 kits (DNA Genotek, Kanata, Ontario, Canada). Genetic assays were performed to identify genotypes at known SNPs (see Table 15) using polymerase chain reaction (PCR) amplification. The PCR products were purified and then loaded onto an Applied Biosystems 3730xl DNA analyzer, and the results were read using Mutation Surveyor software (SoftGenetics, PA, USA). For more details, please see our earlier paper [1].

Genetic analysis of the VNTR (DAT1 intron 8) was performed using fluorescently labeled PCR products (see Table 13). As with the SNPs, the amplified VNTR products were analyzed on an Applied Biosystems 3730xl DNA analyzer, and GeneMapper 4.0 software (Applied Biosystems) was used to assign alleles.

The potential for spurious associations in genetic studies due to population stratification is a commonly discussed problem [53]. Stratification in this case is an artifact that occurs when systematic differences in a phenotype that have nothing to do with the genetic marker under study nonetheless produce an association that appears statistically significant. Such spurious relationships are possible because ethnicity is related to genetic ancestry. Studies are more at risk for stratification artifacts when subjects from various ethnic groups (1) are combined in the same analysis, (2) differ on a phenotype, and (3) simultaneously differ, for unrelated reasons, in the frequencies of target genotypes. In a particular study it is often impossible to determine whether ethnic-group differences in a phenotype are due to genetic differences. However, population stratification is often suspected when a finding fails to replicate. To address potential stratification, we used a strategy similar to that recommended by Hutchison et al. [54]: we used self-reported ethnicity as a proxy for genetic subpopulation and entered it as a covariate in the statistical model.
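Entering self-reported ethnicity as a covariate requires dummy (indicator) coding, with one level held out as the reference category. A minimal sketch follows; the category labels and the choice of reference level are hypothetical, since this section does not specify them.

```python
def dummy_code(values, reference):
    """Return (levels, rows): one 0/1 indicator column per non-reference level."""
    levels = sorted(set(values) - {reference})
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows
```

Each non-reference column's coefficient is then interpreted as the difference from the reference group, holding the other predictors constant.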

We examined markers on five genes: COMT, DAT1, DBH, DRD4, and APOE. Each of the first four has three possible genotypes formed from two alleles, with one copy inherited from each parent (e.g., AA, AG, and GG for COMT). All genes except APOE were coded ordinally so that “0” represents no risk alleles, “1” represents one risk allele, and “2” represents two risk alleles. APOE was coded so that “0” indicates possession of a protective allele, “1” represents the most common “normal” variant, and “2” represents possession of one or two risk alleles.
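The ordinal 0/1/2 coding amounts to counting copies of the risk allele in each genotype. In the sketch below, treating 6R as the risk allele of the DAT1 intron 8 VNTR follows the Abstract; the COMT risk allele in the usage note is an arbitrary placeholder, and the function name and genotype string formats are hypothetical.

```python
def risk_allele_count(genotype, risk_allele, sep="/"):
    """Ordinal 0/1/2 code: number of copies of `risk_allele` in a two-allele genotype.

    Accepts separator-delimited strings such as '5R/6R' or two-letter SNP strings such as 'AG'.
    """
    alleles = genotype.split(sep) if sep in genotype else list(genotype)
    if len(alleles) != 2:
        raise ValueError(f"expected exactly two alleles, got {genotype!r}")
    return sum(a.strip() == risk_allele for a in alleles)
```

For example, `risk_allele_count("5R/6R", "6R")` returns 1 and `risk_allele_count("6R/6R", "6R")` returns 2; with a placeholder risk allele "G", the COMT genotypes AA, AG, and GG map to 0, 1, and 2.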

The coding for APOE has a slightly different interpretation because APOE genotype is defined by two markers (a haplotype), whereas each of the other genes was represented by a single marker. However, the risk for cognitive deficits appears to be additive and can still be tested for linear effects using similar coding. From the two APOE SNPs we created three groups (ε2/ε3, ε3/ε3, and any ε4), based on the literature. For example, Hubacek et al. [55] did not consider individuals with the ε2/ε4 genotype, since one allele carries risk for cognitive deficits (even in middle-aged adults without Alzheimer’s disease) [31] and the other provides protection against cognitive deficits [56]. In our data set two individuals had the ε2/ε4 genotype and were therefore excluded from analyses of this gene. Other genotypes, such as ε2/ε2, are rare and did not occur in our data set. The first group (ε2/ε3) represents those with a protective allele, the second group (ε3/ε3) those with typical risk, and the third group those with at least one risk allele (that is, one or two ε4 alleles), since even one risk allele carries risk for cognitive deficits. These five genes (COMT, DAT1, DBH, DRD4, and APOE) were entered as potential predictors of RT slope for each cue-target condition in turn.
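The three-level APOE grouping, including the exclusion of ε2/ε4 carriers, can be written as a small lookup. This sketch assumes genotypes are stored as strings such as "ε3/ε4" or "e3/e4"; the function name is hypothetical.

```python
def apoe_group(genotype):
    """Three-level APOE code: 0 = e2/e3 (protective), 1 = e3/e3 (typical),
    2 = any e4 (e3/e4 or e4/e4). Returns None for e2/e4 (excluded) and for
    genotypes outside the scheme, such as e2/e2."""
    normalized = genotype.lower().replace("ε", "e").replace("ɛ", "e")
    alleles = sorted(a.strip() for a in normalized.split("/"))
    if alleles == ["e2", "e4"]:
        return None  # one protective and one risk allele: excluded
    if "e4" in alleles:
        return 2     # at least one risk allele
    if alleles == ["e3", "e3"]:
        return 1     # most common "normal" variant
    if alleles == ["e2", "e3"]:
        return 0     # protective allele
    return None      # e.g. e2/e2, which did not occur in the data set
```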

Statistical analysis

Prior to the main analyses, we determined that the assumption of normality was not met (the RT variables had significant positive skew), so raw RT values were transformed using a base-10 logarithm. We excluded incorrect (wrong-side) trials and trials with out-of-range RTs, which reduced the impact of attentional lapses on slope.
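The trial filtering and log transform can be sketched as follows. The 1000 ms upper bound is the maximum target duration given in the Methods; the 100 ms lower bound (for anticipatory responses) is a hypothetical value, because this section does not state the exact RT range used. The trial dict keys are likewise assumptions.

```python
import math


def preprocess(trials, min_rt_ms=100, max_rt_ms=1000):
    """Keep correct trials with an RT in [min_rt_ms, max_rt_ms]; add log10(RT).

    min_rt_ms is a hypothetical cutoff; max_rt_ms matches the 1000 ms target timeout.
    """
    kept = []
    for t in trials:
        if not t["correct"] or t["rt_ms"] is None:
            continue  # wrong-side responses and trials without an RT
        if not (min_rt_ms <= t["rt_ms"] <= max_rt_ms):
            continue  # out-of-range RT
        kept.append({**t, "log_rt": math.log10(t["rt_ms"])})
    return kept
```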

Recall that 16 subjects had their data removed for the reasons described under Subjects and Data Set. In addition, some subjects had missing genetic information for a particular marker because genotypic information could not be obtained from their saliva sample. The number of subjects with missing genotypes was 12 for APOE, two for COMT, seven for DAT1, three for DBH, and 13 for DRD4. Subjects missing genotyping data for a particular marker were excluded from the analysis of that marker.

We used a multilevel modeling approach in SPSS (IBM, 2012) with the MIXED command, following a backward-elimination strategy similar to backward stepwise regression. There were nine final models (one for each cue-target condition). Final models were selected by comparison with an empty model containing no predictors and with simpler models. Essentially, the multilevel model fits a line for each person through the log-transformed RTs for a given cue-target condition, and the interaction between trial and genotype indicates whether the slope differs by genotype. Epworth sleepiness scores, age, and ethnicity were entered as covariates.
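The intuition of fitting a line per person and asking whether slopes differ by genotype can be illustrated with a two-stage approximation: fit an ordinary least-squares slope of log RT on trial number for each subject, then average those slopes within each genotype code. This is a deliberately simplified stand-in, not the SPSS MIXED analysis: it omits the covariates, the repeated-effect covariance structure, and the pooling across subjects that a multilevel model provides. The data layout and function names are hypothetical.

```python
from statistics import mean


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def slopes_by_genotype(data):
    """data: {subject_id: (genotype_code, [(trial, log_rt), ...])}.

    Stage 1 fits one slope per subject; stage 2 averages slopes per genotype code.
    A negative mean slope means RT decreased (improved) across trials.
    """
    groups = {}
    for code, observations in data.values():
        trials, log_rts = zip(*observations)
        groups.setdefault(code, []).append(ols_slope(trials, log_rts))
    return {code: mean(slopes) for code, slopes in groups.items()}
```

In the actual model, the corresponding quantity is the trial × genotype interaction, estimated jointly with the other predictors rather than from separately fitted lines.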

We examined slope using mixed (multilevel) modeling, which accounts for the nested nature of the data (i.e., trials are nested within individuals). We used this method primarily to model the cross-level interaction of interest, between trial (level 1) and genotype (level 2), which captures differences in slope [57]. The intraclass correlation indicated that 4.39 % of the variance was attributable to differences between individuals (level 2); although this is relatively small, multilevel modeling still appropriately handles the nesting of trials within individuals. We entered all five genetic markers, sleepiness, age, side of target (right or left), trial number, the standard deviation of log10-transformed RT, error rate, and ethnicity (dummy coded), as well as the interactions between trial number and the genetic markers, as predictors of RT. These were all fixed effects, and trial was entered as a repeated effect. The output of interest when examining RT slopes over the course of the task is the interaction between the genetic marker and trial number.
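In standard multilevel notation (not defined in the article itself), with \(\tau_{00}\) the between-individual (level-2) intercept variance and \(\sigma^2\) the within-individual (level-1) residual variance, the intraclass correlation from a model without predictors is

$$\rho = \frac{\tau_{00}}{\tau_{00} + \sigma^{2}}$$

so \(\rho = 0.0439\) means that about 4.39 % of the variance in the outcome lies between individuals and about 95.61 % lies within individuals, across trials.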

Notes

The parameter estimates shown in Tables 3–11 have been retransformed. The statistical models were run on the log(10)-transformed RT data. For ease of exposition, we retransformed each parameter estimate so that its magnitude would be interpretable on the original, untransformed scale of RT in ms. Note that it is not appropriate simply to antilog(10) these parameter estimates; that is, it is not appropriate to compute the retransformed value of the parameter \(\beta_1\) as \(10^{\beta_1}\). Instead, we used the formula shown below to retransform these parameter estimates [58]. Given a parameter \(\beta_1\) estimated with log(10)-transformed RT as the outcome variable, the retransformed value of this parameter estimate is:

$$b_{1} = 10^{\widehat{\log_{10}(RT)}}\left(\beta_{1}\,\frac{1}{N}\sum_{i=1}^{N} 10^{e_{i}}\right)$$

where \(b_1\) is the retransformed parameter estimate, \(\beta_1\) is the original estimate obtained using log(10)-transformed RT, \(\widehat{\log_{10}(RT)}\) is the mean of the log(10)-transformed RT values, \(e_i\) is the residual for the i-th of the N observations, and the averaging expression after \(\beta_1\) is the mean of the antilogged residuals.
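The retransformation above (based on Duan's smearing estimate [58]) can be computed directly from the model output. This minimal sketch follows the formula as printed; it assumes the caller supplies the log10-transformed RTs and the model residuals on the log10 scale, and the function name is hypothetical.

```python
from statistics import mean


def retransform(beta, log_rt, residuals):
    """b = 10**mean(log10 RT) * (beta * mean(10**e_i)), following the formula above.

    beta:      coefficient estimated with log10(RT) as the outcome
    log_rt:    log10-transformed RT values
    residuals: model residuals on the log10 scale
    """
    smearing = mean(10 ** e for e in residuals)  # mean of the antilogged residuals
    return 10 ** mean(log_rt) * (beta * smearing)
```

With residuals of zero and a mean log10 RT of 2 (100 ms), a coefficient of 0.001 retransforms to 0.1 ms, whereas naively computing \(10^{0.001}\) would give about 1.0023, a number on an entirely different scale.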

The names for the various conditions are described in the “Methods”.

References

Lundwall RA, Guo D-C, Dannemiller JL. Exogenous visual orienting is associated with specific neurotransmitter genetic markers: a population-based genetic association study. PLoS One. 2012;7(2):e30731.

Posner MI, Walker JA, Friedrich FJ, Rafal RD. Effects of parietal injury on covert orienting of attention. J Neurosci. 1984;4(7):1863–74.

Conners CK. Continuous performance test computer program (version 2.0). North Tonawanda: Multi-Health Systems; 1992.

Robertson IH, Manly T, Andrade J, Baddeley BT, Yiend J. ‘Oops!’: performance correlates of everyday attentional failures in traumatic brain injured and normal subjects. Neuropsychologia. 1997;35:747–58.

Beane M, Marrocco RT. Norepinephrine and acetylcholine mediation of the components of reflexive attention: implications for attention deficit disorders. Prog Neurobiol. 2004;74(3):167–81.

Jones SAH, Butler B, Kintzel F, Salmon JP, Klein RM, Eskes GA. Measuring the components of attention using the Dalhousie Computerized Attention Battery (DalCAB). Psychol Assess. 2015. doi:10.1037/pas0000148

Natale E, Marzi CA, Girelli M, Pavone EF, Pollmann S. ERP and fMRI correlates of endogenous and exogenous focusing of visual-spatial attention. Eur J Neurosci. 2006;23(9):2511–21.

Visintin E, De Panfilis C, Antonucci C, Capecci C, Marchesi C, Sambataro F. Parsing the intrinsic networks underlying attention: a resting state study. Behav Brain Res. 2015;278:315–22.

Giordano AM. On the temporal dynamics of covert attention. Doctoral Dissertation. New York University. 2008.

Chica AB, Bartolomeo P, Lupiáñez J. Two cognitive and neural systems for endogenous and exogenous spatial attention. Behav Brain Res. 2013;237:107–23.

Bellgrove MA, Hawi Z, Kirley A, Gill M, Robertson IH. Dissecting the attention deficit hyperactivity disorder (ADHD) phenotype: sustained attention, response variability and spatial attentional asymmetries in relation to dopamine transporter (DAT1) genotype. Neuropsychologia. 2005;43:1847–57.

Johnson KA, Kelly SP, Bellgrove MA, Barry E, Cox M, Gill M, Robertson IH. Response variability in attention deficit hyperactivity disorder: evidence for neuropsychological heterogeneity. Neuropsychologia. 2007;45(4):630–8.

Lim J, Ebstein R, Tse CY, Monakhov M, Lai PS, Dinges DF, Kwok K. Dopaminergic polymorphisms associated with time-on-task declines and fatigue in the Psychomotor Vigilance Test. PLoS One. 2012;7(3):e33767.

Maire M, Reichert CF, Gabel V, Viola AU, Krebs J, Strobel W, Landolt HP, Bachmann V, Cajochen C, Schmidt C. Time-on-task decrement in vigilance is modulated by inter-individual vulnerability to homeostatic sleep pressure manipulation. Front Behav Neurosci. 2014;8:1–10.

Barnes KA, Howard JH, Howard DV, Kenealy L, Vaidya CJ. Two forms of implicit learning in childhood ADHD. Dev Neuropsychol. 2010;35(5):494–505.

Dobler VB, Anker S, Gilmore J, Robertson IH, Atkinson J, Manly T. Asymmetric deterioration of spatial awareness with diminishing levels of alertness in normal children and children with ADHD. J Child Psychol Psychiatry. 2005;46(11):1230–48.

Bender S, Banaschewski T, Roessner V, Klein C, Rietschel M, Feige B, Brandeis D, Laucht M. Variability of single trial brain activation predicts fluctuations in reaction time. Biol Psychol. 2015;106:50–60.

Brennan AR, Arnsten AF. Neuronal mechanisms underlying attention deficit hyperactivity disorder: the influence of arousal on prefrontal cortical function. Ann N Y Acad Sci. 2008;1129:236–45.

Ainge JA, Jenkins TA, Winn P. Induction of c-fos in specific thalamic nuclei following stimulation of the pedunculopontine tegmental nucleus. Eur J Neurosci. 2004;20(7):1827–37.

Greenwood PM, Fossella JA, Parasuraman R. Specificity of the effect of a nicotinic receptor polymorphism on individual differences in visuospatial attention. J Cogn Neurosci. 2005;17(10):1611–20.

Riedel WJ, Klaassen T, Schmitt JAJ. Tryptophan, mood, and cognitive function. Brain Behav Immun. 2002;16(5):581–9.

Bellgrove MA, Hawi Z, Gill M, Robertson IH. The cognitive genetics of attention deficit hyperactivity disorder (ADHD): sustained attention as a candidate phenotype. Cortex. 2006;42:838–45.

Bioulac S, Lallemand S, Rizzo A, Philip P, Fabrigoule C, Bouvard MP. Impact of time on task on ADHD patient’s performances in a virtual classroom. Eur J Paediatr Neurol. 2012;16:514–21.

Castellanos FX, Sonuga-Barke EJ, Scheres A, Di Martino A, Hyde C, Walters JR. Varieties of attention-deficit/hyperactivity disorder-related intra-individual variability. Biol Psychiatry. 2005;57:1416–23.

Huang-Pollock CL, Karalunas SL, Tam H, Moore AN. Evaluating vigilance deficits in ADHD: a meta-analysis of SPT performance. J Abnorm Psychol. 2012;121:360–71.

Hurks PPM, Adam JJ, Hendriksen JG, Vles JS, Feron FJ, Kalff AC, Kroes M, Steyaert J, Crolla IFAM, van Zeben TMCB. Controlled visuomotor preparation deficits in attention-deficit/hyperactivity disorder. Neuropsychology. 2005;19:66–76.

Kebir O, Tabbane K, Sengupta S, Joober R. Candidate genes and neuropsychological phenotypes in children with ADHD: review of association studies. J Psychiatry Neurosci. 2009;34:88–101.

Kollins SH, Anastopoulos AD, Lachiewicz AM, FitzGerald D, Morrissey-Kane E, Garrett ME, Keatts SL, Ashley-Koch AE. SNPs in dopamine D2 receptor gene (DRD2) and norepinephrine transporter gene (NET) are associated with continuous performance task (CPT) phenotypes in ADHD children and their families. Am J Med Genet Part B Neuropsychiatr Genet. 2008;147B(8):1580–8.

Tarantino V, Cutini S, Mogentale C, Bisiacchi PS. Time-on-task in children with ADHD: an ex-Gaussian analysis. J Int Neuropsychol Soc. 2013;19(7):820–8.

Johnson KA, Barry E, Bellgrove MA, Cox M, Kelly SP, Daibhis A, Daly M, Keavey M, Watchorn A, Fitzgerald M, et al. Dissociation in response to methylphenidate on response variability in a group of medication naive children with ADHD. Neuropsychologia. 2008;46(5):1532–41.

Parasuraman R, Greenwood PM, Sunderland T. The apolipoprotein E gene, attention, and brain function. Neuropsychology. 2002;16:254–74.

Lee TW, Yu YW, Hong CJ, Tsai SJ, Wu HC, Chen TJ. The influence of apolipoprotein E Epsilon4 polymorphism on qEEG profiles in healthy young females: a resting EEG study. Brain Topogr. 2012;25(4):431–42.

Rusted J, Evans S, King S, Dowell N, Tabet N, Tofts P. APOE e4 polymorphism in young adults is associated with improved attention and indexed by distinct neural signatures. Neuroimage. 2013;65:364–73.

Espeseth T, Greenwood PM, Reinvang I, Fjell AM, Walhovd KB, Westlye LT, Wehling E, Lundervold AJ, Rostwelt H, Parasuraman R. Interactive effects of APOE and CHRNA4 on attention and white matter volume in healthy middle-aged and older adults. Cogn Affect Behav Neurosci. 2006;6:31–43.

Marchant NL, King SL, Tabet N, Rusted JM. Positive effects of cholinergic stimulation favor young APOE ɛ4 carriers. Neuropsychopharmacology. 2010;35(5):1090–6.

Posner MI. Orienting of attention. Q J Exp Psychol. 1980;32:3–25.

Giros B, El Mestikawy S, Godinot N, Zheng K, Han H, Yang-Feng T, Caron MG. Cloning, pharmacological characterization, and chromosome assignment of the human dopamine transporter. Mol Pharmacol. 1992;42:383–90.

Cools R, D’Esposito M. Inverted-U-shaped dopamine actions on human working memory and cognitive control. Biol Psychiatry. 2011;69(12):e113–25.

Monte-Silva K, Kuo MF, Thirugnanasambandam N, Liebetanz D, Paulus W, Nitsche MA. Dose-dependent inverted U-shaped effect of dopamine (D2-like) receptor activation on focal and nonfocal plasticity in humans. J Neurosci. 2009;29(19):6124–31.

Williams-Gray CH, Hampshire A, Robbins TW, Owen AM, Barker RA. Catechol O-methyltransferase Val158Met genotype influences frontoparietal activity during planning in patients with Parkinson’s disease. J Neurosci. 2007;27(18):4832–8.

Manor I, Tyano S, Eisenberg J, Bachner-Melman R, Kotler M, Ebstein RP. The short DRD4 repeats confer risk to attention deficit hyperactivity disorder in a family-based design and impair performance on a continuous performance test (TOVA). Mol Psychiatry. 2002;7:790–4.

Young JW, Powell SB, Scott CN, Zhou X, Geyer MA. The effect of reduced dopamine D4 receptor expression in the 5-choice continuous performance task: separating response inhibition from premature responding. Behav Brain Res. 2011;222(1):183–92.

Schmidt AF, Groenwold RH, Knol MJ, Hoes AW, Nielen M, Roes KC, De Boer A, Klungel OH. Exploring interaction effects in small samples increases rates of false-positive and false-negative findings: results from a systematic review and simulation study. J Clin Epidemiol. 2014;67(7):821–9.

Maas CJM, Hox JJ. Sufficient sample sizes for multilevel modeling. Methodology. 2005;1(3):86–92.

Scherbaum CA, Ferreter JM. Estimating statistical power and required sample sizes for organizational research using multilevel modeling. Organ Res Methods. 2009;12(2):347–67.

Carrasco M, McElree B. Covert attention accelerates the rate of visual information processing. Proc Natl Acad Sci USA. 2001;98(9):5363–7.

Carrasco M, Giordano AM, McElree B. Temporal performance fields: visual and attentional factors. Vision Res. 2004;44(12):1351–65.

Johns MW. A new method for measuring daytime sleepiness: the Epworth sleepiness scale. Sleep. 1991;14:540–5.

Chen W, Zhou K, Sham P, Franke B, Kuntsi J, Campbell D, Fleischman K, Knight J, Andreou P, Arnold R, et al. DSM-IV combined type ADHD shows familial association with sibling trait scores: a sampling strategy for QTL linkage. Am J Med Genet Part B Neuropsychiatr Genet. 2008;147B(8):1450–60.

Lubke GH, Hudziak JJ, Derks EM, van Bijsterveldt TC, Boomsma DI. Maternal ratings of attention problems in ADHD: evidence for the existence of a continuum. J Am Acad Child Adolesc Psychiatry. 2009;48(11):1085–93.

Larsson H, Anckarsater H, Rastam M, Chang Z, Lichtenstein P. Childhood attention-deficit hyperactivity disorder as an extreme of a continuous trait: a quantitative genetic study of 8500 twin pairs. J Child Psychol Psychiatry. 2012;53(1):73–80.

Kean M, Lambert A. The influence of a salience distinction between bilateral cues on the latency of target-detection saccades. Br J Psychol. 2003;94:373–88.

Thomas DC, Witte JS. Point: population stratification: a problem for case-control studies of candidate-gene associations? Cancer Epidemiol Biomarkers Prev. 2002;11:505–12.

Hutchison KE, Stallings M, McGeary J, Bryan A. Population stratification in the candidate gene study: fatal threat or red herring? Psychol Bull. 2004;130(1):66–79.

Hubacek JA, Lanska V, Skodova Z, Adamkova V, Poledne R. Sex-specific interaction between APOE and APOA5 variants and determination of plasma lipid levels. Eur J Hum Genet. 2008;16(1):135–8.

Corder EH, Saunders AM, Risch NJ, Strittmatter WJ, Schmechel DE, Gaskell PC, Rimmler JB, Locke PA, Conneally PM, Schmader KE, et al. Protective effect of apolipoprotein E type 2 allele for late onset Alzheimer disease. Nat Genet. 1994;7:180–4.

Huta V. When to use hierarchical linear modeling. Quant Methods Psychol. 2014;10:13–28.

Duan N. Smearing estimate: a nonparametric retransformation method. J Am Stat Assoc. 1983;78(383):605–10.

Starr JM, Fox H, Harris SE, Deary IJ, Whalley LJ. COMT genotype and cognitive ability: a longitudinal aging study. Neurosci Lett. 2007;421(1):57–61.

Palmason H, Moser D, Sigmund J, Vogler C, Hanig S, Schneider A, Seitz C, Marcus A, Meyer J, Freitag CM. Attention-deficit/hyperactivity disorder phenotype is influenced by a functional catechol-O-methyltransferase variant. J Neural Transm. 2010;117(2):259–67.

Brookes KJ, Neale BM, Sugden K, Khan N, Asherson P, D’Souza UM. Relationship between VNTR polymorphisms of the human dopamine transporter gene and expression in post-mortem midbrain tissue. Am J Med Genet B Neuropsychiatr Genet. 2007;144B:1070–8.

Bellgrove MA, Chambers CD, Johnson KA, Daibhis A, Daly M, Hawi Z, Lambert D, Gill M, Robertson IH. Dopaminergic genotype biases spatial attention in healthy children. Mol Psychiatry. 2007;12:786–92.

Franke B, Hoogman M, Arias Vasquez A, Heister JG, Savelkoul PJ, Naber M, Scheffer H, Kiemeney LA, Kan CC, Kooij JJ, et al. Association of the dopamine transporter (SLC6A3/DAT1) gene 9–6 haplotype with adult ADHD. Am J Med Genet Part B Neuropsychiatr Genet. 2008;147B(8):1576–9.

Rommelse NN, Altink ME, Arias-Vasquez A, Buschgens CJ, Fliers E, Faraone SV, Buitelaar JK, Sergeant JA, Franke B, Oosterlaan J. A review and analysis of the relationship between neuropsychological measures and DAT1 in ADHD. Am J Med Genet Part B Neuropsychiatr Genet. 2008;147B(8):1536–46.

Lowe N, Kirley A, Mullins C, Fitzgerald M, Gill M, Hawi Z. Multiple marker analysis at the promoter region of the DRD4 gene and ADHD: evidence of linkage and association with the SNP -616. Am J Med Genet Part B Neuropsychiatr Genet. 2004;131B(1):33–7.

Greenwood PM, Lambert C. Effects of apolipoprotein E genotype on spatial attention, working memory, and their interaction in healthy, middle-aged adults: results from the National Institute of Mental Health’s BIOCARD study. Neuropsychology. 2005;19:199–211.

Poirier J. Apolipoprotein E in the brain and its role in Alzheimer’s Disease. J Psychiatry Neurosci. 1996;21:128–34.

Greenwood PM, Sunderland T, Friz JL, Parasuraman R. Genetics and visual attention: selective deficits in healthy adult carriers of the epsilon 4 allele of the apolipoprotein E gene. Proc Natl Acad Sci USA. 2000;97:11661–6.

Barkley RA, Smith KM, Fischer M, Navia B. An examination of the behavioral and neuropsychological correlates of three ADHD candidate gene polymorphisms (DRD4 7+, DBH TaqI A2, and DAT1 40 bp VNTR) in hyperactive and normal children followed to adulthood. Am J Med Genet Part B Neuropsychiatr Genet. 2006;141B(5):487–98.

Kopečková M, Paclt I, Goetz P. Polymorphisms of dopamine-β-hydroxylase in ADHD children. Folia Biol. 2006;52:194–201.

Authors’ contributions

Both RAL and JLD participated in all aspects of study design, data collection and analysis, and the writing and approval of the manuscript. Both authors read and approved the final manuscript.

Acknowledgements

The research was supported in part by a grant from the Lynette S. Autrey Fund (to JLD). The funder had no role in the design, data collection, data interpretation, writing, or decision to publish the findings of this study. We express our appreciation to the following research assistants: Susann Szukalski, Bryan Barajas, Chris Tzeng, and Ramya Chockaligam.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Lundwall, R.A., Dannemiller, J.L. Genetic contributions to attentional response time slopes across repeated trials. BMC Neurosci 16, 66 (2015). https://doi.org/10.1186/s12868-015-0201-3

DOI: https://doi.org/10.1186/s12868-015-0201-3