Abstract

Background

Gene networks in living cells can change depending on various conditions such as caused by different environments, tissue types, disease states, and development stages. Identifying the differential changes in gene networks is very important to understand molecular basis of various biological process. While existing algorithms can be used to infer two gene networks separately from gene expression data under two different conditions, and then to identify network changes, such an approach does not exploit the similarity between two gene networks, and it is thus suboptimal. A desirable approach would be clearly to infer two gene networks jointly, which can yield improved estimates of network changes.

Results

In this paper, we developed a proximal gradient algorithm for differential network (ProGAdNet) inference, that jointly infers two gene networks under different conditions and then identifies changes in the network structure. Computer simulations demonstrated that our ProGAdNet outperformed existing algorithms in terms of inference accuracy, and was much faster than a similar approach for joint inference of gene networks. Gene expression data of breast tumors and normal tissues in the TCGA database were analyzed with our ProGAdNet, and revealed that 268 genes were involved in the changed network edges. Gene set enrichment analysis identified a significant number of gene sets related to breast cancer or other types of cancer that are enriched in this set of 268 genes. Network analysis of the kidney cancer data in the TCGA database with ProGAdNet also identified a set of genes involved in network changes, and the majority of the top genes identified have been reported in the literature to be implicated in kidney cancer. These results corroborated that the gene sets identified by ProGAdNet were very informative about the cancer disease status. A software package implementing the ProGAdNet, computer simulations, and real data analysis is available as Additional file 1.

Conclusion

With its superior performance over existing algorithms, ProGAdNet provides a valuable tool for finding changes in gene networks, which may aid the discovery of gene-gene interactions changed under different conditions.

Similar content being viewed by others

Background

Genes in living cells interact and form a complex network to regulate molecular functions and biological processes. Gene networks can undergo topological changes depending on the molecular context in which they operate [1, 2]. For example, it was observed that transcription factors (TFs) can bind to and thus regulate different target genes under varying environmental conditions [3, 4]. Changes of genetic interactions when cells are challenged by DNA damage as observed in [5] may also reflect the structural changes of the underlying gene network. This kind of rewiring of gene networks has been observed not only in yeast [3–6], but also in mammalian cells [7, 8]. More generally, differential changes of gene networks can occur depending on environment, tissue type, disease state, development and speciation [1]. Therefore, identification of such differential changes in gene networks is of paramount importance when it comes to understanding the molecular basis of various biological processes.

Although a number of computational methods have been developed to infer the structure of gene regulatory networks from gene expression and related data [9–12], they are mainly concerned with the static structure of gene networks under a single condition. These methods rely on similarity measures such as the correlation or mutual information [13, 14], Gaussian graphical models (GGMs) [15, 16], Bayesian networks [17, 18], or linear regression models [19–22]. Refer to [12] for description of more network inference methods and their performance. Existing methods for the analysis of differential gene interactions under different conditions typically attempt to identify differential co-expression of genes based on correlations between their expression levels [23]. While it is possible to use an existing method to infer a gene network under different conditions separately, and then compare the inferred networks to determine their changes, such an approach does not jointly leverage the data under different conditions in the inference; thus, it may markedly sacrifice the accuracy in the inference of network changes.

In this paper, we develop a very efficient proximal gradient algorithm for differential network (ProGAdNet) inference, that jointly infers gene networks under two different conditions and then identifies changes in these two networks. To overcome the challenge of the small sample size and a large number of unknowns, which is common to inference of gene networks, our method exploits two important attributes of gene networks: i) sparsity in the underlying connectivity, meaning that the number of gene-gene interactions in a gene network is much smaller than the number of all possible interactions [19, 24–26]; and, ii) similarity in the gene networks of the same organism under different conditions [4, 7], meaning that the number of interactions changed in response to different conditions is much smaller than the total number of interactions present in the network. A similar network inference setup was considered in [27] for inferring multiple gene networks, but no new algorithm was developed there; instead [27] adopted the lqa algorithm of [28] that was designed for generalized linear models. Our computer simulations demonstrated superior performance of our ProGAdNet algorithm relative to existing methods including the lqa algorithm. Analysis of a set of RNA-Seq data from normal tissues and breast tumors with ProGAdNet identified genes involved in changes of the gene network.

The differential gene-gene interactions identified by our ProGAdNet algorithm yield a list of genes that may not be differentially expressed under two different conditions. Comparing with the set of differentially expressed genes under two conditions, this set of genes may provide additional insight into the molecular mechanism behind the phenotypical difference of the tissue under different conditions. Alternatively, the two gene networks inferred by our ProGAdNet algorithm can be used for further differential network analysis (DiNA). DiNA has received much attention recently; the performance of ten DiNA algorithms was assessed in [29] using gene networks and gene/microRNA networks. Given two networks with the same set of nodes, a DiNA algorithm computes a score for each node based on the difference of global and/or local topologies of the two networks, and then ranks nodes based on these scores. Apparently, DiNA relies on the two networks that typically need to be constructed from certain data. Our ProGAdNet algorithm provides an efficient and effective tool for constructing two gene networks of the same set of genes from gene expression data under two different conditions, which can be used by a DiNA algorithm for further analysis.

Methods

Gene network model

Suppose that expression levels of p genes have been measured with microarray or RNA-seq, and let Xi be the expression level of the ith gene, where i=1,…,p. To identify the possible regulatory effect of other genes on the ith gene, we employ the following linear regression model as also used in [19–22]

where μi is a constant and Ei is the error term, and unknown regression coefficients (bji)’s reflect the correlation between Xi and Xj after adjusting the effects of other variables, Xk’s, k∉{i,j}. This adjusted correlation may be the result of possible interaction between genes i and j. The nonzero (bji)’s define the edges in the gene network. Suppose that n samples of gene expression levels of the same organism (or the same type of tissue of an organism) under two different conditions are available, and let n×1 vectors xi and \(\tilde {\mathbf {x}}_{i}\) contain these n samples of the ith gene under two conditions, respectively. Define n×p matrices X:=[x1,x2,…,xp] and \(\tilde {\mathbf {X}}:=[\tilde {\mathbf {x}}_{1}, \tilde {\mathbf {x}}_{2}, \ldots, \tilde {\mathbf {x}}_{p}], p\times 1\) vectors μ=[μ1,…,μp]T and \(\tilde {\boldsymbol {\mu }}=[\tilde {\mu }_{1},\ldots, \tilde {\mu }_{p}]^{T}\), and p×p matrices B and \(\tilde {\mathbf {B}}\) whose element on the ith column and the jth row are bji and \(\tilde {b}_{ji}\), respectively. Letting \(b_{ii}=\tilde {b}_{ii}=0\), model (1) yields the following

where 1 is a vector with all elements equal to 1, and n×p matrices E and \(\tilde {\mathbf {E}}\) contain error terms. Matrices B and \(\tilde {\mathbf {B}}\) characterize the structure of the gene networks under two conditions.

Our main goal is to identify the changes in the gene network under two conditions, namely, those edges from gene j to gene i such that \(b_{ji}-\tilde {b}_{ji}\ne 0, j\ne i\). One straightforward way to do this is to estimate B and \(\tilde {\mathbf {B}}\) separately from two linear models in (2), and then find gene pairs (i,j) for which \(b_{ji}-\tilde {b}_{ji}\ne 0\). However, this approach may not be optimal, since it does not exploit the fact that the network structure does not change significantly under two conditions, that is, most entries of B and \(\tilde {\mathbf {B}}\) are identical. A better approach is apparently to infer gene networks under two conditions jointly, which can exploit the similarity between two network structures and thereby improve the inference accuracy.

If we denote the ith column of B and \(\tilde {\mathbf {B}}\) as bi and \(\tilde {\mathbf {b}}_{i}\), we can also write model (2) for each gene separately as follows: xi=μi1+Xbi+ei and \(\tilde {\mathbf {x}}_{i}=\tilde {\mu }_{i}\mathbf {1}+\tilde {\mathbf {X}}\tilde {\mathbf {b}}_{i}+\tilde {\mathbf {e}}_{i}, i=1,\ldots, p\), where ei and \(\tilde {\mathbf {e}}_{i}\) are the ith column of E and \(\tilde {\mathbf {E}}\), respectively. We can estimate μi and \(\tilde {\mu }_{i}\) using the least square estimation method and substitute the estimates into the linear regression model, which is equivalent to centering each column of X and \(\tilde {\mathbf {X}}\), i.e., subtracting the mean of each column from each element of the column. From now on, we will drop μi and \(\tilde {\mu }_{i}\) from the model and assume that columns of X and \(\tilde {\mathbf {X}}\) have been centered. To remove the constraints bii=0,i=1,…,p, we define matrices X−i:=[x1,…,xi−1,xi+1,…,xp] and \(\tilde {\mathbf {X}}_{-i}:=[\tilde {\mathbf {x}}_{1}, \ldots, \tilde {\mathbf {x}}_{i-1}, \tilde {\mathbf {x}}_{i+1}, \ldots, \tilde {\mathbf {x}}_{p}]\), vectors βi:=[b1i,…,b(i−1)i,b(i+1)i,…,bpi]T and \(\tilde {\boldsymbol {\beta }}_{i}:=[\tilde {b}_{1i}, \ldots, \tilde {b}_{(i-1)i}, \tilde {b}_{(i+1)i}, \ldots, \tilde {b}_{pi}]^{T}\). The regression model for the gene network under two conditions can be written as

Based on (3), we will develop a proximal gradient algorithm to infer βi and \(\tilde {\boldsymbol {\beta }}_{i}\) jointly, and identify changes in the network structure.

Network inference

Optimization formulation

As argued in [19, 30, 31], gene regulatory networks or more general biochemical networks are sparse, meaning that a gene directly regulates or is regulated by a small number of genes relative to the total number of genes in the network. Taking into account sparsity, only a relatively small number of entries of B and \(\tilde {\mathbf {B}}\), or equivalently entries of βi and \(\tilde {\boldsymbol {\beta }}_{i}, i=1,\ldots, p\), are nonzero. These nonzero entries determine the network structure and the regulatory effect of one gene on other genes. As mentioned earlier, the gene network of an organism is expected to have similar structure under two different conditions. For example, the gene network of a tissue in a disease (such as cancer) state may have changed, comparing to that of the same tissue under the normal condition, but such change in the network structure is expected to be small relative to the overall network structure. Therefore, it is reasonable to expect that the number of edges that change under two conditions is small comparing with the total number of edges of the network.

Taking into account sparsity in B and \(\tilde {\mathbf {B}}\) and also the similarity between B and \(\tilde {\mathbf {B}}\), we formulate the following optimization problem to jointly infer gene networks under two conditions:

where ∥·∥ stands for Euclidean norm, ∥·∥1 stands for l1 norm, and λ1 and λ2 are two positive constants. The objective function in (4) consists of the squared error of the linear regression model (1) and two regularization terms \(\lambda _{1}(\parallel \boldsymbol {\beta }_{i}\parallel _{1}+\parallel \tilde {\boldsymbol {\beta }}_{i}\parallel _{1})\) and \(\lambda _{2}\parallel \boldsymbol {\beta }_{i}-\tilde {\boldsymbol {\beta }}_{i}\parallel _{1}\). Note that unlike the GGM, the regularized least squared error approach here does not rely on the Gaussian assumption. The two regularization terms induce sparsity in the inferred networks and network changes, respectively. This optimization problem is apparently convex, and therefore it has a unique and globally optimal solution. Note that the term \(\lambda _{2}\parallel \boldsymbol {\beta }_{i}-\tilde {\boldsymbol {\beta }}_{i}\parallel _{1}\) is reminiscent of the fused Lasso [32]. However, all regression coefficients in the fused Lasso are essentially coupled, whereas here the term \(\lambda _{2}\parallel \boldsymbol {\beta }_{i}-\tilde {\boldsymbol {\beta }}_{i}\parallel _{1}\) only couples each pair of regression coefficients, βij and \(\tilde {\beta }_{ij}\). As will be described next, this enables us to develop an algorithm to solve optimization problem (4) that is different from and more efficient than the algorithm for solving the general fused Lasso problem. Note that an optimization problem similar to (4) was formulated in [27] for inferring multiple gene networks, but no new algorithm was developed, instead the problem was solved with the lqa algorithm [28] that was developed for general penalized maximum likelihood inference of generalized linear models including the fused Lasso. Our computer simulations showed that our algorithm not only is much faster than the lqa algorithm, but also yields much more accurate results.

Proximal Gradient Solver

Define \(\boldsymbol {\alpha }_{i}:=\left [\boldsymbol {\beta }_{i}^{T} ~\tilde {\boldsymbol {\beta }}_{i}^{T} \right ]^{T}\), and let us separate the objective function in (4) into the differentiable part g1(αi) and the non-differentiable part g2(αi) given by

Applying the proximal gradient method [33] to solve the optimization problem (4), we obtain an expression for αi in the rth step of the iterative procedure as follows:

where prox stands for the proximal operator defined as \(\text {prox}_{\lambda f}(\mathbf {t}):=\text {arg min}_{\mathbf {x}} f(\mathbf {x})+\frac {1}{2\lambda }||\mathbf {x}-\mathbf {t}||^{2}\) for function f(x) and a constant vector t, and ∇g1(αi) is the gradient of g1(αi). Generally, the value of step size λ(r) can be found using a line search step, which can be determined from the Lipschitz constant [33]. For our problem, we will provide a closed-form expression for λ(r) later. Since g1(αi) is simply in a quadratic form, its gradient can be obtained readily as \(\nabla g_{1}(\boldsymbol {\alpha }_{i})= \left [\nabla g_{1}(\boldsymbol {\beta }_{i})^{T}, \nabla g_{1}(\tilde {\boldsymbol {\beta }}_{i})^{T}\right ]^{T}\), where \(\nabla g_{1}(\boldsymbol {\beta }_{i})=2\left (\mathbf {X}_{-i}^{T}\mathbf {X}_{-i}\boldsymbol {\beta }_{i}-\mathbf {X}_{-i}^{T}\mathbf {x}_{i}\right)\) and \(\nabla g_{1}(\tilde {\boldsymbol {\beta }}_{i})=2\left (\tilde {\mathbf {X}}_{-i}^{T}\tilde {\mathbf {X}}_{-i}\tilde {\boldsymbol {\beta }}_{i}-\tilde {\mathbf {X}}_{-i}^{T}\tilde {\mathbf {x}}_{i}\right)\).

Upon defining t=βi−λ(r)∇g1(βi) and \(\tilde {\mathbf {t}}=\tilde {\boldsymbol {\beta }}_{i}-\lambda ^{(r)}\nabla g_{1}(\tilde {\boldsymbol {\beta }}_{i})\), the proximal operator in (6) can be written as

It is seen that the optimization problem in proximal operator (7) can be decomposed into p−1 separate problems as follows

where βij and \(\tilde {\beta }_{ij}\) are the jth element of βi and \(\tilde {\boldsymbol {\beta }}_{i}\), respectively, and tj and \(\tilde {t}_{j}\) are the jth element of t and \(\tilde {\mathbf {t}}\), respectively. The optimization problem (8) is in the form of the fused Lasso signal approximator (FLSA) [34]. The general FLSA problem has many variables, and numerical optimization algorithms were developed to solve the FLSA problem [34, 35]. However, our problem has only two variables, which enables us to find the solution of (8) in closed form. This is then used in each step of our proximal gradient algorithm for network inference.

Let us define a soft-thresholding operator S(x,a) as follows

where a is a positive constant. Then as shown in [34], if the solution of (8) at λ1=0 is \(\hat \beta _{ij}^{(0)}\) and \(\hat {\tilde \beta }_{ij}^{(0)}\), the solution of (8) at λ1>0 is given by

where \(\tilde \lambda _{1}=\lambda _{1} \lambda ^{(r)}\). Therefore, if we can solve the problem (8) at λ1=0, we can find the solution of (8) at any λ1>0 from (10). It turns out that the solution of (8) at λ1=0 can be found as

where \(\tilde \lambda _{2}=\lambda _{2} \lambda ^{(r)}\). Therefore, our proximal gradient method can solve the network inference problem (6) efficiently through an iterative process, where each step of the iteration solves the optimization problem (6) in closed form specified by (10) and (11). To obtain a complete proximal gradient algorithm, we need to find the step size λ(r) as will be described next.

Stepsize

As mentioned in [33], if the step size λ(r)∈[0,1/L], where L is the Lipschitz constant of ∇g1(αi), then the proximal gradient algorithm converges to yield the optimal solution. We next derive an expression for L. Specifically, we need to find L such that \(\parallel \nabla g_{1}\left (\boldsymbol {\alpha }_{i}^{(1)}\right)-\nabla g_{1}\left (\boldsymbol {\alpha }_{i}^{(2)}\right)\parallel _{2}\le L\parallel \left (\boldsymbol {\alpha }_{i}^{(1)}-\boldsymbol {\alpha }_{i}^{(2)}\right)\parallel _{2}\) for any \(\boldsymbol {\alpha }_{i}^{(1)}\ne \boldsymbol {\alpha }_{i}^{(2)}\), which is equivalent to

for any \(\left (\boldsymbol {\beta }_{i}^{(1)}, \tilde {\boldsymbol {\beta }}_{i}^{(1)}\right)\ne \left (\boldsymbol {\beta }_{i}^{(2)}, \tilde {\boldsymbol {\beta }}_{i}^{(2)}\right)\). Let γ and \(\tilde \gamma \) be the maximum eigenvalues of \(\mathbf {X}_{-i}^{T}\mathbf {X}_{-i}\) and \(\tilde {\mathbf {X}}_{-i}^{T}\tilde {\mathbf {X}}_{-i}\), respectively. It is not difficult to see that (12) will be satisfied if \(L=2(\gamma +\tilde \gamma)\). Note that \(\mathbf {X}_{-i}^{T}\mathbf {X}_{-i}\) and \(\mathbf {X}_{-i}\mathbf {X}_{-i}^{T}\) have the same set of eigenvalues. And thus, γ can be found using a numerical algorithm with a computational complexity of O((min(n,p))2). After obtaining L, the step size of our proximal gradient algorithm can be chosen to be λ(r)=1/L. Note that λ(r) does not change across iterations, and it only needs to be computed once. Since the sum of the eigenvalues of a matrix is equal to the trace of matrix, another possible value for L is \(2\left (\text {trace}\left (\mathbf {X}_{-i}^{T}\mathbf {X}_{-i}\right)+\text {trace}\left (\tilde {\mathbf {X}}_{-i}^{T}\tilde {\mathbf {X}}_{-i}\right)\right)\), which can save the cost of computing γ and \(\tilde \gamma \). However, this value of L is apparently greater than \(2(\gamma +\tilde \gamma)\), which reduces the step size λ(r), and may affect the convergence speed of the algorithm.

Algorithm

The proximal gradient solver of (4) for inference of differential gene networks is abbreviated as ProGAdNet, and is summarized in the following table.

Maximum values of λ 1 and λ 2

The ProGAdNet solver of (4) is outlined in Algorithm 1 with a specific pair of values of λ1 and λ2. However, we typically need to solve the optimization problem (4) over a set of values of λ1 and λ2, and then either use cross validation to determine the optimal values of λ1 and λ2, or use the stability selection technique to determine nonzero elements of βi and \(\tilde {\boldsymbol {\beta }}_{i}\), as will be described later. Therefore, we also need to know the maximum values of λ1 and λ2. In the following, we will derive expressions for the maximum values of λ1 and λ2.

When we determine the maximum values of λ1 (denoted as λ1 max), λ2 can be omitted in our optimization problem, since when λ1=λ1 max, we have βij=0 and \(\tilde {\beta }_{ij} = 0, \forall i\) and j. Thus, we can use the same method for determining the maximum value of λ in the Lasso problem [36] to find λ1 max, which leads to

The maximum value of λ2,λ2 max depends on λ1. It is difficult to find λ2 max exactly. Instead, we will find an upper-bound for λ2 max. Let us denote the objective function in (4) as \(J(\boldsymbol {\beta }_{i}, \tilde {\boldsymbol {\beta }}_{i})\), and let the jth column of X−i (\(\tilde {\mathbf {X}}_{-i}\)) be zi (\(\tilde {\mathbf {z}}_{i}\)). If the optimal solution of (4) is \(\boldsymbol {\beta }_{i}=\tilde {\boldsymbol {\beta }}_{i}=\boldsymbol {\beta }^{*}\), then the subgradient of \(J(\boldsymbol {\beta }_{i}, \tilde {\boldsymbol {\beta }}_{i})\) at the optimal solution should contain the zero vector, which yields

where s1j=1 if βij>0,=−1 if βij<0, or ∈ [−1,1] if βij=0, and s2j∈ [−1,1], and similarly, \(\tilde {s}_{1j}=1\) if \(\tilde {\beta }_{ij}>0, =-1\) if \(\tilde {\beta }_{ij}<0\), or ∈[−1,1] if \(\tilde {\beta }_{ij}=0\), and \(\tilde {s}_{2j}\in [-1, 1]\). Therefore, we should have \(\lambda _{2}>|2\mathbf {z}_{j}^{T}\left (\mathbf {x}_{i}-\mathbf {X}_{-i}\boldsymbol {\beta }^{*}\right)+\lambda _{1}s_{1j}|\) and \(\lambda _{2}>|2\tilde {\mathbf {z}}_{j}^{T}\left (\tilde {\mathbf {x}}_{i}-\tilde {\mathbf {X}}_{-i}\boldsymbol {\beta }^{*}\right)+\lambda _{1}\tilde {s}_{1j}|\), which can be satisfied if we choose \(\lambda _{2}=\max _{j}\max \{\lambda _{1}+ |2\mathbf {z}_{j}^{T}\left (\mathbf {x}_{i}-\mathbf {X}_{-i}\boldsymbol {\beta }^{*}\right)|, \lambda _{1}+|2\tilde {\mathbf {z}}_{j}^{T}\left (\tilde {\mathbf {x}}_{i}-\tilde {\mathbf {X}}_{-i}\boldsymbol {\beta }^{*}\right)| \}\). Therefore, the maximum value of λ2 can be written as

To find λ2 max from (15), we need to know β∗. This can be done by solving the Lasso problem that minimizes \(J(\boldsymbol {\beta }) = \parallel \mathbf {x}_{i}-\mathbf {X}_{-i}\boldsymbol {\beta }\parallel ^{2} + \parallel \tilde {\mathbf {x}}_{i} - \tilde {\mathbf {X}}_{-i}\boldsymbol {\beta }\parallel ^{2} +2\lambda _{1}\parallel \boldsymbol {\beta }\parallel _{1}\) using an efficient algorithm such as glmnet [37].

Stability selection

As mentioned earlier, parameter λ1 encourages sparsity in the inferred gene network, while λ2 induces sparsity in the changes of the network under two conditions. Generally, larger values of λ1 and λ2 induce a higher level of sparsity. Therefore, appropriate values of λ1 and λ2 need to be determined, which can be done with cross validation [37]. However, the nonzero entries of matrices B and \(\tilde {\mathbf {B}}\), estimated with a specific pair of values of λ1 and λ2 determined by cross validation, may not be stable in the sense that small perturbation in the data may result in considerably different B and \(\tilde {\mathbf {B}}\). We can employ an alternative technique, named stability selection [38], to select stable variables, as described in the following.

We first determine the maximum value of λ1, namely λ1 max, using the method described earlier, then choose a set of k1 values for λ1, denoted as \(\mathcal {S}_{1}=\left \{\lambda _{1\max }, \alpha _{1}\lambda _{1\max }, \alpha _{1}^{2}\lambda _{1\max }, \ldots,\ \alpha _{1}^{k_{1}-1}\lambda _{1\max } \right \} \), where 0<α1<1. For each value \(\lambda _{1}\in \mathcal {S}_{1}\), we find the maximum value of λ2, namely λ2 max(λ1), and then choose a set of k2 values for λ2, denoted \(\mathcal {S}_{2}(\lambda _{1})=\{\lambda _{2\max }(\lambda _{1}), \alpha _{2}\lambda _{2\max }(\lambda _{1}), \ldots, \alpha _{2}^{k_{2}-1}\lambda _{2\max }(\lambda _{1}) \}\), where 0<α2<1. This gives a set of K=k1k2 pairs of (λ1,λ2). After we create the parameter space, for each (λ1,λ2) pair in the space, we randomly divide the data \((\mathbf {X}, \tilde {\mathbf {X}})\) into two subsets of equal size, and infer the network with our proximal gradient algorithm using each subset of the data. We repeat this process for N times, which yields 2N estimated network matrices, \(\hat {\mathbf {B}}\) and \(\hat {\tilde {\mathbf {B}}}\). Typically, N=50 is chosen.

Let \(m_{ij}^{(k)}, \tilde m_{ij}^{(k)}\), and \(\Delta m_{ij}^{(k)}\) be the number of nonzero \(\hat {b}_{ij}\)’s and \(\hat {\tilde b}_{ij}\)’s, and \((\hat {b}_{ij}-\hat {\tilde b}_{ij})\)’s, respectively, obtained with the kth pair of (λ1,λ2). Then, \(r_{ij}=\sum \nolimits _{k=1}^{K} m_{ij}^{(k)}/(NK), \tilde r_{ij}=\sum \nolimits _{k=1}^{K} \tilde m_{ij}^{(k)}/(NK)\), and \(\Delta r_{ij}=\sum \nolimits _{k=1}^{K} \Delta m_{ij}^{(k)}/(NK)\) give the frequency of an edge from gene j to gene i detected under two conditions, and the frequency of the changes for an edge from gene j to gene i, respectively. A larger \(r_{ij}, \tilde r_{ij}\), or Δrij indicates a higher likelihood that an edge from gene j to gene i exists, or the edge from gene j to gene i has changed. Therefore, we will use \(r_{ij}, \tilde r_{ij}\) and Δrij to rank the reliability of the detected edges and the changes of edges, respectively. Alternatively, we can declare an edge from gene j to gene i exists if rij≥c or \(\tilde r_{ij}\ge c\); and similarly the edge between gene j to gene i has changed if Δrij≥c, where c is constant and can be any value in [0.6,0.9] [38].

The software package in Additional file 1 includes computer programs that implement Algorithm 1, as well as stability selection and cross validation. The default values for parameters α1,α2,k1, and k2 in stability selection are 0.7, 0.8, 10, and 10, respectively. In cross validation, a set \(\mathcal {S}_{1}\) of k1 values of λ1 and a set \(\mathcal {S}_{2}(\lambda _{1})\) of k2 values of λ2 for each λ1 were created similarly, and the default values of α1,α2,k1, and k2 are 0.6952, 0.3728, 20, and 8, respectively.

Software glmnet and lqa

Two software packages, glmnet and lqa, were used in computer simulations. The software glmnet [37] for solving the Lasso problem is available at https://cran.r-project.org/web/packages/glmnet. The software lqa [28] used in [27] for inferring multiple gene networks is available at https://cran.r-project.org/web/packages/lqa/.

Results

Computer simulation with linear regression model

We generated data from one of p pairs of linear regression models in (3) instead of all p pairs of simultaneous equations in (2), or equivalently (3), as follows. Without loss of generality, let us consider the first equation in (3). The goal was to estimate β1 and \(\tilde {\boldsymbol {\beta }}_{1}\), and then identify pairs (\(\beta _{i1}, \tilde {\beta }_{i1}\)), where \(\beta _{i1}\neq \tilde {\beta }_{i1}\). Entries of n×(p−1) matrices X−1 and \(\tilde {\mathbf {X}}_{-1}\) were generated independently from the standardized Gaussian distribution. In the first simulation setup, we chose n=100 and p−1=200. Taking into account the sparsity in β1, we let 10% of β1’s entries be nonzero. Therefore, twenty randomly selected entries of β1 were generated from a random variable uniformly distributed over the intervals [0.5,1.5] and [−1.5,−0.5], and remaining entries were set to zero. Similarly, twenty entries of \(\tilde {\boldsymbol {\beta }}_{1}\) were chosen to be nonzero. Since the two regression models are similar, meaning that most entries of \(\tilde {\boldsymbol {\beta }}_{1}\) are identical to those of \(\boldsymbol {\beta }_{1}, \tilde {\boldsymbol {\beta }}_{1}\) was generated by randomly changing 10 entries of β1 as follows: 4 randomly selected nonzero entries were set to zero, and 6 randomly selected zero entries were changed to a value uniformly distributed over the intervals [0.5,1.5] and [−1.5,−0.5]. Of note, since the number of nonzero entries in β1 is greater than the number of zero entries, the number of entries changed from zero to nonzero (which is 6) is greater than the number of entries changed from nonzero to zero (which is 4). The noise vectors e1 and \(\tilde {\mathbf {e}}_{1}\) were generated from a Gaussian distribution with mean zero and variance σ2 varying from 0.01 to 0.05, 0.1, and 0.5, and then x1 and \(\tilde {\mathbf {x}}_{1}\) were calculated from (3).

Simulated data \(\mathbf {x}_{1}, \tilde {\mathbf {x}}_{1}, \mathbf {X}_{-1}\) and \(\tilde {\mathbf {X}}_{-1}\) were analyzed with our ProGAdNet, lqa [28] and glmnet [37]. Since lqa was employed by [27], the results of lqa represent the performance of the network inference approach in [27]. The glmnet algorithm implements the Lasso approach in [39]. Both ProGAdNet and lqa estimate β1 and \(\tilde {\boldsymbol {\beta }}_{1}\) jointly by solving the optimization problem (4), but glmnet estimates β1 and \(\tilde {\boldsymbol {\beta }}_{1}\) separately, by solving the following two problems separately, \(\hat {\boldsymbol {\beta }_{1}}=\text {arg min}_{\boldsymbol {\beta }_{1}}\{ \parallel \mathbf {x}_{1}-\mathbf {X}_{-1}\boldsymbol {\beta }_{1}\parallel ^{2} +\lambda _{1}\parallel \boldsymbol {\beta }_{1}\parallel _{1}\), and \(\hat {\tilde {\boldsymbol {\beta }}}_{1}=\text {arg min}_{\tilde {\boldsymbol {\beta }}_{1}}\parallel \tilde {\mathbf {x}}_{1}-\tilde {\mathbf {X}}_{-1}\tilde {\boldsymbol {\beta }}_{1}\parallel ^{2}+\lambda _{2}\parallel \tilde {\boldsymbol {\beta }}_{1}\parallel _{1}\). The lqa algorithm uses a local quadratic approximation of the nonsmooth penalty term [40] in the objective function, and therefore, it cannot shrink variables to zero exactly. To alleviate this problem, we set \(\hat {\beta }_{i1}=0\) if \(|\hat {\beta }_{i1}|<10^{-4}\), and similarly \(\hat {\tilde {\beta }}_{i1}=0\) if \(|\hat {\tilde {\beta }}_{i1}|<10^{-4}\), where \(\hat {\beta }_{i1}\) and \(\hat {\tilde {\beta }}_{i1}\) represent the estimates of βi1 and \(\tilde {\beta }_{i1}\), respectively. Five fold cross validation was used to determine the optimal values of parameters λ1 and λ2 in the optimization problem. Specifically, for ProGAdNet and lqa, the prediction error (PE) was estimated at each pair of values of λ1 and λ2, and the smallest PE along with the corresponding values of λ1 and λ2,λ1 min and λ2 min, were determined. Then, the optimal values of λ1 and λ2 were the values corresponding to the PE that was two standard error (SE) greater than the minimum PE, and were greater than λ1 min and λ2 min, respectively. For glmnet, the optimal values of λ1 and λ2 were determined separately also with the two-SE rule.

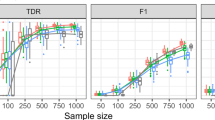

The inference process was repeated for 50 replicates of the data, and the detection power and the false discovery rate (FDR) for \((\boldsymbol {\beta }_{1},\tilde {\boldsymbol {\beta }}_{1})\) and \(\Delta \boldsymbol {\beta }=\boldsymbol {\beta }_{1}-\tilde {\boldsymbol {\beta }}_{1}\) calculated from the results of the 50 replicates in the first simulation setup are plotted in Fig. 1. It is seen that all three algorithms offer almost identical power equal or close to 1, but exhibit different FDRs. The FDR of lqa is the highest, whereas the FDR of ProGAdNet is almost the same as that of glmnet for β1 and \(\tilde {\boldsymbol {\beta }}_{1}\), and the lowest for Δβ1.

In the second simulation setup, we let sample size n=150, noise variance σ2=0.1, and the number of variables p−1 be 500, 800, and 1000. Detection power and FDR are depicted in Fig. 2. Again, the three algorithms have almost identical power, and ProGAdNet offers an FDR similar to that of glmnet, but lower than that of lqa for β1 and \(\tilde {\boldsymbol {\beta }}_{1}\), and the lowest FDR for Δβ1. Simulation results in Figs. 1 and 2 demonstrate that our ProGAdNet offers the best performance when compared with glmnet and lqa. The CPU times of one run of ProGAdNet, lqa, and glmnet for inferring a linear model with n=150,p−1=1,000, and σ2=0.1 at the optimal values of λ1 and λ2 were 5.82, 145.15, and 0.0037 s, respectively.

Computer Simulation with Gene Networks

The GeneNetWeaver software [41] was used to generate gene networks whose structures are similar to those of real gene networks. Note that GeneNetWeaver was also employed by the DREAM5 challenge for gene network inference to simulate golden standard networks [12]. GeneNetWeaver outputs an adjacency matrix to characterize a specific network structure. We chose the number of genes in the network to be p=50, and obtained a p×p adjacency matrix A through GeneNetWeaver. The number of nonzero entries of A, which determined the edges of the network, was 62. Hence the network is sparse, as the total number of possible edges is p(p−1)=2,450. We randomly changed 6 entries of A to yield another matrix \(\tilde {\mathbf {A}}\) as the adjacency matrix of the gene network under another condition. Note that the number of changed edges is small relative to the number of existing edges.

After the two network topologies were generated, the next step was to generate gene expression data. Letting aij be the entry of A on the ith row and the jth column, we generated a p×p matrix B such that bij=0 if aij=0, and bij was randomly sampled from a uniform random variable on the intervals [−1,0) and (0,1] if aij≠0. Another p×p matrix \(\tilde {\mathbf {B}}\) was generated such that \(\tilde {b}_{ij}=b_{ij}\) if \(\tilde {a}_{ij}=a_{ij}\), or \(\tilde {b}_{ij}\) was randomly generated from a uniform random variable on the intervals [−1,0) and (0,1] if \(\tilde {a}_{ij}\ne a_{ij}\). Note that (2) gives X=E(I−B)−1 and \(\tilde {\mathbf {X}}=\tilde {\mathbf {E}}(\mathbf {I}-\tilde {\mathbf {B}})^{-1}\). These relationships suggest generating first entries of E and \(\tilde {\mathbf {E}}\) independently from a Gaussian distribution with zero mean and unit variance, and then finding matrices X and \(\tilde {\mathbf {X}}\) from these two equations, respectively. With real data, gene expression levels X and \(\tilde {\mathbf {X}}\) are measured with techniques such as microarray or RNA-seq, and there are always measurement errors. Therefore, we simulated measured gene expression data as Y=X+V and \(\tilde {\mathbf {Y}}=\tilde {\mathbf {X}}+\tilde {\mathbf {V}}\), where V and \(\tilde {\mathbf {V}}\) model measurement errors that were independently generated from a Gaussian distribution with zero mean and variance σ2 that will be specified later. Fifty pairs of network replicates and their gene expression data were generated independently.

Finally, gene networks were inferred with our ProGAdNet algorithm by solving the optimization problem (4), where \(\mathbf {x}_{i}, \mathbf {X}_{-i}, \tilde {\mathbf {x}}_{i}\), and \(\tilde {\mathbf {X}}_{-i}\) were replaced with the measured gene expression data \(\mathbf {y}_{i}, \mathbf {Y}_{-i}, \tilde {\mathbf {y}}_{i}\), and \(\tilde {\mathbf {Y}}_{-i}\). Stability selection was employed to rank the edges that were changed under two conditions. As comparison, we also used Lasso to infer the network topology under each condition by solving the following optimization problems

Note that each optimization problem can be decomposed into p separate problems that can be solved with Lasso. The glmnet algorithm [37] was again used to implement Lasso. The stability selection technique was employed again to rank the differential edges detected by Lasso. The lqa algorithm was not considered to infer simulated gene networks, because it is very slow and its performance is worse than ProGAdNet and Lasso as shown in the previous section. We also employed the GENIE3 algorithm in [42] to infer B and \(\tilde {\mathbf {B}}\) separately, because GENIE3 gave the best overall performance in the DREAM5 challenge [12]. Finally, following the performance assessment procedure in [12], we used the precision-recall (PR) curve and the area under the PR curve (AUPR) to compare the performance of ProGAdNet with that of Lasso and GENIE3. For ProGAdNet and Lasso, the estimate of \(\Delta \mathbf {B}=\mathbf {B}-\tilde {\mathbf {B}}\) was obtained, and the nonzero entries of ΔB were ranked based on their frequencies obtained in stability selection. Then, the PR curve for changed edges was obtained from the ranked entries of ΔB from pooled results for the 50 network replicates. Two lists of ranked network edges were obtained from GENIE3: one for B and the other for \(\tilde {\mathbf {B}}\). For each cutoff value of the rank, we obtain an adjacency matrix A from B as follows: the (i,j)th entry of Aaij=1 if bij is above the cutoff value, and otherwise aij=0. Similarly, another adjacency matrix \(\tilde {\mathbf {A}}\) was obtained from \(\tilde {\mathbf {B}}\). Then, the PR curve for changed edges detected by GENIE3 was obtained from \(\mathbf {A}-\tilde {\mathbf {A}}\), again from pooled results for the 50 network replicates.

Figures 3 and 4 depict the PR curves of ProGAdNet, Lasso, and GENIE3 for measurement noise variance σ2=0.05 and 0.5, respectively. The number of samples varies from 50, 100, 200 to 300. It is seen from Fig. 3 that our ProGAdNet offers much better performance than Lasso and GENIE3. When the noise variance increases from 0.05 to 0.5, the performance of all three algorithms degrades, but our ProGAdNet still outperforms Lasso and GENIE3 considerably, as shown in Fig. 4. Table 1 lists AUPRs of ProGAdNet, Lasso and GENIE3, which again shows that our ProGAdNet outperforms Lasso and GENIE3 consistently at all sample sizes.

Analysis of breast cancer data

We next use the ProGAdNeT algorithm to analyze RNA-seq data of breast tumors and normal tissues. In The Cancer Genome Atlas (TCGA) database, there are RNA-seq data for 1098 breast invasive carcinoma (BRCA) samples and 113 normal tissues. The RNA-seq level 3 data for 113 normal tissues and their matched BRCA tumors were downloaded. The TCGA IDs of these 226 samples are given in Additional file 2. The scaled estimates of gene expression levels in the dataset were extracted, and they were multiplied by 106, which yielded transcripts per million value of each gene. The batch effect was corrected with the removeBatchEffect function in the Limma package [43] based on the batch information in the TCGA barcode of each sample (the “plate” field in the barcode). The RNA-seq data include expression levels of 20,531 genes. Two filters were used to obtain informative genes for further network analysis. First, genes with their expression levels in the lower 30 percentile were removed. Second, the coefficient of variation (CoV) was calculated for each of the remaining genes, and then genes with their CoVs in the lower 70 percentile were discarded. This resulted in 4310 genes, and their expression levels in 113 normal tissues and 113 matched tumor tissues were used by the ProGAdNet algorithm to jointly infer the gene networks in normal tissues and tumors, and then to identify the difference in the two gene networks. The list of the 4310 genes is in Additional file 3, and their expression levels in tumors and normal tissues are in two data files in the software package in Additional file 1.

Since small changes in bji in the network model (1) may not have much biological effect, we regarded the regulatory effect from gene j to gene i to be changed using the following two criteria rather than the simple criterion \(\tilde b_{ji}\ne b_{ji}\). The first criterion is \(|\tilde b_{ji}- b_{ji} |\ge \min \{ |\tilde b_{ji}|, |b_{ji}|\}\), which ensures that there is at least one-fold change relative to \(\min \{ |\tilde b_{ji}|, |b_{ji}|\}\). However, when one of \(\tilde b_{ji}\) and bji is zero or near zero, this criterion does not filter out very small \(|\tilde b_{ji}- b_{ji} |\). To avoid this problem, we further considered the second criterion. Specifically, nonzero \(\tilde b_{ji}\) and bji for all j and i were obtained, and the 20 percentile value of all \(|\tilde b_{ji}|\) and |bji|, T, was found. Then, the second criterion is \(\max \{|\tilde b_{ji}|, |b_{ji}|\}\ge T\). As in computer simulations, the stability selection was employed to identify network changes reliably. As the number of genes, 4310, is quite big, it is time consuming to repeat 100 runs per λ1 and λ2 pair. To reduce the computational burden, we used five-fold cross validation to choose the optimal values of λ1 and λ2 based on the two-SE rule used in computer simulation, and then performed stability selection with 100 runs for the pair of optimal values. Note that stability selection at an appropriate point of hyperparameters is equally valid compared with that done along a path of hyperparameters [38]. The threshold for Δrij for determining network changes as described in the Method section was chosen to be c=0.9.

Our network analysis with ProGAdNeT identified 268 genes that are involved in at least one changed edge. Names of these genes are listed in Additional file 4. We named the set of these 268 genes as the dNet set. We also extracted the raw read count of each gene from the RNA-seq dataset and employed DESeq2 [44] to detect the differentially expressed genes. The list of 4921 differentially expressed genes detected at FDR < 0.001 and fold change ≥1 is also in Additional file 4. Among 268 dNet genes, 196 genes are differentially expressed, and the remaining 72 genes are not differentially expressed, as shown in Additional file 4.

To assess whether the dNet genes relate to the disease status, we performed gene set enrichment analysis (GSEA) with the C2 gene sets in the molecular signatures database (MSigDB) [45, 46]. C2 gene sets consist of 3777 human gene sets that include pathways in major pathway dabases such as KEGG [47], REACTOME [48], and BIOCARTA [49]. After excluding gene sets with more than 268 genes or less than 15 genes, 2844 gene sets remained. Of note, the default value for the minimum gene set size at the GSEA website is 15. Here we also excluded the gene sets whose size is greater than 268 (the size of the dNet set), because large gene sets may tend to be enriched in a small gene set by chance. Searching over the names of these 2844 gene sets with key words “breast cancer”, “breast tumor”, “breast carcinoma” and “BRCA” through the “Search Gene Sets” tool at the GSEA website identified 258 gene sets that are related to breast cancer. Using Fisher’s exact test, we found that 121 of 2844 C2 gene sets were enriched in the dNet gene set at a FDR of < 10−3. The list of the 121 gene sets is in Additional file 5. Of these 121 gene sets, 31 are among the 258 breast cancer gene sets, which is highly significant (Fisher’s exact test p-value 2×10−7). The top 20 enriched gene sets are listed in Table 2. As seen from names of these gene sets, 11 of the 20 gene sets are breast cancer gene sets, and 7 sets are related to other types of cancer. These GSEA results clearly show that the dNet gene set that our ProGAdNet algorithm identified is very relevant to the breast cancer.

Analysis of kidney cancer data

We also analyzed another dataset in the TCGA database, the kidney renal clear cell carcinoma (KIRC) dataset, which contains the RNA-seq data of 463 tumors and 72 normal tissues. The RNA-seq level 3 data for the 72 normal tissues and their matched tumors were downloaded. The TCGA IDs of these 144 samples are given in Additional file 6. We processed the KIRC data in the same way as in processing the BRCA data. After the two filtering steps, we again got expression levels of 4310 genes. The list of the 4310 genes is in Additional file 7, and their expression levels in 72 tumors and 72 normal tissues are in two data files in Additional file 1.

Analysis of the KIRC data with ProGAdNet identified 1091 genes that are involved in at least one changed edge. We chose the top 460 genes that are involved in at least 3 changed edge to do further GSEA. Names of these 460 genes are listed in Additional file 8. We named the set of these 460 genes as the dNetK set. We also extracted the raw read count of each gene from the RNA-seq dataset and employed DESeq2 [44] to detect the differentially expressed genes. The list of 5432 differentially expressed genes detected at FDR < 0.001 and fold change ≥1 is also in Additional file 8. Among 460 dNetK genes, 395 genes are differentially expressed, and the remaining 65 genes are not differentially expressed, as shown in Additional file 8.

After excluding genes sets with more than 460 genes or less than 15 genes from the 3777 human C2 gene sets in MSigDB, we obtained 3019 gene sets for GSEA. Using Fisher’s exact test, we found 251 of the 3019 C2 gene sets were enriched in the dNetK set of 460 genes at a FDR of < 10−3. The list of the 251 gene sets is in Additional file 9. The top 20 enriched gene sets are listed in Table 3. Among the top 20 gene sets, 2 gene sets are related to kidney diseases, 8 gene sets are related to several different types of cancer, and 5 gene sets are related to the immune system. The 460 genes were ranked according to the number of changed edges that these genes are involved in, and the top 10 genes are NADH Dehydrogenase (Ubiquinone) 1 Alpha Subcomplex 4-Like 2 (NDUFA4L2), Uromodulin (UMOD), Angiopoietin-Like 4 (ANGPTL4), Nicotinamide N-methyltransferase (NNMT), Carbonic anhydrase 9 (CA9), Insulin-like growth factor binding protein 3 (IGFBP3), Apolipoprotein E/C1 (APOE/C1), complement component 3 (C3), vimentin (VIM), and complement C4A. Eight of the top 10 genes except C3 and C4A have been reported to be implicated in renal cell carcinoma (RCC), as discussed in the next section.

Discussion

Computer simulations demonstrated that our ProGAdNet significantly outperformed three other algorithms, glmnet, GENIE3, and lqa, in detecting network changes. The performance gain of ProGAdNet over glmnet and GENIE3 is expected, because glmnet and GENIE3 infer two gene networks separately, while ProGAdNet infers two networks jointly and takes into account the similarity between two networks. Although ProGAdNet and lqa solve the same optimization problem, ProGAdNet significantly outperforms lqa. The performance gap is due to the fact that the lqa algorithm uses an approximation of the objective function, whereas our algorithm solves optimization problem (4) exactly. In other words, our ProGAdNet algorithm can always find the optimal solution to the optimization problem, since the objective function is convex, but the lqa algorithm generally cannot find the optimal solution. Moreover, our computer simulations show that our ProGAdNet algorithm is much faster than the lqa algorithm.

As mentioned earlier, eight of the top 10 genes in the differential gene network of KIRC except C3 and C4A have been reported to be implicated in renal cell carcinoma (RCC). Specifically, NDUFA4L2 is overexpressed in clear cell RCC (ccRCC); its mRNA level is correlated with tumor stage and overall survival time [50, 51]; and the association of NDUFA4L2 with ccRCC is regulated by ELK1 [52]. UMOD expression is downregulated in RCC [53, 54], and the homozygous genotype of UMOD is associated with more aggressive RCC [55]. ANGPTL4 plays an important role in several cancers [56–60]. It has been showed that the serum ANGPTL4 level in RCC patients were higher than the level in patients with other types of solid tumor, such as bladder cancer, breast cancer, gastrointestinal cancer and lung adenocarcinoma, suggesting that the serum ANGPTL4 may be a diagnostic and prognostic biomarker for RCC [61]. NNMT is over-expressed in RCC; its high expression level is significantly associated with unfavorable prognosis of RCC [62]; and it can potentially serve as a biomarker for early detection of RCC [63]. CA9 is a transmembrane member of the carbonic anhydrase family. It is not expressed in healthy renal tissue, but is overexpressed in most ccRCC; it is a promising molecular marker for diagnosis, prognosis and therapy of CCRCC [64, 65]. IGFBP3 shows overexpression in association with markers of poor prognosis in many tumour types [66], including RCC [67]. Single nucleotide polymorphisms (SNPs) at the APOE/C1 locus are found to be associated with RCC risk [68]. The expression of vimentin was upregulated significantly in RCC [69, 70], and the expression level of vimetin was positively correlated with the pathological grade and clinical stage of RCC [70]. These results show that the dNetK gene set that our ProGAdNet algorithm identified from the KIRC dataset is informative about the cancer disease status.

Conclusion

In this paper, we developed a very efficient algorithm, named ProGAdNet, for inference of two gene networks based on gene expression data under two different conditions, which were further used to identify differential changes in the network. Computer simulations showed that our ProGAdNet offered much better inference accuracy than existing algorithms. Analysis of a set of RNA-seq data of breast tumors and normal tissues with ProGAdNet identified a set of genes involved in differential changes of the gene network. A number of gene sets of breast cancer or other types of cancer are significantly enriched in the identified gene set. Network analysis of a kidney cancer dataset also identified a set of genes involved in network changes, and the majority of the top genes identified have been reported to be implicated in kidney cancer. These results show that the identified gene sets are very informative about the disease status of the tissues. As gene network rewiring occurs frequently under different molecular context, our ProGAdNet algorithm provides a valuable tool for identifying changed gene-gene interactions.

Abbreviations

- AUPR:

-

Area under the PR curve

- BRCA:

-

Breast invasive carcinoma

- CoV:

-

Coefficient of variation

- DiNA:

-

Differential network analysis

- FDR:

-

False discovery rate

- FLSA:

-

Fused Lasso signal approximator

- GGM:

-

Gaussian graphical model

- GSEA:

-

Gene set enrichment analysis

- KIRC:

-

Kidney renal clear cell carcinoma

- PE:

-

Prediction error

- PR:

-

Precision-recall

- ProGAdNet:

-

proximal gradient algorithm for differential network

- RCC:

-

Renal cell carcinoma

- SE:

-

Standard error

- SNP:

-

Single nucleotide polymorphism TCGA: The cancer genome atlas

- TF:

-

Transcription factor

References

Ideker T, Krogan NJ. Differential network biology. Mol Syst Biol. 2012; 8:565.

Califano A. Rewiring makes the difference. Mol Syst Biol. 2011; 7:463.

Harbison CT, Gordon DB, Lee TI, Rinaldi NJ, Macisaac KD, Danford TW, Hannett NM, Tagne JB, Reynolds DB, Yoo J, Jennings EG, Zeitlinger J, Pokholok DK, Kellis M, Rolfe PA, Takusagawa KT, Lander ES, Gifford DK, Fraenkel E, Young RA. Transcriptional regulatory code of a eukaryotic genome. Nature. 2004; 431(7004):99–104.

Workman CT, Mak HC, McCuine S, Tagne JB, Agarwal M, Ozier O, Begley TJ, Samson LD, Ideker T. A systems approach to mapping DNA damage response pathways. Science. 2006; 312(5776):1054–9.

Bandyopadhyay S, Mehta M, Kuo D, Sung MK, Chuang R, Jaehnig EJ, Bodenmiller B, Licon K, Copeland W, Shales M, Fiedler D, Dutkowski J, Guenole A, van Attikum H, Shokat KM, Kolodner RD, Huh WK, Aebersold R, Keogh MC, Krogan NJ, Ideker T. Rewiring of genetic networks in response to DNA damage. Science. 2010; 330(6009):1385–9.

Luscombe NM, Babu MM, Yu H, Snyder M, Teichmann SA, Gerstein M. Genomic analysis of regulatory network dynamics reveals large topological changes. Nature. 2004; 431(7006):308–12.

Mani KM, Lefebvre C, Wang K, Lim WK, Basso K, Dalla-Favera R, Califano A. A systems biology approach to prediction of oncogenes and molecular perturbation targets in B-cell lymphomas. Mol Syst Biol. 2008;4. https://doi.org/10.1038/msb.2008.2.

Wang K, Saito M, Bisikirska BC, Alvarez MJ, Lim WK, Rajbhandari P, Shen Q, Nemenman I, Basso K, Margolin AA, Klein U, Dalla-Favera R, Califano A. Genome-wide identification of post-translational modulators of transcription factor activity in human B cells. Nat Biotechnol. 2009; 27(9):829–39.

Bansal M, Belcastro V, Ambesi-Impiombato A, di Bernardo D. How to infer gene networks from expression profiles. Mol Syst Biol. 2007; 3:78.

Markowetz F, Spang R. Inferring cellular networks - a review. BMC Bioinformatics. 2007; 8(Suppl 6):5.

Sun N, Zhao H. Reconstructing transcriptional regulatory networks through genomics data. Stat Methods Med Res. 2009; 18(6):595–617.

Marbach D, Costello JC, Kuffner R, Vega NM, Prill RJ, Camacho DM, Allison KR, Kellis M, Collins JJ, Stolovitzky G. Wisdom of crowds for robust gene network inference. Nat Meth. 2012; 9(8):796–804.

Butte AJ, Tamayo P, Slonim D, Golub TR, Kohane IS. Discovering functional relationships between RNA expression and chemotherapeutic susceptibility using relevance networks. Proc Natl Acad Sci USA. 2000; 97(22):12182–6.

Basso K, Margolin AA, Stolovitzky G, Klein U, Dalla-Favera R, Califano A. Reverse engineering of regulatory networks in human B cells. Nat Genet. 2005; 37(4):382–90.

Dobra A, Hans C, Jones B, Nevins JR, Yao G, West M. Sparse graphical models for exploring gene expression data. J Multivar Anal. 2004; 90:196–212.

Schäfer J, Strimmer K. An empirical Bayes approach to inferring large-scale gene association networks. Bioinform. 2005; 21(6):754–64.

Friedman N, Linial M, Nachman I, Pe’er D. Using Bayesian network to analyze expression data. J Comput Biol. 2000; 7:601–20.

Segal E, Shapira M, Regev A, Pe’er D, Botstein D, Koller D, Fridman N. Module networks: identifying regulatory modules and their condition-specific regulators from gene expression data. Nat Genet. 2003; 34:166–78.

Gardner TS, di Bernardo D, Lorenz D, Collins JJ. Inferring genetic networks and identifying compound mode of action via expression profiling. Science. 2003; 301:102–5.

di Bernardo D, Thompson MJ, Gardner TS, Chobot SE, Eastwood EL, Wojtovich AP, Elliott SJ, Schaus SE, Collins JJ. Chemogenomic profiling on a genome-wide scale using reverse-engineered gene networks. Nat Biotechnol. 2005; 23(3):377–83.

Schäfer J, Strimmer K. A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat Appl Genet Mol Biol. 2005; 4:32.

Bonneau R, Reiss D, Shannon P, Facciotti M, Hood L, Baliga N, Thorsson V. The inferelator: an algorithm for learning parsimonious regulatory networks from systems-biology data sets de novo. Genome Biol. 2006; 7(5):36.

De la Fuente A. From ‘differential expression’ to ‘differential networking’ – identification of dysfunctional regulatory networks in diseases. Trends Genet. 2010; 26(7):326–33.

Tegner J, Yeung MK, Hasty J, Collins JJ. Reverse engineering gene networks: integrating genetic perturbations with dynamical modeling. Proc Natl Acad Sci USA. 2003; 100(10):5944–9.

Jeong H, Mason SP, Barabássi AL, Oltvai ZN. Lethality and centrality in protein networks. Nature. 2001; 411(6833):41–2.

Thieffry D, Huerta AM, Pérez-Rueda E, Collado-Vides J. From specific gene regulation to genomic networks: a global analysis of transcriptional regulation in Escherichia coli. Bioessays. 1998; 20(5):433–40.

Omranian N, Eloundou-Mbebi JM, Mueller-Roeber B, Nikoloski Z. Gene regulatory network inference using fused lasso on multiple data sets. Sci Rep. 2016; 6:20533.

Ulbricht J. lqa: penalized likelihood inference for glms. 2012. http://CRAN.R-project.org/package=lqa.

Lichtblau Y, Zimmermann K, Haldemann B, Lenze D, Hummel M, Leser U. Comparative assessment of differential network analysis methods. Brief Bioinform. 2016; 18(5):837–50.

Thieffry D, Huerta AM, Pérez-Rueda E, Collado-Vides J. From specific gene regulation to genomic networks: a global analysis of transcriptional regulation in escherichia coli. Bioessays. 1998; 20(5):433–40.

Tegner J, Yeung MS, Hasty J, Collins JJ. Reverse engineering gene networks: integrating genetic perturbations with dynamical modeling. Proc Natl Acad Sci. 2003; 100(10):5944–9.

Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. J R Stat Soc Ser B Stat Methodol. 2005; 67:91–108.

Parikh N, Boyd S. Proximal algorithms. Foundations and Trends Ⓡ in Optimization. 2014; 1(3):127–239.

Friedman J, Hastie T, Hofling H, Tibshirani R. Pathwise coordinate optimization. Ann Appl Stat. 2007; 1(2):302–32.

Hoefling H. A path algorithm for the fused lasso signal approximator. J Comput Graph Stat. 2010; 19(4):984–1006.

Tibshirani R, Bien J, Friedman J, Hastie T, Simon N, Taylor J, Tibshirani RJ. Strong rules for discarding predictors in lasso-type problems. J R Statist Soc B. 2012; 74:245–66.

Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010; 33(1):1–1722.

Meinshausen N, Bühlmann P. Stability selection. J R Statist Soc B. 2010; 72(4):417–73.

Tibshirani R. Regression shrinkage and selection via the Lasso. J R Stat Soc Ser B. 1996; 58:267–88.

Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Stat Assoc. 2001; 96:1348–60.

Schaffter T, Marbach D, Floreano D. GeneNetWeaver: in silico benchmark generation and performance profiling of network inference methods. Bioinformatics. 2011; 27(16):2263–70.

Irrthum A, Wehenkel L, Geurts P, et al.Inferring regulatory networks from expression data using tree-based methods. PloS ONE. 2010; 5(9):12776.

Smyth GK. Limma: linear models for microarray data In: Gentleman R, Carey VJ, Huber W, Irizarry RA, Dudoit S, editors. Bioinformatics and Computational Biology Solutions Using R and Bioconductor. New York: Springer: 2005. p. 397–420.

Love MI, Huber W, Anders S. Moderated estimation of fold change and dispersion for RNA-seq data with DESeq2. Genome Biol. 2014; 15(12):550.

Subramanian A, Tamayo P, Mootha VK, Mukherjee S, Ebert BL, Gillette MA, Paulovich A, Pomeroy SL, Golub TR, Lander ES, et al.Gene set enrichment analysis: a knowledge-based approach for interpreting genome-wide expression profiles. Proc Natl Acad Sci. 2005; 102(43):15545–50.

Liberzon A, Birger C, Thorvaldsdóttir H, Ghandi M, Mesirov JP, Tamayo P. The molecular signatures database hallmark gene set collection. Cell Syst. 2015; 1(6):417–25.

Kanehisa M, Goto S, Sato Y, Furumichi M, Tanabe M. Kegg for integration and interpretation of large-scale molecular data sets. Nucleic Acids Res. 2011; 40(D1):109–14.

Fabregat A, Sidiropoulos K, Garapati P, Gillespie M, Hausmann K, Haw R, Jassal B, Jupe S, Korninger F, McKay S, et al.The reactome pathway knowledgebase. Nucleic Acids Res. 2015; 44(D1):481–7.

Nishimura D. Biocarta. Biotech Softw Internet Rep Comput Softw J Scient. 2001; 2(3):117–20.

Minton DR, Fu L, Mongan NP, Shevchuk MM, Nanus DM, Gudas LJ. Role of NADH dehydrogenase (ubiquinone) 1 alpha subcomplex 4-like 2 in clear cell renal cell carcinoma. Clin Cancer Res. 2016; 22(11):2791–801.

Liu L, Lan G, Peng L, Xie X, Peng F, Yu S, Wang Y, Tang X. NDUFA4L2 expression predicts poor prognosis in clear cell renal cell carcinoma patients. Ren Fail. 2016; 38(8):1199–205.

Wang L, Peng Z, Wang K, Qi Y, Yang Y, Zhang Y, An X, Luo S, Zheng J. NDUFA4L2 is associated with clear cell renal cell carcinoma malignancy and is regulated by ELK1. PeerJ. 2017; 5:4065.

Eikrem OS, Strauss P, Beisland C, Scherer A, Landolt L, Flatberg A, Leh S, Beisvag V, Skogstrand T, Hjelle K, et al.Development and confirmation of potential gene classifiers of human clear cell renal cell carcinoma using next-generation RNA sequencing. Scand J Urol. 2016; 50(6):452–62.

Sandim V, de Abreu Pereira D, Kalume DE, Oliveira-Carvalho AL, Ornellas AA, Soares MR, Alves G, Zingali RB. Proteomic analysis reveals differentially secreted proteins in the urine from patients with clear cell renal cell carcinoma. Urol Oncol: Semin Orig Inv. 2016; 34(1):e5–e11.

Trevisani F, Larcher A, Cinque A, Capitanio U, Ripa F, Vago R, Bettiga A, Benigni F, Carenzi C, Muttin F, Bertini R. The association of uromodulin genotype with renal cancer aggressiveness. Eur Urol Focus. 2017. PMID: 28753889. [Epub ahead of print].

Li H, Ge C, Zhao F, Yan M, Hu C, Jia D, Tian H, Zhu M, Chen T, Jiang G, et al. Hypoxia-inducible factor 1 alpha–activated angiopoietin-like protein 4 contributes to tumor metastasis via vascular cell adhesion molecule-1/integrin β1 signaling in human hepatocellular carcinoma. Hepatology. 2011; 54(3):910–9.

Kim SH, Park YY, Kim SW, Lee JS, Wang D, DuBois RN. ANGPTL4 induction by prostaglandin E2 under hypoxic conditions promotes colorectal cancer progression. Cancer Res. 2011; 71(22):7010–20.

Zhang H, Wong C, Wei H, Gilkes D, Korangath P, Chaturvedi P, Schito L, Chen J, Krishnamachary B, Winnard Jr P, et al.HIF-1-dependent expression of angiopoietin-like 4 and L1CAM mediates vascular metastasis of hypoxic breast cancer cells to the lungs. Oncogene. 2012; 31(14):1757.

Le Jan S, Amy C, Cazes A, Monnot C, Lamandé N, Favier J, Philippe J, Sibony M, Gasc J-M, Corvol P, et al.Angiopoietin-like 4 is a proangiogenic factor produced during ischemia and in conventional renal cell carcinoma. Am J Pathol. 2003; 162(5):1521–8.

Verine J, Lehmann-Che J, Soliman H, Feugeas J-P, Vidal J-S, Mongiat-Artus P, Belhadj S, Philippe J, Lesage M, Wittmer E, et al.Determination of angptl4 mRNA as a diagnostic marker of primary and metastatic clear cell renal-cell carcinoma. PLoS ONE. 2010; 5(4):10421.

Dong D, Jia L, Zhou Y, Ren L, Li J, Zhang J. Serum level of ANGPTL4 as a potential biomarker in renal cell carcinoma. Urol Oncol: Semin Orig Inv. 2017;35(5):279–85.

Zhang J, Xie X-y, Yang S-w, Wang J, He C. Nicotinamide N-methyltransferase protein expression in renal cell cancer. J Zhejiang Univ Sci B. 2010; 11(2):136–43.

Kim DS, Choi YD, Moon M, Kang S, Lim J-B, Kim KM, Park KM, Cho NH. Composite three-marker assay for early detection of kidney cancer. Cancer Epidemiol Prev Biomark. 2013; 22(3):390–8.

Tostain J, Li G, Gentil-Perret A, Gigante M. Carbonic anhydrase 9 in clear cell renal cell carcinoma: a marker for diagnosis, prognosis and treatment. Eur J Cancer. 2010; 46(18):3141–8.

Tostain J, Li G, Gentil-Perret A, Gigante M. Carbonic anhydrase 9 in clear cell renal cell carcinoma: a marker for diagnosis, prognosis and treatment. Eur J Cancer; 46(18):3141–8.

Baxter RC. IGF binding proteins in cancer: mechanistic and clinical insights. Nat Rev Cancer. 2014; 14(5):329.

Takahashi M, Papavero V, Yuhas J, Kort E, Kanayama H-O, Kagawa S, Baxter RC, Yang XJ, Gray SG, Teh BT. Altered expression of members of the IGF-axis in clear cell renal cell carcinoma. Int J Oncol. 2005; 26(4):923–31.

Moore LE, Brennan P, Karami S, Menashe I, Berndt SI, Dong LM, Meisner A, Yeager M, Chanock S, Colt J, et al.Apolipoprotein E/C1 locus variants modify renal cell carcinoma risk. Cancer Res. 2009; 69(20):8001–8.

Ho MY, Tang SJ, Chuang MJ, Cha TL, Li JY, Sun GH, Sun KH. TNF- α induces epithelial–mesenchymal transition of renal cell carcinoma cells via a GSK3 β-dependent mechanism. Mol Cancer Res. 2012; 10(8):1109–19.

Shi Z-G, Li S-Q, Li Z-J, Zhu X-J, Xu P, Liu G. Expression of vimentin and survivin in clear cell renal cell carcinoma and correlation with p53. Clin Transl Oncol. 2015; 17(1):65–73.

Acknowledgement

Not applicable.

Funding

This work was supported by the National Science Foundation (CC-1319981 to XC) and National Institute of General Medical Sciences (5R01GM104975 to XC). The funding body did not played any roles in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Availability of data and materials

The software package implementing the ProGAdNet algorithm and computer simulations is in Additional file 1. The RNA-Seq data of 113 breast tumors and 113 normal tissues used in differential gene network analysis can be downloaded from the website of Gnomic Data Commons at https://Gd.cancer.gov/using the TCGA sample IDs in Additional file 2. The RNA-Seq data of 72 KIRC and 72 normal tissues used in network analysis can also be downloaded from the Gnomic Data Commons website using the TCGA sample IDs in Additional file 6.

Author information

Authors and Affiliations

Contributions

XC conceived the idea and supervised the project. XC, CW, and FG designed the algorithm. CW performed computer simulation with linear regression models and analysis of real data. FG performed computer simulation with gene networks. GBG contributed to the development of the algorithm and computer simulations. GD contributed to real data analysis. XC, CW, and FG wrote the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

No ethics approval was needed for this study.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1

Software package for the ProGAdNet algorithm and computer simulations. (ZIP 8786 kb)

Additional file 2

The list of the TCGA IDs of 113 BRCA and 113 normal tissue samples. (XLSX 10 kb)

Additional file 3

The list of 4310 genes whose expression levels in BRCA and normal tissues are analyzed by ProGAdNet. The two files for the expression levels of these genes in tumors and normal tissues are in the software package in Additional file 1. (XLSX 78 kb)

Additional file 4

The list of 268 genes that are involved in at least one changed network edges identified from the gene expression data of BRCA and normal tissues. Also included is the list of differentially expressed genes in BRCA. (XLSX 582 kb)

Additional file 5

The list of 121 MSigDB C2 gene sets that are significantly enriched in the set of 268 dNet genes in Additional file 4 identified from the BRCA data. (XLSX 15 kb)

Additional file 6

The list of the TCGA IDs of 72 KIRC and 72 normal tissue samples. (XLSX 6.55 kb)

Additional file 7

The list of 4310 genes whose expression levels in KIRC and normal tissues are analyzed by ProGAdNet. The two files for the expression levels of these genes in tumors and normal tissues are in the software package in Additional file 1. (XLSX 76 kb)

Additional file 8

The list of 460 genes that are involved in at least three changed network edges identified from the gene expression data of KIRC and normal tissues. Also included is the list of differentially expressed genes in KIRC. (XLSX 646 kb)

Additional file 9

The list of 251 MSigDB C2 gene sets that are significantly enriched in the set of 460 dNetK genes in Additional file 8 identified from the KIRC data. (XLSX 22 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Wang, C., Gao, F., Giannakis, G.B. et al. Efficient proximal gradient algorithm for inference of differential gene networks. BMC Bioinformatics 20, 224 (2019). https://doi.org/10.1186/s12859-019-2749-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12859-019-2749-x