Abstract

Background

This paper presents a case study that describes the evolution of a project entitled "Enhancing QUality-of-care In Psychosis" (EQUIP), which began at about the time that the U.S. Department of Veterans Affairs' Quality Enhancement Research Initiative (QUERI) and implementation science were emerging. EQUIP developed methods and tools to implement chronic illness care principles in the treatment of schizophrenia, and evaluated this implementation using a small-scale controlled trial. The next iteration of the project, EQUIP-2, was further informed by implementation science and the use of QUERI tools.

Methods

This paper reports the background, development, results and implications of EQUIP, and also describes ongoing work in the second phase of the project (EQUIP-2). The EQUIP intervention uses implementation strategies and tools to increase the adoption and implementation of chronic illness care principles. In EQUIP-2, these strategies and tools are conceptually grounded in a stages-of-change model, and include clinical and delivery system interventions and adoption/implementation tools. Formative evaluation occurs in conjunction with the intervention, and includes developmental, progress-focused, implementation-focused, and interpretive evaluation.

Results

Evaluation of EQUIP provided an understanding of quality gaps in schizophrenia care and of how to address them. EQUIP showed that solutions to quality problems in schizophrenia differ by treatment domain, and that these problems are exacerbated by a lack of awareness of evidence-based practices. EQUIP also showed that improving care requires creating resources that help physicians easily implement practice changes, together with intensive education and product champions who help physicians use these resources. Organizational changes, such as the addition of care managers and informatics systems, were shown to help physicians identify problems, make referrals, and monitor follow-up. In EQUIP-2, which is currently in progress, these initial findings were used to develop a more comprehensive approach to implementing and evaluating the chronic illness care model.

Discussion

In QUERI, small-scale projects contribute to the development and enhancement of hands-on, action-oriented, service-directed projects that are grounded in current implementation science. This project supports the concept that QUERI tools can be useful in implementing complex care models oriented toward evidence-based improvement of clinical care.


Background

Shortly after the inauguration of the U.S. Department of Veterans Affairs (VA) Quality Enhancement Research Initiative (QUERI) in 1998, a Request for Proposals (RFP) was released for Investigator-Initiated Research (IIR) projects that focused on implementing clinical guidelines in VA healthcare facilities. Recognizing that implementation of guidelines was not a straightforward endeavor, the RFP suggested particular attention be paid to barriers to guideline implementation, such as "provider issues (knowledge, attitudes, and behavior) and system issues (e.g., resources, culture, patient population, etc.)." At that time, "implementation" was to be operationalized "in terms of observed changes in practice and, when possible, changes in patient and system outcomes (cost, quality of care, average length of stay, policy or procedure changes, practice variations), i.e., not mere dissemination of, or pronouncements about, guidelines." The science of implementation was still in development; specific methods for engaging in implementation science had not yet been spelled out, and instead, more traditional approaches were being used to design and assess the process of implementation.

In this paper, we present the evolution of a project that began at about the same time as QUERI and implementation science, and that has been transformed, with continued funding, into a project that explicitly engages in implementation science as it is currently defined and operationalized within QUERI [1]. The initial project, "Enhancing QUality-of-care In Psychosis," or EQUIP, developed methods and tools to apply a chronic illness care model in schizophrenia, and evaluated the implementation of this care model using a small-scale controlled trial. The EQUIP intervention used strategic tools to increase the adoption and improve the implementation of this care model. It included substantial qualitative methods, though its formative evaluation was modest by current standards.

Evaluation of EQUIP led to a more recent project, EQUIP-2, which is a larger-scale trial of the chronic illness care model implementation currently in progress. Tools from EQUIP have been refined, and improvements have been made to the original implementation method. In addition, EQUIP-2 incorporates a more complete formative evaluation to optimize future, broader implementation of the EQUIP intervention. Our ability to design the project in this way reflects recent advances that have been made in the science of implementation, particularly with regard to the various types of formative evaluation that can be used over the stages of a project [2]. In describing the evolution of the EQUIP project, we illustrate the value of the QUERI expectation that study development and refinement should occur in implementation research within and across phased, improvement-focused projects. We hope the paper will stimulate additional scientific discussion about the challenges of implementation.

This article is one in a Series of articles documenting implementation science frameworks and approaches developed by the U.S. Department of Veterans Affairs (VA) Quality Enhancement Research Initiative (QUERI). QUERI is briefly outlined in Table 1 and described in more detail in previous publications [3, 4]. The Series' introductory article [1] highlights aspects of QUERI that are related specifically to implementation science, and describes additional types of articles contained in the QUERI Series.

Below we provide a brief overview of the Mental Health QUERI Center, which supports the current project. We then briefly describe EQUIP and the evaluation research methods used in the project, followed by a presentation of the EQUIP findings. The second half of the paper concentrates on the methods of EQUIP-2, which was funded through a different mechanism than EQUIP and, as noted above, is more clearly a project that engages in implementation science. We conclude by reflecting on the utility of the QUERI process and proposing directions for future hands-on, action-oriented research [4].

The Mental Health QUERI (MHQ) focus on schizophrenia

Schizophrenia is a chronic medical disorder that occurs in about 1% of the population and results in substantial morbidity and mortality when poorly treated. Although evidence-based practices (EBPs) improve outcomes in schizophrenia, these treatments are often not used [5, 6]. Some EBPs, such as 'Assertive Community Treatment' and 'Individualized Placement and Support,' have not been widely implemented, thereby limiting patient access. For other EBPs, such as clozapine (an antipsychotic medication that is effective for treatment-refractory schizophrenia) and caregiver services, clinicians may lack the competencies to deliver them, and typical clinic organization is not consistent with their use. In addition, evidence-based quality improvement (EBQI [7]) has been nearly impossible in schizophrenia because existing medical records (including electronic medical records) lack reliable information regarding patient symptoms, side-effects, and functioning [8]. Although national organizations, including the VA, have made implementation of appropriate care for schizophrenia a high priority [9, 10], there has been only modest success in developing interventions to overcome implementation barriers [11–13]. Clearly, interventions that enhance the implementation of evidence-based treatments are needed in schizophrenia. The interventions tested in EQUIP and EQUIP-2 involve tools for supporting the implementation of chronic illness care principles in schizophrenia.

EQUIP Methods

This section describes the EQUIP project and implementation intervention methods in more detail, and the methods used in the formative evaluation component of EQUIP.

EQUIP overview and specific aims

EQUIP was funded in 2001 as a Step 4, Phase 1 QUERI project (see Table 1). Its goals were to develop, implement and evaluate a strategy for applying the chronic illness care model to the outpatient treatment of schizophrenia. As noted above, projects responding to the 1998 RFP were geared toward "guideline implementation." In schizophrenia, applying a chronic illness care model requires attention to several sets of pertinent guidelines, including established principles of chronic illness management [14, 15] and national treatment guidelines for schizophrenia. At the time of EQUIP, these guidelines included the American Psychiatric Association guidelines [16], the Agency for Healthcare Research and Quality Patient Outcomes Research Team (PORT) treatment recommendations [17], and a VA treatment algorithm; these guidelines have since been updated [18, 19]. Drawing on these sources, the EQUIP care model focused on improving treatment in three domains: 1) treatment assertiveness and care coordination, 2) guideline-concordant medication management of symptoms and side-effects, and 3) family services.

The specific aims of EQUIP were to: 1) Assess, in a randomized, controlled trial, the effect of a chronic illness care model for schizophrenia relative to usual care on: a) clinician attitudes regarding controlling symptoms and side-effects, and regarding family/caregiver involvement in care; b) clinician practice patterns and adherence to guideline recommendations; c) patient compliance with treatment recommendations; d) patient clinical outcomes (e.g., symptoms, side-effects, quality of life, and satisfaction); and e) patient utilization of treatment services; and 2) Assess, using mixed qualitative and quantitative methods, the impact of the implementation strategy on uptake of the model.

EQUIP research design and methods

The chronic illness care principles were evaluated in outpatient mental health clinics within two large, urban VA medical centers in Southern California. At two of these clinics, psychiatrists were randomized to the best practice intervention (care model) or control (treatment as usual). Case managers and patients were assigned to the same study arm as the psychiatrists with whom they were associated. At a third clinic, located within one of the medical centers, all clinicians and patients were assigned to the control group. The chronic illness care model intervention was developed and implemented, became fully operational in January 2003, and was sustained for more than 15 months. The relevant institutional review boards approved all trial procedures.
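The assignment rule described above (psychiatrists randomized, case managers and patients following their psychiatrist's arm, and one clinic assigned entirely to control) can be sketched in Python. This is a minimal illustration under stated assumptions: the paper does not report the allocation procedure or ratio, so the within-clinic shuffle-and-split used here, together with the function names and clinic labels, is hypothetical.

```python
import random


def randomize_psychiatrists(randomized_clinics, control_only_psychiatrists, seed=None):
    """Assign each psychiatrist to a study arm.

    randomized_clinics: dict mapping a clinic label to its psychiatrist IDs.
    control_only_psychiatrists: IDs at the clinic assigned entirely to control.
    Assumption (not from the paper): within each randomized clinic the list is
    shuffled and split in half; with an odd count, the extra one goes to control.
    """
    rng = random.Random(seed)
    arm = {}
    for psychiatrists in randomized_clinics.values():
        shuffled = list(psychiatrists)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for psych_id in shuffled[:half]:
            arm[psych_id] = "intervention"
        for psych_id in shuffled[half:]:
            arm[psych_id] = "control"
    # Everyone at the control-only clinic is in the control group.
    for psych_id in control_only_psychiatrists:
        arm[psych_id] = "control"
    return arm


def inherit_arm(associated_psychiatrist_id, arm):
    """Case managers and patients take the arm of their associated psychiatrist."""
    return arm[associated_psychiatrist_id]


# Example with hypothetical IDs.
arms = randomize_psychiatrists(
    {"clinic_A": ["a1", "a2", "a3", "a4"], "clinic_B": ["b1", "b2"]},
    control_only_psychiatrists=["c1", "c2"],
    seed=1,
)
print(inherit_arm("c1", arms))  # control
```

A common rationale for assigning at the clinician level is that a patient and the clinicians treating that patient then share one arm, which limits contamination between arms.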

Clinicians were eligible for the study if they practiced at one of the clinics. Eligible clinicians were given information about the study and the opportunity to enroll. Patients were eligible if they were at least 18 years old, had a diagnosis of schizophrenia or schizoaffective disorder, had at least one visit with an enrolled psychiatrist during a four-month sampling period immediately before the enrollment period (i.e., "visit-based sampling" [6]), and had at least one clinic visit during a five-month enrollment period. When an eligible patient came into the clinic during the enrollment period, he or she was provided with information about the study and was given the opportunity to enroll. The intervention included 32 psychiatrists, 1 nurse practitioner, 3 nurse case managers, and 173 patients. The control group included 43 psychiatrists, 1 psychiatric pharmacist, 3 nurse case managers, and 225 patients. Informed consent was obtained from all patients, or their legal conservators, and all clinicians.
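The patient eligibility criteria amount to a small rule set, which the Python sketch below restates. It is an illustration of the criteria as described in the text, not the study's actual screening procedure; the record structures, the string diagnosis labels, and the function name are assumptions, and the caller supplies the boundaries of the four-month sampling period and five-month enrollment period.

```python
from dataclasses import dataclass, field
from datetime import date

ELIGIBLE_DIAGNOSES = {"schizophrenia", "schizoaffective disorder"}


@dataclass
class Visit:
    visit_date: date
    psychiatrist_id: str


@dataclass
class Patient:
    age: int
    diagnosis: str  # assumption: a simple text label rather than a coded diagnosis
    visits: list = field(default_factory=list)


def is_eligible(patient, enrolled_psychiatrists, sampling_start,
                enrollment_start, enrollment_end):
    """Apply the eligibility criteria described in the text (visit-based sampling).

    The sampling period runs from sampling_start up to, but not including,
    enrollment_start, i.e., immediately before the enrollment period.
    The enrollment period runs from enrollment_start through enrollment_end.
    """
    if patient.age < 18:
        return False
    if patient.diagnosis not in ELIGIBLE_DIAGNOSES:
        return False
    # At least one visit with an enrolled psychiatrist during the sampling period.
    seen_in_sampling = any(
        sampling_start <= v.visit_date < enrollment_start
        and v.psychiatrist_id in enrolled_psychiatrists
        for v in patient.visits
    )
    # At least one clinic visit during the enrollment period.
    seen_in_enrollment = any(
        enrollment_start <= v.visit_date <= enrollment_end for v in patient.visits
    )
    return seen_in_sampling and seen_in_enrollment
```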

The study included clinical interventions, delivery system interventions, and adoption/implementation tools. These interventions and tools are presented in Table 2. One of the innovations of EQUIP was the use of the Medical Informatics Network Tool (MINT) to provide instant, summarized clinical information (via a "PopUp" window, see Table 2) to clinicians as they accessed the patient's medical record. As noted in the table, adoption and implementation tools were also used to enhance the utility and effectiveness of the intervention.

EQUIP formative evaluation methods

In addition to the implementation strategy described above, EQUIP involved formative evaluation. Table 3 depicts the methods that were utilized in the evaluation.

Research staff conducted pre- and post-implementation semi-structured interviews and surveys to assess clinicians' experience with research, their clinical practice and competencies, and their expectations and observations of the implementation. At the time of the post-implementation survey, the research team was already planning EQUIP-2 (described below), so specific feedback for the next phase of implementation was also sought. We attempted to interview and survey as many psychiatrists as possible from both the intervention and control arms of the study.

Mid-implementation interviews and surveys were conducted to assess the process of intervention implementation. The mid-implementation interviews were conducted by an independent contractor (A. Brown). The surveys asked clinicians specifically how the informatics system was working for them and about the effect of the Quality Report. Both the mid-implementation interview and survey were conducted with a sub-sample of psychiatrists: those who were most involved with the implementation because they had higher caseloads of patients in the sample. Because this feedback was gathered midway through implementation, changes could be made during the study to make relevant interventions more effective and appealing.

EQUIP results

Main evaluation findings

The evaluation of EQUIP provided an understanding of quality gaps and how to address related problems in schizophrenia. Our findings are summarized in the left column of Table 4.

EQUIP revealed that solutions to quality problems in schizophrenia differ by treatment domain. For example, challenges to implementing family services proved to be very different from challenges to implementing weight management using wellness groups. Improving family services required assessment of each patient-caregiver relationship, intensive negotiation with patients and caregivers, major care reorganization to accommodate family involvement, and attention to clinician competencies (e.g., knowledge, attitude, and skills). Improving weight and wellness required assessment of the problem in each patient, the establishment of therapeutic groups, involvement of nutrition and recreational services, and help with referrals and follow-ups.

EQUIP also revealed that quality problems can arise from poor clinician competencies [20]. For example, we found clinician competency problems in the use of clozapine. This clozapine competency problem is well established anecdotally, although there is little empirical evidence of it. The main competency problems that we encountered, in at least a subset of clinicians, were: 1) clinicians were not trained in the use of clozapine, or had not used it despite training; 2) clinicians were not credentialed to use clozapine in their settings; 3) clinicians were discouraged by the possibility that having patients on clozapine would necessitate longer clinical visits with more clinical effort; and/or 4) clinicians did not believe clozapine would be helpful. Quality problems can also arise due to difficulty in changing psychiatric treatments. In EQUIP, we noted that psychiatrists made minimal use of data showing that their patients had high levels of symptoms and side-effects (Quality Reports), and they also made minimal use of the guidelines that were easily accessible via the MINT "PopUp" (see Table 2) that was available on their computer at every clinical encounter [21].

Quality problems such as these can be exacerbated by a general lack of awareness of evidence-based practices, such as approaches to managing increased weight or treatment-refractory psychosis. During the course of implementation, it became apparent to the research team that increasing the intensity of follow-up (e.g., adding clinic visits) for severely ill patients was of limited use. Clinicians typically did not change treatments in response to clinical data. Therefore, additional treatment visits were of limited value because they were not likely to lead to appropriate changes in treatment in response to psychosis or medication side-effects.

Given these persistent quality problems, we concluded that improving care required creating resources to support clinicians and reorganizing care so that clinicians could easily implement changes in their clinical practices. There was also a need for intensive education and for product champions who would work with clinicians to encourage awareness and use of these resources. Care managers and the informatics system did help physicians identify clinical problems in their patients, but these interventions and tools needed reassessment and possibly redesign. For example, through the involvement of the case managers, we learned that tools designed for clinicians may not have the same appeal across types of clinicians: psychiatrists and case managers (though the sample of case managers was small) had differing perspectives on the value of having their computer provide clinical data (e.g., PopUps) during the encounter. This supports the assertion that formative evaluation data must be gathered from multiple perspectives. As Lyons et al. point out, it is essential to examine the perspectives of multiple individuals, because the "single-provider focus does not well represent clinical reality as experienced by interdisciplinary teams" [22].

Finally, we learned that improving care within the VA healthcare system (and perhaps other large healthcare organizations) can require high-level organizational involvement. For example, implementing wellness groups or clozapine clinics required active involvement from nutrition and pharmacy, respectively, which were medical center-wide services. Indeed, sometimes management of these services resided at the level of the Veterans Integrated Service Network (VISN; the 21 VA regions of the United States). Nutrition and pharmacy services did not respond to requests from staff at the level of mental health clinics, and this lack of responsiveness impeded our ability to implement a clozapine clinic or to involve the nutrition department in the wellness programs.

EQUIP-2 Methods

This section describes: 1) the EQUIP-2 project and conceptual framework, 2) the evolution of the EQUIP-2 implementation strategy (i.e., interventions and tools), and 3) the formative evaluation component of EQUIP-2.

EQUIP-2 overview

As noted above, the next phase of work building toward national roll-out of the EQUIP intervention is EQUIP-2 – a Step 4, Phase 2 multi-site evaluation (See Table 1). As the Overview to the Series notes [1], projects within this phase are considered "clinical trials to further refine and evaluate an improvement/implementation program." These trials involve a small sample of facilities conducting the implementation program under somewhat idealized conditions. Moreover, it is noted [4] that these projects require active research team support and involvement, plus modest real-time refinements to maximize the likelihood of success and to study the process for replication requirements. They employ formative evaluation (to monitor and feed back information regarding implementation and acceptance and impacts), as well as development and use of formal measurement tools and evaluation methods.

EQUIP-2 is a three-year project, funded in January 2006, that aims to refine our implementation strategy and to conduct a broad formative evaluation at eight sites in four VISNs, using the implementation approaches adopted by QUERI. As noted above, EQUIP-2 was funded through a different mechanism than EQUIP: it is a Service Directed Project (SDP) [4], which carries a specific set of expectations for addressing what are called "quality gaps" (here, the current lack of evidence-based care for schizophrenia described above).

The project reflects the growth in knowledge, both at the researcher and study reviewer levels, regarding implementation science. More specifically, unlike EQUIP, this study includes a conceptually-driven study of the process of implementation that includes the effect of various interventions on patients, clinicians, and organizations, and a more conceptually-based implementation strategy. The early implementation efforts described above also prompted the EQUIP-2 investigators to incorporate and/or strengthen several components of the multi-phasic evaluation as described by Stetler and colleagues [2]. These authors recommend: diagnostic analysis of organizational readiness (e.g., using relevant surveys) and interviews regarding attitudes and beliefs; implementation-focused evaluation examining the context where change is taking place; maintenance and optimization of research implementation interventions; and provision of feedback, e.g., regarding progress on targeted goals. They also recommend collecting data from experts, representative clinicians/administrators, and other key informants regarding both pre-implementation barriers and facilitators and post-implementation perceptions of the evidence-based practice and implementation strategy. All of these elements are being utilized in EQUIP-2 and are described further below with regard to the formative evaluation.

Conceptual framework: Simpson Transfer Model & PRECEDE

Though informed by more than one conceptual framework, EQUIP-2 is organized around the Simpson Transfer Model (STM). This model guides the development and refinement of a diversified, flexible menu of tools and interventions to improve schizophrenia care. Incorporating the notion of readiness to change [23] at both the individual and organizational levels, Simpson developed a program change model for transferring research into practice [24]. The STM has provided important conceptual input to many studies in technology transfer [25–27]. This model involves four action stages: exposure, adoption, implementation, and practice. Exposure is dedicated to introducing and training in the new technology; adoption refers to an intention to try a new technology/innovation through a program leadership decision and subsequent support; implementation refers to exploratory use of the technology/innovation; and practice refers to routine use of the technology/innovation, likely with the help of customization of the technology/innovation at the local level. Crucial to moving from exposure to implementation are personal motivations of staff and resources provided by the institution (e.g., training, leadership), organizational characteristics such as "climate for change" (e.g., staff cohesion, presence of product champions, openness to change), staff attributes (e.g., adaptability, self-efficacy), and characteristics of the innovations themselves (e.g., complexity, benefit, observability).
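The four STM action stages form an ordered sequence, which can be encoded as a small data structure, for example to tag formative evaluation observations by stage. The Python sketch below is only an illustrative encoding of the text; the STM does not prescribe this representation, the class and dictionary names are hypothetical, and treating progression as strictly linear is a simplification.

```python
from enum import IntEnum


class STMStage(IntEnum):
    """The four action stages of the Simpson Transfer Model, in order."""
    EXPOSURE = 1        # introduction of, and training in, the new technology
    ADOPTION = 2        # intention to try it, via leadership decision and support
    IMPLEMENTATION = 3  # exploratory use of the innovation
    PRACTICE = 4        # routine use, likely with local customization


def next_stage(stage):
    """Return the following stage; PRACTICE is the final stage."""
    if stage is STMStage.PRACTICE:
        return stage
    return STMStage(stage + 1)


# Factors the text describes as crucial to moving from exposure to implementation.
TRANSITION_FACTORS = {
    "staff motivation and institutional resources": ["training", "leadership"],
    "organizational climate for change": [
        "staff cohesion", "product champions", "openness to change",
    ],
    "staff attributes": ["adaptability", "self-efficacy"],
    "innovation characteristics": ["complexity", "benefit", "observability"],
}
```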

EQUIP-2 also draws upon the PRECEDE planning model for designing behavior change initiatives [28]. Because the STM does not recommend specific behavior change tools to be used in a knowledge transfer intervention, additional guidance is necessary regarding development of the implementation framework. The PRECEDE acronym stands for "predisposing, reinforcing, and enabling factors in diagnosis and evaluation." PRECEDE stresses the importance of applying multiple interventions to influence the adoption of targeted clinician behaviors. These include: 1) academic detailing and consultation with an opinion leader or clinical expert, which can help predispose clinicians to be willing and able to make the desired changes; 2) patient screening technologies, clinical reminders, and/or other clinical support tools that can enable clinicians to change; and 3) social or economic incentives that can reinforce clinicians' implementation of targeted behaviors.

A key part of the PRECEDE model is the active participation of the target audience in defining the issues and factors that influence targeted behaviors, and in developing and implementing solutions [28]. This participation principle is consistent with the social marketing framework, which emphasizes the importance of understanding a target audience's initial and ongoing perceptions of the innovation, in order to facilitate behavior change [29, 30]. Both PRECEDE and social marketing theory state that messages and interventions should be tailored to perceptions in order to influence the desired behavior change.

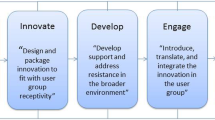

Taken together, the models and frameworks discussed above suggest that the impact of implementation efforts will be maximized when they: 1) are based on assessments of the needs, barriers, and incentives of targeted end users; 2) are based on an understanding of the local context; 3) involve representatives of diverse stake-holder groups in the planning process; 4) use expert involvement in planning, especially when behaviors to be adopted and/or changed are complex; 5) draw on marketing principles for developing and disseminating intervention tools; and 6) secure support and involvement from top level management and product champions [31–33]. Each of these factors is integrated into the STM, which guides the EQUIP-2 strategy and formative evaluation. Table 5 provides an overview of how we will engage in each phase of the STM.

Evolution of the schizophrenia implementation strategy

Several modifications were made in EQUIP-2 as a result of the findings and observations in EQUIP. An overview of each type of strategy is provided below; Table 4 (right column) notes the specific changes made in EQUIP-2 based on findings from EQUIP.

Evidence-based clinical/therapeutic practices

EQUIP-2 is more targeted than EQUIP in its approach to strengthening specific evidence-based practices within the care model. EQUIP-2 focuses on quality improvement by assisting staff to implement specific evidence-based practices that have shown strong impacts on outcomes [7]. In addition, since EQUIP's onset, the VA has made a national commitment to implementing "recovery-oriented" practices in schizophrenia, which is embodied in the President's New Freedom Commission on Mental Health that was established in 2002 [34], and the VA's Mental Health Strategic Plan [10]. Thus, EQUIP-2 provides implementation support on evidence-based practices that support recovery. Each VISN involved was asked to choose two evidence-based practices from a list of four practices that EQUIP-2 was prepared to support (Table 6). All four VISNs chose the same two targets – wellness and supported employment.

Delivery system interventions

During the intervention period, a monthly quality meeting is held at each intervention clinic. These meetings are "local": they are attended by the site PI (principal investigator), the quality coordinator, the product champion, and clinicians. During the meeting, each clinician is given his/her personal "Quality Report." Quality meetings: 1) allow pervasive quality problems to be identified, 2) optimize teamwork by encouraging group problem-solving on patient management problems, and 3) identify resources needed to address care problems. Lastly, high-achieving clinicians (i.e., those who are accomplishing the specific goals of each care target) are discussed and incentives are distributed.

Adoption/implementation tools

In terms of marketing, all of the sites had an explicit project "kick-off" that signaled the start of the project and promoted a sense of excitement. Educational activities and training sessions commenced both at the coordinating center and at the individual clinics.

To promote further engagement and collaboration, additional levels of personnel are involved in the project from its inception. Prior to enrollment, we have held monthly planning calls involving clinic staff, regional managers, and medical center leadership. These calls address practical issues regarding study set-up, as well as plans for marketing. Once enrollment begins, we will hold monthly Implementation Team calls involving site PIs, site project directors, product champions, VISN-level staff, and the research team. These calls will examine and address implementation issues as they arise and will work toward sustainability of the model. During implementation, we retain the research nurse position from EQUIP in the form of "Quality Coordinators." In EQUIP, these individuals were reported to have made a difference not only to clinicians, by providing additional clinical information about patients, but also to patients, by providing an additional source of support. Further, we engage case managers from the beginning of the project.

We ask staff to identify the people they turn to for expertise in the chosen care targets, and we invite those individuals to volunteer as product champions for the project. Product champions are asked to participate in the monthly Implementation Team calls, as well as other mechanisms of involvement.

Formative evaluation

As noted above, this Phase 2 implementation project involves a more formal evaluation component, because program refinement is especially important at this stage. Below we describe each component of the formative evaluation.

Developmental evaluation

In EQUIP, we observed that organizational climate and staff engagement and structure significantly affected the degree to which the tools presented in the project were effective. This observation is consistent with emerging implementation science, which itself is increasingly recognizing the importance of context. In order to better understand and "diagnose" [2] the organizational climate of the sites, the Simpson Transfer Model organizational readiness measures will be used in EQUIP-2. We also conduct key informant interviews in order to better understand the clinics' preparedness for the intervention.

Implementation-focused evaluation

Each month during implementation, there are Implementation Team meetings, which serve to link intervention sites and the research team from the coordinating center. Here, barriers and facilitators to implementation are identified and discussed, and group problem-solving and any needed reorganization of care is planned and documented. Product champions and other site personnel also report on any informal feedback they have received about problems with the implementation. As implementation continues, this team works toward sustainability of the model. Minutes from these meetings, project managers' field notes, and quality coordinators' logs are analyzed to evaluate implementation throughout the intervention period. In addition, midway through the intervention, the research team conducts semi-structured interviews with clinicians and clinic managers to evaluate the operationalization of the intervention, necessary refinements to the intervention, and areas of desired guidance. In order to reduce burn-out, promote and maintain enthusiasm for the project, and to optimize successful implementation overall, various interventions are modified if feedback and other formative data indicate that change is necessary.

Progress-focused evaluation

During the course of the project, in order to monitor progress toward the project's goals, we evaluate the degree to which physicians respond to the patient self-assessments. For example, we assess whether they provide the necessary and/or requested referrals to supported employment, and whether they refer patients to wellness groups for weight management. We also assess the Quality Reports for other outcome progress. When we find that progress is not being made toward the goals, we work in coordination with the clinics to identify barriers to achieving the goals and strategies for addressing and mitigating the barriers.
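One way to quantify the degree to which physicians respond to patient self-assessments is a referral response rate per clinician and care target. The paper does not specify the measure, so the Python sketch below is an assumption: it supposes each need identified in a self-assessment is recorded with the clinician, the care target, and whether a referral followed, and the record and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FlaggedNeed:
    """One need identified in a patient self-assessment (hypothetical record)."""
    clinician_id: str
    care_target: str  # e.g., "supported employment" or "wellness"
    referred: bool    # whether the clinician made the corresponding referral


def referral_response_rates(needs):
    """Proportion of flagged needs that received a referral, per (clinician, care target)."""
    totals = defaultdict(int)
    referred = defaultdict(int)
    for need in needs:
        key = (need.clinician_id, need.care_target)
        totals[key] += 1
        if need.referred:
            referred[key] += 1
    return {key: referred[key] / totals[key] for key in totals}


rates = referral_response_rates([
    FlaggedNeed("dr1", "wellness", True),
    FlaggedNeed("dr1", "wellness", False),
    FlaggedNeed("dr2", "supported employment", True),
])
print(rates[("dr1", "wellness")])  # 0.5
```

Low rates for a given clinician or care target would mark where progress is lagging, which is the point at which the text describes working with clinics to identify barriers.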

Interpretive evaluation

At the conclusion of the project, we will conduct semi-structured interviews with clinicians and clinic managers regarding the usefulness of the EQUIP-2 strategy, their satisfaction with the implementation process, barriers to and facilitators of implementation, and recommendations for future refinements [2]. To re-evaluate the delivery system interventions, we will collect quantitative data on the usability of the informatics system. Measures of organizational readiness will be repeated to describe changes in organizational climate over the course of the project, as another potential influence on successful implementation. Finally, we will examine the extent to which the care model has become "institutionalized" (i.e., the degree to which it has become part of routine clinical practice).

For the final interpretive evaluation, we will examine all formative evaluation data in light of our outcome data in order to provide: alternative explanations of results; clarification of the success (or failure) of our implementation effort; and an assessment of how reproducible our implementation strategy would be in a broader segment of the VA [4].

Discussion

The EQUIP implementation program took shape while the field of implementation science was itself developing. The experience in EQUIP, combined with the guidance received in developing the subsequent EQUIP-2 protocol, led to a Step 4, Phase 2 activity that engages more explicitly in evidence-based quality improvement and formal evaluation. Although the formative evaluation in EQUIP was more limited than more recently developed formative evaluations, it produced important new information about quality improvement in schizophrenia. It is widely acknowledged that there are major problems with the quality of routine care for schizophrenia, but there has been limited research on how to improve this care and on the challenges involved. EQUIP identified effective and ineffective methods and strategies for improving care, and its results can be of substantial use to those working to improve treatment and outcomes in this disorder.

We agree with Kitson, Harvey, & McCormack [35] that the level and nature of the evidence, the environment in which the research is placed, and the manner in which implementation is undertaken can be equally important to successful implementation: "Implementation may not be successful within a context that is receptive to change, because there is non-existent or ineffective facilitation ... For implementation to be successful, there needs to be a clear understanding of the nature of evidence being used, the quality of context in terms of its ability to cope with change and type of facilitation needed to ensure a successful change process" (p. 152). Accordingly, our approach in EQUIP-2 gives equal weight to the interventions, the environment, and the process, and involves a thorough assessment of each component.

A multi-faceted evaluation is needed to develop a comprehensive understanding of the barriers to and facilitators of implementing the chronic illness care model in schizophrenia. In EQUIP, barriers to improving care varied by evidence-based practice, and included under-developed clinician competencies, burn-out among clinicians, limited availability of psychosocial treatments, inadequate attention to medication side-effects, and organization of care that was not consistent with high-quality practice. Facilitators of improving care included interest among clinicians and policymakers in improving care, and robust specialty mental health services. Summative evaluation alone is not sufficient to understand these components. Instead, as begun in EQUIP and more fully developed in EQUIP-2, we believe that a conceptually driven formative evaluation can provide more detail about the interactions among interventions, process, and context. Research such as EQUIP-2 should help determine the relative importance of each component, indicating when one component needs more attention than another during a quality improvement implementation project [36]. Scientifically based qualitative evaluations of quality improvement in schizophrenia may guide project development, strengthen later stages of intervention development (as illustrated by the development of EQUIP-2), and inform future mixed-methods evaluation within the field of implementation science.

Disclaimer

The findings and conclusions in this document are those of the authors, who are responsible for its contents, and do not necessarily represent the views of the U.S. Department of Veterans Affairs.

References

Stetler CB, Mittman BS, Francis J: QUERI series overview paper. 2008

Stetler CB, Legro MW, Wallace CM, Bowman C, Guihan M, Hagedorn H, Kimmel B, Sharp ND, Smith JL: The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med. 2006, 21 Suppl 2: S1-8.

McQueen L, Mittman BS, Demakis JG: Overview of the Veterans Health Administration (VHA) Quality Enhancement Research Initiative. J Am Med Inform Assoc. 2004, 11: 339-343. 10.1197/jamia.M1499.

Demakis JG, McQueen L, Kizer KW, Feussner JR: Quality Enhancement Research Initiative (QUERI): A collaboration between research and clinical practice. Med Care. 2000, 38 (6 Suppl 1): I17-25.

Lehman AF: Quality of care in mental health: the case of schizophrenia. Health Aff (Millwood). 1999, 18 (5): 52-65. 10.1377/hlthaff.18.5.52.

Young AS, Sullivan G, Burnam MA, Brook RH: Measuring the quality of outpatient treatment for schizophrenia. Arch Gen Psychiatry. 1998, 55 (7): 611-617. 10.1001/archpsyc.55.7.611.

Rubenstein LV, Meredith LS, Parker LE, Gordon NP, Hickey SC, Oken C, Lee ML: Impacts of evidence-based quality improvement on depression in primary care. J Gen Intern Med. 2006, 21 (10): 1027-1035. 10.1111/j.1525-1497.2006.00549.x.

Cradock J, Young AS, Sullivan G: The accuracy of medical record documentation in schizophrenia. J Behav Health Serv Res. 2001, 28 (4): 456-465. 10.1007/BF02287775.

Institute of Medicine: Improving the quality of healthcare for mental health and substance-use conditions: quality chasm series. 2006, Washington, D.C., National Academies Press, [http://www.nap.edu/catalog.php?record_id=11470#toc]

Veterans Health Administration: VHA comprehensive mental health strategic plan. [http://www.ha.osd.mil/afeb/meeting/dec2004meeting/Brown-VHA_Comprehensive_Mental_Health_Strategic_Plan.ppt#256]

Lehman AF, Postrado LT, Roth D, McNary SW, Goldman HH: Continuity of care and client outcomes in the Robert Wood Johnson Foundation Program on chronic mental illness. Milbank Q. 1994, 72 (1): 105-122. 10.2307/3350340.

Young AS: Evaluating and improving the appropriateness of treatment for schizophrenia. Harv Rev Psychiatry. 1999, 7 (2): 114-118. 10.1093/hrp/7.2.114.

Miller AL, Crismon ML, Rush AJ, Chiles J, Kashner TM, Toprac M, Carmody T, Biggs M, Shores-Wilson K, Witte B, Bow-Thomas C, Velligan DI, Trivedi M, Suppes T, Shon S: The Texas medication algorithm project: clinical results for schizophrenia. Schizophr Bull. 2004, 30 (3): 627-647.

Bodenheimer T, Wagner EH, Grumbach K: Improving primary care for patients with chronic illness: the chronic care model, Part 2. JAMA. 2002, 288 (15): 1909-1914. 10.1001/jama.288.15.1909.

Wagner EH, Austin BT, Von Korff M: Organizing care for patients with chronic illness. Milbank Q. 1996, 74 (4): 511-544. 10.2307/3350391.

Practice guideline for the treatment of patients with schizophrenia. American Psychiatric Association. Am J Psychiatry. 1997, 154 (4 Suppl): 1-63.

Lehman AF, Steinwachs DM: Translating research into practice: the Schizophrenia Patient Outcomes Research Team (PORT) treatment recommendations. Schizophr Bull. 1998, 24 (1): 1-10.

Lehman AF, Kreyenbuhl J, Buchanan RW, Dickerson FB, Dixon LB, Goldberg R, Green-Paden LD, Tenhula WN, Boerescu D, Tek C, Sandson N, Steinwachs DM: The Schizophrenia Patient Outcomes Research Team (PORT): updated treatment recommendations 2003. Schizophr Bull. 2004, 30 (2): 193-217.

Lehman AF, Lieberman JA, Dixon LB, McGlashan TH, Miller AL, Perkins DO, Kreyenbuhl J, American Psychiatric Association, Steering Committee on Practice Guidelines: Practice guideline for the treatment of patients with schizophrenia, second edition. Am J Psychiatry. 2004, 161 (2 Suppl): 1-56. [http://www.psychiatryonline.com/content.aspx?aid=45859]

Hoge MA, Paris M, Adger H, Collins FL, Finn CV, Fricks L, Gill KJ, Haber J, Hansen M, Ida DJ, Kaplan L, Northey WF, O'Connell MJ, Rosen AL, Taintor Z, Tondora J, Young AS: Workforce competencies in behavioral health: an overview. Adm Policy Ment Health. 2005, 32 (5-6): 593-631. 10.1007/s10488-005-3259-x.

Young AS, Mintz J, Cohen AN, Chinman MJ: A network-based system to improve care for schizophrenia: the medical informatics network tool (MINT). J Am Med Inform Assoc. 2004, 11 (5): 358-367. 10.1197/jamia.M1492.

Lyons SS, Tripp-Reimer T, Sorofman BA, Dewitt JE, Bootsmiller BJ, Vaughn TE, Doebbeling BN: VA QUERI informatics paper: information technology for clinical guideline implementation: perceptions of multidisciplinary stakeholders. J Am Med Inform Assoc. 2005, 12 (1): 64-71. 10.1197/jamia.M1495.

Prochaska JO, DiClemente CC, Norcross JC: In search of how people change. Applications to addictive behaviors. Am Psychol. 1992, 47 (9): 1102-1114. 10.1037/0003-066X.47.9.1102.

Simpson DD: A conceptual framework for transferring research to practice. J Subst Abuse Treat. 2002, 22 (4): 171-182. 10.1016/S0740-5472(02)00231-3.

Dansereau DF, Dees SM: Mapping training: the transfer of a cognitive technology for improving counseling. J Subst Abuse Treat. 2002, 22 (4): 219-230. 10.1016/S0740-5472(02)00235-0.

Liddle HA, Rowe CL, Quille TJ, Dakof GA, Mills DS, Sakran E, Biaggi H: Transporting a research-based adolescent drug treatment into practice. J Subst Abuse Treat. 2002, 22 (4): 231-243. 10.1016/S0740-5472(02)00239-8.

Roman PM, Johnson JA: Adoption and implementation of new technologies in substance abuse treatment. J Subst Abuse Treat. 2002, 22 (4): 211-218. 10.1016/S0740-5472(02)00241-6.

Green LW, Kreuter MW, Deeds SG, Partridge KB: Health education planning model (PRECEDE). Health education planning: a diagnostic approach. Edited by: Green LW, Kreuter MW, Deeds SG, Partridge KB. 1980, Mountain View, CA, Mayfield Publishers.

McDonald KM, Graham ID, Grimshaw J: Toward a theoretic basis for quality improvement interventions. Closing the quality gap: a critical analysis of quality improvement strategies. Edited by: Shojania KG, McDonald KM, Wachter RM, Owens DK. 2004, Rockville, MD, Stanford University - University of California San Francisco Evidence-based Practice Center, Agency for Healthcare Research and Quality, 1: diabetes mellitus and hypertension.

Weinreich NK: Hands-on social marketing. 1999, Thousand Oaks, CA, Sage Publications.

Bartholomew LK, Parcel GS, Kok G, Gottlieb NH: Changing behavior and environment: how to plan theory- and evidence-based disease management programs. Changing patient behavior: improving outcomes in health and disease management. Edited by: Patterson R. 2001, San Francisco, Jossey-Bass, 73-112.

Rosenheck RA: Organizational process: A missing link between research and practice. Psychiatr Serv. 2001, 52 (12): 1607-1612. 10.1176/appi.ps.52.12.1607.

Rubenstein LV, Mittman BS, Yano EM, Mulrow CD: From understanding health care provider behavior to improving health care: the QUERI framework for quality improvement. Quality Enhancement Research Initiative. Med Care. 2000, 38 (6 Suppl 1): I129-I141.

President's New Freedom Commission on Mental Health. [http://www.mentalhealthcommission.gov]

Kitson A, Harvey G, McCormack B: Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998, 7 (3): 149-158.

Rycroft-Malone J, Kitson A, Harvey G, McCormack B, Seers K, Titchen A, Estabrooks C: Ingredients for change: revisiting a conceptual framework. Qual Saf Health Care. 2002, 11 (2): 174-180. 10.1136/qhc.11.2.174.

Desert Pacific MIRECC. [http://www.desertpacific.mirecc.va.gov/equip]

Lewis JR: IBM computer usability satisfaction questionnaires: psychometric evaluation and instructions for use. Int J Hum Comput Interact. 1995, 7: 57-78.

McEvoy JP, Lieberman JA, Stroup TS, Davis SM, Meltzer HY, Rosenheck RA, Swartz MS, Perkins DO, Keefe RS, Davis CE, Severe J, Hsiao JK: Effectiveness of clozapine versus olanzapine, quetiapine, and risperidone in patients with chronic schizophrenia who did not respond to prior atypical antipsychotic treatment. Am J Psychiatry. 2006, 163 (4): 600-610. 10.1176/appi.ajp.163.4.600.

Lieberman JA, Stroup TS, McEvoy JP, Swartz MS, Rosenheck RA, Perkins DO, Keefe RS, Davis SM, Davis CE, Lebowitz BD, Severe J, Hsiao JK: Effectiveness of antipsychotic drugs in patients with chronic schizophrenia. N Engl J Med. 2005, 353 (12): 1209-1223. 10.1056/NEJMoa051688.

Menza M, Vreeland B, Minsky S, Gara M, Radler DR, Sakowitz M: Managing atypical antipsychotic-associated weight gain: 12-month data on a multimodal weight control program. J Clin Psychiatry. 2004, 65 (4): 471-477.

Glynn SM, Cohen AN, Dixon LB, Niv N: The potential impact of the recovery movement on family interventions for schizophrenia: opportunities and obstacles. Schizophr Bull. 2006, 32 (3): 451-463. 10.1093/schbul/sbj066.

Cook JA, Lehman AF, Drake R, McFarlane WR, Gold PB, Leff HS, Blyler C, Toprac MG, Razzano LA, Burke-Miller JK, Blankertz L, Shafer M, Pickett-Schenk SA, Grey DD: Integration of psychiatric and vocational services: a multisite randomized, controlled trial of supported employment. Am J Psychiatry. 2005, 162 (10): 1948-1956. 10.1176/appi.ajp.162.10.1948.

Acknowledgements

The authors thank Daniel Auerbach, MD; Michelle Briggs, RN; Qing Chen; Kimmie Kee, PhD; Kirk McNagny, MD; Daniel Mezzacapo, RN; Jim Mintz, PhD; Jennifer Pope; Christopher Reist, MD; Kuo-Chung Shih; and Julia Yosef, MA.

This project was supported by the Department of Veterans Affairs through the Health Services Research & Development Service (RCD 00-033 and CPI 99-383) and the Desert Pacific Mental Illness Research, Education and Clinical Center (MIRECC); and by the National Institute of Mental Health (NIMH) UCLA-RAND Center for Research on Quality in Managed Care (MH 068639).

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

AHB conducted the independent qualitative study, analyzed the data, and drafted the manuscript. ANC served as the project director, and she conducted the pre-post EQUIP semi-structured interviews, analyzed the data, and helped draft the manuscript. MJC collaborated on instrument development and analyses, and helped draft the manuscript. CK served as a product champion for the project, and helped draft the manuscript. ASY conceived of the study, participated in its design and coordination, and helped draft the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Brown, A.H., Cohen, A.N., Chinman, M.J. et al. EQUIP: Implementing chronic care principles and applying formative evaluation methods to improve care for schizophrenia: QUERI Series. Implementation Sci 3, 9 (2008). https://doi.org/10.1186/1748-5908-3-9

DOI: https://doi.org/10.1186/1748-5908-3-9