Abstract

Background

The extended Consolidated Standards of Reporting Trials (CONSORT) Statement for Abstracts was developed to improve the quality of reports of randomized controlled trials (RCTs) because readers often base their assessment of a trial solely on the abstract. To date, few data exist regarding whether it has achieved this goal. We evaluated the extent of adherence to the CONSORT for Abstracts statement in RCT abstracts published by four high-impact general medical journals.

Methods

A descriptive analysis was conducted of RCT abstracts published in 2010 in The New England Journal of Medicine (NEJM), The Lancet, The Journal of the American Medical Association (JAMA), and the British Medical Journal (BMJ). Two reviewers independently extracted data from abstracts identified through a MEDLINE/PubMed search.

Results

We identified 271 potential RCT abstracts meeting our inclusion criteria. More than half of the abstracts identified the study as randomized in the title (58.7%; 159/271), reported the specific objective/hypothesis (72.7%; 197/271), described participant eligibility criteria with settings for data collection (60.9%; 165/271), detailed the interventions for both groups (90.8%; 246/271), and clearly defined the primary outcome (94.8%; 257/271). However, the methodological quality domains were inadequately reported: allocation concealment (11.8%; 32/271) and details of blinding (21.0%; 57/271). Reporting the primary outcome results for each group was done in 84.1% (228/271). Almost all of the abstracts reported trial registration (99.3%; 269/271), whereas reports of funding and of harm or side effects from the interventions were found in only 47.6% (129/271) and 42.8% (116/271) of the abstracts, respectively.

Conclusions

These findings show inconsistencies and non-adherence to the CONSORT for Abstracts guidelines, especially in the methodological quality domains. Improvements in the quality of RCT reports can be expected by adhering to existing standards and guidelines as expressed by the CONSORT group.

Background

Randomized controlled trials (RCTs) are considered evidence of the highest grade in the hierarchy of research designs [1]. Because these reports can have a powerful and immediate impact on patient care, the design, conduct, analysis, and generalizability of the trial should be reported accurately and completely [2]. Inadequate reporting makes interpretation of RCT results difficult, if not impossible, and can lend biased results false credibility [3]. Thus, adequate reporting is essential for the reader in evaluating how a clinical trial was conducted and in judging its validity [4].

The most prominent guideline for reporting the methodological details of medical research, focusing on RCTs [5], is the Consolidated Standards of Reporting Trials (CONSORT), which was published [2] and has been updated regularly [3, 6, 7]. Because the abstract is the only substantive portion of a paper that many readers read, authors need to ensure that it precisely reflects the content of the article. Thus, the International Committee of Medical Journal Editors (ICMJE) emphasized that articles reporting clinical trials should contain abstracts that include CONSORT items identified as essential [8]. Preliminary appraisals suggest that the use of CONSORT items is associated with improvements in the quality of RCTs being reported [9]. Nevertheless, inconsistent [10] and suboptimal [11, 12] results have also been found. With respect to the recent recommendations from CONSORT for Abstracts [7], the reporting quality of RCT abstracts published in 2006 in four major general medical journals, The Journal of the American Medical Association (JAMA), British Medical Journal (BMJ), The Lancet, and The New England Journal of Medicine (NEJM), was found to be suboptimal [12]. Given that suboptimal reporting by these highly esteemed journals, this evaluation offers an update as to whether CONSORT for Abstracts has improved the quality of reports.

Adherence to CONSORT requires continual appraisal for improving the precision and transparency of published trials. Consequently, in an effort to promote quality reports of RCT abstracts, we evaluated the extent of adherence to the CONSORT for Abstracts statement for quality of reports in four high-impact general medical journals published during the year 2010.

Methods

Data sources

We selected four high-impact general medical journals: JAMA, BMJ, The Lancet, and NEJM. These journals endorsed the CONSORT in 1996, except the NEJM, which did so in 2005 [10]. In their article submission instructions for authors, two journals, BMJ and The Lancet, explicitly recommended following the CONSORT for Abstracts guidelines, whereas JAMA and NEJM referred to the ICMJE’s ‘Uniform Requirements for Manuscripts Submitted to Biomedical Journals’, which require the abstract to be prepared in accordance with the CONSORT for Abstracts guidelines [13–16]. We conducted a MEDLINE/PubMed search to identify all RCTs published between January and December 2010 with the following search strategy: “Lancet” [Jour] OR “JAMA”[Jour] OR “The New England journal of Medicine”[Jour] OR “BMJ”[Jour] AND (Randomized Controlled Trial[ptyp] AND medline[sb] AND (“2010/01/01”[PDAT] : “2010/12/31”[PDAT])).

Study selection

RCT abstracts of preventive and therapeutic interventions were selected. We included abstracts in which the allocation of participants to interventions was described by the words random, randomly allocated, randomized, or randomization. Abstracts of other study designs and publication types were excluded: observational studies, economic analyses on RCTs, quasi-randomized trials, cluster randomized trials, diagnostic or screening tests, follow-up studies of previously reported RCTs, editorials, and letters. Cluster randomized trials and studies of diagnostic tests were not included because they are covered by separate guidelines: the modified CONSORT extension for cluster randomized trials [17] and the Standards for the Reporting of Diagnostic Accuracy (STARD) statement [18], respectively.

Data extraction

Two reviewers (SG and EjK) underwent training in evaluating RCTs using the CONSORT for Abstracts [7] and then began the data extraction. A ‘yes’ or ‘no’ answer was assigned for each item, indicating whether the author had reported it. A pilot study was performed with randomly selected abstracts to assess inter-observer agreement using the kappa value [19] and to resolve any discrepancies during the independent data-extraction process. Once uniformity of interpretation was assured, the data extraction process was carried out in duplicate for the remaining abstracts.

The data extraction items included the following descriptive information: name of the journal; author contact details (postal address, email address, or telephone number); medical specialty (e.g., clinical/primary care, surgical/anesthesiology, gynecology/obstetrics, oncology, psychiatry, pediatrics); and trial design (e.g., parallel, factorial, superiority, non-inferiority, crossover). Additionally, we recorded whether each abstract: mentioned randomized or randomly allocated in the title, abstract, or both; defined the objective/hypothesis (e.g., to compare the effectiveness of A with B for condition C) or gave a brief background if lacking an objective/hypothesis; identified participant eligibility criteria, settings of data collection, or both; identified the interventions intended for each group (intervention and control group); defined the primary outcome of the trial; described the method of random sequence generation; described blinding/masking using a generic (simply stating single-blind or double-blind) or detailed (explaining who was blinded among patients, caregivers, investigators, or outcome assessors) description; provided the number of participants randomized and analyzed in each group; identified the trial status (whether complete or stopped prematurely); included the primary outcome for each group and the estimated effect size with its precision (confidence interval); reported harms; provided conclusions with a general interpretation of results or discussed benefits and risks of the intervention; and provided information on trial registration and funding. Finally, the abstract format (structured or unstructured) was recorded.

Regarding the assessment of the abstract quality domain, the following characteristics were identified: allocation concealment (simply mentioned or briefly explained the concealment procedures, such as central randomization by telephone or Internet-based system, computer-generated randomization sequence, permuted blocks, sealed envelope with stratification) and blinding (as mentioned above).

Data analysis

Data for descriptive statistics were analyzed using Microsoft Excel 2007 and the SPSS software (ver. 19.0, IBM SPSS®). We determined the overall number and proportion (%) of RCT abstracts that included each of the items recommended by CONSORT for Abstracts. The kappa statistic was used to measure chance-adjusted inter-observer agreement for reporting aspects of trial quality and significance of results.
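The two quantities described above can be illustrated with a minimal sketch. It assumes the kappa in question is Cohen's kappa for two raters giving ‘yes’/‘no’ answers; the reviewer ratings below are hypothetical and are not study data, and the function names are illustrative only. The original analysis was run in Excel and SPSS, not in this code.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Chance-adjusted agreement between two raters (Cohen's kappa)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters coded identically.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement by chance, from each rater's marginal frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


def proportion(count, total):
    """Percentage of abstracts reporting an item, to one decimal place."""
    return round(100 * count / total, 1)


# Hypothetical 'yes'/'no' codes from two reviewers for one checklist item.
reviewer_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "no"]
reviewer_2 = ["yes", "yes", "no", "yes", "yes", "no", "yes", "no", "no", "no"]

kappa = cohen_kappa(reviewer_1, reviewer_2)
allocation_concealment_pct = proportion(32, 271)  # counts taken from the text
```

With these hypothetical ratings the reviewers agree on 8 of 10 items while chance agreement is 0.5, so kappa is about 0.6; `proportion(32, 271)` reproduces the 11.8% quoted for allocation concealment.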

Results

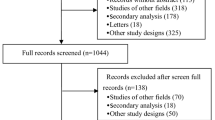

Our initial search identified 332 RCT abstracts from the four high-impact general medical journals. Of these, 61 abstracts (10 observational studies, 7 economic analyses, 20 cluster RCTs, 8 diagnostic tests, 1 quasi-randomized trial, 4 follow-up studies, 5 editorials and letters, and 6 cohort studies) were excluded, as shown in Figure 1. In total, 271 RCT abstracts were selected for the analysis.
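As a quick arithmetic check of the selection flow, the exclusion counts quoted above can be tallied as follows. This is only a sketch; the dictionary keys are shorthand labels chosen here for illustration.

```python
# Counts as quoted in the text; the labels are illustrative shorthand.
identified = 332
exclusions = {
    "observational studies": 10,
    "economic analyses": 7,
    "cluster RCTs": 20,
    "diagnostic tests": 8,
    "quasi-randomized trials": 1,
    "follow-up studies": 4,
    "editorials and letters": 5,
    "cohort studies": 6,
}

excluded = sum(exclusions.values())
included = identified - excluded
```

The exclusions sum to 61, leaving the 271 abstracts that entered the analysis.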

Study characteristics

The numbers of RCTs published in the four high-impact general medical journals are shown in Table 1. Among the 271 included RCT abstracts, 41.7% (113/271) were published in NEJM, followed by Lancet 29.5% (80/271), JAMA 15.9% (43/271), and BMJ 12.9% (35/271). All these journals adopted structured abstract formats: IMRAD (introduction/background, method, results, and discussion) in NEJM and Lancet, and an eight-heading format (objective, design, setting, participants, intervention, main outcome measure, results, and conclusions) in JAMA and BMJ [20].

Reporting of general items

Table 2 shows the assessment of CONSORT checklist items reported in the 271 included RCTs. Almost all RCTs (98.9%; 268/271) identified “randomized” in the abstract, but only 58.7% (159/271) reported the same in the title; among these, reporting by NEJM was very low (4.4%; 5/113) compared with the other journals. Of the included abstracts, 70.5% (191/271) provided the authors’ postal and email addresses. Just 23.3% (63/271) of these RCT abstracts provided a description of the trial design (parallel, factorial, crossover, etc.).

Reporting of trial methodology

In total, 60.9% (165/271) of the abstracts reported both eligibility criteria of participants and settings of data collection; among these, NEJM reporting was only 25.7% (29/113). In contrast, 99.3% (269/271) reported at least the eligibility criteria of participants. Most of the abstracts (90.8%; 246/271) described details of the intervention including denomination, usage, and course of treatment for both groups. The trial objective/hypothesis was mentioned in 72.7% (197/271), with the remaining 27.3% (74/271) reporting a background or rationale for the study rather than an objective/hypothesis as recommended by CONSORT. This was clearly seen in the NEJM data: only 35.4% (40/113) of abstracts had clear objectives, compared with 97.7% (42/43) in JAMA and 100% in both Lancet (80/80) and BMJ (35/35). A clearly defined primary outcome was reported in 94.8% (257/271) of abstracts overall: in 100% of abstracts in three of the journals but only 87.6% (99/113) of those in NEJM. Only 31% (84/271) of abstracts mentioned the method of random sequence generation, with better reporting in Lancet (88.6%; 71/80) than in the other journals. Kappa values for methods (participants, interventions, objective, outcome, and randomization) were 0.88 or above.

Reporting of results

Of the 271 included abstracts, 70.5% (191/271) reported the number of participants randomized to each group, and 68.7% (186/271) reported the number of participants included for analysis (kappa, 0.66 and 0.80). A total of 84.1% (228/271) of abstracts reported the results for the primary outcome measure for each group; however, BMJ reported this value in only 68.6% (24/35) and NEJM in 76.9% (87/113), compared with the other two journals, which had reporting rates above 90%. The proportion of abstracts describing effect size (ES) and confidence intervals (CI) for the primary outcome was 47.2% (128/271); 63.8% (81 out of 127 trials with binary outcomes) and 40.5% (15 out of 37 trials with continuous outcomes) reported both ES and CI. Adverse events or side effects of the intervention were reported in 42.8% (116/271) of abstracts. Inter-observer agreement on abstract reporting of results was 0.86 or above.

Reporting of conclusions

Regarding the conclusions section of the abstracts, only 20.3% (55/271) stated the benefits and harm from the therapy, in contrast to the 72.8% (212/271) of abstracts that reported only benefits. Overall, most of the RCTs reviewed (99.3%; 269/271) reported that the trial was registered, and 47.6% (129/271) reported funding; however, no abstracts in JAMA or BMJ reported funding. Kappa values for conclusions, trial registration, and funding were 0.95 or above.

Reporting of trial quality

In total, 11.8% (32/271) of the abstracts mentioned details of allocation concealment, but this information was lacking from abstracts in NEJM and JAMA. With respect to blinding, 37.6% (102/271) mentioned it in generic terms as single blind or double blind, and 21% (57/271) described blinding in detail. Kappa values for quality domains were 0.82 or above.

Assessing methodology using equal proportion of abstracts per journal

An additional analysis to assess the CONSORT checklist items using an equal proportion of abstracts per journal was carried out. Since BMJ constituted the lowest number of abstracts (n = 35), we selected an equal number of RCT abstracts from each journal using a computer-generated simple random sampling method. Table 3 shows the reporting of methodological quality or key features of trial designs and trial results using an equal proportion of abstracts per journal. The details of trial design were included in 21.4% (30/140), reporting of method of random sequence generation in 30.0% (42/140), allocation concealment in 9.3% (13/140), details of blinding in 20.7% (29/140), number of participants analyzed for each group in 69.3% (97/140), and primary outcome result for each group in 82.1% (115/140).
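The equal-size subsample described above can be sketched as a simple random draw per journal, with the sample size set by the smallest journal. The source does not specify the software or seed used, so the function name, the seed, and the placeholder abstract identifiers below are all assumptions; only the per-journal counts come from the text.

```python
import random


def sample_equal_per_journal(abstracts_by_journal, seed=None):
    """Draw the same number of abstracts from every journal, without replacement."""
    rng = random.Random(seed)
    # The smallest journal fixes the per-journal sample size.
    n = min(len(ids) for ids in abstracts_by_journal.values())
    return {
        journal: sorted(rng.sample(ids, n))
        for journal, ids in abstracts_by_journal.items()
    }


# Per-journal counts are from the text; the abstract IDs are hypothetical.
counts = {"NEJM": 113, "Lancet": 80, "JAMA": 43, "BMJ": 35}
abstracts_by_journal = {
    journal: [f"{journal}-{i:03d}" for i in range(1, k + 1)]
    for journal, k in counts.items()
}

subsample = sample_equal_per_journal(abstracts_by_journal, seed=2010)
total_sampled = sum(len(ids) for ids in subsample.values())
```

Each journal contributes 35 abstracts, giving the 140 used as the denominator in the additional analysis. Fixing the seed makes the draw repeatable.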

Discussion

We carried out a descriptive cross-sectional study to assess the quality of abstracts for reports of randomized controlled trials from four high-impact general medical journals published in the year 2010, that is, after the release of the CONSORT for Abstracts guidelines in 2008 [7]. Some of the checklist items such as participant eligibility criteria, details of intervention, definition of primary outcome, reporting of primary outcome results for each group, and trial registration were adequately reported. However, harm and funding were reported in fewer than 50.0% of these abstracts. This inadequate reporting is consistent with previous findings for reporting harm [12, 21] and funding [21, 22]. Two journals, JAMA and BMJ, did not report the source of funding, probably reflecting the house style of these journals [13, 15].

Contrary to our expectations, the methodological quality domains were still inadequately reported, with no sign of improvement despite the CONSORT recommendations. Table 4 shows a comparison between the assessment of methodological quality domains in the current study and results of a similar study performed previously in relation to the endorsement of CONSORT for Abstracts. Compared with other studies, reporting of allocation concealment (11.8%) and details of blinding (21.0%) is higher in the current study but still suboptimal. Abstract reporting is often subject to space constraints and journal formats, which may lead to disparities between full paper results and abstract results. However, a previous study showed that with a word limit of 250–300 words, the checklist items can easily be incorporated [23]. Nevertheless, when an abstract lacks key details about a trial, the assessment of the validity and the applicability of the results becomes difficult.

We also assessed reporting of checklist items for individual journals to compare adherence patterns between journals. Although NEJM and Lancet both adopted the IMRAD abstract format, reporting of the specific objective/hypothesis was minimal in NEJM (35.4%), with only background descriptions in 64.6% of abstracts. NEJM has a very specific style for reporting background rather than objectives [16]; thus, the findings here reflect the specific house style of the journal rather than implementation of the CONSORT for Abstracts guideline. Similarly, for the methodological quality domain, reporting in NEJM and JAMA was 0% for the allocation concealment item, a value comparable to that found in a Chinese study [26]. Funding was also reported in 0% of abstracts in JAMA and BMJ. It has previously been shown that studies funded by pharmaceutical companies have four times the odds of having outcomes favorable to the sponsor compared with studies funded by other sources, causing publication bias [27]. Regarding the reporting of conclusions, all four journals failed to provide a balanced summary that noted both benefits and harm from the interventions (11.6%–25.7%), a range that remains unsatisfactory, although it is higher than that reported in a Chinese study (1.0%) [22]. Between-journal assessments also showed that responses to most of the checklist items were suboptimal for NEJM; these results were consistent with past studies [5, 28]. Amid this inadequate reporting, this study identified one positive reporting domain: trial registration, which was reported at a rate of 97.5% by Lancet and 100% by the other three journals. Likely influences were the World Health Organization (WHO), which has facilitated international collaboration by establishing a clinical trials registry platform [29], and the ICMJE’s strict policy of considering trials for publication only if they are registered [30].

Our study has some limitations. Assessment of specialized journals was beyond the scope of this study. Also, we assessed only structured abstracts. Although some studies have suggested that the adoption of structured abstracts by the journals can improve the reporting of trials [31–33], the findings from our study suggest otherwise. The sample of abstracts is influenced by one journal, the NEJM (41.7% of the overall sample); however, a similar trend occurred in 2006, when NEJM (41.9%) similarly influenced the overall findings [12]. Moreover, our findings reflect the cross-sectional design of the study. Also, NEJM has often published more RCTs than the other three journals [34]. To minimize the impact of disproportionate abstract samples per journal, we carried out an additional analysis using an equal proportion of abstracts per journal, but the findings were similar with respect to reporting of methodological quality or key features of trial designs and trial results. The analytical strategy known as intention-to-treat (ITT), considered an optimal approach for preserving the integrity of randomization [35], has been advocated in the full CONSORT 2010 updated guideline [6]; however, this approach has not been incorporated in the CONSORT for Abstracts guideline. Thus, we did not include the ITT approach in our assessment. Despite these limitations, our study has several strengths. We reviewed a large number of RCT abstracts published in four high-impact major general medical journals that have universal acceptance among the medical research community. We also conducted an objective data-extraction process, with the domains that were included in the abstracts marked as ‘yes’ and those not reported as ‘no’ based on standard checklist items recommended by the CONSORT group and without reviewer inference. Thus, this study’s methodology is reproducible. Additionally, the period after the CONSORT for Abstracts guidelines were published provides an adequate time frame for the dissemination and endorsement of the CONSORT statement by the research community.

Conclusions

The findings from our study demonstrate inconsistencies and patterns of non-adherence to the CONSORT for Abstracts guidelines, especially in the methodological quality domains. CONSORT is an evolving guideline [6, 36] and is not considered an absolute standard. However, improvements in the quality of RCT reports can be expected through adherence, on the part of both authors and journal editors during peer review, to existing standards and guidelines as expressed by the CONSORT group. We hope that this will occur in the future.

References

Concato J, Shah N, Horwitz RI: Randomized, controlled trials, observational studies, and the hierarchy of research designs. N Engl J Med. 2000, 342: 1887-1892. 10.1056/NEJM200006223422507.

Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I, Pitkin R, Rennie D, Schulz KF, Simel D, Stroup DF: Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996, 276: 637-639. 10.1001/jama.1996.03540080059030.

Moher D, Schulz KF, Altman DG: The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. Ann Intern Med. 2001, 134: 657-662.

Sanchez-Thorin JC, Cortes MC, Montenegro M, Villate N: The quality of reporting of randomized clinical trials published in Ophthalmology. Ophthalmology. 2001, 108: 410-415. 10.1016/S0161-6420(00)00500-5.

Kane RL, Wang J, Garrard J: Reporting in randomized clinical trials improved after adoption of the CONSORT statement. J Clin Epidemiol. 2007, 60: 241-249. 10.1016/j.jclinepi.2006.06.016.

Moher D, Hopewell S, Schulz KF, Montori V, Gotzsche PC, Devereaux PJ, Elbourne D, Egger M, Altman DG: CONSORT 2010 Explanation and Elaboration: Updated guidelines for reporting parallel group randomised trials. J Clin Epidemiol. 2010, 63: e1-e37. 10.1016/j.jclinepi.2010.03.004.

Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG, Schulz KF: CONSORT for reporting randomized controlled trials in journal and conference abstracts: explanation and elaboration. PLoS Med. 2008, 5: e20-10.1371/journal.pmed.0050020.

Uniform requirements for manuscripts submitted to biomedical journals: Writing and editing for biomedical publication. J Pharmacol Pharmacother. 2010, 1: 42-58.

Plint AC, Moher D, Morrison A, Schulz K, Altman DG, Hill C, Gaboury I: Does the CONSORT checklist improve the quality of reports of randomised controlled trials? A systematic review. The Medical Journal of Australia. 2006, 185: 263-267.

Folkes A, Urquhart R, Grunfeld E: Are leading medical journals following their own policies on CONSORT reporting?. Contemp Clin Trials. 2008, 29: 843-846. 10.1016/j.cct.2008.07.004.

Mills EJ, Wu P, Gagnier J, Devereaux PJ: The quality of randomized trial reporting in leading medical journals since the revised CONSORT statement. Contemp Clin Trials. 2005, 26: 480-487. 10.1016/j.cct.2005.02.008.

Berwanger O, Ribeiro RA, Finkelsztejn A, Watanabe M, Suzumura EA, Duncan BB, Devereaux PJ, Cook D: The quality of reporting of trial abstracts is suboptimal: survey of major general medical journals. J Clin Epidemiol. 2009, 62: 387-392. 10.1016/j.jclinepi.2008.05.013.

BMJ: http://www.bmj.com/about-bmj/resources-authors/article-types/research

The Lancet: http://www.thelancet.com/lancet-information-for-authors/article-types-manuscript-requirements

NEJM: http://www.nejm.org/page/author-center/manuscript-submission

Campbell MK, Elbourne DR, Altman DG: CONSORT statement: extension to cluster randomised trials. BMJ (Clinical Research ed). 2004, 328: 702-708. 10.1136/bmj.328.7441.702.

Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP, Irwig LM, Moher D, Rennie D, de Vet HC, Lijmer JG: The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. Clinical Chemistry. 2003, 49: 7-18. 10.1373/49.1.7.

Viera AJ, Garrett JM: Understanding interobserver agreement: the kappa statistic. Family Medicine. 2005, 37: 360-363.

Nakayama T, Hirai N, Yamazaki S, Naito M: Adoption of structured abstracts by general medical journals and format for a structured abstract. Journal of the Medical Library Association: JMLA. 2005, 93: 237-242.

Xu L, Li J, Zhang M, Ai C, Wang L: Chinese authors do need CONSORT: reporting quality assessment for five leading Chinese medical journals. Contemporary clinical trials. 2008, 29: 727-731. 10.1016/j.cct.2008.05.003.

Wang L, Li Y, Li J, Zhang M, Xu L, Yuan W, Wang G, Hopewell S: Quality of reporting of trial abstracts needs to be improved: using the CONSORT for abstracts to assess the four leading Chinese medical journals of traditional Chinese medicine. Trials. 2010, 11: 75-10.1186/1745-6215-11-75.

Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG, Schulz KF: CONSORT for reporting randomised trials in journal and conference abstracts. Lancet. 2008, 371: 281-283. 10.1016/S0140-6736(07)61835-2.

Mann E, Meyer G: Reporting quality of conference abstracts on randomised controlled trials in gerontology and geriatrics: a cross-sectional investigation. Zeitschrift fur Evidenz, Fortbildung und Qualitat im Gesundheitswesen. 2011, 105: 459-462. 10.1016/j.zefq.2010.07.011.

Hopewell S, Clarke M, Askie L: Reporting of trials presented in conference abstracts needs to be improved. Journal of Clinical Epidemiology. 2006, 59: 681-684. 10.1016/j.jclinepi.2005.09.016.

Zhang D, Yin P, Freemantle N, Jordan R, Zhong N, Cheng KK: An assessment of the quality of randomised controlled trials conducted in China. Trials. 2008, 9: 22-10.1186/1745-6215-9-22.

Lexchin J, Bero LA, Djulbegovic B, Clark O: Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ (Clinical research ed). 2003, 326: 1167-1170. 10.1136/bmj.326.7400.1167.

Moher D, Jones A, Lepage L: Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA: the Journal of the American Medical Association. 2001, 285: 1992-1995. 10.1001/jama.285.15.1992.

Gulmezoglu AM, Pang T, Horton R, Dickersin K: WHO facilitates international collaboration in setting standards for clinical trial registration. Lancet. 2005, 365: 1829-1831. 10.1016/S0140-6736(05)66589-0.

De Angelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, Kotzin S, Laine C, Marusic A, Overbeke AJ: Is this clinical trial fully registered? A statement from the International Committee of Medical Journal Editors. Lancet. 2005, 365: 1827-1829. 10.1016/S0140-6736(05)66588-9.

Hopewell S, Eisinga A, Clarke M: Better reporting of randomized trials in biomedical journal and conference abstracts. Journal of Information Science. 2007, 34: 162-173. 10.1177/0165551507080415.

Taddio A, Pain T, Fassos FF, Boon H, Ilersich AL, Einarson TR: Quality of nonstructured and structured abstracts of original research articles in the British Medical Journal, the Canadian Medical Association Journal and the Journal of the American Medical Association. CMAJ: Canadian Medical Association journal = Journal de l’Association Medicale Canadienne. 1994, 150: 1611-1615.

Dupuy A, Khosrotehrani K, Lebbe C, Rybojad M, Morel P: Quality of abstracts in 3 clinical dermatology journals. Archives of Dermatology. 2003, 139: 589-593. 10.1001/archderm.139.5.589.

Hildebrandt M, Vervolgyi E, Bender R: Calculation of NNTs in RCTs with time-to-event outcomes: a literature review. BMC Medical Research Methodology. 2009, 9: 21-10.1186/1471-2288-9-21.

Polit DF, Gillespie BM: Intention-to-treat in randomized controlled trials: recommendations for a total trial strategy. Research in Nursing and Health. 2010, 33: 355-368. 10.1002/nur.20386.

Schulz KF, Altman DG, Moher D: CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. Trials. 2010, 11: 32-10.1186/1745-6215-11-32.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

SG and EjK both participated in the design, conduct, assessment, and drafting of the manuscript. EyK and WK conceived the study, interpreted the data, and revised the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Ghimire, S., Kyung, E., Kang, W. et al. Assessment of adherence to the CONSORT statement for quality of reports on randomized controlled trial abstracts from four high-impact general medical journals. Trials 13, 77 (2012). https://doi.org/10.1186/1745-6215-13-77

DOI: https://doi.org/10.1186/1745-6215-13-77