Abstract

Background

Open access (OA) journals are becoming a publication standard for health research, but it is not clear how they differ from traditional subscription journals in the quality of research reporting. We assessed the completeness of results reporting in abstracts of randomized controlled trials (RCTs) published in these journals.

Methods

We used the Consolidated Standards of Reporting Trials Checklist for Abstracts (CONSORT-A) to assess the completeness of reporting in abstracts of parallel-design RCTs published in subscription journals (n = 149; New England Journal of Medicine, Journal of the American Medical Association, Annals of Internal Medicine, and Lancet) and OA journals (n = 119; BioMedCentral series, PLoS journals) in 2016 and 2017.

Results

Abstracts in subscription journals completely reported 79% (95% confidence interval [CI], 77–81%) of 16 CONSORT-A items, compared with 65% (95% CI, 63–67%) of these items in abstracts from OA journals (P < 0.001, chi-square test). The median number of completely reported CONSORT-A items was 13 (95% CI, 12–13) in subscription journal articles and 11 (95% CI, 10–11) in OA journal articles. Subscription journal articles had significantly more complete reporting than OA journal articles for nine CONSORT-A items and did not differ in reporting for items trial design, outcome, randomization, blinding (masking), recruitment, and conclusions. OA journals were better than subscription journals in reporting randomized study design in the title.

Conclusion

Abstracts of randomized controlled trials published in subscription medical journals have greater completeness of reporting than abstracts published in OA journals. OA journals should take appropriate measures to ensure that published articles contain adequate detail to facilitate understanding and quality appraisal of research reports about RCTs.

Background

Randomized controlled trials (RCTs) are considered the best way to compare therapeutic or preventive interventions in medicine [1]. Clear, transparent, and complete reporting of RCTs is necessary for their use in practice and in health evidence synthesis [2, 3]. It is important that presentations of RCTs in abstracts are also complete and clear, because trial validity and applicability can then be quickly assessed. Also, in some settings, such as in developing countries, an abstract may be the only source of information for health professionals because of limited access to the full texts, and the use of abstracts as sole sources of information may adversely influence healthcare decisions [3]. To improve the quality of reporting of RCT abstracts, an extension of the Consolidated Standards of Reporting Trials (CONSORT) statement was developed in 2008 [2, 3]. The Consolidated Standards of Reporting Trials Checklist for Abstracts (CONSORT-A) statement specifies a minimum set of items that authors should include in the abstract of an RCT [3]. So far, evidence shows poor adherence in general and specialty medical journals [4,5,6,7].

Currently, more than half of the studies indexed in Medline, the largest biomedical bibliographical database, are open access (OA) [8]. It has been claimed that the advent of OA journals would lead to the erosion of scientific quality control. This opinion was based on the assumption that OA publishers would take over an increasing part of the publishing industry and would not provide the same level of rigorous peer review as traditional subscription publishers, which would result in a decline in the quality of scholarly publishing [9]. However, there is evidence that the overall quality of OA journal publishing is comparable to that of traditional subscription publishing [10, 11]. The aim of this study was to assess the completeness of results reporting in abstracts of RCTs published in traditional subscription journals (members of the International Committee of Medical Journal Editors [ICMJE] [12]) and in OA journals (the two oldest journal consortia: Public Library of Science [PLoS] journals and BioMedCentral [BMC] series journals).

Methods

This cross-sectional study included all abstracts of articles about RCTs published in four subscription journals (New England Journal of Medicine [NEJM], Journal of the American Medical Association [JAMA], Annals of Internal Medicine [AIM], and The Lancet) and two collections of OA journals (BMC series journals and PLoS journals) from January 2016 to December 2017. BMJ (British Medical Journal), which is an ICMJE member, was not included in the subscription group, because it combines OA and subscription publishing options and was previously a fully OA journal [13].

Two researchers independently screened the articles to identify those describing the basic study design for which CONSORT was developed: randomized, double-blind, two-group parallel design. The following study designs were thus excluded: crossover trials, cluster trials, factorial studies, pragmatic studies, superiority trials, noninferiority trials, megatrials, sequential trials, open-label studies, nonblinded studies, single-blind studies, pilot studies, secondary analyses of primary trials, and combined studies (RCT plus other study designs). The literature search, outlined in Additional file 1, was undertaken in the MEDLINE database using the OvidSP interface.

The completeness of reporting in the abstracts was independently assessed by two researchers using the CONSORT-A checklist with 16 items. We did not include the item “authors” (i.e., “contact details for the corresponding author”), because this item is specific to conference abstracts [3]. The completeness of reporting was presented as the percentage of articles in the two journal groups reporting the individual items, the average percentage and 95% confidence interval (CI) of reported items for the two journal groups, the median number (95% CI) of reported items for each article group, and the mean difference (95% CI) between abstracts published in 2016 and 2017. The results were compared using the chi-square test, t test, and Mann-Whitney test (MedCalc Statistical Software, Ostend, Belgium).
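The summary measures described above can be illustrated with a minimal sketch. It is not the authors' analysis, which was run in MedCalc; the item names, the 0/1 coding, and the toy data below are hypothetical, and only four items are shown instead of the 16 CONSORT-A items used in the study.

```python
from statistics import median

# Hypothetical toy data (not from the study): each row is one abstract and
# each column one checklist item, coded 1 = completely reported, 0 = not.
ITEMS = ["title", "trial design", "harms", "funding"]
assessments = [
    [1, 1, 0, 0],
    [0, 1, 1, 1],
    [1, 1, 1, 0],
]


def item_percentages(rows):
    """Percentage of abstracts that completely report each item."""
    n_abstracts = len(rows)
    return [100 * sum(column) / n_abstracts for column in zip(*rows)]


def items_per_abstract(rows):
    """Number of completely reported items in each abstract."""
    return [sum(row) for row in rows]


pct = dict(zip(ITEMS, item_percentages(assessments)))
counts = items_per_abstract(assessments)
median_items = median(counts)
# Overall share of completely reported items across all abstracts and items.
overall_pct = 100 * sum(counts) / (len(assessments) * len(ITEMS))

print(pct)
print(counts, median_items, round(overall_pct, 1))
```

The per-item percentages correspond to the kind of figures reported for individual checklist items, the per-abstract counts feed the median, and the overall percentage is the pooled share of reported items for a journal group.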

Results

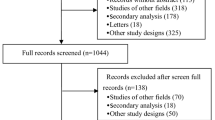

A MEDLINE search retrieved 2329 abstracts published in the subscription journals and 18,011 abstracts published in the OA journals. After screening, 149 abstracts published in the subscription journals (63 [42%] in NEJM, 44 [30%] in Lancet, 36 [24%] in JAMA, 6 [4%] in AIM) and 119 abstracts published in the OA journals (56 [47%] in BMC series journals and 63 [53%] in PLoS journals) remained for analysis (Fig. 1).

Articles in subscription journals completely reported, on average, 79% (95% CI, 77–81%) of the 16 CONSORT-A items, compared with 65% (95% CI, 63–67%) for articles in OA journals (P < 0.001, chi-square test). The abstracts in subscription journals had a median of 13 (95% CI, 12–13) reported items, and those in OA journals had a median of 11 (95% CI, 10–11) reported items, of a total of 16 CONSORT-A items (P < 0.001, Mann-Whitney test).
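As a rough plausibility check of these figures, the sketch below rebuilds approximate counts from the rounded percentages and computes normal-approximation (Wald) confidence intervals and a Pearson chi-square test without continuity correction. Two assumptions are made that the article does not state: every item assessment (abstracts × 16 items) is treated as an independent observation, and the counts are reconstructed from the rounded 79% and 65%. The exact procedure used in MedCalc may differ.

```python
import math

N_ITEMS = 16  # CONSORT-A items assessed per abstract


def wald_ci(successes, total, z=1.96):
    """Proportion with a normal-approximation (Wald) 95% CI."""
    p = successes / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, p - half_width, p + half_width


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (1 df, no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Assumption: counts reconstructed from the rounded percentages in the text.
sub_total = 149 * N_ITEMS                 # subscription item assessments
oa_total = 119 * N_ITEMS                  # OA item assessments
sub_reported = round(0.79 * sub_total)
oa_reported = round(0.65 * oa_total)

sub_p, sub_lo, sub_hi = wald_ci(sub_reported, sub_total)
oa_p, oa_lo, oa_hi = wald_ci(oa_reported, oa_total)
stat, p_value = chi_square_2x2(
    sub_reported, sub_total - sub_reported,
    oa_reported, oa_total - oa_reported,
)

print(f"Subscription: {sub_p:.1%} ({sub_lo:.1%} to {sub_hi:.1%})")
print(f"OA:           {oa_p:.1%} ({oa_lo:.1%} to {oa_hi:.1%})")
print(f"chi-square = {stat:.1f}, P = {p_value:.2e}")
```

Under these assumptions, the intervals round to the reported 77–81% and 63–67%, and the test gives P < 0.001. Because items within one abstract are unlikely to be fully independent, this is an illustration of the arithmetic rather than a recommended analysis.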

Table 1 presents the completeness of reporting of individual CONSORT-A items. Recruitment was the most completely reported item for both journal groups. The item randomization in subscription journals and the item funding in OA journals had the least complete reporting. Only two abstracts in OA journals contained information about funding (one from a pharmaceutical company and one from noncommercial sources). Among the abstracts in subscription journals that reported funding (112 of 149 total [75%]), 63% reported funding from pharmaceutical companies, 30% from noncommercial sources, and 7% from both sources. At the level of individual CONSORT-A checklist items, the abstracts in subscription journals had significantly more complete reporting than those in OA journals for all items except trial design, outcome, randomization, blinding (masking), recruitment, and conclusions, where there was no difference in reporting. Abstracts in articles published in OA journals had significantly more complete reporting than those in subscription journals for the title item. This was because NEJM, which contributed 42% of the subscription journal sample, indicated the study type in the title of only 2 (3%) of its 63 abstracts. The results for individual journals are presented in Additional file 2.

There was no difference in the completeness of reporting between the two publication years analyzed in our study, 2016 (n = 145 abstracts) and 2017 (n = 123 abstracts). The 2016–2017 mean difference (MD) was −4.07 (95% CI, −8.11% to −0.02%; P = 0.0487) for subscription journals and 3.99 (95% CI, −0.32% to 8.31%; P = 0.0692) for OA journals.

Discussion

We found that the abstracts of articles on RCTs published in subscription medical journals had better reporting completeness according to CONSORT-A than abstracts published in OA journals. There was no difference in the completeness of reporting between 2016 and 2017 in either journal group, indicating that this was a real phenomenon reflecting standard practice and not a temporal fluctuation. It is important to keep in mind that all journals included in our study state explicitly that they follow the reporting standards set in reporting guidelines, such as CONSORT.

The limitations of the study include the fact that we included only well-known traditional and OA journals, so the results may represent best practices and overestimate the adequacy of reporting in health journals in general. We had very strict inclusion criteria and restricted the comparison to the two-group, double-blind, parallel trial design, which left out many other trial designs. The CONSORT statement was originally created for the “standard” two-group parallel design, and CONSORT-A was developed for the original CONSORT checklist. We therefore took this basic design as the inclusion criterion, because journals from the two groups in our study may differ in the types and complexity of the trials they publish, which could introduce a significant bias. The journals in our study were predominantly general medical journals published in developed countries, so they may not be fully representative of the general population of medical journals. We also assessed the completeness of results reporting in the abstracts and not in the full text. We decided to include only abstracts because they are available in bibliographical databases, which are often the primary route of access to information for many health professionals [14]. This is especially true for settings where health professionals have limited access to the full texts and read only abstracts of journal articles. In such cases, inadequate reporting in abstracts could seriously mislead a reader regarding interpretation of the trial findings [15, 16]. Although an article abstract should be a clear and accurate reflection of what is included in the article, several studies have highlighted problems in the accuracy and quality of abstracts [17,18,19,20].

The greatest differences between the OA and subscription journals were in adequate descriptions of outcomes and harms, which were more often reported in subscription than in OA journals. In general, underreporting or selective reporting of outcomes is a serious problem, particularly when harms are not reported [21,22,23]. Although subscription journals published this information at least twice as often as OA journals did, their level of reporting of outcomes and harms was still below the desirable complete reporting (43% of abstracts fully describing outcomes and 77% describing harms). This underreporting has serious consequences because it may impede the interpretation of the benefit-to-risk relationship.

Both OA and subscription journals adhered to the registration policy; all abstracts in subscription journals had trial registration numbers compared with 84% in OA journals. Only 2% of the abstracts in OA journals reported funding, compared with 75% in subscription journals. It is difficult to draw conclusions about these differences in funding reporting, because only 2 abstracts of 119 in OA journals contained this information. However, it is clear that subscription journals practice greater transparency in reporting funding in abstracts of clinical trials.

A possible explanation for the observed differences in trial-reporting completeness in abstracts in our study is that subscription journals have more resources than OA journals, but this is most probably not the case for the journals included in our study. The representatives of OA journals in our study were well-established PLoS journals and BMC series journals: PLoS journals were started with a US$9 million grant [24], and BMC series journals are published by the Springer Nature group, one of the largest scientific publishers [25]. Article processing fees are up to US$3000 for PLoS Medicine [26] and US$3170 for BMC Medicine [27]. It is difficult to compare the revenues of OA journals with those of major ICMJE subscription journals in our study because their revenues are not generally known [28], but there is no reason to believe that OA journals included in our study did not have resources for implementing reporting guidelines and ensuring the completeness of published abstracts. All journals included in the study are selective and have high volumes of submissions, with an acceptance rate of approximately 5% for subscription journals [29,30,31,32]. In the OA group, PLoS Medicine has a 3% acceptance rate [33], whereas BMC series journals have a higher overall acceptance rate of 45–55%, although some of these journals have acceptance rates below 10% [34].

On the one hand, it can be argued that authors are responsible for completeness of reporting of their studies, including in the abstract. On the other hand, it has been shown that editorial interventions after manuscript acceptance significantly improve the quality of abstracts [35]. Journals are thus well-positioned to ensure that reporting guidelines are followed. They can also help their authors by endorsing tools that have been developed to help authors improve the completeness of their reports, such as the web writing tool based on CONSORT [36]. Recent developments in this field include the Penelope decision-making tool, developed by Penelope Research and the Enhancing the Quality and Transparency of Health Research (EQUATOR) Network [37]. The tool was tested in four BMC series journals in 2016, where it is presented to authors as an embedded element in the manuscript submission system [37]. On the one hand, this indicates that OA journals are open to innovations for better reporting and that they may be more advanced than subscription journals in that respect. On the other hand, subscription journals traditionally offer full editorial support to authors to improve their manuscripts for publication, including abstracts [38, 39].

Conclusion

Our study showed that reporting of RCTs in article abstracts is less complete in OA journals than in subscription journals. OA journals should address this problem and demonstrate that they can publish high-quality articles. After the launch of the cOAlition S initiative to make full and immediate open access to research publications a reality by 2020 in Europe, OA journals may gain even more importance in publishing [40]. In order to fulfill their expected role, OA journals publishing health research should take appropriate measures to ensure that published articles contain adequate detail to facilitate understanding and quality appraisal of research reports about RCTs.

Availability of data and materials

The data are available from the corresponding author. The datasets generated and analyzed during the current study will be available in the Croatian Digital Academic Archives and Repositories (https://dabar.srce.hr/repozitoriji).

Abbreviations

- AIM: Annals of Internal Medicine
- BMC: BioMedCentral
- BMJ: British Medical Journal
- CI: Confidence interval
- CONSORT-A: Consolidated Standards of Reporting Trials Checklist for Abstracts
- ICMJE: International Committee of Medical Journal Editors
- JAMA: Journal of the American Medical Association
- MD: Mean difference
- NEJM: New England Journal of Medicine
- OA: Open access
- PLoS: Public Library of Science
- RCT: Randomized controlled trial

References

1. Concato J, Shah N, Horwitz RI. Randomized, controlled trials, observation studies, and the hierarchy of research design. N Engl J Med. 2000;342:1887–92.

2. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332.

3. Hopewell S, Clarke M, Moher D, et al. CONSORT for reporting randomised trials in journal and conference abstracts. Lancet. 2008;371:281–3.

4. Ghimire S, Kyung E, Kang W, et al. Assessment of adherence to the CONSORT statement for quality of reports on randomized controlled trial abstracts from four high-impact general medical journals. Trials. 2012;13:77.

5. Can OS, Yilmaz AA, Hasdogan M, et al. Has the quality of abstracts for randomised controlled trials improved since the release of Consolidated Standards of Reporting Trial guideline for abstract reporting? A survey of four high-profile anaesthesia journals. Eur J Anaesthesiol. 2011;28:485–92.

6. Kuriyama A, Takahashi N, Nakayama T. Reporting of critical care trial abstracts: a comparison before and after the announcement of CONSORT guideline for abstracts. Trials. 2017;18:32.

7. Mbuagbaw L, Thabane M, Vanniyasingam T, et al. Improvement in the quality of abstracts in major clinical journals since CONSORT extension for abstracts: a systematic review. Contemp Clin Trials. 2014;38:245–50.

8. Keiko K, Tomoko M, Keiko Y, et al. Remarkable growth of open access in the biomedical field: analysis of PubMed articles from 2006 to 2010. PLoS One. 2013;8:e60925.

9. National Institutes of Health (NIH). NIH public access policy. 2012. http://publicaccess.nih.gov/public_access_policy_implications_2012.pdf. Accessed 20 May 2019.

10. Björk BC, Solomon D. Open access versus subscription journals: a comparison of scientific impact. BMC Med. 2012;10:73.

11. Pastorino R, Milovanovic S, Stojanovic J, et al. Quality assessment of studies published in open access and subscription journals: results of a systematic evaluation. PLoS One. 2016;11:e0154217.

12. International Committee of Medical Journal Editors. Recommendations for the conduct, reporting, editing, and publication of scholarly work in medical journals. 2017 [updated Dec 2018]. http://www.icmje.org/recommendations/. Accessed 20 May 2019.

13. Delamothe T, Smith R. Open access publishing takes off. BMJ. 2004;328:1–3.

14. Borrego Á, Anglada L. Faculty information behaviour in the electronic environment: attitudes towards searching, publishing and libraries. New Libr World. 2016;117:173–85.

15. Ioannidis JP, Lau J. Completeness of safety reporting in randomized trials: an evaluation of 7 medical areas. JAMA. 2001;285:437–43.

16. The impact of open access upon public health [editorial]. PLoS Med. 2006;3:e252.

17. Berwanger O, Ribeiro RA, Finkelsztejn A, et al. The quality of reporting of trial abstracts is suboptimal: survey of major general medical journals. J Clin Epidemiol. 2009;62:387–92.

18. Hopewell S, Eisinga A, Clarke M. Better reporting of randomized trials in biomedical journal and conference abstracts. J Info Sci. 2007;34:162–73.

19. Pitkin RM, Branagan MA, Burmeister LF. Accuracy of data in abstracts of published research articles. JAMA. 1999;281:1110–1.

20. Froom P, Froom J. Deficiencies in structured medical abstracts. J Clin Epidemiol. 1993;46:591–4.

21. Tang E, Ravaud P, Riveros C, et al. Comparison of serious adverse events posted at ClinicalTrials.gov and published in corresponding journal articles. BMC Med. 2015;13:189.

22. Riveros C, Dechartres A, Perrodeau E, et al. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med. 2013;10:e1001566.

23. McGauran N, Wieseler B, Kreis J, et al. Reporting bias in medical research – a narrative review. Trials. 2010;11:37.

24. Gordon and Betty Moore Foundation. Public Library of Science to launch new, free-access biomedical journals with $9 million grant. 2002. https://www.moore.org/article-detail?newsUrlName=public-library-of-science-to-launch-new-free-access-biomedical-journals-with-$9-million-grant-from-the-gordon-and-betty-moore-foundation. Accessed 20 May 2019.

25. Milliot J. The World’s 54 Largest Publishers, 2018. Publishers Weekly. 2018. https://www.publishersweekly.com/pw/by-topic/industry-news/publisher-news/article/78036-pearson-is-still-the-world-s-largest-publisher.html. Accessed 20 May 2019.

26. PLOS. Publication fees. https://www.plos.org/publication-fees. Accessed 20 May 2019.

27. BMC Medicine. Fees and funding: article-processing charges. https://bmcmedicine.biomedcentral.com/submission-guidelines/fees-and-funding. Accessed 20 May 2019.

28. Lundh A, Barbateskovic M, Hróbjartsson A, et al. Conflicts of interest at medical journals: the influence of industry-supported randomised trials on journal impact factors and revenue – cohort study. PLoS Med. 2010;7:e1000354.

29. New England Journal of Medicine. NEJM author center. https://www.nejm.org/author-center/home. Accessed 20 May 2019.

30. JAMA. JAMA Network for Authors: About JAMA. https://jamanetwork.com/journals/jama/pages/for-authors#fa-about. Accessed 20 May 2019.

31. Lancet. The Lancet: Information for Authors. https://www.thelancet.com/pb/assets/raw/Lancet/authors/lancet-information-for-authors.pdf. Accessed 20 May 2019.

32. Annals of Internal Medicine. Annals of Internal Medicine: Author info. https://annals.org/aim/pages/authors. Accessed 20 May 2019.

33. PLOS Medicine. PLOS Medicine: Journal Information. https://journals.plos.org/plosmedicine/s/journal-information. Accessed 20 May 2019.

34. BioMed Central. Publishing your research in BioMed Central journals. http://www.ibp.cas.cn/xxfw/xxsypxzn/200903/W020121031404887510757.pdf. Accessed 20 May 2019.

35. Wager E, Middleton P. Effects of technical editing in biomedical journals: a systematic review. JAMA. 2002;287:2821–4.

36. Barnes C, Boutron I, Giraudeau B, et al. Impact of an online writing aid tool for writing a randomized trial report: the COBWEB (Consort-based WEB tool) randomized controlled trial. BMC Med. 2015;13:221.

37. EQUATOR network. Tools and templates for implementing reporting guidelines. https://www.equator-network.org/toolkits/using-guidelines-in-journals/tools-and-templates-for-implementing-reporting-guidelines/#wizard. Accessed 20 May 2019.

38. Pitkin RM, Branagan MA, Burmeister LF. Effectiveness of a journal intervention to improve abstract quality. JAMA. 2000;283:481.

39. Winker MA. The need for concrete improvement in abstract quality. JAMA. 1999;281:1129–30.

40. Science Europe. Open access. https://www.scienceeurope.org/coalition-s/. Accessed 20 May 2019.

Acknowledgements

We appreciate the help with statistical analysis provided by Ivan Buljan, Department of Research in Biomedicine and Health, University of Split School of Medicine, Split, Croatia.

Funding

This research was funded by the Croatian Science Foundation (grant no. IP-2014-09-7672, “Professionalism in Health Care”). The funder had no role in the design of the study, its execution, or data interpretation.

Author information

Contributions

IJMC and AM designed and performed the study and wrote the manuscript. They are both responsible for all aspects of the study. Both authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

AM is a member of the Steering Group of the EQUATOR Network (https://www.equator-network.org/). She is the editor of an open access journal, Journal of Global Health. IJMC declares no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Search strategies for open access and subscription journals.

Additional file 2.

Reporting of CONSORT for Abstracts for individual journals.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Jerčić Martinić-Cezar, I., Marušić, A. Completeness of reporting in abstracts of randomized controlled trials in subscription and open access journals: cross-sectional study. Trials 20, 669 (2019). https://doi.org/10.1186/s13063-019-3781-x

DOI: https://doi.org/10.1186/s13063-019-3781-x