Abstract

In this paper, the global synchronization problem for a class of Markovian switching complex networks (MSCNs) with mixed time-varying delays is investigated. A novel delay-partition approach is developed to derive sufficient conditions for global synchronization of a new class of MSCNs with mixed time-varying delays. The proposed delay-partition approach yields less conservative global synchronization results. Two numerical examples are provided to illustrate the effectiveness of the theoretical results.

1 Introduction

In recent years, complex networks have received considerable research attention since the pioneering work of Watts and Strogatz [1]. The main reason is two-fold: first, complex networks can be found almost everywhere in the real world, such as the Internet, the WWW, the World Trade Web, genetic networks, and social networks; second, the dynamical behaviors of complex networks have found numerous applications in various fields such as physics and technology [2–4]. A complex network is a set of interconnected nodes, in which each node is a basic unit with specific contents or dynamics. Among all the dynamical behaviors of complex networks, synchronization is one of the most interesting topics and has been extensively investigated [5, 6]. Synchronization phenomena are common and important in real-world networks, for example, synchronization on the Internet, synchronized transfer of digital or analog signals in communication networks, and synchronization in biological neural networks. Hence, synchronization analysis of complex networks is important in both theory and applications [7, 8].

On the one hand, real systems may experience abrupt changes in their structure and parameters caused by phenomena such as component failures or repairs, changing subsystem interconnections, and abrupt environmental disturbances. Such systems can generally be modeled by using Markov chains [9–11]. For example, mean-square exponential synchronization of Markovian switching stochastic complex networks with time-varying delays via pinning control was studied in [12], and synchronization of Markovian jumping stochastic complex networks with distributed time delays and probabilistic interval discrete time-varying delays was considered in [13]. Besides these, other strategies, such as data-driven approaches, support vector machines and multivariate statistical methods, can also be used to handle such systems [14–18].

On the other hand, time delays often occur in complex networks, gene regulatory networks, static networks and multi-agent networks because of the limited speed of signals traveling through the links and frequently delayed couplings [19–22]. Therefore, synchronization problems of neural networks with mixed time delays have recently been studied extensively [23–28]. Although synchronization issues of complex networks have been investigated in the literature [29–33], to the best of our knowledge, global synchronization of MSCNs via the delay-partition approach has so far received little attention.

Inspired by the above discussions and the ideas of [34, 35], in this paper we use the delay-partition approach to handle the mixed time-varying delays of MSCNs so that less conservative global synchronization conditions can be obtained. Firstly, a new model for a class of MSCNs with mixed time-varying delays is proposed. Secondly, by using a novel Lyapunov-Krasovskii functional, stochastic analysis techniques, and a delay-partition approach, sufficient synchronization criteria are derived. Finally, two numerical examples are used to demonstrate the usefulness of the derived results. The main contributions of this paper are as follows:

(1) MSCN model aspect: a new model for a class of MSCNs with mixed time-varying delays is proposed.

(2) A novel delay-partition approach is developed to solve the global synchronization problem for the new class of MSCNs with mixed time-varying delays, which makes the obtained results less conservative.

Notations: Throughout this paper, the following mathematical notations are used. $\mathbb{R}^n$ denotes the n-dimensional Euclidean space and $\mathbb{R}^{n\times m}$ is the set of $n\times m$ real matrices. The superscript T denotes matrix transposition. $I_n$ denotes the n-dimensional identity matrix. $X\geq Y$, where X and Y are symmetric matrices, means that the matrix $X-Y$ is real positive semi-definite. For symmetric block matrices or long matrix expressions, an asterisk ⋆ is used to represent a term that is induced by symmetry. $\operatorname{diag}\{\cdots\}$ stands for a block-diagonal matrix. The Kronecker product of matrices $A\in\mathbb{R}^{m\times n}$ and $B\in\mathbb{R}^{p\times q}$ is a matrix in $\mathbb{R}^{mp\times nq}$, which is denoted as $A\otimes B$. Let $(\Omega,\mathcal{F},\{\mathcal{F}_t\}_{t\geq 0},P)$ be a complete probability space with a filtration $\{\mathcal{F}_t\}_{t\geq 0}$ satisfying the usual conditions (i.e., the filtration contains all P-null sets and is right continuous). $\mathbb{E}\{x\}$ denotes the expectation of the random variable x. If the dimensions of matrices are not explicitly indicated, they are assumed to be compatible with the algebraic operations involved.

2 Problem formulation and preliminaries

In this section, the problem formulation and preliminaries are briefly introduced.

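Let $\{r(t), t\geq 0\}$ be a right-continuous Markov chain on the probability space taking values in a finite state space $\mathcal{S}=\{1,2,\ldots,m\}$ with generator $\Pi=(\pi_{ij})_{m\times m}$ ($i,j\in\mathcal{S}$) given by

$$P\{r(t+\Delta)=j\mid r(t)=i\}=\begin{cases}\pi_{ij}\Delta+o(\Delta), & i\neq j,\\ 1+\pi_{ii}\Delta+o(\Delta), & i=j,\end{cases}$$

where $\Delta>0$, $\lim_{\Delta\to 0}o(\Delta)/\Delta=0$, $\pi_{ij}\geq 0$ ($i\neq j$) is the transition rate from mode i to mode j, and $\pi_{ii}=-\sum_{j=1,j\neq i}^{m}\pi_{ij}$.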
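As a quick sanity check of the generator definition, the following Python sketch (using NumPy) tests the two defining properties of a generator (non-negative off-diagonal rates, zero row sums) and forms the first-order transition matrix $I+\Delta\Pi$ obtained by dropping the $o(\Delta)$ terms. The symbol $\Pi$ and the two-mode rate values are illustrative assumptions, not the transition rate matrix used in Section 4.

```python
import numpy as np

def is_generator(Pi, tol=1e-12):
    """Check the defining properties of a generator matrix:
    off-diagonal rates are non-negative and every row sums to zero."""
    Pi = np.asarray(Pi, dtype=float)
    off_diag = Pi - np.diag(np.diag(Pi))
    return bool(np.all(off_diag >= 0) and np.allclose(Pi.sum(axis=1), 0.0, atol=tol))

def first_order_transition(Pi, delta):
    """First-order transition matrix I + delta * Pi (the o(delta)-free part of
    P{r(t+delta)=j | r(t)=i}). It is a stochastic matrix exactly when
    delta <= 1 / max_i |pi_ii|."""
    Pi = np.asarray(Pi, dtype=float)
    return np.eye(Pi.shape[0]) + delta * Pi

# Hypothetical two-mode generator (not the transition rate matrix of Section 4).
Pi = np.array([[-3.0, 3.0],
               [4.0, -4.0]])
P_small = first_order_transition(Pi, delta=0.01)
print(is_generator(Pi))
print(P_small)
```

For a step as large as $\Delta=1$ the first-order matrix acquires negative entries, which is why the expansion is only meaningful for small $\Delta$.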

Since distributed and discrete time-varying delays widely exist in signal transmission in complex networks, and network topologies may be governed by Markov chains, we consider the following MSCN with mixed time-varying delays:

where is the continuous-time Markov process which describes the evolution of the mode at time t. and are matrices with real values in mode . , and represent node discrete time-varying delay, discrete time-varying coupling delay and distributed time-varying coupling delay, respectively. is the coupling strength in mode , where . is the inner-coupling matrix in mode . represents the outer-coupling matrix, and the diagonal elements of matrix are defined by (, ). Here, .

Remark 1 The MSCN (1) in this paper, which contains both Markovian switching parameters and mixed time-varying delays, is more practical than the models in [29–31]: although time delays are considered in [29–31], Markovian switching is not used there to describe the addressed systems. Furthermore, the MSCN (1) clearly differs from the models in [32, 33], primarily in the mixed time-varying delays. In [32], the mixed time-varying delays consist of node discrete time-varying delays and distributed time-varying delays. In [33], they consist of node discrete stochastic time-varying delays, discrete stochastic time-varying coupling delays, and distributed time-varying delays.
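The compact Kronecker form relies on the fact that stacking the node-wise coupling terms $\sum_j g_{ij}\Gamma x_j$ gives $(G\otimes\Gamma)x$ with $x=(x_1^T,\ldots,x_N^T)^T$. The following NumPy sketch checks this numerically; the names G (outer coupling, zero row sums) and Γ (inner coupling) and all numerical values are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N, n = 3, 2                           # hypothetical: 3 nodes, 2 states per node

# Outer-coupling matrix G: off-diagonal weights, diagonal chosen so rows sum to zero.
G = np.array([[0.0, 1.0, 0.5],
              [1.0, 0.0, 2.0],
              [0.5, 2.0, 0.0]])
np.fill_diagonal(G, -G.sum(axis=1))
Gamma_in = np.diag([1.0, 0.5])        # hypothetical inner-coupling matrix
X = rng.standard_normal((N, n))       # row i holds x_i

# Node-by-node coupling terms: sum_j g_ij * Gamma x_j
per_node = np.array([sum(G[i, j] * Gamma_in @ X[j] for j in range(N))
                     for i in range(N)])

# Compact form: (G kron Gamma) times the stacked vector x = [x_1; x_2; x_3]
compact = np.kron(G, Gamma_in) @ X.reshape(-1)

print(np.allclose(per_node.reshape(-1), compact))
```

Row-major `reshape(-1)` stacks $x_1, x_2, x_3$ in order, matching the block structure of `np.kron(G, Gamma_in)`.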

With the Kronecker product, we can rewrite system (1) in the following compact form:

where

For notational simplicity, we denote matrices , , , , , , , , scalars , , and as , , , , , , , , , , and (), respectively. Therefore, system (2) can be rewritten as follows:

Definition 1 The MSCN (1) is said to achieve global asymptotic synchronization if

where , is a solution of an isolated node and satisfies .

Assumption 1 Time-varying delays in the MSCN (1) satisfy

where .

Assumption 2 (Khalil [36])

For , the continuous nonlinear function f satisfies the following sector-bounded condition:

where , are real constant matrices with .
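A minimal numerical illustration, assuming the sector-bounded condition takes the commonly used form $[f(u)-f(v)-U(u-v)]^T[f(u)-f(v)-V(u-v)]\leq 0$ (the exact expression is not reproduced in this text): for componentwise $f=\tanh$, the choice $U=0$, $V=I$ satisfies it, since every difference quotient of tanh lies in $[0,1]$. The bounds, dimensions and sample sizes below are hypothetical.

```python
import numpy as np

def sector_lhs(f, U, V, u, v):
    """[f(u)-f(v)-U(u-v)]^T [f(u)-f(v)-V(u-v)]; the assumed sector condition
    requires this to be <= 0 for all u, v."""
    df, du = f(u) - f(v), u - v
    return float((df - U @ du) @ (df - V @ du))

n = 3
U, V = np.zeros((n, n)), np.eye(n)   # illustrative sector bounds for tanh
rng = np.random.default_rng(1)
samples = [sector_lhs(np.tanh, U, V, 3 * rng.normal(size=n), 3 * rng.normal(size=n))
           for _ in range(10_000)]
worst = max(samples)
print(worst)   # should be <= 0 up to floating-point rounding
```

A function with slope outside the sector, such as $f(u)=2u$, makes the same expression positive.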

Assumption 3 There exist positive-definite matrices , (, ), and they satisfy

Lemma 1 (Langville and Stewart [37])

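The Kronecker product has the following properties:

(1) $(\alpha A)\otimes B=A\otimes(\alpha B)$,

(2) $(A+B)\otimes C=A\otimes C+B\otimes C$,

(3) $(A\otimes B)(C\otimes D)=(AC)\otimes(BD)$,

(4) $(A\otimes B)^T=A^T\otimes B^T$.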
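The four Kronecker identities can be spot-checked numerically. The sketch below assumes the standard forms $(\alpha A)\otimes B=A\otimes(\alpha B)$, $(A+B)\otimes C=A\otimes C+B\otimes C$, $(A\otimes B)(C\otimes D)=(AC)\otimes(BD)$ and $(A\otimes B)^T=A^T\otimes B^T$; the random matrices, with shapes chosen so that the products are defined, are illustrative only (in the code the mixed-product rule is written with factors A, D, C, E).

```python
import numpy as np

rng = np.random.default_rng(2)
A, B = rng.normal(size=(2, 3)), rng.normal(size=(2, 3))
C = rng.normal(size=(3, 2))
D, E = rng.normal(size=(4, 2)), rng.normal(size=(2, 5))
alpha = 1.7

checks = {
    # (1) a scalar can be moved between the factors
    "scalar": np.allclose(np.kron(alpha * A, C), np.kron(A, alpha * C)),
    # (2) distributivity over matrix addition
    "sum": np.allclose(np.kron(A + B, C), np.kron(A, C) + np.kron(B, C)),
    # (3) mixed-product rule; needs A @ C and D @ E to be defined
    "product": np.allclose(np.kron(A, D) @ np.kron(C, E), np.kron(A @ C, D @ E)),
    # (4) transposition acts factor-wise
    "transpose": np.allclose(np.kron(A, D).T, np.kron(A.T, D.T)),
}
print(checks)
```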

Lemma 2 (Liu et al. [32])

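Let $\mu=(\mu_{ij})_{N\times N}$, $P\in\mathbb{R}^{n\times n}$, $x=(x_1^T,x_2^T,\ldots,x_N^T)^T$, and $y=(y_1^T,y_2^T,\ldots,y_N^T)^T$, where $x_k,y_k\in\mathbb{R}^n$ ($k=1,2,\ldots,N$). If $\mu=\mu^T$ and each row sum of μ is equal to zero, then

$$x^T(\mu\otimes P)y=-\sum_{1\leq i<j\leq N}\mu_{ij}(x_i-x_j)^TP(y_i-y_j).$$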
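A numerical check of this identity, assuming Lemma 2 has the form $x^T(\mu\otimes P)y=-\sum_{1\leq i<j\leq N}\mu_{ij}(x_i-x_j)^TP(y_i-y_j)$ for symmetric μ with zero row sums (as in Liu et al. [32]). The sizes and random data are hypothetical; note that P need not be symmetric for the identity to hold.

```python
import numpy as np

rng = np.random.default_rng(3)
N, n = 4, 2
mu = rng.uniform(0.0, 1.0, size=(N, N))
mu = (mu + mu.T) / 2                         # symmetric
np.fill_diagonal(mu, 0.0)
np.fill_diagonal(mu, -mu.sum(axis=1))        # each row sums to zero
P = rng.normal(size=(n, n))                  # P need not be symmetric
x, y = rng.normal(size=(N, n)), rng.normal(size=(N, n))   # row k holds x_k, y_k

lhs = x.reshape(-1) @ np.kron(mu, P) @ y.reshape(-1)
rhs = -sum(mu[i, j] * (x[i] - x[j]) @ P @ (y[i] - y[j])
           for i in range(N) for j in range(i + 1, N))
print(lhs, rhs)
```

The identity follows by expanding $\sum_{i,j}\mu_{ij}x_i^TPy_j$, substituting $\mu_{ii}=-\sum_{j\neq i}\mu_{ij}$, and pairing the $(i,j)$ and $(j,i)$ terms.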

Lemma 3 (Boyd et al. [38])

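Given constant matrices X, Y, Z with $X=X^T$ and $0<Y=Y^T$, then $X+Z^TY^{-1}Z<0$ if and only if

$$\begin{bmatrix}X & Z^T\\ Z & -Y\end{bmatrix}<0\quad\text{or}\quad\begin{bmatrix}-Y & Z\\ Z^T & X\end{bmatrix}<0.$$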
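Lemma 3 (a Schur complement result) is typically what converts a condition of the form $X+Z^TY^{-1}Z<0$ into a linear matrix inequality. The sketch below, which assumes the standard statement with $X=X^T$ and $Y=Y^T>0$, compares the two conditions on randomly generated matrices using eigenvalue tests; the matrix sizes and sampling distributions are arbitrary choices for illustration.

```python
import numpy as np

def is_neg_def(M):
    """True if the (symmetrised) matrix M is negative definite."""
    return bool(np.linalg.eigvalsh((M + M.T) / 2).max() < 0)

rng = np.random.default_rng(4)
n, m, trials = 3, 2, 2000
mismatches = feasible = 0
for _ in range(trials):
    S = rng.normal(size=(n, n))
    X = (S + S.T) / 2 - rng.uniform(0.0, 6.0) * np.eye(n)   # X = X^T
    R = rng.normal(size=(m, m))
    Y = R @ R.T + 0.1 * np.eye(m)                           # Y = Y^T > 0
    Z = 0.5 * rng.normal(size=(m, n))
    reduced = is_neg_def(X + Z.T @ np.linalg.solve(Y, Z))   # X + Z^T Y^{-1} Z < 0
    block = is_neg_def(np.block([[X, Z.T], [Z, -Y]]))       # [[X, Z^T], [Z, -Y]] < 0
    mismatches += int(reduced != block)
    feasible += int(reduced)
print(f"{feasible}/{trials} feasible, {mismatches} mismatches")
```

Using `np.linalg.solve(Y, Z)` instead of forming $Y^{-1}$ explicitly is the usual numerically safer choice.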

Lemma 4 (Gu [39])

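For any positive-definite matrix $M>0$, scalar $\gamma>0$, and vector function $\omega:[0,\gamma]\to\mathbb{R}^n$ such that the integrations concerned are well defined, the following inequality holds:

$$\left(\int_0^{\gamma}\omega(s)\,ds\right)^TM\left(\int_0^{\gamma}\omega(s)\,ds\right)\leq\gamma\int_0^{\gamma}\omega^T(s)M\omega(s)\,ds.$$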
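Lemma 4 (a Jensen-type integral inequality) can be illustrated with a midpoint-rule discretization, assuming it has the usual form $\left(\int_0^{\gamma}\omega(s)\,ds\right)^TM\left(\int_0^{\gamma}\omega(s)\,ds\right)\leq\gamma\int_0^{\gamma}\omega^T(s)M\omega(s)\,ds$. With equal-width midpoint sums the discrete inequality is exactly the Cauchy-Schwarz inequality, so it also holds on the grid, with equality for constant ω. The matrix M and the function ω below are hypothetical.

```python
import numpy as np

def jensen_sides(omega, M, gamma, K=2000):
    """Midpoint-rule values of both sides of
    (int_0^gamma w)^T M (int_0^gamma w) <= gamma * int_0^gamma w^T M w."""
    h = gamma / K
    s = (np.arange(K) + 0.5) * h                  # midpoints of K equal cells
    W = np.array([omega(si) for si in s])         # shape (K, n)
    integral = h * W.sum(axis=0)
    lhs = integral @ M @ integral
    rhs = gamma * h * np.einsum("ki,ij,kj->", W, M, W)
    return float(lhs), float(rhs)

def omega(s):
    return np.array([np.sin(3 * s), np.exp(-s)])  # hypothetical vector function

M = np.array([[2.0, 0.5], [0.5, 1.0]])            # hypothetical M > 0
lhs, rhs = jensen_sides(omega, M, gamma=1.5)
print(lhs, rhs)
```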

Lemma 5 (Boyd et al. [38])

For any vector and one positive-definite matrix , the following inequality holds:

Lemma 6 Let , , , , , , , then

where

3 Main results

In this section, global synchronization of the MSCN (1) is investigated by using the Lyapunov-Krasovskii functional method, stochastic analysis techniques and the delay-partition approach. Furthermore, to show the merits of the delay-partition approach, Corollary 1, which does not use the delay partition, is also given for comparison with Theorem 1.

Theorem 1 Under Assumptions 1-3, Definition 1, for given constants , , , , , and any integer , , , system (1) in the delay-partition approach is globally asymptotically synchronized if there exist positive-definite matrices , , , , arbitrary matrices , , , , , with appropriate dimensions, and positive scalars , , , such that the following LMI holds for all :

where

Proof Construct a Lyapunov-Krasovskii functional candidate as

where

Computing along the trajectory of system (3), and according to Assumption 1, (6)-(8), we can obtain

By Assumption 3, we have

Similar to inequality (13), we get inequality (14) directly from Assumption 3:

It follows from Lemma 4 that

Let = [, , , , , , , … , , , … , , ], where , , … , , then by using the Newton-Leibniz formula, the following equalities are true for any matrices , , (, ) with appropriate dimensions:

Denote , then

where scalar .

By Lemmas 2, 5 and combining (16)-(17), there exist positive-definite matrices and (, ), such that

According to Assumption 2, for and , we can obtain

Substituting (10)-(24) into (9), taking the expectation on both sides of (9), and using Lemmas 1, 2 and 6, we get

By Lemma 3 and Theorem 1, we have

According to Definition 1, the MSCN (1) achieves global asymptotic synchronization. This completes the proof. □

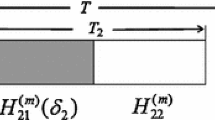

Remark 2 Theorem 1 establishes a criterion under which the MSCN (1) with mixed time-varying delays achieves global asymptotic synchronization via the delay-partition approach. In the proof of Theorem 1, the time-varying delays and are divided into r and slices, respectively. In [34, 35], the delay-partition approach is used to solve state estimation and stability analysis problems of neural networks with time-varying delays. Although synchronization problems of complex networks with time delays were investigated in [29–32], the results obtained in this paper via the delay-partition approach are less conservative: as the integers r and become larger, the allowable upper bounds of the time-varying delays and also become larger. This is further analyzed in Remark 3 and illustrated by the numerical examples.
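One way to see why partitioning the delay interval can reduce conservatism: applying a Jensen-type bound on each of r equal slices of $[0,\tau]$ and summing gives a lower bound on $\int_0^{\tau}\omega^2(s)\,ds$ that never decreases when the partition is refined (e.g., r = 1, 2, 4, 8). The scalar sketch below illustrates this; it is an intuition under assumed data (a hypothetical integrand and delay bound), not the proof of Theorem 1.

```python
import numpy as np

def jensen_lower_bounds(w, tau, rs, K=6400):
    """Scalar case (M = 1): for each r in rs, split [0, tau] into r equal slices,
    apply Jensen's inequality on every slice and sum. Returns the grid value of
    int_0^tau w(s)^2 ds together with the r-slice lower bounds."""
    ds = tau / K
    vals = w((np.arange(K) + 0.5) * ds)              # midpoint samples of w
    exact = ds * np.sum(vals ** 2)
    bounds = {}
    for r in rs:
        if K % r:
            raise ValueError("r must divide K so that all slices have equal length")
        h = tau / r                                   # slice length tau / r
        slice_integrals = ds * vals.reshape(r, -1).sum(axis=1)
        bounds[r] = float(np.sum(slice_integrals ** 2) / h)
    return float(exact), bounds

def w(s):
    return np.cos(4 * s) + 0.3                        # hypothetical integrand

exact, bounds = jensen_lower_bounds(w, tau=2.0, rs=(1, 2, 4, 8))
print(exact, bounds)
```

Only nested partitions (here r = 1, 2, 4, 8) are guaranteed to give non-decreasing bounds; comparing, say, r = 2 with r = 3 is not covered by this argument.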

Corollary 1 Under Assumptions 1-2, Definition 1, for given constants , , , , , system (1) is globally asymptotically synchronized if there exist positive-definite matrices , , , , , , arbitrary matrices , , , , with appropriate dimensions and positive scalars , , , such that the LMI (4) holds for all , , and .

Remark 3 In Corollary 1, the delay-partition approach is not used to solve the synchronization problem of the MSCN (1). Therefore, the upper bounds of the time-varying delays and are and . From the analysis in Remark 2, and can be divided into r and slices by using the delay-partition approach in Theorem 1. Therefore, the allowable upper bounds of the time-varying delays and in Corollary 1 are smaller than those in Theorem 1, which means that Theorem 1 is less conservative than Corollary 1.

4 Numerical examples

In this section, two numerical examples are given to illustrate the effectiveness of the derived results. The initial conditions of the numerical simulations are taken as , , . The total synchronization error of the network is defined as . For a given transition rate matrix, a Markov chain can be generated. We consider the following transition rate matrix:

The Markov chain is described in Figure 1.
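A Markov chain path of this kind can be generated from a transition rate matrix in the usual way: the holding time in mode i is exponentially distributed with rate $-\pi_{ii}$, and the chain then jumps to mode $j\neq i$ with probability $\pi_{ij}/(-\pi_{ii})$. The Python sketch below assumes this standard construction; the function names, the symbol $\pi_{ij}$, the 0-based mode labels, and the two-mode rates are illustrative assumptions, since the transition rate matrix of this section is not reproduced here.

```python
import numpy as np

def simulate_ctmc(Pi, r0, T, rng):
    """Simulate a right-continuous Markov chain with generator Pi on [0, T).
    Returns the jump times (starting with 0) and the mode entered at each time.
    Modes are labelled 0, ..., m-1 here."""
    Pi = np.asarray(Pi, dtype=float)
    times, modes = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate = -Pi[i, i]                       # total rate of leaving mode i
        if rate <= 0:                          # absorbing mode: no more jumps
            break
        t += rng.exponential(1.0 / rate)       # exponential holding time
        if t >= T:
            break
        jump = np.clip(Pi[i], 0.0, None)       # off-diagonal rates (diagonal -> 0)
        i = int(rng.choice(len(jump), p=jump / rate))
        times.append(t)
        modes.append(i)
    return np.array(times), np.array(modes)

def mode_at(times, modes, t):
    """Mode r(t) of the right-continuous path at time t."""
    return modes[np.searchsorted(times, t, side="right") - 1]

# Hypothetical two-mode transition rate matrix.
Pi = np.array([[-3.0, 3.0],
               [4.0, -4.0]])
rng = np.random.default_rng(5)
times, modes = simulate_ctmc(Pi, 0, 10.0, rng)
print(len(times) - 1, "jumps; r(5.0) =", mode_at(times, modes, 5.0))
```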

Example 1 In this example, we investigate global synchronization of the MSCN (1) comprised of three coupled nodes:

where is the state variable of the i th node (). All parameters are given as follows:

For given , , , and , combining the above parameters of system (28) and employing the LMI toolbox in MATLAB to solve the LMI defined in Theorem 1, it is easy to verify that

Hence, with the above feasible solution, system (28) is globally asymptotically synchronized. The simulation results of system (28) with are shown in Figures 2-3.
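To give a feel for how simulation results of this kind can be produced, the sketch below integrates a simplified network with an explicit Euler scheme, storing past states in a buffer to evaluate constant delays and approximating the Markov switching by leaving the current mode with probability $-\pi_{ii}\,dt$ in each step. Everything here is hypothetical: the three-node model $\dot{x}_i=A_rx_i+B_r\tanh(x_i(t-\tau_1))+c_r\sum_jg_{ij}\Gamma x_j(t-\tau_2)$ omits the distributed coupling term and the time variation of the delays, and its parameters are not those of system (28). The total error is taken here as $\sum_i\|x_i(t)-s(t)\|$, where s(t) is the isolated-node solution.

```python
import numpy as np

def simulate_network(T=20.0, dt=1e-3, tau1=0.1, tau2=0.1, seed=0):
    """Euler simulation of a hypothetical 3-node, 2-mode network with constant delays.
    Returns the total error sum_i ||x_i(t) - s(t)|| at every step."""
    rng = np.random.default_rng(seed)
    A = [-2.0 * np.eye(2), -2.5 * np.eye(2)]                  # node dynamics per mode
    B = [np.array([[0.5, -0.2], [0.1, 0.4]]),
         np.array([[0.3, 0.1], [-0.2, 0.5]])]                 # delayed nonlinearity weights
    c = [0.2, 0.3]                                            # coupling strength per mode
    Gam = np.eye(2)                                           # inner-coupling matrix
    G = np.array([[-2.0, 1.0, 1.0],
                  [1.0, -1.0, 0.0],
                  [1.0, 0.0, -1.0]])                          # outer coupling, zero row sums
    Pi = np.array([[-3.0, 3.0], [4.0, -4.0]])                 # transition rate matrix

    steps = int(round(T / dt))
    d1, d2 = int(round(tau1 / dt)), int(round(tau2 / dt))
    h = max(d1, d2)                                           # history length in steps
    x = np.zeros((h + steps + 1, 3, 2))
    s = np.zeros((h + steps + 1, 2))
    x[: h + 1] = [[1.0, -0.5], [-0.8, 0.3], [0.4, 0.9]]       # constant initial history
    s[: h + 1] = [0.2, 0.1]
    mode, err = 0, []
    for k in range(h, h + steps):
        err.append(np.linalg.norm(x[k] - s[k], axis=1).sum())
        # Leave the current mode with probability -pi_ii * dt (two modes: 0 <-> 1).
        if rng.random() < -Pi[mode, mode] * dt:
            mode = 1 - mode
        coupling = c[mode] * (G @ x[k - d2]) @ Gam.T          # row i: c * sum_j g_ij Gam x_j(t - tau2)
        dx = x[k] @ A[mode].T + np.tanh(x[k - d1]) @ B[mode].T + coupling
        x[k + 1] = x[k] + dt * dx
        s[k + 1] = s[k] + dt * (A[mode] @ s[k] + B[mode] @ np.tanh(s[k - d1]))
    return np.array(err)

err = simulate_network()
print(err[0], err[-1])
```

Because G has zero row sums, the coupling vanishes on the synchronized manifold, so s(t) is a valid reference trajectory. With these stable, weakly coupled parameters the error decays; the sketch only illustrates the simulation mechanics and does not replace checking the LMI conditions of Theorem 1.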

Example 2 In this example, in order to test Corollary 1, we set and . For given , , by using the LMI toolbox in MATLAB and Corollary 1, , and must satisfy and if we still choose , , , , , , Q, and system (28) in Example 1. The simulation results of system (28) with are shown in Figures 4-5.

Remark 4 From Examples 1-2, and of Example 1 are larger than those of Example 2; that is, the allowable upper bounds of and in Example 1 are larger than those in Example 2. This supports the analysis in Remarks 2-3.

5 Conclusions

In this paper, we study global synchronization for a new class of MSCNs with mixed time-varying delays via the delay-partition approach. Sufficient conditions for global synchronization of this class of MSCNs are derived by the new delay-partition approach, whose advantage is that the obtained results are less conservative. Two numerical examples illustrate the effectiveness of the proposed theoretical results.

References

Watts DJ, Strogatz SH: Collective dynamics of ‘small-world’ networks. Nature 1998, 393: 440-442. 10.1038/30918

Lu J, Ho DWC: Stabilization of complex dynamical networks with noise disturbance under performance constraint. Nonlinear Anal., Real World Appl. 2011, 12(4):1974-1984. 10.1016/j.nonrwa.2010.12.013

Chen G, Zhou J, Celikovsky S: On LaSalle’s invariance principle and its application to robust synchronization of general vector Liénard equation. IEEE Trans. Autom. Control 2005, 50(6):869-874.

Li X, Wang X, Chen G: Pinning a complex dynamical network to its equilibrium. IEEE Trans. Circuits Syst. I 2004, 51(10):2074-2087. 10.1109/TCSI.2004.835655

Tang Y, Wong WK: Distributed synchronization of coupled neural networks via randomly occurring control. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24(3):435-447.

Lu J, Ho DWC, Cao J: Synchronization in an array of nonlinearly coupled chaotic neural networks with delay coupling. Int. J. Bifurc. Chaos 2008, 18(10):3101-3111. 10.1142/S0218127408022275

Tang Y, Gao H, Zou W, Kurths J: Distributed synchronization in networks of agent systems with nonlinearities and random switchings. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 2013, 43(1):358-370.

Pikovsky A, Rosenblum M, Kurths J: Synchronization: A Universal Concept in Nonlinear Sciences. Cambridge University Press, New York; 2001.

Mao X, Yuan C: Stochastic Differential Equations with Markovian Switching. Imperial College Press, London; 2006.

Yang X, Cao J, Lu J: Synchronization of randomly coupled neural networks with Markovian jumping and time-delay. IEEE Trans. Circuits Syst. I 2013, 60(2):363-376.

Zhang W, Fang J, Miao Q, Chen L, Zhu W: Synchronization of Markovian jump genetic oscillators with nonidentical feedback delay. Neurocomputing 2013, 101: 347-353.

Wang J, Xu C, Feng J, Kwong M, Austin F: Mean-square exponential synchronization of Markovian switching stochastic complex networks with time-varying delays by pinning control. Abstr. Appl. Anal. 2012., 2012: Article ID 298095

Li H, Yue D: Synchronization of Markovian jumping stochastic complex networks with distributed time delays and probabilistic interval discrete time-varying delays. J. Phys. A, Math. Theor. 2010, 43: 1-25.

Yin S, Ding S, Xie X, Luo H: A review on basic data-driven approaches for industrial process monitoring. IEEE Trans. Ind. Electron. 2014, 61(11):6418-6428.

Yin S, Wang G, Yang X: Robust PLS approach for KPI-related prediction and diagnosis against outliers and missing data. Int. J. Syst. Sci. 2014, 45(7):1-8.

Yin S, Li X, Gao H, Kaynak O: Data-based techniques focused on modern industry: an overview. IEEE Trans. Ind. Electron. 2014, PP(99):1. 10.1109/TIE.2014.2308133

Yin S, Gao X, Karimi HR, Zhu X: Study on support vector machine-based fault detection in Tennessee Eastman process. Abstr. Appl. Anal. 2014., 2014: Article ID 836895

Yin S, Zhu X, Karimi HR: Quality evaluation based on multivariate statistical methods. Math. Probl. Eng. 2013., 2013: Article ID 639652

Zhang W, Fang J, Tang Y: New robust stability analysis for genetic regulatory networks with random discrete delays and distributed delays. Neurocomputing 2011, 74: 2344-2360. 10.1016/j.neucom.2011.03.011

Li L, Ho DWC, Lu J: A unified approach to practical consensus with quantized data and time delay. IEEE Trans. Circuits Syst. I 2013, 60(10):2668-2678.

Lu J, Ho DWC, Kurths J: Consensus over directed static networks with arbitrary finite communication delays. Phys. Rev. E 2009., 80(6): Article ID 066121

Zhou W, Wang T, Mou J: Synchronization control for the competitive complex networks with time delay and stochastic effects. Commun. Nonlinear Sci. Numer. Simul. 2012, 17: 3417-3426. 10.1016/j.cnsns.2011.12.021

Gan Q: Exponential synchronization of stochastic Cohen-Grossberg neural networks with mixed time-varying delays and reaction-diffusion via periodically intermittent control. Neural Netw. 2012, 31: 12-21.

Li X, Bohner M: Exponential synchronization of chaotic neural networks with mixed delays and impulsive effects via output coupling with delay feedback. Math. Comput. Model. 2010, 52: 643-653. 10.1016/j.mcm.2010.04.011

Gan Q, Hu R, Liang Y: Adaptive synchronization for stochastic competitive neural networks with mixed time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 2012, 17: 3708-3718. 10.1016/j.cnsns.2012.01.021

Liu Z, Lü S, Zhong S, Ye M: p th moment exponential synchronization analysis for a class of stochastic neural networks with mixed delays. Commun. Nonlinear Sci. Numer. Simul. 2010, 15: 1899-1909. 10.1016/j.cnsns.2009.07.018

Gan Q: Adaptive synchronization of Cohen-Grossberg neural networks with unknown parameters and mixed time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 2012, 17: 3040-3049. 10.1016/j.cnsns.2011.11.012

Zhu Q, Zhou W, Tong D, Fang J: Adaptive synchronization for stochastic neural networks of neutral-type with mixed time-delays. Neurocomputing 2013, 99: 477-485.

Liang Y, Wang X: Synchronization in complex networks with non-delay and delay couplings via intermittent control with two switched periods. Physica A 2014, 395: 434-444.

Li H, Ning Z, Yin Y, Tang Y: Synchronization and state estimation for singular complex dynamical networks with time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 2013, 18: 194-208. 10.1016/j.cnsns.2012.06.023

Li H: Cluster synchronization and state estimation for complex dynamical networks with mixed time delays. Appl. Math. Model. 2013, 37: 7223-7244. 10.1016/j.apm.2013.02.019

Liu Y, Wang Z, Liu X: Exponential synchronization of complex networks with Markovian jump and mixed delays. Phys. Lett. A 2008, 372: 3986-3998. 10.1016/j.physleta.2008.02.085

Yi J, Wang Y, Xiao J, Huang Y: Exponential synchronization of complex dynamical networks with Markovian jump parameters and stochastic delays and its application to multi-agent systems. Commun. Nonlinear Sci. Numer. Simul. 2013, 18: 1175-1192. 10.1016/j.cnsns.2012.09.031

Huang H, Feng G: State estimation of recurrent neural networks with time-varying delay: a novel delay partition approach. Neurocomputing 2011, 74: 792-796. 10.1016/j.neucom.2010.10.006

Duan J, Hu M, Yang Y, Guo L: A delay-partitioning projection approach to stability analysis of stochastic Markovian jump neural networks with randomly occurred nonlinearities. Neurocomputing 2014, 128: 459-465.

Khalil HK: Nonlinear Systems. Prentice Hall, Upper Saddle River; 1996.

Langville AN, Stewart WJ: The Kronecker product and stochastic automata networks. J. Comput. Appl. Math. 2004, 167: 429-447. 10.1016/j.cam.2003.10.010

Boyd S, Ghaoui LE, Feron E, Balakrishnan V: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia; 1994.

Gu K: An integral inequality in the stability problem of time-delay systems. Proceedings of 39th IEEE Conference on Decision and Control 2000, 2805-2810. Sydney, Australia

Acknowledgements

The work is supported by the Education Commission Scientific Research Innovation Key Project of Shanghai under Grant 13ZZ050, the Science and Technology Commission Innovation Plan Basic Research Key Project of Shanghai under Grant 12JC1400400, Education Department Scientific Project of Zhejiang Province under Grant Y201326804, and the Scientific and Technological Innovation Plan Projects of Ningbo City under Grants 2012B71011 and 2011B710038.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

XW carried out the main part of this manuscript. JF participated in the discussion and corrected the main theorem. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this licence, visit https://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Fang, Ja., Dai, A. et al. Global synchronization for a class of Markovian switching complex networks with mixed time-varying delays in the delay-partition approach. Adv Differ Equ 2014, 248 (2014). https://doi.org/10.1186/1687-1847-2014-248

DOI: https://doi.org/10.1186/1687-1847-2014-248