Abstract

In this paper, a class of neutral delay Hopfield neural networks with time-varying delays in the leakage term on time scales is considered. By utilizing the exponential dichotomy of linear dynamic equations on time scales, Banach’s fixed point theorem and the theory of calculus on time scales, some sufficient conditions are obtained for the existence and exponential stability of almost periodic solutions for this class of neural networks. Finally, a numerical example illustrates the feasibility of our results and also shows that the continuous-time neural network and the discrete-time analogue have the same dynamical behaviors. The results of this paper are completely new and complementary to the previously known results even when the time scale .


1 Introduction

The dynamical properties of delayed Hopfield neural networks have been extensively studied because of their applications in pattern recognition, image processing, speed detection of moving objects, optimization problems and many other fields. Moreover, owing to the finite speed of information processing, time delays arise in neural networks and frequently cause oscillation, divergence, or instability. It is therefore of prime importance to consider the effects of delays on the stability of neural networks. Neural networks with various types of delay have been widely investigated by many authors [1–20].

However, very little attention has so far been paid to neural networks with time delays in the leakage (or 'forgetting') term [21–35]. Such delays are difficult to handle and have rarely been considered in the literature, although the leakage term has a great impact on the dynamical behavior of neural networks. Recently, another type of time delay, namely the neutral-type delay, which arises in the study of automatic control, population dynamics and vibrating masses attached to an elastic bar, has also drawn much research attention. So far, only a few papers have taken neutral-type phenomena into account in delayed neural networks [33–43].

Both continuous and discrete systems are important in implementations and applications, but it is troublesome to study the existence of almost periodic solutions for continuous and discrete systems separately. It is therefore meaningful to study this problem on time scales, which unify the continuous and discrete cases (see [44–50]).

To the best of our knowledge, no paper has yet been published on the existence and stability of almost periodic solutions to neutral-type delay neural networks with time-varying delays in the leakage term on time scales. The present paper addresses this gap.

Motivated by the above, in this paper we propose the following neutral delay Hopfield neural network with time-varying delays in the leakage term on a time scale $\mathbb{T}$:

where $\mathbb{T}$ is an almost periodic time scale that will be defined in the next section, denotes the potential (or voltage) of cell $i$ at time $t$, represents the rate at which the $i$th unit will reset its potential to the resting state in isolation when disconnected from the network and external inputs at time $t$, , , and represent the delayed strengths of connectivity and neutral delayed strengths of connectivity between cell $i$ and $j$ at time $t$, respectively, and are the kernel functions determining the distributed delays, , , and are the activation functions in system (1.1), is an external input on the $i$th unit at time $t$, and correspond to the transmission delays of the $i$th unit along the axon of the $j$th unit at time $t$.

If $\mathbb{T} = \mathbb{R}$, then system (1.1) reduces to the following continuous-time neutral delay Hopfield neural network:

and if $\mathbb{T} = \mathbb{Z}$, then system (1.1) reduces to the discrete-time neutral delay Hopfield neural network

where and . When , , , , Bai [37] and Xiao [38] studied the almost periodicity of (1.2), respectively. However, even when , , the almost periodicity of (1.3), the discrete-time analogue of (1.2), has not yet been studied.

For convenience, for any almost periodic function defined on $\mathbb{T}$, we define , .

The initial condition associated with system (1.1) is of the form

where denotes a real-valued bounded Δ-differentiable function defined on and .

Throughout this paper, we assume that:

(H1) with , , , , , , , , , and are all almost periodic functions on $\mathbb{T}$, , , , , for , .

(H2) There exist positive constants , , , such that for ,

where and .

(H3) For , the delay kernels are continuous and integrable with

The main purpose of this paper is to study the existence and global exponential stability of the almost periodic solution to (1.1). Our results are new and complementary to the previously known results even when the time scale . The rest of this paper is organized as follows. In Section 2, we introduce some definitions and make some preparations for later sections. In Sections 3 and 4, by utilizing Banach's fixed point theorem and the theory of calculus on time scales, we present sufficient conditions that guarantee the existence of a unique globally exponentially stable almost periodic solution of system (1.1). In Section 5, we present an example to illustrate the feasibility and effectiveness of the results obtained in the previous sections. We draw conclusions in Section 6.

2 Preliminaries

In this section, we shall first recall some basic definitions and lemmas which will be useful for the proof of our main results.

Let $\mathbb{T}$ be a nonempty closed subset (time scale) of ℝ. The forward and backward jump operators $\sigma, \rho : \mathbb{T} \to \mathbb{T}$ and the graininess $\mu : \mathbb{T} \to [0, \infty)$ are defined, respectively, by

$$\sigma(t) = \inf\{s \in \mathbb{T} : s > t\}, \qquad \rho(t) = \sup\{s \in \mathbb{T} : s < t\}, \qquad \mu(t) = \sigma(t) - t.$$

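A point $t \in \mathbb{T}$ is called left-dense if $t > \inf \mathbb{T}$ and $\rho(t) = t$, left-scattered if $\rho(t) < t$, right-dense if $t < \sup \mathbb{T}$ and $\sigma(t) = t$, and right-scattered if $\sigma(t) > t$. If $\mathbb{T}$ has a left-scattered maximum $m$, then $\mathbb{T}^k = \mathbb{T} \setminus \{m\}$; otherwise $\mathbb{T}^k = \mathbb{T}$. If $\mathbb{T}$ has a right-scattered minimum $m$, then $\mathbb{T}_k = \mathbb{T} \setminus \{m\}$; otherwise $\mathbb{T}_k = \mathbb{T}$.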
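The jump operators and the point classification can be made concrete with a small Python sketch. For illustration only, it assumes a hypothetical representation in which a time scale is a finite, sorted union of closed intervals, with isolated points stored as degenerate intervals. It also adopts the usual conventions $\sigma(\max\mathbb{T}) = \max\mathbb{T}$ and $\rho(\min\mathbb{T}) = \min\mathbb{T}$.

```python
from bisect import bisect_right

# Hypothetical representation (not from the paper): a time scale is a sorted list of
# disjoint closed intervals (a, b); an isolated point is an interval with a == b.

def _locate(ts, t):
    """Return the index of the interval that contains t; raise if t is not in T."""
    k = bisect_right([a for a, _ in ts], t) - 1
    if k < 0 or t > ts[k][1]:
        raise ValueError(f"{t} is not in the time scale")
    return k

def sigma(ts, t):
    """Forward jump inf{s in T : s > t}; by convention sigma(max T) = max T."""
    k = _locate(ts, t)
    if t < ts[k][1]:
        return t                                  # t is interior to an interval
    return ts[k + 1][0] if k + 1 < len(ts) else t

def rho(ts, t):
    """Backward jump sup{s in T : s < t}; by convention rho(min T) = min T."""
    k = _locate(ts, t)
    if t > ts[k][0]:
        return t
    return ts[k - 1][1] if k > 0 else t

def mu(ts, t):
    """Graininess mu(t) = sigma(t) - t."""
    return sigma(ts, t) - t

def classify(ts, t):
    """Left/right type of t following the definitions ('none' where neither applies)."""
    left = ("left-scattered" if rho(ts, t) < t
            else "left-dense" if t > ts[0][0] else "none")
    right = ("right-scattered" if sigma(ts, t) > t
             else "right-dense" if t < ts[-1][1] else "none")
    return left, right

# T = [0, 1] U {2} U {3} U [4, 5]
T = [(0.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 5.0)]
print(sigma(T, 1.0), mu(T, 1.0), rho(T, 4.0))   # 2.0 1.0 3.0
print(classify(T, 1.0))                          # ('left-dense', 'right-scattered')
```

On this example $\mathbb{T}$, the maximum 5 is left-dense, so $\mathbb{T}^k = \mathbb{T}$. The minimum 0 is right-dense, so $\mathbb{T}_k = \mathbb{T}$.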

A function $f : \mathbb{T} \to \mathbb{R}$ is right-dense continuous (rd-continuous) provided it is continuous at right-dense points in $\mathbb{T}$ and its left-sided limits exist at left-dense points in $\mathbb{T}$. If $f$ is continuous at each right-dense point and each left-dense point, then $f$ is said to be a continuous function on $\mathbb{T}$.

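For $y : \mathbb{T} \to \mathbb{R}$ and $t \in \mathbb{T}^k$, we define the delta derivative of $y(t)$, $y^{\Delta}(t)$, to be the number (if it exists) with the property that for a given $\varepsilon > 0$, there exists a neighborhood $U$ of $t$ such that

$$\bigl|[y(\sigma(t)) - y(s)] - y^{\Delta}(t)[\sigma(t) - s]\bigr| \le \varepsilon\,|\sigma(t) - s|$$

for all $s \in U$.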

If y is continuous, then y is right-dense continuous, and if y is delta differentiable at t, then y is continuous at t.

Let $y$ be right-dense continuous. If $Y^{\Delta}(t) = y(t)$, then we define the delta integral by

$$\int_a^t y(s)\,\Delta s = Y(t) - Y(a).$$
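On $\mathbb{T} = h\mathbb{Z}$ every point is right-scattered with $\mu(t) = h$. The delta derivative therefore reduces to a forward difference quotient, and the delta integral reduces to a weighted sum. The sketch below checks this on $y(t) = t^2$ and verifies the fundamental theorem numerically; the helper names are ours, not the paper's. As $h \to 0$ the quotient approaches the ordinary derivative, which is the case $\mathbb{T} = \mathbb{R}$.

```python
def delta_derivative(y, t, h):
    """Delta derivative on T = hZ (h > 0): sigma(t) = t + h and mu(t) = h, so
    y^Delta(t) = (y(sigma(t)) - y(t)) / mu(t)."""
    return (y(t + h) - y(t)) / h

def delta_integral(f, a, b, h):
    """Delta integral over [a, b) on T = hZ (a, b in hZ): sum of mu(t) f(t), t = a, a+h, ..., b-h."""
    n = round((b - a) / h)
    return sum(h * f(a + k * h) for k in range(n))

y = lambda t: t * t
print(delta_derivative(y, 3, 1))        # 7.0  (on Z, (t^2)^Delta = 2t + 1 = t + sigma(t))
print(delta_derivative(y, 3.0, 1e-6))   # close to 6, the ordinary derivative (T = R limit)

# Fundamental theorem: if Y^Delta = y then int_a^b y(s) Delta s = Y(b) - Y(a).
yd = lambda t: delta_derivative(y, t, 1)
print(delta_integral(yd, 0, 5, 1), y(5) - y(0))   # 25.0 25
```
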

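A function $r : \mathbb{T} \to \mathbb{R}$ is called regressive if

$$1 + \mu(t) r(t) \neq 0$$

for all $t \in \mathbb{T}^k$. The set of all regressive and rd-continuous functions will be denoted by $\mathcal{R}$. We define the set $\mathcal{R}^+ = \{r \in \mathcal{R} : 1 + \mu(t) r(t) > 0, \ \forall t \in \mathbb{T}\}$.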

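If $r$ is a regressive function, then the generalized exponential function $e_r$ is defined by

$$e_r(t,s) = \exp\left\{\int_s^t \xi_{\mu(\tau)}(r(\tau))\,\Delta\tau\right\}, \qquad s, t \in \mathbb{T},$$

with the cylinder transformation

$$\xi_h(z) = \begin{cases} \dfrac{\operatorname{Log}(1 + hz)}{h}, & h \neq 0, \\[4pt] z, & h = 0. \end{cases}$$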
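On $\mathbb{T} = h\mathbb{Z}$ with $h > 0$, the integral in the definition of $e_r$ becomes a sum of $h\,\xi_h(r(\tau))$, so $e_r(t,s)$ collapses to the product of the factors $1 + h\,r(\tau)$. The Python sketch below compares the two forms. It uses the principal complex logarithm from `cmath`, which is needed when $r$ is regressive but $1 + hr < 0$. The function names and test functions are illustrative assumptions.

```python
import cmath
import math

def xi(h, z):
    """Cylinder transformation: Log(1 + h z)/h for h != 0 (principal Log), z for h = 0."""
    if h == 0:
        return z
    return cmath.log(1 + h * z) / h

def e_hZ(p, t, s, h):
    """e_p(t, s) on T = hZ for s <= t: exp of the delta integral of xi_mu(p),
    i.e. exp(sum over tau = s, ..., t-h of h * xi_h(p(tau)))."""
    n = round((t - s) / h)
    return cmath.exp(sum(h * xi(h, p(s + k * h)) for k in range(n)))

def product_form(p, t, s, h):
    """Closed form of the same quantity on hZ: prod over tau = s, ..., t-h of (1 + h p(tau))."""
    out = 1.0
    for k in range(round((t - s) / h)):
        out *= 1 + h * p(s + k * h)
    return out

p = lambda t: -3.0     # regressive on Z (1 + p = -2 != 0) but not positively regressive
print(e_hZ(p, 2, 0, 1), product_form(p, 2, 0, 1))    # both equal 4 up to rounding
q = lambda t: 0.2 * math.cos(t)
print(abs(e_hZ(q, 2.0, 0.0, 0.25) - product_form(q, 2.0, 0.0, 0.25)))   # rounding-level difference
```

When $r \in \mathcal{R}^+$, every factor is positive and the real logarithm suffices.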

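Let $p, q : \mathbb{T} \to \mathbb{R}$ be two regressive functions; we define

$$p \oplus q = p + q + \mu p q, \qquad \ominus p = -\frac{p}{1 + \mu p}, \qquad p \ominus q = p \oplus (\ominus q).$$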

Then the generalized exponential function has the following properties.

Definition 2.1 [51]

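Let $p, q : \mathbb{T} \to \mathbb{R}$ be two regressive functions, define

$$p \oplus q = p + q + \mu p q, \qquad \ominus p = -\frac{p}{1 + \mu p}, \qquad p \ominus q = p \oplus (\ominus q).$$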

Lemma 2.1 [51]

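Assume that $p, q : \mathbb{T} \to \mathbb{R}$ are two regressive functions, then

(1) $e_0(t,s) \equiv 1$ and $e_p(t,t) \equiv 1$;

(2) $e_p(\sigma(t),s) = (1 + \mu(t)p(t))\,e_p(t,s)$;

(3) $e_p(t,s) = \dfrac{1}{e_p(s,t)} = e_{\ominus p}(s,t)$;

(4) $e_p(t,s)\,e_p(s,r) = e_p(t,r)$;

(5) $\bigl(e_{\ominus p}(t,s)\bigr)^{\Delta} = (\ominus p)(t)\,e_{\ominus p}(t,s)$;

(6) if $a, b, c \in \mathbb{T}$, then $\displaystyle\int_a^b p(t)\,e_p(c,\sigma(t))\,\Delta t = e_p(c,a) - e_p(c,b)$.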
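The properties in Lemma 2.1 can be checked numerically on $\mathbb{T} = h\mathbb{Z}$. The sketch assumes $h = 0.5$ and a hypothetical $p$ with $1 + hp > 0$, so that $p \in \mathcal{R}^+$ and real logarithms suffice. It checks properties (1) through (6) with floating-point tolerances. This confirms the identities in one concrete case; it is not a proof.

```python
import math

H = 0.5                                   # hypothetical graininess: T = H * Z
p = lambda t: 0.3 + 0.1 * math.sin(t)     # hypothetical p with 1 + H p(t) > 0 (p in R^+)

def e(q, t, s):
    """e_q(t, s) = exp(int_s^t xi_mu(q) Delta tau) on H*Z, assuming 1 + H q > 0.
    For s <= t this is prod_{tau=s}^{t-H} (1 + H q(tau)); reversing the limits inverts it."""
    n = round((t - s) / H)
    lo = s if n >= 0 else t
    total = sum(math.log(1 + H * q(lo + k * H)) for k in range(abs(n)))
    return math.exp(total if n >= 0 else -total)

def ominus(q):
    """(ominus q)(t) = -q(t) / (1 + mu(t) q(t)) with mu = H."""
    return lambda t: -q(t) / (1 + H * q(t))

sigma = lambda t: t + H
close = lambda x, y: math.isclose(x, y, rel_tol=1e-12, abs_tol=1e-12)

t, s, r = 3.0, 1.0, -1.0
assert close(e(p, t, t), 1.0)                                       # property (1)
assert close(e(p, sigma(t), s), (1 + H * p(t)) * e(p, t, s))        # property (2)
assert close(e(p, t, s), 1 / e(p, s, t))                            # property (3), first equality
assert close(e(p, t, s), e(ominus(p), s, t))                        # property (3), second equality
assert close(e(p, t, s) * e(p, s, r), e(p, t, r))                   # property (4)
lhs5 = (e(ominus(p), sigma(t), s) - e(ominus(p), t, s)) / H         # delta derivative in t
assert close(lhs5, ominus(p)(t) * e(ominus(p), t, s))               # property (5)
a, b, c = 0.0, 2.0, 1.0
lhs6 = sum(H * p(a + k * H) * e(p, c, sigma(a + k * H)) for k in range(round((b - a) / H)))
assert close(lhs6, e(p, c, a) - e(p, c, b))                         # property (6)
print("properties (1)-(6) hold on 0.5Z for this p")
```
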

Definition 2.2 [51]

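Assume that $f : \mathbb{T} \to \mathbb{R}$ is a function and let $t \in \mathbb{T}^k$. Then we define $f^{\Delta}(t)$ to be the number (provided it exists) with the property that given any $\varepsilon > 0$, there is a neighborhood $U$ of $t$ (i.e., $U = (t - \delta, t + \delta) \cap \mathbb{T}$ for some $\delta > 0$) such that

$$\bigl|f(\sigma(t)) - f(s) - f^{\Delta}(t)(\sigma(t) - s)\bigr| \le \varepsilon\,|\sigma(t) - s|$$

for all $s \in U$. We call $f^{\Delta}(t)$ the delta (or Hilger) derivative of $f$ at $t$. Moreover, we say that $f$ is delta (or Hilger) differentiable (or, in short, differentiable) on $\mathbb{T}^k$ provided $f^{\Delta}(t)$ exists for all $t \in \mathbb{T}^k$. The function $f^{\Delta} : \mathbb{T}^k \to \mathbb{R}$ is then called the (delta) derivative of $f$ on $\mathbb{T}^k$.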

Definition 2.3 [52]

A time scale $\mathbb{T}$ is called an almost periodic time scale if

$$\Pi := \{\tau \in \mathbb{R} : t \pm \tau \in \mathbb{T}, \ \forall t \in \mathbb{T}\} \neq \{0\}.$$

Definition 2.4 [52]

Let $\mathbb{T}$ be an almost periodic time scale. A function $f \in C(\mathbb{T}, \mathbb{R}^n)$ is called an almost periodic function if the ε-translation set of $f$

$$E\{\varepsilon, f\} = \{\tau \in \Pi : |f(t + \tau) - f(t)| < \varepsilon, \ \forall t \in \mathbb{T}\}$$

is a relatively dense set in $\mathbb{T}$ for all $\varepsilon > 0$; that is, for any given $\varepsilon > 0$, there exists a constant $l(\varepsilon) > 0$ such that each interval of length $l(\varepsilon)$ contains a $\tau(\varepsilon) \in E\{\varepsilon, f\}$ such that

$$|f(t + \tau) - f(t)| < \varepsilon, \qquad \forall t \in \mathbb{T}.$$

τ is called the ε-translation number of $f$ and $l(\varepsilon)$ is called the inclusion length of $E\{\varepsilon, f\}$.
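To make the ε-translation set concrete, take $\mathbb{T} = \mathbb{Z}$, so that $\Pi = \mathbb{Z}$. Consider the hypothetical function $f(t) = \sin t + \sin(\sqrt{2}\,t)$, which is almost periodic but not periodic. Since $|\sin(x+\tau) - \sin x| \le 2|\sin(\tau/2)|$, an integer $\tau$ is certified to lie in $E\{\varepsilon, f\}$ whenever $2|\sin(\tau/2)| + 2|\sin(\sqrt{2}\tau/2)| < \varepsilon$. The sketch lists such $\tau$ in a finite window. The largest gap it reports is only a window-based estimate of an inclusion length, not $l(\varepsilon)$ itself.

```python
import math

def f(t):
    """Hypothetical almost periodic (not periodic) function on T = Z."""
    return math.sin(t) + math.sin(math.sqrt(2) * t)

def translation_bound(tau):
    """Upper bound on sup_t |f(t + tau) - f(t)|, from
    |sin(x + tau) - sin(x)| = |2 cos(x + tau/2) sin(tau/2)| <= 2|sin(tau/2)|."""
    return 2 * abs(math.sin(tau / 2)) + 2 * abs(math.sin(math.sqrt(2) * tau / 2))

def epsilon_translations(eps, limit):
    """Integers tau in [0, limit] certified to lie in E{eps, f} (here Pi = Z)."""
    return [tau for tau in range(limit + 1) if translation_bound(tau) < eps]

taus = epsilon_translations(0.5, 5000)
gaps = [b - a for a, b in zip(taus, taus[1:])]
print(taus[:8], max(gaps) if gaps else None)   # certified translation numbers, largest gap in window
```
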

Definition 2.5 [52]

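Let $A(t)$ be an $n \times n$ rd-continuous matrix on $\mathbb{T}$, the linear system

$$x^{\Delta}(t) = A(t)x(t), \qquad t \in \mathbb{T} \tag{2.1}$$

is said to admit an exponential dichotomy on $\mathbb{T}$ if there exist positive constants $k$, $\alpha$, a projection $P$ and the fundamental solution matrix $X(t)$ of (2.1), satisfying

$$|X(t)PX^{-1}(\sigma(s))|_0 \le k\,e_{\ominus\alpha}(t, \sigma(s)), \qquad s, t \in \mathbb{T}, \ t \ge \sigma(s),$$

$$|X(t)(I - P)X^{-1}(\sigma(s))|_0 \le k\,e_{\ominus\alpha}(\sigma(s), t), \qquad s, t \in \mathbb{T}, \ t \le \sigma(s),$$

where $|\cdot|_0$ is a matrix norm on $\mathbb{T}$ (say, for example, if $A = (a_{ij})_{n \times n}$, then we can take $|A|_0 = \bigl(\sum_{i=1}^{n}\sum_{j=1}^{n} |a_{ij}|^2\bigr)^{1/2}$).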

Consider the following almost periodic system:

$$x^{\Delta}(t) = A(t)x(t) + f(t), \qquad t \in \mathbb{T}, \tag{2.2}$$

where $A(t)$ is an almost periodic matrix function and $f(t)$ is an almost periodic vector function.

Lemma 2.2 [52]

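If the linear system (2.1) admits an exponential dichotomy, then system (2.2) has a unique almost periodic solution

$$x(t) = \int_{-\infty}^{t} X(t)PX^{-1}(\sigma(s))f(s)\,\Delta s - \int_{t}^{+\infty} X(t)(I - P)X^{-1}(\sigma(s))f(s)\,\Delta s,$$

where $X(t)$ is the fundamental solution matrix of (2.1).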

Lemma 2.3 [53]

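Let $c_i(t)$ be an almost periodic function on $\mathbb{T}$, where $c_i(t) > 0$, $-c_i(t) \in \mathcal{R}^+$, $t \in \mathbb{T}$, $i = 1, 2, \ldots, n$, and $\min_{1 \le i \le n}\{\inf_{t \in \mathbb{T}} c_i(t)\} = \tilde{m} > 0$, then the linear system

$$x^{\Delta}(t) = \operatorname{diag}\bigl(-c_1(t), -c_2(t), \ldots, -c_n(t)\bigr)\,x(t)$$

admits an exponential dichotomy on $\mathbb{T}$.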
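Lemmas 2.2 and 2.3 can be illustrated directly in the scalar case on $\mathbb{T} = \mathbb{Z}$. With $0 < c(t) < 1$, the system $x^{\Delta} = -c(t)x$ has fundamental solution $e_{-c}(t, t_0)$, and one can take $P = I$. The almost periodic solution of $x^{\Delta} = -c(t)x + f(t)$ is then $x(t) = \sum_{s < t} e_{-c}(t, \sigma(s)) f(s)$. The sketch below uses hypothetical coefficients $c$ and $f$, and truncates the infinite sum after a finite horizon; this truncation is an assumption of the sketch, justified because the tail decays geometrically. It then checks the dynamic equation and the bound $\sup|f| / \inf c$.

```python
import math

# Hypothetical scalar data on T = Z (mu = 1). c takes values in [0.3, 0.7], so
# c > 0 and 1 - c > 0, i.e. -c is positively regressive as Lemma 2.3 requires.
def c(t): return 0.5 + 0.2 * math.cos(math.sqrt(2) * t)
def f(t): return math.sin(t) + math.cos(math.sqrt(3) * t)   # |f| <= 2

def e_minus_c(t, s):
    """e_{-c}(t, s) = prod_{tau=s}^{t-1} (1 - c(tau)) for integers s <= t."""
    out = 1.0
    for tau in range(s, t):
        out *= 1.0 - c(tau)
    return out

def x_star(t, horizon=200):
    """Lemma 2.2 formula with P = I, truncated to `horizon` terms:
    x(t) = sum_{s < t} e_{-c}(t, sigma(s)) f(s), sigma(s) = s + 1."""
    return sum(e_minus_c(t, s + 1) * f(s) for s in range(t - horizon, t))

for t in (0, 5, 17):
    lhs = x_star(t + 1) - x_star(t)               # x^Delta(t) on Z
    rhs = -c(t) * x_star(t) + f(t)
    print(t, lhs - rhs)                           # residual: truncation and rounding error only
```
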

One can easily prove the following.

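Lemma 2.4 Suppose that $f(t)$ is an rd-continuous function and $c(t)$ is a positive rd-continuous function satisfying $-c(t) \in \mathcal{R}^+$. Let

$$g(t) = \int_{t_0}^{t} e_{-c}(t, \sigma(s)) f(s)\,\Delta s,$$

where $t_0 \in \mathbb{T}$, then

$$g^{\Delta}(t) = f(t) - c(t)\int_{t_0}^{t} e_{-c}(t, \sigma(s)) f(s)\,\Delta s.$$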
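A quick numerical check of Lemma 2.4 on $\mathbb{T} = h\mathbb{Z}$ follows. The values of $h$ and $t_0$ and the functions $c$ and $f$ are hypothetical choices that satisfy the lemma's hypotheses. Here $e_{-c}(t,s)$ is the product of $1 - h\,c(\tau)$, and $g^{\Delta}$ is the forward difference quotient.

```python
import math

H = 0.25      # hypothetical graininess: T = H*Z
T0 = 0.0      # hypothetical initial point t_0

def c(t): return 0.5 + 0.2 * math.sin(t)   # positive, and 1 - H*c(t) > 0, so -c is in R^+
def f(t): return math.cos(2.0 * t)         # any rd-continuous f works on H*Z

def e_minus_c(n_t, n_s):
    """e_{-c}(t, s) = prod_{tau = s}^{t - H} (1 - H c(tau)) for t = T0 + n_t*H >= s = T0 + n_s*H."""
    out = 1.0
    for k in range(n_s, n_t):
        out *= 1.0 - H * c(T0 + k * H)
    return out

def g(n_t):
    """g(t) = int_{t0}^{t} e_{-c}(t, sigma(s)) f(s) Delta s with sigma(s) = s + H."""
    return sum(H * e_minus_c(n_t, k + 1) * f(T0 + k * H) for k in range(n_t))

def g_delta(n_t):
    """Delta derivative of g at t = T0 + n_t*H: (g(sigma(t)) - g(t)) / mu(t)."""
    return (g(n_t + 1) - g(n_t)) / H

for n in (0, 3, 10, 40):
    t = T0 + n * H
    print(n, g_delta(n) - (f(t) - c(t) * g(n)))   # Lemma 2.4 predicts 0 up to rounding
```

Algebraically, $g(t + h) = h f(t) + (1 - h c(t))\,g(t)$ on $h\mathbb{Z}$, which is exactly the claimed identity.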

3 Existence of almost periodic solutions

Let , and

For , if we define induced modulus , where

and , then  is a Banach space.


Theorem 3.1 Assume that (H1)-(H3) and

(H4)

hold, then there exists exactly one almost periodic solution of system (1.1) in the region , where

Proof Rewrite (1.1) in the form

For any , we consider the following system:

Since , it follows from Lemma 2.2 and Lemma 2.3 that system (3.1) has a unique almost periodic solution which can be expressed as follows:

where

Now, we define a mapping by , .

By the definition of , we have

Hence, for any , one has

Next, we will show that . In fact, for any , we have

and

Thus, we obtain

which implies , so the mapping T is a self-mapping from to .

Finally, we prove that T is a contraction mapping. Taking , we have that

and

Since , T is a contraction mapping. Thus, there exists a unique fixed point such that . Therefore, system (1.1) has a unique almost periodic solution in the region . This completes the proof. □
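The contraction argument of Theorem 3.1 can be mimicked numerically on a much simpler, hypothetical model. The model is a scalar equation on $\mathbb{T} = \mathbb{Z}$ with $N$-periodic coefficients (periodic functions are almost periodic) and a single discrete delay. It has neither the leakage delay nor the neutral term of (1.1). The operator $T$ sends a candidate $\varphi$ to the bounded solution of $x^{\Delta} = -c(t)x + a(t)\tanh(\varphi(t-1)) + I(t)$, in the spirit of Lemma 2.2 with $P = I$. Picard iteration then converges, because $T$ is a contraction with constant at most $\sup|a| / \inf c = 0.4$. The coefficients, `N` and `HORIZON` are all assumptions of this sketch.

```python
import math

N = 10                      # hypothetical common period of the coefficients on T = Z
HORIZON = 200               # truncation of the sum over s < t
TWO_PI = 2 * math.pi

def c(t): return 0.6 + 0.1 * math.cos(TWO_PI * t / N)   # c in [0.5, 0.7]
def a(t): return 0.2 * math.sin(TWO_PI * t / N)         # |a| <= 0.2
def I(t): return math.cos(TWO_PI * t / N)

def F(phi, s):
    """Nonlinear part along the candidate phi (stored over one period): a(s) tanh(phi(s-1)) + I(s)."""
    return a(s) * math.tanh(phi[(s - 1) % N]) + I(s)

def apply_T(phi):
    """(T phi)(t) = sum_{s < t} e_{-c}(t, sigma(s)) F(phi, s), the bounded solution of
    x^Delta = -c x + F(phi, .) on Z, truncated to HORIZON terms."""
    out = []
    for t in range(N):
        total, weight = 0.0, 1.0            # weight = e_{-c}(t, sigma(s)) for s = t - 1 - j
        for j in range(HORIZON):
            s = t - 1 - j
            total += weight * F(phi, s)
            weight *= 1.0 - c(s)            # extend the product down to tau = s
        out.append(total)
    return out

def sup_dist(u, v):
    return max(abs(x - y) for x, y in zip(u, v))

# Picard iteration; the contraction constant is at most sup|a| * Lip(tanh) / inf c = 0.4.
phi = [0.0] * N
for _ in range(100):
    nxt = apply_T(phi)
    done = sup_dist(nxt, phi) < 1e-13
    phi = nxt
    if done:
        break

residual = max(abs(phi[(t + 1) % N] - phi[t]
                   - (-c(t) * phi[t] + a(t) * math.tanh(phi[(t - 1) % N]) + I(t)))
               for t in range(N))
print(residual)   # tiny: the fixed point solves the periodic dynamic equation
```
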

4 Exponential stability of the almost periodic solution

Definition 4.1 The almost periodic solution of system (1.1) with initial value is said to be globally exponentially stable if there exist positive constants λ with and such that every solution of system (1.1) with initial value satisfies

where

and .

Theorem 4.1 Assume that (H1)-(H4) hold, then system (1.1) has a unique almost periodic solution which is globally exponentially stable.

Proof From Theorem 3.1, we see that system (1.1) has at least one almost periodic solution . Suppose that is an arbitrary solution. Set , , then it follows from system (1.1) that

where and for ,

From (H2) we have that for ,

and

The initial condition of (4.1) is

Let and be defined by

and

By (H3), for , we get

and

Since , are continuous on and , , as , there exist such that and for , for , .

By choosing , we have , , . So, we can choose a positive constant such that

which implies that

and

where . Let

by (H3) we have . Thus

Rewrite (4.1) in the form

Multiplying both sides of (4.4) by and integrating over , we get

It is easy to see that

We claim that

To prove (4.6), we first show that for any , the following inequality holds:

If (4.7) is not true, then there must be some and some such that

and

Therefore, there must exist a constant such that

and

By (4.5), (4.8), (4.9) and (H1)-(H3), we obtain

By Lemma 2.4 and (4.5), we have, for ,

Thus, it follows from (4.8), (4.9) and (4.11) that

In view of (4.10) and (4.12), we get

which contradicts (4.8), and so (4.7) holds. Letting , we see that (4.6) holds. Hence, the almost periodic solution of system (1.1) is globally exponentially stable. This completes the proof. □
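The exponential-stability estimate can be observed on a hypothetical scalar model on $\mathbb{T} = \mathbb{Z}$ with almost periodic coefficients; the model has no leakage delay and no neutral term. The sketch assumes that the envelope in Definition 4.1 takes the form $M e_{\ominus\lambda}(t, 0)\,\|\varphi - \psi\|$, which on $\mathbb{Z}$ equals $M(1+\lambda)^{-t}\|\varphi - \psi\|$. For this toy model the constants come from our own elementary estimate, not from the paper's choice of λ. Here $c \ge 0.5$ and $|a| \le 0.2$. Let $w(t) = \max(|z(t)|, |z(t-1)|)$, where $z = x - y$. Then $w(t+2) \le 0.7\,w(t)$, so $M = 1/\sqrt{0.7}$ and $1 + \lambda = 1/\sqrt{0.7}$ work.

```python
import math

def c(t): return 0.6 + 0.1 * math.cos(math.sqrt(2) * t)   # c in [0.5, 0.7]
def a(t): return 0.2 * math.sin(t)                         # |a| <= 0.2
def I(t): return math.cos(math.sqrt(3) * t)

def simulate(hist, steps):
    """x(t+1) = x(t) - c(t) x(t) + a(t) tanh(x(t-1)) + I(t) on Z; hist = (x(-1), x(0))."""
    x = list(hist)                        # x[-1] is the current x(t), x[-2] is x(t-1)
    for t in range(steps):
        x.append(x[-1] - c(t) * x[-1] + a(t) * math.tanh(x[-2]) + I(t))
    return x[1:]                          # x(0), x(1), ..., x(steps)

steps = 80
x = simulate((3.0, -2.0), steps)
y = simulate((-1.0, 4.0), steps)
init_dist = max(abs(3.0 - (-1.0)), abs(-2.0 - 4.0))       # ||phi - psi|| over {-1, 0}
M = 1 / math.sqrt(0.7)
lam = 1 / math.sqrt(0.7) - 1                                # (1 + lam)^2 = 1 / 0.7
ok = all(abs(x[t] - y[t]) <= M * (1 + lam) ** (-t) * init_dist + 1e-12
         for t in range(steps + 1))
print(ok)   # True
```
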

Remark 4.1 When , , , Theorem 3.1 and Theorem 4.1 reduce to Theorem 2.3 and Theorem 3.1 in [37], respectively.

Remark 4.2 According to Theorem 3.1 and Theorem 4.1, the existence and exponential stability of almost periodic solutions of system (1.1) depend only on the time delays (the delays in the leakage term) and not on the time delays and .

5 An example

In this section, we give an example to illustrate the feasibility and effectiveness of our results obtained in Sections 3 and 4.

Example 5.1 Let . Consider the following neutral Hopfield neural network on the time scale $\mathbb{T}$:

where and the coefficients are as follows:

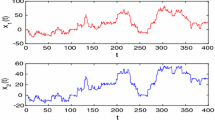

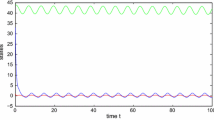

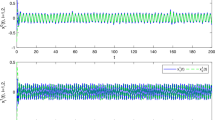

Take , , () to be arbitrary almost periodic functions. If , then and if , then . A direct calculation shows that, in both cases, , . By Theorem 3.1 and Theorem 4.1, system (5.1) has a unique almost periodic solution that is globally exponentially stable. This shows that the almost periodicity of system (5.1) does not depend on the time scale $\mathbb{T}$. In particular, the continuous-time neural network and the discrete-time analogue described by (5.1) exhibit the same dynamical behavior (see Figures 1-4).
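The coefficients of (5.1) are not reproduced in this copy, so the sketch below uses a hypothetical scalar model instead. It illustrates why a single time-scale formulation covers both settings. On $\mathbb{T} = h\mathbb{Z}$, the dynamic equation $x^{\Delta} = -c(t)x + a(t)\tanh(x(t-1)) + I(t)$ is exactly the forward Euler recursion with step $h$. Thus $h = 1$ gives the discrete-time system, and small $h$ approximates the continuous-time one. On both time scales, two solutions with different constant histories approach each other. The coefficients, the delay and the step sizes are all assumptions.

```python
import math

# Hypothetical almost periodic coefficients (not the coefficients of Example 5.1).
def c(t): return 0.6 + 0.1 * math.cos(math.sqrt(2) * t)   # c in [0.5, 0.7]
def a(t): return 0.2 * math.sin(t)                         # |a| <= 0.2
def I(t): return math.cos(math.sqrt(3) * t)

def simulate(h, x0, t_end, delay=1.0):
    """Solve x^Delta(t) = -c(t) x(t) + a(t) tanh(x(t - delay)) + I(t) on T = hZ, t in [0, t_end],
    with constant history x(s) = x0 on [-delay, 0]; on hZ this is forward Euler with step h."""
    d = round(delay / h)                    # delay in steps; assumes delay / h is an integer
    x = [x0] * (d + 1)                      # x(-delay), ..., x(0)
    for k in range(round(t_end / h)):
        t = k * h
        x.append(x[-1] + h * (-c(t) * x[-1] + a(t) * math.tanh(x[-1 - d]) + I(t)))
    return x[d:]                            # x(0), x(h), ..., x(t_end)

gaps = {}
for h in (1.0, 0.1):
    x = simulate(h, 2.0, 100.0)
    y = simulate(h, -2.0, 100.0)
    gaps[h] = abs(x[-1] - y[-1])
    print(h, gaps[h])   # the gap between the two solutions shrinks on both time scales
```

The step size changes the trajectories themselves, but not the qualitative picture, which is the attraction toward a single bounded solution.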

6 Conclusion

In this paper, a class of neutral delay Hopfield neural networks with time-varying delays in the leakage term on time scales is investigated. For this model, we have given sufficient conditions ensuring the existence and global exponential stability of almost periodic solutions by using the exponential dichotomy of linear dynamic equations on time scales, Banach's fixed point theorem and the theory of calculus on time scales. These results are new and complement previously known results. Furthermore, a simple example is given to demonstrate the effectiveness of our results; the example also shows that the continuous-time neural network and its discrete-time analogue have the same dynamical behavior.

References

Gu HB, Jiang HJ, Teng ZD: Existence and globally exponential stability of periodic solution of BAM neural networks with impulses and recent-history distributed delays. Neurocomputing 2008, 71: 813–822. 10.1016/j.neucom.2007.03.007

Huang CX, Cao JD: Almost sure exponential stability of stochastic cellular neural networks with unbounded distributed delays. Neurocomputing 2009, 72: 3352–3356. 10.1016/j.neucom.2008.12.030

Kwon OM, Park JH: Delay-dependent stability for uncertain cellular neural networks with discrete and distribute time-varying delays. J. Franklin Inst. 2008, 345: 766–778. 10.1016/j.jfranklin.2008.04.011

Li YK, Yang L: Anti-periodic solutions for Cohen-Grossberg neural networks with bounded and unbounded delays. Commun. Nonlinear Sci. Numer. Simul. 2009, 14: 3134–3140. 10.1016/j.cnsns.2008.12.002

Li YK: Global exponential stability of BAM neural networks with delays and impulses. Chaos Solitons Fractals 2005, 24: 279–285. 10.1016/S0960-0779(04)00561-2

Li CZ, Li YK, Ye Y: Exponential stability of fuzzy Cohen-Grossberg neural networks with time delays and impulsive effects. Commun. Nonlinear Sci. Numer. Simul. 2010, 15: 3599–3606. 10.1016/j.cnsns.2010.01.001

Cai Z, Huang L, Guo Z, Chen X: On the periodic dynamics of a class of time-varying delayed neural networks via differential inclusions. Neural Netw. 2012, 33: 97–113.

Akhmet MU, Yılmaz E: Global exponential stability of neural networks with non-smooth and impact activations. Neural Netw. 2012, 34: 18–27.

Kwon OM, Park JH, Lee SM, Cha EJ: New results on exponential passivity of neural networks with time-varying delays. Nonlinear Anal., Real World Appl. 2012, 13: 1593–1599. 10.1016/j.nonrwa.2011.11.017

Liu PC, Yi FQ, Guo Q, Yang J, Wu W: Analysis on global exponential robust stability of reaction-diffusion neural networks with S-type distributed delays. Physica D 2008, 237: 475–485. 10.1016/j.physd.2007.09.014

Mathiyalagan K, Sakthivel R, Marshal Anthoni S: Exponential stability result for discrete-time stochastic fuzzy uncertain neural networks. Phys. Lett. A 2012, 376: 901–912. 10.1016/j.physleta.2012.01.038

Mohamad S, Gopalsamy K, Akca H: Exponential stability of artificial neural networks with distributed delays and large impulses. Nonlinear Anal., Real World Appl. 2008, 9: 872–888. 10.1016/j.nonrwa.2007.01.011

Sakthivel R, Mathiyalagan K, Marshal Anthoni S: Design of a passification controller for uncertain fuzzy Hopfield neural networks with time-varying delays. Phys. Scr. 2011, 84: Article ID 045024

Zhou DM, Zhang LM, Cao JD: On global exponential stability of cellular neural networks with Lipschitz-continuous activation function and variable delays. Appl. Math. Comput. 2004, 151(2):379–392. 10.1016/S0096-3003(03)00347-3

Li YK, Fan XL: Existence and globally exponential stability of almost periodic solution for Cohen-Grossberg BAM neural networks with variable coefficients. Appl. Math. Model. 2009, 33: 2114–2120. 10.1016/j.apm.2008.05.013

Li YK, Liu CC, Zhu LF: Global exponential stability of periodic solution for shunting inhibitory CNNs with delays. Phys. Lett. A 2005, 337: 46–54. 10.1016/j.physleta.2005.01.008

Li YK: Global stability and existence of periodic solutions of discrete delayed cellular neural networks. Phys. Lett. A 2004, 333: 51–61. 10.1016/j.physleta.2004.10.022

Li YK, Zhang TW, Xing ZW: The existence of nonzero almost periodic solution for Cohen-Grossberg neural networks with continuously distributed delays and impulses. Neurocomputing 2010, 73: 3105–3113. 10.1016/j.neucom.2010.06.012

Li YK, Zhao KH: Robust stability of delayed reaction-diffusion recurrent neural networks with Dirichlet boundary conditions on time scales. Neurocomputing 2011, 74: 1632–1637. 10.1016/j.neucom.2011.01.006

Song QK, Cao JD: Stability analysis of Cohen-Grossberg neural network with both time-varying and continuously distributed delays. J. Comput. Appl. Math. 2006, 197: 188–203. 10.1016/j.cam.2005.10.029

Liu BW: Global exponential stability for BAM neural networks with time-varying delays in the leakage terms. Nonlinear Anal., Real World Appl. 2013, 14: 559–566. 10.1016/j.nonrwa.2012.07.016

Balasubramaniam P, Kalpana M, Rakkiyappan R: Existence and global asymptotic stability of fuzzy cellular neural networks with time delay in the leakage term and unbounded distributed delays. Circuits Syst. Signal Process. 2011, 30: 1595–1616. 10.1007/s00034-011-9288-7

Li X, Cao J: Delay-dependent stability of neural networks of neutral type with time delay in the leakage term. Nonlinearity 2010, 23: 1709–1726. 10.1088/0951-7715/23/7/010

Li X, Rakkiyappan R, Balasubramaniam P: Existence and global stability analysis of equilibrium of fuzzy cellular neural networks with time delay in the leakage term under impulsive perturbations. J. Franklin Inst. 2011, 348: 135–155. 10.1016/j.jfranklin.2010.10.009

Balasubramaniam P, Nagamani G, Rakkiyappan R: Passivity analysis for neural networks of neutral type with Markovian jumping parameters and time delay in the leakage term. Commun. Nonlinear Sci. Numer. Simul. 2011, 16: 4422–4437. 10.1016/j.cnsns.2011.03.028

Lakshmanan S, Park JH, Jung HY, Balasubramaniam P: Design of state estimator for neural networks with leakage, discrete and distributed delays. Appl. Math. Comput. 2012, 218: 11297–11310. 10.1016/j.amc.2012.05.022

Balasubramaniam P, Vembarasan V, Rakkiyappan R: Leakage delays in T-S fuzzy cellular neural networks. Neural Process. Lett. 2011, 33: 111–136. 10.1007/s11063-010-9168-3

Li X, Fu X, Balasubramaniam P, Rakkiyappan R: Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal., Real World Appl. 2010, 11: 4092–4108. 10.1016/j.nonrwa.2010.03.014

Gopalsamy K: Leakage delays in BAM. J. Math. Anal. Appl. 2007, 325: 1117–1132. 10.1016/j.jmaa.2006.02.039

Li C, Huang T: On the stability of nonlinear systems with leakage delay. J. Franklin Inst. 2009, 346: 366–377. 10.1016/j.jfranklin.2008.12.001

Peng S: Global attractive periodic solutions of BAM neural networks with continuously distributed delays in the leakage terms. Nonlinear Anal., Real World Appl. 2010, 11: 2141–2151. 10.1016/j.nonrwa.2009.06.004

Balasubramaniam P, Kalpana M, Rakkiyappan R: State estimation for fuzzy cellular neural networks with time delay in the leakage term, discrete and unbounded distributed delays. Comput. Math. Appl. 2011, 62: 3959–3972.

Li YK, Li YQ: Existence and exponential stability of almost periodic solution for neutral delay BAM neural networks with time-varying delays in leakage terms. J. Franklin Inst. 2013, 350: 2808–2825. 10.1016/j.jfranklin.2013.07.005

Chen ZB: A shunting inhibitory cellular neural network with leakage delays and continuously distributed delays of neutral type. Neural Comput. Appl. 2013, 23: 2429–2434. 10.1007/s00521-012-1200-2

Zhao CH, Wang ZY: Exponential convergence of a SICNN with leakage delays and continuously distributed delays of neutral type. Neural Process. Lett. 2014. 10.1007/s11063-014-9341-1

Li YK, Zhao L, Chen XR: Existence of periodic solutions for neutral type cellular neural networks with delays. Appl. Math. Model. 2012, 36: 1173–1183. 10.1016/j.apm.2011.07.090

Bai C: Global stability of almost periodic solutions of Hopfield neural networks with neutral time-varying delays. Appl. Math. Comput. 2008, 203: 72–79. 10.1016/j.amc.2008.04.002

Xiao B: Existence and uniqueness of almost periodic solutions for a class of Hopfield neural networks with neutral delays. Appl. Math. Lett. 2009, 22: 528–533. 10.1016/j.aml.2008.06.025

Park JH, Park CH, Kwon OM, Lee SM: A new stability criterion for bidirectional associative memory neural networks of neutral-type. Appl. Math. Comput. 2008, 199: 716–722. 10.1016/j.amc.2007.10.032

Rakkiyappan R, Balasubramaniam P: New global exponential stability results for neutral type neural networks with distributed time delays. Neurocomputing 2008, 71: 1039–1045. 10.1016/j.neucom.2007.11.002

Rakkiyappan R, Balasubramaniam P: LMI conditions for global asymptotic stability results for neutral-type neural networks with distributed time delays. Appl. Math. Comput. 2008, 204: 317–324. 10.1016/j.amc.2008.06.049

Zhang Z, Liu W, Zhou D: Global asymptotic stability to a generalized Cohen-Grossberg BAM neural networks of neutral type delays. Neural Netw. 2012, 25: 94–105.

Liu PL: Improved delay-dependent stability of neutral type neural networks with distributed delays. ISA Trans. 2013, 52: 717–724. 10.1016/j.isatra.2013.06.012

Li YK, Chen XR, Zhao L: Stability and existence of periodic solutions to delayed Cohen-Grossberg BAM neural networks with impulses on time scales. Neurocomputing 2009, 72: 1621–1630. 10.1016/j.neucom.2008.08.010

Li YK, Shu JY: Anti-periodic solutions to impulsive shunting inhibitory cellular neural networks with distributed delays on time scales. Commun. Nonlinear Sci. Numer. Simul. 2011, 16: 3326–3336. 10.1016/j.cnsns.2010.11.004

Li YK, Wang C: Almost periodic solutions of shunting inhibitory cellular neural networks on time scales. Commun. Nonlinear Sci. Numer. Simul. 2012, 17: 3258–3266. 10.1016/j.cnsns.2011.11.034

Liang T, Yang YQ, Liu Y, Li L: Existence and global exponential stability of almost periodic solutions to Cohen-Grossberg neural networks with distributed delays on time scales. Neurocomputing 2014, 123: 207–215.

Zhang ZQ, Liu KY: Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw. 2011, 24: 427–439. 10.1016/j.neunet.2011.02.001

Li YK, Zhang TW: Global exponential stability of fuzzy interval delayed neural networks with impulses on time scales. Int. J. Neural Syst. 2009, 19(6):449–456. 10.1142/S0129065709002142

Li YK, Gao S: Global exponential stability for impulsive BAM neural networks with distributed delays on time scales. Neural Process. Lett. 2010, 31: 65–91. 10.1007/s11063-009-9127-z

Bohner M, Peterson A: Advances in Dynamic Equations on Time Scales. Birkhäuser, Boston; 2003.

Li YK, Wang C: Uniformly almost periodic functions and almost periodic solutions to dynamic equations on time scales. Abstr. Appl. Anal. 2011, 2011: Article ID 341520

Li YK, Wang C: Almost periodic functions on time scales and applications. Discrete Dyn. Nat. Soc. 2011, 2011: Article ID 727068

Acknowledgements

This study was supported by the National Natural Sciences Foundation of People’s Republic of China under Grant 11361072.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the manuscript and typed, read and approved the final manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Li, L., Li, Y. & Yang, L. Almost periodic solutions for neutral delay Hopfield neural networks with time-varying delays in the leakage term on time scales. Adv Differ Equ 2014, 178 (2014). https://doi.org/10.1186/1687-1847-2014-178


DOI: https://doi.org/10.1186/1687-1847-2014-178