Abstract

Stochastic models play an important role in modeling and analyzing epidemic diseases in small populations. In this article, we study the generation of stochastic models for the susceptible-infective-susceptible epidemic model. We use the method of separation of variables to solve the governing partial differential equation, and a newly developed modified probability generating function (PGF) of a random process, which includes a random catastrophe, to solve the ordinary differential equations obtained from the partial differential equation. The results show that the probability function is highly sensitive to the parameters μ, β and γ.

1 Deterministic susceptible-infective-susceptible model

Figure 1 shows a deterministic susceptible-infective-susceptible model for an epidemic disease. In this figure, S is the susceptible population, I is the infective population, μ is the natural death rate, and γ is the removal rate, which is constant. Note that S and I are nonnegative because they represent numbers of people. The infection rate, λ, depends on the number of partners per individual per unit time and on the transmission probability per partner. In this system, susceptible individuals in class S first become infected; infected individuals in class I then become susceptible again. The following system of ODEs describes this susceptible-infective-susceptible model [1]

Figure 1 illustrates the system (1). This system is nonlinear due to the form of the infection rate λ.
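The qualitative behavior of a deterministic susceptible-infective-susceptible model can be explored numerically. The sketch below is only an illustration under stated assumptions: it uses a textbook SIS form without natural deaths, dS/dt = -λS + γI and dI/dt = λS - γI, with an assumed infection rate λ = βI/N. This is not necessarily system (1) of this article, and the function name and parameter values are hypothetical.

```python
def simulate_sis(s0, i0, beta, gamma, t_end, dt=0.01):
    """Integrate an ASSUMED textbook SIS system with classical RK4.

    dS/dt = -lam * S + gamma * I
    dI/dt =  lam * S - gamma * I,   lam = beta * I / N  (assumption)
    """
    n = s0 + i0  # total population, constant in this assumed form

    def rhs(s, i):
        lam = beta * i / n        # assumed infection rate
        new_infections = lam * s  # flow S -> I
        recoveries = gamma * i    # flow I -> S
        return (-new_infections + recoveries, new_infections - recoveries)

    s, i = float(s0), float(i0)
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(s, i)
        k2 = rhs(s + 0.5 * dt * k1[0], i + 0.5 * dt * k1[1])
        k3 = rhs(s + 0.5 * dt * k2[0], i + 0.5 * dt * k2[1])
        k4 = rhs(s + dt * k3[0], i + dt * k3[1])
        s += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        i += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return s, i


# Hypothetical parameter values chosen only for illustration.
s_final, i_final = simulate_sis(s0=99, i0=1, beta=0.5, gamma=0.2, t_end=200)
```

For this assumed form with β > γ, the infective population settles at the endemic level N(1 - γ/β), which gives a simple check on the integration.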

2 Generation stochastic susceptible-infective-susceptible model

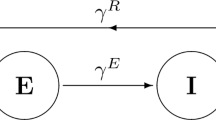

In this section, we present the generation stochastic [2–5] susceptible-infective-susceptible model. The stochastic model is similar to the deterministic one; however, an exact function can be found for the deterministic model, whereas no exact function can be obtained for the stochastic model. Figure 2 shows the state diagram for the stochastic [2–5] susceptible-infective-susceptible model [1, 6–10].
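Because the stochastic model has no exact closed-form solution, sample paths are often examined by simulation. The following is a minimal sketch of a Gillespie-type simulation of an SIS chain, assuming a closed population of size n, an infection rate βi(n - i)/n and a recovery rate γi. These rates, the function name and the parameter values are assumptions for illustration and are not taken from Figure 2 or Table 1.

```python
import random


def gillespie_sis(n, i0, beta, gamma, t_end, seed=0):
    """Simulate one path of an ASSUMED SIS continuous-time Markov chain.

    State: i = number of infectives (n - i are susceptible).
    i -> i + 1 at rate beta * i * (n - i) / n   (assumed)
    i -> i - 1 at rate gamma * i                (assumed)
    """
    rng = random.Random(seed)
    t, i = 0.0, i0
    times, infected = [t], [i]
    while i > 0:
        rate_inf = beta * i * (n - i) / n
        rate_rec = gamma * i
        total = rate_inf + rate_rec
        if total == 0.0:
            break  # no event can occur
        t += rng.expovariate(total)  # waiting time to the next event
        if t > t_end:
            break
        # Choose the event in proportion to its rate.
        if rng.random() * total < rate_inf:
            i += 1
        else:
            i -= 1
        times.append(t)
        infected.append(i)
    return times, infected


# Hypothetical values chosen only for illustration.
times, infected = gillespie_sis(n=50, i0=5, beta=0.5, gamma=0.2, t_end=20.0)
```

Averaging many such paths gives an empirical estimate of the probability function that can be compared with an analytical approximation.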

At , if m is infective and n is susceptible, namely , then is the probability function in the time t and stage i. Here, our goal is to determine . Table 1 shows the transition diagram for this model. To determine ’s, we should create the Kolmogorov equations. From Figure 2, we have

and

Now, to produce the forward Kolmogorov equations, we have

So,

then

Taking the limit on both sides of Eq. (5) as the time increment tends to zero, we have

Therefore, the forward Kolmogorov equations for this model will be as follows:
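Forward Kolmogorov equations of this type can also be integrated numerically. The sketch below is not Eq. (7) itself: it assumes a birth-death chain on the number of infectives i = 0, ..., n with birth rate βi(n - i)/n and death rate γi, and advances the probabilities with explicit Euler steps. The rates, names and values are assumptions for illustration.

```python
def sis_master_equation(n, i0, beta, gamma, t_end, dt=1e-3):
    """Integrate dp_i/dt for an ASSUMED SIS birth-death chain.

    dp_i/dt = birth[i-1] * p[i-1] + death[i+1] * p[i+1]
              - (birth[i] + death[i]) * p[i]
    """
    birth = [beta * i * (n - i) / n for i in range(n + 1)]  # assumed
    death = [gamma * i for i in range(n + 1)]               # assumed
    p = [0.0] * (n + 1)
    p[i0] = 1.0  # start with exactly i0 infectives
    for _ in range(int(round(t_end / dt))):
        new_p = p[:]
        for i in range(n + 1):
            inflow = 0.0
            if i > 0:
                inflow += birth[i - 1] * p[i - 1]
            if i < n:
                inflow += death[i + 1] * p[i + 1]
            outflow = (birth[i] + death[i]) * p[i]
            new_p[i] += dt * (inflow - outflow)
        p = new_p
    return p


# Hypothetical values chosen only for illustration.
p_final = sis_master_equation(n=20, i0=1, beta=0.5, gamma=0.2, t_end=5.0)
```

The explicit Euler step keeps the probabilities nonnegative only when dt times the largest total rate is below one, so dt should be reduced for larger populations or rates.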

The probability function is found from Eq. (7). It can also be obtained from probability generating functions (PGFs) and partial differential equations (PDEs). The probability generating function can be written as
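For orientation, the generic definition of a probability generating function for a process with state probabilities p_i(t) is recalled here. The symbols G, x and p_i are assumed notation for illustration and may differ from those used in Eq. (8).

```latex
G(x,t) = \sum_{i} p_i(t)\, x^{i}, \qquad
\frac{\partial G}{\partial t}(x,t) = \sum_{i} \frac{dp_i(t)}{dt}\, x^{i}, \qquad
G(1,t) = \sum_{i} p_i(t) = 1 .
```

Substituting the forward Kolmogorov equations for dp_i/dt into the second relation is what turns the infinite system of ODEs into a single PDE for G.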

Now, the partial derivative of with respect to t will be , so one can write the partial derivative as follows:

Having simplified Eq. (9), we can write

The method of separation of variables is employed to solve Eq. (10). If , then we have

Two sides of Eq. (11) are equal, so
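The separation step can be sketched generically. The lines below assume a PDE of the form ∂G/∂t = 𝓛G, where 𝓛 is a linear differential operator acting on x only; this form, the product ansatz G = X(x)T(t) and the separation constant k are assumptions for illustration and are not Eq. (10) itself.

```latex
G(x,t) = X(x)\,T(t)
\;\Longrightarrow\;
X(x)\,T'(t) = T(t)\,\mathcal{L}X(x)
\;\Longrightarrow\;
\frac{T'(t)}{T(t)} = \frac{\mathcal{L}X(x)}{X(x)} = -k ,
\qquad
T(t) = T(0)\,e^{-kt}, \quad \mathcal{L}X = -k\,X .
```

The left-hand ratio depends only on t and the right-hand ratio only on x, so both must equal the same constant, which yields one ODE in t and one in x.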

To include a random catastrophe presented by Gani and Swift in 2006 [11], we develop the modified probability generating function (PGF) of a random process to solve Eq. (10) as follows:

with , here is the answer of Eq. (10) when , . So, we can put , with in Eq. (12)

This equation was solved by Bailey in 1963 [12]. Solving Eq. (14) with Maple, we have

where is a hypergeometric function.

Notation 1 The standard hypergeometric function is as follows:

where with is the Pochhammer symbol. The derivatives of are given by
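For reference, and assuming the Gauss hypergeometric function is the one intended here, its standard series definition, the Pochhammer symbol and the derivative formulas read as follows. The parameter names a, b, c and the argument z are generic and may not match the notation of Eqs. (15) and (16).

```latex
{}_2F_1(a,b;c;z) = \sum_{n=0}^{\infty} \frac{(a)_n\,(b)_n}{(c)_n}\,\frac{z^{n}}{n!},
\qquad |z| < 1,
\\[4pt]
(a)_n = a(a+1)\cdots(a+n-1), \qquad (a)_0 = 1,
\\[4pt]
\frac{d^{k}}{dz^{k}}\,{}_2F_1(a,b;c;z)
  = \frac{(a)_k\,(b)_k}{(c)_k}\,{}_2F_1(a+k,\,b+k;\,c+k;\,z).
```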

Also, from equation , we have . So,

In Eq. (17) we can take ; , where ϵ is a small parameter. Then, from Eq. (17) and Eq. (13), one can write

Thus,

where

Now, to find , one can calculate as follows:

So,

Hence, and are obtained as follows:

Therefore,

with

3 Numerical results

Some numerical examples illustrate the behavior of the probability function with the following parameters: ; ; ; .

Figure 3(a) and (b) show the behavior of the probability functions, and with and when and .

Figure 3(a) shows that the probability function increases rapidly as t and β increase, whereas in Figure 3(b) it decreases rapidly.

Figure 4(a) and (b) show the behavior of the probability functions, and with and when and . Figure 4(a) shows that as t increases, the probability function increases, but with an increase in μ, the probability function decreases. Figure 4(b) shows that as t increases, the probability function decreases, but with an increase in μ, the probability function increases.

Figure 5(a) and (b) display the probability functions, and with and . From Figure 5(a) when and , the probability function is nearly zero, but for as t increases, slowly increases, and as γ increases, sharply increases. In Figure 5(b), is almost constant for , but with a decrease in γ from 1 to 0, increases; also, Figure 5(b) shows the highest value of when and .

Figure 6(a) and (b) show the probability functions, and with and . Figure 6(a) depicts that for , as μ increases from 0 to 1, the probability function increases. Figure 6(b) shows that for , with an increase in β and μ, the probability function increases.

Figure 7(a) and (b) illustrate the probability functions, and with and . Figure 7(a) shows that the probability function decreases with an increase in μ, but inversely it increases with an increase in γ. In Figure 7(a) we observe a separation which means that in the probability function we have . Figure 7(b) depicts that the probability function increases when μ increases, although it decreases with an increase in γ.

4 Conclusions

We have presented the generation of a stochastic model for the susceptible-infective-susceptible model. The method of separation of variables was applied to the partial differential equation of this generation, yielding two ordinary differential equations, one of which depends on the variable x. To solve these equations, we used the developed modified probability generating function (PGF) of a random process, which accounts for a random catastrophe. Numerical results illustrated the behavior of the probability function and its sensitivity to the parameters μ, β and γ.

References

[1] Hethcote HW: The mathematics of infectious diseases. SIAM Rev. 2000, 42: 599. 10.1137/S0036144500371907

[2] Tomasz RB, Jacek J, Mariusz N: Study of dependence for some stochastic processes: symbolic Markov copulae. Stoch. Process. Appl. 2012, 122: 930–951. 10.1016/j.spa.2011.11.001

[3] Nathalie E: Stochastic order for alpha-permanental point processes. Stoch. Process. Appl. 2012, 122: 952. 10.1016/j.spa.2011.11.006

[4] Said H, Jianfeng Z: Switching problem and related system of reflected backward SDEs. Stoch. Process. Appl. 2010, 120: 403. 10.1016/j.spa.2010.01.003

[5] Richard AD, Li S: Functional convergence of stochastic integrals with application to statistical inference. Stoch. Process. Appl. 2012, 122: 725. 10.1016/j.spa.2011.10.007

[6] Seddighi Chaharborj S, Abu Bakar MR, Fudziah I: Study of stochastic systems for epidemic disease models. Int. J. Mod. Phys.: Conf. Ser. 2012, 9: 373–379.

[7] Seddighi Chaharborj S, Gheisari Y: Study of reproductive number in epidemic disease modeling. Adv. Stud. Biol. 2011, 3: 267–271.

[8] Seddighi Chaharborj S, Gheisari Y: Study of reproductive number in SIR-SI model. Adv. Stud. Biol. 2011, 3: 309–317.

[9] Seddighi Chaharborj S, Abu Bakar MR, Fudziah I, Noor Akma I, Malik AH, Alli V: Behavior stability in two SIR-style models for HIV. Int. J. Math. Anal. 2010, 4: 427–434.

[10] Seddighi Chaharborj S, Abu Bakar MR, Malik AH, Mehrkanoon S: Solving the SI model for HIV with the homotopy perturbation method. Int. J. Math. Anal. 2009, 22: 211–218.

[11] Swift RJ, Gani J: A simple approach to birth processes with random catastrophes. J. Comb. Inf. Syst. Sci. 2006, 31: 325–331.

[12] Bailey NTJ: The simple stochastic epidemic: a complete solution in terms of known functions. Biometrika 1963, 50: 235.

Acknowledgements

The authors thank the referees for valuable comments and suggestions which improved the presentation of this manuscript.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors carried out the proof and conceived of the study. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Seddighi Chaharborj, S., Fudziah, I., Abu Bakar, M. et al. The use of generation stochastic models to study an epidemic disease. Adv Differ Equ 2013, 7 (2013). https://doi.org/10.1186/1687-1847-2013-7
