Abstract

A continuous-time multivariate stochastic model is proposed for assessing the damage that a multi-type epidemic causes to a population as it unfolds. The instants when cases occur and the magnitude of the injury they cause are random. Thus, we define a cumulative damage process based on counting processes and a multivariate mark process. For a large population, we approximate the behavior of this damage process by its asymptotic distribution. We also analyze the distribution of the stopping times at which the numbers of cases caused by the epidemic exceed certain thresholds. We focus on introducing tools for statistical inference on the parameters of the epidemic. In this regard, we present a general hypothesis test for homogeneity in epidemics and apply it to COVID-19 data from Chile.


1 Introduction

The damage caused by an epidemic or pandemic can be enormous. This is the case of the recent coronavirus disease, which has profoundly impacted the health, economy, and life of all humanity. On the economic impact of COVID-19, we cite the recent articles (Liu et al. 2022; Tsiotas and Tselios 2022; Yildirim et al. 2022). There are several ways of measuring this damage. The insufficient number of highly complex hospital beds available for seriously ill patients is an example. In Mancini and Paganoni (2019) (see also (Gasperoni et al. 2017)), the authors study heart failure prevalence by means of a marked point process, where the length of stay in the hospital acts as the mark of the process. The total time each bed is occupied matters, but it is also important to know when a patient starts using the bed. Moreover, these times are multivariate, one for each bed. The cumulative damage process studied in this work accounts for this concern. Several problems produced by epidemics motivated us to introduce a model for a multi-type epidemic process that contributes to addressing these problems. Usually, cumulative shock models for a system are stochastic. One of their main characteristics is the deterioration due to successive shocks suffered over time. The damage that an epidemic produces to a (human or not) population is an example of this situation. Other models, known as extreme models, assume the cause of the deterioration to be a shock exceeding a certain critical level. Since epidemic models are usually of the former type, in this work we are concerned with the first type of model and assume the damage to the population is additive. Epidemics develop across different compartments where individuals interact among themselves. Some mathematical models for infectious epidemics are of the SIR type (Kermack 1927) or one of its variants. The acronym stands for susceptible, infected, and removed cases.
There are a number of articles dealing with this subject, which, for deterministic models, are based on differential equations and, for stochastic ones, on stochastic differential equations of continuous and discontinuous time. As a recent reference, we cite (Ball and Neal 2022) and the references therein. Discrete-time versions of these models also abound in the literature. Our process, defined as cumulative damage for epidemics, would estimate the number of infections (epidemic size), and the multivariate limit time would estimate the time for the disease to go extinct (epidemic duration) (Bolzoni et al. 2019). These models describe the dynamics of the epidemic and are almost always Markovian. Since we are mainly interested in the damage of the epidemic, we relax this last condition. Indeed, we introduce a model based on a multivariate temporal point process (the ground process) and a multivariate mark process. The first process accounts for when an event occurs, while the second represents the damage caused by this event. We assume independence between the ground and mark processes, but the components of each may be correlated. Moreover, future states may depend on the history of the epidemic, as in the Hawkes process, which is a remarkable non-Markovian temporal point process used in epidemic modeling (see (Chiang et al. 2022; Holbrook et al. 2022; Hollinghurst et al. 2022; Mancini and Paganoni 2019), for instance).

Hence, we model the cumulative damage of the epidemic as a multi-time multivariate degradation process, where the tolerable damage of the components of the epidemics occurs when the corresponding cumulative epidemic events reach some deterministic predetermined levels. Moreover, the times when the cumulative epidemic events attain these levels are assumed to be random and correlated.

It is worth noting that some natural phenomena are not epidemics themselves but have been modeled as if they were. This is the case of some mathematical models for earthquakes, known as ETAS models (Kassay and Rădulescu 2019; Molkenthin et al. 2022; Ogata 1988; Ogata and Zhuang 2006). Mathematical epidemic models have also been applied to describe how computer viruses act. On this issue, we cite (Cohen 1987; Shang 2013; Weera et al. 2023).

The complexity produced by the correlation among the model components governing the epidemic, together with the lack of independence of the inter-arrival times of the epidemic events, compels us to state asymptotic results for the cumulative damage distribution. Moreover, a random vector represents the times when the respective components of this cumulative damage exceed some critical threshold, and we also study the joint asymptotic distribution of these random times. Another objective of this paper is to provide tools for carrying out asymptotic inference on the parameters of the model. An article aiming at this objective, based on discrete-time models, was published in Fierro et al. (2018).

Since the homogeneity concept has a distinguished place, we devote a complete section to introducing a model for a multi-type infectious disease. In this section, we present a general hypothesis test of homogeneity, and we apply this test to the COVID-19 epidemic in Chile.

The paper is structured as follows. Section 2 is divided into two subsections. The first one motivates the development of this work, while the second one describes the main findings of this study. In Sect. 3, we introduce an application to infectious diseases. We have subdivided this section into three subsections containing a hypothesis test of homogeneity, a simulation of a simple hypothesis test, and a numerical data analysis for the COVID-19 epidemic in Chile. We present the asymptotic results in Sect. 4, which includes CLTs for the multi-time processes and their main consequences. The multivariate time of failure associated with the epidemic process and its asymptotic distribution are presented in Sect. 5. The proofs of the results of this work are in Sect. 6. Finally, in Sect. 7, we draw some conclusions and outline future work.

2 Preliminaries

2.1 Motivation

Although the mathematical support of this paper allows us to approach other facets of epidemic theory, we focus on the study of the homogeneity of the population in the setting where an epidemic develops, and we are interested mainly in infectious diseases. Homogeneity can occur among the different regions where the epidemic takes place or among the age groups of the infected individuals. Other classifications are also possible.

Some comments about epidemiological studies related to homogeneity motivate the current work.

The studies in Mancini and Paganoni (2019) and Mazzali et al. (2016) divided patients presenting heart failure into four groups, according to data from their first hospitalizations. This division into groups is performed according to medical criteria. However, no statistical analysis of the homogeneity of the data in each group is presented. We think our methodology would apply to this concern.

In Hacohen et al. (2022), the authors prove that an efficient strategy for disseminating drugs and vaccines considers the homogeneity of regions for providing such dissemination. This strategy may sometimes slow down the supply rate in some locations. However, thanks to its egalitarian nature, which mimics the flow of pathogens, it provides a jump in overall mitigation efficiency, potentially saving more lives with orders of magnitude fewer resources.

According to Zachreson et al. (2022), computational models of infectious disease can be categorized into two types: individual-based (agent-based) models and compartmental models. While the first ones, in principle, account for all known heterogeneity in population structure and behavior, in practice only a few factors can be included in any given framework. The latter are not without criticism either. Although they are advantageous due to their simple formulation and small parameter space, they deserve two primary critiques. First, they are based on differential equations and consequently ignore the past. Second, compartmental models are limited in their capacity to account for heterogeneities in population properties and structure. In the compartmental approach, individuals in each compartment have identical disease susceptibility, infectiousness, and contact frequency with others. As we see, it is convenient to know how much homogeneity there is in each subpopulation. A deterministic model accounting for this issue appears in Hethcote and Van Ark (1987).

Regarding COVID-19, the study carried out in Hananel et al. (2022) examines the relationship between urban diversity and epidemiological resilience by assessing neighborhood homogeneity against the probability of being infected. This study shows that the more homogeneous the population is, the higher the probability of contracting the disease. Although in a different context, the authors in He et al. (2022) reach similar conclusions. According to their model, individualism and egalitarianism act in favor of disease prevalence, while cultural heterogeneity is associated with a more robust public health response. Consistent with this model, culture and state action act as substitutes in motivating compliance with COVID-19 policy.

As we see, the knowledge of different homogeneities in epidemics contributes to the strategies to face the disease prevalence.

2.2 Main findings

In what follows, \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) stands for a sequence of independent and identically distributed p-variate random vectors with mean \(\varvec{\mu }=(\mu _1,\dots ,\mu _p)^\top \) and covariance matrix \({\varvec{\Sigma }}=(\sigma _{ij};1\le i,j\le p)\). Moreover, \(N^1,\dots ,N^p\) denote p nonexplosive counting processes. We define the cumulative damage epidemic model, at the multi-time \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p_+\), as \(\varvec{W}_{\varvec{t}}=(W^1_{t_1},\dots ,W^p_{t_p})^\top \), where

\[W^j_t=\sum _{k=1}^{N^j_t}\xi ^j_k,\quad t\ge 0,\ j=1,\dots ,p,\]

and \(\xi ^j_k\) denotes the j-th component of \(\varvec{\xi }_k\).

This random component admits different interpretations. A first example: \(W^j_t\) is the number of ill individuals at time t in the place j, or having age j. A second example: \(W^j_t\) is the total time, before t, that the bed j in a hospital has remained occupied.
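As an illustration only, the following Python sketch simulates one realization of the cumulative damage vector, assuming that \(W^j_t\) is the random sum of the j-th components of the first \(N^j_t\) marks. The Poisson counting processes, the Gaussian marks, and all names and parameter values (`cumulative_damage`, `rates`, `sigma`) are assumptions made for this example and are not part of the model specification.

```python
import numpy as np

def cumulative_damage(t, rates, mu, sigma, rng):
    """One draw of W_t = (W^1_{t_1}, ..., W^p_{t_p}).

    Assumptions for this sketch: N^j is a homogeneous Poisson process with
    rate rates[j], and the marks xi_k are i.i.d. Gaussian vectors with mean
    mu and covariance sigma, independent of the counting processes.
    """
    t = np.asarray(t, dtype=float)
    p = len(t)
    counts = rng.poisson(np.asarray(rates) * t)        # N^j_{t_j}, j = 1..p
    n_marks = int(counts.max())
    if n_marks > 0:
        marks = rng.multivariate_normal(mu, sigma, size=n_marks)  # rows are xi_k
    else:
        marks = np.empty((0, p))
    # W^j_{t_j} adds the j-th coordinate of the first N^j_{t_j} marks.
    w = np.array([marks[:counts[j], j].sum() for j in range(p)])
    return w, counts

t = np.array([50.0, 80.0])           # multi-time (t_1, t_2)
rates = np.array([2.0, 1.0])         # hypothetical intensities
mu = np.array([1.0, 0.5])            # mean damage per event
sigma = np.array([[0.04, 0.01],
                  [0.01, 0.09]])     # correlated mark components
rng = np.random.default_rng(0)
w, counts = cumulative_damage(t, rates, mu, sigma, rng)
print("events:", counts, "cumulative damage:", w)
```

In this setting the expected damage of component j is `mu[j] * rates[j] * t[j]`, which gives a quick sanity check on simulated values.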

Let \(\{a_n\}_{n\in \mathbb {N}}\) be a sequence of strictly positive real numbers converging to \(\infty \) and, for each \(n\in \mathbb {N}\), \(\varvec{W}_{\varvec{t}}^n=(1/a_n)\varvec{W}_{n\varvec{t}}\). The main mathematical achievement of this work is to obtain the limit (or asymptotic) distribution of \(\varvec{W}^n_{\varvec{t}}\). Since the development of the epidemic is observed, this result yields the limit distributions of the estimators of \(\varvec{\mu }\) and \(\varvec{\Sigma }\).

Let us denote the random multi-time \(\varvec{T}^n=(T^n_1,\dots ,T^n_p)\) describing the times when the components of the epidemic model attain critical values. That is

where \(\omega _j^*\) is the critical admissible value for the j-th component of the epidemic model. From the asymptotic distribution of \(\varvec{W}^n_{\varvec{t}}\), we obtain the limit distribution of \(\varvec{T}^n\).

Also, the asymptotic distribution of \(\varvec{W}^n_{\varvec{t}}\) allows us to derive tests of homogeneity for diverse subsets of \(\{\mu _1,\dots ,\mu _p\}\). We apply this result to the COVID-19 epidemic and, in particular, to its development in Chile.

3 A model for multi-type infectious diseases

Suppose an infectious epidemic is affecting a population of size k, which is subdivided into p subpopulations labeled by the set \(J=\{1,\dots ,p\}\). For each \(j\in J\), the jth subpopulation consists of \(k_j\) individuals and we denote \(\pi _j=k_j/k\). Infections occur in the whole population at the jump times of a counting process N with cumulative intensity \(\Lambda =\{\Lambda _t\}_{t\ge 0}\). The random vectors included in our general formulation are used for determining in which subpopulation each infection takes place. Indeed, we assume \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) is a sequence of independent and identically distributed random vectors taking values in \(\{0,1\}^p\), where for each \(k\in \mathbb {N}\setminus \{0\}\), one and only one of the components of \(\varvec{\xi }_k=(\xi _k^1,\dots ,\xi _k^p)^\top \) takes the value 1. It is also assumed that \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) is independent of N. By defining \(\mu _j=\mathbb {P}(\xi _k^j=1)\), \(\varvec{\xi }_{k}\) has mean \(\varvec{\mu }=(\mu _1,\dots ,\mu _p)^\top \) and covariance matrix \({\varvec{\Sigma }}=(\sigma _{ij};1\le i,j\le p)\), where

\[\sigma _{ij}={\left\{ \begin{array}{ll} \mu _i(1-\mu _i) &{} \text {if } i=j,\\ -\mu _i\mu _j &{} \text {if } i\ne j. \end{array}\right. }\]

It is easy to see that, for each \(j\in J\), the process \(N^j\) defined as \(N^j_t=\sum _{k=1}^{N_t}\xi ^j_k\) is a counting process with cumulative intensity \(\Lambda ^j=\mu _j\Lambda \), and that \(N=N^1+\dots +N^p\). Notice that \(N^j_t\) denotes the number of infections taking place in the jth compartment in the time interval [0, t]. Moreover, \(\mu _1,\dots ,\mu _p\) are parameters accounting for the virulence of the infection in the corresponding subpopulation. The counting process \(N^j\) describes the adequate contacts between infected and susceptible individuals in subpopulation j. An adequate contact of an infective is an interaction that results in infection of the other individual if he or she is susceptible (see (Hethcote and Van Ark 1987)). We want to know how the infectious disease affects the subpopulations. In particular, we are interested in finding out whether individuals belonging to some groups of subpopulations have the same rate of infectiousness. To this purpose, the set J is partitioned into r nonempty subsets \(J_1,\dots ,J_r\), with \(r<p\). That is, \(J=J_1\cup \dots \cup J_r\) with \(J_u\cap J_v=\emptyset \), for \(u\ne v\).
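The splitting of the overall infection process among subpopulations can be sketched as follows. This is a minimal illustration in which N is assumed to be a Poisson process with \(\Lambda _t=t+t^{1/2}\), and the function names (`simulate_infection_types`, `mark_covariance`) and the values of \(\varvec{\mu }\) are hypothetical. Each mark is a one-hot vector, so its covariance matrix is \(\textrm{Diag}(\varvec{\mu })-\varvec{\mu }\varvec{\mu }^\top \), a standard property of a single multinomial trial.

```python
import numpy as np

def simulate_infection_types(n_events, mu, rng):
    """Draw the marks xi_1, ..., xi_{N_t} and the per-type counts N^j_t.

    Each row of xi is a one-hot vector: exactly one component equals 1,
    and component j equals 1 with probability mu[j].
    """
    xi = rng.multinomial(1, mu, size=n_events)
    counts = xi.sum(axis=0)          # N^j_t = sum_{k <= N_t} xi^j_k
    return xi, counts

def mark_covariance(mu):
    """Covariance of a one-hot vector with P(component j = 1) = mu[j]."""
    mu = np.asarray(mu, dtype=float)
    return np.diag(mu) - np.outer(mu, mu)

rng = np.random.default_rng(0)
mu = np.array([0.5, 0.3, 0.2])             # hypothetical type probabilities
t = 400.0
n_events = rng.poisson(t + np.sqrt(t))     # N_t under the Poisson assumption
xi, counts = simulate_infection_types(n_events, mu, rng)
print("N_t =", n_events, "N^j_t =", counts)
print("theoretical covariance of the marks:\n", mark_covariance(mu))
```

By construction the per-type counts add up to the total number of infections, which mirrors the identity \(N=N^1+\dots +N^p\).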

3.1 A hypothesis test of homogeneity

For each \(j\in \{1,\dots ,p\}\) and \(u=1,\dots ,r\), let \(\beta _j=\mu _j/k_j\) and p(u) be the cardinality of \(J_u\). That is, \(\beta _j\) is the infection rate per capita within subpopulation j and \(p(1)+\dots +p(r)=p\). In order to find out whether the events affect some groups of subpopulations homogeneously, a hypothesis test is proposed. For this purpose, the null hypothesis is stated as: for each \(u=1,\dots ,r\), \(\beta _i=\beta _j\), for all \(i,j\in J_u\). By denoting \(\beta (u)=\frac{1}{p(u)}\sum _{j\in J_u}\beta _j\), for each \(u=1,\dots ,r\), the null hypothesis is summarized as

\[\mathcal {H}_0:\ \beta _j=\beta (u),\quad \text {for all } j\in J_u \text { and } u=1,\dots ,r.\]

Notice that, for each \(u=1,\dots ,r\), \(\beta (u)\) represents the infection rate per capita within the group of subpopulations \(J_u\). For each \(j=1,\dots ,p\) and \(n\in \mathbb {N}\), the cumulative intensity of \(\{N^j_{nt}\}_{t\ge 0}\) at \(t\ge 0\) is given by \(\Lambda ^{n,j}_t=k_j\beta _j\Lambda _{nt}\). These processes are observed during a time interval \([0,\theta ]\), where \(\theta >0\) is a time threshold. The likelihood function, \(\mathcal {L}\), for \(\varvec{\beta }=(\beta _1,\dots ,\beta _p)^\top \) (see (Brémaud 1981; Karr 1991), for instance), satisfies

where \(C_n=\prod _{j=1}^p\exp \left( \int _0^{n\theta }\log (k_j\lambda _s)\mathrm {\,d}N^j_s+n\theta \right) \) and \(\lambda =\{\lambda _t\}_{t\ge 0}\) denotes the intensity of N, i.e. \(\Lambda _t=\int _0^t\lambda _s\mathrm {\,d}s\), for all \(t\ge 0\). Under \(\mathcal {H}_0\), this likelihood satisfies

Consequently, maximum likelihood estimators for \(\beta _j\), \(j=1,\dots ,p\), and \(\beta (u)\), \(u=1,\dots ,r\), are given by

respectively. Hence,

where \(\pi _j(u)=k_j/k(u)\) and \(k(u)=\sum _{m\in J_u}k_m\), for all \(j\in J_u\) and \(u=1,\dots ,r\).

The likelihood ratio, for testing \(\mathcal {H}_0\) against the alternative that \(\mathcal {H}_0\) fails to be true, is given by

Hence,

and taking into account that \(\log x=(x-1)-\frac{1}{2}(x-1)^2+O((x-1)^3)\), we have

Accordingly, we reject the null hypothesis if the test statistic \(-2\log \mathcal {R}_n\) is too large. To test \(\mathcal {H}_0\) against local alternatives, the following theorem is stated.

Theorem 1

Let \(\{a_n\}_{n\in \mathbb {N}}\) be an increasing sequence of positive real numbers and suppose that \(\{\Lambda _t\}_{t\ge 0}\) is uniformly integrable and there exists \(h:\mathbb {R}_+\rightarrow \mathbb {R}_+\) such that, \(h(\theta )>0\) and

Let \(\{\mathcal {H}_n\}_{n\in \mathbb {N}}\) be the sequence of local alternatives to the null hypothesis defined as

where \((\Delta _j(u);j\in J_u)\in \mathbb {R}^{p(u)}\) and \(\sum _{j\in J_u}\Delta _j(u)=0\), for all \(u=1,\dots ,r\). Then, under \(\{\mathcal {H}_n\}_{n\in \mathbb {N}}\), \( -2\log \mathcal {R}_n\) has an asymptotic non-central \(\chi ^2\)-distribution with \(p-r\) degrees of freedom and non-centrality parameter
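The test of this subsection can be sketched numerically as follows. The sketch assumes that, up to a factor not depending on \(\varvec{\beta }\), the likelihood is \(\prod _{j}\beta _j^{N^j_{n\theta }}\exp (-k_j\beta _j\Lambda _{n\theta })\), which is the usual form for intensities \(k_j\beta _j\lambda _s\). Under that assumption the maximum likelihood estimates are \(N^j_{n\theta }/(k_j\Lambda _{n\theta })\) for \(\beta _j\) and \(\sum _{j\in J_u}N^j_{n\theta }/(k(u)\Lambda _{n\theta })\) for \(\beta (u)\), and \(\Lambda _{n\theta }\) cancels in the ratio. The function name `lr_statistic` and the toy data are hypothetical.

```python
import numpy as np

def lr_statistic(counts, sizes, groups):
    """-2 log R_n for homogeneity of per-capita rates within each group.

    counts[j] : observed infections N^j in subpopulation j
    sizes[j]  : subpopulation size k_j
    groups[j] : label u of the subset J_u containing j
    Assumes a Poisson-type likelihood, so that
    -2 log R_n = 2 * sum_j N^j * log(rate_j / pooled_rate_{u(j)}).
    """
    counts = np.asarray(counts, dtype=float)
    sizes = np.asarray(sizes, dtype=float)
    groups = np.asarray(groups)
    stat = 0.0
    for u in np.unique(groups):
        idx = groups == u
        n_u, k_u = counts[idx], sizes[idx]
        pooled = n_u.sum() / k_u.sum()       # proportional to the MLE of beta(u)
        rates = n_u / k_u                    # proportional to the MLE of beta_j
        pos = n_u > 0                        # convention: 0 * log(0) = 0
        stat += 2.0 * np.sum(n_u[pos] * np.log(rates[pos] / pooled))
    return stat

# Toy example: p = 4 subpopulations in r = 2 groups, so p - r = 2 degrees of freedom.
counts = [10, 40, 25, 25]
sizes = [100, 100, 100, 100]
groups = [0, 0, 1, 1]
print("-2 log R_n =", lr_statistic(counts, sizes, groups))
```

Only the first group contributes here, because the two subpopulations of the second group have identical empirical rates.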

3.2 Simple hypothesis testing

A natural application of Theorem 1 is to calculate the approximate power of the test relative to

against the alternative

where \(\beta (1),\dots ,\beta (r)\in \mathbb {R}_+\) and, for each \(u=1,\dots ,r\), \(\varvec{\delta }_u=\{\delta _j(u)\}_{j\in J_u}\) is given and satisfies \(\sum _{j\in J_u}\delta _j(u)=0\).

Suppose that the critical region is \(\{-2\log \mathcal {R}_n>r_0\}\), where \(r_0\) has been calculated for a significance level \(\alpha \) based on the asymptotic chi-square distribution of \(-2\log \mathcal {R}_n\) under the null hypothesis. For each \(u=1,\dots ,r\), we interpret \(\Delta _1(u),\dots ,\Delta _{p(u)}(u)\) in \(\mathcal {H}_n\) (defined as in Theorem 1) as \(\sqrt{a_n}\delta _1(u),\dots ,\sqrt{a_n}\delta _{p(u)}(u)\) and approximate the power of the test by the probability of \(\{\chi ^2> r_0\}\), where \(\chi ^2\) is a random variable having a non-central chi-square distribution with \(p-r\) degrees of freedom and non-centrality parameter

Let \(\lambda _n:\mathbb {R}^p\rightarrow [0,1]\) be the power function defined as \(\lambda _n(\varvec{\delta })=\mathbb {P}(-2\log \mathcal {R}_n> r_0|\mathcal {H}_1(\varvec{\delta }))\). It follows from Sun et al. (2010) that the cumulative distribution function of a non-central \(\chi ^2\)-distribution is decreasing with respect to its non-centrality parameter. Accordingly, the power of the test is increasing with respect to this parameter, which in turn depends on the direction of \(\varvec{\delta }\).

To compare the power of a test, for different values of \(\varvec{\delta }\), we restrict the power function to the set of all \(\varvec{\delta }=\{\delta _j(u)\}_{u=1,\dots ,r,j\in J_u}\) satisfying

To analyze the power of the test proposed at the beginning of this subsection, a simulation is conducted. We suppose a population of 120 individuals is subdivided into 12 subpopulations. These subpopulations belong to one of three compartments with 3, 4, and 5 subpopulations each, respectively. The respective parameters are \(\beta (1)=0.05\), \(\beta (2)=0.03\), and \(\beta (3)=0.01\). We consider \(k_1=\dots =k_{12}=10\) and suppose N is a Poisson process with cumulative intensity \(\Lambda _t=t+t^{1/2}\) and \(a_n=n\). Hence \(h(\theta )=\theta \) and we consider \(\theta =30\). Let \(\varvec{\gamma }=(0.4082483,0.4082483,-0.8164966)^\top \) and consider the following four vectors \(\varvec{\delta }_1\), \(\varvec{\delta }_2\), \(\varvec{\delta }_3\) and \(\varvec{\delta }_4\) defined as

where, for \(i=3,6,9\), \(\varvec{0}_i\) denotes the zero vector in \(\mathbb {R}^i\). The non-centrality parameters of the test, for these vectors, are respectively given, by

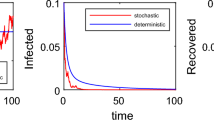

Based on these directions, four profiles \(\lambda ^i:(-4/100,4/100)\rightarrow \mathbb {R}\) (\(i=1,2,3,4\)) of the power function are defined as \(\lambda ^i(t)=\lambda _n(t\varvec{\delta }_i)\). That is, \(\lambda ^i(t)=\mathbb {P}(-2\log \mathcal {R}_n>r_0|\mathcal {H}_1(t\varvec{\delta }_i))\), where \(r_0\) is determined by means of \(\mathbb {P}(-2\log \mathcal {R}_n>r_0|\mathcal {H}_0)=0.05\). Since under \(\mathcal {H}_0\), \(-2\log \mathcal {R}_n\) has an approximate chi-square distribution with 9 degrees of freedom, we have \(r_0=16.9190\). In Fig. , we show the graphs of these four profiles, for \(n=5\) and \(n=10\). Since the non-centrality parameter of the test increases with \(i=1,2,3,4\), we have \(\lambda ^1\le \lambda ^2\le \lambda ^3\le \lambda ^4\).
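The critical value and the approximate power can be computed with standard routines. The sketch below uses SciPy's `chi2` and `ncx2` distributions; the non-centrality values in `hypothetical_nc` are placeholders chosen for illustration and are not the values obtained for \(\varvec{\delta }_1,\dots ,\varvec{\delta }_4\).

```python
from scipy.stats import chi2, ncx2

p, r, alpha = 12, 3, 0.05
df = p - r                                   # 9 degrees of freedom
r0 = chi2.ppf(1.0 - alpha, df)               # critical value of the test

def approx_power(noncentrality, df=df, r0=r0):
    """Approximate power P(chi2 > r0) for a non-central chi-square variable."""
    return ncx2.sf(r0, df, noncentrality)

hypothetical_nc = [0.5, 2.0, 8.0, 20.0]      # placeholder non-centrality values
powers = [approx_power(nc) for nc in hypothetical_nc]

print(f"r0 = {r0:.4f}")
for nc, pw in zip(hypothetical_nc, powers):
    print(f"non-centrality {nc:5.1f} -> approximate power {pw:.3f}")
```

The printed powers increase with the non-centrality parameter, in line with the monotonicity property quoted from Sun et al. (2010).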

3.3 An application with real data

An important application of our results is found in the field of infectious diseases. In particular, we apply the asymptotic results of this work to the COVID-19 pandemic in Chile. This country is divided into sixteen regions and, according to the last census carried out in 2017, the population distribution and its density, for each region, are given in Table .

Like all countries in the world, Chile has been affected by the aforementioned pandemic, and we assume the number of contacts in this country occurs according to a counting process \(N=\{N_t\}_{t\ge 0}\) with cumulative intensity \(\Lambda =\{\Lambda _t\}_{t\ge 0}\). Since \(\Lambda \) is unknown, we estimate this cumulative intensity, at time t, as \(\Lambda _t=N_t\). We divide the population into three zones, namely, North, South, and Central, and investigate whether the infection behaves homogeneously within each zone. To this purpose, a hypothesis test with a significance level of \(\alpha =0.05\) is carried out, and the pandemic is followed from March 29 to April 30, 2021. Data were obtained from the database of the Ministry of Health of the Chilean Government, which can be found on the website https://www.gob.cl/coronavirus/cifrasoficiales/#datos.

The null hypothesis is defined as follows

According to (2), the infection rates per capita for the North, Central and South zones are estimated by

respectively. From Theorem 1, \(-2\log \mathcal {R}_n\) has an asymptotic chi-square distribution with 13 degrees of freedom. The calculated value of \(-2\log \mathcal {R}_n\) is 6,618, which is too large compared with 22.362. Consequently, the null hypothesis is rejected. However, observing Fig. , it seems possible that four pairs of subpopulations have a homogeneous behavior. Let \(G_i\), \(i=1,\dots ,4\), be defined according to Table . The statistic \(-2\log \mathcal {R}_n\) has, in this case, an asymptotic chi-square distribution with 4 degrees of freedom. The calculated value of \(-2\log \mathcal {R}_n\) is 6.692. Since this value is smaller than 10.712, it is not possible to reject the homogeneous behavior of the pandemic in the population, with a significance level of \(\alpha =0.05\). The p-value turns out to be \(p=0.155\).

4 Asymptotic results

In the sequel, \((\Omega ,\mathcal {F},\mathbb {P})\) stands for a probability space and every random variable or stochastic process is defined on this space. For a matrix or vector A, \(A^\top \) denotes the transpose of A. Let \(\mathbb {F}\) be a filtration on \((\Omega ,\mathcal {F},\mathbb {P})\) and \(\varvec{M}\) be a p-variate square integrable martingale with respect to \((\mathbb {F},\mathbb {P})\). In this case, we denote the matrix \(\langle \varvec{M}\rangle =(\langle \varvec{e}_i^\top \varvec{M}\varvec{e}_j\rangle ;1\le i,j\le p)\), where \(\{\varvec{e}_1,\dots ,\varvec{e}_p\}\) is the canonical basis in \(\mathbb {R}^p\) and \(\langle \varvec{e}_i^\top \varvec{M}\varvec{e}_j\rangle \) is the predictable increasing process associated to the one-dimensional \((\mathbb {F},\mathbb {P})\)-martingale \(\varvec{e}_i^\top \varvec{M}\varvec{e}_j\). Given \((a_1,\dots ,a_p)^\top \in \mathbb {R}^p\), we denote by \(\varvec{\textrm{Diag}}(a_1,\dots ,a_p)\) the diagonal matrix whose respective elements in its diagonal are \(a_1,\dots ,a_p\).

Natural componentwise definitions for \(\prec \), \(\preceq \) and \(\succ \), \(\succeq \) on \(\mathbb {R}^p\) could be given as follows. Let \( \varvec{a}=(a_1,\dots ,a_p)^\top \) and \( \varvec{b}=(b_1,\dots ,b_p)^\top \) in \(\mathbb {R}^p\). We put \( \varvec{a}\preceq \varvec{b}\) (respectively, \( \varvec{a}\prec \varvec{b}\)), if and only if, for each \(i=1,\dots ,p\), \(a_i\le b_i\) (respectively, \(a_i<b_i\)). Moreover, \( \varvec{a}\succ \varvec{b}\) and \( \varvec{a}\succeq \varvec{b}\) mean \( \varvec{b}\prec \varvec{a}\) and \( \varvec{b}\preceq \varvec{a}\), respectively. As usual, for \(a,b\in \mathbb {R}\), the maximum and minimum of the set \(\{a,b\}\) are denoted by \(a\vee b\) and \(a\wedge b\), respectively. Also, \(\varvec{a}\vee \varvec{b}=(a_1\vee b_1,\dots ,a_p\vee b_p)^\top \) and \(\varvec{a}\wedge \varvec{b}=(a_1\wedge b_1,\dots ,a_p\wedge b_p)^\top \). Moreover, we denote \([\varvec{a},\varvec{b}]=[a_1,b_1]\times \dots \times [a_p,b_p]\), \((\varvec{a},\varvec{b}]=(a_1,b_1]\times \dots \times (a_p,b_p]\), \([\varvec{a},\varvec{b})=[a_1,b_1)\times \dots \times [a_p,b_p)\) and \((\varvec{a},\varvec{b})=(a_1,b_1)\times \dots \times (a_p,b_p)\).
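For readers who prefer a computational view, the componentwise order and lattice operations can be written with NumPy as below. The helper names (`preceq`, `prec`, `in_half_open_box`) are hypothetical and only illustrate the notation.

```python
import numpy as np

def preceq(a, b):
    """a ⪯ b: every component of a is <= the matching component of b."""
    return bool(np.all(np.asarray(a) <= np.asarray(b)))

def prec(a, b):
    """a ≺ b: every component of a is < the matching component of b."""
    return bool(np.all(np.asarray(a) < np.asarray(b)))

def in_half_open_box(x, a, b):
    """x belongs to (a, b] = (a_1, b_1] x ... x (a_p, b_p]."""
    return prec(a, x) and preceq(x, b)

a = np.array([1.0, 5.0])
b = np.array([2.0, 3.0])
join = np.maximum(a, b)      # a ∨ b, componentwise maximum
meet = np.minimum(a, b)      # a ∧ b, componentwise minimum

# The order is only partial: here neither a ⪯ b nor b ⪯ a holds.
print(preceq(a, b), preceq(b, a))
print("join:", join, "meet:", meet)
print(in_half_open_box([1.5, 4.0], meet, join))
```

The example shows that two vectors need not be comparable, while their meet and join always bound both of them.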

In what follows, \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) stands for a sequence of independent and identically distributed p-variate random vectors with mean \(\varvec{\mu }=(\mu _1,\dots ,\mu _p)^\top \) and covariance matrix \({\varvec{\Sigma }}=(\sigma _{ij};1\le i,j\le p)\). For each \(k\in \mathbb {N}\) and \(j=1,\dots ,p\), \(\xi _{k}^j\) denotes the j-th component of \(\varvec{\xi }_k\). Let \(N^1,\dots ,N^p\) be p nonexplosive counting processes, adapted to a filtration \(\mathbb {G}=\{\mathcal {G}_t\}_{t\ge 0}\) and such that, for each \(j=1,\dots ,p\), \(N^j\) has predictable compensator \(\Lambda ^j\), which we assume continuous, i.e., there exists a \(\mathbb {G}\)-progressive and nonnegative process \(\lambda ^j\) such that \(\Lambda ^j_t=\int _0^t\lambda ^j_s\mathrm {\,d}s\), for all \(t\ge 0\). As a consequence, \(N^j-\Lambda ^j\) is a \((\mathbb {G},\mathbb {P})\)-martingale, for each \(j=1,\dots ,p\). These counting processes could be dependent, but we assume that they are independent of the sequence \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) of random vectors. To be more precise, in the sequel, we assume the sequence \(\{\varvec{\xi }_{k}\}_{k\in \mathbb {N}}\) is independent of \(\mathcal {G}_\infty \), the \(\sigma \)-algebra generated by \(\bigcup _{t\ge 0}\mathcal {G}_t\).

One of the most important aims of this work is to study the asymptotic distribution of the cumulative damage of the epidemic, which is represented, at the multi-time \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p_+\), by the p-variate multi-indexed random vector \(\varvec{W}_{\varvec{t}}=(W^1_{t_1},\dots ,W^p_{t_p})^\top \) defined by

\[W^j_t=\sum _{k=1}^{N^j_t}\xi ^j_k,\quad t\ge 0,\ j=1,\dots ,p.\]

Accordingly, \(W^j_t\) represents the cumulative damage of the epidemic, at time \(t\ge 0\), of the j-th component of the epidemic.

For any pair \(i,j=1,\dots ,p\), let

where \(\{T^{(i,j)}_m\}_{m\in \mathbb {N}}\) is the sequence of stopping times defined by recurrence as \(T^{(i,j)}_0=0\) and

The compensator of \(N^{(i,j)}\), with respect to \(\mathbb {G}\), is denoted by \(\Lambda ^{(i,j)}\) and we assume \(\Lambda ^{(i,j)}\) has continuous trajectories. Consequently, \(N^{(i,j)}\) is a quasi-left-continuous process.

Let \(\{h_{ij};1\le i,j \le p\}\) be a family of nonnegative functions from \(\mathbb {R}_+\) to \(\mathbb {R}\) and \(\{a_n\}_{n\in \mathbb {N}}\) be a sequence of strictly positive real numbers converging to \(\infty \) and such that, for each pair \(i,j=1,\dots , p\) and \(t\ge 0\), \(\{\Lambda ^{(i,j)}_{nt}/a_n\}_{n\in \mathbb {N}}\) is uniformly integrable and \(\{\Lambda ^{(i,j)}_{n\cdot }/a_n\}_{n\in \mathbb {N}}\) converges uniformly in probability to \(h_{ij}\), on compact subsets of \(\mathbb {R}_+\). For each \(j=1,\dots , p\), we denote \(h_j=h_{jj}\). Since the cumulative damage of the epidemic is represented by the random sums \(W^j_t\) (\(j=1,\dots ,p, t\in \mathbb {R}_+\)), we are interested in investigating the convergence in law of the sequences \(\{\varvec{U}^n\}_{n\in \mathbb {N}}\) and \(\{\varvec{V}^n\}_{n\in \mathbb {N}}\) of p-variate random processes \(\varvec{U}^n=(U^{n,1},\dots ,U^{n,p})^\top \) and \(\varvec{V}^n=(V^{n,1},\dots ,V^{n,p})^\top \) defined as

Theorem 2

Let \(\varvec{A}(t)=(\sigma _{ij}h_{ij}(t);1\le i,j\le p)\) and \(\varvec{B}(t)=(\mu _i\mu _jh_{ij}(t);1\le i,j\le p)\), for each \(t\ge 0\). Then, the following two conditions hold:

(i) \(\{\varvec{U}^n\}_{n\in \mathbb {N}}\) converges in law to a continuous Gaussian p-variate square integrable martingale, \(\varvec{U}\) starting at \(\varvec{0}\) with \(\langle \varvec{U}\rangle _t=\varvec{A}(t)\), for each \(t\ge 0\), and

(ii) \(\{\varvec{V}^n\}_{n\in \mathbb {N}}\) converges in law to a continuous Gaussian p-variate square integrable martingale, \(\varvec{V}\) starting at \(\varvec{0}\) with \(\langle \varvec{V}\rangle _t=\varvec{A}(t)+\varvec{B}(t)\), for each \(t\ge 0\).
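A Monte Carlo check of the two limiting variances can be sketched in the simplest setting. The sketch assumes \(p=1\), a unit-rate Poisson process (so that \(\Lambda _t=t\), \(a_n=n\) and \(h(t)=t\)), Gaussian marks, and the normalizations \(U^n_t=(W_{nt}-\mu N_{nt})/\sqrt{a_n}\) and \(V^n_t=(W_{nt}-\mu \Lambda _{nt})/\sqrt{a_n}\). These normalizations are an assumption of this example, chosen because they are consistent with the martingales \(W^j_{nt}-\mu _jN^j_{nt}\) and \(W^j_{nt}-\mu _j\Lambda ^j_{nt}\) and with the matrices \(\varvec{A}(t)\) and \(\varvec{B}(t)\).

```python
import numpy as np

def simulate_u_v(n, t, mu, sigma2, reps, rng):
    """Draw reps copies of (U^n_t, V^n_t) for p = 1 and a unit-rate Poisson N."""
    counts = rng.poisson(n * t, size=reps)            # N_{nt}; Lambda_{nt} = n t
    # Given N, a sum of N i.i.d. N(mu, sigma2) marks is N(mu * N, sigma2 * N).
    w = mu * counts + np.sqrt(sigma2 * counts) * rng.standard_normal(reps)
    u = (w - mu * counts) / np.sqrt(n)                # centred at mu * N_{nt}
    v = (w - mu * n * t) / np.sqrt(n)                 # centred at mu * Lambda_{nt}
    return u, v

rng = np.random.default_rng(0)
n, t, mu, sigma2 = 400, 2.0, 1.5, 0.5
u, v = simulate_u_v(n, t, mu, sigma2, reps=20000, rng=rng)

var_u_limit = sigma2 * t                  # A(t) = sigma^2 h(t)
var_v_limit = (sigma2 + mu**2) * t        # A(t) + B(t) = (sigma^2 + mu^2) h(t)
print("Var U:", u.var(), "limit:", var_u_limit)
print("Var V:", v.var(), "limit:", var_v_limit)
```

The extra term \(\mu ^2h(t)\) in the variance of V reflects the additional randomness of the counting process around its compensator.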

Proposition 3

Let \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}_+^p\) and for each \(n\in \mathbb {N}\), \(\varvec{{\widetilde{U}}}^n_{\varvec{t}}=(U^{n,1}_{t_1},\dots ,U^{n,p}_{t_p})^\top \) and \(\varvec{{\widetilde{V}}}^n_{\varvec{t}}=(V^{n,1}_{t_1},\dots ,V^{n,p}_{t_p})^\top \). Then, the sequences \(\{\varvec{{\widetilde{U}}}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) and \(\{\varvec{{\widetilde{V}}}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) converge in distribution to two p-variate normal random vectors \(\varvec{{\widetilde{U}}_{t}}\) and \(\varvec{{\widetilde{V}}_{t}}\) with mean zero and covariance matrices \(\varvec{{\widetilde{\Sigma }}_U(t)}=\left( {\widetilde{\sigma }}^{U}_{ij}(\varvec{t});1\le i,j\le p\right) \) and \(\varvec{{\widetilde{\Sigma }}_V(t)}=\left( {\widetilde{\sigma }}^{V}_{ij}(\varvec{t});1\le i,j\le p\right) \), respectively, defined by

Let \(\varvec{t}=(t_1,\dots ,t_p)\in \mathbb {R}_+^p\). Since for each \(j=1,\dots ,p\), \(W^j_{nt}-\mu _jN^j_{nt}\) and \(W^j_{nt}-\mu _j\Lambda ^j_{nt}\) are martingales, the p-variate parameter \(\varvec{\mu }=(\mu _1,\dots ,\mu _p)^\top \) can be estimated by \(\varvec{{\widehat{\mu }}}^n_{\varvec{t}}=({\widehat{\mu }}^n_1(t_1),\dots ,{\widehat{\mu }}^n_p(t_p))^\top \) and \(\varvec{{\widehat{\nu }}}^n_{\varvec{t}}=({\widehat{\nu }}^n_1(t_1),\dots ,{\widehat{\nu }}^n_p(t_p))^\top \), where for each \(j=1,\dots ,p\),

For each \(\varvec{t}=(t_1,\dots , t_p)^\top \) and \(n\in \mathbb {N}\setminus \{0\}\), let \(\varvec{N}^n_{\varvec{t}}=(N^1_{n t_1},\dots ,N^p_{n t_p})^\top \) and \(\varvec{\Lambda }^n_{\varvec{t}}=(\Lambda ^1_{n t_1},\dots ,\Lambda ^p_{n t_p})^\top \).

Corollary 4

With the notations of Proposition 3, for each \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p_+\), \(\{\varvec{{\widehat{\mu }}}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) and \(\{\varvec{{\widehat{\nu }}}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) converge in probability to \(\varvec{\mu }\). Furthermore, the sequences of random vectors \(\{\sqrt{a_n}(\varvec{{\widehat{\mu }}}^n_{\varvec{t}}-\varvec{\mu })\}_{n\in \mathbb {N}}\) and \(\{\sqrt{a_n}(\varvec{{\widehat{\nu }}}^n_{\varvec{t}}-\varvec{\mu })\}_{n\in \mathbb {N}}\) converge in distribution to p-variate normal random vectors with mean zero and covariance matrices \(\varvec{D}^{-1}\varvec{{\widetilde{\Sigma }}_U(t)}\varvec{D}^{-1}\) and \(\varvec{D}^{-1}\varvec{{\widetilde{\Sigma }}_V(t)}\varvec{D}^{-1}\), respectively, where \(\varvec{D}=\varvec{\textrm{Diag}}(h_1(t_1),\dots ,h_p(t_p))\).
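The practical difference between the two estimators can be illustrated by simulation. The sketch assumes the natural ratio forms \({\widehat{\mu }}^n(t)=W_{nt}/N_{nt}\) and \({\widehat{\nu }}^n(t)=W_{nt}/\Lambda _{nt}\), which are an assumption of this example, together with \(p=1\), a unit-rate Poisson process, \(a_n=n\), \(h(t)=t\) and Gaussian marks. With \(\varvec{D}=h(t)\), the limiting variances in Corollary 4 then reduce to \(\sigma ^2/t\) and \((\sigma ^2+\mu ^2)/t\).

```python
import numpy as np

def simulate_estimators(n, t, mu, sigma2, reps, rng):
    """Draw reps copies of (mu_hat, nu_hat) for p = 1 and a unit-rate Poisson N."""
    counts = rng.poisson(n * t, size=reps)            # N_{nt}, positive for large n t
    w = mu * counts + np.sqrt(sigma2 * counts) * rng.standard_normal(reps)
    mu_hat = w / counts                               # uses the observed counts
    nu_hat = w / (n * t)                              # uses the compensator n t
    return mu_hat, nu_hat

rng = np.random.default_rng(1)
n, t, mu, sigma2 = 400, 2.0, 1.5, 0.5
mu_hat, nu_hat = simulate_estimators(n, t, mu, sigma2, reps=20000, rng=rng)

scaled_var_mu = n * mu_hat.var()          # approaches sigma2 / t
scaled_var_nu = n * nu_hat.var()          # approaches (sigma2 + mu^2) / t
print("mean of mu_hat:", mu_hat.mean(), "mean of nu_hat:", nu_hat.mean())
print("n Var(mu_hat):", scaled_var_mu, "limit:", sigma2 / t)
print("n Var(nu_hat):", scaled_var_nu, "limit:", (sigma2 + mu**2) / t)
```

In this setting the estimator based on the observed counts has the smaller asymptotic variance, while the one based on the compensator remains usable when only the intensity is known.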

Let \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}_+^p\) and \(\varvec{W}_{\varvec{t}}=(W^1_{t_1},\dots ,W^p_{t_p})^\top \), where for each \(j=1,\dots ,p\) and \(t\ge 0\), \(W^j_t\) is defined as before. Accordingly, \(W^j_t\) represents the cumulative damage, at time \(t\ge 0\), of the j-th component of the epidemic. The study of the asymptotic behavior of this cumulative damage is based on the sequence \(\{\varvec{W}^n\}_{n\in \mathbb {N}}\) of random vectors defined as \(\varvec{W}^n_{\varvec{t}}=(W^1_{nt_1},\dots ,W^p_{nt_p})^\top \), for all \(\varvec{t}=(t_1,\dots ,t_p)\in \mathbb {R}_+^p\).

In the sequel, for each \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}_+^p\), we denote \(\varvec{w}_{\varvec{t}}=(w^1_{t_1},\dots ,w^p_{t_p})^\top \), where for each \(j=1,\dots ,p\) and \(t\ge 0\), \(w^j_t=\mu _jh_j(t)\).

Proposition 5

For each \(\varvec{t}=(t_1,\dots ,t_p)\in \mathbb {R}^p_+\), \(\{(1/a_n)\varvec{W}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) converges in probability to \(\varvec{w}_{\varvec{t}}\).

Proposition 5 motivates the existence of a large deviation principle (LDP) for the sequence \(\{(1/a_n)\varvec{W}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\).

Let (E, d) be a metric space. A good rate function is any lower semicontinuous function \(L^*\) from E to \([0,\infty ]\) such that for each \(c>0\), \(\{L^*\le c\}\) is compact. Given a sequence \(\{Z_n\}_{n\in \mathbb {N}}\) of random elements taking values in E and a good rate function \(L^*\) defined on E, in this work, we say that \(\{Z_n\}_{n\in \mathbb {N}}\) obeys an LDP with the good rate function \(L^*\), whenever there exists a sequence \(\{a_n\}_{n\in \mathbb {N}}\) of strictly positive real numbers converging to \(\infty \) such that the following two conditions hold:

i) for each closed subset F in E, \(\limsup _{n\rightarrow \infty }\frac{1}{a_n}\log \mathbb {P}(Z_n\in F)\le -\inf _{\varvec{x}\in F}L^*(\varvec{x})\), and

ii) for each open subset G in E, \(\liminf _{n\rightarrow \infty }\frac{1}{a_n}\log \mathbb {P}(Z_n\in G)\ge -\inf _{\varvec{x}\in G}L^*(\varvec{x})\).
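For intuition about good rate functions, the sketch below treats the classical Cramér setting rather than the model of this paper: for averages of independent Bernoulli(\(\pi \)) variables, the rate function is the Fenchel-Legendre transform of the logarithmic moment generating function \(\theta \mapsto \log ((1-\pi )+\pi \textrm{e}^{\theta })\), and it coincides with the relative entropy. The code evaluates the transform numerically by ternary search and compares it with the closed form. All function names are hypothetical.

```python
import math


def log_mgf_bernoulli(theta, pi):
    """Logarithmic moment generating function of a Bernoulli(pi) variable."""
    return math.log((1.0 - pi) + pi * math.exp(theta))


def legendre_transform(x, pi, lo=-30.0, hi=30.0, iters=200):
    """Approximate sup over theta of theta*x - log_mgf(theta).

    The objective is concave in theta, so ternary search on [lo, hi] applies
    as long as the maximiser lies inside that interval.
    """
    def objective(theta):
        return theta * x - log_mgf_bernoulli(theta, pi)

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) < objective(m2):
            lo = m1
        else:
            hi = m2
    return objective((lo + hi) / 2.0)


def relative_entropy(x, pi):
    """Closed-form Cramer rate function for Bernoulli averages, 0 < x < 1."""
    return x * math.log(x / pi) + (1.0 - x) * math.log((1.0 - x) / (1.0 - pi))


if __name__ == "__main__":
    for x in (0.1, 0.3, 0.7):
        print(x, legendre_transform(x, 0.3), relative_entropy(x, 0.3))
```

The transform vanishes at \(x=\pi \), the limit of the averages, and is strictly positive elsewhere, which is the typical shape of a rate function.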

The following LDP holds.

Theorem 6

Suppose \(\mathbb {E}(\mathop {\textrm{e}^{\varvec{\theta }^\top \varvec{\xi }_0}})<\infty \), for each \(\varvec{\theta }\in \mathbb {R}^p\). Let \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}_+^p\) and \(L_{\varvec{t}}:\mathbb {R}^p\rightarrow [0,\infty ]\) be defined as

Then, \(\{(1/a_n)\varvec{W}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) obeys an LDP with the good rate function \(L^*_{\varvec{t}}:\mathbb {R}^p\rightarrow [0,\infty ]\), the Fenchel-Legendre transform of \(L_{\varvec{t}}\), i.e.

5 A multivariate stopping time

As before, in this section, it is assumed that \(\{\varvec{\xi }_k\}_{k\in \mathbb {N}}\) and \(\mathcal {G}_{\infty }\) are independent. Additionally, we suppose \(\varvec{\xi }_0\succeq \varvec{0}\), \(\mathbb {P}\)-almost surely, and, in order to avoid trivial special cases, it is assumed \(\mu _j>0\), for each \(j=1,\dots ,p\). In order to know the useful life of the system, we define a multivariate stopping time \(\varvec{T}^n=(T^n_1,\dots ,T^n_p)^\top \) as

where \(\omega _j^*\) is the critical admissible value for the j-th component of the epidemic. It seems reasonable to pay attention to this component when the time \(T^n_j\) has been attained. In the sequel, we denote \(\varvec{\omega }^*=(\omega _1^*,\dots ,\omega _p^*)^\top \).

Corollary 7

Suppose \(\mathbb {E}(\mathop {\textrm{e}^{\varvec{\theta }^\top \varvec{\xi }_0}})<\infty \), for each \(\varvec{\theta }\in \mathbb {R}^p\). Let \(\varvec{t}\in \mathbb {R}_+^p\) and \(L_{\varvec{t}}^*\) be defined as in (4). Then,

A function \(h:\mathbb {R}_+\rightarrow \mathbb {R}_+\) is said to be homogeneous with degree of homogeneity \(k>0\), whenever for all \(\alpha , t \in \mathbb {R}_+\), \(h(\alpha t)=\alpha ^kh(t)\). We say that a nonexplosive counting process N is asymptotically homogeneous with degree of homogeneity \(k>0\), whenever \(\{(1/a_n) N_{nt}\}_{n\in \mathbb {N}}\) converges in probability to h(t), for all \(t\in \mathbb {R}_+\), where \(h:\mathbb {R}_+\rightarrow \mathbb {R}_+\) is homogeneous with degree of homogeneity k.

It is clear that the standard Poisson process is a Markovian process and is asymptotically homogeneous with degree of homogeneity 1. The standard Hawkes process is also a counting process that is asymptotically homogeneous with degree of homogeneity 1; however, this process is not Markovian (cf. Bacry et al. 2013; Fierro 2015).
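The asymptotic homogeneity of the standard Poisson process can be checked empirically. The sketch below is only an illustration with \(a_n=n\) and \(h(t)=t\); it simulates the process through exponential interarrival times and reports \(N_{nt}/n\) for increasing \(n\). The helper names `poisson_count` and `scaled_counts` are hypothetical.

```python
import random


def poisson_count(horizon, rng, rate=1.0):
    """Number of arrivals of a homogeneous Poisson process on [0, horizon]."""
    count = 0
    clock = rng.expovariate(rate)
    while clock <= horizon:
        count += 1
        clock += rng.expovariate(rate)
    return count


def scaled_counts(t, sizes, seed=0):
    """Return N_{nt} / n for each n in sizes, using independent simulations."""
    rng = random.Random(seed)
    return [poisson_count(n * t, rng) / n for n in sizes]


if __name__ == "__main__":
    # The printed values should settle near h(t) = t = 2.0 as n grows.
    print(scaled_counts(2.0, [100, 10000, 100000], seed=3))
```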

Example

Suppose \(N^1,\dots ,N^p\) are asymptotically homogeneous processes with degree of homogeneity 1, rate 1, and for each \(k\in \mathbb {N}\), \(\mathbb {P}(\varvec{\xi }_k=\varvec{e}_j)=\pi _j\), where \(\varvec{e}_1,\dots ,\varvec{e}_p\) are the vectors of the canonical basis on \(\mathbb {R}^p\) and \(\pi _1,\dots ,\pi _p\) are strictly positive numbers such that \(\pi _1+\dots +\pi _p<1\). In this case, we have \(a_n=n\) and \(h(t)=t\), where \(h_1=\dots =h_p=h\) and since \( \mathbb {E}(\mathop {\textrm{e}^{\theta _{j}\xi ^{j}_1}})=(1-\pi _j)+\pi _j\mathop {\textrm{e}^{\theta _j}}, \) we have, for each \(\varvec{x}=(x_1,\dots ,x_p)^\top \in \mathbb {R}^p\) and \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p\),

and hence

Moreover, by assuming for each \(j=1,\dots ,p\), \(\mu _jt_j\le \omega ^*_j\), Corollary 7 implies that

In the sequel, we denote \(\varvec{\theta }^*=(\theta ^*_1,\dots ,\theta ^*_p)^\top \), where \(\theta ^*_j=h_j^{-1}(\omega ^*_j/\mu _j)\), for each \(j=1,\dots ,p\). From Proposition 5, \(\varvec{w_t}\) is a parameter whose components represent the asymptotic charge of the epidemic at the multi-time \(\varvec{t}\). Hence, \(\varvec{w_t}\) is expected to be close to \(\varvec{\omega }^*\) when \(\varvec{t}\) is close to \(\varvec{\theta }^*\). Theorem 8 below states the asymptotic behavior of \(\left\{ \varvec{T}^n\right\} _{n\in \mathbb {N}\setminus \{0\}}\) around \(\varvec{\theta }^*\).
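To make the role of \(\varvec{\theta }^*\) concrete, the sketch below simulates a first-passage time for a single component. It assumes, as an illustrative reading and not as the paper's displayed definition, that \(T^n_j\) is the first rescaled time \(t\) at which \(W^j_{nt}\) exceeds \(a_n\omega ^*_j\). It further assumes a rate-1 Poisson process, so that \(a_n=n\), \(h_j(t)=t\) and \(\theta ^*_j=\omega ^*_j/\mu _j\), with exponential marks of mean \(\mu _j\). The function name is hypothetical.

```python
import random


def first_passage_time(n, omega_star, mu, seed=0):
    """First rescaled time t at which W_{nt} exceeds n * omega_star.

    Illustrative assumptions: rate-1 Poisson arrivals, exponential marks with
    mean mu > 0, normalising sequence a_n = n.
    """
    rng = random.Random(seed)
    threshold = n * omega_star
    clock, damage = 0.0, 0.0
    while damage <= threshold:
        clock += rng.expovariate(1.0)        # wait for the next case
        damage += rng.expovariate(1.0 / mu)  # add its random magnitude
    return clock / n


if __name__ == "__main__":
    # Under the stated assumptions, theta* = omega_star / mu = 1.5.
    print(first_passage_time(n=50000, omega_star=3.0, mu=2.0, seed=2))
```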

Theorem 8

Suppose, for each \(j=1,\dots ,p\), the following two conditions hold:

(i) for each \(t>0\), \(\left\{ \sqrt{a_n}\sup _{0\le s\le t}\left| \frac{1}{a_n}\Lambda ^j_{ns}-h_j(s)\right| \right\} _{n\in \mathbb {N}}\) converges in probability to zero, and

(ii) \(h_j\) is differentiable at \(\theta ^*_j\) with derivative \(h'_j(\theta ^*_j)>0\).

Then, \(\left\{ \varvec{T}^n\right\} _{n\in \mathbb {N}\setminus \{0\}}\) converges in probability to \(\varvec{\theta }^*\) and \(\left\{ \sqrt{a_n}(\varvec{T}^n-\varvec{\theta }^*)\right\} _{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to a normal random vector with mean zero and covariance matrix \(\varvec{\Psi }(\varvec{\theta }^*)=(\psi _{ij}(\varvec{\theta }^*);1\le i,j\le p)\), where

Corollary 9

Suppose, for each \(j=1,\dots ,p\), \(h_j\) is homogeneous with degree of homogeneity \(k>0\) and the following two conditions hold:

(i) for each \(t>0\), \(\{n^{-k/2}\sup _{0\le s\le t}|\Lambda ^j_{ns}-h_j(ns)|\}_{n\in \mathbb {N}}\) converges in probability to zero, and

(ii) \(h_j\) is differentiable at \(\theta ^*_j\), with derivative \(h'_j(\theta ^*_j)>0\).

Then, \(\left\{ \varvec{T}^n\right\} _{n\in \mathbb {N}\setminus \{0\}}\) converges in probability to \(\varvec{\theta }^*\) and \(\left\{ n^{k/2}(\varvec{T}^n-\varvec{\theta }^*)\right\} _{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to a normal random vector with mean zero and covariance matrix

Example

Suppose \(N^1,\dots ,N^p\) are asymptotically homogeneous processes with degree of homogeneity \(k>0\) and continuous and strictly positive intensities \(\lambda _1,\dots ,\lambda _p\), which are homogeneous with degree of homogeneity \(k>0\). In this case, \(\Lambda ^1,\dots ,\Lambda ^p\) are homogeneous with degree of homogeneity \(k+1\) and for each \(j=1,\dots ,p\) and \(t\ge 0\), \(\Lambda ^j(t)=h_j(t)\), where \(h_j(t)=\int _0^t\lambda _j(s)\mathrm {\,d}s\). Accordingly, conditions (i) and (ii) in Corollary 9 hold and consequently, \(\left\{ n^{(k+1)/2}(\varvec{T}^n-\varvec{\theta }^*)\right\} _{n\in \mathbb {N}}\) converges in distribution to a normal random vector with mean zero and covariance matrix

We are assuming that, for each pair \(i,j=1,\dots , p\) and \(t\ge 0\), \(\{\Lambda ^{(i,j)}_{nt}/a_n\}_{n\in \mathbb {N}}\) is uniformly integrable and \(\{\Lambda ^{(i,j)}_{n\cdot }/a_n\}_{n\in \mathbb {N}}\) converges uniformly in probability to \(h_{ij}\), on compact subsets of \(\mathbb {R}_+\). Even though the distribution of each \(N^i\), \(i=1,\dots ,p\), depends only on its intensity \(\lambda _i\), the functions \(h_{ij}\), \(1\le i<j\le p\), depend on the joint distribution of \(N^1,\dots ,N^p\). For instance, for \(N^1,\dots ,N^p\) without common jumps, we have

and, for \(N^1=\dots =N^p\), the covariance matrix is given by

where for each \(j=1,\dots ,p\) and \(t\ge 0\), \(\Lambda ^j(t)=h(t)=\int _0^t\lambda (s)\mathrm {\,d}s\).
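The degree of homogeneity \(k+1\) of the cumulative intensity in this example follows from the change of variables \(s=\alpha u\) in \(\int _0^{\alpha t}\lambda (s)\mathrm {\,d}s\). The sketch below checks this numerically for the hypothetical intensity \(\lambda (s)=3s^2\) (so \(k=2\)), using a midpoint rule; the intensity and all function names are illustrative choices, not taken from the paper.

```python
def integrate(f, a, b, steps=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / steps
    return width * sum(f(a + (i + 0.5) * width) for i in range(steps))


def cumulative_intensity(intensity, t):
    """h(t) = integral of the intensity over [0, t]."""
    return integrate(intensity, 0.0, t)


def homogeneity_ratio(h, alpha, t):
    """Ratio h(alpha * t) / h(t); equals alpha**d when h is homogeneous of degree d."""
    return h(alpha * t) / h(t)


if __name__ == "__main__":
    lam = lambda s: 3.0 * s ** 2  # homogeneous of degree k = 2
    h = lambda t: cumulative_intensity(lam, t)
    # Expected to be close to alpha**(k + 1) = 2**3.
    print(homogeneity_ratio(h, 2.0, 1.5))
```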

If, additionally, the random vectors \(\varvec{\xi }_k\), \(k\in \mathbb {N}\), are distributed as in Example 5, then \(\varvec{\Psi }(\varvec{\theta }^*)\) is a diagonal matrix, irrespective of whether \(N^1,\dots ,N^p\) are equal or they have no common jumps. Indeed, in any case we have

Both in Theorem 8 and Corollary 9, the asymptotic covariance matrix \(\varvec{\Psi }(\varvec{\theta }^*)\) depends on the parameter \(\varvec{\theta }^*\). This fact produces some difficulties when applying these results to statistical inference about the parameter \(\varvec{\theta }^*\). To avoid these complications, we denote by \(\varvec{L}(\varvec{\theta }^*)\) the matrix in the Cholesky decomposition of \(\varvec{\Psi }(\varvec{\theta }^*)\), i.e. \(\varvec{L}(\varvec{\theta }^*)\) is the unique lower triangular matrix with positive diagonal entries satisfying \(\varvec{\Psi }(\varvec{\theta }^*)=\varvec{L}(\varvec{\theta }^*)\varvec{L}(\varvec{\theta }^*)^\top \). Studentized versions of the above results are then stated as follows.

Corollary 10

Suppose, for each \(j=1,\dots ,p\), \(h_j\) is continuously differentiable at \(\theta ^*_j\) with derivative \(h'_j(\theta ^*_j)>0\). Then, the sequence \(\{\sqrt{a_n}\varvec{L}(\varvec{\theta ^*})^{-1}(\varvec{T}^n-\varvec{\theta ^*})\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to a normal random vector with mean zero and covariance matrix \(\varvec{\textrm{I}}_p\), the \(p\times p\)-identity matrix.

Corollary 11

Suppose, for each \(j=1,\dots ,p\), \(h_j\) is continuously differentiable at \(\theta ^*_j\) with derivative \(h'_j(\theta ^*_j)>0\). Then, the sequence \(\{\sqrt{a_n}\varvec{L}(\varvec{T}^n)^{-1}(\varvec{T}^n-\varvec{\theta ^*})\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to a normal random vector with mean zero and covariance matrix \(\varvec{\textrm{I}}_p\), the \(p\times p\)-identity matrix.

Corollary 12

Suppose, for each \(j=1,\dots ,p\), \(h_j\) is continuously differentiable at \(\theta ^*_j\) with derivative \(h'_j(\theta ^*_j)>0\). Then, \(\{a_n(\varvec{T}^n-\varvec{\theta ^*})^\top \varvec{\Psi }(\varvec{T}^n)^{-1}(\varvec{T}^n-\varvec{\theta ^*})\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to a chi-square random variable with p degrees of freedom.
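Corollaries 10 to 12 suggest a simple computational recipe: whiten the deviation \(\varvec{T}^n-\varvec{\theta }^*\) with the Cholesky factor and compare the squared norm with a chi-square quantile. The sketch below implements this in plain Python. The covariance matrix `psi`, the observed vector and the hypothesised value are made-up inputs for illustration, and the function names are hypothetical.

```python
import math


def cholesky(matrix):
    """Lower-triangular L with matrix = L L^T (symmetric positive definite input)."""
    p = len(matrix)
    L = [[0.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(matrix[i][i] - s)
            else:
                L[i][j] = (matrix[i][j] - s) / L[j][j]
    return L


def forward_solve(L, b):
    """Solve L y = b for a lower-triangular matrix L."""
    y = []
    for i, row in enumerate(L):
        y.append((b[i] - sum(row[k] * y[k] for k in range(i))) / row[i])
    return y


def wald_statistic(a_n, t_obs, theta0, psi):
    """a_n (T - theta0)^T psi^{-1} (T - theta0), computed through whitening."""
    diff = [t - th for t, th in zip(t_obs, theta0)]
    y = forward_solve(cholesky(psi), diff)  # y = L^{-1} (T - theta0)
    return a_n * sum(v * v for v in y)


if __name__ == "__main__":
    psi = [[4.0, 2.0], [2.0, 3.0]]      # hypothetical plug-in covariance
    stat = wald_statistic(100, [1.1, 2.0], [1.0, 2.0], psi)
    chi2_95_two_df = -2.0 * math.log(0.05)  # 95% quantile for 2 degrees of freedom
    print(stat, stat > chi2_95_two_df)
```

For \(p=2\) the chi-square quantile has the closed form used above; for other values of \(p\) a table or a statistical library would be needed.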

6 Proofs of results

As usual, for a stochastic process \(X:\mathbb {R}_+\times \Omega \rightarrow \mathbb {R}\) and \(t\ge 0\), \(X_t\) and \(\Delta X_t\) denote the random variables \(X(t,\cdot )\) and \(X_t-X_{t-}\), respectively, where \(X_{t-}=\lim _{s\uparrow t}X_s\), for each \(t>0\), and \(X_{0-}=0\).

Notice that, for each \(t>0\) and \(i,j=1,\dots ,p\),

and hence

Given a family \(\mathcal {C}\) of random variables, \(\sigma (\mathcal {C})\) denotes the \(\sigma \)-algebra generated by \(\mathcal {C}\). For any \(j=1,\dots ,p\) and \(m,n\in \mathbb {N}\), let \(\mathcal {C}^{j,m,n}_t=\{\xi ^j_0,\dots , \xi ^j_{m}\}\cup \mathcal {G}_{nt}\). A new filtration \(\mathbb {F}^n=\{\mathcal {F}^n_t\}_{t\ge 0}\), on \((\Omega ,\mathcal {F},\mathbb {P})\), is defined as

It is easy to see that indeed \(\mathbb {F}^n\) is a filtration on \((\Omega ,\mathcal {F},\mathbb {P})\) and that for each \(t>0\),

where \(\mathcal {C}^{j,m,n}_{t-}=\{\xi ^j_0,\dots , \xi ^j_{m}\}\cup \mathcal {G}_{nt-}\).

As usual, \(\textrm{D}(\mathbb {R}_+,\mathbb {R}^p)\) stands for the Skorohod space of all right-continuous functions from \(\mathbb {R}_+\) to \(\mathbb {R}^p\) having left-hand limits.

The above technical resources are used for proving the convergence in law of certain \((\mathbb {F}^n,\mathbb {P})\)-martingales associated to the cumulative damage of the epidemic.

Proof of Theorem 2

We start by proving that \(\varvec{U}^n\) and \(\varvec{V}^n\) are two p-variate square integrable \((\mathbb {F}^n,\mathbb {P})\)-martingales. Since \(\mathcal {G}_{nt}\subseteq \mathcal {F}^n_t\), it is clear that, for each \(n\in \mathbb {N}\), \(\varvec{U}^n\) and \(\varvec{V}^n\) are \(\mathbb {F}^n\)-adapted. For each \(m\in \mathbb {N}\), let \(M^{j,n}_{m}=\frac{1}{\sqrt{a_n}}\sum _{k=1}^m (\xi _k^j-\mu _j)\), \(t\ge 0\), and notice that

Analogously, since \(\{\Lambda ^{j}_{nt}/a_n\}_{n\in \mathbb {N}}\) is uniformly integrable and converges in probability, we have

On the other hand, for each \(s,t\in \mathbb {R}_+\) such that \(s\le t\) and \(A\in \mathcal {F}^n_s\), it is obtained

because, for each \(m,r\in \mathbb {N}\) such that \(m\le r\), \(A\cap \{N^j_{ns}=m\}\cap \{N^j_{nt}=r\}\in \mathcal {C}^{j,m,n}_t\) and

This proves that \(\varvec{U}^n\) is a p-variate \((\mathbb {F}^n,\mathbb {P})\)-square integrable martingale.

Let \(s,t\in \mathbb {R}_+\) such that \(s\le t\), \(j=1,\dots ,p\), and \(A\in \mathcal {F}^n_s\). By the independence of \(\{\varvec{\xi }_k\}_{k\in \mathbb {N}}\) and \(\{N^1,\dots , N^p\}\), for each \(m\in \mathbb {N}\), we have

and hence, by the dominated convergence theorem, we obtain

i.e. \(\{N^j_{nt}-\Lambda ^j_{nt}\}_{t\ge 0}\) is an \((\mathbb {F}^n,\mathbb {P})\)-martingale, and since for each \(t\ge 0\)

and \(\mathbb {E}((N^j_{nt}-\Lambda ^j_{nt})^2/a_n)=\mathbb {E}(\Lambda ^j_{nt}/a_n)<\infty \), we have \(\varvec{V}^n\) is a p-variate square integrable \((\mathbb {F}^n,\mathbb {P})\)-martingale.

For each pair \(i,j=1,\dots ,p\) and \(s\in \mathbb {R}_+\), we have

Let us prove that

Indeed, let \(A\in \mathcal {F}^n_{s-}\), \(u,v\in \mathbb {N}\setminus \{0\}\) such that \(u< v\) and

Notice that \(B\in \sigma (\mathcal {C}^{i,u-1,n}_{s-})\cap \sigma (\mathcal {C}^{j,v-1,n}_{s-})\subset \sigma (\mathcal {C}^{i,u,n}_{s})\) and

From this fact and (8), we have

because \(\xi ^j_{v}-\mu _j\) is independent of \(\sigma (\mathcal {C}^{i,u,n}_{s})\). Thus, (9) holds. This fact along with (8) implies that

and consequently, for each \(t\ge 0\),

Hence, by assumption, \(\{\langle U^{n,i},U^{n,j}\rangle \}_{n\in \mathbb {N}}\) converges uniformly in probability to \(\sigma _{ij}h_{ij}\), on compact subsets of \(\mathbb {R}_+\). On the other hand,

which implies that \(\{\sup _{0\le s\le t}|\Delta U^{n,i}_s|\}_{n\in \mathbb {N}}\) is uniformly integrable. Moreover, by the Kolmogorov inequality, for any \(\epsilon >0\) and \(t\ge 0\), we have

and thus \(\mathbb {P}\left( \sup _{0\le s\le t}|\Delta U^{n,i}_s|>\epsilon \right) \le \sigma ^2_{ii}\mathbb {E}(\Lambda ^i_{nt})/(a_n^2\epsilon ^2)\). Hence, \(\{\sup _{0\le s\le t}|\Delta U^{n,i}_s|\}_{n\in \mathbb {N}}\) converges in probability to zero. These facts together with Corollary 12 in Chapter II of Rebolledo (1979) imply that \(\{\varvec{U}^n\}_{n\in \mathbb {N}}\) converges in law to a continuous Gaussian p-variate martingale \(\varvec{U}\) starting at \(\varvec{0}\) with \(\langle \varvec{U}\rangle =\varvec{A}\).

From (7), since \(\{\varvec{\xi }_k\}_{k\in \mathbb {N}}\) and \(\mathcal {G}_{\infty }\) are independent, for each \(t\ge 0\), we have

and consequently \(\{\langle \varvec{V}^n\rangle \}_{n\in \mathbb {N}}\) converges uniformly in probability to \(\varvec{A}+\varvec{B}\). Arguing as for the jumps of \(U^{n,i}\), we obtain that the sequence \(\{\sup _{0\le s\le t}|\Delta V^{n,i}_s|\}_{n\in \mathbb {N}}\) is uniformly integrable and converges in probability to zero. By applying again Corollary 12 in Chapter II of Rebolledo (1979), we obtain that \(\{\varvec{V}^n\}_{n\in \mathbb {N}}\) converges in law to a continuous Gaussian p-variate martingale \(\varvec{V}\) starting at \(\varvec{0}\) with \(\langle \varvec{V}\rangle =\varvec{A}+\varvec{B}\). Therefore the proof is complete. \(\square \)
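The limiting variances in this proof can be checked by Monte Carlo in a simple special case. The sketch below assumes, as an illustrative reading of the martingales involved, that for one component \(U^n_t=(W_{nt}-\mu N_{nt})/\sqrt{a_n}\) and \(V^n_t=(W_{nt}-\mu \Lambda _{nt})/\sqrt{a_n}\), with a rate-1 Poisson process (\(a_n=n\), \(\Lambda _{nt}=nt\)) and exponential marks of mean \(\mu \). In that case the classical compound Poisson formulas give variances \(\textrm{Var}(\xi )\,t\) and \(\mathbb {E}(\xi ^2)\,t\), respectively. The function names are hypothetical.

```python
import random


def martingale_values(n, t, mu, rng):
    """One draw of (U, V) at time t under the illustrative assumptions above."""
    horizon = n * t
    clock = rng.expovariate(1.0)
    count, damage = 0, 0.0
    while clock <= horizon:
        count += 1
        damage += rng.expovariate(1.0 / mu)
        clock += rng.expovariate(1.0)
    scale = n ** 0.5
    u = (damage - mu * count) / scale    # centred with the counting process
    v = (damage - mu * horizon) / scale  # centred with the compensator
    return u, v


def sample_second_moments(n, t, mu, replications, seed=0):
    """Empirical second moments of U and V (both have mean zero)."""
    rng = random.Random(seed)
    draws = [martingale_values(n, t, mu, rng) for _ in range(replications)]
    var_u = sum(u * u for u, _ in draws) / replications
    var_v = sum(v * v for _, v in draws) / replications
    return var_u, var_v


if __name__ == "__main__":
    # With mu = 1 and t = 1: Var(xi) * t = 1 and E(xi^2) * t = 2.
    print(sample_second_moments(n=100, t=1.0, mu=1.0, replications=3000, seed=5))
```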

Remark

Let \(\varvec{Z}=(Z^1,\dots ,Z^p)^\top \), where \(Z^j_t=\sum _{k=0}^{N^j_t}\xi ^j_k-\mu _jN^j_t\), and suppose \(\varvec{Z}\) is a stationary Markovian process. Hence, for every measurable and bounded function \(f:\mathbb {R}^p\rightarrow \mathbb {R}\), we have

where \(M^f\) is a martingale with

\(\mathcal {L}\) is the infinitesimal generator of \(\varvec{Z}\), and \(g:\mathbb {R}^p\rightarrow \mathbb {R}\) is another measurable and bounded function. Let \(\pi _j:\mathbb {R}^p\rightarrow \mathbb {R}\) be defined as \(\pi _j(\varvec{z})=z_j\), where \(\varvec{z}=(z_1,\dots ,z_p)^\top \). We have \(\mathcal {L}\pi _j(\varvec{z})=0\) and \(\mathcal {L}(\pi _i\pi _j)(\varvec{z})=\lambda _{ij}(\varvec{z})\mathbb {E}((\xi ^i_1-\mu _i)(\xi ^j_1-\mu _j))\), where \(\Lambda _t^{(i,j)}=\int _0^t\lambda _{ij}(\varvec{Z}_s)\mathrm {\,d}s\). Consequently, \(\langle M^{\pi _i},M^{\pi _j}\rangle _t=\sigma _{ij}\Lambda _t^{(i,j)}\). Since \(Z^j=M^{\pi _j}\), from this, we directly obtain (10) and, since \(\{\varvec{\xi }_k\}_{k\in \mathbb {N}}\) and \(\mathcal {G}_{\infty }\) are independent, from (7), for each \(t\ge 0\), we have

Proof of Proposition 3

The function \(\pi _{\varvec{t}}:\textrm{D}(\mathbb {R}_+,\mathbb {R}^p)\rightarrow \mathbb {R}^p\) defined as

is continuous in \(\omega \), whenever \(\omega \) is continuous at \(t_1,\dots ,t_p\in \mathbb {R}_+\). In particular, \(\pi _{\varvec{t}}\) is continuous on \(\textrm{C}(\mathbb {R}_+,\mathbb {R}^p)\). This fact along with Theorem 2 imply that \(\{\pi _{\varvec{t}}(\varvec{U}^n)\}_{n\in \mathbb {N}}\) and \(\{\pi _{\varvec{t}}(\varvec{V}^n)\}_{n\in \mathbb {N}}\) converge in distribution to \(\pi _{\varvec{t}}(\varvec{U})\) and \(\pi _{\varvec{t}}(\varvec{V})\), respectively, where \(\varvec{U}\) and \(\varvec{V}\) are defined in Theorem 2. By defining \(\varvec{{\widetilde{U}}_{t}}=\pi _{\varvec{t}}(\varvec{U})\) and \(\varvec{{\widetilde{V}}_{t}}=\pi _{\varvec{t}}(\varvec{V})\), these p-variate random vectors have normal distribution with mean zero and covariance matrices \(\varvec{{\widetilde{\Sigma }}_U(t)}\) and \(\varvec{{\widetilde{\Sigma }}_V(t)}\) defined by (4). Thus, the proof is complete. \(\square \)

Proof of Corollary 4

In this proof, we use notations of Proposition 3. Let \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p_+\) and \(\varvec{\varphi }:\mathbb {R}^p\times (\mathbb {R}\setminus \{0\})^p\rightarrow \mathbb {R}^p\) be defined as \(\varvec{\varphi }(\varvec{u},\varvec{\eta })=(u_1/\eta _1,\dots ,u_p/\eta _p)^\top \), where \(\varvec{\eta }=(\eta _1,\dots ,\eta _p)^\top \) and \(\varvec{u}=(u_1,\dots ,u_p)^\top \). We have \(\sqrt{a_n}(\varvec{{\widehat{\mu }}}^n_{\varvec{t}}-\varvec{\mu })=\varvec{\varphi }(a_n\varvec{{\widetilde{U}}}^n_{\varvec{t}},\varvec{N}^n_{\varvec{t}})\) and \(\sqrt{a_n}(\varvec{{\widehat{\nu }}}^n_{\varvec{t}}-\varvec{\mu })=\varvec{\varphi }(a_n\varvec{{\widetilde{V}}}^n_{\varvec{t}},\varvec{\Lambda }^n_{\varvec{t}})\). Moreover, by Proposition 3 and the continuity of \(\varvec{\varphi }\), \(\{\varvec{\varphi }(a_n\varvec{{\widetilde{U}}}^n_{\varvec{t}},\varvec{N}^n_{\varvec{t}})\}_{n\in \mathbb {N}\setminus \{0\}}\) and \(\{\varvec{\varphi }(a_n\varvec{{\widetilde{V}}}^n_{\varvec{t}},\varvec{\Lambda }^n_{\varvec{t}})\}_{n\in \mathbb {N}\setminus \{0\}}\) converge in distribution to \(\varvec{\varphi }(\varvec{{\widetilde{U}}}_{\varvec{t}},\varvec{h}(\varvec{t}))\) and \(\varvec{\varphi }(\varvec{{\widetilde{V}}}_{\varvec{t}},\varvec{h}(\varvec{t}))\), respectively, where \(\varvec{h}(\varvec{t})=(h_1(t_1),\dots ,h_p(t_p))^\top \). Since \(\varvec{\varphi }(\varvec{{\widetilde{U}}}_{\varvec{t}},\varvec{h}(\varvec{t}))\) and \(\varvec{\varphi }(\varvec{{\widetilde{V}}}_{\varvec{t}},\varvec{h}(\varvec{t}))\) are random vectors having normal distribution with mean \(\varvec{0}\) and covariance matrices \(\varvec{D}^{-1}\varvec{{\widetilde{\Sigma }}_U(t)}\varvec{D}^{-1}\) and \(\varvec{D}^{-1}\varvec{{\widetilde{\Sigma }}_V(t)}\varvec{D}^{-1}\), respectively, the proof is complete. \(\square \)

Proof of Proposition 5

Let \(\varvec{t}=(t_1,\dots ,t_p)^\top \in \mathbb {R}^p_+\). With notations stated in Proposition 3, we have

Hence, this proposition follows from Proposition 3 and the convergence in probability of \(\{\Lambda ^j_{nt_j}/a_n\}_{n\in \mathbb {N}}\) to \(h_j(t_j)\), for each \(j=1,\dots ,p\). \(\square \)

Proof of Theorem 6

Let \(L^n_{\varvec{t}}:\mathbb {R}^p\rightarrow [0,\infty ]\) be defined as \(L^n_{\varvec{t}}(\varvec{\theta })=\log \mathbb {E}(\mathop {\textrm{e}^{(1/a_n)\varvec{\theta }^\top \varvec{W}^n_{\varvec{t}}}})\) and for each \(\varvec{t}\in \mathbb {R}^p_+\), let \(\sigma =\sigma _{\varvec{t}}:\{0,1,\dots ,p\}\rightarrow \{0,1,\dots ,p\}\) be the permutation satisfying \(\sigma (0)=0\) and \(0=t_{\sigma (0)}\le t_{\sigma (1)}\le \dots \le t_{\sigma (p)}\). Consequently, for each \(j=1,\dots ,p\), the random variables \(N^{\sigma (j)}_{nt_{\sigma (1)}},N^{\sigma (j)}_{nt_{\sigma (2)}}-N^{\sigma (j)}_{nt_{\sigma (1)}},\dots ,N^{\sigma (j)}_{nt_{\sigma (j)}}-N^{\sigma (j)}_{nt_{\sigma (j-1)}}\) are independent.

Since

we have

By applying in (11), first, \(\mathbb {E}(\cdot |N^1,\dots ,N^p)\), second, \(\mathbb {E}\), and then \(\log \), we obtain

and accordingly

Consequently,

Since \(\mathcal {D}=\{\varvec{\theta }\in \mathbb {R}^p:L_{\varvec{t}}(\varvec{\theta })<\infty \}=\mathbb {R}^p\) and, by dominated convergence, \(L_{\varvec{t}}\) is differentiable in \(\mathcal {D}\), the Gärtner-Ellis theorem (see Dembo and Zeitouni 1998, for instance) implies that \(L^*_{\varvec{t}}\), given by (5), is a good rate function and \(\{(1/a_n)\varvec{W}^n_{\varvec{t}}\}_{n\in \mathbb {N}}\) obeys an LDP with the rate function \(L^*_{\varvec{t}}\). This concludes the proof. \(\square \)

Proof of Corollary 7

From Theorem 6, we have

because the nonnegative function \(L^*_{\varvec{t}}\) has a minimum at \(\varvec{x}= \varvec{w}_{\varvec{t}}\) (\(L^*_{\varvec{t}}(\varvec{w}_{\varvec{t}})=0\)) and it is increasing on the set \(\{\varvec{x}\in \mathbb {R}^p: \varvec{x}\succeq \varvec{w}_{\varvec{t}}\}\). On the other hand,

Therefore, condition (6) holds and the proof is complete. \(\square \)

Proof of Theorem 8

Let \(\varvec{t}{=}(t_1,\dots ,t_p)^\top {\in }\mathbb {R}_{+}^p\). From Proposition 5, \(\{(1/a_n)\varvec{W}^n_{\varvec{t}}\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in probability to \(\varvec{w_t}\) and hence

But \(\varvec{w_t}\succ \varvec{\omega ^*}\) if and only if \(\varvec{t}\succ \varvec{\theta }^*\). Consequently, \(\{\varvec{T}^n\}_{n\in \mathbb {N}}\) converges in distribution, and hence in probability, to \(\varvec{\theta ^*}\).

Moreover,

and since, for each \(j=1,\dots ,p\),

for each \(\delta >0\), we have

Hence, by using notations stated in Proposition 3, it is obtained that

where \(\Vert {\cdot }\Vert \) stands for the Euclidean norm in \(\mathbb {R}^p\). On the other hand, for each \(j=1,\dots ,p\), we have

and hence \(\lim _{n\rightarrow \infty }\sqrt{a_n}\mu _j\left( h_j(\theta _j^*)-h_j(\theta _j^*+t/\sqrt{a_n})\right) =-\mu _jth_j'(\theta ^*_j)\). This fact along with (12) and (13) implies that

Accordingly, by Proposition 3, we have

where \(\varvec{D} =\varvec{\textrm{Diag}}(\mu _1h_1'(\theta ^*_1),\dots ,\mu _ph_p'(\theta ^*_p))\). Since \(\varvec{D}^{-1}\varvec{{\widetilde{V}}}_{\varvec{\theta }^*}\) has multivariate normal distribution with mean zero and covariance matrix \(\varvec{\Psi }(\varvec{\theta }^*)\), the proof is complete. \(\square \)

Proof of Corollary 11

Let \(\varvec{Y}^n=\sqrt{a_n}\varvec{L}(\varvec{T}^n)^{-1}(\varvec{T}^n-\varvec{\theta ^*})\) and \(\varvec{Z}^n=\sqrt{a_n}\varvec{L}(\varvec{\theta }^*)^{-1}(\varvec{T}^n-\varvec{\theta ^*})\). From Corollary 10, \(\{\varvec{Z}^n\}_{n\in \mathbb {N}}\) converges in distribution to a normal random vector \(\varvec{Z}\), with mean zero and covariance matrix \(\varvec{\textrm{I}}_p\). Since \(\{\varvec{T}^n\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in probability to \(\varvec{\theta }^*\) and, by assumption, \(\varvec{L}(\cdot )^{-1}\) is continuous, it follows from the Slutsky theorem that \(\{\varvec{Y}^n\}_{n\in \mathbb {N}\setminus \{0\}}\) and \(\{\varvec{Z}^n\}_{n\in \mathbb {N}\setminus \{0\}}\) have the same asymptotic distribution. Therefore, \(\{\varvec{Y}^n\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to \(\varvec{Z}\) and the proof is complete. \(\square \)

Proof of Theorem 1

For each \(u=1,\dots ,r\) and \(m\in J_u\), let

\(\varvec{X}^n(u)=(X^n_m(u);m\in J_u)\), and

Hence, under \(\mathcal {H}_n\), for each \(u=1,\dots ,r\), and \(j\in J_u\), we have

and consequently,

where \(\varvec{\textrm{I}}_p\) stands for the \(p\times p\)-identity matrix, \(\Vert {\cdot }\Vert \) denotes the Euclidean norm in \(\mathbb {R}^p\) and

is the random matrix defined, for \(u=1,\dots ,r\), as \(\varvec{C}^n(u)=(c^n_{jm}(u);j,m\in J_u)\) with

Thus, from (3) and (14), we have \(-2\log \mathcal {R}_n\asymp \Vert {(\varvec{\textrm{I}}_p-\varvec{C}^n)\varvec{X}^n}\Vert ^2\). On the other hand, Corollary 4 and Slutsky’s theorem imply that, under \(\{\mathcal {H}_n\}_{n\in \mathbb {N}}\), \(\{\varvec{X}^n\}_{n\in \mathbb {N}}\) converges in distribution to a normal random vector \(\varvec{X}\) with mean \(\varvec{\mu }_{\varvec{X}}\) and covariance matrix \(\varvec{\Sigma }_{\varvec{X}}=\varvec{\textrm{I}}_p\), where

with

Moreover, under \(\{\mathcal {H}_n\}_{n\in \mathbb {N}}\), \(\{\varvec{C}^n\}_{n\in \mathbb {N}}\) converges in probability to

where, for \(u=1,\dots ,r\), \(\varvec{C}(u)=(c_{ij}(u);i,j\in J_u)\) is given by \(c_{ij}(u)=\sqrt{\pi _i(u)\pi _j(u)}\).

Accordingly, \(\{-2\log \mathcal {R}_n\}_{n\in \mathbb {N}\setminus \{0\}}\) converges in distribution to \(\Vert {(\varvec{\textrm{I}}_p-\varvec{C})\varvec{X}}\Vert ^2\) and, since each \(\varvec{C}(u)\) (\(u=1,\dots ,r\)) is an idempotent and symmetric matrix with rank 1, we have \(\varvec{\textrm{I}}_p-\varvec{C}\) is idempotent with rank \(p-r\). Moreover, we have

and hence, by the theorem in Sect. 3.5 of Serfling (1980), \(\Vert {(\varvec{\textrm{I}}_p-\varvec{C})\varvec{X}}\Vert ^2\) has a non-central \(\chi ^2\)-distribution with \(p-r\) degrees of freedom and non-centrality parameter \(\varvec{\mu }_{\varvec{X}}^\top (\varvec{\textrm{I}}_p-\varvec{C})\varvec{\mu }_{\varvec{X}}\). But \( \varvec{\mu }_{\varvec{X}}^\top (\varvec{\textrm{I}}_p-\varvec{C})\varvec{\mu }_{\varvec{X}}=\Vert {\varvec{\mu }_{\varvec{X}}}\Vert ^2-\Vert {\varvec{C} \varvec{\mu }_{\varvec{X}}}\Vert ^2\), and therefore,

which concludes the proof. \(\square \)
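The algebraic fact used in this proof, that each block \(\varvec{C}(u)\) with entries \(\sqrt{\pi _i(u)\pi _j(u)}\) is symmetric, idempotent and of rank 1, can be verified numerically. The sketch below assumes that the weights \(\pi _i(u)\) within a block sum to one, which is what idempotency requires; the weights and function names are hypothetical illustrations.

```python
import math


def block_matrix(weights):
    """Matrix with entries sqrt(w_i * w_j), i.e. the outer product r r^T with r_i = sqrt(w_i)."""
    root = [math.sqrt(w) for w in weights]
    return [[a * b for b in root] for a in root]


def matmul(a, b):
    """Plain-Python product of two square matrices of equal size."""
    size = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(size)) for j in range(size)]
            for i in range(size)]


def max_abs_diff(a, b):
    """Largest absolute entrywise difference between two matrices."""
    return max(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


if __name__ == "__main__":
    weights = [0.2, 0.3, 0.5]  # hypothetical block weights summing to one
    C = block_matrix(weights)
    print(max_abs_diff(matmul(C, C), C))     # near zero: C is idempotent
    print(sum(C[i][i] for i in range(3)))    # trace, equal to the rank of an idempotent matrix
```

Since the trace of an idempotent matrix equals its rank, a trace of 1 for every block is consistent with \(\varvec{\textrm{I}}_p-\varvec{C}\) having rank \(p-r\).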

7 Conclusions and possible future work

A continuous-time stochastic model has been proposed and studied for the damage caused by a multi-type epidemic or pandemic, when the events occur at random times and the magnitude of each of these events, or infections in case the epidemic is infectious, is also random. Central limit theorems are stated for two sequences of martingales, which allow knowing the asymptotic distribution of the cumulative damage of the epidemic at any multi-time. Thresholds for the components of the epidemic model are stated and the asymptotic distribution of the multivariate stopping time, when the damage of each component attains the corresponding threshold, is studied.

The importance of this work is validated by means of an application to infectious diseases. In particular, the asymptotic results of this work are applied in studying the possible homogeneity of the infection in the population, for the Covid-19 pandemic in Chile. However, we think our results apply to other types of problems caused by epidemics, such as the insufficient number of highly complex hospital beds available for the attention of seriously ill patients. We hope to investigate this topic in future work.

Data availability statement

All data generated or analysed during this study are included in this published article.

References

Bacry E, Delattre S, Hoffmann M, Muzy JF (2013) Some limit theorems for Hawkes processes and application to financial statistics. Stoch Process Appl 123(7):2475–2499

Ball F, Neal P (2022) An epidemic model with short-lived mixing groups. J Math Biol 85:63

Bolzoni L, Bonacini E, Marca RD, Groppi M (2019) Optimal control of epidemic size and duration with limited resources. Math Biosci 315:108232

Brémaud P (1981) Point Processes and Queues, Martingale Dynamics. Springer, New York

Chiang WH, Liu X, Mohler G (2022) Hawkes process modeling of Covid-19 with mobility leading indicators and spatial covariates. Int J Forecast 38:505–520

Cohen F (1987) Computer viruses: theory and experiments. Comput Secur 6(1):22–35

Dembo A, Zeitouni O (1998) Large deviations techniques and applications. Springer, New York

Fierro R (2015) The Hawkes process with different exciting functions and its asymptotic behavior. J Appl Probab 52(1):37–54

Fierro R, Leiva V, Maidana JP (2018) Cumulative damage and times of occurrence for a multicomponent system: A discrete time approach. J Multivar Anal 168:323–333

Gasperoni F, Ieva F, Barbati G, Scangetto A, Iorio A, Sinagra G et al (2017) Multi-state modelling of heart failure care path: a population based investigation from Italy. PLoS ONE 12(6):1–15

Hacohen A, Cohen R, Efroni A, Bachelet I, Barzel B (2022) Distribution equality as an optimal epidemic mitigation strategy. Sci Rep 12:10430

Hananel R, Fishman R, Malovicki-Yaffe N (2022) Urban diversity and epidemic resilience: the case of the COVID-19. Cities 122:103526

He Z, Jiang Y, Chakraborti R, Berry TD (2022) The impact of national culture on COVID-19 pandemic outcomes. Int J Soc Econ 49(3):313–335

Hethcote HW, Van Ark JW (1987) Epidemiological models for heterogeneous populations: proportionate mixing, parameter estimation, and immunization programs. Math Biosci 84(1):85–118

Holbrook AJ, Ji X, Suchard MA (2022) From viral evolution to spatial contagion: a biologically modulated Hawkes model. Phylogenetics 38(7):1846–1856

Hollinghurst J, North L, Emmerson Ch, Akbari A, Torabi F, Williams Ch, Lyons RA, Hawkes AG, Bennett E, Gravenor MB, Fry R (2022) Intensity of Covid-19 in care homes following hospital discharge in the early stages of the UK epidemic. Age Ageing 51:403–408

Karr AF (1991) Point processes and their statistical inference. Marcel Dekker, New York

Kassay G, Rădulescu V (2019) Equilibrium problems and applications. Academic Press, London

Kermack WO (1927) A contribution to the mathematical theory of epidemics. Proceedings of the Royal Society of London. Ser A, Contain Papers Math Phys Character 115(772):700–721

Liu Y, Liao Ch, Zhuo L, Tao H (2022) Evaluating effects of dynamic interventions to control COVID-19 pandemic: a case study of Guangdong, China. Int J Environ Res Public Health 19:10154

Mancini L, Paganoni AM (2019) Marked point process models for the admissions of heart failure patients. Stat Anal Data Min 28(6):125–135

Mazzali C, Paganoni AM, Ieva F, Masella C, Maistrello M, Agostoni O, Scalvini S, Frigerio M (2016) Methodological issues on the use of administrative data in healthcare research: the case of heart failure hospitalizations in Lombardy region, 2000–2012. BMC Health Serv Res 16(234):10

Molkenthin Ch, Donner Ch, Reich S, Zöller G, Hainzl S, Holschneider M, Opper M (2022) GP-ETAS: semiparametric Bayesian inference for the Spatio-temporal epidemic type aftershock sequence model. Stat Comput 32:29

Ogata Y (1988) Statistical models for earthquake occurrences and residual analysis for point processes. J Am Stat Assoc 83(401):9–27

Ogata Y, Zhuang J (2006) Space-time ETAS models and an improved extension. Tectonophysics 413(401):13–23

Rebolledo R (1979) La méthode des martingales appliquée à l’étude de la convergence en loi de processus. Mémoires de la Société Mathématique de France 62(2):1–125

Serfling RJ (1980) Approximation theorems of mathematical statistics. Wiley, New York

Shang Y (2013) Optimal control strategies for virus spreading in inhomogeneous epidemic dynamics. Can Math Bull 56(13):621–629

Sun Y, Baricz A, Zhou S (2010) On the monotonicity, log-concavity, and tight bounds of the generalized Marcum and Nuttall-functions. IEEE Trans Inf Theory 56(3):1166–1186

Tsiotas D, Tselios V (2022) Understanding the uneven spread of COVID-19 in the context of the global interconnected economy. Sci Rep 12:666

Weera W, Botmart T, La-inchua T, Sabir Z, Sandoval R, Abukhaled M, García J (2023) A stochastic computational scheme for the computer epidemic virus with delay effects. Mathematics 8(1):148–163

Yildirim DC, Esen O, Ertuğrul HM (2022) Impact of the COVID-19 pandemic on return and risk transmission between oil and precious metals: evidence from DCC-GARCH model. Resour Policy 79:102939

Zachreson C, Chang S, Harding N, Prokopenko M (2022) The effects of local homogeneity assumptions in metapopulation models of infectious disease. Royal Soc Open Sci 9(211919):277–295

Acknowledgements

We are very grateful to an anonymous referee, whose valuable suggestions allowed us to improve the content of this paper. This research was partially supported by the Chilean Council for Scientific and Technological Research, grant FONDECYT 1200525.

Ethics declarations

Conflict of interest

The author declares no conflict of interest.

About this article

Cite this article

Fierro, R. Cumulative damage for multi-type epidemics and an application to infectious diseases. J. Math. Biol. 86, 47 (2023). https://doi.org/10.1007/s00285-023-01880-1
