Abstract

This work studies the application of fixed point theory to the stability analysis of complex neural networks. We employ the contraction mapping principle to investigate the stability of impulsive cellular neural networks with time-varying delays. Novel and concise sufficient conditions are presented that ensure, simultaneously, the existence and uniqueness of a solution and the global exponential stability of the considered system. These conditions are easily checked and do not require the differentiability of delays.


1 Introduction

Cellular neural networks (CNNs), proposed by Chua and Yang in 1988 [1, 2], have attracted wide attention owing to their numerous successful applications in fields such as optimization, linear and nonlinear programming, associative memory, pattern recognition and computer vision.

Due to the finite switching speed of neurons and amplifiers in the implementation of neural networks, time delays cannot be neglected; the model of delayed cellular neural networks (DCNNs) was therefore put forward and is more realistic. Besides delay effects, stochastic, impulsive and diffusion effects are also likely to exist in neural networks. Accordingly, there is growing interest in the dynamic behavior of complex CNNs such as impulsive delayed reaction-diffusion CNNs and stochastic delayed reaction-diffusion CNNs, and many results have been obtained [3–9].

Surveying the reported results on complex CNNs, we find that the existing approach to stability analysis is mainly based on Lyapunov theory. However, there are still many difficulties in applying this theory to specific problems [10–16]. It is therefore necessary to seek new techniques to overcome those difficulties.

It is encouraging that in recent years Burton and other authors have applied fixed point theory to investigate the stability of deterministic systems and obtained more applicable results; see, for example, the monograph [17] and the papers [18–29]. More recently, a few papers have employed fixed point theory to deal with the stability of stochastic (delayed) differential equations; see [10–16, 30]. In particular, in [11–13], Luo used fixed point theory to study the exponential stability of mild solutions for stochastic partial differential equations with bounded delays and with infinite delays. In [14, 15], fixed point theory is used to investigate the asymptotic stability in the p th moment of mild solutions to nonlinear impulsive stochastic partial differential equations with bounded delays and with infinite delays. In [16], the exponential stability of stochastic Volterra-Levin equations is studied based on fixed point theory. As is well known, although Lyapunov functions play an important role in Lyapunov stability theory, it is not easy to find appropriate ones. This difficulty can be avoided by applying fixed point theory. By means of fixed point theory, refs. [11–16] require no Lyapunov functions for stability analysis, and the delay terms need not be differentiable.

Naturally, for complex CNNs, which have great application value, we ask whether fixed point theory can be used to investigate stability, not just the existence and uniqueness of a solution. With this motivation, in the present paper, we discuss the stability of impulsive CNNs with time-varying delays via fixed point theory. It is worth noting that our approach is the contraction mapping principle, which differs from the usual Lyapunov method. We use the fixed point theorem to prove the existence and uniqueness of a solution and the global exponential stability of the considered system all at once. Some new and concise algebraic criteria are provided; moreover, these conditions are easy to verify and do not require even the differentiability of delays, let alone the monotone decreasing behavior of delays.

2 Preliminaries

Let $R^n$ denote the n-dimensional Euclidean space and $\|\cdot\|$ represent the Euclidean norm. $\mathcal{N} \triangleq \{1, 2, \ldots, n\}$, $R_+ = [0, \infty)$, and $C[X, Y]$ corresponds to the space of continuous mappings from the topological space X to the topological space Y.

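In this paper, we consider the following impulsive cellular neural network with time-varying delays:

$$\frac{dx_i(t)}{dt} = -a_i x_i(t) + \sum_{j=1}^{n} b_{ij} f_j(x_j(t)) + \sum_{j=1}^{n} c_{ij} g_j(x_j(t - \tau_j(t))), \quad t \ge 0,\ t \ne t_k, \tag{1}$$

$$\Delta x_i(t_k) = x_i(t_k + 0) - x_i(t_k) = I_{ik}(x_i(t_k)), \quad k = 1, 2, \ldots, \tag{2}$$

where $i = 1, 2, \ldots, n$ and n is the number of neurons in the neural network. $x_i(t)$ corresponds to the state of the i th neuron at time t. $f_j(\cdot), g_j(\cdot) \in C[R, R]$; $f_j(x_j(t))$ is the activation function of the j th neuron at time t and $g_j(x_j(t - \tau_j(t)))$ represents the activation function of the j th neuron at time $t - \tau_j(t)$, where $\tau_j(t)$ corresponds to the transmission delay along the axon of the j th neuron and satisfies $0 \le \tau_j(t) \le \tau$ (τ is a constant). The constant $b_{ij}$ represents the connection weight of the j th neuron on the i th neuron at time t. The constant $c_{ij}$ denotes the connection strength of the j th neuron on the i th neuron at time $t - \tau_j(t)$. The constant $a_i > 0$ represents the rate with which the i th neuron will reset its potential to the resting state when disconnected from the network and external inputs. The fixed impulsive moments $t_k$ ($k = 1, 2, \ldots$) satisfy $0 = t_0 < t_1 < t_2 < \cdots$, and $\lim_{k \to \infty} t_k = \infty$. $x_i(t_k + 0)$ and $x_i(t_k - 0)$ stand for the right-hand and left-hand limit of $x_i(t)$ at time $t_k$, respectively. $I_{ik}(x_i(t_k))$ shows the abrupt change of $x_i(t)$ at the impulsive moment $t_k$ and $I_{ik}(\cdot) \in C[R, R]$.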
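To make the roles of the decay rates, connection weights, delays and impulses concrete, the following is a minimal forward-Euler sketch of a two-neuron network of the kind described above. Everything in it is an assumption made for illustration: the function name `simulate_impulsive_dcnn`, the parameter values, the `tanh` activations, the arctan-type jumps and the step size are hypothetical and are not taken from the paper. The delays are deliberately chosen to be non-differentiable in t.

```python
import numpy as np

def simulate_impulsive_dcnn(a, B, C, f, g, tau, jump, t_imp, phi,
                            tau_max, t_end, dt=1e-3):
    """Forward-Euler sketch (hypothetical helper) for
    x_i' = -a_i x_i + sum_j b_ij f(x_j(t)) + sum_j c_ij g(x_j(t - tau_j(t)))
    with jumps x(t_k+) = x(t_k) + jump(x(t_k)) at the times in t_imp."""
    m = int(round(tau_max / dt))        # number of history steps on [-tau_max, 0]
    n_steps = int(round(t_end / dt))    # number of Euler steps on [0, t_end]
    n = len(a)
    x = np.zeros((m + n_steps + 1, n))
    for s in range(m + 1):              # fill in the initial function phi
        x[s] = phi((s - m) * dt)
    imp_idx = {int(round(tk / dt)) for tk in t_imp}
    cols = np.arange(n)
    for k in range(n_steps):
        idx = m + k                     # row holding x(t), with t = k * dt
        t = k * dt
        lag = np.clip(np.rint(np.asarray(tau(t)) / dt).astype(int), 0, m)
        x_del = x[idx - lag, cols]      # x_j(t - tau_j(t)) for each j
        dx = -a * x[idx] + B @ f(x[idx]) + C @ g(x_del)
        x_new = x[idx] + dt * dx
        if (k + 1) in imp_idx:          # the stored sample at t_k is the post-jump value
            x_new = x_new + jump(x_new)
        x[idx + 1] = x_new
    t_grid = (np.arange(m + n_steps + 1) - m) * dt
    return t_grid, x

# Hypothetical parameters, chosen only so that the decay is easy to see.
a = np.array([5.0, 5.0])
B = np.array([[0.2, -0.1], [0.1, 0.2]])
C = np.array([[0.1, 0.2], [-0.2, 0.1]])
tau = lambda t: np.array([0.4 + 0.1 * abs(np.sin(t)), 0.3 + 0.2 * abs(np.cos(t))])
phi = lambda s: np.array([np.cos(s), -0.5])
jump = lambda v: 0.2 * np.arctan(v)
t_imp = 0.5 * np.arange(1, 21)

t_grid, x = simulate_impulsive_dcnn(a, B, C, np.tanh, np.tanh, tau, jump,
                                    t_imp, phi, tau_max=0.5, t_end=10.0)
print(np.abs(x[-1]).max())
```

A simulation of this kind only illustrates decay for one parameter set and one initial function; it does not replace the analytical criteria derived in the paper.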

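Throughout this paper, we always assume that $f_j(0) = g_j(0) = I_{jk}(0) = 0$ for $j = 1, 2, \ldots, n$ and $k = 1, 2, \ldots$. Denote by $x(t) = x(t; s, \varphi)$ the solution to Eqs. (1)-(2) with the initial condition

$$x_i(s) = \varphi_i(s), \quad -\tau \le s \le 0,\ i = 1, 2, \ldots, n, \tag{3}$$

where $\varphi(s) = (\varphi_1(s), \ldots, \varphi_n(s))^T$ and $\varphi_i \in C[[-\tau, 0], R]$.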

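The solution $x(t)$ of Eqs. (1)-(3) is, for the time variable t, a piecewise continuous vector-valued function with discontinuities of the first kind at the points $t_k$ ($k = 1, 2, \ldots$), where it is left-continuous, i.e., the following relations are valid:

$$x_i(t_k - 0) = x_i(t_k), \qquad x_i(t_k + 0) = x_i(t_k) + I_{ik}(x_i(t_k)), \quad i = 1, 2, \ldots, n,\ k = 1, 2, \ldots.$$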

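Definition 2.1 Equations (1)-(2) are said to be globally exponentially stable if for any initial condition $\varphi \in C[[-\tau, 0], R^n]$, there exists a pair of positive constants λ and M such that

$$\|x(t; s, \varphi)\| \le M e^{-\lambda t} \quad \text{for all } t \ge 0.$$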

The consideration of this paper is based on the following fixed point theorem.

Theorem 2.1 [31]

Let ϒ be a contraction operator on a complete metric space Θ. Then there exists a unique point $\zeta \in \Theta$ for which $\Upsilon(\zeta) = \zeta$.
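The theorem is constructive: iterating the operator from any starting point converges to the unique fixed point. The sketch below illustrates this on the real line with the map x ↦ 0.5 cos x, which is a contraction with constant 0.5 because its derivative is bounded by 0.5 in absolute value. The helper name `fixed_point` and the choice of map are illustrative assumptions and are unrelated to the operator π constructed later in the paper.

```python
import math

def fixed_point(T, x0, tol=1e-12, max_iter=10_000):
    """Picard iteration x_{m+1} = T(x_m); stops when successive iterates agree to tol."""
    x = x0
    for _ in range(max_iter):
        x_next = T(x)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("iteration did not converge within max_iter steps")

# A contraction on R with constant 0.5 (illustrative choice).
T = lambda x: 0.5 * math.cos(x)

x_a = fixed_point(T, -3.0)   # two different starting points ...
x_b = fixed_point(T, 10.0)   # ... reach the same fixed point
print(x_a, x_b)
```

Starting from two different points and obtaining the same limit reflects the uniqueness part of the theorem; the geometric convergence rate is governed by the contraction constant.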

3 Main results

In this section, we investigate the existence and uniqueness of a solution to Eqs. (1)-(3) and the global exponential stability of Eqs. (1)-(2) by means of the contraction mapping principle. Before proceeding, we introduce some assumptions listed as follows:

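(A1) There exist nonnegative constants $l_j$ such that for any $\eta, \upsilon \in R$,

$$|f_j(\eta) - f_j(\upsilon)| \le l_j |\eta - \upsilon|, \quad j = 1, 2, \ldots, n.$$

(A2) There exist nonnegative constants $k_j$ such that for any $\eta, \upsilon \in R$,

$$|g_j(\eta) - g_j(\upsilon)| \le k_j |\eta - \upsilon|, \quad j = 1, 2, \ldots, n.$$

(A3) There exist nonnegative constants $p_{jk}$ such that for any $\eta, \upsilon \in R$,

$$|I_{jk}(\eta) - I_{jk}(\upsilon)| \le p_{jk} |\eta - \upsilon|, \quad j = 1, 2, \ldots, n,\ k = 1, 2, \ldots.$$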

Let , and let () be the space consisting of functions , where satisfies:

(1) is continuous on ();

(2) and exist; furthermore, for ;

(3) on ;

(4) as , where α is a positive constant and satisfies ,

here () and () are defined as shown in Section 2. Also, ℋ is a complete metric space when it is equipped with a metric defined by

where and .

In what follows, we give the main result of this paper.

Theorem 3.1 Assume that the conditions (A1)-(A3) hold. Provided that

(i) there exists a constant μ such that ,

(ii) there exist constants such that for and ,

(iii) ,

then Eqs. (1)-(2) are globally exponentially stable.

Proof The proof is based on the contraction mapping principle and is divided into three steps.

Step 1. We first determine the mapping. Multiplying both sides of Eq. (1) by gives, for and ,

which yields, after integrating from () to (),

Letting in (4), we have, for (),

Setting () in (5), we get

which generates by letting

Noting , (6) can be rearranged as

Combining (5) and (7), we derive that

is true for (). Further,

holds for (). Hence,

which produces, for ,

Noting in (8), we define the following operator π acting on ℋ for :

where () obeys the rule as follows:

on and on .
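The integrating-factor computation behind Step 1 can be sketched as follows. The display is a hedged illustration and not a reproduction of the paper's Eqs. (4)-(9): it assumes the standard form of an impulsive delayed network written in its first lines, and the symbols $a_i$, $b_{ij}$, $c_{ij}$, $f_j$, $g_j$, $\tau_j$, $I_{ik}$ and $\varphi_i$ are assumed names for the decay rates, weights, activations, delays, jump functions and initial data. The paper's own operator may group the impulsive terms differently.

```latex
% Assumed model (standard form; not recoverable verbatim from the text):
\begin{align*}
\frac{dx_i(t)}{dt} &= -a_i x_i(t) + \sum_{j=1}^{n} b_{ij} f_j\bigl(x_j(t)\bigr)
   + \sum_{j=1}^{n} c_{ij} g_j\bigl(x_j(t-\tau_j(t))\bigr), \quad t \ne t_k, \\
x_i(t_k+0) - x_i(t_k) &= I_{ik}\bigl(x_i(t_k)\bigr), \quad k = 1, 2, \ldots.
\end{align*}

% Multiply by the integrating factor e^{a_i t}; between impulses this gives
\[
\frac{d}{dt}\Bigl(e^{a_i t} x_i(t)\Bigr)
  = e^{a_i t}\Bigl[\sum_{j=1}^{n} b_{ij} f_j\bigl(x_j(t)\bigr)
  + \sum_{j=1}^{n} c_{ij} g_j\bigl(x_j(t-\tau_j(t))\bigr)\Bigr], \quad t \ne t_k.
\]

% Integrate over each interval between impulses, add the jumps at every t_k < t,
% and use x_i(0) = \varphi_i(0):
\[
x_i(t) = e^{-a_i t}\varphi_i(0)
  + \int_0^t e^{-a_i (t-s)}\Bigl[\sum_{j=1}^{n} b_{ij} f_j\bigl(x_j(s)\bigr)
  + \sum_{j=1}^{n} c_{ij} g_j\bigl(x_j(s-\tau_j(s))\bigr)\Bigr]\,ds
  + \sum_{0 < t_k < t} e^{-a_i (t-t_k)} I_{ik}\bigl(x_i(t_k)\bigr), \quad t \ge 0.
\]
```

Under these assumptions, a solution of the impulsive system is exactly a fixed point of the operator defined by the right-hand side of the last display, which is what makes the contraction mapping principle applicable.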

Step 2. We need to prove . Choosing (), it remains to verify .

First, since on and , we immediately know is continuous on . Then, for a fixed time , it follows from (9) that

where

Owing to , we see that is continuous on (). Moreover, as , and exist, in addition, .

Consequently, when () in (10), it is easy to find that as for , and so is continuous on the fixed time (). On the other hand, as () in (10), it is not difficult to find that as for . Furthermore, if letting be small enough, we have

which implies . While if letting be small enough, we get

which yields .

According to the above discussion, we see that is continuous on (), and for (), and exist; furthermore, .

Next, we will prove as for . First of all, it is obvious that for . In addition, owing to for , we know . Then, for any , there exists a such that implies . Choose . It is derived from (A1) that

which leads to

Similarly, for any , since , there also exists a such that implies . Select . It follows from (A2) that

which results in

Furthermore, from (A3), we know that . So,

As , we have . Then, for any , there exists a non-impulsive point such that implies . It then follows from the conditions (i) and (ii) that

which produces

From (11), (12) and (13), we deduce as . We therefore conclude that (), which means .

Step 3. We need to prove π is contractive. For and , we estimate , where

Note

and

and

It hence follows from (14), (15) and (16) that

which implies

Therefore,

In view of the condition (iii), we see π is a contraction mapping, and thus there exists a unique fixed point of π in ℋ, which means is the solution to Eqs. (1)-(3) and meets as . This completes the proof. □

Theorem 3.2 Assume the conditions (A1)-(A3) hold. Provided that

(i) ,

(ii) there exist constants such that for and ,

(iii) ,

then Eqs. (1)-(2) are globally exponentially stable.

Proof Theorem 3.2 follows directly from Theorem 3.1 by letting . □

Remark 3.1 In Theorem 3.1, we see that it is fixed point theory that deals with the existence and uniqueness of a solution and the global exponential stability of impulsive delayed neural networks at the same time, while the Lyapunov method fails to do this.

Remark 3.2 The presented sufficient conditions in Theorems 3.1-3.2 do not require even the differentiability of delays, let alone the monotone decreasing behavior of delays which is necessary in some relevant works.

Remark 3.3 In [4], the abrupt changes are assumed linear with the coefficient , while in our paper, this restriction is removed and the abrupt changes can be linear or nonlinear. On the other hand, the activation functions in [6] are assumed to satisfy , where f is an activation function. In this paper, we relax this restriction and instead suppose that an activation function f satisfies .

4 Example

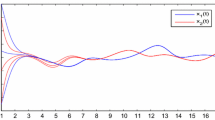

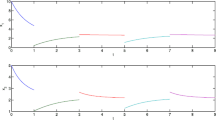

Consider the following two-dimensional impulsive cellular neural network with time-varying delays:

with the initial conditions , on , where , , , , , , , , , (), for and , (). It is easy to see that and as well as .

Select and compute . From Theorem 3.1, we conclude that this two-dimensional impulsive cellular neural network with time-varying delays is globally exponentially stable.

5 Conclusion

This work seeks new methods to study the stability of complex CNNs. From the above discussion, we find that fixed point theory can be applied successfully to the stability analysis of complex CNNs. We use the contraction mapping principle to establish the existence and uniqueness of a solution and the global exponential stability of the considered system at the same time, which the Lyapunov method does not deliver simultaneously. Since there are many kinds of fixed point theorems and complex neural networks, our future work is to continue studying the application of fixed point theory to the stability analysis of complex neural networks.

References

[1] Chua LO, Yang L: Cellular neural networks: theory. IEEE Trans. Circuits Syst. 1988, 35: 1257-1272. 10.1109/31.7600

[2] Chua LO, Yang L: Cellular neural networks: applications. IEEE Trans. Circuits Syst. 1988, 35: 1273-1290. 10.1109/31.7601

[3] Stamov GT, Stamova IM: Almost periodic solutions for impulsive neural networks with delay. Appl. Math. Model. 2007, 31: 1263-1270. 10.1016/j.apm.2006.04.008

[4] Ahmad S, Stamova IM: Global exponential stability for impulsive cellular neural networks with time-varying delays. Nonlinear Anal. 2008, 69: 786-795. 10.1016/j.na.2008.02.067

[5] Li K, Zhang X, Li Z: Global exponential stability of impulsive cellular neural networks with time-varying and distributed delays. Chaos Solitons Fractals 2009, 41: 1427-1434. 10.1016/j.chaos.2008.06.003

[6] Qiu J: Exponential stability of impulsive neural networks with time-varying delays and reaction-diffusion terms. Neurocomputing 2007, 70: 1102-1108. 10.1016/j.neucom.2006.08.003

[7] Wang X, Xu D: Global exponential stability of impulsive fuzzy cellular neural networks with mixed delays and reaction-diffusion terms. Chaos Solitons Fractals 2009, 42: 2713-2721. 10.1016/j.chaos.2009.03.177

[8] Zhang Y, Luo Q: Global exponential stability of impulsive delayed reaction-diffusion neural networks via Hardy-Poincarè inequality. Neurocomputing 2012, 83: 198-204.

[9] Zhang Y, Luo Q: Novel stability criteria for impulsive delayed reaction-diffusion Cohen-Grossberg neural networks via Hardy-Poincarè inequality. Chaos Solitons Fractals 2012, 45: 1033-1040. 10.1016/j.chaos.2012.05.001

[10] Luo J: Fixed points and stability of neutral stochastic delay differential equations. J. Math. Anal. Appl. 2007, 334: 431-440. 10.1016/j.jmaa.2006.12.058

[11] Luo J: Fixed points and exponential stability of mild solutions of stochastic partial differential equations with delays. J. Math. Anal. Appl. 2008, 342: 753-760. 10.1016/j.jmaa.2007.11.019

[12] Luo J: Stability of stochastic partial differential equations with infinite delays. J. Comput. Appl. Math. 2008, 222: 364-371. 10.1016/j.cam.2007.11.002

[13] Luo J, Taniguchi T: Fixed points and stability of stochastic neutral partial differential equations with infinite delays. Stoch. Anal. Appl. 2009, 27: 1163-1173. 10.1080/07362990903259371

[14] Sakthivel R, Luo J: Asymptotic stability of impulsive stochastic partial differential equations with infinite delays. J. Math. Anal. Appl. 2009, 356: 1-6. 10.1016/j.jmaa.2009.02.002

[15] Sakthivel R, Luo J: Asymptotic stability of nonlinear impulsive stochastic differential equations. Stat. Probab. Lett. 2009, 79: 1219-1223. 10.1016/j.spl.2009.01.011

[16] Luo J: Fixed points and exponential stability for stochastic Volterra-Levin equations. J. Comput. Appl. Math. 2010, 234: 934-940. 10.1016/j.cam.2010.02.013

[17] Burton TA: Stability by Fixed Point Theory for Functional Differential Equations. Dover, New York; 2006.

[18] Becker LC, Burton TA: Stability, fixed points and inverses of delays. Proc. R. Soc. Edinb. A 2006, 136: 245-275. 10.1017/S0308210500004546

[19] Burton TA: Fixed points, stability, and exact linearization. Nonlinear Anal. 2005, 61: 857-870. 10.1016/j.na.2005.01.079

[20] Burton TA: Fixed points, Volterra equations, and Becker’s resolvent. Acta Math. Hung. 2005, 108: 261-281. 10.1007/s10474-005-0224-9

[21] Burton TA: Fixed points and stability of a nonconvolution equation. Proc. Am. Math. Soc. 2004, 132: 3679-3687. 10.1090/S0002-9939-04-07497-0

[22] Burton TA: Perron-type stability theorems for neutral equations. Nonlinear Anal. 2003, 55: 285-297. 10.1016/S0362-546X(03)00240-2

[23] Burton TA: Integral equations, implicit functions, and fixed points. Proc. Am. Math. Soc. 1996, 124: 2383-2390. 10.1090/S0002-9939-96-03533-2

[24] Burton TA, Furumochi T: Krasnoselskii’s fixed point theorem and stability. Nonlinear Anal. 2002, 49: 445-454. 10.1016/S0362-546X(01)00111-0

[25] Burton TA, Zhang B: Fixed points and stability of an integral equation: nonuniqueness. Appl. Math. Lett. 2004, 17: 839-846. 10.1016/j.aml.2004.06.015

[26] Furumochi T: Stabilities in FDEs by Schauder’s theorem. Nonlinear Anal. 2005, 63: 217-224. 10.1016/j.na.2005.02.057

[27] Jin C, Luo J: Fixed points and stability in neutral differential equations with variable delays. Proc. Am. Math. Soc. 2008, 136: 909-918.

[28] Raffoul YN: Stability in neutral nonlinear differential equations with functional delays using fixed-point theory. Math. Comput. Model. 2004, 40: 691-700. 10.1016/j.mcm.2004.10.001

[29] Zhang B: Fixed points and stability in differential equations with variable delays. Nonlinear Anal. 2005, 63: 233-242. 10.1016/j.na.2005.02.081

[30] Hu K, Jacob N, Yuan C: On an equation being a fractional differential equation with respect to time and a pseudo-differential equation with respect to space related to Levy-type processes. Fract. Calc. Appl. Anal. 2012, 15(1):128-140.

[31] Smart DR: Fixed Point Theorems. Cambridge University Press, Cambridge; 1980.

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 60904028, 61174077 and 71171116.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

YZ carried out the main part of this manuscript. QL participated in the discussion and gave the example. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Zhang, Y., Luo, Q. Global exponential stability of impulsive cellular neural networks with time-varying delays via fixed point theory. Adv Differ Equ 2013, 23 (2013). https://doi.org/10.1186/1687-1847-2013-23
