Abstract

Background

Problem-based curricula have provoked controversy amongst educators and students regarding outcome in medical graduates, supporting the need for longitudinal evaluation of curriculum change. As part of a longitudinal evaluation program at the University of Adelaide, a mixed method approach was used to compare the graduate outcomes of two curriculum cohorts: traditional lecture-based ‘old’ and problem-based ‘new’ learning.

Methods

Graduates were asked to self-assess preparedness for hospital practice and consent to a comparative analysis of their work-place based assessments from their intern year. Comparative data were extracted from 692 work-place based assessments for 124 doctors who graduated from the University of Adelaide Medical School between 2003 and 2006.

Results

Self-assessment: Overall, graduates of the lecture-based curriculum rated the medical program significantly higher than graduates of the problem-based curriculum. However, there was no significant difference between the two curriculum cohorts with respect to their preparedness in 13 clinical skills. There were, however, two of the 13 broad practitioner competencies in which the cohorts rated their preparedness significantly differently: problem-based graduates rated themselves as better prepared in their ‘awareness of legal and ethical issues’, and lecture-based graduates rated themselves as better prepared in their ‘understanding of disease processes’.

Work-place based assessment: There were no significant differences between the two curriculum cohorts for ‘Appropriate Level of Competence’ and ‘Overall Appraisal’. Of the 14 work-place based assessment skills assessed for competence, no significant difference was found between the cohorts.

Conclusions

The differences in the perceived preparedness for hospital practice of two curriculum cohorts do not reflect the work-place based assessments of their competence as interns. No significant difference was found between the two cohorts in relation to their knowledge and clinical skills. However, results suggest a trend in ‘communication with peers and colleagues in other disciplines’ (χ²(3, N = 596) = 13.10, p = 0.056) that requires further exploration. In addition, we have learned that student confidence in a new curriculum may impact on their self-perception of preparedness, while not affecting their actual competence.

Background

In 2000, the University of Adelaide Medical School adopted a new curriculum. The switch from a traditional discipline lecture-based ‘old’ (TLB) curriculum to a problem-based learning ‘new’ (PBL) curriculum was part of a worldwide trend and represented our most significant change since the 1960s [1]. In the adoption of any new curriculum, it is vital to evaluate its effectiveness, ensuring that standards and quality are maintained or enhanced. The full impact of changes to curricula is not known for some time after graduation, requiring a long-term approach to curriculum evaluation [2]. This study evaluated how graduates of the TLB and PBL curricula perceived their preparedness for hospital practice (self-assessment) after completion of their intern year, in comparison with their work-place based assessment (WPBA).

At the University of Adelaide, years 1–3 in the TLB curriculum were didactic in style, with the program organised into many separate subjects delivered by individual disciplines, primarily in a lecture mode. Years 4–6 were clinically focussed. There was little emphasis on clinical reasoning and relatively little small group learning. The subjects were not integrated in any way with each other, so that a student could be studying the anatomy of the brain, the pharmacology of heart failure, the characteristics of Staphylococcus, and the history of public health all at the same time. Communication skills were delivered in lecture format by staff from psychology, with very little opportunity for students to practise (Figure 1).

The ‘new’ PBL curriculum was centrally planned, with integrated multidisciplinary content, delivered in lectures and student-centred small group (n = 8) sessions. The use of clinical scenarios, designed to encourage students to form links between clinical practice and the basic medical sciences, commenced in 1st year. The scenarios began as simple cases of common conditions and progressed to more complex cases with multiple co-morbidities in 3rd year. Tutors fulfilled primarily a facilitative role and group discussions occupied 6–20 hours per week. There was an increased emphasis on communication skills (with allied health colleagues, patients, peers and supervisors); opportunities to practise these skills were introduced using actors, with audio-visual recordings allowing students to review their own performance. Assessment was centrally organised and integrated, and included testing of knowledge and clinical reasoning [3].

In the evaluation of outcomes from an overall curriculum, student satisfaction alone is insufficient [4], and attention must be paid to impacts on student progression, student and graduate satisfaction, career choices or preferences, and career retention.

The effects of different curricula on such an elite group of students, shown to pass examinations irrespective of teaching methods [5], have been relatively inconclusive [1, 6, 7]. Studies comparing TLB with PBL curricula vary in their findings, from no clear differences in outcomes [1, 6, 8–10], to observed differences in the areas of social and cognitive dimensions [7], tests of factual knowledge [11], clinical examinations [11, 12], and licensing examinations [13].

The outcomes research to date has mainly employed a self-report study design [9, 14] and ‘seldom include workplace points of view’ [15]. It is important to widen the focus of evaluation beyond traditional educational outcomes, to include external assessment such as WPBAs during the intern year [9, 16, 17].

In Australia, at the time of this study, all medical graduates spent their first postgraduate year as an ‘intern’, in accredited public hospitals. Throughout Australia, intern assessment processes vary. However, all WPBAs are made by senior clinicians and their supervising team and are endorsed by the Medical Board of Australia. In South Australia, an intern has on average five rotations. At the completion of each rotation, the clinical supervisors provide a WPBA report that identifies strengths and weaknesses and gives an overall appraisal of intern performance. The intern end-of-rotation assessment is ‘high stakes’; however, the concept of pass/fail is not used. Instead, the intern is assessed as having made satisfactory, borderline or unsatisfactory progress in acquiring intern competencies. If a rotation has not been satisfactory, remedial measures are implemented and progress recorded. A single unsatisfactory rotation will not necessarily need to be repeated if good progress is made during the rest of the year.

The aim of stages I and II of the Medical Graduates Outcomes Program was to follow and compare long-term outcomes of graduates from the two types of curricula: lecture-based (graduates 2003, 2004) and problem-based (graduates 2005, 2006). The specific aim was to assess how well prepared these graduates felt for their internship and to compare this self-assessment with the clinical supervisor assessment results of their intern year.

Methods

Participants, procedure and study design

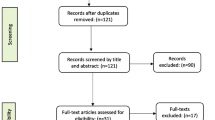

The cohorts studied graduated from the University of Adelaide Medical program between 2003 and 2006, with graduates from 2003 and 2004 comprising the TLB cohort, and graduates from 2005 and 2006 comprising the PBL cohort. Methodological triangulation involved data collection via a self-administered questionnaire at the completion of the intern year (one year after graduation), and an audit of intern WPBA reports from five South Australian public hospitals.

Between December 2006 and May 2007 graduates were sent an information pack containing an introduction to the project, a consent form, the Preparedness for Hospital Practice questionnaire and a contact details form to allow data collection for the next two stages of the study. The six month period of contact and follow-up ensured that all graduates had completed their intern year. Graduates who completed their intern year outside of Australia were excluded from this analysis. The audit of intern reports was carried out in June and July 2009 (Audit form available as Additional file 1).

Graduates were asked to rate how well the medical program had prepared them in 13 broad practitioner competencies and 13 areas of clinical and hospital practice using a 5 point Likert scale, from ‘Very well’ through to ‘Not at all well’. The questionnaire was based on two previously validated questionnaires [9, 14]. The different areas represent a diverse range of skills and are divided into three sections: Preparation for Hospital Practice (ie history taking and diagnosis); Clinical Skills & Preparedness (ie procedures including consent, prescribing and cannulation), and Resilience (ie level of responsibility and meeting challenges as intern).

The intern audit form was developed based on the structure and content of the WPBA across each hospital. Commonly assessed criteria were identified. The audit was carried out between June and August 2009. Fourteen criteria, ‘Achieving Appropriate Level of Competence’ and an ‘Overall Appraisal’ rating were assessed in the audit. A five point Likert Scale was used to record the competence, from ‘High level of competence’ through to ‘Low competence’.

Ethical approval was obtained from the University of Adelaide Human Research Ethics Committee (H-019-2006 and H-099-2010) and the Ethics Committees of the five public hospitals.

Analysis

The data were recoded by compression from a 5- to 3-point scale (e.g. ‘strongly agree and agree’, ‘neutral’, and ‘disagree and strongly disagree’). Descriptive statistics (frequencies) were completed for all items by curriculum type. Differences between the curriculum types were examined using separate chi-square tests. In order to account for multiple testing we adjusted for the number of comparisons made (Bonferroni method [18]) to reduce the issue of multiplicity (ie increased rate of type I error). Results presented for each chi-squared test are the adjusted p-values.
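The analysis steps described above (collapsing the 5-point scale to three categories, cross-tabulating by curriculum, a chi-square test, and a Bonferroni adjustment) can be sketched as follows. This is an illustrative sketch only, not the authors' analysis code: the ratings are invented toy data, the category labels and the number of comparisons (`N_TESTS = 13`) are assumptions made for the example, and the chi-square tail probability is computed with a closed-form expression for integer degrees of freedom rather than a statistics package.

```python
import math
from collections import Counter

# Assumed coding: 1 = most favourable ... 5 = least favourable (hypothetical labels).
COLLAPSE = {1: "positive", 2: "positive", 3: "neutral", 4: "negative", 5: "negative"}
CATEGORIES = ["positive", "neutral", "negative"]
N_TESTS = 13  # assumed number of comparisons, for illustration only


def contingency(ratings_by_cohort):
    """Collapse 5-point ratings to 3 categories and count them per cohort."""
    table = []
    for ratings in ratings_by_cohort:
        counts = Counter(COLLAPSE[r] for r in ratings)
        table.append([counts.get(c, 0) for c in CATEGORIES])
    return table


def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi2_sf(x, df):
    """Upper-tail probability of the chi-square distribution (integer df >= 1)."""
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) times a finite sum of (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: complementary error function plus a finite correction sum.
    total = 0.0
    for i in range(1, (df - 1) // 2 + 1):
        total += half ** (i - 0.5) / math.gamma(i + 0.5)
    return math.erfc(math.sqrt(half)) + math.exp(-half) * total


def bonferroni(p, n_tests):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p * n_tests)


# Toy ratings for one questionnaire item (invented, not study data).
tlb_ratings = [1, 2, 2, 3, 4, 1, 2, 5, 2, 3]
pbl_ratings = [2, 3, 4, 4, 5, 3, 2, 4, 5, 1]

table = contingency([tlb_ratings, pbl_ratings])
stat, df = chi_square(table)
p_raw = chi2_sf(stat, df)
p_adj = bonferroni(p_raw, N_TESTS)
print(f"chi2({df}) = {stat:.2f}, raw p = {p_raw:.3f}, adjusted p = {p_adj:.3f}")
```

Multiplying each raw p-value by the number of comparisons is the simplest form of the Bonferroni method; it is conservative, which is consistent with a borderline result being described as a trend rather than a significant difference.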

Results

A total of 166 graduates (39% of the total number contacted) completed the Preparedness for Hospital Practice Questionnaire (Table 1). Matched WPBA data were available for 124 graduates. The demographics of the responding graduates do not differ significantly from their respective cohort populations for gender (χ²(1, N = 458) = 0.69, p = 0.405). The number of international students from the PBL cohort who responded to the survey was almost double that of the TLB (15 vs 8), but there was no significant difference between the cohorts in terms of domestic or international status (χ²(2, N = 165) = 4.28, p = 0.118). The respondents’ ages ranged from 23–45 years at the time of survey, with 80% (134) of respondents being aged 28–31 years, and no significant difference between the curriculum cohorts (χ²(11, N = 165) = 11.03, p = 0.440). There was no significant difference in the proportion of respondents from each of the 5 hospital training sites (χ²(5, N = 166) = 5.66, p = 0.342) (Table 1).

Respondent self-assessment

For the overall evaluation, graduates from the TLB curriculum were more likely to rate the medical program as ‘excellent/good’ than were the graduates from the PBL curriculum (χ²(4, N = 160) = 15.55, p = 0.004) (Figure 2).

Preparedness for hospital practice

In two of 13 ‘broad practitioner’ competencies the two cohorts reported significantly different levels of preparedness. The TLB cohort reported higher levels of preparedness for ‘Understanding disease processes’ (χ²(4, N = 166) = 20.11, p < 0.001) while the PBL cohort reported greater preparedness in ‘Being aware of legal and ethical issues’ (χ²(4, N = 166) = 15.85, p = 0.039) (Table 2).

Resilience

There was no difference between cohorts for any of the three criteria. The cohorts felt equally prepared to ‘Accept the level of responsibility expected of an intern’ (χ²(4, N = 166) = 1.12, p = 0.891), ‘Meet the variety of challenges they faced’ (χ²(4, N = 166) = 3.62, p = 0.460) and in ‘Dealing with the differing relationships in the hospital context’ (χ²(4, N = 166) = 5.39, p = 0.250) (Table 2).

Clinical skills & preparedness

There were no significant differences between cohorts in the 13 clinical skill competencies (Table 3).

WPBA

The number of reports on each intern’s competence ranged from one (three interns) to nine (one intern), with the majority of interns having four (n = 24, 18.2%), five (n = 71, 53.8%) or six (n = 25, 18.9%) reports. There were no clear associations of number of reports with cohorts, hospitals, or rotations. A total of 82.0% (N = 533) of reports were signed by the intern, indicating they had received feedback on their rotation.

There was no significant difference between curriculum cohorts for ‘Achieving Appropriate Level of Competence’ (χ²(1, N = 574) = 1.27, p = 0.260) and ‘Overall Appraisal’ (χ²(3, N = 615) = 0.22, p = 0.974). A comparison of overall appraisal by WPBA and graduates’ self-assessment of preparedness for internship is presented in Figure 3.

A similar pattern is seen for both cohorts, in that graduates assessed themselves more harshly than did their supervisors. Nine (7.3%) graduates received a rating of ‘Variable’ or ‘Low Competence’ on at least one of their individual assessments as an intern. However, of these nine, only three (2.4%) received an overall rating of ‘Variable Competence’ for that rotation and no graduate received an overall rating of ‘Low Competence’. The low supervisor ratings had no significant relationship to cohort, hospital or rotation.

A comparison of the WPBA of intern competence in 14 skill areas found only one area where a difference was noted between the two curriculum cohorts (Table 4). There was a trend for graduates of the PBL curriculum to be rated as having higher competence in their ‘Interactions with peers and colleagues from other disciplines’ (χ²(3, N = 596) = 13.10, p = 0.056).

Discussion

We have found that the graduates from both medical curricula were equally competent in their clinical skills as assessed by their clinical supervisors, supporting the findings of previous research [1, 6, 8–10]. We also found there was a trend for graduates of the PBL curriculum to be rated as better communicators than those from the TLB curriculum. These important communication skills are transferable between clinical settings, research environments and future roles as medical supervisors and teachers. The issues around improved communication skills and team work require further research, i.e., does the problem-based curriculum increase these skills or are students today selected for these skills?

Our graduates’ self-assessment of their preparedness for hospital practice varied between curriculum cohorts. PBL graduates self-assessed as being less prepared in two clinical skills (‘Clinical exam & selection and interpretation of tests’ and ‘Understanding disease processes’), while the TLB graduates assessed themselves as less prepared for two of the broader practitioner skills (‘Obtaining consent’ and ‘Legal and ethical issues’). Jones et al. similarly found in a PBL course graduates rated their ability in ‘Understanding disease processes’ less favourably than the TLB graduates [9].

Differences perceived by graduates may be due to differences in student expectations, medical education, the working environment or health care systems [19]. Differences in perception may also relate to specialty bias, for example understanding of disease processes may be more important in an internal medicine rotation than psychiatry. A Kings College School of Medicine and Dentistry survey [20] found that although over 70% of graduates reported their education had satisfactorily equipped them for medical practice, there were significant differences between those in primary care and hospital medicine regarding the relative importance of subjects within the curriculum. However, Ochsmann [19] found deficits in feelings of preparedness irrespective of chosen specialty. The area of how preparedness relates to specialty choice requires further study.

Feelings of preparedness are important in the successful transition from being a student to a practising doctor [19, 21]. However, the question of preparedness continues to be an ambiguous one. ‘When junior doctors say they feel prepared, they may not mean they think they are competent’ [22] and it is only by a comparison of self- and supervisor-assessment that we can explore the accuracy of their self-assessment. Our study did not find an association between self- and WPB assessment, supporting Bingham et al.’s findings, where trainees assessed themselves more harshly, while their supervisors assessed trainees as ‘at or above expected level’ for ‘every item in every term’ (43% vs 98.5%) [23]. Qualitative data from the Stage I Preparedness Questionnaire revealed two key differences between the TLB and the PBL graduates. The PBL cohorts were much more positive in their responses to how well the program had developed their attitudes to skill development, whilst asking for a greater emphasis on learning basic sciences.

A variety of studies have found that many graduates feel inadequately prepared for the role of junior doctor [24–26] and criticisms that medical schools do not prepare graduates for early medical practice are not new. Goldacre et al. explored UK junior doctors’ views on preparedness in 2010, and found that the level of agreement that medical school had prepared them well for work varied between medical schools and changed over time, ranging from 82% to 30% at one year and from 70% to 27% (respectively) at three years post-graduation [21].

Both medical schools and medical graduates have questioned preparation and preparedness for early medical practice [21]. Kilminster et al. suggest that the ‘Emphasis on preparedness (is) misplaced’ [27], and as a result the focus of their work is on exploring the challenges associated with the transition from student to doctor. Interestingly, our graduates from both curricula reported feeling equally well prepared in ‘meeting the challenges’, to ‘accept the level of responsibility’ of an intern, and in ‘dealing with the different relationships in the hospital context’.

Feelings of preparedness may be affected by a number of factors, both internal and external. A comparison of three diverse UK medical schools found that medical graduates’ feelings of preparedness may be affected by individual learning style and personality, but the majority of graduates reported external factors as having the greatest impact [28]. Graduates made reference to external factors such as clinical placements; shadowing and hospital induction procedures and the support of others as important. Illing et al. [28] suggest that perception of preparedness, with respect to external factors, can be addressed by improving hospital induction processes, increased structure and consistency in clinical placements, and addressing perceived weaknesses in clinical procedures identified by the graduates.

There may have been variations in feeling of preparedness from the experience gained during clinical placements in the variety of intern rotations, as ‘institutions and wards have their own learning cultures…’ [27]. However, unlike Illing [28], our study did not demonstrate significant variation between hospitals or rotations, except with respect to the signing of the intern reports and therefore potentially the feedback received by the interns.

There may also be variation in preparedness of the graduates from the two curricula that relate more to their confidence in their learning method. Millan et al. [29] suggest that as graduates are aware of the research purpose, TLB graduates ‘may overestimate values’ comparing one learning method to another. The graduates we surveyed were aware they were the last two cohorts of the TLB and the first two of the PBL curriculum. The problem-based cohorts may have felt insecure because their curriculum was newly implemented [29] and they may have felt they were missing out on something. This lack of confidence in the PBL cohort may also have been reinforced by some teachers and clinicians who felt disenfranchised and were not fully supportive of the change.

Consideration should also be given when comparing the self-assessment skills of graduates of TLB curriculum with those of PBL, as we may be comparing apples with oranges [30]. Our PBL graduates learned to self-assess using concepts such as pass/fail instead of numerical grades, and may have greater difficulty evaluating their skills [29]. Millan suggests that PBL graduates ‘might view their performance in a different manner’.

Feedback during internship

The giving and receiving of feedback is important in any training situation, with trainees commonly requesting feedback on their strengths and weaknesses [23]. However, just under 20% of our graduates did not sign their reports (acknowledging feedback). There may be a variety of reasons for this, such as the lack of provision of adequate time for assessment and feedback with the report completed after the intern had left the ‘hospital site’, a lack of training for both medical graduates and supervisors in assessment methods, or possibly a lack of interest in the particular area. In South Australia, demand for some rotations is higher than places available and most interns ultimately undertake rotations that are not within their area of interest, potentially reducing their desire to follow-up on feedback provided. A recent Australian retrospective study of 3390 assessment forms of prevocational trainees found that the forms may underreport performance and do not provide trainees with ‘enough specific feedback to guide professional development’ [23].

Strengths and limitations

The findings reported here are for graduates from one institution’s medical program, which may be considered a limitation. However, a major strength of this study is the methodological triangulation of the two types of data gathered: the questionnaire and the audit of intern reports. In addition, each intern was assessed in multiple specialty environments, in one of five large public hospitals, by multiple clinical supervisors, on a range of aspects of clinical knowledge and practice. The range and diversity of the WPBAs thus provides a reliable method of assessment.

Another limitation relates to missing data in the audit of supervisor assessments, which can be traced in the main to two particular rotations: nights and relieving. Comments provided by some supervisors highlight their reluctance to rate interns in these rotations, as they did not observe the interns performing certain skills.

The overall response rate for the longitudinal study of 41.7% may be considered low, but the nature of retrospective longitudinal studies carries with it the inherent issues of loss to follow-up. However, there was no significant difference in the age or gender of our non-responders and responders, and the responders were broadly representative of the four graduating cohorts.

Future research

The Medical Graduates Outcomes Evaluation Program includes a further 3 stages: ‘Admissions and Selection’, ‘Early (first 5 years)’ and ‘late (10 years)’ postgraduate years. These next stages will provide our university and the broader medical community with a comparison of long-term outcomes between two curriculum cohorts. Our study adds to the body of knowledge that highlights the need for education research in the areas of self-assessment and the giving and receiving of feedback. Curriculum changes based on self-assessment alone run the risk of ‘throwing the baby out with the bathwater’. We suggest that further research is required into the impact of career specialty choices on the perception of how well medical programs prepare their graduates.

Conclusions

Self- and WPB assessments are both valuable contributors to curriculum evaluation as well as guiding professional development. Our findings demonstrate that the curriculum change from TLB to PBL at our University has ‘done no harm’ to our graduates’ clinical practice in the intern year while potentially improving their communication skills and their attitude to skill development. Medical students and graduates, on the whole, are high achieving individuals, who ‘leading up to medical school are groomed and selected for success in a traditional curriculum’ and who would succeed under either curriculum (88).

In addition we have learned that student confidence in a new curriculum may impact on their self-perception of preparedness, while not affecting their actual competence. The transition period from student to intern is a stressful time for all graduates, and it has been reported previously that graduates tend to underestimate when asked to self-assess ‘how well they were prepared for hospital practice’. This perception is not to be discounted, but nor should it be used to support unevaluated curriculum change.

Abbreviations

- TLB (‘old’): Traditional (discipline) lecture-based
- PBL (‘new’): Problem-based learning
- WPBA: Work-place based assessment

References

Polyzois I, Claffey N, Mattheos N: Problem-based learning in academic health education. A systematic literature review. Eur J Dent Educ. 2010, 14 (1): 55-64.

Morrison J: ABC of learning and teaching in medicine: evaluation. BMJ. 2003, 326 (7385): 385-387.

Anderson K, Peterson R, Tonkin A, Cleary E: The assessment of student reasoning in the context of a clinically oriented PBL program. Med Teach. 2008, 30 (8): 787-794.

Maudsley G: What issues are raised by evaluating problem-based undergraduate medical curricula? Making healthy connections across the literature. J Eval Clin Pract. 2001, 7 (3): 311-324.

Morrison J: Where now for problem based learning?. Lancet. 2004, 363 (9403): 174.

Cohen-Schotanus J, Muijtjens AMM, Schönrock-Adema J, Geertsma J, Van Der Vleuten CPM: Effects of conventional and problem-based learning on clinical and general competencies and career development. Med Educ. 2008, 42 (3): 256-265.

Choon-Huat Koh G, Hoon Eng K, Mee Lian W, Koh D: The effects of problem-based learning during medical school on physician competency: a systematic review. CMAJ. 2008, 178 (1): 34-41.

Albanese M: Problem-based learning: why curricula are likely to show little effect on knowledge and clinical skills. Med Educ. 2000, 34 (9): 729-738.

Jones A, McArdle P, O’Neill P: Perceptions of how well graduates are prepared for the role of pre-registration house officer: a comparison of outcomes from a traditional and an integrated PBL curriculum. Med Educ. 2002, 36 (1): 16-25.

Watmough S, Taylor D, Garden A: Educational supervisors evaluate the preparedness of graduates from a reformed UK curriculum to work as pre-registration house officers (PRHOs): a qualitative study. Med Educ. 2006, 40 (10): 995-1001.

Vernon D, Blake R: Does problem-based learning work? A meta-analysis of evaluative research. Acad Med. 1993, 68 (7): 550-563.

Albanese M, Mitchell S: Problem-based learning: a review of literature on its outcomes and implementation issues. Acad Med. 1993, 68 (1): 52-81.

Blake R, Hosokawa M, Riley S: Student performances on step 1 and step 2 of the united states medical licensing examination following implementation of a problem-based learning curriculum. Acad Med. 2000, 75 (1): 66-70.

Hill J, Rolfe I, Pearson S, Heathcote A: Do junior doctors feel they are prepared for hospital practice? A study of graduates from traditional and non-traditional medical schools. Med Educ. 1998, 32 (1): 19-24.

Lindberg O: ‘The Next Step’ – alumni students’ views on their preparation for their first position as a physician. Med Educ Online. 2010, 15: 4884. doi:10.3402/meo.v15i0.4884. Available from: http://med-ed-online.net/index.php/meo/article/view/4884 (16 September 2010)

Eva K, Regehr G: Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005, 80 (10): S46-S54.

Mitchell C, Bhat S, Herbert A, Baker P: Workplace-based assessments in foundation programme training: do trainees in difficulty use them differently?. Med Educ. 2013, 47 (3): 292-300.

Shaffer JP: Multiple hypothesis testing. Annu Rev Psychol. 1995, 46: 561-576.

Ochsmann E, Zier U, Drexler H, Schmid K: Well prepared for work? Junior doctors’ self-assessment after medical education. BMC Med Educ. 2011, 11 (1): 99. doi:10.1186/1472-6920-11-99

Clack GB: Medical graduates evaluate the effectiveness of their education. Med Educ. 1994, 28: 418-431.

Goldacre M, Taylor K, Lambert T: Views of junior doctors about whether their medical school prepared them well for work: questionnaire surveys. BMC Med Educ. 2010, 10 (1): 78.

Cave J, Woolf K, Jones A, Dacre J: Easing the transition from student to doctor: how can medical schools help prepare their graduates for starting work?. Med Teach. 2009, 31 (5): 403-408. PubMed PMID: 19142797

Bingham CM, Crampton R: A review of prevocational medical trainee assessment in New South Wales. Med J Aust. 2011, 195 (7): 410-412. PubMed PMID: 21978350

Watmough S, O’Sullivan H, Taylor D: Graduates from a traditional medical curriculum evaluate the effectiveness of their medical curriculum through interviews. BMC Med Educ. 2009, 9: 7.

Bojanic K, Schears G, Schroeder D, Jenkins S, Warner D, Sprung J: Survey of self-assessed preparedness for clinical practice in one Croatian medical school. BMC Res Notes. 2009, 2 (1): 152. doi:10.1186/1756-0500-2-152

Guest AR, Roubidoux MA, Blane CE, Fitzgerald JT, Bowerman RA: Limitations of student evaluations of curriculum. Acad Radiol. 1999, 6 (4): 229-235.

Kilminster S, Zukas M, Quinton N, Roberts T: Preparedness is not enough: understanding transitions as critically intensive learning periods. Med Educ. 2011, 45 (10): 1006-1015.

Illing J, Morrow G, Kergon C, Burford B, Spencer J, Peile E, Davies C, Baldauf B, Allen M, Johnson N, Morrison J, Donaldson M, Whitelaw M, Field M: How Prepared Are Medical Graduates To Begin Practice? A Comparison Of Three Diverse UK Medical Schools. Final Report for the GMC Education Committee. General Medical Council/Northern Deanery. 2008, Available from: http://www.gmc-uk.org/FINAL_How_prepared_are_medical_graduates_to_begin_practice_September_08.pdf_29697834.pdf

Millan LPB, Semer B, Rodrigues JMS, Gianini RJ: Traditional learning and problem-based learning: self-perception of preparedness for internship. Rev Assoc Med Bras. 2012, 58: 594-599.

Neville AJ: Problem-based learning and medical education forty years on. Med Princ Pract. 2009, 18 (1): 1-9.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6920/14/123/prepub

Acknowledgements

The authors thank: the graduates for taking the time to participate; Dr Nancy Briggs and Mrs Michelle Lorimer for their statistical analysis and expertise; Mrs Teresa Burgess, Dr Ted Cleary, Professor Paul Rolan and Mrs Carole Gannon for their contribution to the initial conception of this project; and Professors Ian Chapman and Mitra Guha for their ongoing contribution, advice and guidance to the longitudinal project.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

DK and AT conceived and discussed the scope and design of the longitudinal evaluation project. DK, GL, AT, and PD contributed to the conception and design of stage II. GL conducted the searches, administered the questionnaire Stage I, conducted the audit Stage II and discussed the strategies used with DK. DK, GL, and AT, were jointly involved in the interpretation and data analysis. GL led the writing of the paper and each author contributed significantly to multiple subsequent revisions. All authors approved the final version of the manuscript submitted.

Gillian Laven, Dorothy Keefe, Paul Duggan and Anne Tonkin contributed equally to this work.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited.

About this article

Cite this article

Laven, G., Keefe, D., Duggan, P. et al. How was the intern year?: self and clinical assessment of four cohorts, from two medical curricula. BMC Med Educ 14, 123 (2014). https://doi.org/10.1186/1472-6920-14-123

DOI: https://doi.org/10.1186/1472-6920-14-123