Abstract

Background

The current paradigm of arthroscopic training lacks objective evaluation of technical ability and its adequacy is concerning given the accelerating complexity of the field. To combat insufficiencies, emphasis is shifting towards skill acquisition outside the operating room and sophisticated assessment tools. We reviewed (1) the validity of cadaver and surgical simulation in arthroscopic training, (2) the role of psychomotor analysis and arthroscopic technical ability, (3) what validated assessment tools are available to evaluate technical competency, and (4) the quantification of arthroscopic proficiency.

Methods

The Medline and Embase databases were searched for published articles in the English literature pertaining to arthroscopic competence, arthroscopic assessment and evaluation, and objective measures of arthroscopic technical skill. Abstracts were independently evaluated. Exclusion criteria included articles outside the scope of knee and shoulder arthroscopy as well as original articles about specific therapies, outcomes and diagnoses, leaving 52 articles cited in this review.

Results

Simulated arthroscopic environments exhibit high levels of internal validity and consistency for simple arthroscopic tasks; however, the ability to transfer complex skills to the operating room has not yet been established. Instrument and force trajectory data can discriminate between levels of technical ability for basic arthroscopic parameters and may serve as useful adjuncts to more comprehensive techniques. There is a need for arthroscopic assessment tools for standardized evaluation and objective feedback of technical skills, yet few comprehensive instruments exist, especially for the shoulder. Opinion on the required arthroscopic experience to obtain proficiency remains guarded and few governing bodies specify absolute quantities.

Conclusions

Further validation is required to demonstrate the transfer of complex arthroscopic skills from simulated environments to the operating room and to provide objective parameters on which to base evaluation. There is a deficiency of validated assessment tools for technical competencies and little consensus within the arthroscopy community on what constitutes a sufficient case volume.

Background

The evolution of diagnostic and therapeutic techniques has made arthroscopy one of the most commonly performed orthopaedic procedures [1]. Despite its prevalence, arthroscopy is technically demanding, requiring visual-spatial coordination to manipulate instruments while interpreting three-dimensional structures as two-dimensional images. These skills are traditionally acquired through the apprenticeship model of step-wise involvement in the operating room, but the process is inefficient in terms of time and cost and associated with iatrogenic injury to the patient [2–5]. With the increasing complexity of arthroscopic procedures and the implementation of work-hour restrictions, the adequacy of arthroscopic training during residency has become a concern [6, 7].

To combat insufficiencies, emphasis in post-graduate training is shifting towards specific skill acquisition and the achievement of technical competencies [8]; this is the rationale behind improving arthroscopic skill development outside of the operating room. The advent of surgical simulation, psychomotor conditioning and the cadaveric bioskills laboratory as useful training adjuncts is encouraging [4, 5, 9–15]. Despite these efforts, evidence suggests that residents feel less prepared in arthroscopic training compared to open procedures and a substantial number of procedures may be required to become proficient [16–18]. The necessary operative experience and instruction to attain competency is uncertain. Currently, the Residency Review Committee for the Accreditation Council for Graduate Medical Education (ACGME) requires only a record of completed arthroscopic procedures and does not specify what constitutes a sufficient case volume [19].

As pressures for training standardization and certification mount, there remains no objective testing to evaluate arthroscopic competency at the end of an orthopaedic residency [20–22]. The identification of effective arthroscopic teaching methods and evaluation tools is first necessary to determine what constitutes sufficient training. There is need for comprehensive assessment using true indicators of competence, as consensus on defining competence and quantifying arthroscopic proficiency has not been established.

In this article, we reviewed knee and shoulder arthroscopy with respect to (1) the validity of cadaveric models and surgical simulation in arthroscopic training, (2) the role of psychomotor analysis and arthroscopic technical ability, (3) what validated assessment tools are available to evaluate technical competency, and (4) how arthroscopic proficiency is quantified by the regulating bodies and orthopaedic societies.

Methods

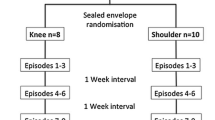

A comprehensive search of the Ovid MedLine (Figure 1) and EMBASE (Figure 2) databases for articles published in the English literature was performed. Search terms were altered for each database according to its method of subheading mapping. The search results and number of studies found at each stage are listed below:

Ovid MedLine: 1996 to February Week 2, 2013

1. exp Clinical Competence: 47 976
2. exp Learning curve: 4 588
3. exp Task Performance and Analysis: 18 681
4. 1 OR 2 OR 3: 69 539
5. exp Arthroscopy: 11 490
6. 4 AND 5: 79
7. Limit 6 to English language and Humans: 74

EMBASE: 1980 to February Week 2, 2012

1. exp competence: 82 920
2. exp surgical training: 10 573
3. exp task performance: 93 865
4. exp learning curve: 1 862
5. 1 OR 2 OR 3 OR 4: 185 919
6. exp arthroscopy: 18 210
7. 5 AND 6: 132
8. Limit 7 to English language and Humans: 104

Two reviewers (JLH, CV) independently evaluated the abstracts of the search results. Studies selected underwent full-text reviews and were original research or review articles pertaining to (1) arthroscopic competence, (2) arthroscopic assessment and evaluation, and/or (3) objective measures of arthroscopic technical skill. Exclusion criteria included article topics (1) outside the scope of knee and shoulder arthroscopy, (2) therapeutic treatments and outcomes, (3) diagnostic imaging, and (4) case series. Studies were excluded only if there was mutual agreement between the two reviewers. Relevant references from each of the remaining articles were examined for inclusion. Articles were then cross-referenced to discard repeated references, leaving 52 orthopaedic articles cited in this review.

Results

Cadaveric training models and surgical simulation

Advancing technical complexity taught within reduced work-hour training programs has driven the need for alternative strategies in arthroscopic skill development. Traditionally, the cadaver specimen in the bioskills laboratory has remained the highest fidelity model [23]. Few would contest how closely the human cadaveric specimen reproduces arthroscopic conditions or the value of positional and tactile feedback using instrumentation in this environment. The use of fresh cadaveric specimens as the primary teaching platform in instructional courses for board certified surgeons supports this claim. The educational benefits of managing the nuances of arthroscopic equipment and troubleshooting problems with fluid management, the light source, and shavers should also not be underestimated [17]. In addition, when arthroscopically trained surgeons were polled on training methods contributing to self-perceived proficiency in all-arthroscopic rotator cuff repair, practice on cadaveric models ranked third, behind only fellowship training and hands-on courses [24].

Financial considerations as well as specimen availability limit formal arthroscopic training on cadavers within most orthopaedic program curricula [4]. The cost of acquiring specimens combined with the inherent costs of maintaining the equipment and personnel of a bioskills laboratory are difficult to quantify and usually depend on industry support and/or sponsorship. There are also concerns regarding uniformity between specimens with variability in both anatomy and internal pathology [4].

To avoid these obstacles, the concept of computer-based simulation for arthroscopic training and skill acquisition has emerged. Embraced by the aviation industry, simulators remain a core component of pilot training and credentialing [11]. The development of less-expensive high performance computers combined with advances in graphical and force-feedback technology (haptics) has accelerated this movement. Proposed simulators would allow for the quantitative assessment of technical ability performed within the confines of a safe and controlled environment. Advantages include the absence of time constraints or supervising faculty, uniform training scenarios of adjustable complexity and pathology, as well as substantial savings from costly disposable equipment and training time within the operating room [15, 23].

The use of laparoscopic and endoscopic simulators has been incorporated into many training programs, as the validity of such models has been previously established [25–30]. A systematic review of randomized controlled trials of laparoscopic simulators reported improved task performance by trainees and a greater reduction in operating time, error and unnecessary movements as compared to standard laparoscopic training [31].

In contrast to laparoscopy, the focus of the arthroscopic literature has been the validation of particular simulators as this technology continues to be refined (Table 1). The notion of construct validity, the correlation between arthroscopy expertise and simulator performance, has been demonstrated within the shoulder [5] and knee models [12, 13, 32, 33]. Alternatively, transfer validity is the correlation between performance in the simulator and that in a cadaver model or actual surgical procedures.

Knee simulators have been shown to reliably distinguish between novice and expert arthroscopists [12, 32] and demonstrate the learning potential of identifying anatomical landmarks and triangulation skills [35]. There is only a single study demonstrating the transfer validity of arthroscopic skills to the operating room for diagnostic knee arthroscopy [13]. However, there was no true control group, as the study only compared simulator training with no training.

Outcome measures that were able to discriminate skill level and expertise in shoulder simulators include time to completion of tasks, distance and path traveled by the probe, and the number of probe collisions [5, 11, 15, 34]. A follow-up study conducted at the 3-year period showed significantly improved simulator performance after an increase in arthroscopic experience [10]. A positive correlation of arthroscopic task performance between simulator and cadaveric models has also been observed in shoulder arthroscopy [15]. A subsequent investigation demonstrated a significant relationship between the performance of basic arthroscopic tasks in a simulator model and resident arthroscopic experience, supporting the use of simulators as beneficial educational tools to improve arthroscopic skills [36].

Technological advances have made the potential widespread use of simulators more affordable, but additional hurdles exist. The availability of content experts, mainly surgeons who can provide domain-specific surgical knowledge to allow developers to generate realistic simulations, is a limiting factor [9]. Further understanding of the psychomotor and cognitive components of the surgical process is still necessary for its translation into the virtual world.

Psychomotor analysis and arthroscopic technical ability

The technical capabilities of the surgeon continue to expand as minimally invasive surgery evolves. This is especially true in arthroscopy, where triangulation and visual-spatial coordination are essential for task completion. This has been accompanied by growing interest in methods of evaluation to further refine psychomotor skills. Measuring a sensitive technical parameter could provide an objective marker of arthroscopic technical ability used to validate simulators and evaluate trainee performance [37]. These parameters can be characterized into those measuring force patterns (haptics) and those focused on trajectory data and motion analysis (Table 2).

Analysis of force sensors has been reported as a valuable method to assess interference between surgical tools and tissue in endoscopic sinus surgery and laparoscopic surgical training [41, 42]. In arthroscopy, excessive force applied through instruments may result in iatrogenic damage to the patient, often as damage to articular cartilage [11, 23, 43]. Therefore, measurements of force may provide an objective means of evaluating tactile surgical performance. Assessment of force torque signatures has been shown to correlate with level of arthroscopic experience in the knee, where expert surgeons had fewer collision incidences, greater variety of force magnitudes and superior efficiency [38]. The use of excessive and unnecessary force patterns by trainees was also demonstrated in a knee simulator when compared to that of experienced surgeons [32]. However, distinguishing harmful from harmless contact in tissue manipulation and dissection can be challenging, and these studies were small and often lacked a complete assessment of each area of the knee. The concept of absolute maximum probing force (AMPF) during menisci manipulation has been introduced and significant differences between the expert and novice arthroscopists have been identified [39].
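As a rough illustration of how a peak-force parameter such as AMPF could be extracted from sensor data, the sketch below takes the largest force magnitude in a three-axis recording. The exact definition used in [39] is not given in this review, so the function name, the three-axis sample layout and the units (newtons) are assumptions made only for illustration.

```python
import math


def absolute_maximum_probing_force(samples):
    """Return the largest force magnitude in a list of (fx, fy, fz) samples.

    Assumption: each sample is a three-axis force reading in newtons taken
    while the probe is in contact with the meniscus.
    """
    if not samples:
        raise ValueError("at least one force sample is required")
    # Magnitude of each force vector, then the peak over the recording.
    return max(math.sqrt(fx * fx + fy * fy + fz * fz) for fx, fy, fz in samples)


# Hypothetical recording; the values are invented for the example.
recording = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (1.0, 2.0, 2.0)]
peak_force = absolute_maximum_probing_force(recording)
```

A single peak value ignores how long and how often high forces occur, which is one reason such parameters are described here as adjuncts rather than complete assessments.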

Electromagnetic motion tracking systems have been employed to plot instrument tip trajectory as an objective evaluation tool. The validity of motion analysis to assess surgical skills in terms of precision and economy of movement has been shown within the laparoscopy literature [44, 45]. In knee arthroscopic simulators, level of expertise has been associated with reduced probe path traveled and number of movements and improved economy of movements [37]. Similarly, the path length of the probe and scissors was substantially shorter and probe velocity faster in more experienced surgeons when performing partial meniscectomy in knee models [32]. These findings have also been demonstrated in virtual reality simulators of the shoulder, where probe path length was shorter for specialists and probe velocity was nearly double that of novices [5]. Reduced traveled probe distance has been suggested to correlate with smoothness of scope manipulation during shoulder joint inspection and probing tasks. Yet, motion analysis investigations have only been performed within a simulated environment and only involving basic arthroscopic tasks. It is unclear if improved efficiency of movements in these models translates into improved performance within the operating theatre.
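The path length and velocity parameters discussed above can be derived from tracked tip positions with simple arithmetic. The following is a minimal sketch, assuming the tracker reports three-dimensional tip coordinates at a fixed sampling rate; the function names and sample data are hypothetical and do not reproduce the processing used in the cited studies.

```python
import math


def path_length(points):
    """Total distance traveled: sum of straight-line steps between samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def mean_velocity(points, sample_rate_hz):
    """Average tip speed: path length divided by the recording duration."""
    if len(points) < 2:
        raise ValueError("at least two position samples are required")
    duration_s = (len(points) - 1) / sample_rate_hz
    return path_length(points) / duration_s


# Hypothetical probe-tip positions (mm) sampled at 2 Hz.
tip_positions = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
total_mm = path_length(tip_positions)
speed_mm_per_s = mean_velocity(tip_positions, sample_rate_hz=2.0)
```

For the same task, a shorter path length at a higher mean velocity corresponds to the pattern reported for more experienced surgeons.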

In addition to force and motion analysis, simple visual parameters have been described as an objective method for evaluating technical skill [40]. The prevalence of instrument loss and lookdowns, together with triangulation time, can discriminate between novice, resident and expert skill levels in a knee simulator.

Validated assessment tools

The current paradigm of arthroscopic training relies on the apprenticeship model where residents are evaluated by a precepting surgeon as their level of involvement is subsequently increased. The subjectivity of this method has been criticized and shown to not necessarily reflect the actual level of skill [23, 43]. This assessment is not based on a pre-determined level of performance, but rather on global assessment by the precepting surgeon partly determined by that surgeon’s experience and spectrum of patients within their practice [9]. Ideally, an assessment tool should be feasible and practical while remaining as objective as possible [37, 46].

The implementation of various procedure-specific checklists [47–49] and global rating scales [50–52] has been well described in other surgical disciplines and the Objective Structured Assessment of Technical Skill (OSATS) is the most widely accepted “gold standard” for objective skills assessment [53]. Yet, evidence suggests that these methods are valid for feedback and measuring progress of training rather than examination or credentialing [54].

Within orthopaedics, particularly arthroscopy, research into objective evaluation techniques is more limited (Table 3). The Basic Arthroscopic Knee Scoring System (Additional file 1: Appendix 1-A, 1-B) is a two-part assessment that has been validated in cadaver specimens [17]. It is composed of a task-specific checklist (TSCL) measuring what components of a diagnostic arthroscopy and partial meniscectomy a subject completes and a global rating scale (GRS) documenting how well these tasks are completed. Both the TSCL and the GRS have been shown to differentiate levels of arthroscopic skill and objectively evaluate basic arthroscopic proficiency in the bioskills laboratory [17].

The Orthopaedic Competence Assessment Project, developed by the British Orthopaedic Specialist Advisory Committee, is part of the competency-based training structure implemented by the surgical royal colleges in the United Kingdom [55, 58]. It consists of an intra-operative technique section comprising 14 criteria, but has not been subjected to independent testing. However, a modification of this procedural-based assessment (Additional file 2: Appendix 2-A) combined with an OSATS global rating scale (Additional file 2: Appendix 2-B) was developed to evaluate the transfer validity of a simulator in diagnostic knee arthroscopy [13]. Although improved performance in the simulator-trained group was demonstrated, the only comparison was an untrained group of individuals.

Recently, more comprehensive knee scoring systems have been introduced. The Arthroscopic Skills Assessment Form is a 100-point tool used to objectively evaluate diagnostic knee arthroscopy, assigning points for correctly identifying structures and time to completion as well as point deductions for iatrogenic cartilage injury [56]. It was able to distinguish between the novice, experienced and expert arthroscopists in the cadaver knee model. The Objective Assessment of Arthroscopic Skills (OAAS) instrument consists of multiple skill domains, each rated on an expertise-based scale with 5 skill-level options [57]. When combined with an anatomical checklist, the OAAS discriminated between skill levels across various stages of training with excellent internal consistency and test-retest reliability.
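Internal consistency of a multi-domain rating instrument is commonly summarized with Cronbach's alpha. The review does not state which statistic was used in [57], so the sketch below is an assumption-laden illustration of the general calculation, alpha = k/(k-1) × (1 − Σ item variances / variance of total scores), applied to invented ratings rather than to any published data.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a table of ratings.

    ratings: one row per assessed subject, one column per skill domain.
    Assumption: every row has the same number of domain scores.
    """
    n_items = len(ratings[0])
    if n_items < 2 or len(ratings) < 2:
        raise ValueError("need at least two domains and two subjects")
    # Sample variance of each domain (column) across subjects.
    item_variance_sum = sum(variance(column) for column in zip(*ratings))
    # Sample variance of each subject's total score.
    total_variance = variance([sum(row) for row in ratings])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 5-point ratings for four subjects across three domains.
example_ratings = [[4, 5, 4], [2, 3, 3], [5, 5, 4], [1, 2, 2]]
alpha = cronbach_alpha(example_ratings)
```

Values closer to 1 indicate that the domains vary together across subjects, which is the sense in which an instrument is said to have high internal consistency.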

Quantifying arthroscopic proficiency

Despite being amongst the most commonly performed procedures by orthopaedic surgeons, consensus on what constitutes arthroscopic competence and the number of procedures to attain it remains uncertain [16, 18]. This is compounded by increasing technical sophistication of procedures and the demand for accountability and satisfactory outcomes by patients [59]. Competency in arthroscopy typically develops during completion of a residency curriculum as defined by the Residency Review Committee for the ACGME, but there is no suggestion for a recommended case volume of procedures [19]. Certification examinations test for proficiency in content comprehension and decision-making capabilities, yet there is no objective testing to evaluate arthroscopic technical competencies at the end of residency [20–22].

Objective data regarding competence in arthroscopy is sparse and guidelines specifying achievement and maintenance of competence are vague. The Arthroscopy Association of North America (AANA) does not quantify competence, but only requires that 50 arthroscopic cases be performed annually to maintain active membership [60]. However, the AANA does acknowledge that completion of an orthopaedic residency does not guarantee competence in arthroscopy and that privileges should be granted by the regulating bodies of individual hospitals and should consist of an observational period for direct skill assessment [61]. The American Board of Orthopedic Surgery (ABOS) requires a one-year accredited ACGME sports medicine fellowship and at least 75 arthroscopy cases to be eligible for subspecialty certification in sports medicine [62].

Considerable variation exists when attempting to assign a numerical value for arthroscopic competency in the literature. A survey of U.S. orthopaedic department chairs and sports medicine fellowship directors identified substantial variability in the number of repetitions to become proficient in arthroscopy [18]. For instance, the average number for diagnostic arthroscopy of the knee was 45 with suggested repetitions ranging from 8 to 250. There was also a tendency for physicians who perform little or no arthroscopy to underestimate the experience needed for proficiency. This finding was confirmed in a similar survey performed in Europe amongst orthopaedic residents and attending staff, which also identified a trend for residents to overestimate the average number of cases required for competency [63]. Here, a mean of 120 procedures was estimated by residents for arthroscopic ACL reconstruction compared to 90 by staff physicians.

Discussion

The current paradigm of arthroscopic training combined with increased complexity and frequency of procedures has led to questioning of its adequacy. This review examines arthroscopic skill development constructs, objective assessment tools, and guidelines regarding arthroscopic competencies.

Cadaver specimens are a highly regarded training modality for arthroscopic technical skill development and remain the gold standard for training outside of the operating room. Despite concerns regarding pathology consistency in specimens, cost and availability are the primary constraints to their widespread use [4]. Synthetic and plastic bone models have the advantage of anatomical reproducibility without maintenance or ethical issues, but have been criticized for a lack of face validity [64].

Computer-based simulators are moving from the experimental stages with established construct validity in knee and shoulder arthroscopy [5, 12, 13, 32, 33]. Improved, less-expensive computer hardware has made the technology more readily available, fueling the investigation of their training potential in arthroscopic task performance. These studies have high levels of internal validity and consistency, although most involve only basic arthroscopic skills, such as orientation and triangulation, or only demonstrate improved performance in individuals with no previous arthroscopic experience [65]. Likewise, most validated simulators are only sensitive enough to discriminate between expert and novice skill levels [66]. The ability to detect smaller, yet clinically significant differences between intermediate skill levels is required to establish benchmarks and provide objective feedback to the training population of residents.

Studies focusing on complex tasks, such as simulated arthroscopic meniscal repair, have exhibited learning curves and skill retention, but whether this translates into improved performance within the operating room has not yet been established [67]. Two systematic reviews have failed to identify sufficient evidence of transfer validity within the arthroscopic literature [66, 68]. This is also complicated by the heterogeneity of existing simulators being subjected to validity testing [66]. Further high-quality studies are required before the widespread acceptance of these tools into mainstream arthroscopic training programs. This includes the establishment of skill-sensitive simulators with standardized validity protocols that consistently translate into improved technical performance in the operating room.

Surgical dexterity focusing on parameters extracted from instrument force and trajectory data may provide an alternate means of objective evaluation. A greater variety of force signatures and a reduction in excessive and unnecessary probe forces by expert compared to novice arthroscopists has been demonstrated within the knee model [32, 38]. The use of motion analysis to discriminate between levels of arthroscopic experience in terms of economy of instrument movement and probe velocity has been validated in both knee and shoulder simulation [5, 32, 37, 44, 45]. As with arthroscopic simulations and cadavers, psychomotor analysis has only been validated when performing basic arthroscopic tasks predominantly in simulated environments and does not provide a comprehensive assessment of performance. However, these parameters may serve as potential adjuncts to traditional means of evaluation and have a role in selecting individuals for surgical disciplines based on innate arthroscopic ability. Significant differences in multiple motion analysis parameters were shown in medical students who failed to achieve competence despite sustained practice when completing an arthroscopic task in knee and shoulder models compared to those who achieved competence [69, 70].

Traditional arthroscopic training during residency lacks a standardized, objective evaluation system. The existing preceptor-dependent model is subjective and inefficient in terms of time and cost [2, 11, 23, 43]. There are a limited number of studies dedicated to the development and validation of comprehensive assessments of arthroscopic technical skills. The Basic Arthroscopic Knee Scoring System can discriminate between different levels of arthroscopic expertise, but has only been validated in cadaver specimens and when performing basic arthroscopic tasks [17]. Similarly, modifications to the intra-operative technique guidelines of the Orthopaedic Competence Assessment Project and the addition of a tailored OSATS scale were applied to assess diagnostic knee arthroscopy [13, 55]. The project demonstrated transfer validity to the operating theatre, but simulator-trained subjects were compared to those with no training at all. More recently, comprehensive global assessment instruments such as the Arthroscopic Skills Assessment Form and the OAAS instrument have been shown to discriminate between skill levels across various stages of training and provide additional domains of evaluation with high levels of internal consistency. Objective assessment tools are essential for effective and efficient learning, as deficiencies in performance are difficult to correct without objective feedback [17]. Yet, few such instruments exist within the arthroscopic literature, particularly for the shoulder.

The case volume required to be considered competent in a specific arthroscopic procedure remains uncertain [16, 18]. The Residency Review Committee for the ACGME only requires a log of accumulated arthroscopic procedures and no objective evaluation of technical skills exists at the completion of residency [20–22]. The AANA does not designate a numerical value to be proficient in arthroscopy and concedes that residency training alone does not guarantee competency [60, 61]. Opinion on what constitutes a sufficient number of repetitions of a procedure varies considerably when surveying the orthopaedic community and there is a tendency for underestimation by those who perform arthroscopy sparingly [18, 63]. There is suggestion that proficiency in arthroscopy is only attained after completing a case range equivalent to that of a sports medicine fellowship [17]. Few would contest that there is no substitute for experience, but how much is needed and when proficiency is achieved remains unknown.

Conclusion

There is uncertainty concerning the adequacy of arthroscopic training and the best means to achieve technical competencies. Skill acquisition utilizing surgical simulation requires further demonstration of transfer validity and the application of complex arthroscopic tasks in these environments. Valid assessment tools evaluating technical performance are required to establish objective parameters in arthroscopic training to generate standardized benchmarks of competency and ultimately improve technical proficiency.

References

Garrett WE, Swiontkowski MF, Weinstein JN, Callaghan J, Rosier RN, Berry DJ, Harrast J, Derosa GP: American Board of Orthopaedic Surgery Practice of the Orthopaedic Surgeon: Part-II, certification examination case mix. J Bone Joint Surg Am. 2006, 88: 660-667. 10.2106/JBJS.E.01208.

Farnworth LR, Lemay DE, Wooldridge T, Mabrey JD, Blaschak MJ, DeCoster TA, Wascher DC, Schenck RC: A comparison of operative times in arthroscopic ACL reconstruction between orthopaedic faculty and residents: the financial impact of orthopaedic surgical training in the operating room. Iowa Orthop J. 2001, 21: 31-35.

Bridges M, Diamond DL: The financial impact of teaching surgical residents in the operating room. Am J Surg. 1999, 177: 28-32. 10.1016/S0002-9610(98)00289-X.

Cannon WD, Eckhoff DG, Garrett WE, Hunter RE, Sweeney HJ: Report of a group developing a virtual reality simulator for arthroscopic surgery of the knee joint. Clin Orthop Relat Res. 2006, 442: 21-29. 10.1097/01.blo.0000197080.34223.00.

Gomoll AH, O’Toole RV, Czarnecki J, Warner JJ: Surgical experience correlates with performance on a virtual reality simulator for shoulder arthroscopy. Am J Sports Med. 2007, 35: 883-888. 10.1177/0363546506296521.

Irani JL, Mello MM, Ashley SW: Surgical residents' perceptions of the effects of the ACGME duty hour requirements 1 year after implementation. Surgery. 2005, 138 (2): 246-253. 10.1016/j.surg.2005.06.010.

Zuckerman JD, Kubiak EN, Immerman I, Dicesare P: The early effects of code 405 work rules on attitudes of orthopaedic residents and attending surgeons. J Bone Joint Surg Am. 2005, 87 (4): 903-908. 10.2106/JBJS.D.02801.

Ritchie WP: The measure of competence: Current plans and future initiatives of the American Board of Surgery. Bull Am Coll Surg. 2001, 86: 10-15.

Michelson JD: Simulation in orthopaedic education: an overview of theory and practice. J Bone Joint Surg Am. 2006, 88: 1405-1411. 10.2106/JBJS.F.00027.

Gomoll AH, Pappas G, Forsythe B, Warner JJ: Individual skill progression on a virtual reality simulator for shoulder arthroscopy: a 3-year follow-up study. Am J Sports Med. 2008, 36: 1139-1142. 10.1177/0363546508314406.

Pedowitz RA, Esch J, Snyder S: Evaluation of a virtual reality simulator for arthroscopy skills development. Arthroscopy. 2002, 18: E29. 10.1053/jars.2002.33791.

McCarthy A, Harley P, Smallwood R: Virtual arthroscopy training: do the “virtual skills” developed match the real skills required?. Stud Health Technol Inform. 1999, 62: 221-227.

Howells NR, Gill HS, Carr AJ, Price AJ, Rees JL: Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br. 2008, 90: 494-499. 10.1302/0301-620X.90B4.20414.

Srivastava S, Youngblood PL, Rawn C, Hariri S, Heinrichs WL, Ladd AL: Initial evaluation of a shoulder arthroscopy simulator: establishing construct validity. J Shoulder Elbow Surg. 2004, 13: 196-205. 10.1016/j.jse.2003.12.009.

Martin KD, Belmont PJ, Schoenfeld AJ, Todd M, Cameron KL, Owens BD: Arthroscopic basic task performance in shoulder simulator model correlates with similar task performance in cadavers. J Bone Joint Surg Am. 2011, 93 (21): e1271-e1275.

Hall MP, Kaplan KM, Gorczynski CT, Zuckerman JD, Rosen JE: Assessment of arthroscopic training in U.S. orthopedic surgery residency programs: a resident self-assessment. Bull NYU Hosp Jt Dis. 2010, 68 (1): 5-10.

Insel A, Carofino B, Leger R, Arciero R, Mazzocca AD: The development of an objective model to assess arthroscopic performance. J Bone Joint Surg Am. 2009, 91 (9): 2287-2295. 10.2106/JBJS.H.01762.

O'Neill PJ, Cosgarea AJ, Freedman JA, Queale WS, McFarland EG: Arthroscopic proficiency: a survey of orthopaedic sports medicine fellowshipdirectors and orthopaedic surgery department chairs. Arthroscopy. 2002, 18 (7): 795-800. 10.1053/jars.2002.31699.

Residency Review Committee: Orthopaedic Surgery Program Requirements 2007. 2007, Chicago, Illinois: The Accreditation Council for Graduate MedicalEducation.

Bergfeld JA: Issues with accreditation and certification of orthopedic surgery fellowships. J Bone Joint Surg Am. 1998, 80: 1833-1836.

Omer GE: Certificates of added qualifications in orthopaedic surgery: A position in support of the certificates. J Bone Joint Surg Am. 1994, 76: 1599-1602.

Sarmiento A: Certificates of added qualification in orthopaedic surgery: A position against the certificates. J Bone Joint Surg Am. 1994, 76: 1603-1605.

Mabrey JD, Gillogly SD, Kasser JR, Sweeney HJ, Zarins B, Mevis H, Garrett WE, Poss R, Cannon WD: Virtual reality simulation of arthroscopy of the knee. Arthroscopy. 2002, 18: E28. 10.1053/jars.2002.33790.

Vitale MA, Kleweno CP, Jacir AM, Levine WN, Bigliani LU, Ahmed CS: Training resources in arthroscopic rotator cuff repair. J Bone Joint Surg Am. 2007, 89: 1393-1398. 10.2106/JBJS.F.01089.

Adrales GL, Park AE, Chu UB, Witzke DB, Donnelly MB, Hoskins JD, Mastrangelo MJ, Gandsas A: A valid method of laparoscopic simulation training and competence assessment. J Surg Res. 2003, 114: 156-162. 10.1016/S0022-4804(03)00315-9.

Fried GM, Feldman LS, Vassiliou MC, Fraser SA, Stanbridge D, Ghitulescu G, Andrew CG: Proving the value of simulation in laparoscopic surgery. Ann Surg. 2004, 240: 518-528. 10.1097/01.sla.0000136941.46529.56.

Brunner WC, Korndorffer JR, Sierra R, Dunne JB, Yau CL, Corsetti RL, Slakey DP, Townsend MC, Scott DJ: Determining standards for laparoscopic proficiency using virtual reality. Am Surg. 2005, 71: 29-35.

Schijven M, Jakimowicz J: Construct validity: experts and novices performing on the Xitact LS500 laparoscopy simulator. Surg Endosc. 2003, 17: 803-810. 10.1007/s00464-002-9151-9.

Dorafshar AH, O’Boyle DJ, McCloy RF: Effects of a moderate dose of alcohol on simulated laparoscopic surgical performance. Surg Endosc. 2002, 16: 1753-1758. 10.1007/s00464-001-9052-3.

Ahlberg G, Heikkinen T, Iselius L, Leijonmarck CE, Rutqvist J, Arvidsson D: Does training in a virtual reality simulator improve surgical performance?. Surg Endosc. 2002, 16: 126-129. 10.1007/s00464-001-9025-6.

Gurusamy K, Aggarwal R, Palanivelu L, Davidson BR: Systematic review of randomized controlled trials on the effectiveness of virtual reality training for laparoscopic surgery. Br J Surg. 2008, 95: 1088-1097. 10.1002/bjs.6344.

Tashiro Y, Miura H, Nakanishi Y, Okazaki K, Iwamoto Y: Evaluation of skills in arthroscopic training based on trajectory and force data. Clin Orthop Relat Res. 2009, 467: 546-552. 10.1007/s11999-008-0497-8.

Sherman KP, Ward JW, Wills DP, Sherman VJ, Mohsen AM: Surgical trainee assessment using a VE knee arthroscopy training system (VE-KATS): Experimental results. Stud Health Technol Inform. 2001, 81: 465-470.

Smith S, Wan A, Taffinder N, Read S, Emery R, Darzi A: Early experience and validation work with Procedicus VA—The Prosolvia virtual reality shoulder arthroscopy trainer. Stud Health Technol Inform. 1999, 62: 337-343.

Bliss JP, Hanner-Bailey HS, Scerbo MW: Determining the efficacy of an immersive trainer for arthroscopy skills. Stud Health Technol Inform. 2005, 111: 54-56.

Martin KD, Cameron K, Belmont PJ, Schoenfeld A, Owens BD: Shoulder arthroscopy simulator performance correlates with resident and shoulder arthroscopy experience. J Bone Joint Surg Am. 2012, 94 (21): e160.

Howells NR, Brinsden MD, Gill RS, Carr AJ, Rees JL: Motion analysis: a validated method for showing skill levels in arthroscopy. Arthroscopy. 2008, 24 (3): 335-342. 10.1016/j.arthro.2007.08.033.

Chami G, Ward JW, Phillips R, Sherman KP: Haptic feedback can provide an objective assessment of arthroscopic skills. Clin Orthop Relat Res. 2008, 466 (4): 963-968. 10.1007/s11999-008-0115-9. Epub 2008 Jan 23.

Tuijthof G, Horeman T, Schafroth M, Blankevoort L, Kerkhoffs GMM: Probing forces of menisci: what levels are safe for arthroscopic surgery?. Knee Surg Sports Traumatol Arthrosc. 2011, 19 (2): 248-254. 10.1007/s00167-010-1251-9.

Alvand A, Khan T, Al-Ali S, Jackson WF, Price AJ, Rees JL: Simple visual parameters for objective assessment of arthroscopic skill. J Bone Joint Surg Am. 2012, 94 (13): e97.

Rosen J, Hannaford B, MacFarlane MP, Sinanan MN: Force controlled and teleoperated endoscopic grasper for minimally invasive surgery: experimental performance evaluation. IEEE Trans Biomed Eng. 1999, 46: 1212-1221. 10.1109/10.790498.

Rosen J, Hannaford B, Richards CG, Sinanan MN: Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills. IEEE Trans Biomed Eng. 2001, 48: 579-591. 10.1109/10.918597.

Darzi A, Smith S, Taffinder N: Assessing operative skill. Needs to become more objective. BMJ. 1999, 318 (7188): 887-888. 10.1136/bmj.318.7188.887.

Datta V, Mackay S, Mandalia M, Darzi A: The use of electromagnetic motion tracking analysis to objectively measure open surgical skill in the laboratory-based model. J Am Coll Surg. 2001, 193: 479-485. 10.1016/S1072-7515(01)01041-9.

Taffinder N, Smith S, Mair J, Russell R, Darzi A: Can a computer measure surgical precision? Reliability, validity and feasibility of ICSAD. Surg Endosc. 1999, 13 (suppl 1): 81.

Sidhu RS, Grober ED, Musselman LJ, Reznick RK: Assessing competency in surgery: where to begin?. Surgery. 2004, 135: 6-20. 10.1016/S0039-6060(03)00154-5.

Eubanks TR, Clements RH, Pohl D, Williams N, Schaad DC, Horgan S, Pellegrini C: An objective scoring system for laparoscopic cholecystectomy. J Am Coll Surg. 1999, 189: 566-574. 10.1016/S1072-7515(99)00218-5.

Larson JL, Williams RG, Ketchum J, Boehler ML, Dunnington GL: Feasibility, reliability and validity of an operative performance rating system for evaluating surgery residents. Surgery. 2005, 138: 640-647. 10.1016/j.surg.2005.07.017.

Sarker SK, Chang A, Vincent C: Technical and technological skills assessment in laparoscopic surgery. JSLS. 2006, 10: 284-292.

Bramson R, Sadoski M, Sanders CW, van Walsum K, Wiprud R: A reliable and valid instrument to assess competency in basic surgical skills in second-year medical students. South Med J. 2007, 100: 985-990. 10.1097/SMJ.0b013e3181514a29.

Chou B, Bowen CW, Handa VL: Evaluating the competency of gynecology residents in the operating room: validation of a new assessment tool. Am J Obstet Gynecol. 2008, 199: 571.e1-571.e5. 10.1016/j.ajog.2008.06.082.

Doyle JD, Webber EM, Sidhu RS: A universal global rating scale for the evaluation of technical skills in the operating room. Am J Surg. 2007, 193: 551-555. 10.1016/j.amjsurg.2007.02.003.

Martin JA, Regehr G, Reznick R, MacRae H, Murnaghan J, Hutchison C, Brown M: Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997, 84: 273-278. 10.1002/bjs.1800840237.

van Hove PD, Tuijthof GJ, Verdaasdonk EG, Stassen LP, Dankelman J: Objective assessment of technical surgical skills. Br J Surg. 2010, 97 (7): 972-987. 10.1002/bjs.7115.

Orthopaedic Competence Assessment Project. http://www.ocap.rcsed.ac.uk/site/717/default.aspx (date last accessed 16 Dec. 2011).

Elliott MJ, Caprise PA, Henning AE, Kurtz CA, Sekiya JK: Diagnostic knee arthroscopy: a pilot study to evaluate surgical skills. Arthroscopy. 2012, 28 (2): 218-224. 10.1016/j.arthro.2011.07.018.

Slade Shantz JA, Leiter JR, Collins JB, MacDonald PB: Validation of a global assessment of arthroscopic skills in a cadaveric knee model. Arthroscopy. 2013, 29 (1): 106-112. 10.1016/j.arthro.2012.07.010.

Intercollegiate Surgical Curriculum Project. http://www.iscp.ac.uk (date last accessed 16 Dec. 2011).

Rineberg BA: Managing change during complex times. J Bone Joint Surg Am. 1990, 72: 799-800.

Arthroscopy Association of North America: Suggested guidelines for the practice of arthroscopic surgery. 2011, Rosemont, IL

Morris AH, Jennings JE, Stone RG, Katz JA, Garroway RY, Hendler RC: Guidelines for privileges in arthroscopic surgery. Arthroscopy. 1993, 9: 125-127. 10.1016/S0749-8063(05)80359-7.

The American Board of Orthopedic Surgery: Available at https://www.abos.org/certification/sports-subspecialty.aspx. Accessed on December 16, 2011.

Leonard M, Kennedy J, Kiely P, Murphy PG: Knee arthroscopy: how much training is necessary? A cross-sectional study. Eur J Orthop Surg Traumatol. 2007, 17 (4): 359-362. 10.1007/s00590-007-0197-1.

Leong JJ, Leff DR, Das A, Aggarwal R, Reilly P, Atkinson HD, Emery RJ, Darzi AW: Validation of orthopaedic bench models for trauma surgery. J Bone Joint Surg Br. 2008, 90 (7): 958-965. 10.1302/0301-620X.90B7.20230.

Andersen C, Winding TN, Vesterby MS: Development of simulated arthroscopic skills. Acta Orthop. 2011, 82 (1): 90-95. 10.3109/17453674.2011.552776.

Slade Shantz JA, Leiter JR, Gottschalk T, Macdonald PB: The internal validity of arthroscopic simulators and their effectiveness in arthroscopic education. Knee Surg Sports Traumatol Arthrosc. 2012, 10.1007/s00167-012-2228-7. Epub ahead of print.

Jackson WF, Khan T, Alvand A, Al-Ali S, Gill HS, Price AJ, Rees JL: Learning and retaining simulated arthroscopic meniscal repair skills. J Bone Joint Surg Am. 2012, 94 (17): e132.

Modi CS, Morris G, Mukherjee R: Computer-simulation training for knee and shoulder arthroscopic surgery. Arthroscopy. 2010, 26 (6): 832-840. 10.1016/j.arthro.2009.12.033.

Alvand A, Auplish S, Gill H, Rees J: Innate arthroscopic skills in medical students and variation in learning curves. J Bone Joint Surg Am. 2011, 93 (19): e115(1-9).

Alvand A, Auplish S, Khan T, Gill HS, Rees JL: Identifying orthopaedic surgeons of the future: the inability of some medical students to achieve competence in basic arthroscopic tasks despite training: a randomised study. J Bone Joint Surg Br. 2011, 93 (12): 1586-1591. 10.1302/0301-620X.93B12.27946.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6920/13/61/prepub

Acknowledgements

There are no acknowledgements that the authors wish to disclose.

Additional information

Competing interests

Each author certifies that he or she has no commercial associations (eg, consultancies, stock ownership, equity interest, patent/licensing arrangements, etc) that might pose a conflict of interest in connection with the submitted article.

Authors’ contributions

JLH conceived the manuscript, conducted the literature search and helped draft the manuscript. CV participated in the design of the manuscript and helped draft, revise and critically appraise manuscript content. Both authors read and approved the final manuscript.

Justin L Hodgins and Christian Veillette contributed equally to this work.

Electronic supplementary material

12909_2012_730_MOESM2_ESM.docx

Additional file 2: Appendix 2-A: Intra-operative technique section of the Orthopaedic Competence Assessment Project arthroscopy procedure-based assessment. Appendix 2-B: Objective Structured Assessment of Technical Skill (OSATS) Global Rating Scale. (DOCX 15 KB)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Hodgins, J.L., Veillette, C. Arthroscopic proficiency: methods in evaluating competency. BMC Med Educ 13, 61 (2013). https://doi.org/10.1186/1472-6920-13-61

DOI: https://doi.org/10.1186/1472-6920-13-61