Abstract

Background

There is a lack of consensus regarding the use of quality scores in diagnostic systematic reviews. The objective of this study was to use different methods of weighting items included in a quality assessment tool for diagnostic accuracy studies (QUADAS) to produce an overall quality score, and to examine the effects of incorporating these into a systematic review.

Methods

We developed five schemes for weighting QUADAS to produce quality scores. We used three methods to investigate the effects of quality scores on test performance. We used a set of 28 studies that assessed the accuracy of ultrasound for the diagnosis of vesico-ureteral reflux in children.

Results

The different methods of weighting individual items from the same quality assessment tool produced different quality scores. The different scoring schemes ranked different studies in different orders; this was especially evident for the intermediate quality studies. Comparing the results of studies stratified as "high" and "low" quality based on quality scores resulted in different conclusions regarding the effects of quality on estimates of diagnostic accuracy depending on the method used to produce the quality score. A similar effect was observed when quality scores were included in meta-regression analysis as continuous variables, although the differences were less apparent.

Conclusion

Quality scores should not be incorporated into diagnostic systematic reviews. Incorporation of the results of the quality assessment into the systematic review should involve investigation of the association of individual quality items with estimates of diagnostic accuracy, rather than using a combined quality score.

Background

Quality assessment is as important in systematic reviews of diagnostic accuracy studies as it is for any other systematic review. One method of incorporating quality into a review is to use a quality score. Quality scores combine the individual items from a quality assessment tool to provide an overall single score. One of the main problems with quality scores is determining how to weight each item to provide an overall quality score. There is no objective way of doing this and different methods are likely to produce different scores that may lead to different results if these scores are used in the analysis.

There has been much discussion regarding the use of quality scores in the area of clinical trials[1–8]. Although this discussion has not been specific to diagnostic accuracy studies, much of it also applies to this topic area. Previous work illustrating the problems associated with quality scores has used different scales, which not only weighted items differently but also included different items[9]. It has been argued that it was the differences in the items covered by the tools that contributed to the differences found, rather than the use of a combined quality score[2, 3, 6]. The debate regarding quality scores remains unresolved, and quality scores continue to be used as part of the quality assessment process in both therapeutic and diagnostic systematic reviews [10–14]. The Jadad scale, one of the most commonly used quality assessment tools for therapeutic studies, incorporates a quality score[15], as does one of the commonly used diagnostic quality assessment tools[16]. A recent review of existing quality assessment tools for diagnostic accuracy studies found that 12 of 67 tools (18%) incorporated a quality score[17]. A further review of how quality assessment has been incorporated into systematic reviews found that 16% of reviews that performed some form of quality assessment used quality scores as part of this assessment[18].

We are not aware of any work that has examined the effect of applying different weightings to the same quality assessment tool to produce an overall quality score, or of any such work in the area of diagnostic accuracy studies. This project presents a practical example of the problems associated with the use of quality scores in systematic reviews. The aim is to use QUADAS, a quality assessment tool that we recently developed to assess the quality of diagnostic accuracy studies included in systematic reviews[19], to investigate the effect of different weightings on estimates of test performance.

Methods

Scoring methods

QUADAS does not incorporate a quality score. We therefore developed five different schemes for weighting QUADAS (Table 1) to produce an overall study quality score:

1. Equal weighting

All items were weighted equally and scored 1 for yes and 0 for no or unclear.

2. Equal weighting accounting for unclear

All items were weighted equally but scored 2 for yes, 1 for unclear and 0 for no.

3. Weighting according to item type

Items which aimed to detect the presence of bias were scored 3 for yes (items 3, 4, 5, 6, 7, 10, 11, 12), items which aimed to detect sources of variation between studies were scored 2 for yes (item 1) and items which were related to the quality of reporting were scored 1 for yes (items 2, 8, 9, 13, 14). All items were scored 0 for no or unclear.

4. Weighting based on the evidence

The evidence used in the development of QUADAS was used to determine item weighting[18]. Two systematic reviews of the diagnostic literature provided an evidence base for the development of QUADAS. The first was a review of evidence on factors that can lead to bias or variation in the results of diagnostic accuracy studies[20]. For each source of bias or variation, the number of studies that found that a particular source of bias or variation impacted on estimates of diagnostic accuracy was summarised. The second review considered all existing quality assessment tools designed for diagnostic accuracy studies[17]. The proportion of tools that covered each of a list of possible items relating to the quality of diagnostic accuracy studies was summarised. To estimate quality scores using this weighting scheme, items for which there was evidence of bias or variation from at least 5 studies or which were included in at least 75% of existing quality assessment tools were scored 3 for yes (items 1, 5, 10, 11, 12); items for which there was evidence of bias from at least 2 studies and which were included in at least 50% of existing quality assessment tools were scored 2 points for yes (items 3, 6). All other items were given 1 point for yes (items: 4, 7, 8, 9, 13, 14). All items were scored 0 for no or unclear.

5. Subjective scoring

Each item was scored from 1 to 10 based on one author's subjective opinion of its importance. This allowed items which the author considered to be of greater importance to receive a much greater weighting than items considered less important. For example, items such as inclusion of an appropriate patient spectrum and the use of an appropriate reference standard were judged to be much more important than items such as reporting of selection criteria or details of the reference standard. This is reflected in the weightings given to these items.

These weighting schemes are summarised in Table 1. Each different weighting scheme was used to produce an overall quality score, giving a total of five different scores for each study. As the total maximum possible points differed across the scoring schemes, the scores were expressed as the percentage of the maximum possible points for each scoring scheme so that the quality scores could be compared across schemes.
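As an illustration of how the percentage scores can be computed, the following Python sketch implements schemes 1 to 3, whose weights are fully stated in the text above. Schemes 4 and 5 are omitted because their complete weights are given only in Table 1. The function names, the dictionary layout and the example study are assumptions made for illustration and are not part of the original analysis.

```python
# Item groups for scheme 3, as listed in the text (QUADAS items 1 to 14).
BIAS_ITEMS = {3, 4, 5, 6, 7, 10, 11, 12}   # scored 3 for yes
VARIATION_ITEMS = {1}                      # scored 2 for yes
REPORTING_ITEMS = {2, 8, 9, 13, 14}        # scored 1 for yes


def scheme3_weight(item):
    """Points awarded for a 'yes' answer to this item under scheme 3."""
    if item in BIAS_ITEMS:
        return 3
    if item in VARIATION_ITEMS:
        return 2
    return 1


def quality_score(answers, scheme):
    """Return the score as a percentage of the scheme's maximum points.

    answers: dict mapping item number to "yes", "no" or "unclear".
    scheme:  1 (equal), 2 (equal, credit for unclear) or 3 (by item type).
    """
    total = 0
    maximum = 0
    for item, answer in answers.items():
        if scheme == 1:
            yes_points, unclear_points = 1, 0
        elif scheme == 2:
            yes_points, unclear_points = 2, 1
        elif scheme == 3:
            yes_points, unclear_points = scheme3_weight(item), 0
        else:
            raise ValueError("only schemes 1 to 3 are sketched here")
        maximum += yes_points
        if answer == "yes":
            total += yes_points
        elif answer == "unclear":
            total += unclear_points
    return 100.0 * total / maximum


# Hypothetical study: items 1-7 yes, 8-10 unclear, 11-14 no.
example = {i: "yes" for i in range(1, 8)}
example.update({i: "unclear" for i in range(8, 11)})
example.update({i: "no" for i in range(11, 15)})

for scheme in (1, 2, 3):
    print(scheme, round(quality_score(example, scheme), 1))
```

For this hypothetical study the three schemes give different percentages from the same yes, no and unclear coding, which is the behaviour the comparison across schemes is designed to expose.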

Data set

We selected a data set consisting of 28 studies that evaluated ultrasound for the diagnosis of vesico-ureteral reflux in children. These came from a systematic review on the diagnosis and further investigation of urinary tract infection (UTI) in children under 5[21]. The studies were selected because they were heterogeneous in terms of quality and individual study results. They provide two separate data sets within one larger data set as they can be split according to the type of ultrasound used: contrast-enhanced (16 studies) or standard ultrasound (12 studies). Although both types of study evaluated ultrasound and so involve similar quality issues, there were differences in accuracy between the ultrasound types: contrast-enhanced ultrasound is a much more accurate test for vesico-ureteral reflux in children than standard ultrasound.

Thus we were able to investigate whether different quality scores have the same impact on two separate data sets. QUADAS was used in this review to assess the quality of studies. All studies had previously been coded using QUADAS as yes, no or unclear. This coding was carried out by one reviewer and checked by a second reviewer.

Analysis

Methods for investigating the effects of the quality scores on test performance

We used three different methods to investigate the effects of quality scores on test performance. Each method was performed separately for the standard ultrasound studies and for the contrast-enhanced ultrasound studies. For each of the steps involving pooling of studies, standard SROC (summary receiver operating characteristic) methods were used to pool individual study results[22]. The SROC model was estimated by regressing D (log(DOR), where DOR is the diagnostic odds ratio) against S (logit(sensitivity) + logit(1-specificity)), weighting according to sample size, for each study. To account for zero cells in the 2 × 2 tables, 0.5 was added to every cell for all 2 × 2 tables as recommended by Moses et al.[22]. All analyses were carried out using STATA version 8 (StataCorp, College Station, Texas).
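The SROC regression described above can be sketched in Python as follows. This is a minimal illustration rather than the STATA code used in the study: the function names are hypothetical, the weights are assumed to be the uncorrected study sample sizes, and the weighted least-squares fit uses `numpy.linalg.lstsq` on rows scaled by the square root of the weight.

```python
import numpy as np


def logit(p):
    """Log-odds of a proportion p."""
    return np.log(p / (1.0 - p))


def d_and_s(tp, fp, fn, tn):
    """Return D (log DOR) and S for each study, after adding 0.5 to every cell."""
    tp, fp, fn, tn = (np.asarray(x, dtype=float) + 0.5 for x in (tp, fp, fn, tn))
    sens = tp / (tp + fn)
    fpr = fp / (fp + tn)  # 1 - specificity
    return logit(sens) - logit(fpr), logit(sens) + logit(fpr)


def fit_sroc(tp, fp, fn, tn):
    """Weighted least-squares fit of D = a + b * S, weighted by sample size."""
    d, s = d_and_s(tp, fp, fn, tn)
    # Assumption: weights are the uncorrected study sample sizes.
    n = sum(np.asarray(x, dtype=float) for x in (tp, fp, fn, tn))
    design = np.column_stack([np.ones_like(s), s])
    root_w = np.sqrt(n)
    coef, *_ = np.linalg.lstsq(design * root_w[:, None], d * root_w, rcond=None)
    return float(coef[0]), float(coef[1])


# Hypothetical 2 x 2 counts for three studies (not taken from Table 2).
tp, fp, fn, tn = [20, 15, 30], [5, 10, 2], [10, 5, 8], [65, 170, 60]
intercept, slope = fit_sroc(tp, fp, fn, tn)
```

In this parameterisation the intercept is the log DOR at S = 0, so `np.exp(intercept)` gives a summary DOR at that point on the curve.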

a. Ranking of studies

Studies were ranked according to quality score and we investigated whether the ranking of each study was different according to the method used to weight the quality scores. This allowed investigation of whether the use of a summary quality score in a table as an overall indicator of quality is appropriate.
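A ranking of this kind can be sketched as below. The treatment of ties (tied studies share the best available rank) is an assumption, since the text does not state how ties were handled, and the function name and example scores are hypothetical.

```python
def rank_studies(scores):
    """Rank studies from best (1) to worst; tied scores share the same rank.

    scores: dict mapping a study label to its percentage quality score.
    """
    ordered = sorted(scores.values(), reverse=True)
    # index() returns the first position of a score, so ties share a rank.
    return {study: ordered.index(score) + 1 for study, score in scores.items()}


# Hypothetical scores for four studies under one scoring scheme.
ranks = rank_studies({"A": 80.0, "B": 60.0, "C": 60.0, "D": 40.0})
print(ranks)
```

Applying the same function to the scores from each scheme and comparing the resulting ranks per study shows where the schemes disagree.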

b. Difference in estimates of diagnostic accuracy between high and low quality studies

We stratified studies into "high" and "low" quality studies using the quality score. The median quality score was calculated for each scoring scheme. Studies with scores higher than the median score were classified as "high" quality studies, while studies with the median quality score or lower were classified as "low" quality studies. A relative diagnostic odds ratio (RDOR) was calculated for each of the different quality scores by dividing the pooled diagnostic odds ratio (DOR) for the high quality studies by that for the low quality studies.
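The stratification rule and the RDOR calculation can be sketched as follows. The function names and the example values are hypothetical, and the pooled DORs are taken as given inputs here rather than being estimated from an SROC model.

```python
from statistics import median


def split_by_median(scores):
    """Split studies into 'high' (above the median) and 'low' (median or below)."""
    cutoff = median(scores.values())
    high = [study for study, score in scores.items() if score > cutoff]
    low = [study for study, score in scores.items() if score <= cutoff]
    return high, low


def relative_dor(pooled_dor_high, pooled_dor_low):
    """RDOR: pooled DOR of high quality studies divided by that of low quality studies."""
    return pooled_dor_high / pooled_dor_low


# Hypothetical quality scores; the median is 60, so only study D is "high".
high, low = split_by_median({"A": 50.0, "B": 60.0, "C": 60.0, "D": 90.0})
rdor = relative_dor(12.0, 24.0)  # hypothetical pooled DORs
```

Because studies scoring exactly the median are classified as low quality, the two groups can be unequal in size when several studies share the median score. An RDOR below one indicates lower estimates of accuracy in the high quality group.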

c. Quality score as a possible source of heterogeneity

The effects of quality on test performance were investigated using meta-regression analysis. The SROC model was extended to include "quality score" as a continuous variable, assuming a linear relationship between quality score and log DOR. We calculated the RDOR for a 10 point increase in quality by multiplying the coefficient for the quality score obtained from the regression analysis by 10 and then anti-logging it.
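The extended model and the conversion of the regression coefficient to an RDOR can be sketched as below. This is an illustration under assumptions, not the original STATA analysis: the function name is hypothetical, the weights are assumed to be study sample sizes, and the example data are constructed so that the quality coefficient is known in advance.

```python
import numpy as np


def rdor_per_10_points(d, s, quality, n):
    """Fit D = a + b*S + c*quality by weighted least squares; return exp(10*c).

    d, s:    per-study log DOR and S values from the SROC model.
    quality: per-study quality score (percentage scale).
    n:       per-study sample sizes, used as regression weights.
    """
    d, s, quality, n = (np.asarray(x, dtype=float) for x in (d, s, quality, n))
    design = np.column_stack([np.ones_like(s), s, quality])
    root_w = np.sqrt(n)
    coef, *_ = np.linalg.lstsq(design * root_w[:, None], d * root_w, rcond=None)
    # Multiply the quality coefficient by 10, then take the anti-log.
    return float(np.exp(10.0 * coef[2]))


# Constructed example: D depends on quality with coefficient 0.02 exactly.
s = np.array([-1.0, 0.0, 1.0, 2.0, 0.5])
quality = np.array([30.0, 50.0, 70.0, 40.0, 90.0])
d = 1.0 + 0.5 * s + 0.02 * quality
rdor10 = rdor_per_10_points(d, s, quality, [50, 80, 120, 60, 100])
```

With a coefficient of 0.02 per point, the RDOR for a 10 point increase is exp(0.2), that is, the DOR is multiplied by about 1.22 for every 10 points of quality score.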

Results

Table 2 summarises the results for the 28 studies included in this study. It presents the 2 × 2 table results for each study, the results of the quality assessment, and the summary quality scores produced using each of the five scoring schemes. Reading Table 2 vertically, item by item, allows readers to make some judgments about which items might contribute to variations in the scores. Figure 1 shows the results of the studies plotted in receiver operating characteristic (ROC) space, giving an indication of the heterogeneity between studies.

a. Ranking of studies

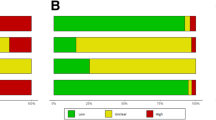

The ranking of the studies using the different quality scores is summarised in Figure 2. For standard ultrasound, all scoring schemes ranked the same three studies as being the best studies, and ranked these in the same order. All scoring schemes also ranked the same study as being of the worst quality. For contrast enhanced ultrasound, scores 1, 2, 3 and 5 ranked the same two studies as being of the best quality. Score 4 ranked these two studies as having the second highest quality score. The study ranked as being the best quality study by score 4 was ranked as being of intermediate quality by the other scoring schemes. All scores ranked the same three studies as being of worst quality, with scores 1, 2, 3 and 4 ranking them in the same order. For both types of ultrasound the different scoring schemes ranked the more intermediate quality studies in different orders.

b. Difference in estimates of diagnostic accuracy between high and low quality studies

The RDOR comparing studies classified as "high" to those classified as "low" quality using each of the five scoring schemes is shown in Figure 3, separately for standard ultrasound and contrast-enhanced ultrasound. For standard ultrasound, scores 1, 2 and 3 gave RDORs suggesting that high quality studies produced lower estimates of diagnostic accuracy than low quality studies. In contrast, the results from schemes 4 and 5 suggested that there was no difference in estimates of the DOR between high and low quality studies. For contrast-enhanced ultrasound, scores 1, 3, 4 and 5 all classified the same set of studies as being of high and low quality. The RDORs for these quality scores suggested that high quality studies produce higher DORs than low quality studies. In contrast, scheme 2 produced an RDOR suggesting that high quality studies produce lower estimates of diagnostic accuracy than low quality studies.

c. Quality score as a possible source of heterogeneity

Figure 4 shows the RDORs for a 10 point increase in quality score for each of the five different quality scores, separately for standard and contrast-enhanced ultrasound. For standard ultrasound, all scoring schemes suggested that high quality studies produce lower DORs than low quality studies. For contrast-enhanced ultrasound, scores 1, 3, 4 and 5 suggested that higher quality studies produce higher DORs than lower quality studies, while score 2 suggested that they produced lower estimates. However, the confidence intervals around these estimates were wide and all included one.

Discussion

This study has shown that using different methods of weighting individual items from the same quality assessment tool can produce different quality scores. Incorporating these quality scores into the results of a review can lead to different conclusions regarding the effect of study quality on estimates of diagnostic accuracy.

Although the ordering of studies using the different quality scores was broadly similar, there were some differences which could lead to different conclusions if they were used in a systematic review. For example, for the contrast-enhanced ultrasound studies, if quality scoring scheme 4 or 5 was used then the study by Bergius and colleagues[23] would be considered to be one of the best quality studies. However, if scoring schemes 1, 2, or 3 were used then this study would be considered to be an average quality study. This suggests that quality scores should not be used as a summary indicator of quality in results tables in systematic reviews. Instead either the results of the whole quality assessment, or key components of the quality assessment, should be reported.

The stratification of studies into high and low quality according to quality score also varied according to the scoring scheme used. Although the confidence intervals for all comparisons were wide and all but one included one, the conclusions regarding the association of study quality and diagnostic accuracy differ according to the scoring scheme used. It is important to note that in practice a reviewer would only use one scoring scheme and so the results from the other scoring schemes would not be available to them: they would have to draw conclusions from the results for the single scoring scheme that they selected. For standard ultrasound, two of the schemes assessed produced an overall quality score that suggested no association between study quality and the diagnostic odds ratio. However, if the other three schemes were used then the conclusion would have been that high quality studies tend to produce lower estimates of diagnostic accuracy than low quality studies. Similarly for contrast-enhanced ultrasound, the conclusion for four of the scoring schemes was that high quality studies tend to produce higher estimates of diagnostic accuracy than low quality studies. In contrast, if the other scoring scheme had been used the conclusions would have been reversed. These results suggest that the use of quality scores to stratify studies into high and low quality studies should be avoided.

The inclusion of quality score as a continuous variable in the meta-regression showed fewer differences between scoring schemes. There were larger associations between quality score and the DOR for standard ultrasound than for contrast-enhanced ultrasound. This would be expected as there was more heterogeneity between studies of standard ultrasound and so there was more variation that could have been explained by differences in quality. For standard ultrasound the direction of the association between study quality and test performance was the same for all scoring schemes. For contrast-enhanced ultrasound the associations reported for quality scores were close to one with wide confidence intervals. This suggests very little association between quality score and diagnostic accuracy, although scoring scheme 2 again produced an association in the opposite direction to the other scoring schemes. The investigation of the association of an overall quality score with a summary effect estimate can be complicated. If no association is found between the two, this does not mean that quality does not affect the summary estimate. It may be that there is no association with any of the components of quality incorporated into the score; there may be associations with one or more components that have very little weight and are lost in the overall quality score; or there may be associations with two or more components that act in opposite directions, cancelling each other out[7].

It is interesting to note that, for the contrast-enhanced ultrasound studies, it was generally scoring scheme 2 that produced different results to the other scoring schemes. All other scoring schemes scored studies that answered "unclear" to an item in the same way as studies that answered "no". Scoring scheme 2 scored these studies higher than those that answered "no". The difference between scoring scheme 2 and the other scoring schemes may therefore be related to the quality of reporting of studies: studies that were poorly reported and answered "unclear" to many of the QUADAS items would be rated higher using this scoring scheme than the other schemes.

The results of this study support the finding of Juni and colleagues that using summary scores to identify high quality studies is problematic[9]. We did not find differences between the scoring schemes included in this study that were as large as those found by Juni et al. This would be expected as we were using different methods of weighting the same quality assessment tool whereas they used different quality assessment tools, each of which not only weighted items differently but also included different items. In addition, we used only five different scoring schemes whereas Juni et al. used 25 different quality scales.

Our study was limited by the relatively few primary studies included: for standard ultrasound we included 12 studies, and for contrast-enhanced ultrasound we included 16 studies. The greater the number of studies included in a meta-analysis, the greater the power for detecting associations between study quality and estimates of diagnostic accuracy. If additional primary studies had been available, more precise estimates of the association between quality score and diagnostic accuracy would have been produced and the differences between these associations for the different scoring schemes could have been assessed in more detail. An additional limitation was the poor quality of the reporting of the studies. This resulted in a large proportion of "unclear" responses to the quality assessment.

A further limitation of this study was the lack of a gold standard against which to compare the quality scoring schemes. Lack of agreement between different scoring systems could be expected and does not necessarily invalidate all the scoring systems. The problem in this situation is determining which quality scoring scheme is the most valid. This is an inherent problem with using a quality score, and there is no reliable way of doing this.

Conclusion

This study, in the area of diagnostic systematic reviews, supports the evidence from previous work in the area of therapeutics suggesting that quality scores should not be incorporated into systematic reviews. Incorporation of the results of the quality assessment into the systematic review should involve a component approach, where the association of each quality item with test accuracy is investigated individually, rather than using a combined quality score.

References

Juni P, Altman DG, Egger M: Assessing the quality of controlled trials. BMJ. 2001, 323: 42-46. 10.1136/bmj.323.7303.42.

Assendelft JJ, Koes BW, van Tulder MW, Bouter LM: Scoring the quality of clinical trials [letter]. JAMA. 2000, 283: 1421-10.1001/jama.283.11.1421.

ter Riet G, Leffers P, Zeegers M: Scoring the quality of clinical trials [letter]. JAMA. 2000, 283: 1421-10.1001/jama.283.11.1421.

Berlin JA, Rennie D: Measuring the quality of trials: the quality of quality scales. JAMA. 1999, 282: 1083-5. 10.1001/jama.282.11.1083.

Juni P, Egger M: Scoring the quality of clinical trials [letter]. JAMA. 2000, 283: 1422-3.

Klassen T: Bias against quality scores. 2001, 2002:

Greenland S: Invited commentary: A critical look at some popular meta-analytic methods. American Journal of Epidemiology. 1994, 140: 290-296.

Greenland S: Quality scores are useless and potentially misleading. American Journal of Epidemiology. 1994, 140: 300-302.

Juni P, Witschi A, Bloch RM, Egger M: The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999, 282: 1054-1060. 10.1001/jama.282.11.1054.

Seffinger MA, Najm WI, Mishra SI, Adams A, Dickerson VM, Murphy LS, Reinsch S: Reliability of spinal palpation for diagnosis of back and neck pain: a systematic review of the literature. Spine. 2004, 29: E413-E425. 10.1097/01.brs.0000141178.98157.8e.

Ejnisman B, Andreoli CV, Soares BG, Fallopa F, Peccin MS, Abdalla RJ, Cohen M: Interventions for tears of the rotator cuff in adults. Cochrane Database Syst Rev. 2004, CD002758-

Macdermid JC, Wessel J: Clinical diagnosis of carpal tunnel syndrome: a systematic review. J Hand Ther. 2004, 17: 309-319.

Warren E, Weatherley-Jones E, Chilcott J, Beverley C: Systematic review and economic evaluation of a long-acting insulin analogue, insulin glargine. Health Technol Assess. 2004, 8: 1-72.

Mowatt G, Vale L, Brazzelli M, Hernandez R, Murray A, Scott N, Fraser C, McKenzie L, Gemmell H, Hillis G, Metcalfe M: Systematic review of the effectiveness and cost-effectiveness, and economic evaluation, of myocardial perfusion scintigraphy for the diagnosis and management of angina and myocardial infarction. Health Technol Assess. 2004, 8: iii-207.

Jadad AR, Moore A, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, McQuay HJ: Assessing the quality of reports of randomised clinical trials: is blinding necessary?. Control Clin Trials. 1996, 17: 1-12. 10.1016/0197-2456(95)00134-4.

Mulrow CD, Linn WD, Gaul MK, Pugh JA: Assessing quality of a diagnostic test evaluation. J Gen Intern Med. 1989, 4: 288-295.

Whiting P, Rutjes AWS, Dinnes J, Reitsma JB, Bossuyt PM, Kleijnen J: A systematic review finds that diagnostic reviews fail to incorporate quality despite available tools. Journal of Clinical Epidemiology. 2005, 58: 1-12. 10.1016/j.jclinepi.2004.04.008.

Whiting P, Rutjes AWS, Dinnes J, Reitsma JB, Bossuyt PMM, Kleijnen J: Development and validation of methods for assessing the quality and reporting of diagnostic accuracy studies. Health Technol Assess. 2004, 8: iii, 1-234.

Whiting P, Rutjes AWS, Reitsma JB, Bossuyt PM, Kleijnen J: The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Medical Research Methodology. 2003, 3: 25-10.1186/1471-2288-3-25.

Whiting P, Rutjes AWS, Reitsma JB, Glas AS, Bossuyt PM, Kleijnen J: Sources of Variation and Bias in Studies of Diagnostic Accuracy: A Systematic Review. Annals of Internal Medicine. 2004, 140: 189-202.

Whiting P, Westwood M, Ginnelly L, Palmer S, Richardson G, Cooper J, Watt I, Glanville J, Sculpher M, Kleijnen J: A systematic review of tests for the diagnosis and evaluation of urinary tract infection (UTI) in children under five years. Health Technology Assessment. 2005

Moses LE, Shapiro D, Littenberg B: Combining independent studies of a diagnostic test into a summary ROC curve: data-analytic approaches and some additional considerations. Stat Med. 1993, 12: 1293-1316.

Bergius AR, Niskanen K, Kekomaki M: Detection of significant vesico-ureteric reflux by ultrasound in infants and children. Z Kinderchir. 1990, 45: 144-5.

Baronciani D, Bonora G, Andreoli A, Cambie M, Nedbal M, Dellagnola CA: The Value of Ultrasound for Diagnosing the Uropathy in Children with Urinary-Tract Infections. Rivista Italiana di Pediatria-Italian Journal of Pediatrics. 1986, 12: 214-220.

Dura TT, Gonzalez MR, Juste RM, Gonzalez de DJ, Carratala MF, Moya BM, Verdu RJ, Caballero CO: [Usefulness of renal scintigraphy in the assessment of the first febrile urinary infection in children]. An Esp Pediatr. 1997, 47: 378-382.

Evans ED, Meyer JS, Harty MP, Bellah RD: Assessment of increase in renal pelvic size on post-void sonography as a predictor of vesicoureteral reflux. Pediatr Radiol. 1999, 29: 291-4. 10.1007/s002470050591.

Foresman WH, Hulbert WCJ, Rabinowitz R: Does urinary tract ultrasonography at hospitalization for acute pyelonephritis predict vesicoureteral reflux?. J Urol. 2001, 165: 2232-4. 10.1097/00005392-200106001-00004.

Mage K, Zoppardo P, Cohen R, Reinert P, Ponet M: [Imaging and the first urinary infection in children. Respective role of each test during the initial evaluation apropos of 122 cases]. J Radiol. 1989, 70: 279-283.

Mahant S, Friedman J, MacArthur C: Renal ultrasound findings and vesicoureteral reflux in children hospitalised with urinary tract infection. Arch Dis Child. 2002, 86: 419-420. 10.1136/adc.86.6.419.

Morin D, Veyrac C, Kotzki PO, Lopez C, Dalla VF, Durand MF, Astruc J, Dumas R: Comparison of ultrasound and dimercaptosuccinic acid scintigraphy changes in acute pyelonephritis. Pediatr Nephrol. 1999, 13: 219-222. 10.1007/s004670050596.

Muensterer OJ: Comprehensive ultrasound versus voiding cysturethrography in the diagnosis of vesicoureteral reflux. Eur J Pediatr. 2002, 161: 435-437. 10.1007/s00431-002-0990-0.

Oostenbrink R, van der Heijden AJ, Moons KG, Moll HA: Prediction of vesico-ureteric reflux in childhood urinary tract infection: a multivariate approach. Acta Paediatr. 2000, 89: 806-10. 10.1080/080352500750043693.

Salih M, Baltaci S, Kilic S, Anafarta K, Beduk Y: Color flow Doppler sonography in the diagnosis of vesicoureteric reflux. Eur Urol. 1994, 26: 93-7.

Tan SM, Chee T, Tan KP, Cheng HK, Ooi BC: Role of renal ultrasonography (RUS) and micturating cystourethrogram (MCU) in the assessment of vesico-ureteric reflux (VUR) in children and infants with urinary tract infection (UTI). Singapore Med J. 1988, 29: 150-152.

Verber IG, Strudley MR, Meller ST: 99mTc dimercaptosuccinic acid (DMSA) scan as first investigation of urinary tract infection. Arch Dis Child. 1988, 63: 1320-1325.

Alzen G, Wildberger JE, Muller-Leisse C, Deutz FJ: [Ultrasound screening of vesico-uretero-renal reflux]. Klin Padiatr. 1994, 206: 178-180.

Berrocal T, Gaya F, Arjonilla A, Lonergan GJ: Vesicoureteral reflux: diagnosis and grading with echo-enhanced cystosonography versus voiding cystourethrography. Radiology. 2001, 221: 359-365.

Berrocal Frutos T, Gaya Moreno F, Gomez Leon N, Jaureguizar Monereo E: Ecocistografia con contraste: una nueva modalidad de imagen para diagnosticar el reflujo vesicoureteral. [Cystosonography with echoenhancer. A new imaging technique for the diagnosis of vesicoureteral reflux]. An Esp Pediatr. 2000, 53: 422-30.

Haberlik A: Detection of low-grade vesicoureteral reflux in children by color Doppler imaging mode. Pediatr Surg Int. 1997, 12: 38-43.

Kessler RM, Altman DH: Real-time sonographic detection of vesicoureteral reflux in children. Am J Roentgenol. 1982, 138: 1033-1036.

McEwing RL, Anderson NG, Hellewell S, Mitchell J: Comparison of echo-enhanced ultrasound with fluoroscopic MCU for the detection of vesicoureteral reflux in neonates. Pediatr Radiol. 2002, 32: 853-858. 10.1007/s00247-002-0812-6.

Mentzel HJ, Vogt S, John U, Kaiser WA: Voiding urosonography with ultrasonography contrast medium in children. Pediatr Nephrol. 2002, 17: 272-276. 10.1007/s00467-002-0843-0.

Nakamura M, Wang Y, Shigeta K, Shinozaki T, Taniguchi N, Itoh K: Simultaneous voiding cystourethrography and voiding urosonography: an in vitro and in vivo study. Clin Radiol. 2002, 57: 846-849.

Piaggio G, gl' Innocenti ML, Toma P, Calevo MG, Perfumo F: Cystosonography and voiding cystourethrography in the diagnosis of vesicoureteral reflux. Pediatr Nephrol. 2003, 18: 18-22. 10.1007/s00467-002-0974-3.

Schneider K, Jablonski C, Wiessner M, Kohn M, Fendel H: Screening for vesicoureteral reflux in children using real-time sonography. Pediatr Radiol. 1984, 14: 400-3.

Siamplis D, Vasiou K, Giarmenitis S, Frimas K, Zavras G, Fezoulidis I: Sonographic detection of vesicoureteral reflux with fluid and air cystography. Comparison with VCUG. Rofo Fortschr Geb Rontgenstr Neuen Bildgeb Verfahr. 1996, 165: 166-9.

Valentini AL, Salvaggio E, Manzoni C, Rendeli C, Destito C, Summaria V, Campioni P, Marano P: Contrast-enhanced gray-scale and color Doppler voiding urosonography versus voiding cystourethrography in the diagnosis and grading of vesicoureteral reflux. J Clin Ultrasound. 2001, 29: 65-71. 10.1002/1097-0096(200102)29:2<65::AID-JCU1000>3.0.CO;2-I.

Uhl M, Kromeier J, Zimmerhackl LB, Darge K: Simultaneous voiding cystourethrography and voiding urosonography. Acta Radiol. 2003, 44: 265-268. 10.1034/j.1600-0455.2003.00065.x.

Von Rohden L, Bosse U, Wiemann D: [Reflux sonography in children with an ultrasound contrast medium in comparison to radiologic voiding cystourethrography]. Paediat Prax. 2004, 49: 49-58.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/5/19/prepub

Acknowledgements

No financial or material support was provided for this study. We would like to thank Marie Westwood for help in performing the quality assessment of the primary studies.

Additional information

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

Penny Whiting contributed to the conception and design of the study, acquisition of data, analysis and interpretation of data, and drafted the manuscript. Roger Harbord and Jos Kleijnen contributed to the analysis and interpretation of data and the critical review of the manuscript for important intellectual content.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Whiting, P., Harbord, R. & Kleijnen, J. No role for quality scores in systematic reviews of diagnostic accuracy studies. BMC Med Res Methodol 5, 19 (2005). https://doi.org/10.1186/1471-2288-5-19

DOI: https://doi.org/10.1186/1471-2288-5-19