Abstract

Let be an array of random variables with and for some . For any sequences and of positive real numbers, sets of sufficient conditions are given for complete qth moment convergence of the form , , where . From these results, we can easily obtain some known results on complete qth moment convergence.

1 Introduction

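The concept of complete convergence was introduced by Hsu and Robbins [1]. A sequence $\{X_n, n \ge 1\}$ of random variables is said to converge completely to the constant θ if $\sum_{n=1}^{\infty} P(|X_n - \theta| > \epsilon) < \infty$ for all $\epsilon > 0$.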

Hsu and Robbins [1] proved that the sequence of arithmetic means of i.i.d. random variables converges completely to the expected value if the variance of the summands is finite. Erdös [2] proved the converse.
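To make the Hsu-Robbins statement concrete, the following sketch computes the tail probabilities of the sample mean exactly for i.i.d. Rademacher (±1) variables, which have mean 0 and finite variance. The choice of distribution, the function names `tail_prob`, `hoeffding_bound` and `partial_series`, and the comparison with Hoeffding's bound are illustrative assumptions and not part of the original argument.

```python
from math import comb, exp


def tail_prob(n: int, eps: float) -> float:
    """Exact P(|S_n / n| > eps), where S_n is a sum of n i.i.d. Rademacher (+1/-1) variables."""
    # If k of the n variables equal +1, then S_n = 2k - n, with probability C(n, k) / 2^n.
    favourable = sum(comb(n, k) for k in range(n + 1) if abs(2 * k - n) > eps * n)
    return favourable / 2 ** n


def hoeffding_bound(n: int, eps: float) -> float:
    """Hoeffding's upper bound 2 * exp(-n * eps^2 / 2) for the same tail probability."""
    return 2.0 * exp(-n * eps ** 2 / 2.0)


def partial_series(eps: float, n_max: int) -> float:
    """Partial sum over n = 1..n_max of P(|S_n / n| > eps)."""
    return sum(tail_prob(n, eps) for n in range(1, n_max + 1))


if __name__ == "__main__":
    # The partial sums should level off as n_max grows.
    for n_max in (50, 100, 200, 400):
        print(n_max, partial_series(0.5, n_max))
```

Because each term is dominated by a geometrically decaying bound, the partial sums level off quickly, which is the behaviour that complete convergence of the sample mean to 0 requires in this example.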

The result of Hsu, Robbins, and Erdös has been generalized and extended in several directions. Baum and Katz [3] proved that if is a sequence of i.i.d. random variables with , (, ) is equivalent to

Chow [4] generalized the result of Baum and Katz [3] by showing the following complete moment convergence. If is a sequence of i.i.d. random variables with and for some , , and , then

where . Note that (1.2) implies (1.1). Li and Spătaru [5] gave a refinement of the result of Baum and Katz [3] as follows. Let be a sequence of i.i.d. random variables with , and let , , , and . Then

if and only if

Recently, Chen and Wang [6] proved that for any , any sequences and of positive real numbers and any sequence of random variables,

and

are equivalent. Therefore, if is a sequence of i.i.d. random variables with and , , , and , then the moment condition (1.3) is equivalent to

When $q = 1$, complete qth moment convergence (1.4) reduces to complete moment convergence.

Complete qth moment convergence for dependent random variables has been established by many authors. Chen and Wang [7] showed that (1.3) and (1.4) are equivalent for φ-mixing random variables. Zhou and Lin [8] established complete qth moment convergence theorems for moving average processes of φ-mixing random variables. Wu et al. [9] obtained complete qth moment convergence results for arrays of rowwise ρ*-mixing random variables.

The purpose of this paper is to provide sets of sufficient conditions for complete qth moment convergence of the form

where , and are sequences of positive real numbers, and is an array of random variables satisfying Marcinkiewicz-Zygmund and Rosenthal type inequalities. When , similar results were established by Sung [10]. From our results, we can easily obtain the results of Chen and Wang [7] and Wu et al. [9].
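As a rough numerical illustration of the kind of quantity involved, the sketch below evaluates a single summand exactly for small n. It assumes the summand has the form $E(\max_{1\le k\le n}|S_k| - \epsilon a_n)_+^q$, where $S_k$ is a partial sum of one row and $x_+ = \max\{x, 0\}$. This specific form, the Rademacher rows, and the function name `qth_moment_excess` are assumptions made for illustration only.

```python
from itertools import product


def qth_moment_excess(n: int, eps: float, q: float, a_n: float) -> float:
    """Exact E[(max_{1<=k<=n} |S_k| - eps * a_n)_+ ** q] for i.i.d. Rademacher steps.

    Enumerates all 2^n sign patterns, so it is only practical for small n.
    """
    total = 0.0
    for signs in product((-1, 1), repeat=n):
        partial_sum = 0
        running_max = 0
        for x in signs:
            partial_sum += x
            running_max = max(running_max, abs(partial_sum))
        excess = running_max - eps * a_n
        if excess > 0:  # positive part: non-positive excesses contribute nothing
            total += excess ** q
    return total / 2 ** n


if __name__ == "__main__":
    n = 10
    for eps in (0.1, 0.3, 0.5):
        # Hypothetical normalisation a_n = n, chosen only for the demonstration.
        print(eps, qth_moment_excess(n, eps, q=2.0, a_n=float(n)))
```

Weighting such terms by $b_n$ and summing over n gives a series of the type studied in the paper; the enumeration is meant only to show what one term measures, not to test convergence.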

2 Main results

In this section, we give sets of sufficient conditions for complete qth moment convergence (1.5). The following theorem gives sufficient conditions under the assumption that the array satisfies a Marcinkiewicz-Zygmund type inequality.

Theorem 2.1 Let and let be an array of random variables with and for and . Let and be sequences of positive real numbers. Suppose that the following conditions hold:

-

(i)

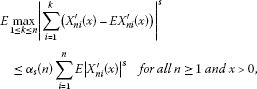

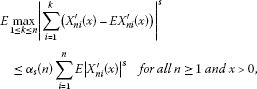

for some s (), there exists a positive function such that

(2.1)

(2.1)

where ,

-

(ii)

,

-

(iii)

,

-

(iv)

.

Then (1.5) holds.

Proof It is obvious that

We first show that . For and , define

Then we have by , Markov’s inequality, and (i) that

It follows that

Hence by (ii) and (iii).

We next show that . By the definition of , we have that

We also have by and (iv) that

Hence to prove that , it suffices to show that

If , then and so

which implies that

Hence by (iii).

Finally, we show that . We get by Markov’s inequality and (i) that

Using a simple integral and Fubini’s theorem, we obtain that

Similarly to ,

Hence by (ii) and (iii). □
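The step that invokes a simple integral and Fubini's theorem typically rests on the following standard tail-integral identity. It is stated here for a generic nonnegative random variable $Y$ (a symbol introduced only for this illustration), $q > 0$ and $\epsilon > 0$, as a sketch of the usual tool rather than the exact display used in the proof.

```latex
\[
E\,(Y-\epsilon)_+^{q}
  = \int_0^\infty P\bigl((Y-\epsilon)_+^{q} > t\bigr)\,dt
  = \int_0^\infty P\bigl(Y > \epsilon + t^{1/q}\bigr)\,dt .
\]
```

The first equality is Fubini's theorem applied to $(Y-\epsilon)_+^q = \int_0^\infty I\bigl((Y-\epsilon)_+^q > t\bigr)\,dt$; the second uses that, for $t > 0$, $(Y-\epsilon)_+^q > t$ holds exactly when $Y - \epsilon > t^{1/q}$.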

The next theorem gives sufficient conditions for complete qth moment convergence (1.5) under the assumption that the array satisfies a Rosenthal type inequality.

Theorem 2.2 Let and let be an array of random variables with and for and . Let and be sequences of positive real numbers. Suppose that the following conditions hold:

-

(i)

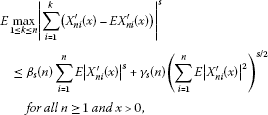

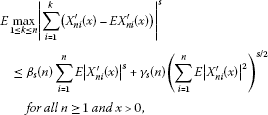

for some (r is the same as in (v)), there exist positive functions and such that

(2.2)

(2.2)

where ,

-

(ii)

,

-

(iii)

,

-

(iv)

,

-

(v)

for some .

Then (1.5) holds.

Proof The proof is similar to that of Theorem 2.1. As in the proof of Theorem 2.1,

Similarly to in the proof of Theorem 2.1, we have by the -inequality that

Hence by (ii), (iii), and (v).

As in the proof of Theorem 2.1, to prove that , it suffices to show that

The proof of is the same as that of in the proof of Theorem 2.1.

For , we have by Markov’s inequality and (i) that

Similarly to in the proof of Theorem 2.1, we get that

Hence by (ii) and (iii).

Finally, we show that . By the -inequality,

Hence by (v). □

Remark 2.1 Marcinkiewicz-Zygmund and Rosenthal type inequalities hold for dependent random variables as well as independent random variables.

(1) Let be an array of rowwise negatively associated random variables. Then, for , (2.1) holds for . For , (2.2) holds for and (see Shao [11]). Note that and are multiplied by the factor since .

(2) Let be an array of rowwise negatively orthant dependent random variables. By Corollary 2.2 of Asadian et al. [12] and Theorem 3 of Móricz [13], (2.1) holds for , and (2.2) holds for and , where and are constants depending only on s.

(3) Let be a sequence of identically distributed φ-mixing random variables. Set for and . By Shao’s [14] result, (2.2) holds for a constant function and a slowly varying function . In particular, if , then (2.2) holds for some constant functions and .

(4) Let be a sequence of identically distributed ρ-mixing random variables. Set for and . By Shao’s [15] result, (2.2) holds for some slowly varying functions and . In particular, if , then (2.2) holds for some constant functions and .

(5) Let be a sequence of ρ*-mixing random variables. Set for and . By the result of Utev and Peligrad [16], (2.2) holds for some constant functions and .
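For orientation, in the classical case of independent mean-zero random variables $X_1, \dots, X_n$ and $s \ge 2$, Rosenthal's inequality reads $E|\sum_{i=1}^n X_i|^s \le C_s\{\sum_{i=1}^n E|X_i|^s + (\sum_{i=1}^n E X_i^2)^{s/2}\}$, with $C_s$ depending only on $s$. The sketch below evaluates both sides exactly for Rademacher variables. The distribution and the helper names `abs_moment_of_sum` and `rosenthal_rhs` are assumptions, and the code does not reproduce the maximal or truncated form of (2.2).

```python
from math import comb


def abs_moment_of_sum(n: int, s: float) -> float:
    """Exact E|S_n|^s for S_n a sum of n i.i.d. Rademacher (+1/-1) variables."""
    # S_n = 2k - n with probability C(n, k) / 2^n, where k counts the +1 outcomes.
    return sum(comb(n, k) * abs(2 * k - n) ** s for k in range(n + 1)) / 2 ** n


def rosenthal_rhs(n: int, s: float) -> float:
    """sum_i E|X_i|^s + (sum_i E X_i^2)^(s/2); both E|X_i|^s and E X_i^2 equal 1 here."""
    return n + n ** (s / 2)


if __name__ == "__main__":
    for n in (5, 10, 20, 40):
        ratio = abs_moment_of_sum(n, 4) / rosenthal_rhs(n, 4)
        print(n, ratio)
```

For $s = 4$ the ratio stays below 3, in line with the closed form $E S_n^4 = 3n^2 - 2n$ for Rademacher sums, so a constant $C_4 = 3$ suffices in this particular example.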

3 Corollaries

In this section, we establish some complete qth moment convergence results by using the results obtained in the previous section.

Corollary 3.1 (Chen and Wang [7])

Let be a sequence of identically distributed φ-mixing random variables with , and let , , , and . Assume that (1.3) holds. Furthermore, suppose that

if and . Then

Proof Let and for , and let for and . Then, for , (2.2) holds for a constant function and a slowly varying function (see Remark 2.1(3)). Under the additional condition that , (2.2) holds for some constant functions and . In particular, for , (2.1) holds for a constant function under this additional condition.

By a standard method, we have that

where C is a positive constant which is not necessarily the same one in each appearance. Hence, the conditions (i)-(iv) of Theorem 2.2 hold if we take . Under the additional conditions that and , all conditions of Theorem 2.1 hold if we take . Therefore, the result follows from Theorems 2.1 and 2.2 if we only show that the condition (v) of Theorem 2.2 holds when or . To do this, we take if and if . If or , then and so we can choose large enough such that . Then

Hence the condition (v) of Theorem 2.2 holds. □

Let be a sequence of positive even functions satisfying

for some .

Corollary 3.2 Let be a sequence of positive even functions satisfying (3.1) for some . Let be an array of random variables satisfying for and , and (2.1) for some constant function . Let and be sequences of positive real numbers. Suppose that the following conditions hold:

(i) ,

(ii) .

Then (1.5) holds.

Proof First note by that is an increasing function. Since ,

Since and ,

It follows that all conditions of Theorem 2.1 are satisfied and so the result follows from Theorem 2.1. □

Corollary 3.3 Let be a sequence of positive even functions satisfying (3.1) for some and . Let be an array of random variables satisfying for and , and (2.2) for some constant functions and . Let and be sequences of positive real numbers. Suppose that the following conditions hold:

(i) ,

(ii) ,

(iii) .

Then (1.5) holds.

Proof The proof is similar to that of Corollary 3.2. By the proof of Corollary 3.2 and the condition (iii), all conditions of Theorem 2.2 are satisfied and so the result follows from Theorem 2.2. □

Remark 3.1 When for , the condition (i) of Corollaries 3.2 and 3.3 is reduced to the condition , and so the condition (ii) of Corollaries 3.2 and 3.3 follows from this reduced condition. For a sequence of ρ*-mixing random variables, (2.1) holds for some constant function if , and (2.2) holds for some constant functions and if (see Remark 2.1(5)). Wu et al. [9] proved Corollaries 3.2 and 3.3 when for , and is an array of rowwise ρ*-mixing random variables.

References

[1] Hsu PL, Robbins H: Complete convergence and the law of large numbers. Proc. Natl. Acad. Sci. USA 1947, 33: 25–31. 10.1073/pnas.33.2.25

[2] Erdös P: On a theorem of Hsu and Robbins. Ann. Math. Stat. 1949, 20: 286–291. 10.1214/aoms/1177730037

[3] Baum LE, Katz M: Convergence rates in the law of large numbers. Trans. Am. Math. Soc. 1965, 120: 108–123. 10.1090/S0002-9947-1965-0198524-1

[4] Chow YS: On the rate of moment convergence of sample sums and extremes. Bull. Inst. Math. Acad. Sin. 1988, 16: 177–201.

[5] Li D, Spătaru A: Refinement of convergence rates for tail probabilities. J. Theor. Probab. 2005, 18: 933–947. 10.1007/s10959-005-7534-2

[6] Chen PY, Wang DC: Convergence rates for probabilities of moderate deviations for moving average processes. Acta Math. Sin. 2008, 24: 611–622. 10.1007/s10114-007-6062-7

[7] Chen PY, Wang DC: Complete moment convergence for sequences of identically distributed φ-mixing random variables. Acta Math. Sin. 2010, 26: 679–690. 10.1007/s10114-010-7625-6

[8] Zhou XC, Lin JG: Complete q-moment convergence of moving average processes under φ-mixing assumption. J. Math. Res. Expo. 2011, 31: 687–697.

[9] Wu Y, Wang C, Volodin A: Limiting behavior for arrays of rowwise ρ*-mixing random variables. Lith. Math. J. 2012, 52: 214–221. 10.1007/s10986-012-9168-2

[10] Sung SH: Moment inequalities and complete moment convergence. J. Inequal. Appl. 2009, 2009: Article ID 271265

[11] Shao QM: A comparison theorem on moment inequalities between negatively associated and independent random variables. J. Theor. Probab. 2000, 13: 343–356. 10.1023/A:1007849609234

[12] Asadian N, Fakoor V, Bozorgnia A: Rosenthal’s type inequalities for negatively orthant dependent random variables. J. Iran. Stat. Soc. 2006, 5: 69–75.

[13] Móricz F: Moment inequalities and the strong laws of large numbers. Z. Wahrscheinlichkeitstheor. Verw. Geb. 1976, 35: 299–314. 10.1007/BF00532956

[14] Shao QM: A moment inequality and its applications. Acta Math. Sin. Chin. Ser. 1988, 31: 736–747.

[15] Shao QM: Maximal inequalities for partial sums of ρ-mixing sequences. Ann. Probab. 1995, 23: 948–965. 10.1214/aop/1176988297

[16] Utev S, Peligrad M: Maximal inequalities and an invariance principle for a class of weakly dependent random variables. J. Theor. Probab. 2003, 16: 101–115. 10.1023/A:1022278404634

Acknowledgements

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education, Science and Technology (2010-0013131).

Additional information

Competing interests

The author declares that he has no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Sung, S.H. Complete qth moment convergence for arrays of random variables. J Inequal Appl 2013, 24 (2013). https://doi.org/10.1186/1029-242X-2013-24

DOI: https://doi.org/10.1186/1029-242X-2013-24