Abstract

This paper proposes to generate awareness for developing artificial intelligence (AI) ethics by transferring knowledge from other fields of applied ethics, particularly from business ethics, stressing the role of organizations and processes of institutionalization. With the rapid development of AI systems in recent years, a new and thriving discourse on AI ethics has (re-)emerged, dealing primarily with ethical concepts, theories, and application contexts. We argue that business ethics insights may generate positive knowledge spillovers for AI ethics, given that debates on ethical and social responsibilities have been adopted as voluntary or mandatory regulations for organizations in both national and transnational contexts. Thus, business ethics may transfer knowledge to AI ethics from five core topics and concepts that it has researched and institutionalized: (1) stakeholder management, (2) standardized reporting, (3) corporate governance and regulation, (4) curriculum accreditation, and, as an overarching topic, (5) AI ethics washing derived from greenwashing. In outlining each of these five knowledge bridges, we illustrate current challenges in AI ethics and potential insights from business ethics that may advance the current debate. At the same time, we hold that business ethics can learn from AI ethics in catching up with the digital transformation, allowing for cross-fertilization between the two fields. Future debates in both disciplines of applied ethics may benefit from dialog and cross-fertilization, meant to strengthen the ethical depth and prevent ethics washing or, even worse, ethics bashing.

1 Introduction

Artificial intelligence (AI) ethics as a discipline is still in the making, given not only the novelty of pervasive and powerful AI as such but, even more, its societal and commercial use and implications, which raise questions of moral reasoning, normative controversy, and governance and regulation [149, 154]. This article argues that some structural analogies from other applied ethics fields may serve as knowledge transfer bridges for AI ethics and provide cross-fertilization. We thereby build on a long tradition of cross-fertilization and knowledge transfer between subfields of applied ethics [38, 74, 123, 124]. Recently, a proposal to adopt medical ethics insights to the broader field of AI ethics has been published with important spillovers [166]. In analogy, we propose to adopt insights from the field of business ethics to advance the institutionalization of organizational AI ethics, as ethical concerns about environmental and societal issues have produced experience with institutionalization, regulation, and management procedures for tackling ethical issues in corporate environments [24, 133]. Thus, insights about the successes and failures of institutionalizing ethics may help advance the discussion about AI ethics [32, 69, 149]. In turn, business ethics can gain by catching up with the recent challenges arising with AI in organizational contexts.

Broadly defined, business ethics focuses on “the study of business situations, activities, and decisions where issues of right and wrong are addressed” [31]. In this sense, business ethics comprises the study of the collective or ‘legal entity’ that produces goods and services, as well as its individual members and external stakeholders related to the business. From this perspective, technology corporations can be seen as powerful and pioneering collectives that maintain close relationships with governments and regulators via public affairs, lobbying, and corporate political activity, sometimes also close collaborations regarding security and intelligence [6, 71].

Corporate misconduct and shady business practices have substantially contributed to the growth of business ethics as a discipline and, in a broader vein, the advancement of governance and regulation [132, 161]. Over the past decades, business ethics has built a substantial body of literature dealing with various corporate scandals and implementing positive organizational ethics [24, 122]. Some of these ethics and (corporate) social responsibility discussions have been gradually formalized and led to voluntary (soft) and mandatory (hard) law, guiding business practices in both national and transnational realms [28, 133], thus triggering an institutionalization of organizational ethics [83, 98, 146]. Nonetheless, corporate misconduct and challenges with implementing ethics in firms’ daily practices persist, indicating limitations of institutionalizing ethics in businesses [7, 8, 95, 119, 126]. Consequently, AI ethics may gain from insights into both the successes and failures of institutionalizing business ethics in recent decades.

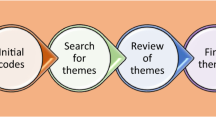

In the following, we suggest five well-researched and institutionalized topics and concepts established for decades in business ethics that may help to advance the current debate and institutionalization of AI ethics: (1) stakeholder management, (2) reporting on non-financial digital issues, (3) corporate governance and regulation, (4) AI/tech ethics in tertiary education, and, as the overarching topic, (5) greenwashing and digital ethics washing. To this end, we first outline the new and thriving discourse on AI ethics of recent years as a (sub-) discipline of the broader field of applied ethics, conjointly with business ethics as a valuable source of knowledge about the successes and failures of institutionalizing organizational ethics [32, 51, 69, 142, 149]. We then depict each of the five core concepts by elaborating on the current AI ethics challenges while highlighting the knowledge transfer bridge from business ethics. Ultimately, we point out where limitations and potential for cross-fertilization between business and AI ethics arise, stressing the value of joining forces between applied ethics fields.

2 AI ethics as a (sub-) discipline of applied ethics

In the following, we argue that AI ethics should be integrated into the canon of applied ethics as a distinct field. So far, applied ethics as a field of ethics deals with “all systematic efforts to understand and to resolve moral problems that arise in some domain of practical life, […] or with some general issue of social concern” [174]. Within the (traditional) field of applied ethics, as Winkler continues, three major subfields are salient: biomedical, environmental, and business ethics. Whereas bio(medical) ethics revolves around issues of ethical concern in medicine and biomedical research, environmental ethics is more future-oriented, focusing on the sustainable preservation of the earth’s ecosystems and the biosphere [174]. Further, business ethics, which will be central to the arguments presented below, is concerned with ethical issues that arise in professional contexts and accompany corporations’ conduct. All three subfields have grown over several decades, building a substantial body of research and transitioning beyond a philosophical sub-discipline of applied ethics. For example, business ethics is considered an established field in general management and an academic discipline, with “chairs, societies, master’s programs, conferences, and dedicated journals” [122, 139]. Similarly, bioethics is considered a well-established field in biomedical research and public health, substantially contributing to advancing the practice of medicine [165]. Beyond these salient subfields, several other important research areas of applied ethics exist (for a thorough overview, see, e.g., [23]).

A new and thriving discourse of applied ethics has gradually moved into the public and scholarly spotlight in the past seven years: AI ethics. AI ethics as “a part or an extension of computer ethics” is thereby closely related to, or sometimes used as an equivalent for, digital ethics, cyberethics, tech ethics, information ethics, data ethics, internet ethics, and machine and robot ethics [35, 73, 98, 103, 128, 149, 154, 156]. AI has spread rapidly across many areas of everyday life, driven by advancements in several approaches in computer science, particularly machine learning techniques such as decision trees, support vector machines, neural nets, and deep learning (see, e.g., [29, 164]). With the gradual commercialization and deployment of AI across multiple sectors, in applications such as self-driving cars, job applicant assessment, bank lending, and autonomous weapon systems, questions about AI decision-making have become a major concern [17, 94, 98, 125].

Critique has been raised about AI’s predictive and classificatory potential, leading to systemic errors and adverse outcomes for individuals and society. Numerous recent examples have shown that even unintended errors in AI’s design and training process can trigger profound ethical challenges regarding discrimination, fairness, privacy, and accountability [10, 39]. As a consequence, the study of the ethics of AI has become a salient focal point of academic discourse and drawn the attention of practitioners, policymakers, and the general public [114]. Seen as a continuation and extension of the computer ethics discourse, AI ethics is still in an early stage dealing with the institutionalization of ethics to address ethical challenges raised in organizational environments [69, 98, 149]. As Powers and Ganascia [125] note: “[t]he ethics of AI may be a “work in progress,” but it is at least a call that has been answered.”

2.1 AI ethics building on the tradition of knowledge transfer and cross-fertilization in applied ethics

This article strives to contribute to the progressing work in AI ethics, seeking to shed light on knowledge transfer bridges from the field of business ethics [98]. It thus underlines how AI ethics may benefit from drawing on previous knowledge and cross-fertilizing with other applied ethics disciplines. In this regard, we build on a long tradition of reciprocal enrichment and knowledge sharing between subfields of applied ethics [38, 74, 123, 124]. To illustrate this point, one may consider Eiser et al. [38] and Poitras [123] outlining the common ground between medical and business ethics; Hoffman [74] exploring the interconnections between environmental and business ethics; and Potter [124] linking medical and environmental ethics. The most recent, hands-on example of such a mutually enriching knowledge transfer has been published by Carissa Véliz [166], who adopts insights from medical ethics to AI ethics. The analogy between the two “is quite close,” but as Véliz also writes, “the analogy between medical and digital ethics is not perfect, however. The digital context is much more political than the medical one, as well as more dominated by private forces, and it will have to develop its own ethical practices” [166]. In light of this accurate description, this article proposes, in analogy to Véliz, to adopt insights from the well-established field of business ethics to AI ethics. This paper therefore does not aim to present a ‘better’ analogy but to complement and strengthen Véliz's [166] work with a selection of well-researched and institutionalized topics and concepts established for decades in business ethics. We thereby hope to advance AI ethics through a multilateral perspective, ideally joining forces from various established ethics fields (see also [32, 51]).

Given the scale and frequency of business scandals of the past decades, there is a lot to learn from business ethics on tackling ethical challenges concerning institutionalizing organizational ethics [83, 146]. As Venkataramakrishnan notes [167]: “[t]he assumption that tech ethics is mutually exclusive with innovation is at best lazy; so is the view that ethical treatment is an “optional” extra for companies that can afford it. […] Other sectors, such as coal and oil, have faced a reckoning over the impact they have on society; there is no reason why technology should not do the same.” Over the past decades, many ethical and social responsibility debates have been translated into voluntary and mandatory regulation at both national and transnational levels. Consequently, in the following, we portray five knowledge bridges to connect business and AI ethics, explaining today’s challenge in AI ethics followed by the respective business ethics concept we propose to adopt concerning the institutionalization of organizational AI ethics (Fig. 1).

3 Five business ethics areas to advance AI ethics

3.1 Stakeholder management

The digital transformation driven by AI and algorithmic decision-making comes with new and often invisible or unclear societal impacts [3]. Related ethical issues often remain blurry (job loss, dehumanization, singularity) or technologically mediated (face recognition, privacy (by design)), and few influential non-governmental organizations (NGOs) address AI ethics (see Footnote 1). Initially, AI ethics boards and committees were created to engage with societal concerns. However, some have been mired in controversy, dissolved shortly after their launch [94], or seen ethicists step down [4]. Thus, they are considered a public relations measure or ethical façade, which critics have labeled as ‘ethics washing’ or ‘machine washing’ [11, 108, 148].

Here, the stakeholder management approach may offer guidance on openly and systematically addressing ethical issues of AI to initiate dialog, participation, and deliberation. In 1984, stakeholder theory and management were introduced to counter the dominance of shareholder or stockholder theory [52, 54]. Whereas shareholder theory claims that the social responsibility of corporations is to increase profits, stakeholder theory underlines that corporations have to actively manage relations with stakeholders, defined as everyone who affects or is affected by the company [14]. Over the past decades, stakeholder theory became a highly influential framework to analyze ethical problems that arise in corporate decision-making [53]. However, stakeholder theory has also attracted criticism that helps to clarify where its theoretical and practical limitations lie. Orts and Strudler [119] summarized the central weaknesses of stakeholder theory in three points: (1) the issue of defining and identifying stakeholders; (2) the semantic vagueness and conceptual flexibility when it comes to approaching concrete stakeholder problems; and (3) the challenge of balancing conflicting stakeholder interests in corporate decision-making without a common measure. Banerjee [7] also takes up this point, warning about “stakeholder colonialism” when stakeholder theory is utilized as a means to regulate stakeholder behavior. As a consequence, the risk arises that corporations focus unilaterally on stakeholders with a financial or competitive influence on the firm, while disregarding the interests of marginalized stakeholder groups [7].

First attempts to define and engage stakeholders in AI ethics exist [3, 5, 97, 169]. Still, a rollout similar to the one that happened in business ethics some 35 years ago is yet to come. In this regard, stakeholder theory as a concept for strategic organizational management provides a systematic approach to identifying, addressing, and balancing the competing demands of individuals and groups affected by technology companies [36, 53]. As stressed by Ayling and Chapman [5], stakeholders of technology firms are those “who either have direct roles in the production and deployment of AI technologies or who have legitimate interests in the usage and impact of such technologies.” In light of the critique above, the complexity and opposing nature of stakeholder interests make applying stakeholder theory in practice challenging [119]. However, the relative adaptability of the theory as a managerial instrument to respond to moral demands of internal and external stakeholders has been outlined in many types of organizations and sectors, including healthcare institutions, NGOs, and recently, on-demand labor platforms [100]. Proper stakeholder management in AI ethics means going beyond an ethical façade and addressing the interests and demands of everyone who affects or is affected by products and services featuring AI, regardless of their saliency or relative power [5, 53]. Consequently, engaging with stakeholder theory can advance practice-driven approaches to AI ethics, while shedding new light on the theory of the firm when discussing narrow and broad definitions of stakeholders in digital environments [70].

3.2 Reporting on non-financial digital issues

A core challenge of AI ethics is the opacity and obfuscation of algorithms and third-party data collection [22, 40]. Open source as a transparent alternative remains, to a large extent, a niche segment. The reasons for that are manifold (patents, protecting business models, value chains). However, frequent scandals shed light on ethical issues, where AI and data are used for political surveillance, disinformation, or manipulation [58, 67, 131]. Often, organizations and AI developer teams make profound claims about how effectively their algorithms perform, yet without giving insight into either the training data or the code underlying the algorithm. The opacity and obfuscation are most striking in the context of law enforcement technology involving AI. A recent scandal involving the corporation Clearview, which offers a facial recognition app to law enforcement agencies, made this evident [72]. Hill [72] outlines that the corporation built a large-scale database with millions of photos scraped without permission from various social media sites and used to train its opaque AI system. Similarly, an AI system outlined in an academic publication made headlines with the claim that “[w]ith 80 percent accuracy and with no racial bias, the software can predict if someone is a criminal based solely on a picture of their face. The software is intended to help law enforcement prevent crime” [48]. An open response letter to the publication outlet signed by over 1000 experts and researchers made clear that “there is no way to develop a system that can predict or identify “criminality” that is not racially biased—because the category of “criminality” itself is racially biased” [10, 27].

Major scandals (see, e.g., Enron) led to new standards in business ethics, with businesses disclosing information on ethically sensitive topics like integrity and societal or environmental impacts [132, 161]. Corporate Social Responsibility (CSR) reporting or non-financial reporting has substantially evolved in recent years, providing an essential resource for standardizing and comparing corporate conduct for both strategic and ethical reasons [96, 141, 162]. The non-profit organizations Global Reporting Initiative (GRI) and International Integrated Reporting Council (IIRC) offer standardized key performance indicators for CSR reporting plus reporting guidance for the material disclosure of stakeholder dialog engagement [88, 102, 171]. The CSR reporting standards strive to support corporations in their efforts to transparently share economic, environmental, and social information with their stakeholders [88]. The main goal is to enhance the quality and comparability of the reported information, based on specified disclosure metrics [59], thus allowing stakeholders to assess reliable and consistent information about a firm's societal and environmental impacts. CSR reporting can even match mandatory financial reporting standards via digital-data-based reporting taxonomies like XBRL, as established by the U.S. Securities and Exchange Commission for financial reporting [138]. Some technology companies such as Deutsche Telekom already include ‘data protection and data security’ chapters in their CSR reporting [33]. In addition, (local) governments are moving forward with legislation that requires reporting on automated decision systems, although it remains vague about what needs to be disclosed [30, 158]. These examples show that transparent and standardized disclosure on algorithms and data collection processes is undoubtedly part of the future of a more institutionalized AI ethics as corporate digital responsibility [70].

Whereas CSR reporting has come a long way from often incomparable ‘glossy’ public relations reports to established standards with clearly delineated disclosure metrics, AI ethics reporting can build on these insights to advance the recent discourse about ‘what and how’ to report about AI systems [7, 77, 163, 168]. The GRI and similar standards for CSR information disclosure can serve as a starting point to determine the type of information that can help stakeholders assess corporate conduct and increase the transparency of AI systems and the handling of personal data [86]. The challenge for AI ethics reporting lies in the timely provision of reliable and comparable information on algorithmic systems based on disclosure metrics [59]. Recent research has already begun outlining types of information that may be relevant in this regard, such as fairness metrics, impact assessments, bias testing, system accuracy, and workflow verification [16, 92, 101, 145]. Analogous to the Environmental, Social, and Governance (ESG) framework, Herden et al. [70] outline a list of 20 items indicating highly relevant information domains, such as energy and carbon footprint, socially compatible automation, and data responsibility and stewardship.

Recent proposals for the regulation of AI in the European Union foresee reporting obligations for providers of high-risk AI systems, which encompass the disclosure of AI-related incidents and malfunctioning [44]. In addition, the EU’s General Data Protection Regulation (GDPR) strives to establish transparent insights into algorithmic decision-making aimed at individuals as well as third-party and regulatory oversight [85]. In contrast to CSR reporting, this regulatory approach goes beyond the provision of a single report to cover all stakeholders and includes an “individual right to explanation,” thus providing so-called data subjects and experts with distinct kinds of information [85]. In practice, this profound approach to transparency creates high costs for corporations and raises doubts about how “meaningful” the presented information is for individuals [34]. In light of these and other challenges, Edwards and Veale [37] suggest focusing on creating better algorithms a priori, via certification systems and privacy by design requirements. Overall, stimulating the discussion on the formalization of AI ethics information disclosure is an important task for practitioners and academics.

3.3 Corporate governance and regulation

Next to ethics boards and committees, corporations and legislators have created soft-law guidelines to govern AI ethics. Soft-law guidelines represent voluntary measures to govern the ethical development and deployment of AI [110]. In essence, the guidelines build on high-level ethical principles and values to align AI systems with the common good [159]. Over 200 such soft-law guidelines have been issued by public and private actors within the past five years, compiled and clustered in the repository of Standards Watch [117, 150]. A recent systematic review by Jobin et al. [79] has analyzed the ethical content of 84 of them, finding that the guidelines converge in terms of five major principles: (1) transparency, (2) justice and fairness, (3) non-maleficence, (4) responsibility, and (5) privacy. However, Jobin [80] cautions that “despite an apparent convergence on certain ethical principles on the surface level, there are substantive divergences on how these principles are interpreted, why they are deemed important, what issue, domain or actors they pertain to, and how they should be implemented.” Thus, ethical guidelines have been criticized as not having the moral authority to represent the public good [159]. Critics understand their inherent vagueness as instrumental communication to engage in self-regulation while preventing governmental regulation [11].

This reveals a striking analogy to business ethics, where self-regulation was praised for closing regulatory gaps in global governance and as a means for gaining ‘moral legitimacy’ (see, e.g., [51, 121, 137, 173]). Analogous to AI ethics, critics warned about the weaknesses of ascribing “mid-level principles” to individuals, ignoring the complexity of organizational contexts, and lacking philosophical depth [8, 95]. As business ethics literature evolved over the years, several authors have warned about industry self-regulation’s conceptual and practical limits [51, 107, 130], leading critics to condemn it as a lobbying strategy. In a public health study published in The Lancet, Moodie et al. [112] describe industry self-regulation as a means that lacks proof of effectiveness and safety. In a press interview about the study, Rob Moodie vividly summarizes the core issue at hand: “[s]elf-regulation is like having burglars install your locks” [120]. Thus, business ethics literature indicates persistent challenges that go along with the implementation of ethics in organizational contexts, particularly the gap between theoretical principles and practice [8].

Doubts about industry self-regulation are also characteristic of the current AI ethics debate. Governing the ethical challenges of AI has become a high priority for legislators worldwide [51, 91, 113]. In particular, the European Union has recently made headlines for its moves to go beyond the self-regulatory pledges of technology corporations [42, 43, 101]. These steps have not gone unnoticed by private sector companies engaged in the development and deployment of AI [153]. In 2020, the lobbying budget of Big Tech companies, e.g., in the European Union, reached an all-time high to fight upcoming legislation, as a leaked document published by the NYT revealed [136]. Thus, although hard-law regulation for AI is on the way, at least in some jurisdictions [45], AI ethics may still benefit from business ethics research, especially as current AI regulations come with many omissions and gaps meant to be filled by soft-law instruments and combinations of co- and self-regulation [26, 32, 101, 143]. Business ethics research, with its “conceptualization of CSR as a form of co-regulation that includes elements of both voluntary and mandatory regulation,” can be beneficial in this regard [57]. Consequently, past experiences and learnings from CSR may illuminate the institutionalization of AI ethics and industry standard-setting [147] to advance the AI ethics field [2, 28, 151].

Instead of waiting for a major AI scandal with fatal implications, it would make sense to anticipate such events [115]. Business ethics was substantially advanced after the Enron and WorldCom scandals in the U.S. in 2001 and 2002, which subsequently led to the Sarbanes–Oxley Act in 2002, enforcing accountability and demanding an ethics officer and a dedicated code of ethics putting values into practice [109, 132]. A recent publication by Mökander and Floridi [111] provides a glimpse into one possible pathway of transferring principles into practices via ethics-based auditing of AI. In addition, one of the crucial business ethics achievements, central to codes of ethics, is whistleblowing and whistleblower protection, a mechanism that may have helped in the recent Google case of Timnit Gebru [78, 80]. Similarly, the trend for disclosure of ethical content is headed towards mandatory CSR reporting in the EU, and India recently revised its Companies Act towards mandatory social responsibilities [57]. Consequently, AI ethics may benefit from these insights.

3.4 AI/Tech ethics in tertiary education

The more AI spreads beyond traditional boundaries, the more pressing the question becomes of how to embed ethics in the higher education of software developers, engineers, and AI practitioners in general [60, 153]. Graduates will be working for organizations that are inventing the future, including upcoming scandals and potential disasters. Johnson [81], therefore, recently stressed, “[t]he question is not whether engineers make moral decisions (they do!), but whether and how ethical decision-making can be taught.” Higher education institutions are increasingly expected to offer curricula that prepare students for the practical challenges arising with AI’s development and deployment and provide them with a comprehensive understanding of AI’s ethical and philosophical impacts on the broader society [20, 153]. Some institutions, such as Harvard, have already begun experimenting with pilot courses on ethical reasoning embedded in their computer science curricula and organized jointly with philosophy departments [66]. However, little is known about such ethics courses in AI/tech curricula on a global scale. A recent review of 115 syllabi from university technology ethics courses by Fiesler et al. [50] found a lack of consistency in the course content taught and a lack of standards. Course content may cover topics as diverse as law, policy, privacy, and surveillance, as well as social and environmental impact, cybersecurity, and medical/health [50]. For Fiesler et al. [50], this broad topic range and inconsistency in teaching content across syllabi do not come as a surprise, given that the current lack of standards gives educators leeway to design courses at their own discretion. As Garret et al. [56] note, “if AI education is in the infancy stage of development, then AI ethics education is barely an embryo.”

Here, a glimpse at business ethics may give some indication of ways in which ethical reasoning may become institutionalized as an integral part of computer science education. In the past, the way to teach business ethics varied widely, with business school courses ranging from compulsory to elective or to no business ethics courses at all [47, 55]. In addition, the integration of business ethics into curricula was often facilitated by non-experts and characterized by a lack of monitoring of the integration process [87, 134]. However, scandals such as Enron and WorldCom in 2001 and 2002 led to more formalized implementation processes and legal prescriptions such as the Sarbanes–Oxley Act in the US [132, 161]. In response to the outrage over ethical and financial misconduct, the Sarbanes–Oxley Act legislated ethical behavior for corporations listed on the stock market and their auditors [132]. As a result, a code of ethics became a legal requirement for publicly traded companies. Due to the new legislation, business school accreditors began to ask for dedicated business ethics courses and professors that reflect the legal prescriptions [106, 161]. If a business school wants to achieve the so-called Triple Crown Accreditation (AACSB (U.S.), AMBA (U.K.), and EQUIS (EU)), ethics courses and dedicated faculty are a must today to prepare students for ethical dilemmas they may face in their future careers [132, 161].

This institutionalization process can be a helpful analogy to advance AI ethics curricula and revitalize a debate that already started in computer ethics several years ago [13, 25, 161]. Currently, the U.S. is one of the few examples where the integration of ethics into accredited computer science programs moves in this direction. The Accreditation Board for Engineering and Technology (ABET) requires students to have “[a]n understanding of professional, ethical, legal, security and social issues and responsibilities” [1, 135]. However, the precise implementation of ethics in the curricula is left to the institutions and professors [56]. In this regard, curriculum design may draw on the rich and multifaceted literature already established in applied ethics [3, 69, 104, 105, 128, 139], provide a diverse scope stretching across Western and Eastern ethics [41], and include topical approaches, even informed by other scientific fields, such as cognitive (neuro) science [62, 63]. Further, AI ethics education can build on innovative approaches [20, 21] and education technologies that were not available two decades ago, opening new pathways for engaging with ethical reflection [127].

In addition, closer attention needs to be paid to the role of regulatory bodies making legal prescriptions about the ethics content in AI education, analogous to the Sarbanes–Oxley Act [49, 135]. The EU’s GDPR and more recent EU regulatory proposals are indicative of more concrete prescriptions to provide future employees with profound knowledge about sensitive ethical topics such as data privacy and security, bias avoidance, and equal treatment [44, 144]. Thus, even without explicit legal prescriptions, the need for regulatory compliance will certainly increase the demand for more institutionalized AI ethics education. Another pathway may build on the 2009 decision “no ethics, no grant” from the U.S. National Science Foundation [116], which decided that institutions receiving funds have to teach ethics. A similar road could be taken for AI curricula: no AI/tech ethics, no degree, or no accreditation.

3.5 Greenwashing and ethics washing

As shown, AI ethics is both in high demand and on the rise. Yet, the previous points also indicate that some damage has already been done to the credibility and moral authority of AI ethics [148]. Critics point to the possibility that “ethical AI” or “responsible AI” represents an invention by Big Tech to manipulate academia and to avoid regulation [32, 61, 118, 160]. Recently, the terms “ethics washing” and “machinewashing” have been coined [108, 170], referring to “a strategy that organizations adopt to engage in misleading behavior (communication and/or action) about ethical Artificial Intelligence” [142]. In light of this deceptive strategy, “ethics bashing” has also entered the scene, criticizing the “trivialization of ethics and moral philosophy now understood as discrete tools or pre-formed social structures such as ethics boards, self-governance schemes or stakeholder groups” [11]. This abuse of ethics and the disregard of reflexive moral reasoning is particularly worrisome, and stakeholders are certainly right to strongly object to such corporate practices. However, as shown above, applied ethics as a discipline of reflexive moral reasoning and inquiry goes much deeper and offers a range of analytic and practical tools that can help prevent unethical behavior in corporate contexts [123, 126]. As in every academic discipline, limitations exist: consider Tenbrunsel and Smith-Crowe [157], who discuss weaknesses of normative theory and how biases impede rational moral decision-making, or Bartlett [8], who highlights the persistent gap between theory and practice. Admitting and actively engaging with such limitations is what characterizes ethics as a discipline of critical inquiry about morals, and what helps to develop and improve the analytic and practical tools used by organizations [7, 95, 119, 157]. In sum, “[e]thics has powerful teeth, but these are barely being used in the ethics of AI today,” creating the risk of ethics washing and ethics bashing [129].

The reputational damage that accompanies ethics washing demonstrates the proximity of AI ethics and business ethics, as private entities are crucial actors striving to dominate both market and non-market spheres [142]. On the one hand, ethics washing may be seen as an instrumentalization of societal values to gain a larger market share, such as promoting “AI for good” while vending surveillance technology [61, 82, 160]. On the other hand, ethics washing serves as a means to avoid external regulation, as in the case of corporate lobbying and self-regulatory approaches meant to prevent regulation or to shape it in favor of technology firms [61, 129, 160]. Thus, as shown by business ethics research, persuasion and lobbying fulfill instrumental purposes as “non-market strategies” not only to succeed in market competition but also to conquer and dominate markets by shaping legal frameworks [75, 142].

In business ethics, greenwashing has been researched since the mid-1980s, when environmental ethics and the green movement gained traction [9]. NGOs like Greenpeace raised awareness by presenting specific criteria for identifying corporate greenwashing, and governmental actors, such as the U.S. Federal Trade Commission, followed suit with regulatory guidelines to help practitioners avoid making unfair or deceptive environmental claims [46, 65]. Particularly, this role of governing bodies for green corporate communication is deemed suitable to inform ethics washing and anticipate challenges and advancements of AI ethics.

Over the years, a substantial body of business ethics literature has examined greenwashing practices through various theoretical lenses, ranging from the individual level (e.g., agency theory [15]), through the organizational level (e.g., organizational institutionalism [64]), up to the institutional level (e.g., legitimacy theory [152]). Thus, the greenwashing literature provides a range of typologies to better understand misleading corporate claims and explore the thin red line between deceptive and non-deceptive communication and action [90, 93, 99, 142]. Consequently, there is no need to reinvent the wheel: a rich body of greenwashing research is available to inform the study of AI ethics washing and the accompanying deceptive practices of technology corporations [61].

4 Limitations and future research

Recognizing a new and prevalent AI ethics emerging in recent years, this article drew on five salient areas of business ethics literature to elaborate the potential for knowledge transfer and spillovers between these applied ethics fields concerning the institutionalization of organizational ethics. Against this background, future research is needed to study the five depicted insights in depth. One major limitation, however, is the scope of this knowledge spillover: just as business ethics over the past decades failed to make the business world ethical, so it may not be expected that AI ethics will make AI fully ethical. This, however, is by no means a reason to stop pushing the application of ethics to societally and technologically important fields such as AI and business and their overlaps. Thus, beyond the scope of this paper, fruitful avenues for future research open up when it comes to: (1) defining, identifying, and engaging with AI stakeholders and, for instance, mapping their salience; (2) the type of information on algorithms that specific stakeholders may need, value, and can comprehend concerning AI; (3) building effective governance mechanisms that help to connect ethical codes to practice; (4) designing AI/Tech and ethics courses and generating teaching content that provides ethical backgrounds while preparing students for the ethical challenges they will face in their future careers; and (5) gaining a more profound understanding of the causes and outcomes of ethics washing, particularly in light of different stakeholders. Overall, the five knowledge bridges represent a non-exhaustive list of concepts that business ethics literature may offer, opening space for future research to extend this initial set and identify topic areas where spillover effects and cross-fertilization between AI ethics and business ethics may occur. Such mutually beneficial knowledge creation may even emerge in areas where business and AI ethics currently differ, as will be discussed next.

From an AI ethics perspective, the depicted business ethics concepts may provide new insights that can help to develop the research agenda. However, it is essential to stress the potential limits of the structural analogy between the two applied ethics fields. Whereas corporations can be seen as artificial entities or legal persons [12], AI and automated decision-making systems (currently) lack such a status [19, 84]. Thus, when others’ rights are violated, the AI, or more precisely the algorithmic decision-maker that caused the infringement, cannot be held accountable like a corporation. Although in some jurisdictions (e.g., European Union, UK) adjustments to the legal framework are being considered regarding the civil and criminal liability of AI, such legal overhauls are regarded as troublesome and unlikely [19, 76]. However, Jowitt [84] argues that legal personhood for AI should be granted if the threshold of “bare, noumenal agency in the Kantian sense” is reached. With constant progress being made, AI is likely to advance in the coming years, further fueling this complex debate. Nevertheless, at least from a short-term perspective, it remains infeasible to treat AI as a legal person and, thus, as a potential stakeholder. For the business ethics literature, AI as a latent stakeholder is also an important topic for future research, showing where the two fields of applied ethics can produce cross-fertilizing insights.

A second area where the structural analogy between business and AI ethics diverges emerges from how AI is developed and distributed. The twentieth-century business context was characterized by scale economies, where corporations focused on standardized goods that were produced, distributed, and marketed en masse [155]. However, the digital business environment of today differs substantially. AI as a product or service may be costly to develop in the first place but can be digitally replicated at close to zero cost. On top of this comes unprecedented customization due to AI’s ability to adapt to the individual level based on fine-grained user data [175]. Thus, once developed, AI can be quickly reproduced and offered even at a personal level.

Consequently, small and less visible firms can successfully compete in the market, often out of the public eye. This represents a substantial difference from the twentieth-century multinational enterprise (MNE), whose business conduct could be easily and closely followed by the public and dedicated NGOs alike. In light of a larger number of small and less exposed firms, the watchdogs of public concerns could become less effective at spotting irresponsible business practices. Further, whereas ethical issues related to goods and services produced, distributed, and marketed en masse were relatively salient and easy to spot, AI’s digital nature and individual adaptability render ethical challenges much more complex and difficult to uncover. Ethical issues, such as biases and discrimination triggered by AI, can remain hidden, with the individual unaware [140]. Ultimately, from a business ethics perspective, the increasing adoption of AI in business products and processes makes it necessary to critically revisit established concepts and theories in light of the computer science knowledge that AI ethics can provide.

5 Conclusions

This manuscript strives to create awareness for further expanding the recent discourse of AI ethics by highlighting topics and concepts about the institutionalization of organizational ethics discussed in other applied ethics fields. Business ethics in particular has a long history of dealing with the challenges of institutionalizing ethics in organizational contexts. By building on this established body of research, our manuscript strove to highlight the common ground between business ethics and AI ethics that can trigger a joint debate. Given the rapid deployment and use of AI across multiple areas of life, the new and thriving discourse on AI ethics as a discipline will undoubtedly become increasingly important for other applied ethics fields. For instance, the business ethics debate can benefit from the discussions on algorithmic biases that have recently expanded [10, 68, 89, 172]. Thus, both systematic and practical potential lies in joining forces between the ethics of AI, medicine, business, and beyond. Here, ethics is understood as an academic discipline meant to prevent ethics washing (and not fuel it). Therefore, the contribution of ethics lies not in adding another box to be ticked, as if ethics were a service offered by a supplier, but in reflecting on and contributing to a human discourse that is characterized by fuzziness and openness and guided by theory and reason. This is the ‘tool’ that AI ethics may provide as an ‘early indication system,’ without being turned into a measure for the quantitative toolbox, which is not what ethics (as a philosophical discipline) is about in the first place [18].

Notes

AlgorithmWatch and Open Data Watch are among the few exceptions, but are not (yet) on the same level as well-known social and environmental NGOs, such as Greenpeace, Amnesty International, or LobbyWatch.

References

Accreditation Board for Engineering and Technology (ABET). Criteria for Accrediting Computing Programs, pp. 1–21 (2017)

Aguilera, R.V., Jackson, G.: Comparative and international corporate governance. Acad. Manag. Ann. 4, 485–556 (2010)

Aizenberg, E., van den Hoven, J.: Designing for human rights in AI. Big Data Soc. (2020). https://doi.org/10.1177/2053951720949566

AlgorithmWatch. AlgorithmWatch Study debunks Facebook’s GDPR Claims, Mackenzie Nelson (AlgorithmWatch). (2020)

Ayling, J., Chapman, A.: Putting AI ethics to work: are the tools fit for purpose? AI Ethics (2021). https://doi.org/10.1007/s43681-021-00084-x

Baars, G., Spicer, A. (eds.): The corporation: a critical, multi-disciplinary handbook. Cambridge University Press, Cambridge (2017)

Banerjee, S.B.: Corporate social responsibility: the good, the bad and the ugly. Crit. Sociol. 34, 51–79 (2008)

Bartlett, D.: Management and business ethics: a critique and integration of ethical decision-making models. Br. J. Manag. 14, 223–235 (2003)

Becker-Olsen, K., Potucek, S.: Greenwashing. In: Encycl. Corp. Soc. Responsib., pp. 1318–1323. Springer, Berlin (2013)

Benjamin, R.: Race After Technology: Abolitionist Tools for the New Jim Code. Polity Press, Cambridge (2019)

Bietti, E.: From ethics washing to ethics bashing: a view on tech ethics from within moral philosophy. In: Proc. ACM FAT* Conf. (FAT* 2020), ACM, New York, pp. 210–219 (2020)

Blair, M.M.: Of corporations, courts, personhood, and morality. Bus. Ethics Q. 25, 415–431 (2015)

Borenstein, J., Howard, A.: Emerging challenges in AI and the need for AI ethics education. AI Ethics. 1, 61–65 (2021)

Bose, U.: An ethical framework in information systems decision making using normative theories of business ethics. Ethics Inf. Technol. 14, 17–26 (2012)

Bosse, D.A., Phillips, R.A.: Agency theory and bounded self-interest. Acad. Manag. Rev. 41, 276–297 (2016)

Brandon, J.: Using unethical data to build a more ethical world. AI Ethics. 1, 101–108 (2021)

Broussard, M.: Artificial Unintelligence: How Computers Misunderstand the World. MIT Press, Cambridge (2018)

Brusseau, J.: What a philosopher learned at an AI ethics evaluation. AI Ethics J. 1, 1–8 (2020)

Bryson, J.J., Diamantis, M.E., Grant, T.D.: Of, for, and by the people: the legal lacuna of synthetic persons. Artif. Intell. Law. 25, 273–291 (2017)

Burton, E., Goldsmith, J., Koenig, S., Kuipers, B., Mattei, N., Walsh, T.: Ethical considerations in artificial intelligence courses. AI Mag. 38, 22–34 (2017)

Burton, E., Goldsmith, J., Mattei, N.: How to teach computer ethics through science fiction. Commun. ACM. 61, 54–64 (2018)

Castelvecchi, D.: Can we open the black box of AI? Nature 538, 20–23 (2016)

Chadwick, R.: Encyclopedia of Applied Ethics. Elsevier Inc., London (2012)

Chakrabarty, S., Bass, A.E.: Institutionalizing ethics in institutional voids: building positive ethical strength to serve women microfinance borrowers in negative contexts. J. Bus. Ethics. 119, 529–542 (2014)

Chaudhry, M.A., Kazim, E.: Artificial Intelligence in Education (AIEd): a high-level academic and industry note 2021. AI Ethics. (2021). https://doi.org/10.1007/s43681-021-00074-z

Clarke, R.: Regulatory alternatives for AI. Comput. Law Secur. Rev. 35, 398–409 (2019)

Coalition for Critical Technology. Abolish the #TechToPrisonPipeline: Crime prediction technology reproduces injustices and causes real harm, Medium. (2020)

Cominetti, M., Seele, P.: Hard soft law or soft hard law? A content analysis of CSR guidelines typologized along hybrid legal status. Uwf Umwelt Wirtschafts Forum 24, 127–140 (2016)

Corea, F.: AI Knowledge Map: how to classify AI technologies, Medium. (2018)

Council of Europe, Ad Hoc Committee on Artificial Intelligence (CAHAI): Feasibility Study (2020)

Crane, A., Matten, D.: Business Ethics: Managing Corporate Citizenship and Sustainability in the Age of Globalization. Oxford University Press, Oxford (2016)

Delacroix, S., Wagner, B.: Constructing a mutually supportive interface between ethics and regulation. Comput. Law Secur. Rev. 40, 1–24 (2021)

Deutsche Telekom, A.G.: Data protection and data security, (2021)

Dexe, J., Ledendal, J., Franke, U.: An empirical investigation of the right to explanation under GDPR in insurance. In: Lect. Notes Comput. Sci. (Including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics), Springer International Publishing, pp. 125–139 (2020)

Dubber, M.D., Pasquale, F., Das, S. (eds.): The Oxford Handbook of Ethics of AI. Oxford University Press, Oxford (2020)

Dunfee, T.W.: Stakeholder Theory. Oxford University Press, Oxford (2009)

Edwards, L., Veale, M.: Slave to the algorithm? Why a right to explanation is probably not the remedy you are looking for. Duke Law Technol. Rev. 16, 18–84 (2017)

Eiser, A.R., Goold, S.D., Suchman, A.L.: The role of bioethics and business ethics. J. Gen. Intern. Med. 14, 58–62 (1999)

Eitel-Porter, R.: Beyond the promise: implementing ethical AI. AI Ethics. 1, 73–80 (2021)

Englehardt, S., Narayanan, A.: Online tracking: a 1-million-site measurement and analysis. In: Proc. ACM Conf. Comput. Commun. Secur. 24–28-Octo 1388–1401 (2016)

Ess, C.: Computer-mediated colonization, the renaissance, and educational imperatives for an intercultural global village. In: Ethics Inf. Technol., Routledge, pp. 11–22 (2002)

European Commission.: High-Level Expert Group on Artificial Intelligence, pp. 2–36 (2019)

European Commission.: White Paper On Artificial Intelligence—A European approach to excellence and trust, (2020)

European Commission.: Proposal for a Regulation of the European Parliament and of the Council: Laying down harmonised rules on artificial intelligence (artificial intelligence act) and amending certain union legislative acts, 0106, pp. 1–108 (2021)

European Parliament and the Council of the European Union, Regulation on a European Approach for Artificial Intelligence, AI Regul. Draft. 1–81 (2021)

Federal Trade Commission (FTC).: Part 260 - Guides for the use of Environmental Marketing Claims, 77 FR 62124. (2012)

Felton, E.L., Sims, R.R.: Teaching business ethics: targeted outputs. J. Bus. Ethics. 60, 377–391 (2005)

Felton, J.: Over 1000 experts call out "Racially Biased" AI designed to predict crime based on your face, IFLScience. (2020)

Fiesler, C., Garrett, N., Beard, N.: What do we teach when we teach tech ethics? A syllabi analysis. Annu. Conf. Innov. Technol. Comput. Sci. Educ. ITiCSE, pp. 289–295 (2020)

Fiesler, C., Garrett, N., Beard, N.: What do we teach when we teach tech ethics?. In: Proc. 51st ACM Tech. Symp. Comput. Sci. Educ., ACM, New York, NY, USA, pp. 289–295 (2020)

Flyverbom, M., Deibert, R., Matten, D.: The governance of digital technology, big data, and the internet: new roles and responsibilities for business. Bus. Soc. 58, 3–19 (2019)

Freeman, R.E.: Strategic management: a stakeholder approach. Pitman Publishing Inc., Massachusetts (1984)

Freeman, R.E., Harrison, J.S., Wicks, A.C., Parmar, B., de Colle, S.: Stakeholder Theory: The State Of The Art. Cambridge University Press, Cambridge (2010)

Friedman, M.: The social responsibility of business is to increase its profits, N. Y. Times Mag. (1970) September 13.

Gandz, J., Hayes, N.: Teaching business ethics. J. Bus. Ethics. 7, 657–669 (1988)

Garrett, N., Beard, N., Fiesler, C.: More than “If Time Allows”. In: Proc. AAAI/ACM Conf. AI, Ethics, Soc., ACM, New York, NY, USA, pp. 272–278 (2020)

Gatti, L., Vishwanath, B., Seele, P., Cottier, B.: Are we moving beyond voluntary CSR? Exploring theoretical and managerial implications of mandatory CSR resulting from the New Indian Companies Act. J. Bus. Ethics. 160, 961–972 (2019)

Gebru, T.: Race and Gender. In: Dubber, M.D., Pasquale, F., Das, S. (eds.) Oxford Handb. Ethics AI, pp. 251–269. Oxford University Press (2020)

Global Reporting Initiative.: GRI 1: Foundation 2021, (2021)

Goldsmith, J., Burton, E.: Why teaching ethics to AI practitioners is important. AAAI Work. - Tech. Rep. WS-17–01, pp. 110–114 (2017)

Gotterbarn, D., Kreps, D.: Being a data professional: give voice to value in a data driven society. AI Ethics. 1, 195–203 (2021)

Greene, J.D.: Moral tribes: emotion, reason, and the gap between us and them. Penguin Books, New York (2014)

Greene, J.D.: Beyond point-and-shoot morality: why cognitive (neuro)science matters for ethics. Law Ethics Hum. Rights. 9, 141–172 (2015)

Greenwood, R., Oliver, C., Lawrence, T.B., Meyer, R.E. (eds.): The SAGE handbook of organizational institutionalism. SAGE Publications Ltd, London (2018)

Greer, J., Bruno, K.: Greenwash: The Reality Behind Corporate Environmentalism. Third World Network, Penang (1996)

Grosz, B.J., Grant, D.G., Vredenburgh, K., Behrends, J., Hu, L., Simmons, A., Waldo, J.: Embedded EthiCS: Integrating Ethics Broadly Across Computer Science Education. Commun. ACM. 62, 54–61 (2019)

Hagendorff, T.: The ethics of AI ethics: an evaluation of guidelines. Minds Mach, pp. 1–22 (2020)

Hassani, B.K.: Societal bias reinforcement through machine learning: a credit scoring perspective. AI Ethics. 1, 239–247 (2021)

Häußermann, J.J., Lütge, C.: Community-in-the-loop: towards pluralistic value creation in AI, or—why AI needs business ethics. AI Ethics, pp. 1–22 (2021)

Herden, C.J., Alliu, E., Cakici, A., Cormier, T., Deguelle, C., Gambhir, S., Griffiths, C., Gupta, S., Kamani, S.R., Máté, Y.K., Lange, G., Moles, L., Laura, D.M., Moreno, T., Alain, H., Nunez, B., Pilla, V., Raj, B., Roe, J., Skoda, M., Song, Y., Kumar, P., Edinger-Schons, L.M.: Corporate digital responsibility. NachhaltigkeitsManagementForum. (2021) 13–29.

Hersh, M.A.: Professional ethics and social responsibility: military work and peacebuilding. AI Soc. (2021)

Hill, K.: The Secretive Company That Might End Privacy as We Know It. New York Times, New York (2020)

Himma, K.E.: The relationship between the uniqueness of computer ethics and its independence as a discipline in applied ethics. Ethics Inf. Technol. 5, 225–237 (2003)

Hoffman, W.M.: Business and environmental ethics. Bus. Ethics Q. 1, 169–184 (1991)

den Hond, F., Rehbein, K.A., de Bakker, F.G.A., Lankveld, H.K.: Playing on two chessboards: reputation effects between corporate social responsibility (CSR) and corporate political activity (CPA). J. Manag. Stud. 51, 790–813 (2014)

van den Hoven van Genderen, R.: Do we need new legal personhood in the age of robots and AI?. In: Perspect. Law, Bus. Innov., pp. 15–55 (2018)

Innerarity, D.: Making the black box society transparent. AI Soc. 36, 975–981 (2021)

Jobin, A.: Why Dr. Timnit Gebru Is Important for All of Us, Medium. (2020)

Jobin, A., Ienca, M., Vayena, E.: The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399 (2019)

Jobin, A., Man, K., Damasio, A., Kaissis, G., Braren, R., Stoyanovich, J., Van Bavel, J.J., West, T.V., Mittelstadt, B., Eshraghian, J., Costa-jussà, M.R., Tzachor, A., Jamjoom, A.A.B., Taddeo, M., Sinibaldi, E., Hu, Y., Luengo-Oroz, M.: AI reflections in 2020. Nat. Mach. Intell. 3, 2–8 (2021)

Johnson, D.G.: Can engineering ethics be taught?. In: Bridg., pp. 59–64 (2017)

Johnson, K.: How AI companies can avoid ethics washing, VentureBeat. (2019)

Jose, A., Thibodeaux, M.S.: Institutionalization of ethics: the perspective of managers. J. Bus. Ethics. 22, 133–143 (1999)

Jowitt, J.: Assessing contemporary legislative proposals for their compatibility with a natural law case for AI legal personhood. AI Soc. (2020)

Kaminski, M.E.: The right to explanation, explained. Berkeley Technol. Law J. 34, 190–218 (2019)

Khalil, S., Saffar, W., Trabelsi, S.: Disclosure standards, auditing infrastructure, and bribery mitigation. J. Bus. Ethics. 132, 379–399 (2015)

Kretzschmar, L., Bentley, W.: Applied ethics and tertiary education in South Africa: teaching business ethics at the University of South Africa. Verbum Eccles. 34, 1–9 (2013)

Kücükgül, E., Cerin, P., Liu, Y.: Enhancing the value of corporate sustainability: an approach for aligning multiple SDGs guides on reporting. J. Clean. Prod. 333, 130005 (2022)

Laakasuo, M., Herzon, V., Perander, S., Drosinou, M., Sundvall, J., Palomäki, J., Visala, A.: Socio-cognitive biases in folk AI ethics and risk discourse, AI Ethics. (2021)

Laufer, W.S.: Social accountability and corporate greenwashing. J. Bus. Ethics. 43, 253–261 (2003)

Lauwaert, L.: Artificial intelligence and responsibility. AI Soc. 36, 1001–1009 (2021)

Lee, M.S.A., Floridi, L., Singh, J.: Formalising trade-offs beyond algorithmic fairness: lessons from ethical philosophy and welfare economics, AI Ethics. (2021)

Lekakos, G., Vlachos, P., Koritos, C.: Green is good but is usability better? Consumer reactions to environmental initiatives in e-banking services. Ethics Inf. Technol. 16, 103–117 (2014)

Liao, S.M. (ed.): Ethics of Artificial Intelligence. Oxford University Press, New York (2020)

Lippke, R.L.: A critique of business ethics. Bus. Ethics Q. 1, 367–384 (1991)

Lock, I., Seele, P.: Analyzing sector-specific CSR reporting: social and environmental disclosure to investors in the chemicals and banking and insurance industry. Corp. Soc. Responsib. Environ. Manag. 22, 113–128 (2015)

Lock, I., Seele, P.: Theorizing stakeholders of sustainability in the digital age. Sustain. Sci. 12, 235–245 (2017)

Lütge, C.: There is not enough business ethics in the ethics of digitization. In: Ethical Bus. Leadersh. Troubl. Times, Edward Elgar Publishing, pp. 280–295 (2019)

Lyon, T.P., Montgomery, A.W.: The means and end of greenwash. Organ. Environ. 28, 223–249 (2015)

Ma, N.F., Yuan, C.W., Ghafurian, M., Hanrahan, B.V.: Using stakeholder theory to examine drivers’ stake in uber. In: Proc. 2018 CHI Conf. Hum. Factors Comput. Syst., ACM, New York, NY, USA, pp. 1–12 (2018)

Maccarthy, M., Propp, K.: Machines learn that Brussels writes the rules: the EU’s new AI regulation, Brookings Inst. (2021)

Machado, B.A.A., Dias, L.C.P., Fonseca, A.: Transparency of materiality analysis in GRI‐based sustainability reports. In: Corp. Soc. Responsib. Environ. Manag. Online Ver (2020) csr.2066.

Mahieu, R., van Eck, N.J., van Putten, D., van Den Hoven, J.: From dignity to security protocols: a scientometric analysis of digital ethics. Ethics Inf. Technol. 20, 1–13 (2018)

Marques, J.: Shaping morally responsible leaders: infusing civic engagement into business ethics courses. J. Bus. Ethics. 135, 279–291 (2014)

Maurushat, A.: The benevolent health worm: comparing Western human rights-based ethics and Confucian duty-based moral philosophy. Ethics Inf. Technol. 10, 11–25 (2008)

McCraw, H., Moffeit, K.S., O’Malley, J.R.: An analysis of the ethical codes of corporations and business schools. J. Bus. Ethics. 87, 1–13 (2009)

Mehrpouya, A., Willmott, H.: Making a Niche: the marketization of management research and the rise of ‘knowledge branding.’ J. Manag. Stud. 55, 728–734 (2018)

Metzinger, T.: EU guidelines: ethics washing made in Europe, Der Tagesspiegel. (2019)

Mingers, J., Walsham, G.: Toward ethical information systems: the contribution of discourse ethics. MIS Q. 34, 833–854 (2010)

Mittelstadt, B.: Principles alone cannot guarantee ethical AI. Nat. Mach. Intell. 1, 501–507 (2019)

Mökander, J., Floridi, L.: Ethics-based auditing to develop trustworthy AI. Minds Mach. (2021)

Moodie, R., Stuckler, D., Monteiro, C., Sheron, N., Neal, B., Thamarangsi, T., Lincoln, P., Casswell, S.: Profits and pandemics: prevention of harmful effects of tobacco, alcohol, and ultra-processed food and drink industries. Lancet 381, 670–679 (2013)

Mozur, P., Kang, C., Satariano, A., McCabe, D.: A global tipping point for reining in tech has arrived, New York Times (2021)

Müller, V.C.: Ethics of artificial intelligence and robotics. Stanford Encycl. Philos., pp. 1–30 (2020)

Nagler, J., van den Hoven, J., Helbing, D.: An Extension of Asimov’s Robotics Laws. In: Towar. Digit. Enlight., Springer International Publishing, Cham, pp. 41–46 (2019)

Nature, No ethics, no grant, Nature. 461, 433–433 (2009)

Nesta, AI Governance Database, (2020)

Ochigame, R.: The invention of “Ethical AI”—How Big Tech Manipulates Academia to Avoid Regulation, Intercept. (2019)

Orts, E.W., Strudler, A.: Putting a stake in stakeholder theory. J. Bus. Ethics. 88, 605–615 (2009)

Oswald, K.: Industry involvement in public health ‘like having burglars fit your locks,’ News-Medical.Net - An AZoNetwork Site. (2013)

Palazzo, G., Scherer, A.G.: Corporate legitimacy as deliberation: a communicative framework. J. Bus. Ethics. 66, 71–88 (2006)

Poff, D.C., Michalos, A.C.: Citation Classics from the Journal of Business Ethics. Springer, Dordrecht (2013)

Poitras, G.: Business ethics, medical ethics and economic medicalization. Int. J. Bus. Gov. Ethics. 4, 372–389 (2009)

Potter, V.R.: Bridging the gap between medical ethics and environmental ethics. Glob. Bioeth. 6, 161–164 (1993)

Powers, T.M., Ganascia, J.-G.: The ethics of the ethics of AI. In: Dubber, M.D., Pasquale, F., Das, S. (eds.) Oxford Handb. Ethics AI, pp. 25–51. Oxford University Press (2020)

Prasad, A., Mills, A.J.: Critical management studies and business ethics: a synthesis and three research trajectories for the coming decade. J. Bus. Ethics. 94, 227–237 (2010)

Regan, P.M., Jesse, J.: Ethical challenges of edtech, big data and personalized learning: twenty-first century student sorting and tracking. Ethics Inf. Technol. 21, 167–179 (2019)

Rehg, W.: Discourse ethics for computer ethics: a heuristic for engaged dialogical reflection. Ethics Inf. Technol. 17, 27–39 (2015)

Rességuier, A., Rodrigues, R.: AI ethics should not remain toothless! A call to bring back the teeth of ethics. Big Data Soc. 7, 1–5 (2020)

Rhodes, C., Fleming, P.: Forget political corporate social responsibility. Organization 27, 943–951 (2020)

Richardson, R., Schultz, J.M., Crawford, K.: Dirty data, bad predictions: how civil rights violations impact police data, predictive policing systems, and justice. New York Univ. Law Rev. 94, 15–55 (2019)

Rockness, H., Rockness, J.: Legislated ethics: from enron to sarbanes-oxley, the impact on corporate America. J. Bus. Ethics. 57, 31–54 (2005)

Rossouw, D., Van Vuuren, L.: Institutionalising ethics. In: Bus. Ethics, 5th edition, Oxford University Press, pp. 273–289 (2013)

Rossouw, G.J.: Rossouw—business ethics in South Africa, pp. 1539–1547 (1997)

Saltz, J., Skirpan, M., Fiesler, C., Gorelick, M., Yeh, T., Heckman, R., Dewar, N., Beard, N.: Integrating ethics within machine learning courses. ACM Trans. Comput. Educ. 19, 1–26 (2019)

Satariano, A., Stevis-Gridneff, M.: Big tech turns its lobbyists loose on Europe, Alarming Regulators, New York Times. (2020)

Scherer, A.G., Palazzo, G., Baumann, D.: Global rules and private actors: toward a new role of the transnational corporation in global governance. Bus. Ethics Q. 16, 505–532 (2006)

Seele, P.: Digitally unified reporting: how XBRL-based real-time transparency helps in combining integrated sustainability reporting and performance control. J. Clean. Prod. 136, 65–77 (2016)

Seele, P.: What makes a business ethicist? A reflection on the transition from applied philosophy to critical thinking. J. Bus. Ethics. 150, 647–656 (2018)

Seele, P., Dierksmeier, C., Hofstetter, R., Schultz, M.D.: Mapping the ethicality of algorithmic pricing: a review of dynamic and personalized pricing. J. Bus. Ethics. 170, 697–719 (2021)

Seele, P., Lock, I.: Instrumental and/or deliberative? A typology of CSR communication tools. J. Bus. Ethics. 131, 401–414 (2015)

Seele, P., Schultz, M.D.: From greenwashing to machinewashing: a model and future directions derived from reasoning by analogy. J. Bus. Ethics. (2022). https://doi.org/10.1007/s10551-022-05054-9

Senden, L.A.J., Kica, E., Hiemstra, M., Klinger, K.: Mapping Self- and Co-regulation Approaches in the EU Context: Explorative Study for the European Commission, DG Connect. Utrecht University, Renforce (2015)

Sharma, S.: Data Privacy and GDPR Handbook. John Wiley & Sons Inc, Hoboken (2020)

Shneiderman, B.: Bridging the gap between ethics and practice: guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Trans. Interact. Intell. Syst. 10 (2020)

Sims, R.R.: The institutionalization of organizational ethics. J. Bus. Ethics. 10, 493–506 (1991)

Spiekermann, S.: What to expect from IEEE 7000: the first standard for building ethical systems. IEEE Technol. Soc. Mag. 40, 99–100 (2021)

Stahl, B.C.: Emerging technologies as the next pandemic? Possible consequences of the Covid crisis for the future of responsible research and innovation. Ethics Inf. Technol. 23(S1), 135–137 (2021). https://doi.org/10.1007/s10676-020-09551-1

Stahl, B.C.: From computer ethics and the ethics of AI towards an ethics of digital ecosystems. AI Ethics. (2021). https://doi.org/10.1007/s43681-021-00080-1

StandICT.eu: Standards Watch. StandICT.eu (2020)

Steurer, R.: Disentangling governance: a synoptic view of regulation by government, business and civil society. Policy Sci. 46, 387–410 (2013)

Suddaby, R., Bitektine, A., Haack, P.: Legitimacy. Acad. Manag. Ann. 11, 451–478 (2017)

Taebi, B., van den Hoven, J., Bird, S.J.: The importance of ethics in modern universities of technology. Sci. Eng. Ethics. 25, 1625–1632 (2019)

Tajalli, P.: AI ethics and the banality of evil. Ethics Inf. Technol. 3, 97–108 (2021)

Taneja, H., Maney, K.: The end of scale. MIT Sloan Manag. Rev. 59, 67–72 (2018)

Tavani, H.T.: The state of computer ethics as a philosophical field of inquiry: some contemporary perspectives, future projections, and current resources. Ethics Inf. Technol. 3, 97–108 (2001)

Tenbrunsel, A.E., Smith-Crowe, K.: Ethical decision making: where we’ve been and where we’re going. Acad. Manag. Ann. 2, 545–607 (2008)

The New York City Council: Reporting on automated decision systems used by city agencies (2019)

Theodorou, A., Dignum, V.: Towards ethical and socio-legal governance in AI. Nat. Mach. Intell. 2, 10–12 (2020)

Tigard, D.W.: Responsible AI and moral responsibility: a common appreciation. AI Ethics. 1, 113–117 (2021)

Towell, E., Thompson, J.B., McFadden, K.L.: Introducing and developing professional standards in the information systems curriculum. Ethics Inf. Technol. 6, 291–299 (2004)

Tschopp, D., Huefner, R.J.: Comparing the evolution of CSR reporting to that of financial reporting. J. Bus. Ethics. 127, 565–577 (2015)

Uyar, A., Karaman, A.S., Kilic, M.: Is corporate social responsibility reporting a tool of signaling or greenwashing? Evidence from the worldwide logistics sector. J. Clean. Prod. 253, 119997 (2020)

Varian, H.R.: Big data: new tricks for econometrics. J. Econ. Perspect. 28, 3–28 (2014)

Veatch, R.M., Guidry-Grimes, L.K.: The Basics of Bioethics, 4th edn. Routledge Taylor & Francis Group, New York (2020)

Véliz, C.: Three things digital ethics can learn from medical ethics. Nat. Electron. 2, 316–318 (2019)

Venkataramakrishnan, S.: Why business cannot afford to ignore tech ethics. Financ. Times (2020)

Vigneau, L., Humphreys, M., Moon, J.: How do firms comply with international sustainability standards? Processes and consequences of adopting the global reporting initiative. J. Bus. Ethics. 131, 469–486 (2014)

Vogt, J.: Where is the human got to go? Artificial intelligence, machine learning, big data, digitalisation, and human–robot interaction in Industry 4.0 and 5.0. AI Soc. 36, 1083–1087 (2021)

Wagner, B.: Ethics as an escape from regulation: from ethics-washing to ethics-shopping? In: Bayamlıoğlu, E., Baraliuc, I., Janssens, L., Hildebrandt, M. (eds.) Being Profiled: Cogitas Ergo Sum, pp. 84–89. Amsterdam University Press, Amsterdam (2018)

Wagner, R., Seele, P.: Uncommitted deliberation? Discussing regulatory gaps by comparing GRI 3.1 to GRI 4.0 in a political CSR perspective. J. Bus. Ethics. 146, 1–19 (2017)

Wich, M., Eder, T., Al Kuwatly, H., Groh, G.: Bias and comparison framework for abusive language datasets. AI Ethics (2021). https://doi.org/10.1007/s43681-021-00081-0

Willke, H., Willke, G.: Corporate moral legitimacy and the legitimacy of morals: a critique of Palazzo/Scherer’s communicative framework. J. Bus. Ethics. 81, 27–38 (2008)

Winkler, E.R.: Applied ethics, overview. Encycl. Appl. Ethics 1, 174–178 (2012)

Zuboff, S.: The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. Profile Books Ltd, London (2019)

Funding

Open access funding provided by Università della Svizzera italiana.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schultz, M.D., Seele, P. Towards AI ethics’ institutionalization: knowledge bridges from business ethics to advance organizational AI ethics. AI Ethics 3, 99–111 (2023). https://doi.org/10.1007/s43681-022-00150-y

DOI: https://doi.org/10.1007/s43681-022-00150-y