Abstract

In recent days, progressive rate of diabetic retinopathy (DR) becomes high and it is needed to develop an automated model for effective diagnosis of DR. This paper presents a new deep neural network with moth search optimization (DNN-MSO) algorithm based detection and classification model for DR images. The presented DNN-MSO algorithm involves different processes namely preprocessing, segmentation, feature extraction and classification. Initially, the contrast level of the DR images is improved using contrast limited adaptive histogram equalization model. After that, the preprocessed images are segmented using histogram approach. Then, Inception-ResNet V2 model is applied for feature extraction. Finally, extracted feature vectors are given to the DNN-MSO based classifier model to classify the different stages of DR. An extensive series of experiments were carried out and the results are validated on Messidor DR dataset. The obtained experimental outcome stated the superior characteristics of the DNN-MSO model by attaining a maximum accuracy, sensitivity and specificity of 99.12%, 97.91% and 99.47% respectively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent years, diabetes occurs when the glucose level has been increased in blood. When the same constraint is balanced for longer period, then it leads to severe damage in blood vessels. An individual influenced with diabetes is more prone to kidney failure, vision loss, weaker teeth, lower limb confiscation, leg wounds, nerve damage etc. This also tends to cause heart attack and stroke for such persons. According to the damaged area where the glucose has been raised in blood, it is named as Diabetic Nephropathy. It can be defined as the nephron in kidney gets failed, and neuron present in brain is affected. Then, diabetic retinopathy (DR) is another fact where the retina from eye gets damaged severely. Based on the study of World Health Organization (WHO), it is predicted that diabetes captures the seventh place that belongs to fatal disease [1]. DR is a significant cause of visual loss occurring all over the globe and is considered as a major reason of visual impairment among the persons in the age group of 25–75. Among most of the patients, no symptoms have appeared till it become severe. Since the progressive rate is high, earlier detection and medication finds helpful to reduce the growth rate of the disease. It also reveals that more than 10 lakhs people affected with diabetes has been improved to 40 lakhs respectively.

As the statistical survey states that, people above 18 years have been affected this is increased day by day. People below the poverty line are assumed to be more vulnerable to diabetes. Moreover, from India, a person like 6.5 million at the age of 24–74 has been identified with diabetic disease. Secondly, it may be increased to 10.2 million by 2030 [2]. Hence, the existence of diabetes might be found with unusual portion of the body. It can be detected by using few predictive methods like venous beading, micro aneurysms, and hemorrhage and so on. It has been assumed to be more important to use. The Micro aneurysms indicates the blood clot size that is around 100–120 μm which is in the circular shape. Hemorrhage occurs because of the massive quantity of blood released from the influenced part of the blood vessel. In addition, unusual development of blood vessels is said to be neovascularization. Here, venous beading is described as the expansion of veins which is placed nearby occluded arterioles. The DR is classified as Non-Proliferative DR (NPDR) as well as Proliferative DR (PDR). Based on the intensity of diabetes, NPDR is further divided into different stages, respectively.

In general, DR is assumed to be a significant part in avoiding the loss of eye sight in case of predefined prediction of disease in primary phase. Once the abnormality has been predicted, then, affected individual must follow routine treatment to analyze the disease. To resolve the limitations, a novel technique is developed to detect and divide fundus images that are useful in preventing the vision loss as DR is used as main remedy. Different process has been used to derive précised identification of DR. The process of searching and classifying work of DR takes place by dividing the fundus images into tiny portions that tends to indentify existence of exudates, lesions, micro aneurysms and so on.

A technique applied to determine diverse attributes of anomalous foveal zone and micro aneurysms which is existed is introduced by [3]. Typically, it is uses curvelet coefficients that is obtained from the fundus images as well as angiogram. It might be segmented into 3 phases of classification that is used for several diabetic patients. Thus, the proposed model attains a best sensitivity measure of with complete efficiency. The DR image classifying method is projected in [4] that are processed according to already present micro aneurysms. Additionally, the circularity and part of micro aneurysms is applied for feature extraction. Few database such as DIARETDB1, DRIVE as well as ROC is used in this paper. Moreover, the developed technique reaches optimal classification with respect to more sensitivity, specificity when compared with other modules. Also, it has been applied with Principal Component Analysis (PCA) method to classify the images from optic disc of fundus image. Therefore, with the help of improved MDD classification, it can accomplish more optimized classification accuracy along with best forecasting measure. As a result, different images are used to compute the above work including classification process and result in usual as well as unusual images [5,6,7,8].

Cunha-Vaz [9] evaluated the real time result of Support Vector Machine (SVM) which is obtained from 3 reputed dataset and to reach optimal accuracy measure. At the same time, texture features are retrieved from local binary pattern [10] that helps to detect the existence of exudates which produces an optimized classification process. Therefore, a secondary classifier is developed by [11] that is comprised with boot strapped Decision Tree (DT) which is applicable of segmenting the fundus images. In addition, a 2-binary vessel map has been originated by decreasing the amplitude of feature vectors.

A new model named as Gabor filtering and SVM classification is employed in [12] for classifying the DR photography. In prior to apply the classifying process, Circular Hough Transform (CHT) as well as CLAHE approach is produced for input images that assist to accomplish best predictive measure in STARE dataset. Only few morphological processes applies the intensity level of pictures as threshold metrics to segment the DR images [13].

Bhatkar and Kharat [14] defined about the parameters of CNN model including the data augmentation while DR images are classified. Thus, the intensity of DR images is constrained with 5 stages that are sampled under the application of Kaggle dataset.

Partovi et al. [15] introduced a model with fault based autonomous networks which is applicable in dividing the images. It is validated using dataset that has remote sensing images. Deep CNN (DCNN) provides latest feature extraction technique for classifying the clinical image. Simultaneously, [16] applies DCNN to minimize the human creations as well as tries to improve the feature representation in histopathological colon to isolate the cancer image from alternate photography. Shen et al. [17] designed a method of multi-crop pooling that is used in DCNN to hold the object salient data which is applicable to classify the lung cancer under the application of CT images. Several other researchers have used DNN for image based disease classification [18,19,20,21,22,23]. Though various DCNN models have been presented in the literature, it is needed to increase the learning rate of DCNN. It can be considered as an optimization problem, and is solved by the use of bio-inspired optimization algorithm. In this view, moth search optimization (MSO) algorithm can be applied in this study.

Since the progressive rate is high, earlier detection and medication finds helpful to reduce the growth rate of the disease. This paper presents a new deep learning based detection and classification model for DT images using deep neural network with moth search optimization (DNN-MSO) algorithm. The presented DNN-MSO algorithm involves different processes namely preprocessing, segmentation, feature extraction and classification. Initially, the contrast level of the DR images is improved using Contrast Limited Adaptive Histogram Equalization (CLAHE) model. After that, the preprocessed images are segmented using histogram approach. Then, Inception-ResNet V2 model is applied for feature extraction. Finally, extracted feature vectors are given to the DNN-MSO based classifier model to classify the different stages of DR. An extensive series of experiments were carried out and the results are validated on Messidor DR dataset. The obtained experimental outcome stated the superior characteristics of the DNN-MSO model over the compared methods.

The remaining sections of the study are organized as follows. Section 3 presents the DNN-MSO model and validation of the proposed model takes place in Sect. 3. At last, Sect. 4 concludes the study.

2 The proposed DNN-MSO model for DR classification

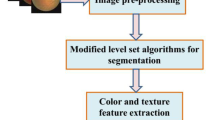

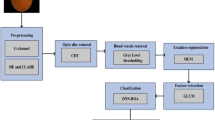

The proposed DNN-MSO technique is constrained with diverse processes such as preprocessing, segmentation, feature extraction as well as classification. First, contrast level of DR images should be enhanced by applying CLAHE model. Once the process is completed, images which are preprocessed undergo segmentation with the help of histogram approach. Afterwards, Inception-ResNet V2 scheme is used in extracting features. At last, the obtained feature vectors have been provided to DNN-MSO classification process to divide the diverse phases of DR. Figure 1 shown the overall process of DNN-MSO method.

2.1 Preprocessing: Contrast Enhancement

For attaining the optimal classification and segmentation of DR images, the CLAHE model is used for enhancing the intensity of contrast attribute of image. Here, the expanded model of CLAHE is more applicable to avoid the unnecessary noise amplification. Additionally, different histograms has been determined with the help of CLAHE model, where all the nodes are based on reputed image area and histogram is shred to eliminate the extra amplification and re-mapping the intensity values that takes place using distributed histogram. In case of medical images, the introduced CLAHE method is developed to increase the contrast behavior of an image. To define the effectiveness of CLAHE technique, there are few steps to be used, such as Generating whole input, Pre-processing the input, Computing the background area which leads to built a mapping to grayscale as well as gathering the last CLAHE image obtained from arbitrate gray level mapping.

2.2 Histogram based Segmentation Process

The segmentation process is described as, dividing an image into set of non-overlapping portions correspondingly. With the help of finding best thresholds, successful segmentation process can be reached when compared with other methods which are relied on histogram processing. Therefore, the image histogram represents the relative frequency for different color occurrence like gray levels of an image. Also, a digital image could be present along with the measure of L colors, and histogram may be as discrete function that is defined as

which refers the number of pixels \(n_{k}\) along with color \(g_{k}\) and considered as fraction of whole pixels N. For the images existed with bi-modal histograms, a single global threshold T is used to divide the image histogram. Hence, a unique constraint of objects and background could be indicated in the histogram of image by using a deep as well as narrow valley from the peaks. Therefore, value of threshold is chosen from the end of valley.

Usually, a global threshold is performed quite-well when there is an existence of peaks in image histogram in terms of objects of interest and background is classified. Moreover, it is evident that the presented model is not useful for more brightened images as well as valleys of histogram is often lengthy and extended with various metric of heights. Besides, the local adaptive threshold identifies an individual threshold for all pixels that is based on the range of intensities obtained from local neighborhood. The thresholding process takes place for an image along with a histogram that is composed with absence of actual peaks. In addition, image is classified as sub-images using particular model and usage of mean value for all sub-images. If there is a certain feature present for an image then, the operation is termed as priori, from where the process of choosing a threshold may be easier as well as the major reason for selecting threshold is to ensure the satisfaction of current operation. A novel method named as P-tile uses such types of data as object which is darker than alternate background and utilizes a defined fraction like percentile (1/p) of entire image portion, even for printed sheet. The threshold could be found by analyzing the intensity from where the destination fraction of image pixels is minimum when compared with predefined value. Therefore, intensity is identified using cumulative histogram:

Value of threshold \(T\) is declared as \(c\left( {T } \right) = 1/p\). For a darker background, it may be fixed as \(c\left( {T } \right) = 1 - 1/p.\)

2.3 Inception based Feature Extraction

The fundamental method of Inceptions has been used to train various parts as every monotonous block are classified into diverse sub-networks that is applied for enabling the entire method in memory. But, Inception technique is said to be simple and indicates the possibility for changing the count of filters obtained from standard layers which could not influence the reputation of entire trained system. To improve the training speed, the layer size must be switched to appropriate manner to reach the trade-off from various sub-networks. In contrary, by applying the TensorFlow, latest Inception models has been developed with no duplicate portioning. It is due to the application of modern storage optimizing in back propagation that is accomplished by allowing the tensors which are more required for estimating the gradient and computing the minimized values. Additionally, inception-v4 is presented to remove the unwanted process that created same model for Inception blocks present in every grid size.

2.3.1 Residual Inception Blocks

Every inception blocks are applied through a filter-expansion layer that helps to enhance the dimension of filter bank, in prior to calculate the depth of input. Therefore, it is an essential process to replace the dimensional cutback which is intended by Inception block. From various methods of Inception, Inception-ResNet V2 has lower speed, since it is existing with numerous numbers of layers. The additional orientation among residual as well as non-residual Inception is named as batch-normalization which is used in conventional layers, than to employ on residual calculation. Hence, it is considered to logical while anticipating a larger application of batch-normalization of maximum benefits. However, the batch-normalization of TensorFlow utilizes maximum amount of storage as well as to decrease entire count of layers, while batch-normalization is applied in different locations.

2.3.2 Scaling of the Residuals

From this section, it is pointed that a filter value is identified to be more than 2000 then, remaining technique demonstrates that an unstable network is expired at primary level of training that denotes the target layer in prior to initiate pooling layer to generate zeros from different process. But, it cannot be removed by reducing the training value. Also, the minimized values appended in prior to find the stable learning. Generally, scaling factors are present inside the radius of 0.1–0.3 which is employed for scaling the accumulated layer activations respectively.

2.4 Classification on DNN-MSO

2.4.1 DNN

The DNN classifier is explained in this segment. These techniques are proper to learn discriminative as well as active parameters. Massive amount of unlabelled information of DNN has been implemented. The attempt to classify the data is assumed to be more promising issue as it creates difficulties in pattern learning. To manage this problem, through the sparse auto-encoders (AE) an enhanced DNN is proposed. For learning the feature model based on presented manner, an AE that is adaptive are joined by model of denoising technique. Furthermore to the neural network (NN) classification this feature learned is given as input. The whole process of presented model is described in output subsections. Primarily, the planning of adaptive AEwas described. While, a substantial action id exposed through NN with respect to classifier conversely with utilizing the NN, it fails to attempt the multi-objective function and the information which has maximum dimension.

In the early phase of DNN training, it is has to regard the average activation function value closer to 0 because of neurons idleness. It is executing penalty for maximum activation function. It is implemented by different form of substantial average activation function value. The notation of penalty is provided in Eq. (3)

where \(s_{2}\) denotes the entire number of secret layer neurons, KL \(\left( \cdot \right)\) implies the Kullback–Leibler divergence (KL divergence) and provided as:

\(KL\left( {\rho //\rho_{n} } \right) = 0\) for \(\rho_{n} = p\) or it stands for increased divergence, generally considered as adaptive constant. It is attained by executing the cost function which is represented by,

β indicates the penalty weight executed with KL divergence technique. The recognition of weight ‘w’ and biases ‘b’ becomes a prominent role because of its cost function, so, these 2 constraints are started to relate one another thus that it is concern the action of whole method. It can be solved with formulating an additional optimize problem and the outcome of this equivalent problem is considered to decrease the, \(C_{adaptive} \left( {w,b} \right)\) . This appeared optimize problem is resolved by introducing a novel MSO method. It executes the optimized method to iteratively inform bias and weight. It is written as:

And

In these \(\varepsilon\), indicates the learning rate of DNN.

2.4.2 MSO technique

Moth is a kind of bug, which generally belongs to the butterfly family of Lepidoptera. Globally, total on 160,000 moth species has been discovered, that exits mostly during night-time. When compared with other moth attributes, the phototaxis as well as Levy flight has been assumed to be a significant feature which is described in the upcoming sections. Therefore, weight of NN is provided as input for MSO. These techniques recognized the optimization weights with executing the exploring function.

2.4.2.1 Phototaxis

The procedure behind moths fly is that, it surrounds the light which is termed as phototaxis. Yet, an accurate model of phototaxis could not be found, that has major hypotheses for defining the phototaxis technique. Among other methods, it is a crucial hypothesis in celestial navigation which is implemented in transverse orientation while flying. In order to save an applicable angle for celestial light such as moon, moths would travel in a straight line. Simultaneously, the angle exists from light source and moth could be oriented, but it is unable to seek the transform, since the celestial object is identified to be outlying distance. It moves towards light source, as moth would naturally adopt the flight orientation to a better position. Therefore, it allows airborne moths to fall downwards. They shape a spiral-path to travel nearer to source light.

2.4.2.2 Levy flights

Heavy-tailed, non-Gaussian statistics is recognized as general models in different functions of massive insects as well as animals. Levy flight is a kind of arbitrary progress, thus in natural surroundings we assumed as one of the main flight design. Another moth fly, the Drosophila also show this Levy flight, but this flight is approximated as a power law supply containing the feature exponent closer to 3/2. In general, this Levy distribution could be exposed in the type of power-law and it can be explained in following Eq. (8),

where \(1 < \beta \le 3\) is recognized as an index.

The moth’s individuals containing the distance as nearby the optimal one will fly in the Levy flight approach approximately the fittest one. Otherwise they will inform their locations with applying the Levy flight in terms of Eq. (9), but the moth id informed with utilizing following Eq. (9):

where \(X_{j}^{a}\) and \(X_{j}^{a + 1}\) indicates the actual and updated position at generation \(a\), but present generation is signified as,\(a\). This step that achieved from Levy flight is indicated as,\(L\left( s \right)\). To these problems of interest, the scale factor is implies with the parameter; \(\delta\). The expression to this \(\delta\) is depicting in under Eq. (10):

Now, \(W_{{ {\text{max }}}}\) implies highest walk step and the value to this \(W_{{ {\text{max }}}}\) are set dependent on the accessible issue. \(L\left( s \right)\) in the above equation is rewritten as

In \(s\) is found higher than 0. \(\varGamma \left( x \right)\), implies the gamma function. While declared before, from the \(L\left( s \right)\) containing \(\alpha = 1.5\) the moths Levy flight is drawn.

2.4.2.3 Fly straight

The current moths are separated from light source would flutter in straight line towards the light. This notation of moth \(j\) is formulated as

where \(X_{best}^{a}\) signifies the fittest moth at \(a\) generation,\(\lambda\) implies the scale factor. \(\varphi\), is an acceleration factor.

Otherwise the moth will fly away from the light source to end position. The formulated executed to compute the end location of moth \(j\) is provided in Eq. (13),

To achieve integrity, the position of moth j is informed with executing the Eqs. (12, 13) by half percentage possibility. The actual, updated, and optimal position of moth is indicated as \(X_{j} ,X_{j,new}\) and, \(X_{best} .\lambda\), manage the techniques meeting speed along with improve the population diversity. The optimization weight enhances the action of the DNN classification. Because of optimization outcomes is achieved with this technique. The DNN is executed to classifier functions. This technique real and forged videos is recognized and classifier with this DNN. The DNN having a number of secret layers, thus number of iterations is executed in this classification. At last, an accurate classifier is given with this DNN classification. The function of MSO technique together DNN, moreover improves the classifier action. The algorithm to total procedure is depicting in under.

Algorithm for entire process

-

Input: DR images

-

Output: Classified image

-

Step 1: Provide the input image

-

Step 2: Preprocess the image using CLAHE

-

Step 3: Segment preprocessed image using Histogram segmentation.

-

Step 4: Extract features from segmented images using Inception-ResNet-V2 model

-

Step 5: Based on these extracted features, the segmented frames are classified using DNN-MSO model

-

Step 6: Update weight (W) parameter of DNN with MSO fitness function

-

Step 7: DNN-MSO classifies the DR image.

-

Step 8: Evaluate the performance by means accuracy, sensitivity and specificity.

3 Performance and validation

The performance of the DNN-MSO model has been validated on the benchmark MESSIDOR dataset. The details related to the dataset and obtained results are discussed in the following subsections in a detailed way. The parameter settings of the proposed model are given as follows. No. of search agents: 30, number of features: 345, No. of rounds: 30 and dimension: 345.

3.1 Dataset Description

MESSIDOR [24] dataset includes a total of 1200 color fundus images with corresponding annotations. The images in the dataset come under a set of four levels namely normal, level 1, level 2 and level 3. The information related to the dataset is given in Table 1 and sample images under different classes are illustrated in Fig. 2.

3.2 Results analysis

Table 2 visualizes the results offered by the DNN-MSO model on the applied dataset while classifying different levels of DR. The first, second, third, and fourth columns denotes the class label, input image, corresponding segmented and classified images respectively. It can be noted that the first image under normal category is effectively classified as normal image. Then, the image under level 1 class is also efficiently classified as level 1. At the same time, the image under levels 2 and 3 are also classified into level 2 and level 3 respectively.

Table 3 displays the generated confusion matrix for the DNN-MSO model on the tested images. It is shown that a total of 540 images were properly classified into normal level, 148 images into level 1, 241 images into level 2 and 250 images into level 3. These values are manipulated interms of true positive (TP), true negative (TN), false positive (FP) and false negative (FN) as shown in Table 4.

Table 4 provides the manipulation of the confusion matrix in terms of TP, TN, FP and FN.

Table 5 and Fig. 3 provide a detailed classifier outcome of proposed model in terms of three different measures. It is shows that the DR images under normal category are classified by the accuracy of 99.49, sensitivity of 98.90 and specificity of 100. Next, the DR images under level 1 category are classified by the accuracy of 98.83, sensitivity of 97.57 and specificity of 99.05. Simultaneously, the DR images under level 2 categories are classified with the accuracy of 98.74, sensitivity of 97.57 and specificity of 99.05. In the same way, the DR images under level 3 categories are classified by the accuracy of 99.41, sensitivity of 98.43 and specificity of 99.68. These values showed that the proposed model effectively grades the DR images under respective classes.

Table 6 and Figs. 4, 5 and 6 provides a brief comparative study of the DNN-MSO with the existing models such as M-AlexNet [25], AlexNet, VggNet-s, VggNet-16, VggNet-19, GoogleNet and ResNet takes place on the applied same set of DR images.

Figure 4 shows the sensitivity analysis of diverse models on the applied dataset. On evaluating the performance of the classifier models interms of specificity, the DNN-MSO model shows supreme classification over the compared models. The GoogleNet model classifies the DR images in an ineffective way and offered a least specificity of 93.45%. On continuing with, a slightly higher specificity of 94.07% has been offered by the AlexNet model which is superior to earlier model, but not than the rest of the models. Simultaneously, VggNet-16 and ResNet shows moderate as well as near identical specificity values of 94.32% and 95.56% respectively. Next to that, the VggNet-s model tried to show optimal results and offered a high specificity value of 97.43%. In line with, the M-AlexNet model offered near optimal classifier results and provided a higher specificity of 97.45%. However, the DNN-MSO model showed excellent classification and exhibited a maximum specificity value of 99.47%.

Figure 5 shows the specificity analysis of diverse models on the applied dataset. On evaluating the performance of the classifier models in terms of sensitivity, the DNN-MSO model shows supreme classification over the compared models. The GoogleNet model classifies the DR images in an ineffective way and offered a least sensitivity of 77.66%. On continuing with, a slightly higher sensitivity of 81.27% has been offered by the AlexNet model which is superior to earlier model, but not than the rest of the models. Then, an even better result is offered by the VggNet-s model with the sensitivity value of 86.47%. Simultaneously, VggNet-19 and ResNet shows moderate as well as near identical sensitivity values of 89.31% and 88.78% respectively. Next to that, the VggNet-16 model tried to show optimal results and offered a high sensitivity value of 90.78%. In line with, the M-AlexNet model offered near optimal classifier results and provided a higher sensitivity of 92.35%. However, the DNN-MSO model showed excellent classification and exhibited a maximum sensitivity value of 97.91%.

The above mentioned tables and figures ensured the effective detection and grading performance of the presented DNN-MSO model on the applied DR image dataset with a maximum accuracy, sensitivity and specificity of 99.12%, 97.91% and 99.47% respectively.

3.3 Discussion

Figure 6 shows the accuracy analysis of diverse models on the applied dataset. In case of determining the classifier outcome in terms of accuracy, the DNN-MSO model shows supreme classification over the compared models. The AlexNet model classifies the DR images in an ineffective way and offered a least accuracy of 89.75%. On continuing with, a slightly higher accuracy of 90.40% has been offered by the ResNet model which is superior to earlier model, but not than the rest of the models. Simultaneously, VggNet-s, VggNet-16 and GoogleNet shows moderate as well as near identical accuracy values of 93.17%, 93.73% and 93.36% respectively. Next to that, the VggNet-s model tried to show optimal results and offered a high accuracy value of 96%. In line with, the M-AlexNet model offered near optimal classifier results and provided a higher accuracy of 96%. However, the DNN-MSO model showed excellent classification and exhibited a maximum accuracy value of 99.12%.

The above mentioned tables and figures ensured the effective detection and grading performance of the presented DNN-MSO model on the applied DR image dataset with a maximum accuracy, sensitivity and specificity of 99.12%, 97.91% and 99.47% respectively.

4 Conclusion

This paper has presented detection and classification model for DT images using DNN-MSO algorithm. The presented DNN-MSO algorithm involves different processes namely preprocessing, segmentation, feature extraction and classification. The application of the CLAHE model for preprocessing helps to enhance the performance of the classification process. Besides, the inclusion of MSO algorithm for DNN also assists to improvise the effectiveness of the DNN model. An extensive series of experiments were carried out and the results are validated on Messidor DR dataset. The above mentioned tables and figures ensured the effective detection and grading performance of the presented DNN-MSO model on the applied DR image dataset with a maximum accuracy, sensitivity and specificity of 99.12%, 97.91% and 99.47% respectively. In future, the prospoed model can be implement in the cloud based environment to assist patients and doctors to diagnose the diseases in a remote way.

References

Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Commun ACM 60(May (6)):84–90

Whiting DR, Guariguata L, Weil C, Shaw J (2011) IDF diabetes atlas: global estimates of the prevalence of diabetes for 2011 and 2030. Diabetes Res Clin Pract 94(Dec (3)):311–321

Sb S, Singh V (2012) Automatic detection of diabetic retinopathy in non-dilated RGB retinal fundus images. Int J Comput Appl 47(Jun (19)):26–32

Singh N, Tripathi RC (2010) Automated early detection of diabetic retinopathy using image analysis techniques. Int J Comput Appl 8(Oct (2)):18–23

Shankar K, Sait ARW, Gupta D, Lakshmanaprabu SK, Khanna A, Pandey HM (2020) Automated detection and classification of fundus diabetic retinopathy images using synergic deep learning model. Pattern Recognit Lett

Elhoseny M, Shankar K, Uthayakumar J (2019) Intelligent diagnostic prediction and classification system for chronic kidney disease. Sci Rep 9(1):1–14

Shankar K, Lakshmanaprabu SK, Khanna A, Tanwar S, Rodrigues JJ, Roy NR (2019) Alzheimer detection using Group Grey Wolf Optimization based features with convolutional classifier. Comput Electr Eng 77:230–243

Shankar K, Lakshmanaprabu SK, Gupta D, Maseleno A, De Albuquerque VHC (2018) Optimal feature-based multi-kernel SVM approach for thyroid disease classification. J Supercomput 76:1128–1143

Cunha-Vaz BSPJG (2002) Measurement and mapping of retinal leakage and retinal thickness: surrogate outcomes for the initial stages of diabetic retinopathy. Curr Med Chem Immunol Endocr Metab Agents 2(Jun (2)):91–108

Anandakumar H, Umamaheswari K (2017) Supervised machine learning techniques in cognitive radio networks during cooperative spectrum handovers. Clust Comput 20:1–11

Omar M, Khelifi F, Tahir MA (2016) Detection and classification of retinal fundus images exudates using region based multiscale LBP texture approach. In: 2016 international conference on control, decision and information technologies (CoDIT), Apr 2016

Welikala RA, Fraz MM, Williamson TH, Barman SA (2015) The automated detection of proliferative diabetic retinopathy using dual ensemble classification. Int J Diagn Imaging 2(Jun (2)):72–89

Haldorai A, Ramu A, Chow C-O (2019) Editorial: big data innovation for sustainable cognitive computing. Mob Netw Appl 24(Jan):221–223

Bhatkar AP, Kharat GU (2015) Detection of diabetic retinopathy in retinal images using MLP classifier. In: 2015 IEEE international symposium on nanoelectronic and information systems, Dec 2015

Partovi M, Rasta SH, Javadzadeh A (2016) Automatic detection of retinal exudates in fundus images of diabetic retinopathy patients. J Anal Res Clin Med 4(May (2)):104–109

Xu Y, Mo T, Feng Q, Zhong P, Lai M, Eric I, Chang C (2014) Deep learning of feature representation with multiple instance learning for medical image analysis. In: Proceedings of IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 72–89

Shen W, Zhou M, Yang F, Yu D, Dong D, Yang C, Zang Y, Tian J (2017) Multi-crop convolutional neural networks for lung nodule malignancy suspiciousness classification. Pattern Recognit 61:663–673

Frid-Adar M, Diamant I, Klang E, Amitai M, Goldberger J, Greenspan H (2018) GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 321:321–331

Kayalibay B, Jensen G, van der Smagt P (2017) CNN-based segmentation of medical imaging data. arXiv preprint arXiv:1701.03056

Yadav SS, Jadhav SM (2019) Deep convolutional neural network based medical image classification for disease diagnosis. J Big Data 6(1):113

Kumar A, Kim J, Lyndon D, Fulham M, Feng D (2016) An ensemble of fine-tuned convolutional neural networks for medical image classification. IEEE J Biomed Health Inform 21(1):31–40

Rama A, Kumaravel A, Nalini C (2019) Construction of deep convolutional neural networks for medical image classification. Int J Comput Vis Image Process 9(2):1–15

Khatami A, Babaie M, Tizhoosh HR, Khosravi A, Nguyen T, Nahavandi S (2018) A sequential search-space shrinking using CNN transfer learning and a Radon projection pool for medical image retrieval. Expert Syst Appl 100:224–233

http://www.adcis.net/en/third-party/messidor/. Accessed 14 Jun 2019

Shanthi T, Sabeenian RS (2019) Modified Alexnet architecture for classification of diabetic retinopathy images. Comput Electr Eng 76:56–64

Acknowledgements

This article has been written with the financial support of RUSA–Phase 2.0 grant sanctioned vide Letter No. F. 24-51/2014-U, Policy (TNMulti-Gen), Dept. of Edn. Govt. of India, Dt. 09.10.2018.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Shankar, K., Perumal, E. & Vidhyavathi, R.M. Deep neural network with moth search optimization algorithm based detection and classification of diabetic retinopathy images. SN Appl. Sci. 2, 748 (2020). https://doi.org/10.1007/s42452-020-2568-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-020-2568-8