Abstract

Image fusion is the combining process of complementary information of multiple same scene images into an output image. The resultant output image that is named fused image, produces more precise description of the scene than any of the individual input images. In this paper, we propose a novel simple and fast strategy for infrared (IR) and visible images based on local important areas of IR image. The fusion method is completed in three step approach. Firstly, only the segmented regions in the infrared image is extracted. Next, the image fusion is applied on segmented area and finally, contour lines are also used to improve the quality of the results of the second step of fusion method. Using a publicly available database, the proposed method is evaluated and compared to the other fusion methods. The experimental results show the effectiveness of the proposed method compared to the state of the art methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Increasing demand and interest of military and security institutions to have fast and accurate methods of combining different images from one scene have resulted in many studies on this branch of image processing. In fact, data from different frequency images are combined to enhance the knowledge from the expected scene information.

In most cases, two types of visible and infrared images are used to have integrated information from several camera sensors. The following is an explanation of the advantages and disadvantages of using these types of images.

Visual information of visible images has natural light and contains complete detail and in fact, it has a good representation of the appearance of scenes and objects. But when the amount of light is not enough—for example, in pictures taken at night—they do not have the required performance. Also, the problem of occlusion, that is, the existence of an object hidden behind another object, is an inherent limitation of visible images. The content of infrared images is composed from the thermal radiation of the environment objects; therefore, these images do not have issues expressed about visible images. But unfortunately, the spatial resolution of these images is low and it is not possible to expect details and contexts from them.

To enjoy the advantages of various types of images, a branch has been established in the image processing science called image fusion. In this widely used category, it is possible to combine valuable information from two or more images—usually provided with multiple cameras. The main purpose of this work is to provide an image that provides more information about objects and people in a scene, and researchers attempt to provide a more efficient method than existing methods that can transfer important information from input images to the output image without distortion and loss.

The applications of this image processing technique include: image enhancement [1], medical imaging [2, 3], remote sensing [4,5,6,7], objects recognition and target detection [8, 9], object detection [10, 11], pattern recognition [12, 13] and the manufacturing of modern military equipment [14, 15].One of the security applications of this technology is to detect weapons and suicide bombs hidden under people's clothes, which increase public security in public places—where it is impossible to maintain order among the flow of people. The use of fusion methods has many advantages in increasing the efficiency of different systems such as: Improving detection and recognition, improving stability and reducing human errors, increasing spatial convergence and increasing the accuracy of position estimation, reducing computations and, consequently, reducing reaction time.

Of course, due to human errors and the limitations of hardware, often these two images (infrared and visible images) are not perfectly aligned when taken; hence, in the first step, it is necessary to apply image registration on two images; that is, the corresponding pixels of the input images are aligned with the same. Different and appropriate image registration methods have been presented [16], which are beyond the scope of this paper. Therefore, in this paper registered image databases are used.

In recent fusion researches, fusion method based on multi-scale decomposition (MSD) have been widely studied. Usually, different scales of image have overlap in the spatial domain. Textures or details of the image are observed in small-scale levels, on the other hand, larger objects are available in the large-scale ones. Multi-scale fusion methods are based on spatially-overlapped features extraction which are separated in scales with a multi-scale decomposition method. Then, if separated features have the same spatial area they are combined in different scales. Recently, various MSD methods such as laplacian pyramid (LAP) [17], ratio of low pass pyramid (ROLP) [18], morphological pyramid (MOP) [19], curvelet transform (CVT) [20, 21], non-subsampled contour transform (NSCT) [22, 23] non subsampled Shearlet transform [24] and discrete wavelet transform (DWT) [2, 25], have been used in image fusion.

In [26], high brightness characteristics of thermal targets are maintained and the appearance of both source images are transferred to the output fused image. They extract target layer, as base layer, from the thermal image. Details of appearance, as details layer, are employed from visible image using a filter called weighted least squares (WLS). Therefore, the detail and base layers of both source images are used. The design basis of this method is target recognition therefore, is not general and also, not applicable in wide range of images and fusion algorithms.

The multi-scale fusion methods based on various MSDs have great success in image fusion however, separating the scales in fusion process is very important. Fusion method proposed in [27] is an effective method therefore, it is used as a proper method for comparison.

Ma et al. [28] propose an IR and visible image fusion algorithm based on total variation minimization (TVM). They maintain thermal radiation and detailed appearance information of the source images, simultaneously. Fusion is formulated as a TV minimization problem in their research. Ma et al. [29] proposed a fusion approach based on gradient transfer and TVM. They named the proposed algorithm as Gradient Transfer Fusion (GTF). Data fidelity and regularization term is used to preserve the main intensity distribution in the infrared image and the gradient variation in the visible image, respectively. The proposed method fuses image pairs without pre-registration therefore, enhances its capability as a registration method. An adversarial game between infrared image intensities and details of visible image is proposed in [30]. Authors establish an algorithm to use a generator and a discriminator. The generator aims to preserve major infrared intensities together with additional visible gradients. The discriminator aims to maintain more details of visible images. A generative adversarial network, named as FusionGAN, and an end-to-end model is used to carry out these goals. The fusion of infrared and visible images and their applications is surveyed comprehensively in [31].

In this paper, a method for image fusion is presented which has a good performance compared to similar methods. The main idea of the method is that the most important regions of an infrared image are those parts that have greater illuminance intensity than other parts. People or military equipment often are brighter than other parts of the infrared image because of the heat they produce. Of course, in some cases, the buildings, especially the parts near to their ceilings, are brighter, which fortunately, the selection of these regions does not cause any problems for the results; it also suggests the existence of life in those regions of the image.

Innovations of the study are listed below:

-

A new, simple, fast and accurate method for the fusion of visible and infrared images is presented.

-

Fusion operation is limited to the most important regions of the infrared image which increases the speed and the efficiency of the method.

-

A curve of the important regions of the image is made and this curve helps the user to detect the targets.

The rest of the paper is organized as follows. In the next section, the proposed algorithm is presented. In the third section, the experimental results are presented and the results of the proposed methods and other methods are compared based on different measurement criteria. Finally, conclusion is presented in the last section.

2 The proposed method

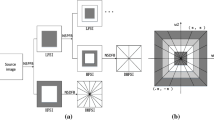

In this paper, a new and efficient method for rapid fusion of visible and infrared images is presented. In this method, various image processing techniques including dynamic thresholding, morphology, and weighted fusion methods have been used. The flowchart of the proposed method is presented in Fig. 1.

The proposed algorithm consists of the following steps:

-

1.

Calculating input infrared image histogram.

-

2.

Finding the peak of the histogram.

-

3.

Put threshold on the infrared image based on the illuminance intensity of the peak of the histogram.

-

4.

Selecting pixels above the threshold.

-

5.

Integrating the regions of step 4 and obtaining the filtered version of the important regions of the infrared image.

-

6.

Extracting the filtered contour of the important regions of the infrared image.

-

7.

Selecting non-filtered regions from the visible image and transferring them to the output image.

-

8.

Weighted fusion between the image of step 7 and the image of step 5.

-

9.

Considering the filtering contours for the image of step 8 (for better visualization).

-

10.

Obtaining the final fused image.

In the following, these steps are fully explained along with sample images. For this purpose, one of the most famous images of this branch called “Bench” is used, whose infrared and visible images are shown in Figs. 2 and 3, respectively.

2.1 Determining the appropriate threshold

In this step, input infrared image histogram is obtained (Fig. 4). Then the peak (illuminance intensity with maximum frequency) is calculated from this histogram.

If this peak occurs at any illuminance intensity, 25% is added to illuminance intensity in order to select the important data of the infrared image (This amount is extracted experimentally on the database). Of course, if this relative threshold is higher or lower than a certain value, a fixed threshold is performed. In fact, due to the nature of infrared images and important data in the infrared databases, we expect that suitable and sufficient data from infrared images extracted when margin of 25% is used around the peak value of histogram. But, if including this margin leads to select more than 50% of the infrared image pixels, the threshold value is set in such a way that maximally 50% of the image pixels are being selected. In the minimum case, this event is set to 5%.

Therefore, thresholding is performed on input infrared image and the pixels with higher illuminance intensity than the threshold are selected (Fig. 5).

2.2 Preparing the important regions of the infrared image

In the previous step, pixels with higher illuminance intensity than the threshold were selected from the infrared image. The selected pixels are called “important regions of the infrared image”. A binary image or mask is extracted using the spatial coordinates of pixels of important regions of infrared image.

Generally, the resultant binary image is noisy, therefore, these regions are smoothed in the proposed method. It is important that, due to the required precision for the method, this should be done in such a way that the total area of selected regions should not be changed and also it should be slightly softer and small internal regions should be removed. So, this goal can be achieved using the morphological Closing operation on pixels of binary image (Fig. 6). Closing is defined simply as a dilation followed by an erosion using the same structuring element for both operations. The closing of a binary image A by a structuring element B is the erosion of the dilation of that set as follows:

Apply closing operator on Fig. 5

where ·, \({ \oplus }\) and \({ \ominus }\) denote the closing, dilation and erosion, respectively.

Of course, the size of morphological structural element changes according to the dimensions of the image. So during this step, "the filtered version of the important regions of the infrared image" is obtained. In order to know about the efficiency, the steps of the method were broken down into three steps, each of which has more processing stages than the method of the previous step.

2.2.1 The first fusion method (step 1)

The first proposed fusion method is to select regions of the visible image in areas where equivalent pixels are not selected in the infrared image. As shown in Fig. 6 these regions often cover a large area of the image. The selected regions are transferred without any change to the output image which is referred to as "transferred visible image" in order to maintain the details of the visible image. In this method, the important regions of the infrared image take the place of the empty areas of the transferred visible image without any processing and the fused image is obtained (Fig. 7).

Since the infrared image is used as a mask (spatial filter), the number of pixels in visible and infrared images will be the same. To adjust images with different resolutions, a spatial mask is produced by down sampling.

High speed and low computational costs are the advantages of this method. Of course, the infrared objects in output image is highlighted, it can be described as another advantage of this proposed method.

2.2.2 The second fusion method (step 2)

Here, weighted fusion is used in selected regions. Actually, important regions of infrared image are fused with equivalent pixels of visible image. Therefore, a percentage of the illuminance level of the fused image in these regions is from the visible image and the rest is from the infrared image. In this method, information from two images in selected areas are used to form output image. As before, “transferred visible image” regions are exported directly to the output image from visible image. This image is the output of the second method of proposed fusion method and although it is time-consuming, it has more efficiency than other proposed methods according to the measurement criterias (in the section of experimental results) (Fig. 8).

2.2.3 The third fusion method (step 3)

In some practical tests, it was observed that the second proposed method does not have the adequate performance to differentiate the targets in the output image. To solve this shortcoming, the third method has been proposed. Therefore, the third method of proposed fusion method is to make the important regions of the infrared image clearly visible. The reason for this suggestion was one of the images of the database (“lake”). Where, the man is hidden in the middle of the image, despite being detected, is not clear because of the lack of differentiation of illuminance intensity between the man and the visible image (Fig. 9).

The filtering contour of the important regions of the infrared image was extracted previously to overcome this deficiency. As a result, two contours were obtained in white and black colors. Our proposed method for achieving these two contours is to use morphological Dilation operator with a one-pixel disk. The dilation operation usually uses a structuring element for probing and expanding the shapes contained in the input image. In Fig. 10a, the expanded of the integrated filtered image (Fig. 6) and in Fig. 10b, the smaller contour obtained from the difference between Figs. 6 and 10a are shown.

a Dilated image of the smoothed image of Fig. 7, b The edges of (a), c Expanded version of (a), d The edges of (c)

The same thing was repeated once again and in Fig. 10c, expansion of Fig. 10a—and in 10d, the larger contour obtained from the difference between Fig. 10a and c are shown. According to the obtained results, this method of edge detection is more accurate, more suitable and simpler than other edge detections.

The reason for selecting two contours at this stage is to more and better differentiation of important regions from other regions in output image. Because, in some images dark regions (“tank”) and in some other images brighter regions (“duine”) are more visible. Therefore, as mentioned before, for better visual representation, the output image of the second method of proposed fusion method is applied to the contours of the important regions of infrared image and the resulted image is called “output of the third method of proposed fusion method”. The result of drawing these two contours is shown in Fig. 11. The final explanation is that the white curve is one pixel larger than the contour of the important regions of the infrared image, and the black curve is one pixel larger than the white curve.

Now a final fused image for the sample image is produced. The final fused image is also presented for the image of the “lake” in which the head of the hidden person in the middle of the image is specified (Fig. 12).

Next section includes the results of the proposed methods (with three steps fusion results) which are compared with different measurement criteria. Also, the results are compared with other methods.

3 Experimental results

The proposed method has been implemented in MATLAB using Windows 7 operating system, the operating frequency of 2.67 GHz and 6 GB of RAM. And also evaluated by the following famous visual data from the following database:

http://figshare.com/articles/TNO_Image_Fusion_Dataset/1008029

“Bunker”, “Lake”, “Tank”, “Bench”, “Sand path”, “Nato camp”, “Duine”, “Two men in front of the house”, “Soldier in trench”, “Soldier behind smoke”.

Various methods were implemented in order to make a comparison:

Image fusion methods like simple minimum, simple maximum, simple average, weighted average, Discrete Wavelet Transform (DWT) method in three methods of averaged-average, maximum–minimum and maximum-maximal methods, Principal Component Analysis (PCA) method and the combination of PCA method with DWT method in the form of Maximum–Maximum and also the proposed method in [27] are used to compare the methods.

In simple minimum, simple maximum and simple average methods, the fused image is produced by selecting the minimum, maximum and average illuminance intensity among the corresponding pixels of two input images, respectively. The fused image in weighted average method is obtained by calculating the average of given weight among illuminance intensity of the corresponding pixels of the two input images.

Two-dimensional discrete wavelet transform is a tool for multiresolution decomposition of the image. After applying decomposition, approximation and small components, the image can be transferred from the spatial domain to the frequency domain. This kind of fusion is provided with the command of wfusimg in MATLAB software. In this function, the approximation and the details of the two input images can be combined with each other in different ways, and three methods of average-average, maximum-minimum, and maximum-maximum were used.

In PCA method, the fused image is obtained using eigenvector correspondent with the largest matrix eigenvalues of each image.

Common requirements for an image fusion process are the protection all valid and usable information of original images; however, the fused image should not have any distortion. Therefore, it is appropriate to use efficiency criteria to measure the possible benefits of fusion and also to evaluate the results of different algorithms. Some of these criteria used in this paper are presented below.

3.1 Mutual information (MI) criteria

This criterion is introduced in [32]. The higher value of this criterion for a method indicates that the method is more efficient. Figure 13 shows the different values of this criterion for different methods and images.

As can be seen from the results of Fig. 13, the proposed method in all three methods has an appropriate efficiency compared to other methods in all evaluated images in terms of mutual information criterion. However, this criterion is equal in value in the proposed method and simple minimum method for the image of "soldier after smoke". It is necessary to explain that the result of the second method of the proposed method is superior to the results of the first and third methods in a range of criteria which will be mentioned in the following.

3.2 Feature mutual information (FMI) criteria

The next criterion is the feature mutual information (FMI), which is introduced in [33] and the higher value of this criterion indicates that it is a more efficient method. Figure 14, shows the diagram of different values of this criterion for different methods and images.

The superiority of the proposed method over all methods, especially its second method, is evident in terms of FMI criteria in Fig. 14.

3.3 Normalized cross correlation (NCC) criteria

The next criterion is normalized cross correlation (NCC). This criterion is defined between visible image (V) and the fused image (F) as Eq. (2):

Figure 15 shows the diagram of different values of this criterion for different methods and images.

In terms of NCC criterion, it is simple to obtain the average. Of course, given the definition of this criterion, this conclusion is not unexpected. However, the uniform behavior in the third method of the proposed method is admirable (after simple maximization).

3.4 Peak signal to noise ratio (PSNR) criteria

Peak signal to noise ratio is used to calculate the similarity between two images. PSNR between the visible image (V) and fused image (F) is defined as the following:

where MSE is the mean square errors and is defined as:

Therefore, the higher value of PSNR for a method indicates a better fusion of that method. Figure 16 shows the diagram of different values of this criterion for different methods and images.

As we expected, according to Fig. 17, proposed method—in particular, the second method of the proposed method—has better efficiency than all methods in all images in terms of PSNR criteria.

3.5 Entropy

The next criterion is entropy, which is usually used to calculate the size of the information. The higher entropy value indicates that the information is increased and the fusion efficiency is improved.

Figure 17 shows the diagram of different values of this criterion for different methods and images.

Fortunately, again, all three methods of the proposed method in terms of entropy criterion have higher values than all methods in all images. The important point is that the proposed method has better results than the second method in the image of the "soldier behind the smoke" (last image), and the reason is the different nature of the visible image because, unlike the usual, the visible image has less information. So, when the infrared image itself—without fusion with a visible image—is replaced in the desired parts, it leads to a better result in terms of entropy criterion. Figure 18 shows the comparison of proposed method base on entropy criteria.

Finally, all the fused image in this paper are presented in the same way as mentioned—and are shown in all figures from Figs. 14, 15, 16, 17, 18—as well as the final output of the method in Fig. 19. As can be seen in this figure, the proposed method, while maintaining the details and illuminance intensity of the visible image (valuable information of the image) in all images, can detect the important information of the infrared image and reflect it in the output.

4 Conclusion

An efficient method for fusion of visible and infrared images is presented. The proposed method is explained in three steps. Three methods are presented in order to achieve fast and optimal solution. Firstly, only the segmented data in the infrared image is used as a spatial mask on visible image. This method highlights the infrared objects in visible image. Then, in second method, the image fusion is applied on segmented regions of infrared image with equivalent pixels in visible image. Therefore, more details of the infrared image will be included in the output image. Finally, In the third method of proposed fusion method, contour lines are also used to improve the quality of the results of the second method of fusion method. The presented method has better results than other methods in a set of comparative criteria, both quantitative and qualitative. According to the results, the proposed algorithm can be used for various uses, including military-security applications. The proposed method deals with IR and visible image fusion problem, however, it is general and can be also applied to other image processing problems such as super-resolution. Proposed method, as others, cannot distinguish between IR target and brightness of visible image in gray images. We want to focus on this aspect as future work.

References

Zhou Z, Dong M, Xie X, Gao Z (2016) Fusion of infrared and visible images for night-vision context enhancement. Appl Opt 55:6480–6490

Li H, Manjunath B, Mitra SK (1995) Multisensor image fusion using the wavelet transform. Graph Models Image Process 57:235–245

Bhatnagar G, Wu QJ, Liu Z (2015) A new contrast based multimodal medical image fusion framework. Neurocomputing 157:143–152

Wei Z, Han Y, Li M, Yang K, Yang Y, Luo Y et al (2017) A small UAV based multi-temporal image registration for dynamic agricultural terrace monitoring. Remote Sens 9:904

Yang K, Pan A, Yang Y, Zhang S, Ong SH, Tang H (2017) Remote sensing image registration using multiple image features. Remote Sens 9:581

Chen C, Li Y, Liu W, Huang J (2014) Image fusion with local spectral consistency and dynamic gradient sparsity. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2760–2765.

Dong L, Yang Q, Wu H, Xiao H, Xu M (2015) High quality multi-spectral and panchromatic image fusion technologies based on Curvelet transform. Neurocomputing 159:268–274

Kong SG, Heo J, Boughorbel F, Zheng Y, Abidi BR, Koschan A et al (2007) Multiscale fusion of visible and thermal IR images for illumination-invariant face recognition. Int J Comput Vision 71:215–233

Toet A, Ijspeert JK, Waxman AM, Aguilar M (1997) Fusion of visible and thermal imagery improves situational awareness. Displays 18:85–95

Yang C, Ma J, Qi S, Tian J, Zheng S, Tian X (2015) Directional support value of Gaussian transformation for infrared small target detection. Appl Opt 54:2255–2265

Ghaneizad M, Kavehvash Z, Aghajan H (2017) Human detection in occluded scenes through optically inspired multi-camera image fusion. JOSA A 34:856–869

Ma J, Zhao J, Ma Y, Tian J (2015) Non-rigid visible and infrared face registration via regularized Gaussian fields criterion. Pattern Recogn 48:772–784

Wang N, Ma Y, Zhan K (2014) Spiking cortical model for multifocus image fusion. Neurocomputing 130:44–51

Meng F, Guo B, Song M, Zhang X (2016) Image fusion with saliency map and interest points. Neurocomputing 177:1–8

Li Y, Tao C, Tan Y, Shang K, Tian J (2016) Unsupervised multilayer feature learning for satellite image scene classification. IEEE Geosci Remote Sens Lett 13:157–161

Yang Y, Ong SH, Foong KWC (2015) A robust global and local mixture distance based non-rigid point set registration. Pattern Recogn 48:156–173

Burt P, Adelson E (1983) The Laplacian pyramid as a compact image code. IEEE Trans Commun 31:532–540

Toet A (1989) Image fusion by a ratio of low-pass pyramid. Pattern Recogn Lett 9:245–253

Toet A (1989) A morphological pyramidal image decomposition. Pattern Recogn Lett 9:255–261

Nencini F, Garzelli A, Baronti S, Alparone L (2007) Remote sensing image fusion using the curvelet transform. Inf Fusion 8:143–156

Zhenfeng S, Jun L, Qimin C (2012) Fusion of infrared and visible images based on focus measure operators in the curvelet domain. Appl Opt 51:1910–1921

Adu J, Gan J, Wang Y, Huang J (2013) Image fusion based on nonsubsampled contourlet transform for infrared and visible light image. Infrared Phys Technol 61:94–100

Zhang Q, Maldague X (2016) An adaptive fusion approach for infrared and visible images based on NSCT and compressed sensing. Infrared Phys Technol 74:11–20

Huang Y, Bi D, Wu D (2018) Infrared and visible image fusion based on different constraints in the non-subsampled shearlet transform domain. Sensors 18:1169

El-Khamy SE, Hadhoud MM, Dessouky MI, Salam BM, El-Samie FEA (2005) Blind multichannel reconstruction of high-resolution images using wavelet fusion. Appl Opt 44:7349–7356

Zhou Y, Gao K, Dou Z, Hua Z, Wang H (2018) Target-aware fusion of infrared and visible images. IEEE Access 6:79039–79049

Ma J, Zhou Z, Wang B, Zong H (2017) Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys Technol 82:8–17

Ma Y, Chen J, Chen C, Fan F, Ma J (2016) Infrared and visible image fusion using total variation model. Neurocomputing 202:12–19

Ma J, Chen C, Li C, Huang J (2016) Infrared and visible image fusion via gradient transfer and total variation minimization. Inf Fusion 31:100–109

Ma J, Yu W, Liang P, Li C, Jiang J (2019) FusionGAN: a generative adversarial network for infrared and visible image fusion. Inf Fusion 48:11–26

Ma J, Ma Y, Li C (2019) Infrared and visible image fusion methods and applications: a survey. Inf Fusion 45:153–178

Qu G, Zhang D, Yan P (2002) Information measure for performance of image fusion. Electron Lett 38:313–315

Haghighat MBA, Aghagolzadeh A, Seyedarabi H (2011) A non-reference image fusion metric based on mutual information of image features. Comput Electr Eng 37:744–756

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abbasi Aghamaleki, J., Ghorbani, A. Infrared and visible image fusion based on optimal segmenting and contour extraction. SN Appl. Sci. 3, 369 (2021). https://doi.org/10.1007/s42452-020-04050-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-020-04050-w