Abstract

Economic forecasting may go badly awry when there are structural breaks, such that the relationships between variables that held in the past are a poor basis for making predictions about the future. We review a body of research that seeks to provide viable strategies for economic forecasting when past relationships can no longer be relied upon. We explain why model mis-specification by itself rarely causes forecast failure, but why structural breaks, especially location shifts, do. That serves to motivate possible approaches to avoiding systematic forecast failure, illustrated by forecasts for UK GDP growth and unemployment over the recent recession.

1 Overview

The ‘Great Recession’ of 2008–2012 has forcefully reminded us that the Business Cycle is most certainly not dead. As Victor Zarnowitz (2004) expressed it:

‘Business cycles, even if less of a threat, are far from conquered and still represent the most serious form of macroeconomic instability’.

Thanks to China’s growth and automatic stabilizers in ‘advanced’ economies, the downturn and resulting distress were not on the scale of the Great Depression of the 1930s, but it has still proved difficult to resolve. Moreover, while forecasting remains central to the policy process in most OECD economies, forecast failure—a significant deterioration in forecast performance relative to the anticipated outcome—was a common occurrence over the ‘Great Recession’. We consider such a failure for UK GDP below, and contrast that with the ease with which unemployment could be forecasted, suggesting a change in the relationship between these key variables.

From the early days of model-based economic forecasting, the difficulties posed by breaks have been recognized: salient examples include Smith (1929) (Judging the Forecast for 1929, published in early 1929), Shoup et al. (1941) (‘The times are so different now [i.e., October 1941] from 1935–1939 that relations existing then may not exist at all today.’—even prior to the USA entering World War II), and Klein (1947) (‘Would the econometrician merely substitute into his equations of peacetime behavior patterns in order to forecast employment in a period during which there will be a war?’).

The onsets of Business Cycle downturns are typically not easy to forecast. The ‘Great Recession’ is but the latest of many historical episodes of forecast failure, revealing problems with the traditional approach to economic forecasting. We have argued that structural breaks are the main culprit: other putative causes of forecast failure, such as model mis-specification, turn out to be relatively benign in the absence of breaks. Fortunately, there are partial remedies, which fall into two broad groups: (1) automatic devices for robustifying forecasts, as the forecast origin moves forward in time, to avoid systematic failure after future unknown breaks occur, and (2) forecasting as the break unfolds, when there is partial information on the changes that are taking place.

Here we review some of these developments, and illustrate the relative forecasting performances of models during periods when the economy was subject to substantive upheavals, along with the impacts of applying various robustification strategies (remedy 1 above). We do not attempt to forecast using additional information on breaks, although relevant research on this is discussed. The strategies we explore are not always successful and typically come with a cost—inflated forecast-error variances—but have the potential to prevent systematic runs of forecast errors of the same sign.

In Sect. 2, we review a traditional approach, explaining what might go wrong when there are breaks. Sections 3 and 4 respectively sketch why model mis-specification by itself rarely causes forecast failure, but why structural breaks do. The discussion of location shifts in Sect. 4 serves to motivate one potential remedy, discussed in Sect. 5, with some alternatives noted. Section 6 briefly considers research on forecasting during a break, using the ever-increasing amounts of data that are available (including social media data) which create the potential for more accurate readings of the state of the economy at the forecast origin. Section 7 presents our two illustrations: forecasts of output growth over the recent recession and of UK unemployment. For the unemployment rate, we take a longer-run view, but also consider a short-sample example and note two earlier episodes where we find that well-specified models in-sample are not the best models out-of-sample. Section 8 concludes.

2 Two Theories of Economic Forecasting

The traditional theory of economic forecasting assumes, at least implicitly, that (see, e.g., Klein 1971):

1. the forecasting model is a good representation of the process; and

2. the structure of the economy will remain relatively unchanged over the forecast horizon.

Under these assumptions, the natural forecasting strategy, or operational procedure for forecasting, is simply to use the best in-sample model, estimated from the best available data. What we have termed forecast failure ought not to occur: out-of-sample performance should be broadly similar to how well the model fits the in-sample data. In Sect. 3, we provide a simple illustration to clarify these statements. Unfortunately, as recognized by a number of authors, econometric models are inevitably mis-specified and economies are subject to unanticipated shifts (see, e.g., Stock and Watson 1996) so episodes of forecast failure have been all too common. As Friedman (2014) recounts, the unpredicted onset of the Great Depression in the USA did great damage to the reputation of economic forecasting by what he calls ‘Fortune Tellers’.

To overcome the limitations of traditional forecasting theory, Clements and Hendry (1998, 1999) make two less stringent assumptions that seem more realistic:

1. models are simplified representations, incorrect in many ways; and

2. economies both evolve and occasionally shift abruptly.

In this setting, simply using the best in-sample model may not be the best approach, and as shown by e.g., Clements and Hendry (2006) and Castle et al. (2010), forecasting by popular (vector) equilibrium-correction models with well-defined long-run solutions may be especially harmful when there are shifts in equilibrium means. Models which are deliberately mis-specified in-sample, for example, by omitting equilibrium (or error)-correcting terms, may adapt more rapidly to changed circumstances out-of-sample and produce more accurate forecasts.

It seems fairly intuitive that forecast failure could result from structural breaks that render past relationships between variables a poor guide to the future. It is perhaps less clear that many forms of model mis-specification, including unmodelled changes in parameter values, need not do so. In Sect. 4, we examine the effects of shifts in equilibrium-correction models to motivate one of the robust strategies we subsequently discuss.

3 Model Mis-specification and Lack of Forecast Failure

In a stationary world, least-squares estimated equations are consistent for their associated conditional expectations (when second moments exist), so forecasts on average attain their expected accuracy unconditionally (see, e.g., Miller 1978; Hendry 1979). Clements and Hendry (2002, pp 550–552) also illustrate that model mis-specification need not result in forecast failure in the absence of structural breaks.

Provided the data under analysis are and remain stationary, any model thereof is isomorphic to a mean-zero representation, a well-known result in elementary regression derivations, and a consequence of the famous Frisch and Waugh (1933) theorem. Omitting any subset of variables in any equation will not bias its forecasts because the omission is of zero-mean terms. Let the stationary data generation process (DGP) for the variable to be forecast, \(y_t\), be given by:

\[ y_{t}=\beta _0+\sum ^N_{i=1}\beta _i z_{i,t-1}+\epsilon _{t}=\theta _0+\sum ^N_{i=1}\beta _i\left( z_{i,t-1}-\kappa _i\right) +\epsilon _{t} \tag{1} \]

where \(\beta _0,\ldots ,\beta _ N\) are constant, \(\kappa _i\) is the population mean of \(z_i\), so \(\theta _ 0=\beta _0+\sum ^N_{i=1}\beta _i\kappa _i\) and \(\epsilon _{t}\sim \mathsf {IN}[ 0,\sigma ^2_{\epsilon }] \), denoting an independent normal random variable with mean \(\mathsf {E}[\epsilon _{t}] =0\) and variance \(\mathsf {V}[ \epsilon _{t}] =\sigma ^2_{\epsilon }\) that is independent of all the \(z_{i,t-1}\). The parameters in (1) can be unbiasedly estimated from a sample \(t=1,\ldots ,T\). From (1), when the distributions remain the same over a forecast horizon \(T+1,\ldots , T+H\) (so \(h=1,\ldots ,H\)), then forecasting by:

\[ \widehat{y}_{T+h|T+h-1}=\widehat{\theta }_0+\sum ^N_{i=1}\widehat{\beta }_i\left( z_{i,T+h-1}-\overline{z}_i\right) \tag{2} \]

where \(\overline{z}_i\) is the sample mean of \(z_i\), leads to \(\mathsf {E}[y _{T+h}-\widehat{y}_{T+h|T+h-1}] =0\).

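However, the researcher only includes \(z_{i,t-1}, i=1,\ldots , M<N\) in her forecasting model, unaware that \(z_{j,t-1}, j=M+1,\ldots , N\) matter, so forecasts by:

\[ \widetilde{y}_{T+h|T+h-1}=\widetilde{\theta }_0+\sum ^M_{i=1}\widetilde{\beta }_i\left( z_{i,T+h-1}-\overline{z}_i\right) \tag{3} \]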

Since all the included \((z_{i,T+h-1}-\overline{z}_i)\) still have zero means, and hence \(\mathsf {E}[\widetilde{\theta }_0]=\theta _ 0\), then \(\mathsf {E}[y _{T+h}-\widetilde{y}_{T+h|T+h-1}] =0\). The fit will be inferior to the correctly-specified representation (2), but the resulting model will on average forecast according to its in-sample operating characteristics. Thus, a test for forecast failure based on comparing the in-sample fit of a model like (3) to its out-of-sample forecast performance will not find any indication of forecast failure. In fact, forecasts from mis-specified models may be more or less accurate than those from the estimated DGP depending on the precision with which parameters are estimated, since although they are technically invalid, zero restrictions on coefficients that are close to zero can improve forecast accuracy: see Clements and Hendry (1998, chs.11 & 12).
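The argument above can be checked with a small Monte Carlo experiment. The sketch below is illustrative only: the number of regressors, the coefficient values, the sample sizes and the assumption of mutually independent regressors are all choices made here, not taken from the text. It fits a model that omits two of four relevant variables and reports the average 1-step forecast error, which should be close to zero when nothing shifts.

```python
import numpy as np


def omitted_variable_forecast_bias(n_kept=2, T=200, H=50, reps=500, seed=0):
    """Average 1-step forecast error when relevant regressors are omitted.

    All numerical values below are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    beta0 = 1.0
    beta = np.array([0.8, -0.5, 0.6, 0.4])    # N = 4 relevant regressors
    kappa = np.array([1.0, 2.0, -1.0, 0.5])   # their population means
    errors = []
    for _ in range(reps):
        # Rows play the role of z_{t-1}; regressors are stationary and independent.
        z = kappa + rng.standard_normal((T + H, beta.size))
        y = beta0 + z @ beta + rng.standard_normal(T + H)
        # Mis-specified model: keep only the first n_kept regressors,
        # measured as deviations from their in-sample means.
        z_bar = z[:T, :n_kept].mean(axis=0)
        X_in = np.column_stack([np.ones(T), z[:T, :n_kept] - z_bar])
        coef, *_ = np.linalg.lstsq(X_in, y[:T], rcond=None)
        X_out = np.column_stack([np.ones(H), z[T:, :n_kept] - z_bar])
        errors.append(y[T:] - X_out @ coef)
    return float(np.mean(errors))


print(round(omitted_variable_forecast_bias(), 3))
```

The omitted terms inflate the error variance, but because they have zero mean around \(\theta _0\) the average error stays near zero.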

Thus, model mis-specification per se cannot account for forecast failure in stationary processes, because the model’s out-of-sample forecast performance will be consistent with what would have been expected based on how well the model fitted the historical data. However, exceptions arise when inconsistently estimated standard errors are used to judge forecast accuracy, or when deterministic terms are mis-specified, violating the mean-zero requirement, as we now discuss. Corsi et al. (1982) show that residual autocorrelation, perhaps induced by other mis-specifications, leads to excess rejections on parameter-constancy tests, because untreated positive residual autocorrelation can bias estimated standard errors downwards. In practice, an investigator is likely to add extra lags to remove any residual autocorrelation. This strategy will help make the model congruent (see, e.g., Hendry 1995) even though it remains mis-specified: with innovation residuals, excess rejections on parameter-constancy tests will not occur. Finally, when \(\theta _ 0\ne 0\), a failure to include an intercept will lead to biased forecasts from models with either N or M variables.

Conversely, model mis-specification is necessary for forecast failure, because otherwise the model coincides with the DGP at all points in time, so never fails. This is consistent with the result in Clements and Hendry (1998) that causal variables will always dominate non-causal variables in forecasting when the model coincides with the DGP (or the DGP is stationary), but need not do so when the model is mis-specified for a DGP that is subject to location shifts, the topic we now address. We illustrate the impact of shifts in a non-zero \(\theta _ 0\) when the forecasting model is the actual DGP (1), then consider a cointegrated system which provides a more realistic representation of the situation confronting the forecasting of business cycles.

4 Structural Breaks and Forecast Failure

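Our intrepid researcher has managed to discover the exact in-sample DGP as in (1):

\[ y_{t}=\theta _0+\sum ^N_{i=1}\beta _i\left( z_{i,t-1}-\kappa _i\right) +\epsilon _{t} \tag{4} \]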

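but at the forecast origin T, the DGP shifts to:

\[ y_{T+h}=\theta _{0}^{*}+\sum ^N_{i=1}\beta _i\left( z_{i,T+h-1}-\kappa _i\right) +\epsilon _{T+h} \tag{5} \]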

Denoting her forecasts from (4) by \(\widehat{y}_{T+h|T+h-1}\), then \(\mathsf {E}[y_{T+h}-\widehat{y}_{T+h|T+h-1}]=\theta _{0}^{*}-\theta _{0}\ne 0\), so the forecasts are biased, and will remain biased until she changes her ‘estimate’ of \(\theta _{0}\). The change from \(\theta _{0}\) to \(\theta _{0}^{*}\) is a location shift, as the mean value of \(y_{t}\) shifts from the former value to the latter.
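A short simulation illustrates the size of this bias. It is a sketch under assumed values (two regressors, \(\theta _0=1\), \(\theta _0^{*}=3\), unit-variance errors), with the forecaster using the true pre-break parameters so that estimation uncertainty plays no role.

```python
import numpy as np


def location_shift_bias(theta0=1.0, theta0_star=3.0, H=20, reps=500, seed=0):
    """Mean forecast error after the long-run mean shifts from theta0 to theta0_star."""
    rng = np.random.default_rng(seed)
    beta = np.array([0.8, -0.5])   # unchanged slope coefficients (assumed values)
    errors = []
    for _ in range(reps):
        dev = rng.standard_normal((H, beta.size))                     # z_{i,T+h-1} - kappa_i
        y_post = theta0_star + dev @ beta + rng.standard_normal(H)    # shifted DGP
        forecast = theta0 + dev @ beta                                # pre-break DGP
        errors.append(y_post - forecast)
    return float(np.mean(errors))


print(round(location_shift_bias(), 2))
```

The average error settles near \(\theta _0^{*}-\theta _0\) and does not decay as the forecast origin advances, since nothing in the pre-break model updates its mean.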

Contrast that outcome of failure from one parameter shifting, namely the long-run mean of the dependent variable, to \(\theta _0\) staying constant, but every other parameter changing, so all \(\beta _i,\; \kappa _i,\; i=1,\ldots ,N\) shift to \(\beta ^{*} _i,\; \kappa ^{*} _i,\; i=1,\ldots ,N\) where \(\mathsf {E}[z_{i,T+h-1}] =\kappa ^{*} _i\). Then \(\mathsf {E}[y _{T+h}-\widehat{y}_{T+h|T+h-1}] =\theta _0-\theta _0 = 0\), so there is no systematic bias, but an increase in the forecast-error variance. Moreover, this result continues to hold even if the forecasting model omits some of the relevant variables. Clearly, not all shifts are equal, but matters get even stranger when we consider a cointegrated system.

We now borrow the model and notation from Castle et al. (2015) to show that vector equilibrium correction models (henceforth, VEqCMs) are not robust when forecasting after breaks, specifically, unanticipated location shifts, and are then liable to systematic forecast failure. Similar analyses and extensions are provided in Clements and Hendry (1999, 2006) inter alia.

We consider an n-vector time series \(\{ \mathbf {x}_{t} {, }t=0,1,\ldots ,T\} \) generated by the cointegrated system:

where \({\epsilon }_{t}\sim \mathsf {IN}_{n}[ \mathbf {0},\mathbf {\Omega }_{\epsilon }] \). In addition to lags of the \(\Delta \mathbf {x}_{t}\)s that are not explicitly shown for simplicity, \(\Delta \mathbf {x}_{t}\) depends on k explanatory variables denoted \(\mathbf {z}_{t}\), which may include variables other than those in \(\Delta \mathbf {x}_{t-i}\), and/or principal components or factors, as discussed in Castle et al. (2013). Either way, the \(\mathbf {z} _{t}\) are assumed to be integrated of order zero, denoted I(0), so that the form of the model implies that \(\mathbf {x}_{t}\) is I(1), with r linear combinations \({\beta }^{\prime }\mathbf {x}_{t}\) that cointegrate, so are I(0), where \({\beta }\) is n by \(r< n\).Footnote 1 Hence \(\Delta \mathbf {x}_{t}\) responds to disequilibria between \(\mathbf {z}_{t-1}\) and its mean \(\mathsf {E}[\mathbf {z}_{t-1}] ={\kappa }\), so the DGP is equilibrium-correcting in the \(\mathbf {z}_{t}\), as well as to disequilibria between \({\beta }^{\prime }\mathbf {x}_{t-1}\) and \({\mu }\). In this setup, we can analyze the effects of model mis-specification by supposing that the forecasting model omits \(\mathbf {z}_{t-1}\), typically because the investigator is unaware of its relevance. In (6), both \(\Delta \mathbf {x}_{t}\) and \({\beta }^{\prime }\mathbf {x}_{t}\) are I(0), with average growth \(\mathsf {E}[ \Delta \mathbf {x}_{t}] ={\gamma }\) in-sample and equilibrium mean \(\mathsf {E}[ {\beta }^{\prime }\mathbf {x}_{t}] ={\mu }\).
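As a sketch of the system described in this paragraph, and writing \(\mathbf {\Psi }\) for the coefficient matrix on \(\mathbf {z}_{t-1}\) (a label assumed here for illustration, since the text does not name it), the DGP can be written in the form:

\[ \Delta \mathbf {x}_{t}={\gamma }+{\alpha }\left( {\beta }^{\prime }\mathbf {x}_{t-1}-{\mu }\right) +\mathbf {\Psi }\left( \mathbf {z}_{t-1}-{\kappa }\right) +{\epsilon }_{t} \]

Both bracketed terms have zero mean in-sample, which is what delivers \(\mathsf {E}[ \Delta \mathbf {x}_{t}] ={\gamma }\).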

Consider now a forecasting model that omits \(\mathbf {z}_{t-1}\). The estimated forecasting model becomes:

where \(\mathsf {E}[\widehat{{\gamma }}] ={\gamma }\) and \(\mathsf {E}[\widehat{{\mu }}] ={\mu }\) because although the model is mis-specified by omitting \(\mathbf {z}_{t-1}\), its effect has a mean of zero. We also suppose that the population value of \(\widehat{{\alpha }}\) is still \({\alpha }\), despite omitting \(\mathbf {z}_{t-1}\), as well as that the population value of \(\widehat{{\beta }}\) remains \({\beta }\), since cointegrating relationships are little affected by the inclusion or omission of I(0) variables. Referring back to Sect. 3, providing \(\mathsf {E}[\mathbf {z}_{T+h-1}] ={\kappa }\) over the forecast horizon, this omission will not even bias the forecasts, though it will increase the forecast-error variance.

When there are shifts in the means, so that \({\gamma }\), \({\mu }\) and \({\kappa }\) shift to \({\gamma }^{*}\), \({\mu }^{*}\) and \({\kappa }^{*}\) at the forecast origin at time T, the DGP becomes:

where we have allowed the coefficient vector of the omitted variables to change as well. Then the 1-step ahead forecasts from using (7) to forecast \(\Delta \mathbf {x}_{T+1}\) from period T are given by:

and the forecasts errors, given by subtracting (9) from (8), have a mean of:

A closely related issue is the accuracy of data at the forecast origin, as an incorrect value \(\widehat{\mathbf {x}}_{T}\) for \(\mathbf {x}_{T}\) acts like a location shift at the forecast origin. Consequently, nowcasting to produce more accurate values at the forecast origin can be valuable. We do not address real-time forecasting here in order to focus on the impact of shifts. However, in real-time the robustification strategies discussed below will typically be based on data measured with error, subject to subsequent revision as later vintages are released, which might curtail their efficacy in practice, an issue considered for nowcasting in Castle et al. (2009) and by Castle et al. (2015), who analyse the impact of measurement errors at the forecast origin. Further research in this area is warranted given the relevance of data revisions for macro-data.

When (9) is still used to forecast the outcomes from (8) 1-step ahead even after several periods have elapsed:

then the resulting forecast error \(\widehat{{\epsilon }} _{T+h|T+h-1}=\Delta \mathbf {x}_{T+h}-\Delta \widehat{\mathbf {x}}_{T+h|T+h-1}\) remains biased as:

Even assuming \(\mathsf {E}[\mathbf {z}_{T+h-1}]={\kappa }^{*}={\kappa }\), the first two components in (12) will continue to cause systematic forecast failure. The problem is that the model lacks adaptability, a difficulty for all members of the equilibrium-correction class including regressions, vector autoregressions (VARs) as well as cointegrated systems: the equilibrium correction always corrects back to the old equilibrium, determined by \({\gamma }\) and \({\mu }\), irrespective of how much the new equilibrium has shifted.
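To make the three sources of bias concrete, suppose (as an assumption for illustration only) that the omitted I(0) variables enter the post-break DGP through a coefficient matrix labelled \(\mathbf {\Psi }^{*}\), and treat the estimated parameters as equal to their pre-break population values. Subtracting the forecast \({\gamma }+{\alpha }( {\beta }^{\prime }\mathbf {x}_{T+h-1}-{\mu })\) from the post-break outcome then gives a mean 1-step error of approximately:

\[ \mathsf {E}\left[ \Delta \mathbf {x}_{T+h}-\Delta \widehat{\mathbf {x}}_{T+h|T+h-1}\right] \simeq \left( {\gamma }^{*}-{\gamma }\right) -{\alpha }\left( {\mu }^{*}-{\mu }\right) +\mathbf {\Psi }^{*}\left( \mathsf {E}[\mathbf {z}_{T+h-1}]-{\kappa }^{*}\right) \]

The third term vanishes once \(\mathsf {E}[\mathbf {z}_{T+h-1}]={\kappa }^{*}\), but the shifts in the growth rate and in the equilibrium mean leave a non-zero mean error at every subsequent forecast origin.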

Importantly, forecast failure does not require a model to be mis-specified in-sample, seen by forecasting from the pre-break DGP. The resulting forecasts are given by:

so that, even when \({\kappa }^{*}={\kappa }\), the forecast has an error of:

which has the same form as (10) when \({\kappa }^{*}={\kappa }\), creating persistent forecast errors of the same sign as the forecast origin moves through time despite using the in-sample DGP.

These examples treat the parameters as ‘variation free’ in that each can be shifted separately from any of the others. That is unlikely in practice, as e.g., a fall in the equilibrium mean is liable to alter the growth rate. Hence, although the terms in (14) could in principle cancel, that does not seem likely. Similarly, changes in \({\kappa }\) are likely to alter \(\mathbf {\mu }\). Finally, although we have implemented the impacts of shifts as instantaneous, they are more than likely to take time to complete in dynamic systems, so that, e.g., in the early stages after a shift from \({\kappa }\) to \({\kappa }^{*}\), one would anticipate that \(\mathsf {E}[\mathbf {z}_{T+1}]\ne {\kappa }^{*}\). Thus, location shifts will usually not produce a neat step in observable data, but smoother responses of the shape often seen in time series.

5 Robust Forecasting Devices

Robustification against the adverse effects of a ‘break’ on forecasts is a form of ‘insurance’, in that there is a cost, but the strategies confer benefits in a bad state of nature. The cost typically manifests in a higher forecast-error variance, and the benefits are largely unbiased forecasts subsequent to the occurrence of breaks. Changes in the probability of bad states and the costs of insuring affect the efficacy of robustification strategies, which therefore depends on factors such as the frequencies and magnitudes of location shifts and the underlying predictability of the series. We consider a number of robustification strategies for forecasting after location shifts, as well as improved adaptability to breaks, and averaging across forecasting devices or information sources. Robustification includes: intercept correction, differencing, forecast-error correction mechanisms, and pooling:

Intercept correction (IC) is a widely-adopted strategy, which can offset location shifts. Unfortunately, ICs can also exacerbate a break, as with stochastic regime-switching processes where a correction is in-built, and may require pre-testing for inclusion each period in every equation, yet the forms, timings, and durations of shifts are unknown. Although a work-horse of forecasters, ICs can be inadequate: many past failures occurred despite their use.

Differencing robustifies forecasts after location shifts, partly by adding a unit root, and partly by reducing deterministic polynomials by one order. In the literature, strategies have typically focused on differencing prior to model specification and estimation, as in Box and Jenkins (1970) and differenced vector autoregressions. We consider the impact of differencing after estimation, so parts of models (such as their equilibrium-correction feedbacks) are transformed to differences in forecast mode, which increases forecast-error variances, but may reduce bias in the face of location shifts.

Forecast-error correction mechanisms (FErCMs): a classic FErCM is the exponentially-weighted moving-average model, which does well in forecasting competitions (see, e.g., Makridakis and Hibon 2000) and while primarily designed to correct recent past measurement errors, is also relatively robust to location shifts.

Forecast pooling has generated a vast literature—see Clemen (1989) for an early bibliography. Hendry and Clements (2004) show that when unanticipated location shifts have different effects on differently mis-specified models, the pooled model may give more accurate forecasts.

Information pooling is an alternative to pooling forecasts. Current approaches include diffusion indices and factor models: see Stock and Watson (1998), Forni et al. (2000) and Castle et al. (2013).
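Two of the devices listed above are simple enough to sketch directly. The code below is a minimal illustration, not the specification used in any of the cited studies: the smoothing weight `lam`, the initialisation at the first observation, and the default equal weights for pooling are all assumptions made here.

```python
import numpy as np


def ewma_forecast(y, lam=0.3):
    """1-step forecast from an exponentially weighted moving average.

    The level is updated as lam * latest observation + (1 - lam) * previous level,
    starting from the first observation.
    """
    y = np.asarray(y, dtype=float)
    level = y[0]
    for obs in y[1:]:
        level = lam * obs + (1.0 - lam) * level
    return float(level)


def pooled_forecast(forecasts, weights=None):
    """Combine forecasts of the same target; equal weights unless weights are given."""
    return float(np.average(np.asarray(forecasts, dtype=float), weights=weights))


history = [2.0, 2.1, 1.9, 4.0, 4.2]        # hypothetical series with a late shift
print(ewma_forecast(history, lam=0.5))
print(pooled_forecast([ewma_forecast(history, lam=0.5), history[-1]]))
```

A larger `lam` makes the EWMA adapt faster after a location shift at the price of a noisier forecast, which is the insurance trade-off described at the start of this section.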

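As an example of a robustification strategy, we consider differencing, using the model and notation established in Sect. 4. Suppose that, instead of forecasting with the estimated model and calculating forecasts using (11), we take the first difference of the estimated model, yielding for 1-step forecasts:

\[ \Delta \widehat{\mathbf {x}}_{T+h|T+h-1}=\Delta \mathbf {x}_{T+h-1}+\widehat{{\alpha }}\widehat{{\beta }}^{\prime }\Delta \mathbf {x}_{T+h-1} \tag{15} \]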

An immediately apparent feature of (15) is the absence of the parameters shifted above; less obvious at a glance is that (15) is double differenced, in that \(\Delta ^2 \mathbf {x}_{T+h}\) is being forecast by the difference of the equilibrium-correction term. Castle et al. (2015) provide a number of possible interpretations of this differencing procedure, and of why the resulting forecasts might be more accurate. One suggestion they offer is to re-write the expression in (15) as:

\[ \Delta \widehat{\mathbf {x}}_{T+h|T+h-1}=\Delta \mathbf {x}_{T+h-1}+\widehat{{\alpha }}\left( \widehat{{\beta }}^{\prime }\mathbf {x}_{T+h-1}-\widehat{{\beta }}^{\prime }\mathbf {x}_{T+h-2}\right) \tag{16} \]

and then regard \(\Delta \mathbf {x}_{T+h-1}\) as a highly adaptive estimator \(\widetilde{{\gamma }}\) of the current growth rate, and the previous value of the cointegrating combination, \(\widehat{{\beta }}^{\prime }\mathbf {x}_{T+h-2}=\widetilde{{\mu }}\) as an estimator of \({\mu }\). We use \(\widetilde{{\gamma }}\) and \(\widetilde{{\mu }}\) as shorthand for these estimates, although they both depend on T and h. In this interpretation, both \({\gamma }\) and \({\mu }\) are replaced by instantaneous estimators that are unbiased both before and some time after the population parameters have shifted, since for \(h>2\), \(\mathsf {E}[{\beta }^{\prime }\mathbf {x}_{T+h-2}] \simeq {\mu }^{*}\) and \(\mathsf {E}[\Delta \mathbf {x}_{T+h-1}] \simeq {\gamma }^{*}\).
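The contrast between the two approaches can be seen in a scalar simulation. This is a deliberately simplified sketch: a single stationary series with no growth term, a known adjustment coefficient, and arbitrary values for the shift (all assumptions made here). The process is \(\Delta y_t=\alpha (y_{t-1}-\mu )+\epsilon _t\), with \(\mu \) shifting to \(\mu ^{*}\) after period T; the pre-break model keeps correcting towards \(\mu \), whereas the differenced device forecasts \(\Delta y_t\) by \((1+\alpha )\Delta y_{t-1}\).

```python
import numpy as np


def compare_after_shift(alpha=-0.5, mu=0.0, mu_star=5.0, T=50, H=20, reps=500, seed=0):
    """Post-break 1-step errors: pre-break EqCM versus its differenced version."""
    rng = np.random.default_rng(seed)
    model_err, dd_err = [], []
    for _ in range(reps):
        y = np.empty(T + H + 1)
        y[0] = mu
        for t in range(1, T + H + 1):
            mean_t = mu if t <= T else mu_star     # equilibrium mean shifts after T
            y[t] = y[t - 1] + alpha * (y[t - 1] - mean_t) + rng.standard_normal()
        dy = np.diff(y)                            # dy[t - 1] = y[t] - y[t - 1]
        # Start at T + 2 so the lagged change used by the device is post-break.
        for t in range(T + 2, T + H + 1):
            actual = dy[t - 1]
            model_err.append(actual - alpha * (y[t - 1] - mu))   # corrects to old mean
            dd_err.append(actual - (1 + alpha) * dy[t - 2])      # differenced device
    return np.array(model_err), np.array(dd_err)


model_err, dd_err = compare_after_shift()
for name, e in [("pre-break EqCM", model_err), ("differenced", dd_err)]:
    print(name, "bias:", round(e.mean(), 2), "RMSFE:", round(np.sqrt((e ** 2).mean()), 2))
```

In this setup the pre-break model's error has mean \(-\alpha (\mu ^{*}-\mu )\) at every origin, while the differenced device's error reduces to \(\Delta \epsilon _t\): unbiased, but with twice the innovation variance, which is the cost of the insurance.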

This reinterpretation of the approach originally due to Hendry (2006) suggests a class of forecasting devices given by:

where the instantaneous estimates \(\widetilde{{\gamma }}\) and \(\widetilde{{\mu }}\) are replaced by local averages when \(r>1\) and \(m>1 \). The performance of these differenced models is considered in Sect. 7. Castle et al. (2015) analyze the performance of these forecasts under a number of scenarios, including when the DGP is unchanged, so that the original model’s forecasts are optimal, when there are measurement errors, and crucially, when there are location shifts, as in Sect. 4. For the latter, they find that these strategies reduce root mean-square forecast errors (RMSFEs) when the parameter shift is ‘large’ relative to the variance of the disturbance term in the original model. Further, when r and m span the available sample, the resulting estimators are close to \(\widehat{{\gamma }}\) and \(\widehat{{\mu }}\).
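One way to code this class of devices is sketched below. The indexing convention is an assumption chosen so that the case of both windows equal to one reproduces (15): \(\widetilde{{\gamma }}\) averages the last `r` changes, and \(\widetilde{{\mu }}\) averages the `m` values of the cointegrating combination that precede the latest one. The function name and argument layout are hypothetical. Note that `r` here is the averaging window of the text, not the cointegrating rank.

```python
import numpy as np


def smoothed_device_forecast(x, alpha, beta, r=1, m=1):
    """1-step forecast of the next change in x from a smoothed differenced device.

    x     : array (periods, n), history up to and including the forecast origin
    alpha : array (n, k), estimated adjustment coefficients
    beta  : array (n, k), estimated cointegrating vectors
    r, m  : window lengths for the local averages (needs at least max(r, m) + 1 rows)
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x, axis=0)
    gamma_tilde = dx[-r:].mean(axis=0)          # local estimate of the growth rate
    coint = x @ beta                            # beta'x_t for every period
    mu_tilde = coint[-1 - m:-1].mean(axis=0)    # local estimate of the equilibrium mean
    return gamma_tilde + alpha @ (coint[-1] - mu_tilde)


rng = np.random.default_rng(1)
x_hist = rng.standard_normal((12, 2)).cumsum(axis=0)   # hypothetical I(1) data
alpha_hat = np.array([[-0.2], [0.1]])
beta_hat = np.array([[1.0], [-1.0]])
print(smoothed_device_forecast(x_hist, alpha_hat, beta_hat, r=1, m=1))
print(smoothed_device_forecast(x_hist, alpha_hat, beta_hat, r=4, m=4))
```

Longer windows trade adaptability for lower variance: with `r` and `m` covering the whole sample, the local averages approach the full-sample estimates.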

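Finally, differencing the model as in (15) is equivalent to a particular form of intercept correction, specifically, adding the estimated model residual at the forecast origin to the forecast. To see this, write (15) as:

\[ \Delta \widehat{\mathbf {x}}_{T+h|T+h-1}=\left[ \widehat{{\gamma }}+\widehat{{\alpha }}\left( \widehat{{\beta }}^{\prime }\mathbf {x}_{T+h-1}-\widehat{{\mu }}\right) \right] +\left[ \Delta \mathbf {x}_{T+h-1}-\widehat{{\gamma }}-\widehat{{\alpha }}\left( \widehat{{\beta }}^{\prime }\mathbf {x}_{T+h-2}-\widehat{{\mu }}\right) \right] \tag{18} \]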

where we have added and subtracted \(\mathbf {\widehat{\gamma }}- \mathbf {\widehat{\alpha }\widehat{\mu }}\) on the right-hand side. Intercept corrections of this form were considered as a possible remedy by Clements and Hendry (1996), but have a long history as ‘add factors’, or a means of putting the forecasts ‘back on track’ in macro-modelling.
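The equivalence can be verified numerically for arbitrary parameter values. In the sketch below (function names and test values are hypothetical), the model forecast plus the model's residual at the forecast origin coincides with the differenced forecast \(\Delta \mathbf {x}_{T+h-1}+\widehat{{\alpha }}\widehat{{\beta }}^{\prime }\Delta \mathbf {x}_{T+h-1}\), whatever values \(\widehat{{\gamma }}\) and \(\widehat{{\mu }}\) take.

```python
import numpy as np


def eqcm_forecast(x_prev, gamma, alpha, beta, mu):
    """Model forecast of the next change given the previous level x_prev."""
    return gamma + alpha @ (beta.T @ x_prev - mu)


def intercept_corrected_forecast(x, gamma, alpha, beta, mu):
    """Model forecast plus the model residual observed at the forecast origin."""
    model_forecast = eqcm_forecast(x[-1], gamma, alpha, beta, mu)
    residual_at_origin = (x[-1] - x[-2]) - eqcm_forecast(x[-2], gamma, alpha, beta, mu)
    return model_forecast + residual_at_origin


def differenced_forecast(x, alpha, beta):
    """Forecast from the differenced model: last change plus change in the EqCM term."""
    dx = x[-1] - x[-2]
    return dx + alpha @ (beta.T @ dx)


rng = np.random.default_rng(2)
x = rng.standard_normal((5, 2)).cumsum(axis=0)   # hypothetical data
gamma = np.array([0.3, -0.1])
alpha = np.array([[-0.2], [0.1]])
beta = np.array([[1.0], [-1.0]])
mu = np.array([0.7])
print(np.allclose(intercept_corrected_forecast(x, gamma, alpha, beta, mu),
                  differenced_forecast(x, alpha, beta)))
```

Because \(\widehat{{\gamma }}\) and \(\widehat{{\mu }}\) cancel exactly, any shift in their population counterparts is removed from the forecast once the origin residual reflects it.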

6 Partial Information to Help to Forecast a Break

In some instances, it may be possible to forecast a break, but that requires (1) the break to be predictable; (2) there is information relevant to that predictability; (3) the information is available at the forecast origin; (4) the forecasting model already embodies that source of information; (5) there is an operational method for selecting an appropriate model; and (6) the resulting forecasts are usefully accurate. Castle et al. (2011) consider the conditions under which this will be possible, and in so doing distinguish between two information sets: one for ‘normal forces’ and one for ‘break drivers’. The break drivers need not be conventional economic data, but could encompass legislative changes, acts of terrorism, war or natural disasters, or other events. High-frequency information such as Google Trends and prediction markets may also be useful for determining shifts. Break drivers could be modelled as a non-linear ogive, so would need to feature within non-linear, dynamic models with multiple breaks, leading to the possibility that there may be more candidate variables, N, than observations, T. Automatic model selection algorithms, available in software such as Autometrics, allow for \(N>T\), and so facilitate such an approach (see Doornik 2009a, b).

When, as often seems likely, accurate forecasting of breaks is not possible, it may be possible to model breaks during their progress, perhaps by threshold models as in Teräsvirta et al. (2011). However, Castle et al. (2011) find that modelling the progress of a break requires theoretical assumptions about the shape of the break function and restrictions on the number of its parameters to be estimated, so that, after a location shift, it is not much better than using robust devices.

Indicator saturation using flexible break functions provides a new possibility when similar breaks have occurred previously. Pretis et al. (2016) apply this approach in dendrochronology to estimate the impacts of volcanic eruptions on temperature. Modelling breaks at or near the forecast origin can also improve nowcasting: see inter alia, Castle and Hendry (2010), Marcellino and Schumacher (2010), and the survey article in Bánbura et al. (2011). Breaks at the forecast origin with just one data point are observationally equivalent to measurement errors, which may be revised later. This is an important problem for nowcasting, as ‘corrections’ for breaks or measurement errors work in opposite directions, so incorrect attribution can exacerbate a nowcast error, although higher frequency data and the behaviour of revisions can help distinguish the source after a few periods.

7 Empirical Illustration

We provide two illustrations of forecasting. The first considers UK output growth, denoted \(\Delta y_{t}\) during the Great Recession, and the second extends the example of forecasting the UK unemployment rate, \(U_{r,t}\), in Clements and Hendry (2006). That the second half of the last century and first decade of this century were punctuated by relatively sudden shifts is evident from Fig. 1, which plots the annual changes in quarterly UK real GDP over this period, as well as the dates of estimated shifts in the growth rate. Many of the shifts found correspond to major unanticipated policy changes, so even the growth rate is not a stationary process, although its time series ‘looks like’ erratic cyclical behaviour.

The ‘well-specified’ models we consider include univariate (AR) and vector autoregressions (VARs), and cointegrated systems, VEqCMs, selected by Autometrics as parsimonious, congruent reductions of a more general unrestricted model (GUM), allowing for in-sample outliers and location shifts. Outliers and shifts are detected by impulse-indicator saturation (IIS: see Hendry et al. 2008; Johansen and Nielsen 2009; Castle et al. 2012) and step-indicator saturation respectively (SIS: see Castle et al. 2015). Thus, if \(\left\{ 1_{\left\{ j=t\right\} },t=1,\ldots ,T\right\} \) denotes the complete set of T impulse indicators and \(\left\{ 1_{\left\{ j\le t\right\} },t=2,\ldots ,T\right\} \) the corresponding set of \(T-1\) step indicators, then \(\left\{ 1_{\left\{ j=t\right\} },t=1,\ldots ,T\right\} \) and \(\left\{ 1_{\left\{ j\le t\right\} },t=2,\ldots ,T\right\} \) are included in the initial set of candidate variables in the GUM to search over. Exemplars of the robust device class include random walks (RWs), double differenced devices like (15) (DDDs), and smoothed variants thereof as in (17) above (SDDs), differenced VARs (DVARs) and linear combinations of all of these (Pooled), defined in Table 1.
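The candidate indicator sets defined above are straightforward to construct. The sketch below only builds the indicators, following the definitions in the text; it does not reproduce the Autometrics search that selects among them, and the function name is hypothetical.

```python
import numpy as np


def indicator_candidates(T):
    """Impulse and step indicators for a sample of T observations.

    impulses : (T, T) array, column t equals 1 only at observation t
    steps    : (T, T - 1) array, the column for t = 2, ..., T equals 1
               for observations j <= t and 0 afterwards
    """
    impulses = np.eye(T)
    steps = np.triu(np.ones((T, T)))[:, 1:]
    return impulses, steps


impulses, steps = indicator_candidates(5)
print(impulses.shape, steps.shape)
print(steps[:, 0])    # the step indicator for t = 2
```

Together these give more candidate regressors than observations, which is why a selection algorithm that can handle \(N>T\) is needed. Under the definition used here, the final step column is constant across the sample.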

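Forecasts for variables denoted \(x_{T+j}, j=1,\ldots ,H\) are evaluated using 1-step biases and RMSFEs:

\[ \mathsf {bias}=\frac{1}{H}\sum ^H_{j=1}\left( x_{T+j}-\widehat{x}_{T+j|T+j-1}\right) ,\qquad \mathsf {RMSFE}=\sqrt{\frac{1}{H}\sum ^H_{j=1}\left( x_{T+j}-\widehat{x}_{T+j|T+j-1}\right) ^{2}} \]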

where \(x_{t}\) is \(\Delta _{4}y_{t}\), \(\Delta y_{t}\) or \(U_{r,t}\).Footnote 2 RMSFE is valid for 1-step ahead forecasts, but Clements and Hendry (1993a, b) propose using the generalised forecast-error second-moment criterion (GFESM) which accounts for covariances between forecasts at different horizons: Hendry and Martinez (2015) provide an extension.
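For concreteness, the two 1-step evaluation measures can be computed as follows, taking the bias as the average forecast error and the RMSFE as the square root of the average squared error. The function names and the example numbers are illustrative.

```python
import numpy as np


def forecast_bias(actual, forecast):
    """Mean 1-step forecast error (actual minus forecast)."""
    errors = np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float)
    return float(errors.mean())


def rmsfe(actual, forecast):
    """Root mean-square forecast error."""
    errors = np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float)
    return float(np.sqrt((errors ** 2).mean()))


actual = [1.0, 2.0, 3.0]       # hypothetical outcomes
forecast = [0.0, 2.0, 5.0]     # hypothetical 1-step forecasts
print(forecast_bias(actual, forecast), rmsfe(actual, forecast))
```

A device can have a small bias and a large RMSFE at the same time, which is the pattern to look for when comparing robust devices with model-based forecasts.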

7.1 Output Growth

We apply a range of forecasting models to UK GDP growth over the ‘Great Recession’. We use the preliminary release of GDP data available at the end of the forecast horizon, so although the forecasts are not based on real-time data, they replicate the information available in 2011Q2, so ex post revisions only available after the forecast horizon are not used. We focus on a single vintage rather than real-time data to directly analyse the impact of structural breaks. Although in practice policy-makers must work with real-time data, using the time-series dimension of real-time vintages includes any changes in methodology, concepts or classifications, inducing apparent structural breaks which are an artifact of changing the measurement system.Footnote 3 By analysing a single vintage we abstract from these additional important effects. For the multivariate forecasts, we extend the dataset to include a measure of inflation and interest rates.Footnote 4 Table 1 summarises the forecasting models examined.

The in-sample estimation period is 1958Q1 to 2007Q4 with 14 forecast period observations from 2008Q1 to 2011Q2. 1-step ahead forecasts are computed for annual and quarterly GDP growth (for quarterly GDP growth replace \(\Delta _{4} y_{t}\) with \(\Delta y_{t}\) in Table 1). Note that differenced impulse and step indicators have no effect on the forecasts. Table 2 records the bias and RMSFE, with smallest bias or RMSFE in bold and second smallest in italics: as shown in Clements and Hendry (1993a), the ranking varies with the transformation of \(\Delta _4\) or \(\Delta \). Figure 2 records the forecasts for a range of forecasting models and devices for annual GDP growth, and Fig. 3 records the squared forecast errors from these models: both figures are plotted on the same vertical axis scale for comparison.

The non-robust models, including AR, AR_IS, VAR and VAR_IS, are designed to capture the in-sample data characteristics and therefore have the smallest in-sample standard errors of the models considered. However, they are not robust to location shifts, an example of which is clearly evident in the data in 2008. The figures demonstrate the large forecast errors made using these models over the ‘Great Recession’. In contrast, intercept correction or differencing results in no significant forecast failure over this deep recession. Alternative robust devices also avoid the significant forecast failure evident in the ‘model-based’ forecasts, at the cost of a larger forecast-error variance, as seen in the wider \(\pm 2\widehat{\sigma }\) bands.

The best performing models over the ‘Great Recession’ are devices that robustify the ‘congruent’ in-sample models, using their parameter estimates. For annual GDP growth, the AR models with intercept correction are preferred on an RMSFE criterion, though the DVAR is preferred for quarterly output growth. Although the VAR produces some of the worst forecasts, using the same parameter estimates in a robust DVAR delivers some of the best forecasts. This highlights the divergence between selecting models based on in-sample congruency criteria, and using the resulting models for forecasting.
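The contrast between ‘model-based’ and robust forecasts after a location shift can be illustrated with a deliberately simple, noise-free sketch. It assumes a first-order autoregression with known parameters whose intercept shifts once, and compares 1-step ahead forecasts from the unchanged pre-shift model with those from a differenced device that reuses the same autoregressive coefficient. All names and parameter values are hypothetical and are not those of the models in Table 1.

```python
def simulate_shifted_ar1(rho, c_old, c_new, n_post):
    """Noise-free AR(1): two observations at the old equilibrium mean,
    then n_post observations generated with the shifted intercept c_new."""
    y = [c_old / (1.0 - rho)] * 2
    for _ in range(n_post):
        y.append(c_new + rho * y[-1])
    return y


def model_forecast(c, rho, y_last):
    """1-step forecast from the in-sample model (pre-shift intercept)."""
    return c + rho * y_last


def differenced_forecast(rho, y_last, y_prev):
    """1-step forecast from the differenced device: y_T + rho * (y_T - y_{T-1})."""
    return y_last + rho * (y_last - y_prev)


rho, c_old, c_new = 0.5, 1.0, -1.0  # equilibrium mean shifts from 2 to -2
y = simulate_shifted_ar1(rho, c_old, c_new, n_post=5)

# Errors are defined as outcome minus forecast for each post-shift period.
model_errors = [y[t] - model_forecast(c_old, rho, y[t - 1])
                for t in range(2, len(y))]
robust_errors = [y[t] - differenced_forecast(rho, y[t - 1], y[t - 2])
                 for t in range(2, len(y))]

print("model-based errors:", model_errors)
print("differenced errors:", robust_errors)
```

In this setting the in-sample model misses by the size of the intercept shift in every period, while the differenced device misses only in the period of the shift. Because the example has no random errors, it does not show the cost of differencing, namely the larger forecast-error variance noted above.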

7.2 Unemployment Rate

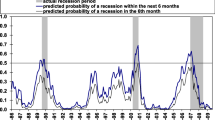

As a comparison to the GDP growth forecasts, which highlighted the need for robust forecasting devices when location shifts are present, we now consider forecasts of the UK unemployment rate over roughly the same period. We use an annual dataset of the unemployment rate over 1865–2014, updated over 1870–1913 from archival records by Boyer and Hatton (2002), and develop ‘model-based’ forecasts of the unemployment rate based on Clements and Hendry (2006), who show that the unemployment rate and the real interest rate minus the real growth rate, which we denote \(R_{r,t}\), are cointegrated, or co-break. Our dataset therefore consists of the annual unemployment rate and \(R_{r,t} = R_{l,t}-\Delta p_{t}-\Delta y_{t}\), where \(R_{l,t}\) is the long-term bond interest rate, \(\Delta p_{t}\) is annual inflation measured by the implicit deflator of GDP, and \(\Delta y_{t}\) is annual real GDP growth. This ‘structural’ model is based on steady-state growth theory, such that the unemployment rate rises when the real interest rate exceeds the real growth rate, and vice versa. Hendry (2001) explains the basis for this relationship, which has remained relatively constant for 150 years, with a long-run equilibrium unemployment rate of 5 % and a 1–1 response to \(R_{r,t}\) (with a t-value of 10), established well before the Great Recession.

Figure 4 compares short-sample models of UK unemployment: (a) using \(R_{r,t}\); (b) using \(\Delta y_{t}\). As can be seen, the forecasts from the former are far better. A fall in GDP of 6 % would usually lead to a large rise in unemployment in the UK, and that is what the forecasts in panel (b) show, but no such rise occurred. \(R_{r,t}\) reflects the dramatic reduction in \(R_{l,t}\) brought about by the Bank of England lowering its discount rate to 0.5 % and undertaking massive quantitative easing to lower longer-term interest rates, even though inflation remained above target for some time.
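A small numerical sketch may help to show how \(R_{r,t}\) can remain stable when growth shifts. The fragment below evaluates \(R_{r,t} = R_{l,t}-\Delta p_{t}-\Delta y_{t}\) before and after a hypothetical fall in growth that is matched by an equal fall in the real long-term interest rate. The numbers are invented for illustration only and are not UK data; the function and variable names are likewise assumptions.

```python
def real_rate_minus_growth(long_rate, inflation, growth):
    """R_r = R_l - dp - dy, with all inputs as annual proportions."""
    return long_rate - inflation - growth


# Hypothetical values chosen only for illustration (not UK data).
before = {"long_rate": 0.05, "inflation": 0.02, "growth": 0.03}
after = {"long_rate": 0.02, "inflation": 0.03, "growth": -0.01}

rr_before = real_rate_minus_growth(**before)
rr_after = real_rate_minus_growth(**after)

# Shifts in the two components of R_r and in R_r itself.
shift_in_growth = after["growth"] - before["growth"]
shift_in_real_rate = ((after["long_rate"] - after["inflation"])
                      - (before["long_rate"] - before["inflation"]))
shift_in_rr = rr_after - rr_before

print("shift in growth   :", round(shift_in_growth, 4))
print("shift in real rate:", round(shift_in_real_rate, 4))
print("shift in R_r      :", round(shift_in_rr, 4))
```

Under these assumed values a model driven by \(\Delta y_{t}\) alone faces a shift of four percentage points, whereas a model driven by \(R_{r,t}\) faces essentially none, because the two shifts offset each other in the linear combination.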

The models are estimated over 1865–2008, with 6 observations retained for forecasting over 2009–2014. As there are few forecast observations, a comparison was also made with two other periods in which structural breaks had occurred or were occurring, namely 1944–1949 and 1975–1980. Table 3 lists the range of models and devices considered. For 1-step ahead forecasts, the differenced VAR/VEqCM and intercept-corrected models’ forecasts are identical.
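The 1-step equivalence can be sketched for a first-order system. The notation \(\widehat{c}\), \(\widehat{A}\) and \(\widehat{e}_{t}\) is introduced here purely for illustration, and the intercept correction is assumed to add back the full residual from the last in-sample period. Writing the estimated system as \(x_{t}=\widehat{c}+\widehat{A}x_{t-1}+\widehat{e}_{t}\), the intercept-corrected forecast is

\[ \widehat{x}^{IC}_{T+1|T}=\widehat{c}+\widehat{A}x_{T}+\widehat{e}_{T}=\widehat{c}+\widehat{A}x_{T}+\left( x_{T}-\widehat{c}-\widehat{A}x_{T-1}\right) =x_{T}+\widehat{A}\Delta x_{T}. \]

Differencing the same system gives \(\Delta x_{t}=\widehat{A}\Delta x_{t-1}+\Delta \widehat{e}_{t}\), so the differenced device, which sets the differenced error to zero, forecasts

\[ \widehat{x}^{D}_{T+1|T}=x_{T}+\widehat{A}\Delta x_{T}. \]

The two expressions coincide, and neither depends on \(\widehat{c}\), which is why both adapt once a shift in the intercept has entered \(x_{T}\). The sketch covers only the 1-step horizon and this particular form of intercept correction.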

Figures 5 and 6 record the forecasts and squared forecast errors respectively for the models and devices considered. The key result is that there is no forecast failure for unemployment over this ‘Great Recession’ period. Despite the difficulty of forecasting GDP growth, there is little difficulty in forecasting unemployment regardless of the model or device used. ‘Model-based’ forecasts with IIS and SIS for in-sample breaks and outliers forecast best, on both Bias and RMSFE. Robustification through differencing or intercept correction yields no benefit when there is no evident location shift in the data. This is most clearly observed in Fig. 6, where in 2010 the differenced/intercept-corrected devices for both the VAR and EqCM are worse than the model-based forecasts.

The results in Table 4 are sample specific: for alternative periods where the unemployment rate exhibits a location shift, the robust devices beat the ‘model-based’ forecasts. For the period 1944–1949, the RW and DDD are the preferred devices based on minimizing Bias and RMSFE, whereas for 1975–1980, VAR_IS_IC and the DDD are preferred. Thus, best in-sample fit is no guarantee of forecast success when shifts can occur (results available on request).

8 Conclusions

We reviewed a range of issues confronting empirical modellers and forecasters facing wide-sense non-stationary processes subject to both stochastic trends and location shifts. A key finding is that traditional models, such as the VAR or VEqCM, need not be the best models out-of-sample. The stark contrast in the forecast performance of these models for GDP growth and the unemployment rate highlights the importance of out-of-sample location shifts for forecast rankings. The GDP growth results are in line with the forecasting theory described above: robust devices are able to adapt more rapidly to location shifts. The severity of the recession was not captured well by the ‘model-based’ forecasts, but systematic mistakes were avoided by using intercept corrections and differencing. This does not render the in-sample models useless. The parameter estimates from these models were essential in forming the robust devices. Hence, the ‘model-based forecasting theory’ is still used, but in a transformed way to make the forecasts more robust after location shifts. The devices that used such information tended to forecast better than the ad hoc robust devices such as the RW and DDD that do not use such information. The Pooled forecast never came first or second here.

It is remarkable that the unemployment rate can be forecast with ease over the ‘Great Recession’, a period that experienced substantial structural change (Footnote 5). The 2 % fall in real GDP at the end of the ‘Lawson boom’ in 1991–1992 led to unemployment rising from 7 to 10 %, whereas the 6 % fall in real GDP in 2008–2009 was followed by unemployment rising from 5.5 % to just 8 %, an incredibly small response for the largest output fall in the post-war period. Nevertheless, the results for unemployment also support our forecasting theory, which established that forecast failure is mainly due to structural breaks; hence the absence of location shifts in the data over the forecast horizon entails that the ‘model-based’ forecasts should do well. What may have occurred is a significant extent of co-breaking in the determining variables of the unemployment rate, so that the location shifts that are clearly evident in the real growth rate, a key variable in \(R_{r,t}\), are cancelled by shifts in the real interest rate. This suggests ‘Quantitative Easing’ (QE) could have been useful. Factors such as more flexible labour markets, more part-time work and shorter contract hours, an increase in underemployment, people moving out of the labour force during the recession, more self-employment, and more flexible pay enabling firms to weather weaker demand without lay-offs, all helped limit the rise in unemployment. Our simplistic ‘traditional’ models, the VAR and EqCM, do not model any of these interactions, and yet they forecast well, so the plethora of effects on the unconditional mean of the data must have approximately cancelled. The various robustification methods give markedly different results, so being able to predict which is likely to do well is one of the challenges for the future. And as business cycles have not ceased, their modelling and forecasting remain a key activity to avoid excessive social costs from the volatility of economic activity.

Notes

Detailed results are available on request.

Examples of methodological changes to GDP measurement over the sample period include the European System of Accounts 1995, introduced in 1998, results from the Annual Business Inquiry and improvements to the Business Register from which the ONS conducts its surveys in 2001, and annual chain-linking along with improvements in some price deflators in 2003.

The GDP data are given by the July 2011 vintage release of ABMI, seasonally adjusted real GDP at market prices. The measure of inflation used is the GDP deflator, vintage July 2011, ONS code: YBHA/ABMI. The data are taken from the Bank of England real-time database, see http://www.bankofengland.co.uk/statistics/pages/gdpdatabase/default.aspx. The interest rate is the Bank of England base rate available from www.bankofengland.co.uk. For the multivariate models, the GDP deflator data are only available to 2011Q1 for the given vintage, so we compute forecasts for 13 out-of-sample observations rather than the 14 used for all the other forecast devices.

Similar findings of some variables easy to forecast and others hard are reported by Bårdsen et al. (2012).

References

Bánbura, M., Giannone, D., & Reichlin, L. (2011). Nowcasting. In M. P. Clements & D. F. Hendry (Eds.), Oxford handbook of economic forecasting (Chap. 7). Oxford: Oxford University Press.

Banerjee, A., Dolado, J. J., Galbraith, J. W., & Hendry, D. F. (1993). Co-integration, error correction and the econometric analysis of non-stationary data. Oxford: Oxford University Press.

Bårdsen, G., Kolsrud, D., & Nymoen, R. (2012). Forecast robustness in macroeconometric models. Working paper, Norwegian University of Science and Technology, Trondheim.

Box, G. E. P., & Jenkins, G. M. (1970). Time series analysis, forecasting and control. San Francisco: Holden-Day.

Boyer, G. R., & Hatton, T. J. (2002). New estimates of British unemployment, 1870–1913. Journal of Economic History, 62, 643–675.

Castle, J. L., Clements, M. P., & Hendry, D. F. (2013). Forecasting by factors, by variables, by both or neither? Journal of Econometrics, 177, 305–319.

Castle, J. L., Clements, M. P., & Hendry, D. F. (2015). Robust approaches to forecasting. International Journal of Forecasting, 31, 99–112.

Castle, J. L., Doornik, J. A., & Hendry, D. F. (2012). Model selection when there are multiple breaks. Journal of Econometrics, 169, 239–246.

Castle, J. L., Doornik, J. A., Hendry, D. F., & Pretis, F. (2015). Detecting location shifts during model selection by step-indicator saturation. Econometrics, 3(2), 240–264.

Castle, J. L., Fawcett, N. W. P., & Hendry, D. F. (2009). Nowcasting is not just contemporaneous forecasting. National Institute Economic Review, 210, 71–89.

Castle, J. L., Fawcett, N. W. P., & Hendry, D. F. (2010). Forecasting with equilibrium-correction models during structural breaks. Journal of Econometrics, 158, 25–36.

Castle, J. L., Fawcett, N. W. P., & Hendry, D. F. (2011). Forecasting breaks and during breaks. In M. P. Clements & D. F. Hendry (Eds.), Oxford handbook of economic forecasting (pp. 315–353). Oxford: Oxford University Press.

Castle, J. L., & Hendry, D. F. (2010). Nowcasting from disaggregates in the face of location shifts. Journal of Forecasting, 29, 200–214.

Clemen, R. T. (1989). Combining forecasts: A review and annotated bibliography. International Journal of Forecasting, 5, 559–583.

Clements, M. P., & Hendry, D. F. (1993a). On the limitations of comparing mean squared forecast errors. Journal of Forecasting, 12, 617–637. (With Discussion).

Clements, M. P., & Hendry, D. F. (1993b). On the limitations of comparing mean squared forecast errors: A reply. Journal of Forecasting, 12, 669–676.

Clements, M. P., & Hendry, D. F. (1996). Intercept corrections and structural change. Journal of Applied Econometrics, 11, 475–494.

Clements, M. P., & Hendry, D. F. (1998). Forecasting economic time series. Cambridge: Cambridge University Press.

Clements, M. P., & Hendry, D. F. (1999). Forecasting non-stationary economic time series. Cambridge, MA: MIT Press.

Clements, M. P., & Hendry, D. F. (2002). Explaining forecast failure in macroeconomics. In M. P. Clements & D. F. Hendry (Eds.), A companion to economic forecasting (pp. 539–571). Oxford: Blackwells.

Clements, M. P., & Hendry, D. F. (2006). Forecasting with breaks. In G. Elliott, C. W. J. Granger, & A. Timmermann (Eds.), Handbook of economic forecasting (pp. 605–657). Amsterdam: Elsevier.

Corsi, P., Pollock, R. E., & Prakken, J. C. (1982). The Chow test in the presence of serially correlated errors. In G. C. Chow & P. Corsi (Eds.), Evaluating the reliability of macro-economic models. New York: Wiley.

Doornik, J. A. (2009). Autometrics. In J. L. Castle & N. Shephard (Eds.), The methodology and practice of econometrics (pp. 88–121). Oxford: Oxford University Press.

Doornik, J. A. (2009b). Econometric model selection with more variables than observations. Working paper, Economics Department, University of Oxford.

Forni, M., Hallin, M., Lippi, M., & Reichlin, L. (2000). The generalized factor model: Identification and estimation. Review of Economics and Statistics, 82, 540–554.

Friedman, W. A. (2014). Fortune tellers: The story of America’s first economic forecasters. Princeton: Princeton University Press.

Frisch, R., & Waugh, F. V. (1933). Partial time regression as compared with individual trends. Econometrica, 1, 221–223.

Hendry, D. F. (1979). The behaviour of inconsistent instrumental variables estimators in dynamic systems with autocorrelated errors. Journal of Econometrics, 9, 295–314.

Hendry, D. F. (1995). Dynamic econometrics. Oxford: Oxford University Press.

Hendry, D. F. (2001). Modelling UK inflation, 1875–1991. Journal of Applied Econometrics, 16, 255–275.

Hendry, D. F. (2006). Robustifying forecasts from equilibrium-correction models. Journal of Econometrics, 135, 399–426.

Hendry, D. F., & Clements, M. P. (2004). Pooling of forecasts. The Econometrics Journal, 7, 1–31.

Hendry, D. F., Johansen, S., & Santos, C. (2008). Automatic selection of indicators in a fully saturated regression. Computational Statistics, 33, 317–335. (Erratum, 337–339).

Hendry, D. F., & Juselius, K. (2000). Explaining cointegration analysis: Part I. Energy Journal, 21, 1–42.

Hendry, D. F., & Juselius, K. (2001). Explaining cointegration analysis: Part II. Energy Journal, 22, 75–120.

Hendry, D. F., & Martinez, A. B. (2015). Evaluating multi-step system forecasts with relatively few forecast-error observations. Economics Department, Oxford University, Unpublished paper.

Johansen, S. (1988). Statistical analysis of cointegration vectors. Journal of Economic Dynamics and Control, 12, 231–254.

Johansen, S., & Nielsen, B. (2009). An analysis of the indicator saturation estimator as a robust regression estimator. In J. L. Castle & N. Shephard (Eds.), The methodology and practice of econometrics (pp. 1–36). Oxford: Oxford University Press.

Klein, L. R. (1947). The use of econometric models as a guide to economic policy. Econometrica, 15, 111–151.

Klein, L. R. (1971). An essay on the theory of economic prediction. Chicago: Markham Publishing Company.

Makridakis, S., & Hibon, M. (2000). The M3 competition: Results, conclusions and implications. International Journal of Forecasting, 16, 451–476.

Marcellino, M., & Schumacher, C. (2010). Factor-MIDAS for nowcasting and forecasting with ragged-edge data: A model comparison for German GDP. Oxford Bulletin of Economics and Statistics, 72, 518–550.

Miller, P. J. (1978). Forecasting with econometric methods: A comment. Journal of Business, 51, 579–586.

Pretis, F., Schneider, L., Smerdon, J. E., & Hendry, D. F. (2016). Detecting volcanic eruptions in temperature reconstructions by designed break-indicator saturation. Journal of Economic Surveys, 30, 403–429.

Shoup, C., Friedman, M., & Mack, R. P. (1941). Amount of taxes needed in June 1942 to avert inflation: A preliminary report submitted to a joint committee of the Carnegie Corporation and the Institute of Public Administration. Washington, DC: Mimeo, Institute of Public Administration.

Smith, B. B. (1929). Judging the forecast for 1929. Journal of the American Statistical Association, 24, 94–98.

Stock, J. H., & Watson, M. W. (1996). Evidence on structural instability in macroeconomic time series relations. Journal of Business and Economic Statistics, 14, 11–30.

Stock, J. H., & Watson, M. W. (1998). Diffusion indices. Working paper, 6702, NBER, Washington.

Teräsvirta, T., Tjøstheim, D., & Granger, C. W. J. (2011). Modelling nonlinear economic time series. Oxford: Oxford University Press.

Zarnowitz, V. (2004). An important subject in need of much new research. Journal of Business Cycle Measurement and Analysis, 1, 1–7.

Additional information

This research was supported in part by grants from the Institute for New Economic Thinking, Robertson Foundation, and Statistics Norway (through Research Council of Norway Grant 236935). We are indebted to Jurgen A. Doornik, Andrew B. Martinez, Bent Nielsen, Felix Pretis, two anonymous referees and the Editor of the Journal of Business Cycle Research for helpful comments on an earlier version.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Castle, J.L., Clements, M.P. & Hendry, D.F. An Overview of Forecasting Facing Breaks. J Bus Cycle Res 12, 3–23 (2016). https://doi.org/10.1007/s41549-016-0005-2

DOI: https://doi.org/10.1007/s41549-016-0005-2