Abstract

Rather than relying on a potentially poor point estimate of a coefficient break date when forecasting, this paper proposes averaging forecasts over sub-samples indicated by a confidence interval or set for the break date. Further, we examine whether explicit consideration of a possible variance break and the use of a two-step methodology improves forecast accuracy compared with using heteroskedasticity robust inference. Our Monte Carlo results and empirical application to US productivity growth show that averaging using the likelihood ratio-based confidence set typically performs well in comparison with other methods, while two-step inference is particularly useful when a variance break occurs concurrently with or after any coefficient break.


1 Introduction

The pervasiveness of structural breaks in many macroeconomic time series is widely acknowledged (Stock and Watson 1996; Paye and Timmermann 2006) and they are an important source of forecast failure (Hendry 2000; Hendry and Clements 2003). This paper considers a scenario in which a discrete and permanent change in model coefficients may occur during the sample period used for estimation. Various forecast methods and strategies are proposed in the literature to deal with such a possibility, and these can be broadly classified into those that employ an estimated break date and robust methods that treat the break date as unknown.

When the timing of a break is known or estimated precisely, unbiased estimates of the coefficients can be obtained using observations after the break, leading to the post-break window forecast. However, even with an accurate estimate of the break date, if the post-break sample is relatively short, the post-break coefficients and the size of the break may be poorly estimated. Although the use of pre-break observations introduces estimation bias, it is often optimal to include some pre-break observations in the estimation sample to reduce the forecast error variance, as shown analytically and empirically by Pesaran and Timmermann (2004, 2005, 2007). The forecast accuracy of such methods relies heavily on how well the true break date is estimated and, in practice, theoretical gains may not be fully exploited as estimates of break dates can be imprecise (Elliott 2005; Paye and Timmermann 2006). A different approach is taken by Inoue et al. (2017), who propose using a rolling window with the optimal estimation window size selected by minimizing the conditional mean square forecast error.

Rather than producing a forecast based on a single estimation window, recent findings suggest that forecast combination methods that average over a model estimated with different sizes of windows often produce more accurate forecasts (Pesaran and Pick 2011; Eklund et al. 2013; Tian and Anderson 2014; Koo and Seo 2015; Boot and Pick 2020). Such methods typically assume the date of a break is unknown, and hence distortions from imprecise break date estimates are generally mitigated by averaging. However, other factors play a role, with forecast combination methods working well when breaks are small, occur frequently, towards the end of the sample or affect only the variance, and can perform relatively poorly in the presence of large breaks (Pesaran and Timmermann 2007; Pesaran and Pick 2011; Eklund et al. 2013). On the other hand, large breaks are easier to detect and hence a carefully selected single estimation window based on break date information can be preferable for forecasting (Pesaran and Timmermann 2007; Pesaran et al. 2013).

These results suggest that information on the nature of a possible break is important in deciding whether to use such information when forecasting or to adopt forecast methods that are robust to the presence of a possible break. This paper proposes a simple but intuitive approach based on forecast combinations and explicitly using break date estimates. To be specific, we propose employing a confidence interval or confidence set for the estimated break date instead of relying on a point estimate which may be poorly identified. We treat each date in the confidence interval/set as one of a sequence of choices for the potential break date and the corresponding post-break window forecasts are averaged. This incorporates information on the size of a break, since a break that is large (relative to the sample size) implies a narrow interval. Our approach is designed to improve on existing robust methods that combine forecasts from all possible windows (Pesaran and Pick 2011), by excluding windows that use less relevant data and which can yield large forecast errors. Koo and Seo (2015) employ a similar approach in the context of a misspecified model, but our interest lies in the situation where the model is correctly specified.Footnote 1 We employ the break date confidence interval originally proposed by Bai (1997), which is widely employed in the structural break methodology of Bai and Perron (1998), and the confidence set of Eo and Morley (2015). Furthermore, to shed light on the nature of a detected break and to gain efficiency in estimation, a stepwise testing approach for changes in individual coefficients can be incorporated into the method.

A second contribution of this paper lies in the consideration given to the role of variance breaks. Although these are often overlooked in the forecast literature, it is known that tests for the presence of coefficient breaks are affected when breaks can also occur in the disturbance variance (Bai and Perron 2006). To be explicit, we consider the situation where both the coefficients and disturbance variance may be subject to a single structural break during the sample period, but these two breaks do not necessarily occur concurrently. Under such circumstances, the forecaster may apply a heteroskedasticity consistent (HC) procedure to test for a coefficient break. Another option is to explicitly examine the possibility of variance change and we investigate whether taking this route by use of a two-step break point testing methodology can improve forecast accuracy compared with HC testing. The procedure we employ, which allows the possibility of distinct coefficient and variance breaks occurring during the estimation sample, is built on Bataa et al. (2013) and also adapted by Altansukh et al. (2017).

Forecast performance is assessed through both Monte Carlo simulations and an empirical application to US productivity growth series. Our confidence interval/set approach is compared with widely advocated forecast approaches, including post-break, trade-off, cross-validation and window averaging methods proposed by Pesaran and Timmermann (2007) and Pesaran and Pick (2011). The simulation results show that our method performs well when the confidence set of Eo and Morley (2015) is employed, regardless of the size and nature of a break, with the empirical application supporting its usefulness for improving forecast accuracy in the presence of structural change. Further, the two-step testing approach is also generally beneficial, and this is especially the case when either no coefficient break applies or when a coefficient break occurs concurrently with or prior to a variance break.

This paper proceeds as follows. Section 2 outlines the confidence interval forecast method and describes the structural break inference methods that we employ. Section 3 sets up the Monte Carlo simulations and the simulation results are presented in Sect. 4. Section 5 examines the performance of forecast methods for US productivity growth and Sect. 6 concludes.

2 Methodology

This section outlines the forecasting methods that we employ, focusing particularly on variance breaks and exploiting information contained in coefficient break date confidence intervals/sets.

2.1 Forecast methods

For forecasting purposes, consider the dynamic model

$$\begin{aligned} y_{t}={\mathbf {x}}_{t-1}^{\prime }{\beta }_{t}+\varepsilon _{t} \end{aligned}$$ (1)

where \({\mathbf {x}}_{t-1}\) is a \(k\times 1\) vector of regressors whose values are known at time \(t-1\), \({\beta }_{t}\) is the \(k\times 1\) coefficient vector for \({\mathbf {x}}_{t-1}\), while \(\varepsilon _{t}\) is an error term that is serially uncorrelated and uncorrelated with \({\mathbf {x}}_{t-1}\). The regressor vector \({\mathbf {x}}_{t-1}\) will typically include at least one lag of \(y_{t}\), but in the absence of structural breaks in (1) \({\mathbf {x}}_{t-1}\) is covariance stationary. Using sample period data for \(t=1,\ldots ,T\), our interest lies in forecasting future values of y when \({\beta }_{t}\) and the disturbance variance \(\sigma _{t}^{2}\) may each be subject to a single within-sample structural break, with the two types of change not necessarily coinciding. In particular, \(y_{T+h}\) (\(h=1,2,\ldots \)) is to be forecast using the observationsFootnote 2 \(\Gamma _{T}=\{{\mathbf {x}}_{t}:t=1,2,\ldots ,T\},\) recognizing that, in practice, neither the occurrence of breaks nor the date(s) at which they occur are known. Our aim is to exploit structural break inference information to improve forecast accuracy.Footnote 3

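Ignoring any possible structural break(s), the full sample one-step ahead forecast is

$$\begin{aligned} {\hat{y}}_{T+1}={\mathbf {x}}_{T}^{\prime }{{\hat{\beta }}}_{1:T} \end{aligned}$$ (2)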

where \({{\hat{\beta }}}_{1:T}\) is obtained by applying OLS estimation to (1) using all T sample observations. Even when breaks may occur, this full sample forecast provides a benchmark for assessing the performance of methods which allow the possibility of breaks. Now suppose that, by some appropriate method, a structural break in the coefficient vector is estimated to have occurred at \(t={\hat{T}}_{c}\), where \(1<{\hat{T}}_{c}<T\). With sufficient observations available to estimate the coefficient vector in the period after the estimated break, the usual post-break forecast is

$$\begin{aligned} {\hat{y}}_{T+1}={\mathbf {x}}_{T}^{\prime }{{\hat{\beta }}}_{{\hat{T}}_{c}+1:T} \end{aligned}$$ (3)

where \({{\hat{\beta }}}_{{\hat{T}}_{c}+1:T}\) is obtained by OLS using observations \(t={\hat{T}}_{c}+1,{\hat{T}}_{c}+2,\ldots ,T\).

If variance breaks may be present, heteroskedasticity consistent (HC) inference can be employed for coefficient break testing, with OLS then applied to (2) or (3) as appropriate. Although asymptotically valid, the simulation evidence of Bai and Perron (2006) and Pitarakis (2004) indicates that HC inference leads to over-sized coefficient break tests in finite samples. This can be serious for forecasting, because a false conclusion that a break exists leads to a reduced effective sample size for coefficient estimation, implying a loss of efficiency and an increase in theoretical mean square forecast error.

Following Pitarakis (2004), an alternative to HC inference is to use a feasible generalized least squares (FGLS) procedure for coefficient break inference. To our knowledge, no study to date has examined whether the use of FGLS improves forecast accuracy over OLS in the presence of possible structural breaks. Section 2.2 provides details of our FGLS structural break testing methodology. Denoting the period of a variance break as \(t=T_{v}\) (\(1<T_{v}<T\)), it should be noted that if \({\hat{T}}_{c}\ge {\hat{T}}_{v}\), then the OLS and FGLS estimators will be identical in (3).

Clearly, even if a coefficient break has occurred, the point estimate \(\hat{T }_{c}\) does not capture the uncertainty associated with break date estimation. To reflect this, we also investigate whether use of a confidence interval (or set) can improve forecast accuracy compared with a possibly poor single coefficient break date estimate. For convenience of exposition, assume that the dates within the confidence interval are contiguous and denote the interval as \([{\hat{T}}_{cL},{\hat{T}}_{cU}]\), where \({\hat{T}}_{cL}\) and \({\hat{T}}_{cU}\) are the lower and upper bounds of the confidence interval, respectively (see Sect. 2.3). Treating each date in the interval as one of a sequence of choices for the potential break date, the corresponding post-break window forecasts can be averaged to yield the confidence interval forecast

$$\begin{aligned} {\hat{y}}_{T+1}=\frac{1}{{\hat{T}}_{cU}-{\hat{T}}_{cL}+1}\sum _{\tau ={\hat{T}}_{cL}+1}^{{\hat{T}}_{cU}+1}{\mathbf {x}}_{T}^{\prime }{{\hat{\beta }}}_{\tau :T} \end{aligned}$$ (4)

The expression in (4) is also appropriately amended in the obvious way when the forecast is obtained by averaging over a (non-contiguous) confidence set for the break date.
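As a minimal sketch of the averaging just described (not the authors' code), the Python fragment below estimates the model by OLS on the post-break window implied by each candidate break date and averages the resulting one-step forecasts with equal weights. The function names, the array layout (row i of `X` holds the regressors paired with `y[i]`, and `x_T` holds the regressors for the forecast period) and the `min_window` argument, which mimics the trimming rule described later in this section, are illustrative assumptions.

```python
import numpy as np


def window_forecast(X, y, x_T, start):
    """One-step forecast from OLS on observations start..T (1-based, inclusive)."""
    beta = np.linalg.lstsq(X[start - 1:], y[start - 1:], rcond=None)[0]
    return float(x_T @ beta)


def ci_average_forecast(X, y, x_T, break_dates, min_window):
    """Equal-weight average of post-break forecasts over candidate break dates."""
    T = len(y)
    # A break at date b implies an estimation window starting at b + 1;
    # windows starting after T - min_window are dropped (trimming assumption).
    starts = [b + 1 for b in break_dates if b + 1 <= T - min_window]
    if not starts:
        raise ValueError("no admissible estimation window")
    return float(np.mean([window_forecast(X, y, x_T, s) for s in starts]))
```

A contiguous interval can be passed as `range(t_lo, t_hi + 1)`, while a non-contiguous confidence set can be passed as any list of dates, so the same function covers both cases.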

Note that averaging as in (4) effectively gives greatest weight to sample observations for \(t>{\hat{T}}_{cU}\), since these contribute to each forecast in the average, with progressively less weight given to observations earlier in the confidence interval or set. Since the interval or set will be longer when \(\sigma _{t}\) in (1) is larger or the magnitude of the break is smaller, these circumstances lead to the forecast in (4) giving relatively greater weight to sample observations for \(t>{\hat{T}}_{cU}\) compared with a low volatility or large break setting. In other words, in circumstances when the timing of the break is doubtful, greater weight is placed on observations that can be reliably classified as post-break, but with some weight also placed on earlier observations that fall within the interval or set.

To shed light on the nature of a detected coefficient break and to gain (potential) efficiency in estimation when the null hypothesis of no break is rejected, we also examine whether testing for change in the individual coefficients of the model improves forecast accuracy. To allow for possible variance change, a standard HC t test is applied to each individual coefficient in the model to examine whether the values differ in the pre- and post-break sub-samples, treating the coefficient break date as known. If all changes are significant, forecasts are obtained using the post-break sub-sample, as in (3). Otherwise, the coefficient with the least significant change is restricted to be constant over time and the model is re-estimated. The remaining coefficients are again tested individually and the procedure continues until all remaining coefficients are either specified as constant or exhibit significant change at the estimated break date. If the model reduces to one in which only one coefficient has a break and the change in this coefficient is not significant, the whole sample forecast is used despite the initial finding of a coefficient break. This stepwise testing approach is combined with the confidence interval forecast of (4) by conducting stepwise coefficient equality testing at each potential break date in the interval.
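The stepwise procedure can be sketched as follows, treating the break date `t_c` as known. This is an illustration under stated assumptions rather than the authors' implementation: the HC variant is taken to be White's HC0 covariance, the two-sided 5% critical value is taken from the standard normal distribution (1.96), and the function names are hypothetical. Coefficient shifts are represented by interacting the regressors with a post-break dummy.

```python
import numpy as np


def hc_tstats(Z, y):
    """OLS coefficients and t-statistics based on the White (HC0) covariance."""
    ZtZ_inv = np.linalg.inv(Z.T @ Z)
    b = ZtZ_inv @ Z.T @ y
    e = y - Z @ b
    meat = (Z * e[:, None] ** 2).T @ Z
    cov = ZtZ_inv @ meat @ ZtZ_inv
    return b, b / np.sqrt(np.diag(cov))


def stepwise_break_forecast(X, y, x_T, t_c, crit=1.96):
    """Drop the least significant coefficient shift until all remaining shifts
    are significant; return the forecast and the indices that still break."""
    T, k = X.shape
    post = (np.arange(1, T + 1) > t_c).astype(float)
    breaking = list(range(k))
    while breaking:
        Z = np.column_stack([X, X[:, breaking] * post[:, None]])
        b, t = hc_tstats(Z, y)
        t_shift = np.abs(t[k:])
        weakest = int(np.argmin(t_shift))
        if t_shift[weakest] >= crit:
            beta_post = b[:k].copy()
            beta_post[breaking] += b[k:]  # post-break value = base + shift
            return float(x_T @ beta_post), breaking
        breaking.pop(weakest)  # restrict this coefficient to be constant
    # No significant individual change remains: use the full-sample forecast.
    b = np.linalg.lstsq(X, y, rcond=None)[0]
    return float(x_T @ b), []
```

When every shift is retained, the fully interacted regression reproduces the post-break OLS coefficients, so the forecast coincides with the post-break forecast; when some shifts are dropped, pre-break data also inform the restricted coefficients.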

When variance breaks are explicitly taken into account through FGLS estimation, the procedure just described is applied using standard (OLS) t tests for breaks in each individual coefficient of the FGLS-transformed model. It might also be noted that the application of individual coefficient tests implies the use of some pre-break data in obtaining \({\hat{\beta }}_{{\hat{T}}_{c}+1:T}\) and in this case the OLS and FGLS estimators are no longer necessarily identical when \({\hat{T}}_{c}\ge {\hat{T}}_{v}\).

The methodology employed for structural break testing is discussed in the next subsection, with the subsequent subsection considering the construction of confidence intervals for the break date. Throughout the paper, all hypothesis tests are conducted at the nominal (asymptotic) 5% level of significanceFootnote 4 and the nominal confidence of all confidence intervals/sets is 95%. To ensure sufficient observations are available for estimation and structural break inference, the range of possible break dates is restricted to \({\underline{w}}<{\hat{T}} _{i}<T-{\underline{w}}\) for both the coefficients and variance (\(i=c\) or v). Our results use \({\underline{w}}=0.1T\), so that the minimum estimation window for the post-break estimator of (3) is 10% of the full sample data. When a confidence interval forecast is employed, \({\hat{T}} _{cU}+1 \) in the summation of (4) is replaced by \(\min ({\hat{T}} _{cU}+1,T-{\underline{w}})\) and if \(T-{\underline{w}}<{\hat{T}}_{cU}+1\) the denominator is correspondingly adjusted.

Through a simulation analysis in Sect. 4, the performance of the methods we propose is compared with the trade-off and cross-validation procedures proposed in an influential paper by Pesaran and Timmermann (2007). Our comparison also includes the forecast combination method that averages over all possible estimation windows, which is proposed by Pesaran and Timmermann (2007) and analytically developed by Pesaran and Pick (2011).Footnote 5 Further information relating to these methods is provided in “Appendix A”, but note that the cross-validation methods we employ do not use estimated break date information.Footnote 6

2.2 Structural break testing methodology

The most commonly employed methodology for structural break inference in econometrics is that of Bai and Perron (1998), and our approach is based on their methodology. We investigate both the HC approach of Bai and Perron (1998) and also a two-step FGLS procedure when testing for a coefficient break in (1). In both cases, the outcome of the test determines whether the full-sample or post-break forecast is employed.

Our two-step FGLS method is based on Bataa et al. (2013), who generalize an approach suggested by Pitarakis (2004) to allow the possibility that coefficient and variance breaks are not necessarily concurrent.Footnote 7 In outline, our procedure is:

Step 1 Preliminary coefficient break test. The Bai and Perron (1998) structural break testing procedure is applied to \({\beta }\) of (1) employing HC inference. After allowing for a detected coefficient break, the residuals (\({\hat{\varepsilon }}_{t}\)) are employed in the test regression

$$\begin{aligned} \sqrt{\frac{\pi }{2}}|{\hat{\varepsilon }}_{t}|=\zeta +\epsilon _{t} \end{aligned}$$ (5)

to which homoskedastic break testing is applied. If a break is detected in (5) at \({\hat{T}}_{v}\), the estimates of \(\zeta \) from the regimes \(t=1,\ldots ,{\hat{T}}_{v}\) and \(t={\hat{T}}_{v}+1,\ldots ,T\) yield the estimated standard deviations for the respective detected variance regimes.

Step 2 Re-assessment of coefficient break. If a break is detected for (5), the FGLS transformation is applied to the data; otherwise the original data are used. The presence of a coefficient break is then re-assessed employing homoskedastic inference.

The absolute value of the residuals from the initial OLS estimation is used in (5) rather than the mean of squared residuals because this is more robust to non-normality (Davidian and Carroll 1987; McConnell and Perez-Quiros 2000) and, further, our preliminary analysis found it yielded a better variance break date estimate.
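The scaling in (5) rests on the fact that, for normally distributed disturbances, \(E|\varepsilon _{t}|=\sigma \sqrt{2/\pi }\), so that \(\sqrt{\pi /2}\,|{\hat{\varepsilon }}_{t}|\) has a mean of approximately \(\sigma \) within each variance regime. The Python sketch below illustrates the mechanics of the variance step only: it locates a single mean shift in the scaled absolute residuals by least squares over the trimmed range of candidate dates, returns the two regime standard deviations, and rescales the data. The formal Bai and Perron (1998) test decision is deliberately omitted, and the function names and argument layout are hypothetical.

```python
import numpy as np


def variance_break_estimate(resid, trim):
    """Least-squares break date in sqrt(pi/2)*|resid| and regime std. deviations."""
    z = np.sqrt(np.pi / 2.0) * np.abs(np.asarray(resid, dtype=float))
    T = len(z)
    best_ssr, best_tv = np.inf, None
    # Candidate dates tv (last observation of regime 1) satisfy trim < tv < T - trim.
    for tv in range(trim + 1, T - trim):
        left, right = z[:tv], z[tv:]
        ssr = np.sum((left - left.mean()) ** 2) + np.sum((right - right.mean()) ** 2)
        if ssr < best_ssr:
            best_ssr, best_tv = ssr, tv
    return best_tv, float(z[:best_tv].mean()), float(z[best_tv:].mean())


def fgls_transform(y, X, tv, sd_pre, sd_post):
    """Divide each observation by the standard deviation of its variance regime."""
    scale = np.where(np.arange(len(y)) < tv, sd_pre, sd_post)
    return np.asarray(y) / scale, np.asarray(X) / scale[:, None]
```

In the two-step procedure, the transformed data would then be passed to a homoskedastic coefficient break test; when no variance break is detected, the original data are used instead.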

When testing for a coefficient break using either HC inference or in the two-step procedure, the distribution of regressors is allowed to change at the break date,Footnote 8 but the disturbances are assumed to be serially uncorrelated. Since our interest focuses on the possibility of a single structural break in each of the coefficients and variance, a maximum of one break is considered in each step.

2.3 Confidence interval/set estimation

As already noted in the Introduction, after testing for coefficient breaks as described in Sect. 2.2, we employ two procedures for computing coefficient break date confidence intervals/sets. The first is that of Bai (1997), which is widely available as part of the inference procedure of Bai and Perron (1998). The confidence interval is constructed using the asymptotic framework of break date estimation and relates to dates in the “neighbourhood” of the estimate \({\hat{T}}_{c}\) (see Bai 1997; Bai and Perron 1998); consequently, the confidence interval is contiguous around \({\hat{T}}_{c}\).

Despite the popularity of the Bai and Perron (1998) procedure, the coverage rates for the associated confidence intervals are often substantially below the nominal rates, as shown by Elliott and Müller (2007) and the simulation results of Bai and Perron (2006). The approach of Elliott and Müller (2007) and Eo and Morley (2015) is to invert the test statistic for a break, yielding a confidence set (not necessarily an interval) for the break date. We employ the confidence set of Eo and Morley (2015), which employs the likelihood ratio test statistic, as they find it provides good coverage with a smaller set of potential break dates than that of Elliott and Müller (2007).

Whether two-step or HC inference is employed for coefficient break date estimation, the original data are used for computing the confidence interval or set, with some account taken of a possible variance break (albeit contemporaneous with any coefficient break) by computing the confidence interval or set allowing variances to change with coefficient break regimes. In both cases, the distribution of regressors is allowed to change at the break date.

3 Monte Carlo simulations

Monte Carlo simulations are conducted to evaluate the forecast methodologies proposed in Sect. 2. These are based on the simulation setup of Pesaran and Timmermann (2007), with similar settings also adopted by Clark and McCracken (2005) and Tian and Anderson (2014). However, in addition to the simulation settings in these papers, we also consider data generating processes (DGPs) that exhibit change only in the intercept and DGPs with changes in both coefficients and variances, with these changes not necessarily occurring at the same time.

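The basic DGP is the bivariate VAR(1) process

$$\begin{aligned} \left( \begin{array}{c} y_{t} \\ x_{t} \end{array} \right) =\alpha _{t}+{\mathbf {A}}_{t}\left( \begin{array}{c} y_{t-1} \\ x_{t-1} \end{array} \right) +\left( \begin{array}{c} \varepsilon _{yt} \\ \varepsilon _{xt} \end{array} \right) \end{aligned}$$ (6)

with coefficient matrix

$$\begin{aligned} {\mathbf {A}}_{t}=\left( \begin{array}{cc} \beta _{11t} & \beta _{12t} \\ 0 & \beta _{22} \end{array} \right) \end{aligned}$$ (7)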

so that x Granger causes y and not vice versa, and disturbances that are normally distributed with covariance matrix

If \({\mathbf {A}}_{t}\) is time invariant and has eigenvalues strictly less than unity in modulus, the unconditional mean vector corresponding to (6) and (7) is

$$\begin{aligned} \mu =({\mathbf {I}}_{2}-{\mathbf {A}})^{-1}\alpha \end{aligned}$$ (9)

However, change in either \(\alpha _{t}\) or \(A_{t}\) leads to change in the corresponding steady-state means of (9).

Our interest is in situations where the marginal distribution for \(x_{t}\) (the driving variable) is constant over time, but that for \(y_{t}\) can exhibit structural breaks in the coefficients and/or disturbance variance. The cases considered (where the DGPs refer to Table 1) are:

1. All coefficients constant over time, with \(\alpha =( \begin{array}{cc} 0.5&0.5 \end{array} )^{\prime }\), \(\beta _{11}=0.9,\) \(\beta _{12}=1,\) \(\beta _{22}=0.9\), together with time-invariant \(\sigma _{y}=1\) (DGP1), increasing variance (DGP2) or decreasing variance (DGP3);

2. Dynamics that exhibit change (through \(\beta _{11t}\) and/or \(\beta _{12t}\)) but \(\mu _{t}\) constant over time with values as in #1 (hence \( \alpha _{t}\) changes), with either constant or changing variance (DGP4 to DGP10);

3. Means that exhibit change through a break in the value of \(\alpha _{y}\) but constant dynamics as in #1, with either constant or changing variance (DGP11 to DGP14).

Details of parameter values are provided in Table 1.

The benchmark case, which uses OLS and all observations, is anticipated to provide the best forecasting performance for DGP1. DGPs 2 and 3 illustrate the effects of increasing and decreasing disturbance variances, with the post-break standard deviations increasing by a factor of four and declining by a factor of two, respectively. In DGPs 4 and 5, the autoregressive coefficient, \(\beta _{11t}\), decreases, with these labelled as small and large changes after the break, respectively. Similarly, the effects of small and large increases in the coefficient of the lagged exogenous variable, \( \beta _{12t}\), are considered in DGPs 6 and 7. DGP8 combines DGP4 and DGP7, with a single break affecting both the \(\beta _{11t}\) and \(\beta _{12t}\) coefficients simultaneously. DGP9 and DGP10 introduce changes in both coefficients and variances, by combining DGP8 with DGPs 2 and 3, respectively. A break affecting only the mean of the series, namely an intercept shift, is illustrated in DGPs 11 and 12, considered as small and large, respectively. Finally, DGPs 13 and 14 examine situations in which a large mean increase is combined with increasing or decreasing variances after the break.

In order to examine the sensitivity of forecasting performance to the break point location, the simulations consider a single coefficient break occurring at 0.25T, 0.5T or 0.75T of the full sample of T observations. Further, in DGPs with changing variances, the single variance break occurs in the middle of the sample in combination with each coefficient break location or at 0.75T in combination with a mid-point coefficient break. Therefore, we consider scenarios where the coefficient and variance breaks occur either concurrently or at different times, and also whether a variance break precedes or follows a coefficient break. It can be noted that breaks occur only in the equation for \(y_{t}\) in (6) and inference is applied to this equation only.

We employ the Bai and Perron (1998) procedure to test for breaks in which we allow the possibility of one break with trimming \(\epsilon =0.10\) (10% of the full sample). All hypothesis tests are conducted at a nominal 5% level of significance, using the asymptotic critical values provided by Bai and Perron (1998), while the confidence intervals/sets have nominal 95% coverage. In line with Pesaran and Timmermann (2007), the methods of cross-validation or averaging across all samples to T consider a minimum sample size of 0.1T, while cross-validation reserves 0.25T observations for an out-of-sample evaluation.Footnote 9

To assess the impact of the sample size on forecasting performance, \(T=100\) and 200 are employed in the simulations. The DGP in Eq. (6) starts from its pre-break unconditional mean. Specifically, each replication of each DGP is initialized using \((\begin{array}{cc} y_{t-1}&x_{t-1}\end{array})^{\prime }=\alpha _{t}=(\begin{array}{cc} 0.5&0.5\end{array})^{\prime }\), and simulating \( T_{0}+T+3\) observations for the corresponding DGP with \(T_{0}=100\). After discarding the first \(T_{0}\) observations, the observations \(1,\dots ,T\) are used for the parameter estimation to generate the forecasts.Footnote 10 In all cases, 5000 replications are employed.Footnote 11
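A single replication of this design might be generated along the following lines. The sketch is illustrative only and rests on several assumptions that are not taken from the text: the coefficient matrix is upper triangular (so that x is not affected by lagged y), the two disturbances are independent normal draws, the standard deviation of the x disturbance is fixed at one, and all function and argument names are hypothetical. The helper `intercept_for_mean` shows how an intercept can be chosen to hold the unconditional mean fixed when the dynamics change.

```python
import numpy as np


def intercept_for_mean(A, mu):
    """Intercept implied by a target mean mu, using mu = (I - A)^{-1} alpha."""
    return (np.eye(len(mu)) - A) @ mu


def simulate_var1(T, pre, post, coef_break, sd_y, var_break,
                  burn_in=100, extra=3, sd_x=1.0, seed=0):
    """Simulate (y_t, x_t) for t = 1..T+extra after discarding burn_in draws.

    pre and post are (alpha, A) pairs applying up to and after coef_break;
    sd_y is a (before, after) pair applying up to and after var_break.
    """
    rng = np.random.default_rng(seed)
    state = np.array([0.5, 0.5])  # initial values as stated in the text
    path = np.empty((burn_in + T + extra, 2))
    for i in range(len(path)):
        t = i - burn_in + 1  # sample time index; t <= 0 during the burn-in
        alpha, A = pre if t <= coef_break else post
        sd = np.array([sd_y[0] if t <= var_break else sd_y[1], sd_x])
        state = alpha + A @ state + sd * rng.normal(size=2)
        path[i] = state
    return path[burn_in:]
```

The first T rows of the returned array would serve as the estimation sample, with the remaining rows providing the realized values against which forecasts are evaluated.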

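Forecast accuracy is assessed using the empirical Mean Squared Forecast Error (MSFE), namely the average squared difference between forecast and realized values, computed as

$$\begin{aligned} \text {MSFE}=\frac{1}{S}\sum _{s=1}^{S}\left( y_{T+h}^{(s)}-{\hat{y}}_{T+h}^{(s)}\right) ^{2} \end{aligned}$$ (10)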

where S denotes the number of simulations. In the results reported, the computed MSFE for each method is divided by the MSFE of the benchmark model, which ignores the presence of possible breaks by applying the full sample OLS estimator. Ratios below 1 indicate that the corresponding method outperforms the benchmark, while ratios above 1 indicate worse performance than the benchmark.
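The relative MSFE can be computed directly from the stored forecasts and realizations across replications, as in the short sketch below; the function names and the convention that each array holds one entry per replication are assumptions made for illustration.

```python
import numpy as np


def msfe(forecasts, realized):
    """Average squared forecast error across replications."""
    err = np.asarray(realized, dtype=float) - np.asarray(forecasts, dtype=float)
    return float(np.mean(err ** 2))


def relative_msfe(method_forecasts, benchmark_forecasts, realized):
    """MSFE of a method divided by the MSFE of the full-sample benchmark."""
    return msfe(method_forecasts, realized) / msfe(benchmark_forecasts, realized)
```

For instance, with realized values (1, 2, 3), method forecasts (1, 2, 4) and benchmark forecasts (0, 2, 5), the two MSFEs are 1/3 and 5/3, giving a ratio of 0.2 in favour of the method.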

4 Simulation results

Our simulation results are discussed in the first subsection for the special case where coefficient and disturbance variance breaks, when they occur, are concurrent at the mid-point of a sample of \(T=100\) observations. Subsequent subsections consider non-concurrent breaks for one-step ahead forecasts with \(T=100\) and, finally, larger sample results for \(T=200\).

4.1 Concurrent mid-sample breaks

Table 2 provides background inference results, while Table 3 and Appendix Table 8 report relative MSFEs (in relation to the benchmark model) for a range of forecast methods when any (coefficient or variance) break occurs in the middle of the sample of \(T=100\) observations. Table 3 and Appendix Table 8 differ only in that the latter sets the forecast period disturbance \(\varepsilon _{T+1}=0\) in (1).Footnote 12 By focusing on the trade-off between bias in coefficient estimation when pre-break information is included and efficiency gains from these additional observations (Pesaran and Timmermann 2007), Appendix Table 8 provides a clearer distinction between methods. However, the apparent gains it indicates are unattainable in realistic settings and hence our discussion focuses on Table 3.

From the empirical coefficient structural break test rejection rates (the percentage of cases in which a break is detected) in Table 2, it is evident that the two-step procedure improves on HC inference by substantially reducing the number of over-rejections in the DGPs with constant coefficients (DGP1-DGP3) while increasing the number of rejections in the DGPs where the HC test has low empirical power (DGPs 9 and 13). Since individual coefficients are tested only when an overall break is detected, the individual coefficient rejection rates (expressed as the percentage of total replications for which constancy is rejected) are always less than the overall rejection rate. The empirical variance test rejection rate is also shown for the two-step method of Sect. 2.2.

For convenience, we use the abbreviation CI in Table 2 to refer to both confidence intervals and sets, with those associated with Bai and Perron (1998) and Eo and Morley (2015) referred to as BP and EM, respectively. In line with previous studies (including Bai 1997; Bai and Perron 2006; Elliott and Müller 2007; Eo and Morley 2015; Chang and Perron 2018), the EM set almost always has greater coverage of the true break date than the nominal 95%, whereas the BP interval exhibits under-coverage and this is often substantial. Although used only when coefficient constancy is rejected, the average CI length is shown for cases where coefficient constancy is rejected and where it is not, together with all cases.Footnote 13 With no coefficient or variance break in DGP1 and allowing for 10% trimming, the vast majority of sample points admissible as potential breaks fall within the EM set; hence it performs well in indicating the lack of information in the data about a coefficient break, whereas the BP interval includes substantially fewer observations; these results are in line with Eo and Morley (2015) and carry over in the presence of a variance break (DGPs 2, 3). Across all DGPs and methods, inclusion of only cases where coefficient constancy is rejected reduces (or leaves unchanged) the average CI lengths, because these are cases where stronger evidence of a break is detected. As anticipated, both methods include more potential break points in the interval/set when the break is small compared with large breaks.

Due to the over-sizing of the HC break test (Table 2), post-break OLS estimation leads to poor forecasting results for DGP1 (no break) and DGP2 (variance increase) in Panel A of Table 3 compared with the full-sample benchmark model. With improved inference on coefficient breaks, the two-step method leads to improved accuracy in Panel B for these DGPs. The effect of over-sizing on forecast accuracy is less severe in DGP3 since the variance decrease implies that the less volatile sub-sample is employed for estimation when a coefficient break is erroneously detected. In line with Pesaran and Timmermann (2007),Footnote 14 the trade-off method, which also hinges on the estimated break date, improves accuracy over the simple use of the post-break sample, but averaging based on either the BP interval or (particularly) the EM set does better, with further improvement when combined with stepwise coefficient testing. Indeed, averaging over the EM set combined with stepwise coefficient testing reduces the MSFE to a little over one in both panels, implying a relatively small accuracy loss relative to using the full-sample OLS estimator. The methods in Panel C (which do not rely on an estimated break date, namely cross-validation and averaging over all windows) also perform relatively well for these DGPs: these are more accurate than simple post-break (OLS or FGLS) estimation, with the method that averages with cross-validation weights yielding the most accurate forecasts for DGPs 1 and 3 (though the former is less accurate than the benchmark model). The effects discussed here and elsewhere in this subsection are emphasized in Appendix Table 8, where the forecast period disturbance is shut down.

When a break changes the dynamic coefficients with a time-invariant disturbance variance (DGPs 4–8, Table 3), it is unsurprising that all forecasting methods which take account of a possible break perform better than the benchmark. However, it is worth noting that in these DGPs the presence of a coefficient break is relatively easy to detect even if it is “small” (DGPs 4 and 6, Table 2), since the variance is constant while the intercept changes in addition to the dynamic coefficients due to the constant mean assumption; see (9). Therefore, the forecast accuracy results for these DGPs in Table 3 are very similar across Panels A and B. Although cross-validation performs well for these DGPs, the EM set average is more accurate.

Almost all methods of Panels A and B are less accurate than the full-sample OLS estimator for DGP9. The variance break makes it more difficult to detect the coefficient break (compare the overall coefficient test rejections for DGP9 with DGP8 in Table 2) and, in any case, the benefits of detecting the coefficient break can be outweighed by the cost of using only noisier post-break data.Footnote 15 Nevertheless, two-step inference reduces the MSFE in Panel B compared with HC inference in Panel A of Table 3, while using either the EM set average or the methods in Panel C results in forecasts that are very close in accuracy to (or, for averaging over windows and using cross-validation weights, better than) the benchmark case. On the other hand, when the variance decreases in DGP10, using break information is highly beneficial relative to use of full-sample OLS because the coefficient break is always detected and the latter part of the sample is less noisy. All methods that use break information yield very similar results and reduce MSFEs by about 80% compared with the benchmark, while averaging over windows or using cross-validation weights does less well.

Finally, when a coefficient break in DGPs 11–14 affects only the intercept, Table 2 shows that stepwise coefficient testing assists in pin-pointing the nature of the break, with constancy of the intercept rejected more frequently than constancy of either dynamic coefficient. However, when the variance declines (DGP14) stepwise testing can result in slightly increased MSFE values, whether applied alone or in combination with averaging over a confidence interval or set; this also applies in DGP9 where breaks occur in both lag coefficients and the variance increases. In general, small mean breaks are hard to identify accurately and ignoring rather than modelling them often leads to more accurate forecasts (Pesaran and Timmermann 2005; Boot and Pick 2020). In line with such findings, the smallest MSFE values for DGP11 in Table 3 are achieved by methods which average over all windows, followed by the stepwise testing method combined with averaging over the EM confidence set.

The results for DGPs 9 and 13 emphasize that, with either HC or two-step inference, use of a post-break estimator can lead to a deterioration in forecast accuracy compared with full-sample OLS when a coefficient break occurs. However, averaging over the EM set combined with testing down effectively eliminates this deterioration. In these cases averaging using cross-validation weights or over all windows (Panel C) also performs very well, with the latter having the lowest relative MSFE across all methods considered. Many of the forecasts which are averaged over all windows include a substantial number of less volatile pre-break observations and their associated smaller forecast errors help to reduce overall forecast errors. On the other hand, these two averaging methods of Panel C perform substantially worse than methods that explicitly use coefficient break date inference in both DGPs 10 and 14, when coefficients change alongside a decline in the disturbance variance.
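As a rough illustration of why averaging over all windows draws on pre-break observations, the following minimal sketch averages one-step-ahead forecasts from the same model fitted over every admissible estimation window. It assumes an AR(1) forecast model, equal weights and a hypothetical minimum window length `min_window`; the function names are illustrative and are not taken from the authors' code.

```python
from statistics import mean


def ar1_ols(y):
    """OLS intercept and slope for y[t] = a + b * y[t-1] + u[t]."""
    x, z = y[:-1], y[1:]
    xbar, zbar = mean(x), mean(z)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sum((xi - xbar) * (zi - zbar) for xi, zi in zip(x, z)) / sxx
    return zbar - b * xbar, b


def forecast_from(y, start):
    """One-step-ahead forecast from an AR(1) fitted on y[start:]."""
    a, b = ar1_ols(y[start:])
    return a + b * y[-1]


def average_over_windows(y, min_window):
    """Equal-weight average of forecasts over all window start points.

    Every window ends at the last observation; the shortest window
    contains min_window observations (an assumption of this sketch).
    """
    starts = range(0, len(y) - min_window + 1)
    return mean(forecast_from(y, s) for s in starts)
```

Because every window ends at the forecast origin, early start points contribute forecasts estimated largely from pre-break data, which is the mechanism described in the paragraph above.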

The results just discussed shed new light on the importance of break inference for forecasting. In particular, the better inference properties of the EM confidence set compared with the BP confidence interval (Table 2) yield more accurate averaged forecasts in almost all DGPs in Table 3.
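The averaging idea can be sketched in a few lines. The example below assumes that a confidence interval or set for the break date has already been computed elsewhere and is passed in as a list of candidate dates; it does not implement the BP or EM procedures. It further assumes an AR(1) forecast model, equal weights, and the convention that a break date `tb` marks the first (0-based) observation of the post-break sample. All names are hypothetical.

```python
from statistics import mean


def ar1_ols(y):
    """OLS intercept and slope for y[t] = a + b * y[t-1] + u[t]."""
    x, z = y[:-1], y[1:]
    xbar, zbar = mean(x), mean(z)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sum((xi - xbar) * (zi - zbar) for xi, zi in zip(x, z)) / sxx
    return zbar - b * xbar, b


def post_break_forecast(y, tb):
    """One-step-ahead forecast using only observations from index tb on."""
    a, b = ar1_ols(y[tb:])
    return a + b * y[-1]


def average_over_break_set(y, break_dates, min_obs=4):
    """Equal-weight average of post-break forecasts over candidate dates.

    break_dates stands in for the dates contained in a confidence
    interval or set; min_obs is an assumed lower bound on the number of
    post-break observations needed for estimation.
    """
    forecasts = []
    for tb in break_dates:
        if len(y) - tb < min_obs:
            raise ValueError(f"too few observations after break date {tb}")
        forecasts.append(post_break_forecast(y, tb))
    return mean(forecasts)
```

A wider set spreads the average over more sub-samples, so a poorly estimated break date has less influence on the final forecast than it would under a single post-break window.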

4.2 Non-concurrent breaks

The forecast accuracy results in Table 3 represent a special case in which, when both occur, the coefficient and disturbance variance breaks are concurrent. However, in practice such breaks may not coincide and the two-step structural break testing method of Sect. 2.2 is designed to cope with this. To assess the impacts of different locations of a coefficient break point, Table 4 examines cases where this occurs earlier (at 0.25T) in the upper part of the table and later (at 0.75T) in the lower part, with any variance break applying at the sample mid-point; once again \(T=100\).Footnote 16 Results for DGPs 1–3 are excluded since these are unchanged from Table 3. Further, results for the large coefficient break cases of DGPs 5 and 7 are omitted, since the pattern of results carries over from Table 3. Although results are unchanged for DGPs 8 and 12, these are included to facilitate comparison with DGPs 9–10 and 13–14, respectively. To conserve space, methods that employ the BP interval are also excluded, as these are almost always inferior to those using the EM set, and stepwise coefficient testing is included only in combination with the EM set, as (in common with Table 3) this effectively dominates use of stepwise testing without averaging over possible break dates.

Despite coefficient and disturbance variance break dates not being concurrent, the results for the early coefficient break case in Panels A and B of Table 4 show broadly similar patterns to those in Table 3. In particular, two-step inference is beneficial when breaks are small or especially when the disturbance variance increases (DGPs 4, 6, 9, 13). However, unlike in Table 3, forecast accuracy also improves when using two-step over HC inference when the variance declines (DGPs 10 and 14). In circumstances when detection and dating of a coefficient break are difficult (DGPs 4, 6, 9, 11, 13), averaging over the EM confidence set reduces MSFE relative to using a point estimate of the break date and a post-break estimator. Nevertheless, even for DGPs 11–13, and in contrast to the corresponding cases in Table 3, there is generally little benefit here from using stepwise coefficient testing with the EM set, presumably because there is now a larger number of observations available after the true coefficient break date. Averaging using cross-validation weights performs well and yields the lowest MSFE across all methods when the breaks are small in DGPs 6 and 11, and also for the mean shift and variance increase case of DGP13.

When the true coefficient break date occurs relatively late in the sample (lower part of Table 4), the use of HC versus two-step inference has little impact on the MSFE values. As noted in Sect. 2, when \({\hat{T}}_{c}\ge {\hat{T}}_{v}\) and for a given coefficient break date estimate, the post-break estimator (3) uses OLS whether HC or two-step inference is employed. Since the true \(T_{c}>T_{v}\) here, less gain may be anticipated from the two-step method compared to the upper part where \(T_{c}<T_{v}\). Otherwise, the relative performances of the methods of Panels A and B are broadly similar to those for other coefficient break locations in Table 3 and the upper part of Table 4. However, the methods of Panel C are generally quite poor when the coefficient break occurs late in the sample. For the cross-validation methods, this is explained by the final 25% of the sample being reserved for a pseudo forecasting exercise, while averaging over all windows gives relatively less weight to the true post-break observations when the break occurs in the latter part of the sample.

Finally, Table 5 considers the case of a late (0.75T) variance break in combination with a mid-point coefficient break; hence in common with the upper part of Table 4, the DGPs of Table 5 have \(T_{c}<T_{v}\) when breaks in both components occur. Results are shown for the same methods as in Table 4, but for a different set of DGPs. In particular, DGPs 1, 4–8 and 11–12 have constant variance and hence have unchanged results from Table 3, and these results are not repeated. The results in Table 5 confirm the benefits of using two-step structural break over HC inference when the variance break (especially an increase) occurs after the coefficient break.Footnote 17 It is also noteworthy that averaging with cross-validation weights in Panel C also performs well when the disturbance variance increases, but less well in the presence of a variance decrease.

4.3 Larger sample size

The effects of a larger sample size on forecast performance are explored using \(T=200\) in Appendix Tables 9, 10 and 11, which show corresponding results to those of Tables 3, 4 and 5. Since break sizes are fixed across the sample sizes, it is not surprising that the larger sample improves estimation of the break date and hence there is relatively less gain from averaging over a confidence interval/set compared with using the post-break estimator. Nevertheless, gains typically apply when the EM confidence set is used and either there is no coefficient break (DGPs 1–3) or the variance increases alongside a coefficient break (DGPs 9 and 13), with very little or no loss of accuracy in other cases. It may also be noted that, for this larger sample size and a relatively parsimonious model, stepwise testing (either alone or in combination with averaging over a confidence interval or set) performs well only for pure intercept shifts when the variance is constant or increasing (DGPs 11–13).

It is also not surprising that the two-step method achieves less gain in forecast accuracy over the use of HC coefficient break inference for this larger sample size, with forecast gains particularly apparent for methods that rely on a point estimate of the coefficient break date (that is, the post-break, trade-off and stepwise coefficient testing methods) and when there is no parameter break of either type (DGP1) or the disturbance variance increases (DGPs 9 and 13). The only exception to this statement is for DGP9 in Appendix Table 10, where a coefficient break at 0.75T is combined with a variance break at the sample mid-point; in this case HC inference leads to better forecast performance. On the other hand, when the timing of the two breaks is reversed in Appendix Table 11, two-step inference leads to substantially more accurate forecasts for this DGP.

Finally, considering the methods of Panel C that do not use a break date estimator, while the cross-validation methods perform relatively well in relation to other methods in some cases, averaging over the EM confidence set provides more accurate forecasts overall.

4.4 Summary

The main findings from the simulations are as follows:

1. Although our context of possibly distinct coefficient and variance breaks differs from other studies (Pesaran and Timmermann 2004, 2007; Tian and Anderson 2014) our results underline their finding that forecast accuracy gains can be achieved over the use of a simple post-break coefficient estimator.

2. Averaging across potential break dates as in (4) typically improves forecast accuracy relative to methods based on a point estimate of the break date, including trade-off and stepwise coefficient testing methods. For this purpose, the EM confidence set performs better overall than the BP confidence interval, due essentially to the poorer coverage of the true break date (when there is one) and smaller length of the latter compared with the former.

3. Employing two-step rather than HC inference for detecting and dating a coefficient structural break generally reduces forecast errors in moderate or small samples when the coefficients are, in fact, constant over time or when the disturbance variance exhibits change (particularly an increase) at the same time as or at a period subsequent to any coefficient break. Further, even when it does not improve forecast accuracy, two-step inference leads to little or no accuracy deterioration, because two-step inference reduces over-rejections when no coefficient break occurs and also more often detects true breaks when the variance increases during the sample period.

4. When the disturbance variance is constant or increases over the sample period, attempting to pinpoint the nature of a coefficient break by testing down typically leads to improved forecast accuracy over treating all coefficients as changing. However, when either HC or two-step inference is used, benefits do not reliably accrue when the variance decreases, irrespective of whether this is adopted in conjunction with interval/set averaging.

5. Averaging using cross-validation weights (recommended by Pesaran and Timmermann (2007)) performs well relative to other methods in the presence of a disturbance variance increase, but relatively poorly when the variance decreases and is not suited to cases where a coefficient break occurs late in the sample period.

In summary, combining information from structural break tests and confidence intervals/sets can improve forecast accuracy, particularly in small samples.

5 Application to US productivity growth

In order to investigate how well our proposed methods work with observed data, we undertake a forecasting exercise for US labour productivity growth. The apparent slowdown of US productivity growth in the current century and its possibly changing dynamics are well documented in a number of studies (Syverson 2017; Jorgenson et al. 2008; Benati 2007; Hansen 2001), so it is of interest to see how well the range of methods we consider in Sect. 4 perform in a pseudo forecasting exercise for such series.

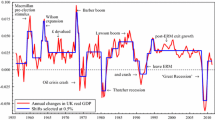

We analyse three measures of labour productivity growth (growth in real output per hour worked) published by the Bureau of Labor Statistics,Footnote 18 namely productivity in the non-farm business sector, manufacturing sector and manufacturing durable goods sector. Data are quarterly seasonally adjusted values for the percentage change at an annual rate. The non-farm business sector series covers the period between 1970Q1 and 2018Q4, with the other two series available from 1987Q1 to 2018Q4; see Fig. 1. The final 20 quarterly observations are used for evaluating out-of-sample forecasting performance. Although the selection of 20 quarters reflects the total sample sizes available, especially for the sectoral series, a robustness analysis is discussed below in relation to this choice.

Forecasts are based on a simple autoregressive model with a maximum lag length of five. Given this maximum, all possible combinations of lags are considered (allowing “gaps”) and a choice among these made using the Hannan–Quinn information criterion, with the final 20 observations excluded.Footnote 19 Although no lags are selected for non-farm business sector productivity,Footnote 20 an AR(1) forecast model is employed to allow possible dynamics,

The forecast model for the other series is selected as

Before turning to the forecasts, Table 6 reports the results of break inference (maximum one coefficient and one variance break) obtained by application of the two-step method of Sect. 2.2 to the full sample of data for the respective model (11) or (12), together with the unconditional mean and residual standard deviations in the implied regimes (that is, up to and subsequent to an estimated break). No coefficient break is detected in (total) non-farm business sector productivity growth, but a break is found in the residual variance at 1983Q2, which implies a substantial reduction. Previous analyses (Hansen 2001; Benati 2007) note that it can be difficult to detect a mean break in US labour productivity, possibly because any change is gradual, while a variance break at 1983Q2 is in line with many studies relating to the so-called Great Moderation for the US (McConnell and Perez-Quiros 2000; Summers 2005). Coefficient breaks are detected for the other series in Table 6, with large mean reductions; the EM confidence set in particular indicates substantial uncertainty about the coefficient break dates. A variance break for durable goods sector productivity growth is also detected, which may or may not be concurrent with the coefficient break date. Although not shown, analysis of the full sample data does not point to any break during our post-sample forecast period.Footnote 21

To conduct the forecasts, data to 2013Q4 are initially used for estimation and testing, with a one-step ahead forecast computed for 2014Q1. Structural break tests (for coefficients and, where appropriate, residual variances) allow a singleFootnote 22 break with trimming of \(\epsilon =10\%\). Data for 2014Q1 are added and forecasts for 2014Q2 are computed in the same way, and so on through the remaining period. Although repeated application of structural break tests may raise a multiple testing problem (Robbins 1970; Chu et al. 1996), generally the same conclusions are reached as to the existence or non-existence of breaks with the estimates of break dates remaining at the same temporal locations.
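The recursive scheme just described, and the relative MSFE used to compare methods, can be sketched as follows. The sketch treats any forecast method as a function that maps the data available at the forecast origin to a one-step-ahead forecast, so break testing and window selection would sit inside that function. The AR(1) full-sample forecaster and all names are illustrative assumptions, not the authors' code.

```python
from statistics import mean


def ar1_forecast(history):
    """Full-sample AR(1) one-step-ahead forecast (benchmark in this sketch)."""
    x, z = history[:-1], history[1:]
    xbar, zbar = mean(x), mean(z)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sum((xi - xbar) * (zi - zbar) for xi, zi in zip(x, z)) / sxx
    return (zbar - b * xbar) + b * history[-1]


def recursive_errors(y, n_eval, forecaster):
    """One-step-ahead forecast errors for the last n_eval observations.

    At each origin the forecaster sees only y[:origin]; the estimation
    sample then expands by one observation, as in the text.
    """
    errors = []
    for origin in range(len(y) - n_eval, len(y)):
        errors.append(y[origin] - forecaster(y[:origin]))
    return errors


def relative_msfe(errors, benchmark_errors):
    """MSFE of a method divided by the MSFE of the benchmark."""
    msfe = mean(e * e for e in errors)
    return msfe / mean(e * e for e in benchmark_errors)
```

A relative MSFE below one indicates that the method is more accurate than the benchmark over the evaluation period.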

Table 7 shows that no method exhibits a forecast accuracy gain over the full sample benchmark model for non-farm business sector productivity growth; this is in line with the simulation results of Sect. 4 when no coefficient break occurs, as indicated in Table 6 for this series. Although not always the case in the simulations when a variance decrease applies, the two-step procedure here leads to reduced forecast errors (measured by MSFE) compared to HC inference. Averaging using either the BP confidence interval or the EM set improves accuracy over the post-break estimator, with the EM set leading to more accurate forecasts. Again in line with Sect. 4, for both types of inference the relative MSFE is reduced to close to one by use of the EM set and stepwise testing; for HC inference, the reduction is about 15% compared with the post-break method. The trade-off method also reduces MSFE compared to the post-break estimator, but is inferior to averaging over the EM set with HC inference (as in the simulations), while cross-validation loses very little compared with the benchmark model.

Since large breaks apparently occur in the coefficients of the two manufacturing sector productivity series (Table 6), it is unsurprising that all methods perform better in Table 7 in forecasting these series than the full sample benchmark model, with this especially true for methods which use information about the estimated break date. Our confidence interval/set methods perform well for both series, with averaging using the EM set yielding the smallest MSFE value across all methods. HC and two-step procedures lead to almost identical results for manufacturing productivity growth, where there is apparently no variance break, except when the stepwise testing procedure is used in combination with averaging over a confidence interval or set. The situation where stepwise testing leads to poorer forecasts than averaging alone was also noted in our simulations and can be associated with the variance decrease. For the manufacturing durable goods series, which apparently experiences both coefficient and variance reductions, two-step inference leads to more accurate forecasts than does the use of HC inference across all methods. Indeed, the improvements here from two-step inference are more impressive than indicated for DGP14 in Sect. 4. Finally, the relatively poor performance of the methods in Panel C of Table 7 is unsurprising, since the coefficient break occurs towards the end of the estimation period.

The Diebold and Mariano (1995) test is used to check whether the differences in forecast accuracy between a given forecast method and the full sample benchmark model are statistically significant. Improvements are significant at 10% or less for most methods when analysing the manufacturing sector productivity series. Two-step inference generally delivers forecasts that significantly improve on the benchmark for the manufacturing durable goods sector model, but this is not the case when HC inference is employed. No statistical evidence is found against equal predictive accuracy for all methods against the benchmark for the non-farm business sector; this is unsurprising since there is apparently no coefficient break for this case (Table 6).
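For reference, a basic form of the Diebold and Mariano (1995) statistic is sketched below. It assumes squared-error loss and one-step-ahead forecasts, so the variance of the mean loss differential is estimated without an autocorrelation correction, and it uses the asymptotic standard normal distribution for a two-sided p-value. The text does not state which variance estimator or small-sample adjustment is used, so these choices are assumptions.

```python
import math
from statistics import mean


def diebold_mariano(errors_a, errors_b):
    """DM statistic and two-sided p-value for equal squared-error loss.

    errors_a and errors_b are one-step-ahead forecast errors from two
    methods over the same evaluation period. A negative statistic means
    method A has the smaller mean squared forecast error.
    """
    d = [ea ** 2 - eb ** 2 for ea, eb in zip(errors_a, errors_b)]
    n = len(d)
    dbar = mean(d)
    # Sample variance of the loss differential (lag-0 autocovariance only).
    gamma0 = sum((di - dbar) ** 2 for di in d) / n
    stat = dbar / math.sqrt(gamma0 / n)
    # Two-sided p-value from the standard normal distribution.
    pvalue = math.erfc(abs(stat) / math.sqrt(2.0))
    return stat, pvalue
```

With only 20 evaluation quarters, the normal approximation is rough, which is one reason such test results are best read alongside the MSFE comparisons themselves.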

Finally, to check the robustness of the results to the choice of the out-of-sample window, forecast accuracy measures are re-calculated for forecast samples of 15, 25 and 30 quarters. The results in Appendix Table 12 show that the good performance of the proposed confidence interval/set methods remains robust, yielding the smallest MSFE value for most forecast samples. Other results are also generally robust, except that (compared with Table 7) forecast accuracy relative to the benchmark deteriorates in the manufacturing and manufacturing durables series when the forecast window length is 30 quarters. This relates to the coefficient break detected in each case around 2010, leaving relatively few observations for estimation of the forecast models in some sub-samples.

6 Conclusion

This paper investigates the usefulness for forecasting of employing a wider range of information relating to structural break testing than implied by the use of a point estimate of the break date in the model’s coefficients. In particular, we propose using a forecast combination approach based on the confidence interval or confidence set for the estimated break date, thereby avoiding using a single and potentially poor break date estimate. In this context, we investigate the confidence interval associated with Bai and Perron (1998) and the confidence set of Eo and Morley (2015). Our simulation results show that the Eo and Morley (2015) set is particularly useful for this purpose and performs well relative to other methods, including those based on a point estimate of the break date (namely post-break and trade-off methods) and others that do not use any break information (cross-validation and averaging across all possible windows). Although testing whether breaks apply to individual coefficients can further improve forecast accuracy, it is not recommended that such a testing down procedure be used when the disturbance variance declines during the sample period.

A second issue related to inference that we examine concerns the treatment of possible breaks in the disturbance variance, comparing results based on heteroskedasticity consistent coefficient break tests with two-step inference and use of FGLS estimation. Our results show that two-step inference generally reduces forecast errors in moderate or small samples when the true coefficients are constant over time or when the variance exhibits change at the same time as or subsequent to a coefficient break. Further, when two-step inference does not lead to improved forecast accuracy, its use does not involve a substantive deterioration either.

An application to US productivity growth underlines the practical usefulness of the methods proposed in the paper for forecasting in the presence of structural breaks. Our analysis considers the situation where at most one structural break applies to each of the coefficients and the disturbance variance, with the two possible breaks not necessarily concurrent; in further work, we plan to examine situations where these characteristics may each be subject to multiple breaks.

Data and computer code availability

The data used in the empirical section of this study are openly available in FRED, Federal Reserve Bank of St. Louis; at https://fred.stlouisfed.org, reference number (PRS85006091; PRS30006092; PRS31006092). Computer code to obtain empirical and simulation results of the paper is available from either author upon reasonable request. The code is based on codes for the Bai and Perron (2003) and Eo and Morley (2015) procedures made available by Pierre Perron and James Morley, respectively, on their websites.

Notes

We assume pre-sample observations are available such that (1) can be applied for \(t=1\).

Preliminary investigation indicated that the forecast performance was little affected by the adoption of a 10% significance level.

For the case of multiple breaks, Tian and Anderson (2014) find that weighting forecasts according to the location of the window within the sample performs well. However, we do not include this as our interest focuses on single breaks.

Our initial investigations also included the form of cross-validation that uses estimated break date information, with results qualitatively similar to those shown.

Since lagged \(y_{t}\) is included as a regressor, a coefficient break in (1) implies a break in the regressor matrix one period later. Although the dates do not quite coincide, it is appropriate to allow for the distribution of regressors to change when testing for a coefficient break.

Although the results presented in the paper make the realistic assumption that neither the presence nor the date of any break is known, we also obtained results for known break dates. Footnotes below sometimes refer to these results, which can be obtained from the authors on request.

We also computed forecasts \({\widehat{y}}_{T+h}\) (\(h=2,3\)) by estimating the true (constant parameter) AR(1) model for \(x_{t}\) and using this in conjunction with the specification of the \(y_{t}\) model resulting from the procedures considered in Sect. 2 to obtain iterated multi-horizon forecasts. The MSFE results show the same patterns as those for one-step ahead and are available from the authors on request.

The initial seed is set for each DGP so that all forecasting methods are evaluated based on exactly the same sample data.

We thank a referee for this suggestion.

We thank a referee for prompting us to this discussion and additional results.

Note, however, that Pesaran and Timmermann (2007) examine only concurrent coefficient and variance breaks and hence do not consider HC or two-step inference.

Even with known break dates, the relative MSFE values for DGP9 are close to, and sometimes a little larger than, unity; the relative MSFE for the post-break estimator is then 0.980. Similar comments apply for DGP13, where the relative MSFE for the post-break estimator with known break dates is 1.016.

A similar pattern of results applies when the break dates are known, with the use of all two-step (FGLS) methods improving on the use of HC inference.

Data are retrieved from FRED, Federal Reserve Bank of St. Louis; https://fred.stlouisfed.org/series.

We also considered re-selecting models as each observation is added during the pseudo forecast period. However, the selected models remain unaltered, except that the last (fourth) lag is dropped for the manufacturing durable goods sector for some estimation samples.

Akaike, Schwarz and Hannan–Quinn information criteria also selected no lags.

This was confirmed by applying the Bai and Perron (1998) multiple coefficient breaks test procedure with HC inference over the full sample, allowing a maximum of 3 breaks.

We also experimented by allowing a maximum of three structural breaks, but found no additional breaks except for one additional variance break in each of the manufacturing and durable goods productivity series. Allowing for these was found to make no substantive change in the forecast errors.

References

Altansukh G, Becker R, Bratsiotis G, Osborn DR (2017) What is the globalisation of inflation? J Econ Dyn Control 74:1–27

Bai J (1997) Estimation of a change point in multiple regression models. Rev Econ Stat 79:551–563

Bai J, Perron P (1998) Estimating and testing linear models with multiple structural changes. Econometrica 66:47–78

Bai J, Perron P (2006) Multiple structural change models: a simulation analysis. In: Corbae D, Durlauf S, Hansen BE (eds) Econometric theory and practice: frontiers of analysis and applied research. Cambridge University Press, Cambridge, pp 212–237

Bataa E, Osborn DR, Sensier M, van Dijk D (2013) Structural breaks in the international dynamics of inflation. Rev Econ Stat 95:646–659

Benati L (2007) Drift and breaks in labor productivity. J Econ Dyn Control 31:2847–2877

Boot T, Pick A (2020) Does modeling a structural break improve forecast accuracy? J Econom 215:35–59

Chang SY, Perron P (2018) A comparison of alternative methods to construct confidence intervals for the estimate of a break date in linear regression models. Econom Rev 37:577–601. https://doi.org/10.1080/07474938.2015.1122142

Chu C-SJ, Stinchcombe M, White H (1996) Monitoring structural change. Econometrica 64:1045–1065

Clark TE, McCracken MW (2005) The power of tests of predictive ability in the presence of structural breaks. J Econom 124:1–31

Davidian M, Carroll RJ (1987) Variance function estimation. J Am Stat Assoc 82:1079–1091

Diebold FX, Mariano RS (1995) Comparing predictive accuracy. J Bus Econ Stat 13:253–263

Eklund J, Kapetanios G, Price S (2013) Robust forecast methods and monitoring during structural change. Manch Sch 81:3–27

Elliott G (2005) Forecasting when there is a single break. Manuscript. University of California, San Diego

Elliott G, Müller UK (2007) Confidence sets for the date of a single break in linear time series regressions. J Econom 141:1196–1218

Eo Y, Morley J (2015) Likelihood-ratio-based confidence sets for the timing of structural breaks. Quant Econ 6:463–497

Hansen BE (2001) The new econometrics of structural change: dating breaks in U.S. labor productivity. J Econ Perspect 15:117–128

Hendry DF (2000) On detectable and non-detectable structural change. Struct Chang Econ Dyn 11:45–65

Hendry DF, Clements MP (2003) Economic forecasting: some lessons from recent research. Econ Model 20:301–329

Inoue A, Jin L, Rossi B (2017) Rolling window selection for out-of-sample forecasting with time-varying parameters. J Econom 196:55–67

Jorgenson DW, Ho MS, Stiroh KJ (2008) A retrospective look at the U.S. productivity growth resurgence. J Econ Perspect 22:3–24

Koo B, Seo MH (2015) Structural-break models under mis-specification: implications for forecasting. J Econom 188:166–181

McConnell MM, Perez-Quiros G (2000) Output fluctuations in the United States: what has changed since the early 1980s? Am Econ Rev 90:1464–1476

Paye BS, Timmermann A (2006) Instability of return prediction models. J Empir Financ 13:274–315

Pesaran MH, Pick A (2011) Forecast combination across estimation windows. J Bus Econ Stat 29:307–318

Pesaran MH, Timmermann A (2004) How costly is it to ignore breaks when forecasting the direction of a time series? Int J Forecast 20:411–425

Pesaran MH, Timmermann A (2005) Small sample properties of forecasts from autoregressive models under structural breaks. J Econom 129:183–217

Pesaran MH, Timmermann A (2007) Selection of estimation window in the presence of breaks. J Econom 137:134–161

Pesaran MH, Pick A, Pranovich M (2013) Optimal forecasts in the presence of structural breaks. J Econom 177:134–152

Pitarakis J-Y (2004) Least squares estimation and tests of breaks in mean and variance under misspecification. Econom J 7:32–54

Robbins H (1970) Statistical methods related to the law of the iterated logarithm. Ann Math Stat 41:1397–1409

Stock JH, Watson MW (1996) Evidence on structural instability in macroeconomic time series relations. J Bus Econ Stat 14:11–30

Summers PM (2005) What caused the great moderation? Some cross-country evidence. Econ Rev Federal Reserve Bank Kansas City 90:5–32

Syverson C (2017) Challenges to mismeasurement explanations for the US productivity slowdown. J Econ Perspect 31:165–186

Tian J, Anderson HM (2014) Forecast combinations under structural break uncertainty. Int J Forecast 30:161–175

Acknowledgements

The authors would like to thank Heather Anderson, Jing Tian and the editor and referees of this journal for their helpful comments on an earlier version of the paper. We also thank Ralf Becker for his many comments at all stages of this work. However, the authors take full responsibility for any errors or omissions.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funding

No funding was received for conducting this study.

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Appendices

Appendix A: Other forecast methods

1.1 Cross-validation

The cross-validation approach proposed by Pesaran and Timmermann (2007) considers all possible estimation windows of different lengths and chooses the single window that achieves the smallest pseudo out-of-sample forecast error. Specifically, among the possible starting points \(m\) for the estimation window, the one that generates the smallest MSFE is selected as

where \({{\hat{\beta }}}_{m:t}\) is the OLS estimate based on the observation window \(\left[ m:t\right] \) and \(m\in \{1,\ldots ,T-{\tilde{w}}-w\}\), with \(w\) the minimum estimation window and the last \({\tilde{w}}\) observations reserved for the pseudo out-of-sample evaluation. The forecast model uses \({{\hat{\beta }}}_{m^{*}:T}^{\prime }\) estimated over the sample \([m^{*}:T]\). We use \(w=0.1T\) and \({\tilde{w}}=0.25T\).
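The selection rule can be illustrated with a minimal sketch. It assumes a linear model in which row `t` of `X` holds the predictors for `y[t]`, one-step-ahead forecasts, and an MSFE computed as the average squared forecast error over the last \({\tilde{w}}\) observations. The function names (`ols`, `cv_window_start`) and the 0-based indexing are illustrative choices, not taken from the paper.

```python
import numpy as np


def ols(X, y):
    """OLS coefficients for y = X @ beta + error."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def cv_window_start(X, y, w_min, w_tilde):
    """Return (m_star, msfe) for the window start with the smallest
    pseudo out-of-sample MSFE.

    Assumption (not from the paper): y[t] is forecast by X[t] @ beta,
    where beta is estimated on observations m, ..., t-1.
    Indices are 0-based, so m runs over 0, ..., T - w_tilde - w_min - 1.
    """
    T = len(y)
    best_m, best_msfe = None, np.inf
    for m in range(T - w_tilde - w_min):
        errors = []
        # The last w_tilde observations are reserved for evaluation.
        for t in range(T - w_tilde, T):
            beta = ols(X[m:t], y[m:t])
            errors.append(y[t] - X[t] @ beta)
        msfe = float(np.mean(np.square(errors)))
        if msfe < best_msfe:
            best_m, best_msfe = m, msfe
    return best_m, best_msfe
```

Once `m_star` is found, the forecasting coefficients would be re-estimated on `X[m_star:]` and `y[m_star:]`, mirroring the use of the sample \([m^{*}:T]\) described above. With \(w=0.1T\) and \({\tilde{w}}=0.25T\), the call would be `cv_window_start(X, y, int(0.1 * T), int(0.25 * T))`.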

1.2 Trade-off

This method trades off bias against forecast error variance by selecting \( v_{1}\) to minimize (Pesaran and Timmermann 2007)

where \(\mu =({\hat{\beta }}_{2}-{\hat{\beta }}_{1})/{\hat{\sigma }}_{2}\), \(\psi =({\hat{\sigma }}_{1}^{2}-{\hat{\sigma }}_{2}^{2})/{\hat{\sigma }}_{2}^{2}\), \(\lambda =v_{1}/v\) and \(v=v_{1}+v_{2}\), with \(v_{1}\) and \(v_{2}\) the number of pre- and post-break observations, respectively, \({\hat{\beta }}_{i}\) and \({\hat{\sigma }}_{i}^{2}\) (\(i=1,2\)) the respective coefficient and variance estimates, and

With \(v_{1}\) selected to minimize this function, the coefficient vector used in forecasting is \({{\hat{\beta }}}_{{\hat{T}}_{c}-v_{1}+1:T}\), estimated over the sample \([{\hat{T}}_{c}-v_{1}+1:T]\).

1.3 Forecast combination over estimation samples

Forecasts from the same model estimated over windows of different sizes are averaged to generate a single forecast for \(T+1\) (Pesaran and Timmermann 2007; Pesaran and Pick 2011). Specifically, in our notation and using equal weights,

Using weights proportional to the inverse of the associated pseudo out-of-sample MSFE values (Pesaran and Timmermann 2007), the cross-validation weighted average is
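Both combination schemes can be sketched in a few lines. The sketch assumes one forecast per estimation window \([m:T]\), with every window containing at least `w_min` observations, and weights that sum to one. The helper names (`window_forecasts`, `combine`) and the set of windows used are illustrative assumptions rather than the paper's exact definitions.

```python
import numpy as np


def window_forecasts(X, y, x_next, w_min):
    """Forecast the next observation from each estimation window [m:T].

    Assumption (not from the paper): every start m that leaves at least
    w_min observations is used, with 0-based indexing.
    """
    T = len(y)
    starts = np.arange(T - w_min + 1)
    forecasts = np.array([
        x_next @ np.linalg.lstsq(X[m:], y[m:], rcond=None)[0]
        for m in starts
    ])
    return starts, forecasts


def combine(forecasts, msfe=None):
    """Average forecasts with equal weights, or with weights
    proportional to 1 / MSFE when msfe is supplied."""
    forecasts = np.asarray(forecasts, dtype=float)
    if msfe is None:
        weights = np.full(forecasts.shape, 1.0 / forecasts.size)
    else:
        inverse = 1.0 / np.asarray(msfe, dtype=float)
        weights = inverse / inverse.sum()
    return float(weights @ forecasts)
```

Calling `combine(forecasts)` gives the equal-weight average, while `combine(forecasts, msfe)` gives less weight to windows with larger pseudo out-of-sample errors. The `msfe` values must be computed for the same windows, in the same order, as the forecasts being combined.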

Appendix B: MSFE ratios, no disturbance in the forecast period

See Table 8.

Appendix C: Larger sample performance tables

See Table 9.

About this article

Cite this article

Altansukh, G., Osborn, D.R. Using structural break inference for forecasting time series. Empir Econ 63, 1–41 (2022). https://doi.org/10.1007/s00181-021-02137-w

DOI: https://doi.org/10.1007/s00181-021-02137-w