Abstract

In this article, we analyze the growth pattern of COVID-19 pandemic in India from March 4 to July 11 using regression analysis (exponential and polynomial), auto-regressive integrated moving averages (ARIMA) model as well as exponential smoothing and Holt–Winters models. We found that the growth of COVID-19 cases follows a power regime of \(({t}^{2}, t,...)\) after the exponential growth. We found the optimal change points from where the COVID-19 cases shifted their course of growth from exponential to quadratic and then from quadratic to linear. After that, we saw a sudden spike in the course of the spread of COVID-19 and the growth moved from linear to quadratic and then to quartic, which is alarming. We have also found the best fitted regression models using the various criteria, such as significant p-values, coefficients of determination and ANOVA, etc. Further, we search the best-fitting ARIMA model for the data using the AIC (Akaike Information Criterion) and provide the forecast of COVID-19 cases for future days. We also use usual exponential smoothing and Holt–Winters models for forecasting purpose. We further found that the ARIMA (5, 2, 5) model is the best-fitting model for COVID-19 cases in India.

Similar content being viewed by others

Introduction

The COVID-19 pandemic has created a lot of havoc in the world. It is caused by a virus called SARS-CoV-2, which comes from the family of coronaviruses and is believed to be originated from the unhygienic wet seafood market in Wuhan, China but it has now infected around 215 countries of the world. With more than 13.2 million people affected around the world and more than 575,000 deaths (As of July 14, 2020), it has forced people to stay in their homes and has caused huge devastation in the world economy (Singh and Singh 2020; Ministry of Health and Family Welfare 2020; Gupta et al. 2019).

In India, the first case of COVID-19 was reported on 30th January, which was linked to the Wuhan city of China (as the patient has travel history to the city). On 4th March, India saw a sudden hike in the number of cases and since then, the numbers are increasing day by day. As of 14th July, India has more than 908,000 cases with more than 23,000 deaths and is world’s 3rd most infected country (https://www.worldometers.info/coronavirus/).

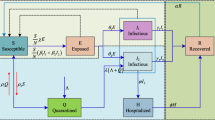

Since the outbreak of the pandemic, scientists across the world have been indulged in the studies regarding the spread of the virus. Lin et al. (2020) suggested the use of the SEIR (Susceptible– Exposed–Infectious–Removed) model for the spread in China and studied the importance of government-implemented restrictions on containing the infection. As the disease grew further, Ivorra et al. (2019) suggested a θ-SEIHRD model that took into account various special features of the disease. It also included asymptomatic cases into account (around 51%) to forecast the total cases in China (around 168,500). Giordano et al. (2003) also suggested an extended SIR model called SIDHARTHE model for cases in Italy which was more customized for COVID-19 to effectively model the course of the pandemic to help plan a better control strategy.

Petropoulos and Makridakis (2020) suggested the use of exponential smoothing method to model the trend of the virus, globally. Kumar et al. (2020) gave a review on the various aspects of modern technology used to fight against COVID-19 crisis.

Apart from the epidemiological models, various data-oriented models were also suggested to model the cases and predict future cases for various disease outbreaks from time to time. Various time-series models were also suggested to model the cases and predict future cases. ARIMA and Seasonal ARIMA models are widely used by researchers to model and predict the cases of various outbreaks. In 2005, Earnest et al. (2005) conducted a research to model and predict the cases of SARS in Singapore and predict the hospital supplies needed using this model. Gaudart et al. (2009) modelled malaria incidence in the Savannah area of Mali using ARIMA. Zhang et al. (2013) compared Seasonal ARIMA model with three other time-series models to compare Typhoid fever incidence in China. Polwiang (2020) also used this model to determine the time-series pattern of Dengue fever in Bangkok.

For COVID-19 as well, various researchers tried to model the cases through ARIMA. Ceylan (2020) suggested the use of Auto-Regressive Integrated Moving Average (ARIMA) model to develop and predict the epidemiological trend of COVID-19 for better allocation of resources and proper containment of the virus in Italy, Spain and France. Chintalapudi (2020) suggested its use for predicting the number of cases and deaths post 60-days lockdown in Italy. Fanelli and Francesco (2020) analyzed the dynamics of COVID-19 in China, Italy and France using iterative time-lag maps. It further used SIRD model to model and predict the cases and deaths in these countries. Zhang et al. (2020) developed a segmented Poisson model to analyze the daily new cases of six countries to find a peak point in the cases.

Since the spread of the virus started to grow in India, various measures were taken by the Indian Government to contain it. A nationwide lockdown was announced on March 25 to April 14, which was later extended to May 3. The whole country was divided into containment zones (where large number of cases were observed from a relatively smaller region), red zones (districts where risk of transmission was high and had higher doubling rates), green zones (districts with no confirmed case from last 21 days) and orange zones (which did not fall into the above three zones). After the further extension of the lockdown till May 17, various economic activities were allowed to start (with high surveillance) in areas of less transmission. Further, the lockdown was extended to May 31 and some more economic activities have been allowed as per the transmission rates, which are the rates at which infectious cases cause new cases in the population, i.e. the rate of spread of the disease. This was further extended to June 8, with very less rules and especially the states were given the responsibility of setting the lockdown rules. The air and rail transport became open for general public. Post June 8, we see that the restrictions are nominal with even shopping malls and religious places open for general public. Now, the responsibility of imposing restrictions lies with the respective State Governments.

On the other hand, Indian scientists and researchers are also working on addressing the issues arising from the pandemic, including production of PPE kits and test kits as well as studying the behaviour of spread of the disease and other aspects of management. Various mathematical and statistical methods have been used for predicting the possible spread of COVID-19. The classical epidemiological models (SIR, SEIR, SIQR etc.) suggested the increasing trend of the virus and predicted the peaks of the pandemic. Early researches showed the pandemic to reach its peak by mid-May. They also showed that the basic reproduction number (R0) and the doubling rates are lower in India, with comparison to European nations and the USA. A tree-based model was proposed by Arti and Bhatnagar (2020) and Bhatnagar (2020) to study and predict the trends. They suggest that lockdown and social distancing in India have played a significant role to control the infection rates. But now, as the lockdown restrictions are minimal, the cases in India are growing at an alarming high rate. Chatterjee et al. (2020) suggest growth of the pandemic through power law and its saturation at the later stages. Due to the complexities in the epidemic models of COVID-19, various researchers have been focusing on the data to forecast the future cases. Chatterjee et al. (2020), Verma et al. (2020) and Ziff and Ziff (2020) suggest that after exponential growth, the total count follows a power regime of t3, t2, t and \(\sqrt{\mathrm{t}}\) before flattening out, where ‘t’ refers to time. It can, therefore, be realized that there is an urgent need to model and forecast the growth of COVID-19 in India as the virus is in the growing stage here.

In India, the most affected states are Maharashtra with over 260,000 cases (as of 14 July 2020), Tamil Nadu (around 142,000 cases), Delhi (around 113,000 cases) and Gujarat (around 42,000 cases). The greatest number of cases per million has been seen in the national capital of Delhi (5740 cases per million) (Refer https://nhm.gov.in/New_Updates_2018/Report_Population_Projection_2019.pdf for population estimates). Many states and union territories like, Kerala, Karnataka, Andaman and Nicobar Islands, Daman and Diu, etc. which had recovered from majority of the cases have experienced a second wave of infections. This might be attributed to decreased travel restrictions and minimal lockdown measures. In their research, Singh and Jadaun (2020) studied the significance of lockdown in India and suggested that the new COVID-19 cases would stop by the end of August in India with around 350,000 total cases. While some states may see an early stopping of new cases, such as Telangana (mid-June), Uttar Pradesh and West Bengal (July end) etc., the badly affected states of Maharashtra, Tamil Nadu and Gujarat will achieve this by August end.

Since a proven vaccine and medication is yet to be developed by the researchers then in such a scenario, modelling the present situation and forecasting the future outcome becomes crucially important to utilize our resources in the most optimal way. Therefore, the article aims to study the growth curve of COVID-19 cases in India and forecast its future course. Since the disease is still in its growing age and very dynamic in nature, no model can guarantee for perfect validity for future. We, therefore, need to develop the understanding of the present situation of the pandemic.

In this article, we first study the growth curve using regression methods (exponential, linear and polynomial etc.) and propose an optimal model for fitting the cases till July 10. Further, we propose the use of time-series models for forecasting the future observations on COVID-19 cases. Here, we reach the best-fitted ARIMA model for forecasting the COVID-19 cases. We also compare these results with Exponential Smoothing (Holt–Winters) model. This study will help us to understand the course of spread of SARS-CoV-2 in India better and help the government and the people to optimally use the resources available to them.

Statistical Methodologies

In this section, we briefly present the statistical techniques used for analyzing the COVID-19 cases in India. Here, we used usual regression (exponential, polynomial), times-series (ARIMA) and exponential smoothing models.

Exponential–Polynomial Regression

Regression is a statistical technique that attempts to estimate the strength and nature of relationship between a dependent variable and a series of independent variables. Regression analyses may be linear and non-linear. A regression is called linear when it is linear in parameters, e.g. \(y={\beta }_{0}+{\beta }_{1}t+\in\) and \(y={\beta }_{0}+{\beta }_{1}t+{\beta }_{2}{t}^{2}+{\beta }_{3}{t}^{3}+\in\), \(\in \sim N\left(0,{\sigma }^{2}\right),\) where \(y\) is response variable, \(t\) denotes the indepenet variable, \({\beta }_{0}\) is the intercept and other βs are known as slopes.

A non-linear regression is a regression when it is non-linear in its parameters, e.g. \(y={\theta }_{1}{e}^{{\theta }_{2}x}+\epsilon .\) In the beginning of the spread of a disease, we see that the new cases are directly proportional to the existing infected cases and may be represented by \(\frac{\mathrm{d}y(t)}{\mathrm{d}t}=ky(t)\), where \(k\) is the proportionality constant. Solving this differential equation, we get that, at the beginning of a pandemic,

Thus, at the beginning of a disease, the growth curve of the cases grows exponentially.

As the disease spreads in a region, governments start to take action and people start becoming conscious about the disease. Thus, after some time, the disease starts to follow a polynomial growth rather than continuing to grow exponentially.

In order to fit an exponential regression to our data, we linearize the equation by taking the natural logarithm of the equation and convert it to a linear regression in first order.

We estimate the parameters of a linear regression of order \(p\) as follows:

Let the model of linear regression of order \(p\) be: \({y}_{i}={\beta }_{0}+\sum_{j=}^{p}{x}_{i}^{j}+\in\) with \(\in \sim N\left(0,{\sigma }^{2}\right)\) and \(i=\mathrm{1,2},.., N\). Let \(E=\sum_{i=1}^{N}{\left\{{y}_{i}-{\beta }_{0}-\sum_{j=}^{p}{\beta }_{j}{x}_{i}^{j}\right\}}^{2}\) represent the residual sum of square (RSS).

We get the best estimates of these coefficients by solving the following normal equations: \(\frac{\partial \mathrm{E}}{\partial {\beta }_{0}}=0\), \(\frac{\partial \mathrm{E}}{\partial {\beta }_{1}}=0\),…,\(\frac{\partial \mathrm{E}}{\partial {\beta }_{p}}=0,\) which minimizes RSS. This technique is referred to as the ordinary least squares (OLS). We will use this technique of the OLS to estimate the coefficients of our proposed model. (Refer Montgomery et al. (2012).

Since we know that the growth curve of the disease changes after some time point, exponential to polynomial, we propose to use the following joint regression model with change point \(\mu ,\)

where we take \({f}_{1}\left(t\right)={\theta }_{1}{e}^{{\theta }_{2}t}\), \({f}_{2}\left(t\right)={\beta }_{0}+{\beta }_{1}t+{\beta }_{2}{t}^{2}+\dots +{\beta }_{p}{t}^{p}+\in ,\) \(\in \sim N(0,{\sigma }^{2})\) and \(p\) is the order of the polynomial regression model and \(t\) stands for the time (an independent variable).

During the analysis, we found that a suitable choice of \({f}_{2}\left(t\right)\) is a quadratic or a cubic model. Once the order of the polynomial is kept fixed, an optimum value of the change point can be obtained by minimizing the residuals/errors. We can obtain the OLS estimates of the parameters of the model (1) as given below:

The least square estimates (LSEs) of the parameters, \(\Theta =\left\{{\theta }_{1},{\theta }_{2},\mu , {\beta }_{0},{\beta }_{1},{\beta }_{2},{\beta }_{3},\dots \dots ,{\beta }_{p}\right\}\) can be obtained by minimizing the residual sum of squares (RSS) as given by:

where \({\widehat{y}}_{i}^{exp}\) and \({\widehat{y}}_{i}^{poly}\) are the estimates value of \({y}_{i}\) from the exponential and polynomial regression models, respectively, and \(N\) is the size of the dataset.

The LSEs of \(\Theta =\left\{{\theta }_{1},{\theta }_{2},\mu , {\beta }_{0},{\beta }_{1},{\beta }_{2},{\beta }_{3},\dots \dots ,{\beta }_{p}\right\}\) can be obtained as the simultaneous solution of the following normal equations, \(\frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\theta }_{1}}=0, \frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\theta }_{2}}=0,\frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial \mu }=0, \frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\beta }_{0}}=0, \frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\beta }_{1}}=0\), \(\frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\beta }_{2}}=0\), \(\frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\beta }_{3}}=0,\dots \dots , \frac{\partial \mathrm{RSS}\left(\Theta \right)}{\partial {\beta }_{p}}=0.\) Solution to these equations is difficult since the parameter \(\mu\) is decenter time point.

We suggest to use the following algorithm while \(\mu\) is kept fixed.

Algorithm 1

In order to find the optimal value of µ, i.e. the turning point between the exponential and polynomial growth, we will use the technique of minimizing the residual sum squares in “Analysis of COVID-19 Cases in India”.

We will use MAPE (Mean Absolute Percentage Error) to evaluate the performance of the mode.

where \({y}_{t}\) is the observed value at time point \(t\) and \({\widehat{y}}_{t}\) is an estimate of \({y}_{t}.\)

In order to make the results easy to interpret, we will also use Accuracy (%).

ARIMA Model

The Auto-Regressive Integrated Moving Averages method gauges the strength of one dependent variable relative to other changing variables. It is one of the most used time-series models in diverse fields of data analysis as it takes into account the changing of trends, periodic changes as well as random disturbances in the time-series data. It is used for both better understanding of the data as well as forecasting, see Brockwell et al. (1996).

Autoregressive model (AR) is effectively merged with the Moving Averages model (MA) to formulate a useful time-series model, ARIMA model. The Autoregression (AR) element of the model shows a changing variable that regresses on its own prior values and the Moving Average (MA) element incorporates the dependency between an observation and a residual error from a moving average model applied to prior observations. However, this model can only be applied to stationary data. Since many real-life datasets consist of an element of non-stationarity, to model such datasets, ARIMA model was developed. This model is open for non-stationary data as the Integrated (I) factor of the model represents the differencing of raw observations to allow the time-series to become stationary.

Here, we may refer the reader to follow Box et al. (2008, 2015) for more details on ARIMA model, estimation and its application.

The general forms of \(AR (p)\) and \(MA (q)\) models can be, respectively, represented as the following equations:

where \({\varnothing }\) s and θs are auto-regressive and moving averages parameters, respectively, \({Y}_{t}\) represents value of time-series at time point \(t\), \({\varepsilon }_{t}\) represents the random disturbance at time point t and is assumed to be independently and identically distributed (i.i.d.) with mean 0 and variance \({\sigma }^{2}.\)

The \(\mathrm{ARMA} (p, q)\) model can be represented as:

where α is an intercept.

The differenced stationary time-series can be modelled as an ARMA model to use ARIMA model on the time-series data (Ceylan 2020; He and Tao 2018; Manikandan et al. 2016). The ARIMA model is generally denoted as \(\mathrm{ARIMA} (p, d, q)\) where, \(p\) is the order of auto-regression, \(d\) is the degree of difference and \(q\) is the order of moving average.

The degree of difference, i.e. \(d\) is a transformation (operator) that is used to make the time-series stationary as it removes the increasing trends. A higher value of \(d\) indicates positive autocorrelations out to a high number of lags.

The first step to model the time-series by ARIMA is to determine the time-series data for stationarity. The Augmented Dickey–Fuller (ADF) test may be applied to determine if the time series after differencing is stationary or not. The ADF test is applied to test the null hypothesis for the presence of a unit root (which indicates non-stationarity of the series).

In order to deduce the \(ARIMA (p, d, q)\) model, we can proceed as follows:

We have the \(ARMA (p^\prime, q)\) represented as follows (as per Eq. 5)

It can be equivalently written as:

where L is the lag operator, such that- \({L}^{a}\left({Y}_{t}\right)={Y}_{t-a}\).

Now, assume that the polynomial \((1-\sum_{i=1}^{{p}^{^{\prime}}}{\varnothing }_{i}{L}^{i})\) has a unit root (i.e. a factor of \((1-L)\)) of multiplicity d. Then, Eq. (6) can be re-written as:

Or,

This can be generalized as:

This defines an \(\mathrm{ARIMA} (p,d,q)\) process with drift \(\frac{\delta }{1-\sum {\varphi }_{i}}\).

The second step is to plot the graphs of the Autocorrelation function (ACF) and the Partial Autocorrelation Function (PACF) to determine the most-likely values of \(p\) and \(q\).

The final step is to obtain the optimal values of \(p\), \(d\) and \(q\) using the AIC (Akaike Information Criterion), for more details see https://en.wikipedia.org/wiki/Akaike_information_criterion. These information criteria may be used for selecting the best-fitted models. Lower the values of criteria, higher will be its relative quality. The AIC is given by:

where K is the number of model parameters, \(\mathcal{L~}\mathrm{~ is ~the~ maximized~ value~ of~ log}-\mathrm{~likelihood~ function}\).

Exponential Smoothing

Exponential smoothing is one of the simple techniques to model time-series data where the past observations are assigned weights that are exponentially decreasing over time. We propose the following models, for modelling of COVID-19 cases [see Holt (1957) and Winters (1960)].

For single exponential smoothing, let the raw observations be denoted by \(\{{\mathrm{y}}_{t}\}\) and \(\{{\mathrm{s}}_{t}\}\) denote the best estimate of trend at time \(\mathrm{t}.\) Then, \({s}_{0}={y}_{0}\), \({s}_{t}=\alpha {y}_{t}+\left(1-\alpha \right)\left({s}_{t-1}\right)\), where \(\alpha \in \left(\mathrm{0,1}\right)\) denotes the data smoothing factor.

For double exponential (Holt–Winters) smoothing, let the raw observations be denoted by \(\{{\mathrm{y}}_{t}\}\), smoothened values \(\{{\mathrm{s}}_{t}\}\), and \(\{{\mathrm{b}}_{t}\}\) denotes the best estimate of trend at time \({t}\). Then,

where \(\alpha \in \left(\mathrm{0,1}\right)\) denotes the data smoothing factor and \(\beta \in \left(\mathrm{0,1}\right)\) denotes the trend smoothing factor. For the forecast at \(t=(N+m)\) days, (\({F}_{N+m}\)) is calculated by

Analysis of COVID-19 Cases in India

For this study, we have used the data available at GitHub, provided by Centre for Systems Science and Engineering (CSSE) at John Hopkins University (see https://github.com/CSSEGISandData/COVID-19/blob/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_confirmed_global.csv). For this study, we use R software. (see R Core Team 2020).

Exponential–Polynomial Regressions

We have used the data from March 4 to July 11 for continuity of the data.

We know that at the beginning of the spread of the disease in India, the growth was exponential and after some time, it was shifted to polynomial. We first obtain optimum turning point of the growth, i.e. when did the growth rate of the disease shifted to polynomial regime from the exponential. We consider both quadratic and cubic regression model for second part of the data. We will also discuss the types of polynomial growth (with their equations) in India.

In order to find the turning point of the growth curve, we follow the Algorithm 1, given in the previous section. Using that, we evaluate the RSS for all the days (from March 4) and find the date on which it is minimum. The change points of growth curve for cubic and quadratic regressions are presented in Fig. 1 depending upon the size of the data set. From Fig. 1, we can confirm that the growth rate of COVID-19 cases was exponential till April 5 and then after it follows the polynomial growth regime while we use the COVID-19 cases till July 11 (Table 1).

We call the region of exponential growth in India as Region I. The coefficients of the model are presented in Table 2.

We see that after the exponential regime (till April 5), the growth curve follows a polynomial growth till May 2. After this, we again see a change in the behavior of the growth curve. In Tables 3, 4, 5 and 6, we try to model these growth curves through regression analysis.

Having evaluated the coefficients for various models (i.e. linear, quadratic and cubic) as well as the important statistics (i.e. R2 values, p values of the models as well as individual coefficients and F-statistic), we will select the best-fitting models. In order to select the best-fitting models for Region II (April 6 to May 2); III (May 3 to May 15), IV (May 16 to May 31) and V (June 1 to July 11), we have the following steps. We select that model which has high R2 values, significant p value, high F-statistic and where the p values of all the variables are significant.

We see for Region II, from Table 3, that the linear model is having a relatively lower F-statistic and R2 values in comparison to the Quadratic and Cubic models. So, we eliminate the possibility of linear fitting. Further, we see that the p values, F-statistics and the R2 values are quite significant in both Quadratic as well as the Cubic models. But, if we look at the individual p values of the coefficients, we see that the individual p values are not significant for the Cubic model. On the other hand, the individual p values are significant for the Quadratic model. Thus, we can conclude that the Quadratic model is the best-fitting model for Region II (April 6 to May 2).

For Region III, from Table 4, that all the three models have high F-statistic values, high p values and high R2 values. But we notice that the coefficient individual p values are not significant in both Quadratic and Cubic models. Thus, we conclude that the Linear model is the best-fitting model for Region III (May 3 to May 15).

For Region IV, from Table 5, we see that the R2 values for all the models are very high. All the models also have significant p values. The F-statistic of both Quadratic and Cubic models are also high. But, the coefficient individual p values are not significant in the Cubic model. Thus, we conclude that the Quadratic model is the best-fitting model for Region IV (May 16 to May 31).

For Region V, from Table 6, we see that the R2 values of all the models are very high (quadratic, cubic, quartic and quintic models have exceptionally high). All the models also have significant p-values. The F-statistic of Quadratic, Cubic, Quartic and Quintic models is high. F-statistic value of Quartic model is the highest. The coefficient individual p values of Quartic model are also significant. Thus, we conclude that the Quartic model is the best-fitting model for Region V (June 1 to July 11).

Note For Region V, due to spike in the cases, we also checked the fitting of exponential curve in this region (Table 7).

Let the exponential model be- \(y\left(t\right)=\alpha {e}^{\beta t}\)

We obtained the following parameter values:

The RSE for this model is 4178 and MAPE (%) is 0.85%. Both of these values are quite larger than those of Quartic model (Refer Table 9 for RSE and MAPE values of Quartic model in Region V). Thus, we conclude that Quartic model is the best-fitting model for Region V (1st June to 11th June).

All the ANOVA tables (Refer to Table 8) for Region II, III, IV and V suggest significant p-values for its coefficients and suggest that the models fit well the respective regions.

Thus, according to our study, the growth of the virus was exponentially increasing from March 4 to April 5. Then after, the virus grew by following a quadratic rate from April 6 to May 2. After May 3, we experienced a linear growth. But after May 15 to May 31, we experienced a sudden rise in the rate of growth of the virus and have seen quadratic growth again. Further, for the period of June 1 to July 11, we see experienced a quartic (4-degree polynomial) growth, which is very alarming (see Table 9 for best-fitted regression models). Figure 2 shows the best-fitted regression models to the daily cumulative cases of COVID-19 in India from March 4 to July 11 (Table 10).

Time-series Models Fitting

We use the daily time-series data of number of cumulative confirmed cases from March 4 to July 10.

First, we check the stationarity of the transformed time-series using ADF Tests. Dickey–Fuller statistic is 6.3915 with p value 0.99 which indicates that the growth of COVID-19 cases is not stationary. The ARIMA models may be useful over the ARMA models. The ACF and PACF plots are shown in Fig. 3.

We then obtain the optimal ARIMA parameters (\(p\), \(d\), \(q\)) using the AIC. We take various possible combinations of (\(p\), \(d\), \(q\)) and compute the AIC. Then, select the best-fitted ARIMA model that has the lowest AIC among all considered models. According to the AIC, the ARIMA (5, 2, 5) is the best-fitted model for the COVID-19 cases, India (see Table 11). Estimates of ARIMA (5, 2, 5) parameters and MAPE are shown in Table 11.

Interpretation of the Parameters

We have selected the model parameters using the Akaike Information Criterion. We obtained the parameters as: \(p=5, d=2 \mathrm{~and~} q=5.\) As \(p=5\), it means that the order (number of time lags) of Autoregression part of the model is 5. In general, we can say that the cumulative cases of COVID-19 in a day are dependent on the cases of previous 5 days. As \(q=5\), the present value is dependent on the moving average (residuals) of previous 5 days. As \(d=2,\) the series \({y}_{t}^{*}={y}_{t}-2{y}_{t-1}+{y}_{t-2}\) is stationary. A higher value of d indicates positive autocorrelations out to a high number of lags. Thus, we can have the equation for our model, using Eq. 8 as:

where all the symbols have their meanings as per “ARIMA Model”.

Estimates of the Holt–Winters exponential smoothing and exponential smoothing models are given in Table 12. According to the MAPE and accuracy measures, the ARIMA (5, 2, 5) is a better model than the Holt–Winters exponential smoothing and usual exponential smoothing models. From this, we can conclude that the ARIMA model is the best fit for the cases of COVID-19, followed by Holt–Winters model. The forecasting values along with 95% confidence intervals are shown in Table 13 and Fig. 4. We have used actual data from 11th June to validate the model.

Even though most of the actual cases are covered in the 95% confidence intervals of the ARIMA and Holt–Winters forecasts, they are seen to be nearer to the Upper Limits of the Confidence Intervals and are deviated from the estimates. It might be possible that in the future days, the forecasts might underestimate the actual cases. This might be attributed to the changing pattern of the growth of the pandemic in our country as seen in the regression analysis. Thus, we suggest a segment-wise time-series models to forecast the future cases in a more accurate manner.

We present the segment-wise ARIMA and Holt–Winters models for 1st June to 10th July.

We have seen that our time-series data are non-stationary and, thus, we select the most optimal values of \((p, d, q)\), which has the least AIC. According to AIC, (5, 2, 3) is the best-fitting model for the time-series data from June 1 to July 10, with AIC = 634.18. Estimates of ARIMA (5, 2, 3) model with the corresponding MAPE and Accuracy are given in Table 14 (Fig. 5).

From Fig. 6, we deduce that the optimal value of \(d\) is 2, as the time series becomes stationary with differencing degree = 2.

Estimates of the Holt–Winters exponential smoothing and exponential smoothing models are given in Table 15. According to the MAPE and accuracy measures, the ARIMA (5, 2, 3) is a better model than the Holt–Winters exponential smoothing and usual exponential smoothing models. From this, we can conclude that the ARIMA model is the best fit for the cases of COVID-19, followed by Holt–Winters model. The forecasting values along with 95% confidence intervals are shown in Table 16 and Fig. 4. We have used actual data from 11 June to validate the model. We observe that the ARIMA model captures the trend well and estimates the cumulative cases properly.

From the interpretations of both the fitted ARIMA models, we can say that as the values of \(p\) and \(d\) are 5 and 2, respectively; the daily cumulative cases are dependent on the cases of previous 5 days. Also, to convert the time series of daily cases into stationary, we need differencing degree of 2.

Conclusion and Future Scope

From the regression analysis, we conclude that the spread of COVID-19 disease grew exponentially from March 3 to April 5. Further, from April 6 to May 2, the cases followed a quadratic regression. From May 3 to May 15, we see a linear growth of the pandemic with average daily cases of 3584. After May 15 to May 31, we again saw a spike in the cases that lead to a quadratic growth of the pandemic. And, from June 1 to July 11, we saw a major spike in the growth of the pandemic as it has followed quartic growth.

Verma et al. (2020) showed the four stages of the epidemic, S1: exponential, S2: power law, S3: linear and S4: flat. We saw that the course of COVID-19 in India followed this regime till May 15. But after the linear trend from May 3 to May 15, the spread has again reached the quadratic growth and from June 1 to July 11, India is witnessing a quartic growth. This might be attributed to the relaxation of lockdown measures in the country. Though it was much likely that the cases would start to reduce post-linear stage growth as the total cases may start to follow a square root equation, i.e. \(y\left(t\right)\sim \sqrt{t}.\) And this might lead to reduction in the daily number of cases (as\(y^{\prime}(t)\sim 1/\sqrt{t}\),) leading to flattening of the curve. But, due to reduced restrictions, we see a reverse trend, which might be alarming and suggest the imposition of strict lockdown to reverse this trend of pandemic growth. If we continue to open our economy in this way, we might go back to the exponential growth of the pandemic and this would lead to huge destruction to human lives and cause a greater impact on our economy.

We also observe that some cities have been the hotspots of the disease, such as Delhi (more than 131,000 cases), Mumbai (more than 94,000 cases), Chennai (more than 78,000 cases), Thane (more than 63,000 cases), etc. as on 14 June, 2020. While the other states and cities have seen a slower growth of the pandemic, these cities have seen explosive growths. Due to the opening of air and rail transport in the country, the virus is likely to spread in the other regions as well as people from these cities (especially metro cities) are travelling to different states. Thus, it is highly advisable that the country should go back to its lockdown phase until we see reduction in trend.

In time-series analysis, we conclude that the ARIMA (5, 2, 5) is the best-fitting model for the cases of COVID-19 from 4th March to 10th July with an accuracy of 97.38%. The basic exponential smoothing is not very accurate for our case, but we see that the Holt–Winters model is around 97.11% accurate. Both ARIMA (5, 2, 5) and Holt–Winters models suggest a rise in the number of cases in the coming days. We observed that both the ARIMA and Holt–Winters models capture the data well and the actual data from 11th July validate the forecasts well as they lie in the predicted confidence intervals. But, while validating the model, the actual values are always near to the Upper Confidence Limits, it might be possible that in further days, our model might underestimate the cases. This might be possible because of the changing trend of the growth of the pandemic in India.

Thus, we used segmented time-series models and took data from 1st June to 10th July to build separate ARIMA and Holt–Winters models. We concluded that ARIMA (5, 2, 3) is the best-fitting model for COVID-19 cases in the given time period with an accuracy of 99.86%. The basic exponential smoothing is not very accurate or this case as well but, the Holt–Winters model is around 99.78% accurate. We also observe that the ARIMA and Holt–Winters models capture the data well and the actual data from 11th July validate the forecasts and lie near to the estimates.

We may also conclude that the cases of COVID-19 will rise in the coming days and the situation may turn alarming if proper measures are not followed. Since the economic activities have started in the country, people need to be more careful while going out. And explosion of the pandemic in the whole country can cause a serious damage to human lives, healthcare system as well as the economy of the country. Thus, there is an urgent need of imposing strict lockdown measures to curb the growth of the pandemic. We must also learn to lead our lives by following all the precautions even if the lockdown restrictions are relaxed and the economic activities are resumed.

Comparison of Indian scenario with that of other countries might not prove fruitful at this stage because of the demographic differences and/or the characteristics of the disease. Also, comparison of the Indian context with that of the other countries of the world will require to study the spread of the pandemic in those countries in depth and might be considered as an altogether in the future studies.

This study was limited to data-driven models using the total COVID-19 cases. In the future studies, the other co-factors (associated with the demographics, social, cultural and medical infrastructure, etc.) can be taken to considerations.

References

Arti MK, Kushagra B (2020) Modeling and predictions for COVID 19 spread in India, https://doi.org/10.13140/RG.2.2.11427.81444

Bhatnagar MR (2020) COVID-19: mathematical modeling and predictions, submitted to ARXIV. Online available at: https://web.iitd.ac.in/~manav/COVID.pdf

Box GEP, Jenkins GM, Reinsel GC (2008) Time analysis. Wiley, Hoboken

Box GEP, Jenkins GM, Reinsel GC, Ljung GM (2015) Time series analysis: forecasting and control. Wiley, Hoboken

Brockwell PJ, Davis RA (1996) Introduction to time series and forecasting. Springer, Berlin

Ceylan Z (2020) Estimation of COVID-19 prevalence in Italy, Spain, and France. Total Environ Sci Total Environ 729:138817

Chatterjee S, Shayak B, Asad A, Bhattacharya S, Alam S, Verma MK (2020) Evolution of COVID-19 pandemic: power law growth and saturation. medRxiv. https://doi.org/10.1101/2020.05.05.20091389

Chintalapudi N, Gopi B (2020) Amenta Francesco COVID-19 virus outbreak forecasting of registered and recovered cases after sixty day lockdown in Italy: a data driven model approach. J Microb Immunol Infect. https://doi.org/10.1016/j.jmii.2020.04.004

Earnest A, Chen MI, Ng D, Leo YS (2005) Using autoregressive integrated moving average (ARIMA) models to predict and monitor the number of beds occupied during a SARS outbreak in a tertiary hospital in Singapore. BMC Health Serv Res 5:1–8. https://doi.org/10.1186/1472-6963-5-36

Fanelli D, Francesco P (2020) Analysis and forecast of COVID-19 spreading in China, Italy and France. Chaos Solitons Fractals Nonlinear Sci Nonequilibrium Complex Phenomena 134:109761

Gaudart J, Touré O, Dessay N, Dicko AL, Ranque S, Forest L, Demongeot J, Doumbo OK (2009) Modelling malaria incidence with environmental dependency in a locality of Sudanese savannah area. Mali Malar J. https://doi.org/10.1186/1475-2875-8-61

Giordano G, Blanchini F, Bruno R, Colaneri P, Filippo A, Matteo A (2020) Force, a SIDARTHE model of COVID-19 epidemic in Italy, arXiv:2003.09861 [q-bio.PE]

Gupta PK, Bhaskar P, Maheshwari S (2020) Coronavirus 2019 (COVID-19) Outbreak in India: a perspective so far. J Clin Exp Invest. 11(4):em00744

He Z, Tao H (2018) International journal of infectious diseases epidemiology and ARIMA model of positive-rate of influenza viruses among children in Wuhan, China: a nine-year retrospective study. Int J Infect Dis 74:61–70. https://doi.org/10.1016/j.ijid.2018.07.003

Holt CE (1957) Forecasting seasonal and trends by exponentially weighted averages (O.N.R. Memorandum No. 52). Carnegie Institute of Technology, Pittsburgh. https://doi.org/10.1016/j.ijforecast.2003.09.015

Ivorra B, Ferrández MR, Vela-Pérez M, Ramos AM (2020) Mathematical modeling of the spread of the coronavirus disease 2019 (COVID-19) considering its particular characteristics. The case of China. Commun Nonlinear Sci Numerical Simulat 88:105303

Kumar A, Gupta PK, Srivastava A (2020) A review of modern technologies for tackling COVID-19 pandemic. Diabetes Metab Syndr 14(4):569–573

Lin Q, Zhao S, Gao D, Lou Y, Yang S, Musa SS, Wang MH, Cai Y, Wang W, Yang L, He D (2020) A conceptual model for the coronavirus disease 2019 (COVID-19) outbreak in Wuhan, China with individual reaction and governmental action. Int J Infect Dis 93:211–216

Manikandan M, Velavan A, Singh Z, Purty AJ, Bazroy J, Kannan S (2016) Forecasting the trend in cases of Ebola virus disease in West African countries using auto regressive integrated moving average models. Int J Community Med Public Health 3:615–618

Ministry of Health and Family Welfare (2020) Government of India. Available at https://www.mohfw.gov.in/

Montgomery DC, Peck EA, Vining GG (2012) Introduction to linear regression analysis. Wiley, Hoboken

Petropoulos F, Makridakis S (2020) Forecasting the novel coronavirus COVID-19. PLoS ONE 15(3):e0231236. https://doi.org/10.1371/journal.pone.0231236

Polwiang S (2020) The time series seasonal patterns of dengue fever and associated weather variables in Bangkok (2003–2017). BMC Infect Dis 20:208. https://doi.org/10.1186/s12879-020-4902-6

R Core Team (2020) R: language and environment for statistical computing. R foundation for Statistical Computing, Vienna, Austria. URL https://www.R-project.org/

Singh B, Jadaun GS (2020) Modeling tempo of COVID-19 pandemic in India and significance of lockdown. Medrxiv. https://doi.org/10.1101/2020.05.15.20103325

Verma MK, Asad A, Chatterjee S (2020) COVID-19 pandemic: power law spread and flattening of the curve. Trans Indian Natl Acad Eng. https://doi.org/10.1007/s41403-020-00104-y

Winters PR (1960) Forecasting sales by exponentially weighted moving averages. Manage Sci 6:324–342

Zhang X, Liu Y, Yang M, Zhang T, Young AA, Li X (2013) Comparative study of four time series methods in forecasting typhoid fever incidence in China. PLoS ONE. https://doi.org/10.1371/journal.pone.0063116

Zhang X, Ma R, Wang L (2020) Predicting turning point, duration and attack rate of COVID-19 outbreaks in major Western countries. Chaos Solitons Fractals Nonlinear Sci Nonequilibrium Complex Phenomena 135:109829

Ziff AL, Ziff RM (2020) Fractal kinetics of COVID-19 pandemic. medRxiv. https://doi.org/10.1101/2020.02.16.20023820

Acknowledgements

Dr. Vikas Kumar Sharma greatly acknowledges the financial support from Science and Engineering Research Board, Department of Science & Technology, Govt. of India, under the scheme Early Career Research Award (file no.: ECR/2017/002416).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Sharma, V.K., Nigam, U. Modeling and Forecasting of COVID-19 Growth Curve in India. Trans Indian Natl. Acad. Eng. 5, 697–710 (2020). https://doi.org/10.1007/s41403-020-00165-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41403-020-00165-z