Abstract

In the world’s water scarce regions, groundwater as an important and strategic resource needs proper assessment. An accurate forecasting needs to be performed in order to make a better identification of fluctuating nature of groundwater levels. In this study, groundwater level fluctuations of Kabudarahang aquifer was synchronized and verified. Investigation was conducted with usage and application of time series models. Groundwater level data during 2003–2014 are used for calibration and analyses were performed using Box-Jenkins models. Residual error analysis and comparison of observed and calculated groundwater levels were performed. Then a prediction model for groundwater level in Kabudarahang aquifer developed. The model was used for predicting the groundwater level during 2014–2017. Model results showed that the groundwater level in this aquifer will endure a 5 m decline for the next three upcoming years.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Proper management of water resources needs analysis of different hydrological parameters dynamic and complex nature. Groundwater level analysis requires design and assessment in a proper development program. Water level in aquifer is an important parameter in groundwater hydrology; therefore a precise analysis of its temporal and spatial changes can provide valuable information in the aquifer system behavior (Aflatooni and Mardaneh 2011). Groundwater level assessment is a main information source about potential of hydrologic stress within a groundwater system. Groundwater exploitation and land use data during short term and long term periods are valuable in groundwater system evaluations (Winter et al. 2000; Moon et al. 2004; Ahmadi and Sedghamiz 2007).

Models can act as illustrations of simple to complicated hydrogeological occurrences in groundwater assessment strategy (Ahmadi and Sedghamiz 2007; Salami and Ehteshami 2015; Ehteshami et al. 1991). A complex model simulates actions and reactions within each natural process such as groundwater (Ehteshami et al. 1991; Ehteshami et al. 2013; Ehteshami and Biglarijoo 2014). A sufficient model selection has significant importance with regard to many different aspects of groundwater resource management strategy. Proposed models include Man-Kendal method, t test and Cradock tests (Ehteshami et al. 2016), artificial neural network approach (Polemio and Casarano 2008; Salami and Ehteshami 2016), and time series analysis methods like spectral and correlation analysis (Aflatooni and Mardaneh 2011; Nayak et al. 2006; Chen et al. 2004; Lee et al. 2005), and moving average (Rajmohan et al. 2007; Reghunath et al. 2005). In regions where accurate hydrologic data are inaccessible the groundwater level fluctuations can be predicted using stochastic methods like principle component analysis (PCA) and cross correlation analysis methods (Moustadraf et al. 2008).

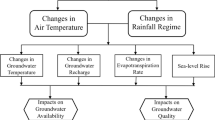

Conceptual and physical models are the main tools for representing the hydrological variables and perceiving physical processes in a system (Anderson and Woessner 1992). Groundwater systems not only have a dynamic nature but also change in response to climatic stresses and anthropologic activities (Alley et al. 2002). Human induced stresses on groundwater resources include population growth, increasing agricultural productions and rapid expansion of urban areas (Gunatilaka 2005). The main human intervention in groundwater resources is excessive extraction or overuse of resources, particularly in vast urban areas of several Asian countries like China, India, Indonesia, Nepal, Philippine, Thailand and Vietnam (UNEP 2002, ADB 2007, WEPA 2007). While physical modeling is suitable in data scarce conditions, presence of several factors such as anthropologic effects (human interferences) makes accurate determination of hydrological parameters so difficult, therefore application of time series models in these environments are preferred (Kim et al. 2005). A number of time series methods are used in various groundwater studies in order to analyze groundwater data in response to independent stresses (Ganoulis and Morel-Seytoux 1985; Gurdak et al. 2007; Perry 1994; Perry and Hsu 2000; Yu and Chu 2012).

In order to evaluate the groundwater potential often a systematic approach with ability of trend decomposition, analysis of time series and frequency analysis and comparing between various types of data and frequencies in hydrologic setting and between different basins is required for local and regional assessments (Hanson et al. 2004). A coherent and reasonable decomposition method can separate and quantitatively analyze the effects of independent factors on groundwater and also identify the relations and complexities between such parameters in hydrological processes and climatological changes (Hanson et al. 2004; Mayer and Congdon 2008). Various frequency analysis methods can extract seasonal characteristics. Detrending is the process of eliminating a trend from a time series in order to remove a property that can cause long-term effects on time series such as groundwater extraction and irrigation application of groundwater which can lead to irregularities in some embedded cyclic signals (Hanson et al. 2004; Gardner and Heilweil 2009; Prinos et al. 2002).

There are number of different methods and techniques for analyzing groundwater table fluctuation through probability characteristics, time series methods, synthetic data generation, multiple regression, group theory, pattern recognition and neural network methods (Adhikary et al. 2012). A time series model can be defined as an empirical model for stochastic modeling and forecasting temporal behavior of hydrologic systems (Brockwell and Davis 2002). The stochastic time series models are useful and popular tools for medium-range forecasting and synthetic data generation. Several stochastic time series models including the Markov, Box- Jenkins (BJ) Seasonal Autoregressive Integrated Moving Average (SARIMA), deseasonalized Autoregressive Moving Average (ARMA), Periodic Autoregressive (PAR), Transfer Function Noise (TFN) and Periodic Transfer Function Noise (PTFN), can be used for these purposes (Box et al. 2008). The Markov, ARMA and SARIMA models are univariate models and the PAR, TFN and PTFN models are multivariate models. Furthermore, the PAR and PTFN models are periodic multivariate models (Hipel and Mcleod 1994).

The selection of a proper modelling method for a particular problem depends on many factors such as the number of series that are being modelled, required accuracy, modelling costs, ease of model usage and results interpretation, etc. (Mondal and Wasimi 2007) Several applications of all of these models for analysis of fluctuations of groundwater level over time are vastly used in several groundwater hydrology applications (Mondal and Wasimi 2006). Furthermore, they have extensive use in various areas of science and engineering such as forecasting of river discharge and synthetic data generation (Salas and Obeysekera 1982; Mondal and Wasimi 2003) stream flow and water quality data prediction (Mondal and Wasimi 2005; Kurunc et al. 2005). Water resource monitoring and assessment (Mondal and Wasimi 2003) and drought period simulation (Durdu 2010). When a relatively few number of series need to be modeled and a large expenditure of time and effort can be justified using the BJ Method (Seasonal ARIMA) is of priority (Mondal and Wasimi 2007). The purpose of this study is to develop SARIMA models for application in groundwater level fluctuation modeling by developing equations through usage of seasonal ARIMA algorithms.

Materials and methods

Study area

Kabudarahang as one of the main aquifers in Northern part of Hamedan County covers 3448 Km2 of Gharachay river basin. The Kabudarahang County with an average altitude of 1680 m above mean sea level is located at approximately 35°12′30″ north latitude, 48°43′26″ east longitude within a 60 km distance from the capital city of the Hamdan province, Iran with an area of 1186 Km2. The mean annual regional precipitation for a recorded 36 years is 332.7 mm (Fig. 1).

Time series modeling

Time series modeling or in other words describing the behavior of a time series in mathematics language includes three common steps: identifying the experimental model, estimating the model parameters (fitting), and verification of the model. In order to proper identification of an experimental model, it is recommended that at least 50 observations of the regarded series must be available. In analyzing hydrological and environmental time series with application of stochastic and statistical theories it is assumed that all variables have a normal distribution. First step in modeling a time series is to draw it as a time series diagram which can give a boost for identification of trends, variance non-stationarity, seasonality (periodicity) and other types of irregularities in data.

Most of time series data doesn’t follow a normal distribution, thus before any modeling or analyzing processes they need to be normalized by means of transfer functions (Fig. 2). As a pretreatment step, a variety of different transfer functions and statistical tests are available to prepare data before any modeling which includes Box- Cox transformation and non-stationarity in mean or variance. These tests can be categorized in two main groups: independence tests (for time), and normal distribution tests. Also prior to any modeling process the conditions of time series are tested for goodness of fit, well-fitting criteria, level of reliability and statistical parameters limits.

Tests of independence

For modeling the irregular (random noise) component, the time series should be time independent, which means each value should be independent from its previous and subsequent values. For instance, after removing the trend, seasonality and shock components, the mean annual precipitation should be independent of previous and the next year mean precipitation in order to be time independent. Correlogram (autocorrelation function) is a useful device for describing the behavior of stationary processes which can determine if they are independent of time. In fact the autocorrelation function shows the variations of autocorrelation coefficients through different time lags. The autocorrelation coefficient at lag K that is the correlation between \(X_{t}\) and \(X_{t + k}\) can be shown by \(r_{k}\) which is:

where \(C_{k} \left( { = E\left[ {\left( {X_{t + k} - \bar{X}} \right)\left( {X_{t} - \bar{X}} \right)} \right]} \right)\) is defined as autocovariance function and therefore \({\text{C}}_{0}\) also can be defined as the variance of the time series. The Anderson method, turning point method and Porte Manteau method are three principal methods for analyzing time independency of time series and to diagnose independency of time in periodic series the cumulative period gram method can be used.

Anderson test of independence in time

In this method confidence limits of 95 and 99 % for the autocorrelation coefficient are defined as:

where N is the number of the sample and k is the lag. The autocorrelation functions within these limits are independent of time and else they have time dependency. It is noteworthy to mention that if for each value of k, \({\text{r}}_{\text{k}} = 0\) it implies that the time series is quite independent of time or serially independence (Yurekli et al. 2004).

Ests of normality

There are several methods to check the normality of time series, one of which is a common method that is to plot the time series in a normal probability plot and checking if the plots form a straight line. If they form a rather straight line it implies that the time series has a normal distribution, otherwise the normality assumption is refuted. In addition to this method, the two tests of Chi square test and skewness test are applicable for examining normal distribution of time series. The normal distribution of groundwater data is tested by Chi square test as follows.

Chi square test

This method is preferably used for clustered data. At first the normal distribution function is fitted to the sample time series, and then the goodness of fit is quantitatively evaluated as probability percentile. When \(X_{t}\) denote the sample time series with the mean \(\bar{X}\) and variance \(\hat{\sigma }\). This series can be classified into k groups with the attributed probability of \(\frac{1}{k}\) for each in an ascending manner. The values of \(u_{1} ,u_{2} ,u_{3} , \ldots , u_{k - 1}\) for the cumulative probability of \(\frac{1}{k},\frac{2}{k},\frac{3}{k}, \ldots ,\frac{k - 1}{k}\) can be obtained from the normal distribution table. Therefore the following values can be calculated using the obtained values from the table and the mean and variance of the sample time series such as:

Let \(N_{i}\) denote absolute frequency of the ordered sample series for group i. Therefore the number of points which are expected to set in each group is equal to \(\frac{N}{k}\). Now Chi square test with freedom degree of (k-2) is applied considering the confidence level of \(\alpha\). Finally the normality of the sample time series can be evaluated by comparing the results with the following value:

In this case, if the value \(\chi_{1 - \alpha }^{2} \left( {K - 2} \right)\) obtained from the Chi square table is greater than \(\chi^{2}\) from the formula, the normal assumption for distribution of time series \(X_{t}\) is true, otherwise the assumption of normal distribution is refuted (Karamouz et al. 2012). Summary of Properties of autoregressive (AR), moving average (MA), and mixed autoregressive moving average (ARMA) processes show that in Table 1.

Drawing graphs of the ACF and PACF

The autocorrelation function (ACF) and partial autocorrelation function (PACF) are useful tools for identifying models. The type and order of the process can be determined by means of graphical analysis using these diagrams. In practice, to obtain a useful estimate of the autocorrelation function, a minimum sample population (n = number of observations) of 50 is recommended (Mondal and Wasimi 2003) and the ACFs and PACFs should be calculated and depicted for k = 1, 2, 3, …, K where K is not larger than \(\frac{n}{4}\), for a more reliable identification of the model. For a given observed time series of \(Z_{1} ,\;Z_{2} ,\; \ldots ,\;Z_{n}\), the sample ACF is defined by:

Hence, the graph in which \(\hat{\rho }_{k}\) is plotted against k is known as the sample correlogram. To calculate the sample PACF (\(\hat{\phi }_{kk}\)), (Chandler and Scott 2011) represents following equations:

and:

The ACF and PACF diagrams of groundwater level fluctuation time series of Kabudarahang plain are shown in Fig. 3.

Model selection

Selecting the most reliable modeling technique between several available models for seasonal forecasting and analyzing requires an inductive approach. The techniques are such as: (1) exponential smoothing; (2) Markov models; (3) decomposition and trend extrapolation method; (4) Holt–Winters method; and (5) Box-Jenkins Seasonal Autoregressive Integrated Moving Average (SARIMA). The model selection depends on many factors such as: number of series to be forecasted, required accuracy of forecasts, model facility and proficiency, modeling costs, interpretative outputs, etc.

Decomposition method

An alternative way of modeling time series data is based on the decomposition of the model into trend, seasonal, and noise components. The classical decomposition partitions a signal into three elemental components called trend, periodicity and random or irregular components and can be written in multiplicative form as

X is the time series data, mt is the trend component, st is the periodic component, and yt is random noise or irregular component. There are a number of techniques available to evaluate trends within datasets. The simplest way is to apply a simple linear regression analysis or some low-order polynomial regression (Durbin 1960). Moving average, e.g. a 12-month moving average, is an alternative detrending method to remove small-scale structures, e.g. noises, and short periods from a time series.

In extrapolation of trend curves method for decomposition of time series, a trend line is fitted to the smoothed data using the least squares regression. Then the series become detrended by either dividing the data by the trend component (multiplicative model) or subtracting the trend component from the data (additive model). However, Minitab trend analysis tool uses the multiplicative model by default. Subsequently a centered moving average with a length equal to the length of the seasonal cycle is applied to smooth the detrended data. At the next step the raw seasonal values are calculated by either dividing the moving average into multiplicative model or subtracts it from the additive model. The median of the raw seasonal values for corresponding periods of time in the seasonal cycles are determined and adjusted to constitute the seasonal indices which are used to seasonally adjust the data. Therefore, the seasonal adjustment procedure is applied at this step by the iterative application of moving averages according to sequence of the seasonal adjustment procedure (Chatfield 2011).

Seasonal ARIMA model: SARIMA(p,d,q) × (P,D,Q)s

ARIMA models generally have both non-seasonal and seasonal components. The general non-seasonal ARIMA model is AR to order p and MA to order q and operates on the dth difference of the time series z t ; thus, a model of the ARIMA family is classified by three parameters (p, d, q) that can have zero or positive integral values. have generalized the ARIMA model to deal with seasonality and have defined a general multiplicative seasonal ARIMA model, commonly known as a seasonal ARIMA model. In this study the general mixed seasonal model is denoted as: ARIMA(P,D,Q)s. Where, P = order of the seasonal autoregressive process, D = number of seasonal differencing, Q = order of the seasonal moving average and s = the number of the seasonality. The seasonal ARIMA model described as ARIMA (p, d, q).(P, D, Q)S, where (p, d, q) non-seasonal part of the model and (P, D, Q) seasonal part of the model with a seasonality S, can be written as:

where, ϕ(B) and ϕ(B) are polynomials of order p and q, respectively; \({{\Phi }}\left( {{\text{B}}^{\text{s}} } \right)\) and \({{\Theta }}\left( {{\text{B}}^{\text{s}} } \right)\) are the polynomials in \({\text{B}}^{\text{s}}\) of degrees P and Q respectively; p equals order of non-seasonal autoregressive operator; d equals, number of regular differencing; q order of the non-seasonal moving average; P order of seasonal autoregression; Q order of seasonal moving average. The ordinary and seasonal difference components are designated by:

In derivations of d and D; B is the backward shift operator, d is the number of regular differences, D is the number of seasonal differences, S = seasonality. Zt denotes observed value at time t, where t = 1, 2…k; and at is the Gaussian white noise or estimated residual at time t.

Holt–Winters forecasting method

Exponential smoothing can be easily generalized to time series which include trends and seasonal changes. This practice is known as Holt-Winters method and was introduced by Winters and Chatfield to describe trend and seasonal components which are synchronized using exponential smoothing algorithms (Montgomery et al. 2008). By assuming that observed parameters are monthly and \({\text{m}}_{\text{t}}\) represents average estimation, \({\text{r}}_{\text{t}}\) represents trend estimation (expected increase or reduction per month in current average) and \({\text{S}}_{\text{t}}\) represents seasonal operating of month t. In this case since every new observation is possible, all three components are synchronized. If seasonal variations are multiplicative, synchronizing equations would be (Mondal and Wasimi 2005):

where \(x_{t}\) denotes the latest observation and \({{\upalpha }}\), \({{\upbeta }}\), \({{\upgamma }}\) are constants which \(0 < \alpha , \beta ,\gamma < 1\), so the predictions about time t are:

If variations are cumulative, seasonal equations would be:

To see whether the cumulative seasonal variable is better or the multiplicative seasonal variable, data diagram should be tested. Therefore, if the time series exhibits an obvious trend or seasonal pattern it indicates that the appropriate model is chosen. Moreover, if the seasonal period doesn’t include 12 months observations, the equations should be corrected. Basic values of \(s_{t} ,\;r,\;m_{t}\) can be selected in a relatively inexperienced way using data of first two years considering three constants \(\alpha ,\beta ,\gamma\) with the difference of minimum quantity equals to \(\mathop \sum \nolimits_{i = 2,5}^{N} e_{i}^{2}\).

Model verification

In order to evaluate the models the following indicators are used:

As it is presented the minimum residuals are belonging to the SARIMA(1, 1, 0)(1, 1, 1)12 model which has the best fitting to observed data.

Results and discussion

A comparative study on state-of-the-art prediction tools such as Box-Jenkins and Holt-Winters have been used to evaluate groundwater level fluctuations. Also, the extrapolation of trend curve was performed to smooth the detrended data. The assumption of zero constant term (Constant, SAR, AR) proved to be acceptable considering satisfactory statistical t test and P test values. Therefore the model can be reliably fitted without a constant term in order to represent stochastic trends. For instance, for some SARIMA models P value is greater than 0.05. Thus the assumption of \(H_{0} :\theta_{0} = 0 ,\quad H_{1} :\theta_{1} = 0 ,\quad H_{2} :\theta_{2} = 0\) should be accepted with 95 % confidence level, for other models also the zero assumption of constant term and SAR and AR coefficients are reasonable since the modeled differencing series has a nearly zero mean. In order to evaluate the eligibility of the model a residual analysis of the fitted model performed. Residual analysis results of the fitted model in the Kabudarahang plain’s hydrographs are shown in Table 2. The normal probability plot and histogram of residual values confirm that the normal assumption can be accepted.

Similarly, these verifications have been applied on other models too. Furthermore, the Porte-Manteau method is used as a more formal method for model verification which is based on autocorrelation of residuals. These results for different models are evaluated. Since the p value, considering different lags for the model, is desirably more than 0.05 the assumption of zero autocorrelations up to lag 48 is acceptable such as, \(\left( {H_{0} :\rho_{1} = \rho_{2} = \cdots = \rho_{48} = 0} \right)\).

Finally the Akaike criterion is used for model selection. Considering Akaike standard as:

Therefore SARIMA(1,1,0)(1,1,1)12 selected as the best model among other seasonal models of Box-Jenkins for groundwater level simulation due to smaller Akaike value in comparison with other models(Tables 3, 4).

The results of evaluated models and the real values of groundwater level (during 2003–2014) and anticipated values (during 2014–2017), Holt model, extrapolation of trend and Box-Jenkins model for level changes, SARIMA(1,1,0)(1,1,1)12 model reveals more correlation between measured groundwater level fluctuation data and simulated values (Tables 3, 4). Figure 4 shows measured and forecasted groundwater depths. As it is shown, the selected models predict an overall decreasing trend in mean ground water levels which results in a 5 m decline during three upcoming years. The forecasted results and measured data show a good agreement (R2 = 98 %).

Conclusions

This study presents a data conservative approach of time series modeling to evaluate the groundwater fluctuations in semi-arid area of Kabudarahang plain. Several stochastic models were developed for groundwater assessment. Then, after data preparation, diagnostic checking, and model verification, by usage of Akaike criteria, adequate model was selected. Consequently, the selected model was used to forecast the groundwater table for the next 3 years. As it is discussed the seasonal ARIMA algorithms proved to be a useful tool for simulation and short-term forecasting with a reasonably high correlation coefficient of 98 % to the measured data considering their dynamic and complex nature of groundwater. The presented results may be useful for regulatory agencies to make the best use of funds available for monitoring groundwater level fluctuations.

References

ADB (2007) Recent advances in water resources development and management in developing countries in Asia. Asian Water Development Outlook. Asian Development Bank, Manila

Adhikary SK, Rahman M, Gupta AD (2012) A stochasticmodelling technique for predicting groundwater table fluctuations with time series analysis. Int J Appl Sci Eng Res 1(2):238–249

Aflatooni M, Mardaneh M (2011) Time series analysis of groundwater tablefluctuations due to temperature and rainfall change in Shiraz plain. Int J Water Resour Environ Eng 3:176–188

Ahmadi SH, Sedghamiz A (2007) Geostatistical analysis of spatial and temporal variations of groundwater level. Environ Monit Assess 129(1–3):277–294

Alley WM, Healy RW, LaBaugh JW, Reilly TE (2002) Flow and storage in groundwater systems. Science 296:1985–1990

Anderson MP, Woessner WW (1992) Applied groundwater modeling: simulation of flow and advective transport. Academic Press, New York

Box GEP, Jenkins GM, Reinsel GC (2008) Time series analysis: forecasting and control, 4th edn. Prentice Hall, Englewood Cliffs

Brockwell PJ, Davis RA (2002) Introduction to time series and forecasting, vol 1. Taylor & Francis, London

Chandler RE, Scott EM (2011) Statistical methods for trend detection and analysis in the environmental sciences. Wiley, Chichester, UK

Chatfield C (2011) The analysis of time series. Wiley, London

Chen Z, Grasby S, Osadetz KG (2004) Relation between climate variability and groundwater levels in the upper carbonate aquifer, southern Manitoba, Canada. J Hydrol 290:243–262

Durbin J (1960) The fitting of time-series models. Rev Inst Int Stat 28(3):233–244

Durdu ÖF (2010) Stochastic approaches for time series forecasting of boron: a case study of Western Turkey. Environ Monit Assess 169(1–4):687–701

Ehteshami M, Biglarijoo N (2014) Determination of nitrate concentration in groundwater in agricultural area in Babol County. Iran J Health Sci. 2(4):1–9

Ehteshami M, Peralta RC, Eisele H, Deer H, Tindall T (1991) Assessing pesticide contamination to ground water: a rapid approach. J Ground water 29(6):862–886

Ehteshami M, Langeroudi AS, Tavassoli S (2013) Simulation of nitrate contamination in groundwater caused by livestock industry (Case study: Rey). J Environ Protect 4(7):91–97. doi:10.4236/jep.47A011

Ehteshami M, Dolatabadi Farahani N, Tavassoli S (2016) Simulation of nitrate contamination in groundwater using artificial neural networks. J Model Earth Syst Environ (Springer) 2:28. doi:10.1007/s40808-016-0080-3

Ganoulis J, Morel-Seytoux H (1985) Application of stochastic methods to the study of aquifer systems. UNESCO, Technical documents in hydrology, Paris

Gardner PM, Heilweil VM (2009) Evaluation of the effects of precipitation on ground-water levels from wells in selected alluvialaquifers in Utah and Arizona, 1936–2005. US Geological Survey Scientific Investigations Report, Utah and Arizona

Gunatilaka A (2005) Groundwater woes of Asia. Asian Water. January/February, 2005, pp 19–23

Gurdak JJ, Hanson RT, McMahon PB, Bruce BW, McCray JE, Thyne GD (2007) Climate variability controls on unsaturated water and chemical movement, High Plains Aquifer, USA. Vadose Zone J 6(3):533–547

Hanson RT, Newhouse MW, Dettinger MD (2004) A methodology to assess relations between climatic variability and variations in hydrologic time series in the southwestern United States. J Hydrol 287(1):252–269

Hipel KW, Mcleod AI (1994) Time series modelling of water resources and environmental systems. Elsevier Science, Amsterdam

Karamouz M, Nazif S, Falahi M (2012) Hydrology and hydroclimatology: principles and applications. Taylor & Francis, London

Kim SJ, Hyun Y, Lee KK (2005) Time series modeling for evaluation of groundwater discharge rates into an urban subway system. Geosci J 9(1):15–22

Kurunc A, Yurekli K, Cevik O (2005) Performance of two stochastic approaches for forecasting water quality and stream flow data from Yesilirmak River, Turkey. Environ Modell Softw 20(9):1195–1200

Lee J, Choi Y, Kim MJ, Lee KK (2005) Evaluation of hydrologic data obtained from a local groundwater monitoring network in a metropolitan city, Korea. Hydrol Processes 19(13):2525–2537

Mayer TD, Congdon RD (2008) Evaluating climate variability and pumping effects in statistical analyses. Ground Water 46(42):212–227

Mondal M, Wasimi S (2003) Forecasting of seasonal flow of the Ganges River in Bangladesh with SARIMA model. In: Second annual paper meet and international conference on civil engineering, Dhaka

Mondal M, Wasimi S (2005) Periodic transfer function-noise model for forecasting. J Hydrol Eng 10(5):353–362

Mondal M, Wasimi S (2006) Generating and forecasting monthly flows of the Ganges river with PAR model. J Hydrol 323:41–56

Mondal M, Wasimi S (2007) Choice of model type in stochastic river hydrology. In: 1st international conference on water and flood management, 12–14 March 2007. Dhaka, Bangladesh

Montgomery DC, Jennings CL, Kulahci M (2008) Introduction to time series analysis and forecasting, 2nd edn. Wiley, London

Moon SK, Woo NC, Lee KS (2004) Statistical analysis of hydrographs and water-table fluctuation to estimate groundwater recharge. J Hydrol 292:198–209

Moustadraf J, Razack M, Sinan M (2008) Evaluation of the impacts of climate changes on the coastal Chaouia aquifer, Morocco, using numerical modeling. Hydrogeol J 16:1411–1426

Nayak CP, Sayajirao YR, Sudheer KP (2006) Groundwater level forecasting in a shallow aquifer using artificial neural network approach. Water Resour Manage 20:77–90

Perry CA (1994) Solar-irradiance variations and regional precipitation fluctuations in the western United States. Int J Climatol 14:969–983

Perry CA, Hsu KJ (2000) Geophysical, archaeological, and historical evidence support a solar-output model for climate change. Proc Natl Acad Sci 97(23):1244–1248

Polemio M, Casarano D (2008) Climate change, drought and groundwater availability in southern Italy. Geol Soc Spec Publ 288:239–251

Prinos ST, Leitz AC, Irvin RB (2002) Design of a real-time ground-water level monitoring network and portrayal of hydrologic data in southern Florida. US geological survey water-resources investigations repor, Florida, pp 01–4275

Rajmohan N, Al-Futaisi A, Jamrah A (2007) Evaluation of long-term groundwater level data in regular monitoring wells, Barka, Sultanate of Oman. Hydrol Process 21:3367–3379

Reghunath R, Murthy T, Raghavan B (2005) Time series analysis to monitor and assess water resources: a moving average approach. Environ Monit Assess 109(1–3):65–72

Salami ES, Ehteshami M (2015) Simulation evaluation and prediction modeling of river water quality properties (Case study: Ireland Rivers). Int J Eng Sci Technol (Springer) 12(10):3235–3242. doi:10.1007/s13762-015-0800-7

Salami ES, Ehteshami M (2016) Application of artificial neural networks to estimating DO and salinity in San Joaquin River basin. Desalination Water Treat. 57(11):4888–4897. doi:10.1080/19443994.2014.995713

Salas JD, Obeysekera J (1982) ARMA model identification of geophysical time series. Water Resour Res 18:1011–1021

United Nations Environment Programme (UNEP) (2002) Global environment outlook 3. Nairobi, Kenya

WEPA (2007) Water Environment Partnership in Asia (WEPA). http://www.wepa-db.net/index.htm

Winter TC, Mallory SE, Allen TR, Rosenberry DO (2000) The use of principal component analysis for interpreting ground water hydrographs. Groundwater 32:234–246

Yu HL, Chu HJ (2012) Recharge signal identification based on groundwater level observations. Environ Monit Assess 184:5971–5982

Yurekli K, Kurunc A, Cevik O (2004) Simulation of drought periods using stochastic models. J Eng Environ Sci 28(3):181–190

Acknowledgments

The authors are grateful to Hamadan Regional Water Company for providing required long term data and documents. We also wish to thank Mr. Rabbani for his technical and logistical assistance in modeling and analysis.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Khorasani, M., Ehteshami, M., Ghadimi, H. et al. Simulation and analysis of temporal changes of groundwater depth using time series modeling. Model. Earth Syst. Environ. 2, 90 (2016). https://doi.org/10.1007/s40808-016-0164-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40808-016-0164-0