Abstract

The general method of graph coarsening or graph reduction has been a remarkably useful and ubiquitous tool in scientific computing and it is now just starting to have a similar impact in machine learning. The goal of this paper is to take a broad look into coarsening techniques that have been successfully deployed in scientific computing and see how similar principles are finding their way into more recent applications related to machine learning. In scientific computing, coarsening plays a central role in algebraic multigrid methods as well as the related class of multilevel incomplete LU factorizations. In machine learning, graph coarsening goes under various names, e.g., graph downsampling or graph reduction. Its goal in most cases is to replace some original graph by one which has fewer nodes, but whose structure and characteristics are similar to those of the original graph. As will be seen, a common strategy in these methods is to rely on spectral properties to define the coarse graph.

1 Introduction

The idea of ‘coarsening,’ i.e., exploiting a smaller set in place of a larger or ‘finer’ set has had numerous uses across many disciplines of science and engineering. The term ‘coarsening’ employed here is prevalent in scientific computing, where it refers to the usage of coarse meshes to solve a given problem by, e.g., multigrid (MG) or algebraic multigrid (AMG) methods. On the other hand, the terms ‘graph downsampling,’ ‘graph reduction,’ ‘hierarchical methods,’ and ‘pooling’ are common in machine learning. Similarly, the related idea of clustering is an important tool in data-based applications. Here, the analogous term employed in scientific computing is ‘partitioning.’ These notions—graph partitioning, clustering, coarsening—are strongly inter-related. It is possible to use partitioning for the task of clustering data, by first building a graph that models the data which we then partition. Also, coarsening plays an important role in developing effective graph partitioning methods. Further, note that it is possible to partition a graph by just finding some clustering of the nodes, using a method from data sciences such as the K-means algorithm.

In scientific computing, the best known instance of coarsening techniques is in MG and AMG methods [28, 58, 101, 116]. Classical MG methods started with the independent works of Bakhvalov [10] and Brandt [24]. The important discovery revealed by these pioneering articles is that relaxation methods for solving linear systems tend to stall after a few steps, because they have difficulty in reducing high-frequency components of the error. Because the eigenvectors associated with a coarser mesh are direct restrictions of those on the fine mesh, the idea is to project the problem into an ‘equivalent’ problem on the coarse mesh for error correction and then interpolate the solution back into the fine level. This basic 2-level scheme can be extended to a multilevel one in a variety of ways. MG does not use graph coarsening specifically because it relies on a mesh and it is more natural to define a coarse mesh using processes obtained from the discretization of the physical domain. On the other hand, AMG aims at general problems that do not necessarily have a mesh associated with them. For AMG, the graph representation of the problem at a certain level is explicitly ‘coarsened’ by using various mechanisms [28, 101, 102]. Since these mechanisms are geared toward a certain class of problems, essentially originating from Poisson-like partial differential equations, researchers later sought to extend AMG ideas in order to define algebraic techniques based on incomplete LU (ILU) factorizations [4,5,6,7,8,9, 11, 23, 90, 118].

One can say that the idea of coarsening a graph in data-related applications started with the 1939 article of Kron [72], whose aim was to downsample electrical networks. Kron used his deep intuition to define coarsening techniques that rely on Schur complements, with the goal of obtaining sparse graphs. The justifications for the proposed technique were based on intuition rooted in knowledge about electrical networks. The related technique, widely known as Kron reduction, was revived by Dörfler and Bullo [46], who provided a more rigorous theoretical foundation. Later, Shuman et al. [109] extended the Kron reduction into a multilevel framework. In parallel with this line of work, a number of authors developed techniques that bypassed the need to form or approximate the Schur complement, relying instead on node aggregation and matching [35, 62, 64, 74, 95, 96, 106, 115].

Applications of graph coarsening in machine learning generally fall in two categories. First, coarsening is instrumental in graph embeddings. When dealing with learning tasks on graphs, it is very convenient to represent a node with a vector in \({\mathbb {R}}^d\) where d is small. The mapping from a node to the representing vector is termed node (or vertex) embedding and finding such embeddings tends to be costly. Hence, the idea is to coarsen the graph first, perform some embedding at the coarse level, and then refine-propagate the embedding back to the upper level; see [35, 42, 74, 94] for examples of such techniques. The second category of applications is when invoking pooling on graphs, in the context of graph neural networks (GNNs) [77, 126, 127]. However, in the latest development of GNNs, coarsening is not performed on the given graph at the outset. Instead, coarsening is part of the neural network and it is learned from the data. Another class of applications of coarsening is that of graph filtering, as illustrated by the articles [109, 110].

The goal of this paper is to show how the idea of coarsening has been exploited in scientific computing and how it is now emerging in machine learning. While the problems under consideration in scientific computing are fundamentally different from those of machine learning, the basic ingredients used in both fields are strikingly similar. The paper starts with a discussion of graph coarsening in scientific computing (Section 2), followed by a section on graph coarsening in machine learning (Section 3). We also present some newly developed coarsening methods and results, in the context of machine learning, in Sections 4–5.

1.1 Notation and preliminaries

We denote by \(G = (V,E)\) a graph with n nodes and m edges, where V is the node set and E is the edge set. The weights of the edges of G are stored in a matrix A, so \(a_{ij}\) is the weight of the edge \((i,j) \in E\). In most cases we will assume that the graph is undirected. We sometimes use \(G=(V,E,A)\) to denote the graph, when A is emphasized.

The sum of row i of A is called the degree of node i and the diagonal matrix of the degrees is called the degree matrix:

\[ d_i = \sum _{j=1}^{n} a_{ij}, \qquad D = \text{diag}(d_1, d_2, \ldots , d_n). \tag{1} \]

With this notation, the graph Laplacian matrix L is defined as:

\[ L = D - A. \tag{2} \]

This definition implies that \(L {\mathbf {1}}= 0 \) where \({\mathbf {1}}\) is the vector of all ones, i.e., \({\mathbf {1}}\) is an eigenvector associated with the eigenvalue zero. In the simplest case, each weight \(a_{ij}\) is either zero (not adjacent) or one (adjacent). A simple, yet very useful, property of graph Laplacians is that for any vector x, we have the relation

\[ x^T L x = \frac{1}{2} \sum _{i,j=1}^{n} a_{ij} (x_i - x_j)^2. \tag{3} \]

The normalized Laplacian is defined as follows:

\[ {\widehat{L}} = D^{-1/2} L D^{-1/2} = I - D^{-1/2} A D^{-1/2}. \tag{4} \]

Note that the diagonal entries of \({\widehat{L}}\) are all ones. The matrix is again singular and has the null vector \(D^{1/2} {\mathbf {1}}\).

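We will also make use of the incidence matrix denoted by \(B\in {\mathbb {R}}^{n\times m}\). A column \(b_e\) of B represents an edge \(e\in E\) between nodes i and j with weight \(a_{ij}\), and its k-th entry is defined as follows:

\[ b_e(k) = \begin{cases} -\sqrt{a_{ij}} & \text{if } k = i, \\ +\sqrt{a_{ij}} & \text{if } k = j, \\ 0 & \text{otherwise}. \end{cases} \tag{5} \]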

Note that the two nonzero values of \(b_e(k)\) have opposite signs, but we have a choice regarding which of i and j is assigned the negative sign. Unless otherwise specified, we simply assign the negative sign to the smaller of i and j. As is well known, the graph Laplacian can be defined from the incidence matrix through the relation \(L = BB^{T}\).
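The definitions of this section can be checked numerically on a small graph. The following is a minimal sketch, not part of the original development: the function name `graph_matrices` and the 4-node example are hypothetical, and the incidence entries are assumed to be \(\pm \sqrt{a_{ij}}\), which is the scaling under which \(L = BB^T\) holds for weighted graphs.

```python
import numpy as np

def graph_matrices(A):
    """Return (L, L_hat, B) for a symmetric, nonnegative weight matrix A
    with zero diagonal and no isolated nodes."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    d = A.sum(axis=1)                                  # degrees = row sums of A
    L = np.diag(d) - A                                 # graph Laplacian
    d_isqrt = 1.0 / np.sqrt(d)
    L_hat = d_isqrt[:, None] * L * d_isqrt[None, :]    # normalized Laplacian
    # One incidence column per edge (i, j) with i < j; the smaller index
    # gets the negative sign. Entries +-sqrt(a_ij) are an assumption.
    edges = [(i, j) for i in range(n) for j in range(i + 1, n) if A[i, j] != 0]
    B = np.zeros((n, len(edges)))
    for e, (i, j) in enumerate(edges):
        B[i, e] = -np.sqrt(A[i, j])
        B[j, e] = np.sqrt(A[i, j])
    return L, L_hat, B

# Hypothetical 4-node weighted graph.
A = np.array([[0, 2, 1, 0],
              [2, 0, 0, 3],
              [1, 0, 0, 1],
              [0, 3, 1, 0]], dtype=float)
L, L_hat, B = graph_matrices(A)

# Quadratic form computed directly from the edge weights.
x = np.array([1.0, -2.0, 0.5, 3.0])
quad = 0.5 * sum(A[i, j] * (x[i] - x[j]) ** 2
                 for i in range(4) for j in range(4))
```

With these quantities one can verify that `L @ np.ones(4)` vanishes, that `B @ B.T` reproduces `L`, and that `x @ L @ x` agrees with `quad`.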

1.2 Terminology and notation specific to machine learning

In data-related applications, graph nodes are often equipped with feature vectors and labels. We use an \(n\times d\) matrix X to denote the feature matrix, whose i-th row is the feature vector of node i. We use an \(n\times c\) matrix Y to denote the label matrix, where c is the number of categories. When a node i belongs to category j, \(Y_{ij}=1\) while \(Y_{ij'}=0\) for all \(j'\ne j\). Each row of Y is called a ‘one-hot’ vector. When there are only two categories, the \(n\times 2\) matrix Y can equivalently be represented by an \(n\times 1\) binary vector y in a straightforward manner.

For example, in a transaction graph, where nodes represent account holders and edges denote transactions between accounts, a node may have \(d=4\) features: account balance, account active days, number of incoming transactions, and number of outgoing transactions; as well as \(c=3\) categories: individual, non-financial institution, and financial institution. A typical task is to predict the account category given the features.

The feature matrix X provides complementary information to a graph \(G=(V,E,A)\) that captures relations between data items. Thus, a feature matrix is often associated with a graph. One should not confuse the feature matrix with a data matrix, which is often used in the situation where a graph structure is not available, but may be constructed based on the information of the data items. By convention, a data matrix is also denoted by X, which clashes with the notation for the feature matrix; for the moment let us use Z instead to denote it, where the i-th row of Z is \(z_i\). One may construct a graph G from Z. For example, in a k-nearest neighbors (kNN) graph, there is an edge from node i to node j if and only if \(r_{ij}\) is among the k smallest elements of \(\{r_{ij'}=\Vert z_{j'}-z_i\Vert \mid j'\ne i\}\). One may even define the weighted adjacency matrix A as \(a_{ij}=e^{-r_{ij}}\) when there is an edge (i, j) and \(a_{ij}=0\) otherwise. In this case, the constructed graph is entirely decided by the data matrix Z, rather than by holding complementary information to it, as is done with the feature matrix.
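The kNN construction just described can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `knn_graph` and the toy data matrix are hypothetical, distances are Euclidean, and the final symmetrization by an entrywise maximum is one possible convention for obtaining an undirected graph, not something prescribed by the text.

```python
import numpy as np

def knn_graph(Z, k):
    """Directed kNN weight matrix: a_ij = exp(-r_ij) if j is one of the
    k nearest neighbors of i, and 0 otherwise."""
    Z = np.asarray(Z, dtype=float)
    n = Z.shape[0]
    # Pairwise distances r_ij = ||z_j - z_i||.
    R = np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=2)
    np.fill_diagonal(R, np.inf)            # exclude j = i
    A = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(R[i])[:k]        # indices of the k smallest r_ij
        A[i, nbrs] = np.exp(-R[i, nbrs])
    return A

# Hypothetical data matrix with four points in the plane.
Z = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]])
A = knn_graph(Z, k=2)
A_sym = np.maximum(A, A.T)                 # assumed symmetrization
```

Note that the directed matrix `A` has exactly k nonzero entries per row, whereas the symmetrized matrix may have more.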

2 Graph coarsening in scientific computing

Given a graph \(G=(V,E)\), the goal of graph coarsening is to find a smaller graph \(G_c=(V_c,E_c)\) with \(n_c\) nodes and \(m_c\) edges, where \(n_c < n\), which is a good approximation of G in some sense. Specifically, we would like the coarse graph to provide a faithful representation of the structure of the original graph. We denote the adjacency matrix of \(G_c\) by \(A_c\) and the graph Laplacian of \(G_c\) by \(L_c\).

We will first elaborate on one of the most important scenarios that invoke coarsening (Section 2.1) and then discuss several representative approaches to it (Sections 2.2 to 2.5). Note that in practice, coarsening often proceeds recursively on the resulting graphs; by doing so, we obtain a hierarchy of approximations to the original graph.

2.1 Multilevel methods for linear systems: AMG and multilevel ILU

Graph coarsening strategies are usually invoked when solving linear systems of equations, by multilevel methods such as (A)MG [58, 101, 116] or Schur-based multilevel techniques [7, 11, 37, 79, 80, 90, 105]. In (A)MG, this amounts to selecting a subset of the original (fine) grid, known as the ‘coarse grid.’ In AMG, the selection of coarse nodes is made in a number of different ways. The classical Ruge-Stüben strategy [101] selects coarse nodes based on the number of ‘strong connections’ that a node has. Here, nodes i and j are strongly connected if \(a_{ij}\) has a large magnitude relative to other nonzero off-diagonal elements of row i. The net effect of this strategy is that each fine node is strongly coupled with the coarse set. In other methods, the strength of connection is defined from the speed with which components of a relaxation scheme for solving the homogeneous system \(Au = 0\) converge to zero; see [25, 36, 98] and Section 2.4 for additional details. For multilevel Schur-based methods, such as multilevel ILU, the coarsening strategy may correspond to selecting from the adjacency graph of the original matrix, a subset of nodes that form an independent set [105], or a subset of nodes that satisfy good diagonal dominance properties [103] or that limit the growth in the inverse LU factors of the ILU factorization [21, 22].

The coarsening strategy can be expanded into a multilevel framework by repeating the process described above on the graph associated with the nodes in the coarse set. Let \(G_0\) be the original graph G and let \(G_1, G_2, \ldots , G_h\) be a sequence of coarse graphs such that \(G_\ell = (V_\ell , E_\ell )\) is obtained by coarsening on \(G_{\ell -1}\) for \(1\le \ell \le h\). Let \(A^{(0)} \equiv A\) and \(A^{(\ell )}\) be the matrix associated with the \(\ell \)-th level. The graph \(G_{\ell }\) admits a splitting into coarse nodes, \(C_{\ell }\), and fine nodes, \(F_{\ell }\), so that the linear system at the \(\ell \)-th level, which consists of the matrix \(A^{(\ell )}\) and the right-hand side \(f^{(\ell )}\), can be reordered as follows:

\[ \begin{bmatrix} A_{CC}^{(\ell )} & A_{CF}^{(\ell )} \\ A_{FC}^{(\ell )} & A_{FF}^{(\ell )} \end{bmatrix} \begin{bmatrix} u_C^{(\ell )} \\ u_F^{(\ell )} \end{bmatrix} = \begin{bmatrix} f_C^{(\ell )} \\ f_F^{(\ell )} \end{bmatrix}. \tag{6} \]

Note that it is also possible to list the fine nodes first followed by the coarse nodes; see [90]. The coarser-level graph \(G_{\ell +1}\) as well as the new system consisting of the matrix \(A^{(\ell +1)}\) and the right-hand side \(f^{(\ell +1)}\) at the next level, are constructed from \(G_{\ell }\) and \(A^{(\ell )}\). These are built in a number of different ways depending on the method under consideration. For the graph, we can for example set two coarse nodes to be adjacent in \(G_{\ell +1}\), if their representative children are adjacent in \(G_{\ell }\). One common way to do this is to define two coarse nodes to be adjacent in \(G_{\ell +1}\) if they are parents of adjacent nodes in \(G_{\ell }\). Next we discuss coarsening in the specific context of AMG.

2.1.1 Algebraic multigrid

AMG techniques are all about generalizing the interpolation and restriction operations of standard MG. The coarsening process identifies for each fine node a set of nearest neighbors from the coarse set. Using various arguments on the strength of connection between nodes, AMG expresses a fine node i as a linear combination of a selected number of nearest neighbors that form a set \(C_i\), see Fig. 2. To simplify notation, we consider only one level of coarsening and drop the subscript \(\ell \).

If C is the set of coarse nodes and F is the set of fine nodes, we can define related subspaces \({\mathcal {X}}_C\) and \({\mathcal {X}}_F\) of the original space \({\mathcal {X}}={\mathbb {R}}^n\). In fact we can write \({\mathcal {X}} = {\mathcal {X}}_C \oplus {\mathcal {X}}_F\). Then, given a vector x with components in the coarse space \({\mathcal {X}}_C\), we associate a vector Px in the original space \({\mathcal {X}}\), whose i-th component is defined as follows [104, 13.6.2]:

\[ [Px]_i = \begin{cases} x_i & \text{if } i \in C, \\ \sum _{j \in C_i} p_{ij}\, x_j & \text{otherwise}. \end{cases} \tag{7} \]

The mapping P sends a point of \({\mathcal {X}}_C\) into a point \(y = Px\) of \({\mathcal {X}}\). The value of y at a coarse point, a node in C, is the same as its starting value. The value at another node, one in F, is defined from interpolated values at a few coarse points. Thus, P is known as the interpolation operator.

The transpose of P represents the restriction, or coarsening mapping. In the context of AMG, it projects a point in \({\mathcal {X}}\) into a point in \({\mathcal {X}}_C\). Each node in C is a linear combination of nodes of the original graph.

If we now return to the multilevel case where \(P_{\ell }\) denotes a corresponding interpolation operator at the \(\ell \)-th level, then AMG defines the linear system at the next level using Galerkin projection, where the matrix and right-hand side are, respectively,

\[ A^{(\ell +1)} = P_{\ell }^T A^{(\ell )} P_{\ell }, \qquad f^{(\ell +1)} = P_{\ell }^T f^{(\ell )}. \]

Recall that we started with the original system \(A^{(0)} x = f^{(0)}\), which corresponds to \(\ell = 0\). AMG methods rely on a wide variety of iterative procedures that consist of exploiting different levels for building an approximate solution. It is important to note here that the whole AMG scheme depends entirely on defining a sequence of interpolation operators \(P_\ell \) for \(\ell = 0, 1,\ldots \) Once the \(P_{\ell }\)’s are defined, one can design various ‘cycles’ in which the process goes back and forth from the finest level to the coarsest one in an iterative procedure.

When defining the interpolation operator \(P_{\ell }\), there are two possible extremes worth noting, even though these extremes are not used in AMG in practice. On the one end, we find the trivial interpolation in which the \(p_{ij}\)’s in equation (7) are all set to zero. In this case, referring to (6), \(A^{(\ell +1)}\) is simply \(A^{(\ell +1)} = A_{CC}^{(\ell )}\).

The other extreme is the perfect interpolation case which yields the Schur complement system. Here the interpolation operator is

\[ P_{\ell } = \begin{bmatrix} -[A_{CC}^{(\ell )}]^{-1} A_{CF}^{(\ell )} \\ I \end{bmatrix}. \]

The right reduced matrix \(A^{(\ell )} P_{\ell } \) involves the Schur complement matrix associated with the coarse block:

\[ A^{(\ell )} P_{\ell } = \begin{bmatrix} 0 \\ S_\ell \end{bmatrix}, \qquad S_\ell = A_{FF}^{(\ell )} - A_{FC}^{(\ell )} [A_{CC}^{(\ell )}]^{-1} A_{CF}^{(\ell )}. \]

We also clearly have \( A^{(\ell +1)} = P_{\ell }^T A^{(\ell )} P_{\ell } = S_\ell . \) The exact solution of (6) can be written in the form \([u_{C}^{(\ell )} ; u_{F}^{(\ell )}] \) where MATLAB notation is used, i.e., [x; y] is the concatenation of the vector x followed by the vector y. Then, if we denote by \(w_C^{(\ell )} \) the coarse solution \( w_C^{(\ell )} = [A_{CC}^{(\ell )}]^{-1}f_C^{(\ell )}\), it can be seen that \(u_F^{(\ell )}\) is the solution of the Schur complement system \( S_\ell u_F^{(\ell )} = f_F^{(\ell )} - A_{FC}^{(\ell )} w_C^{(\ell )}\). In addition, once \(u_F^{(\ell )}\) has been computed, the whole solution of (6) can be perfectly reconstructed via substitution since we have:

\[ \begin{bmatrix} u_C^{(\ell )} \\ u_F^{(\ell )} \end{bmatrix} = \begin{bmatrix} w_C^{(\ell )} \\ 0 \end{bmatrix} + P_{\ell }\, u_F^{(\ell )}. \]

This approach is nothing but a block form of Gaussian elimination and it is generally costly although there are practical alternatives discussed in the literature [11, 21, 82, 118] that are based on Schur complements. However, it is worth pointing out that, viewed from this angle, the goal of all AMG methods is essentially to find inexpensive approximations to the Schur complement system.
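The perfect interpolation case can be verified numerically on a small dense example. The sketch below is an illustration only: the random symmetric positive definite test matrix and all variable names are hypothetical, and the block form of P (with the coarse unknowns listed first and the Schur complement taken with respect to the coarse block) is an assumption inferred from the relations stated above.

```python
import numpy as np

rng = np.random.default_rng(0)
n, nc = 6, 2                              # nc coarse unknowns, listed first
M = rng.standard_normal((n, n))
A = M @ M.T + n * np.eye(n)               # hypothetical SPD test matrix
f = rng.standard_normal(n)

A_CC, A_CF = A[:nc, :nc], A[:nc, nc:]
A_FC, A_FF = A[nc:, :nc], A[nc:, nc:]

# Assumed form of the perfect interpolation operator and Schur complement.
P = np.vstack([-np.linalg.solve(A_CC, A_CF), np.eye(n - nc)])
S = A_FF - A_FC @ np.linalg.solve(A_CC, A_CF)

# Solve the reduced system, then reconstruct the full solution.
w_C = np.linalg.solve(A_CC, f[:nc])
u_F = np.linalg.solve(S, f[nc:] - A_FC @ w_C)
u = np.concatenate([w_C, np.zeros(n - nc)]) + P @ u_F
```

In this setting the Galerkin product `P.T @ A @ P` coincides with `S`, and the reconstructed vector `u` solves the original system, which is the block Gaussian elimination interpretation mentioned above.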

2.1.2 Multilevel ILU preconditioners based on coarsening

The issue of finding a good ordering for ILU generated a great deal of research interest in the past; see, e.g., [17,18,19, 26, 27, 38,39,40, 45, 103]. A class of techniques presented in [90] consisted of preprocessing the linear system with an ordering based on coarsening. Thus, for a one-level ordering the matrix is ordered as shown in (6). Then, in a second-level coarsening, \(A_{22}^{(0)}\) is in turn reordered and we end up with a matrix like the following (see Footnote 1):

This is repeated with \(A_{22}^{(1)}\) and further down for a few levels. Then the idea is simply to perform an ILU factorization of the resulting reordered system. Next, we describe a method based on this general approach.

The first ingredient of the method is to define a weight \(w_{ij} \) for each nonzero pair (i, j). This will set an order in which to visit the edges of the graph. The strategies described next are ‘static’ in that given a certain matrix A (one of the \(A^{(\ell )}\)’s), these weights are precomputed, in contrast with dynamic ones used in, e.g., [78, 103, 105]. If \({nnz_i} \) is the number of nonzero entries of row i and \({nnz_j}\) is the number of nonzero entries of column j, we define the weights as follows:

where MATLAB notation is exploited and \(\Vert \cdot \Vert _1\) is the usual 1-norm. The two terms in the brackets of (9) represent the importance of \(|a_{ij}|\) relative to the other elements in the same row and column, respectively. If our goal is to put large entries in the (1,2) block of the matrix when it is permuted (block \(A_{CF}^{(\ell )}\) in Equation (6)), then we need to traverse the graph starting from the largest to smallest \(w_{ij}\).

The above defines an order in which to visit edges. Next, each time an edge (i, j) is visited we need to determine which one of i and j will be selected as a coarse node. This requires a ‘preference’ measure, or weight, for each node. When \(a_{kk} \ne 0\) we define the impact of ‘pivot’ k as the average potential fill-in created when eliminating unknown k. In the formula \(a_{ij} = a_{ij} - a_{ik}\times a_{kj}/a_{kk}\) employed in the k-th step of Gaussian elimination, the term \(-a_{ik}\times a_{kj} / a_{kk} \) is a potential fill-in. This is a very crude approximation because it assumes that the entries have not changed. We define the ‘impact’ of the diagonal entry k as the inverse of the quantity:

When visiting edge (k, l), we add k to the coarse set if \({\phi _k>\phi _l}\) and l otherwise.

Here, we show an example on the matrix ‘Raefsky3’, which is of size 21,200 and has 1,488,768 nonzero elements. It comes from a fluid structure interaction turbulence problem and can be obtained from the SuiteSparse collection (see Footnote 2). Figure 3 (left) shows the pattern of the reordered matrix according to the coarsening strategy described above, using four levels of coarsening. The original matrix is not shown but, as expected, its pattern is very similar to that of the (1,1) block of the reordered matrix, and has roughly twice the size.

For this particular matrix, standard ILU-based strategies perform poorly. Using MATLAB, we applied GMRES(50) to the system, preconditioned with ILU (‘crout’ version) with a drop tolerance of 0.01. The resulting iterates stagnate as indicated by the top curve in the right side of Fig. 3. The number 2.62 is the ‘fill-factor,’ which is the ratio of the number of nonzero elements of the LU factors over the number of nonzero elements of the original matrix. When comparing preconditioners of this type, we strive to ensure that these fill-factors are about the same for the preconditioners being compared. Next we perform an ILU factorization (‘crout’ version again) on the reordered system using the coarsening described above. In order to achieve a fill-factor similar to that of the standard factorization we lowered the drop tolerance to 0.0008. The new method converged in 29 iterations with a fill-factor of 2.57. We also tested a more traditional preconditioner based on block Gauss-Seidel, exploiting the block structure shown on the left side of Fig. 3. Each block Gauss-Seidel step requires solving a system with the diagonal blocks of the reordered matrix. These systems are approximately solved using a simple ILU(0) (‘nofill’) factorization. Note that the fill-factor here is very low (0.33). Each preconditioning step consists of 10 Gauss-Seidel iterations. As is shown, this also converges, although more slowly.

2.2 Coarsening approach: Pairwise aggregation

The broad class of ‘pairwise aggregation’ techniques, e.g., [37, 85, 89, 116, 119, 120], is a strategy that seeks to simply coalesce two adjacent nodes in a graph into a single node, based on some measure of nearness or similarity. The technique is based on edge collapsing [63], which is a well known method in the multilevel graph partitioning literature. In this method, the collapsing edges are usually selected using the maximal matching method. A matching of a graph \(G=\left( V,E \right) \) is a set of edges \({\widetilde{E}}\), \({\widetilde{E}}\subseteq E\), such that no two edges in \({\widetilde{E}}\) have a node in common. A maximal matching is a matching that cannot be augmented by additional edges to obtain a larger matching in the sense of inclusion. Coarsening schemes based on edge matching have been in use in the AMG literature for decades [101]. For each node i, a coarsening algorithm starts from building a set \(S_i\) of nodes that are ‘strongly connected’ to i by using some measure of connection strength. The graph nodes are traversed in a certain order of preference and the next unmarked node in this order, say j, is selected as a coarse node. The priority measure of the traversal is updated after each insertion of a coarse node. There are a number of ways to find a maximal matching for coarsening a graph.

The heavy-edge matching (HEM) approach, e.g., [67], is a greedy matching algorithm that works with the weight matrix A of the graph. It simply matches a node i with its largest off-diagonal neighbor \(j_{max}\); i.e., we have \( | a_{ij_{max}} | = \max _{j \in adj (i), j\ne i} | a_{ij}|\), where adj(i) denotes the adjacency (or nearest-neighbor) set of node i. When selecting the largest neighboring entry, ties are broken arbitrarily. If \(j_{max}\) is already matched with some node \(k\ne i\) seen before, i.e., \(p(k) = j_{max}\), then node i is left unmatched and considered as a singleton. Otherwise, we match i with \(j_{max}\) and the result is \(p(i)=j_{max}\).

A version of HEM is shown in Algorithm 1, modified from [90]. The algorithm proceeds by exploiting a greedy approach. It scans all edges (i, j) in decreasing value of their weight \(a_{ij}\). If neither i nor j has defined parents, it creates a new coarse node labeled new and sets the parents of i and j to be new. After the loop is completed, there will be singletons; i.e., nodes that have not been assigned a parent in the loop. As shown in lines 10–16, a ‘singleton’ node is either added as a coarse node, if it is disconnected (‘real singleton’), or it is lumped as a child of an already generated coarse node (‘left-over singleton’). Figure 4 gives an illustration of this step.

Fig. 4. The coarsening process. Original and coarse nodes are colored in black and yellow, respectively. Dashed arrow indicates parent-child relationship. Solid line represents heaviest-weighted edge. Left: A coarse node is created from two adjacent nodes 1 and 2. Middle: A coarse node is created from a true singleton node 3. Right: A left-over singleton node 4 is attached to a coarse, nearest neighbor node 2.
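The greedy procedure described above can be sketched as follows. This is not a transcription of Algorithm 1; it is a minimal illustration that follows the prose description, with hypothetical names (`heavy_edge_matching`, `parent`) and two assumptions labeled in the comments: a left-over singleton is attached to the parent of its heaviest neighbor, and the coarse weights are obtained by summing the fine weights between aggregates.

```python
import numpy as np

def heavy_edge_matching(A):
    """Greedy HEM sketch. Returns (parent, nc), where parent[i] is the
    coarse node of fine node i and nc is the number of coarse nodes."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    parent = np.full(n, -1)
    edges = [(abs(A[i, j]), i, j)
             for i in range(n) for j in range(i + 1, n) if A[i, j] != 0]
    new = 0
    # Scan edges by decreasing weight; match i and j if both are free.
    for _, i, j in sorted(edges, reverse=True):
        if parent[i] < 0 and parent[j] < 0:
            parent[i] = parent[j] = new
            new += 1
    # Handle singletons left unmatched by the loop above.
    for i in np.flatnonzero(parent < 0):
        nbrs = np.flatnonzero(A[i])
        nbrs = nbrs[nbrs != i]
        if nbrs.size == 0:                 # real singleton: own coarse node
            parent[i] = new
            new += 1
        else:                              # left-over singleton (assumed rule:
            j = nbrs[np.argmax(np.abs(A[i, nbrs]))]   # heaviest neighbor)
            parent[i] = parent[j]
    return parent, new

# Hypothetical example: weighted path 0-1-2-3-4 plus an isolated node 5.
A = np.zeros((6, 6))
for i, j, w in [(0, 1, 5.0), (1, 2, 1.0), (2, 3, 4.0), (3, 4, 2.0)]:
    A[i, j] = A[j, i] = w
parent, nc = heavy_edge_matching(A)

# Assumed coarse weights: sum of fine weights between aggregates.
P = np.zeros((6, nc))
P[np.arange(6), parent] = 1.0
A_c = P.T @ A @ P
np.fill_diagonal(A_c, 0.0)
```

Every neighbor of a left-over singleton already has a parent when the second loop runs, since an edge between two free nodes would have been matched in the first loop.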

2.3 Coarsening approach: Independent sets

It is also possible to exploit independent sets (see, e.g., [96]) for coarsening graphs. Recall that an independent set \({\mathcal {S}}\) is a subset of V that consists of nodes that are not adjacent to each other; i.e., no pair (v, w) where \(v, w \in {\mathcal {S}}\) is linked by an edge. An independent set \({\mathcal {S}}\) is maximal if no (strict) superset of \({\mathcal {S}}\) forms another independent set. One can use the nodes of a carefully selected independent set to form a coarse graph; i.e., we can define \(V_c = {\mathcal {S}}\). Then, it is relatively easy to determine the edges and weights between these nodes by using information from the original graph. For example, in [96] an edge is inserted between v and w of \(V_c\) if there is at least one node y in \(V \backslash V_c\) such that \((v,y) \in E\) and \((w,y) \in E\). This will produce the edge set \(E_c\) needed to form the coarse graph \(G_c\).

Let L be the graph Laplacian, reordered such that the \(n_c\) nodes of \(V_c\) are listed first. Then L will have the following structure, where \(D_c \ \in \ {\mathbb {R}}^{n_c\times n_c}\) :

\[ L = \begin{bmatrix} D_c & -F \\ -F^T & B \end{bmatrix}. \]

Since the nodes associated with the (1,1) block in the above matrix form an independent set, it is clear that the matrix \(D_c\) is diagonal. Coarsening by independent sets will consist of taking the matrix \(D_c\) and adding off diagonal elements to it to obtain the adjacency matrix \(A_c\). An important observation to be made here is that the edges added by the independent set coarsening are those obtained from the Schur complement with respect to B, where B is replaced by a diagonal matrix. Let us assume that B is replaced by a matrix \(D_f\). The resulting Schur complement is

\[ S_c = D_c - F D_f^{-1} F^T. \]

The nonzero pattern of \(S_c\) is the same as that of \(FF^T\), which is in turn the sum of the patterns of \(f_j f_j^T\) where \(f_j\) is the j-th column of F. If \(f_j \) has nonzero entries in locations i and k, then we will have nonzero entries in the positions (i, i), (i, k), (k, i) and (k, k) of \(S_c\). Next, we will define \(D_f\) more specifically. Let \(D_f\) be the diagonal of row-sums of \(F^T\) and assume for now that these are all nonzero. This is the same diagonal as the one used for the graph Laplacian except that the summation ignores the entries \(a_{ij}\) when i and j are both in \( V\backslash V_c\). In MATLAB notation: \(D_f = \text{ diag }(F^T {\mathbf {1}})\). In this situation, \(S_c\) becomes a Laplacian.

Proposition 2.1

Let B be replaced by \(D_f\), defined as the diagonal of the row-sums of \(F^T\). Then \(D_f\) is invertible. Let \(L_c = D_c - F D_f^{-1}F^T\). Then the graph of \(L_c\) is \(G_c\), the graph of the independent set coarsening of G. In addition, \(L_c\) is a graph Laplacian; specifically, it is the graph Laplacian of \(G_c\).

Proof

Because the independent set is maximal, we cannot have a zero diagonal element in \(D_f\). Indeed, if the opposite was true, then one row, say row k, of \(F^T\) would be zero. This would mean that we could add node k to the independent set, because it is not coupled with any element of \({\mathcal {S}}\). The result would be another independent set that includes \({\mathcal {S}}\), contradicting maximality.

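It was shown above that the adjacency graph of \(F F^T\), which is the same as that of \(L_c\), is exactly \(G_c\). It is left to show that \(L_c\) is a Laplacian. Since \(D_f\) and F have nonnegative entries, the off-diagonal entries of \(L_c \) are clearly nonpositive. Next, note that \(F^T {\mathbf {1}}= D_f {\mathbf {1}}\) and hence

\[ L_c {\mathbf {1}} = D_c {\mathbf {1}} - F D_f^{-1} F^T {\mathbf {1}} = D_c {\mathbf {1}} - F D_f^{-1} D_f {\mathbf {1}} = D_c {\mathbf {1}} - F {\mathbf {1}} = 0, \]

where the last equality follows from the first block row of the relation \(L {\mathbf {1}} = 0\).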

Thus, \(L_c\) is indeed a Laplacian. \(\square \)
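Proposition 2.1 can be illustrated on a small example. The following sketch makes several assumptions that are not part of the text: the maximal independent set is built by a simple greedy sweep in natural order (any maximal independent set would do), the function names are hypothetical, and the test graph is a 6-node cycle with unit weights.

```python
import numpy as np

def greedy_maximal_independent_set(A):
    """Boolean mask of a maximal independent set, built greedily."""
    n = A.shape[0]
    in_set = np.zeros(n, dtype=bool)
    blocked = np.zeros(n, dtype=bool)
    for v in range(n):
        if not blocked[v]:
            in_set[v] = True
            blocked[v] = True
            blocked[A[v] != 0] = True      # neighbors of v cannot be selected
    return in_set

def independent_set_laplacian(A, in_set):
    """L_c = D_c - F D_f^{-1} F^T, with D_f the row sums of F^T."""
    c = np.flatnonzero(in_set)
    f = np.flatnonzero(~in_set)
    D_c = np.diag(A[c].sum(axis=1))        # degrees of the coarse nodes
    F = A[np.ix_(c, f)]                    # coarse-to-fine coupling weights
    D_f = F.sum(axis=0)                    # nonzero by maximality
    return D_c - (F / D_f) @ F.T

# Hypothetical example: cycle on 6 nodes with unit weights.
n = 6
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0

in_set = greedy_maximal_independent_set(A)
L_c = independent_set_laplacian(A, in_set)
```

For this cycle the greedy sweep selects nodes 0, 2, and 4, and the resulting `L_c` has zero row sums and nonpositive off-diagonal entries, as the proposition states.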

2.4 Coarsening approach: Algebraic distance

Researchers in AMG methods defined a notion of ‘algebraic distance’ between nodes based on relaxation procedures. This notion is motivated by the bootstrap AMG (BAMG) method [25] for solving linear systems. AMG creates a coarse problem by trying to exploit some rules of ‘closeness’ between variables. In BAMG, this notion of closeness is defined from running a few steps of Gauss-Seidel relaxations, starting with some random initial guess for solving the related homogeneous system \(Ax= 0\). The speed of convergence of the iterate determines the closeness between variables. This is exploited to aggregate the unknowns and define restriction and interpolation operators [98]. In [36] this general idea was extended for use on graph Laplacians. In the referenced paper, Gauss-Seidel is replaced by Jacobi overrelaxation.

Algorithm 2 shows how these distances are calculated. They depend on two parameters: the overrelaxation parameter \(\omega \) and the number of steps, k. It can be shown that the distances \(s_{ij}^{(k)}\) converge to zero [36] as \(k\rightarrow \infty \). However, it was argued in [36] that the speed of decay of \(s_{ij}^{(k)}\) is an indicator of relative strength of connection between i and j. In other words, the important measure is the magnitude, in relative terms, of \(s_{ij}^{(k)}\) for different (i, j) pairs.

Note that the iteration is of the form \(x^{(j)} = H x^{(j-1)}\), where H is the iteration matrix

\[ H = (1-\omega ) I + \omega D^{-1} A. \]

Because \(D-A\) is a graph Laplacian, the largest eigenvalue of H is \(\lambda _1 =1\). It is then suggested to scale these scalars by \(\lambda _2^k\), where \(\lambda _2\) is the second largest eigenvalue in modulus. In general, it is sufficient to iterate for a few steps and stop at a step k when one observes the scaled quantities start to settle.
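The computation of algebraic distances can be sketched as follows. This is a hedged illustration rather than a reproduction of Algorithm 2: it assumes the Jacobi overrelaxation update \(x \leftarrow (1-\omega ) x + \omega D^{-1} A x\), a single random initial vector, and the distance \(s_{ij}^{(k)} = |x_i^{(k)} - x_j^{(k)}|\) evaluated on edges only; the function name, default parameters, and test graph are all hypothetical. The scaling by \(\lambda _2^k\) is omitted because only relative magnitudes are compared.

```python
import numpy as np

def algebraic_distances(A, omega=0.5, k=20, seed=0):
    """Return {(i, j): s_ij} for every edge i < j after k JOR steps
    applied to a random initial vector (assumed form of the iteration)."""
    A = np.asarray(A, dtype=float)
    d = A.sum(axis=1)
    x = np.random.default_rng(seed).uniform(-0.5, 0.5, A.shape[0])
    for _ in range(k):
        x = (1.0 - omega) * x + omega * (A @ x) / d   # x <- H x
    rows, cols = np.nonzero(np.triu(A, 1))
    return {(int(i), int(j)): abs(x[i] - x[j]) for i, j in zip(rows, cols)}

# Hypothetical example: two unit-weight triangles joined by a weak edge.
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    A[i, j] = A[j, i] = 1.0
A[2, 3] = A[3, 2] = 0.01
s = algebraic_distances(A)
```

In this example the distance on the weak bridge (2, 3) decays much more slowly than the distances inside either triangle, so it is the largest entry of `s`; a coarsening scheme would aggregate nodes within each triangle before merging across the bridge.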

As can be seen, a coarsening method based on algebraic distances is rather different from the previous two methods. Instead of working on the graph directly, we now use the iteration matrix to extract information on what may be termed a relative distance between variables. If two variables are close with respect to this distance, they may be aggregated or merged.

Ultimately, as was shown in [36], what is important is the decay of the component of the vector \(x^{(k)}-x^{(k-1)}\) in the second eigenvector. This distance between two vectors is indeed dominated by the component in the second eigenvector.

This brings up the question as to whether or not we can directly examine spectral information and infer from it a notion of distance on nodes. Spectral graph coarsening addresses this and will be examined in Section 4.

2.5 Techniques related to coarsening

Graph coarsening is a graph reduction technique, in the sense that it aims at reducing the size of the original graph while attempting to preserve its properties. There exist a number of other techniques in the same category. These include graph summarization [75, 86], graph compression [51], and graph sketching [71]. These are more common in machine learning and the tasks they address are specific to the underlying applications. In the following we discuss methods that are more akin to standard coarsening methods.

2.5.1 Graph reduction: Kron

The Kron reduction of networks was proposed back in 1939 [72], as a means to obtain lower dimensional electrically equivalent circuits in circuit theory. Its popularity gained momentum across fields after the appearance of a thorough analysis of the method in [46]. The method starts with a weighted graph \(G = (V, E, A)\) and the associated graph Laplacian L, along with a set \(V_1\) of nodes which is a strict subset of V. Such a subset can be obtained by downsampling, for example, although in the original application of circuits it is a set of nodes at the boundary of the circuit. The method essentially defines a coarse graph from the Schur complement of the original graph Laplacian with respect to this downsampled set.

The goal is to form a reduced graph on \(V_1\). This is viewed from the angle of Laplacians. If we order the nodes of \(V_1\) first, followed by those of the complement set to \(V_1\) in V, the Laplacian can be written in block form as follows:

\[ L = \begin{pmatrix} L_{11} & L_{12} \\ L_{21} & L_{22} \end{pmatrix} . \]

The Kron reduction of L is defined as the Schur complement of the original Laplacian relative to \(L_{22}\); i.e.,

\[ L(V_1) = L_{11} - L_{12} L_{22}^{-1} L_{21} . \tag{15} \]

This turns out to be a proper graph Laplacian as was proved in [46], along with a few other properties. We can therefore associate a set of weights \(A_{ij}^{(1)}\) for the reduced graph, defined from \(L(V_1)\):

\[ A_{ij}^{(1)} = \begin{cases} - L_{ij}(V_1) & \text{if } i \ne j, \\ 0 & \text{otherwise.} \end{cases} \]

An example is shown in Fig. 5.
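For readers who prefer code, the Schur complement that defines the Kron reduction can be sketched as below. This is a minimal illustration under stated assumptions: dense matrices stored as nested lists, a connected graph, and node-by-node Gaussian elimination in place of an explicit inverse of \(L_{22}\) (the two give the same Schur complement). The helper names `laplacian` and `kron_reduction` and the path graph are illustrative, not taken from [46].

```python
def laplacian(n, weighted_edges):
    """Dense graph Laplacian D - A from a list of (i, j, weight) triples."""
    L = [[0.0] * n for _ in range(n)]
    for i, j, w in weighted_edges:
        L[i][i] += w
        L[j][j] += w
        L[i][j] -= w
        L[j][i] -= w
    return L

def kron_reduction(L, keep):
    """Schur complement of the Laplacian L onto the nodes listed in `keep`."""
    n = len(L)
    S = [row[:] for row in L]              # work on a copy
    active = list(range(n))
    for k in range(n):
        if k in keep:
            continue
        active.remove(k)                   # eliminate node k
        pivot = S[k][k]                    # positive for a connected graph
        for i in active:
            for j in active:
                S[i][j] -= S[i][k] * S[k][j] / pivot
    return [[S[i][j] for j in keep] for i in keep]

# Path 0 - 1 - 2 - 3 with unit weights; keep only the two end nodes.
L = laplacian(4, [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0)])
L_red = kron_reduction(L, keep=[0, 3])
weight_03 = -L_red[0][1]                   # weight of the reduced edge (0, 3)
```

In circuit terms, three unit resistors in series behave like a single edge of conductance 1/3, which is the value of `weight_03`. The rows of `L_red` still sum to zero, as expected of a graph Laplacian.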

A multiscale version of the Kron reduction, called the pyramid transform, was proposed in [109], specifically for applications that involve signal processing on graphs [110]. It was developed as a multiscale (i.e., ‘multilevel’ in the scientific computing jargon) extension of a similar scheme invented in the early 1980s for image processing [31]. The extension is from regular data (discrete time signals, images) to irregular data (graphs, networks) as well as from one level to multiple levels.

An original feature of the paper [109] is the use of spectral information for coarsening the graph. Specifically, a departure from traditional coarsening methods such as those described in Sections 2.2 and 2.3 is that the separation into coarse and fine nodes is obtained from the ‘polarity’ (i.e., the sign of the entries) of the eigenvector associated with the largest eigenvalue of the graph Laplacian. The motivation of the authors is a theorem by Roth [99], which deals with bipartite graphs. Spectral information was exploited earlier by Aspvall and Gilbert [3] for the problem of graph coloring, an important ingredient of many linear algebra techniques. Another original feature of the paper [109] is the use of spectral methods for sparsifying the Schur complement. As was mentioned earlier, the Schur complement will typically be dense, if not full, in most situations. The authors invoke ‘sparsification’ to reduce the number of edges. We will cover sparsification in Section 2.5.3.

Example

As an example, we return to the illustration of Fig. 5. Using normalized Laplacians, we find that the largest eigenvector separates the graph in two parts according to its polarity, namely \(V_1 = \{ 1, 5, 6, 9, 10 \} \) and \(V_2=\{2, 3, 4, 7, 8, 11\}\). Thus, it is able to discover \(V_1\), the rather natural independent set we selected earlier.
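The polarity-based selection can be tried on a toy graph. The sketch below does not reproduce the graph of Fig. 5. It assumes a 4-node path, the combinatorial (unnormalized) Laplacian, and plain power iteration with a fixed start vector, all chosen for illustration.

```python
def laplacian(n, edges):
    """Dense combinatorial Laplacian of an unweighted graph."""
    L = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        L[i][i] += 1.0
        L[j][j] += 1.0
        L[i][j] -= 1.0
        L[j][i] -= 1.0
    return L

def largest_eigenvector(L, iters=500):
    """Power iteration; L is positive semidefinite, so the iterates
    align with the eigenvector of the largest eigenvalue."""
    n = len(L)
    x = [1.0] + [0.0] * (n - 1)    # start vector, assumed not orthogonal to the target
    for _ in range(iters):
        y = [sum(L[i][j] * x[j] for j in range(n)) for i in range(n)]
        norm = sum(v * v for v in y) ** 0.5
        x = [v / norm for v in y]
    return x

# Path graph 0 - 1 - 2 - 3, which is bipartite: {0, 2} versus {1, 3}.
L = laplacian(4, [(0, 1), (1, 2), (2, 3)])
u = largest_eigenvector(L)
V1 = [i for i, v in enumerate(u) if v > 0]    # kept ("downsampled") nodes
V2 = [i for i, v in enumerate(u) if v <= 0]   # eliminated nodes
```

On this bipartite graph the signs alternate along the path, so the polarity recovers the two color classes, in line with the bipartite setting that motivates the approach.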

One important question that can be asked is why resort to the Schur complement as a means of graph reduction? A number of properties regarding the Kron reduction were established in [46] to provide justifications. Prominent among these is the fact that the resistance distances [49] between nodes of the coarse graph are preserved. The resistance distance involves the pseudo-inverse of the Laplacian.

2.5.2 Relationship between Kron reduction and independent set coarsening

Instead of invoking independent sets, the Kron reduction, as it is used in [109], ‘downsamples’ nodes by means of spectral information. While these samples may form an independent set, this is not guaranteed. Just like independent set coarsening, the coarse graph is built from the Schur complement associated with the complement of the independent set (so-called node cover). These two ways of coarsening are illustrated in Fig. 6.

Unlike independent set coarsening, the matrix \(L_{22}\) involved in the Schur complement (15) is not approximated by a diagonal before inversion, in an effort to reduce the fill-ins introduced. Instead, spectral ‘sparsification’ is invoked. In the survey paper [46], the set \(V_1\) represents a set of ‘boundary points’ in an electrical network. Improved sparsity is achieved by other means than those employed in Section 2.3, specifically by eliminating a selection of internal nodes instead of all of them, in the Gaussian elimination process.

2.5.3 Graph sparsification

Graph sparsification methods aim at finding a sparse approximation of the original graph by trimming out edges from the graph [112, 113]. Here, the number of nodes remains the same but the number of edges |E| can be much lower than in the original graph. This is motivated by the argument that in some applications, the graphs that model the data tend to be rather dense and that many edges can be removed without negatively impacting the result of methods that exploit these graphs; see, e.g., [65].

Different measures of closeness between the sparsified and the original graph have been proposed for this purpose, resulting in a wide range of strategies such as spanners [2], cut sparsifiers [66], and spectral sparsifiers [113]. Graph sparsification methods can be beneficial when dealing with high density graphs and come with rigorous theoretical guarantees [13]; see also [16].

We already mentioned at the end of Section 2.5.1 one specific use of graph sparsifiers in the context of multilevel graph coarsening. To sparsify the successive Schur complements obtained by the multilevel scheme, the authors of [109] resorted to a spectral sparsifier developed in [112]. The algorithm exploits a distance measure based on effective resistances [49]. This notion was briefly mentioned in the context of the Kron reduction in Section 2.5.1 and will be discussed in some detail in Section 5.4. The sparsification algorithm of Spielman and Srivastava [112] samples edges according to a probability defined from the original weights of the graph and these effective resistances. It is shown [112] that the graph Laplacian spectrum and resistance distances between nodes are approximately preserved with high probability, if the number of samples is high enough. If \({{\widetilde{G}}}\) is the sparsified version of G, and if \({{\widetilde{L}}}\) and L are their respective Laplacians, the main goal of spectral sparsifiers is to preserve the quadratic form associated with the Laplacians. The graph \(\widetilde{G}\) is said to be a \(\sigma \)-spectral approximation of G if for all \(x \ \in \ {\mathbb {R}}^n\),

\[ \frac{1}{\sigma }\, x^T L x \ \le \ x^T {\widetilde{L}} x \ \le \ \sigma \, x^T L x . \]

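A trivial observation for \(\sigma \)-similar (\(\sigma >1\)) graphs is that their Rayleigh quotients

\[ r(x) = \frac{x^T L x}{x^T x}, \qquad {\widetilde{r}}(x) = \frac{x^T {\widetilde{L}} x}{x^T x} \]

for the same nonzero vector x satisfy the double inequality:

\[ \frac{1}{\sigma }\, r(x) \ \le \ {\widetilde{r}}(x) \ \le \ \sigma \, r(x) . \]

Thus, these Rayleigh quotients are, in relative terms, within a factor of \(\sigma -1\) of each other:

\[ | {\widetilde{r}}(x) - r(x) | \ \le \ (\sigma - 1)\, r(x) . \]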

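This has an impact on eigenvalues. If \(\sigma \) is close to one, then clearly the eigenvalues of L and \({{\widetilde{L}}}\) will be close to each other, thanks to the Courant–Fischer min-max characterization of eigenvalues [55]. In what follows, \(S_k\) represents a generic k-dimensional subspace of \({\mathbb {R}}^n\) and eigenvalues are sorted decreasingly. In this situation, the theorem states that the k-th eigenvalue of the Laplacian L satisfies:

\[ \lambda _k = \max _{S_k} \ \min _{x \in S_k, \ x \ne 0} \ \frac{x^T L x}{x^T x} . \]

The above maximum is achieved by a set, denoted by \(S_*\) (which is just the linear span of the set of eigenvectors \(u_1, \cdots , u_k\)). Then

\[ \lambda _k = \min _{x \in S_*, \ x \ne 0} \frac{x^T L x}{x^T x} \ \le \ \sigma \min _{x \in S_*, \ x \ne 0} \frac{x^T {\widetilde{L}} x}{x^T x} \ \le \ \sigma \max _{S_k} \ \min _{x \in S_k, \ x \ne 0} \frac{x^T {\widetilde{L}} x}{x^T x} = \sigma {\widetilde{\lambda }}_k . \tag{16} \]

The exact same relation as (16) holds if L and \({{\widetilde{L}}}\) are interchanged. Therefore, the above relation also holds if \(\lambda \) and \({{\widetilde{\lambda }}}\) are interchanged, which leads to the following double inequality, valid for \(k=1, 2, \cdots , n\):

\[ \frac{1}{\sigma }\, {\widetilde{\lambda }}_k \ \le \ \lambda _k \ \le \ \sigma \, {\widetilde{\lambda }}_k . \]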

It is also interesting to note a link with preconditioning techniques. When solving symmetric positive definite linear systems of equations, it is common to approximate the original matrix A by a preconditioner which we denote here by \({{\widetilde{A}}}\). Two desirable properties that must be satisfied by a preconditioner \({{\widetilde{A}}}\) are that (i) it is inexpensive to apply \(\widetilde{A}^{-1}\) to a vector; and (ii) the condition number of \({{\widetilde{A}}} ^{-1}A\) is (much) smaller than that of A. The second condition translates into the requirement that the ratio \( (x^TAx)/(x^T{{\widetilde{A}}} x) \) vary little over nonzero vectors x. If we assume that

\[ \frac{1}{\sigma }\, x^T A x \ \le \ x^T {\widetilde{A}} x \ \le \ \sigma \, x^T A x \quad \text{for all } x \in {\mathbb {R}}^n , \]

then the condition number of the preconditioned matrix, which is the ratio of the largest to the smallest eigenvalues of \({{\widetilde{A}}}^{-1}A\), will be bounded by \(\sigma ^2\). For additional details see [113] where this specific viewpoint was explicitly adopted, as well as [13, 112] among others.
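The \(\sigma ^2\) bound follows in two short steps. The derivation below is a sketch under the assumption that the hypothesis is the two-sided inequality \(\sigma ^{-1} x^TAx \le x^T{\widetilde{A}}x \le \sigma x^TAx\) for all x, with A and \({\widetilde{A}}\) both symmetric positive definite.

```latex
\begin{align*}
&\text{Dividing the hypothesis by } x^T \widetilde{A} x > 0: &
\frac{1}{\sigma} \;\le\; \frac{x^T A x}{x^T \widetilde{A} x} \;\le\; \sigma
\quad (x \ne 0), \\
&\text{Extreme eigenvalues as generalized Rayleigh quotients:} &
\lambda_{\max}(\widetilde{A}^{-1}A) = \max_{x \ne 0} \frac{x^T A x}{x^T \widetilde{A} x} \le \sigma,
\qquad
\lambda_{\min}(\widetilde{A}^{-1}A) = \min_{x \ne 0} \frac{x^T A x}{x^T \widetilde{A} x} \ge \frac{1}{\sigma}, \\
&\text{Hence:} &
\kappa(\widetilde{A}^{-1}A) = \frac{\lambda_{\max}(\widetilde{A}^{-1}A)}{\lambda_{\min}(\widetilde{A}^{-1}A)} \;\le\; \sigma^2 .
\end{align*}
```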

2.5.4 Graph partitioning

The main goal here is to put in contrast the problem of coarsening with that of graph partitioning. To this end, a brief background is needed. In spectral graph partitioning [12, 52, 92], the important equality (3) satisfied by any Laplacian L is exploited. If x is a vector of entries \(+1\) or \(-1\), encoding membership of node i to one of two subgraphs, then the value of \(x^T L x\) is equal to 4 times the number of edge-cuts between the two graphs with this 2-way partitioning. We could try to find an optimal 2-way partitioning by minimizing the number of edge cuts, i.e., by minimizing \(x^T Lx\) subject to the condition that the two subgraphs are of equal size, i.e., subject to \({\mathbf {1}}^T x = 0\). Since this optimization problem is hard to solve, it is common to ‘relax’ it by replacing the conditions \(x \in \{-1,1\}^n, x^T {\mathbf {1}}=0\) with \(x \in {\mathbb {R}}^n, \Vert x\Vert = 1, x^T {\mathbf {1}}=0\). This leads to the definition of the Fiedler vector, which is the second smallest eigenvector of the Laplacian. Recall that the smallest eigenvalue of the graph Laplacian is zero and that when the graph is connected, this eigenvalue is simple and the vector \({\mathbf {1}}\) is a corresponding eigenvector.
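The relaxation described above can be sketched numerically. The example below is illustrative only: it assumes a toy graph made of two triangles joined by one edge, and it computes the Fiedler vector by power iteration on the shifted matrix \(cI - L\) with the constant vector projected out, a simple stand-in for a proper eigensolver. It then checks the identity between \(x^TLx\) and the number of cut edges for the resulting \(\pm 1\) labels.

```python
def laplacian(n, edges):
    """Dense combinatorial Laplacian of an unweighted graph."""
    L = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        L[i][i] += 1.0
        L[j][j] += 1.0
        L[i][j] -= 1.0
        L[j][i] -= 1.0
    return L

def fiedler_vector(L, iters=500):
    """Power iteration on c*I - L restricted to vectors orthogonal to 1."""
    n = len(L)
    c = 2.0 * max(L[i][i] for i in range(n))   # upper bound on the largest eigenvalue
    x = [float(i) for i in range(n)]           # fixed start vector (assumption)
    for _ in range(iters):
        mean = sum(x) / n
        x = [v - mean for v in x]              # enforce 1^T x = 0
        y = [c * x[i] - sum(L[i][j] * x[j] for j in range(n)) for i in range(n)]
        norm = sum(v * v for v in y) ** 0.5
        x = [v / norm for v in y]
    return x

edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
L = laplacian(6, edges)
f = fiedler_vector(L)

labels = [1 if v > 0 else -1 for v in f]       # 2-way partition from the signs
quad = sum(L[i][j] * labels[i] * labels[j] for i in range(6) for j in range(6))
cut = sum(1 for i, j in edges if labels[i] != labels[j])
```

The signs separate the two triangles, only the bridging edge is cut, and `quad` equals `4 * cut`.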

It is interesting to note the similarity between spectral graph partitioning and Kron reduction. In both cases, the polarity of an eigenvector is used to partition the graph in two subgraphs. In the case of Kron reduction, it is the vector associated with the largest eigenvalue that defines the partitions; and one of these partitions is selected as the ‘coarse’, or the ‘downsampled’ set, according to the terminology in [109]. For graph partitioning, what is done instead is to use the eigenvector at the other end, the one next to the smallest, since the smallest is a constant vector. If we reformulate the problem back in terms of assignment labels of \(\pm 1\), then this would lead to the interpretation that in one case, we try to minimize edge cuts (partitioning) and in the other, we try to maximize them (Kron reduction).

An illustration is shown in Fig. 7 with a small finite element mesh. As can be seen, using the second smallest eigenvector tends to color the graph in such a way that nearest neighbors of a node are mostly of the same color, while using the largest eigenvector tends to color the graph in such a way that nearest neighbors of a node are mostly of a different color. Another way to look at this is that using the second smallest eigenvector gives domains that tend to be ‘fat’ whereas the largest eigenvector gives domains that tend to be ‘thin’, like unions of lines separating each other. Another fact shown by this simple example is that neither of the two sets obtained is close to being an independent set.

The following property is straightforward to prove.

Proposition 2.2

Assume that the graph has no isolated node and that the components \(\xi _1, \xi _2, \cdots , \xi _n\) of the largest eigenvector \(u_1\) are nonzero. Let \(V_+\) and \(V_-\) be the two subgraphs obtained from the polarities of the largest eigenvector. Then each node of \(V_+\) (resp. \(V_-\)) must have at least one adjacent node from \(V_-\) (resp. \(V_+\)).

Proof

The i-th row of the relation \(L u_1 = \lambda _1 u_1\) yields (recall definition (2)):

\[ (\lambda _1 - d_i)\, \xi _i = - \sum _{j \ne i} a_{ij}\, \xi _j . \]

Note that \((\lambda _1 - d_i) > 0\) (due to assumption). Then, if \(\xi _i \ne 0\) (left side) then at least one of the \(\xi _j\)’s, \(j \ne i\) (right side) must be of the opposite sign. \(\square \)
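Proposition 2.2 can be checked numerically on a small non-bipartite graph. The sketch below assumes unit weights, the same two-triangle toy graph used for illustration elsewhere, and power iteration with a fixed start vector. None of these choices come from the paper.

```python
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
n = 6

# Combinatorial Laplacian and neighbor lists.
L = [[0.0] * n for _ in range(n)]
neighbors = {i: [] for i in range(n)}
for i, j in edges:
    L[i][i] += 1.0
    L[j][j] += 1.0
    L[i][j] -= 1.0
    L[j][i] -= 1.0
    neighbors[i].append(j)
    neighbors[j].append(i)

# Power iteration for the eigenvector u_1 of the largest eigenvalue.
x = [1.0] + [0.0] * (n - 1)
for _ in range(500):
    y = [sum(L[i][j] * x[j] for j in range(n)) for i in range(n)]
    norm = sum(v * v for v in y) ** 0.5
    x = [v / norm for v in y]

# Rayleigh quotient (x has unit norm), i.e. the largest eigenvalue.
lam = sum(x[i] * sum(L[i][j] * x[j] for j in range(n)) for i in range(n))

V_plus = [i for i in range(n) if x[i] > 0]
V_minus = [i for i in range(n) if x[i] < 0]

# Every node should have at least one neighbor of the opposite sign.
ok = all(any(x[i] * x[j] < 0 for j in neighbors[i]) for i in range(n))
```

Here `lam` exceeds every degree, and `ok` is true even though neither `V_plus` nor `V_minus` is an independent set.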

3 Graph coarsening in machine learning

In this section, we discuss existing methods related to graph coarsening in machine learning and discuss how they are employed in typical applications. We begin by defining the types of problems encountered in machine learning and the related terminology. The terms ‘graphs’ and ‘networks’ are often used interchangeably in the literature – although the term networks is often employed specifically for certain types of applications, whereas graphs are more general. For example, ‘social networks’ are common for applications that model friendship or co-authorship, while one speaks of graphs when modeling chemical compounds. What muddles the terminology is the term ‘graph neural networks,’ which are neural networks (a type of machine learning model) for graphs. Furthermore, there are ‘protein-protein interaction networks,’ which are graphs of proteins; that is, each graph node is a protein, which by itself is a molecular graph.

Some commonly encountered graph tasks include: node classification (determine the label of a node in a given graph), link prediction (predict whether or not an edge exists between a pair of nodes), graph classification (determine the label of the graph itself), and graph clustering (group nodes that are most alike together). The task of node classification is also often called semi-supervised learning on graphs, which mainly refers to the setting where only a small number of data points are equipped with ground-truth labels for learning the classification model, but the graph structure that connects the data points offers useful priors that may help the model improve prediction. One of the key concepts in a majority of the methods for solving these tasks is embedding, which is a vector in \({\mathbb {R}}^d\) used to represent a node \(v\in V\) or indeed the entire graph \(G=(V,E)\).

3.1 Graph clustering and GraClus

As was seen earlier, graph coarsening is a basic ingredient of multilevel graph partitioning, where each level is a coarse version of the graph at the previous level. The same graph partitioning tools can be used in data-related applications (e.g., graph clustering), but the requirement of having partitions of equal size is no longer relevant. This observation led to the development of approaches specifically for data applications; see, e.g., [43]. The method developed in [43] uses a simple greedy graph coarsening approach whereby nodes are visited in a random order. When visiting a node, the algorithm merges it with the unvisited nearest neighbor that maximizes a certain measure based on edge and node weights. The visited node and the selected neighbor are then marked as visited. The algorithm developed in [43], which is known as GraClus, uses different tools from those of graph partitioning. Because it is used for data applications, the refinement phase exploits a kernel K-means technique instead of the usual Kernighan–Lin procedure [68].
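The greedy visiting scheme can be sketched as follows. Two simplifications are assumed: the measure being maximized is the plain edge weight (which makes this heavy-edge matching rather than the weighted measure of [43]), and the visiting order is passed in explicitly so that the run is reproducible. The function name `greedy_matching` is hypothetical.

```python
def greedy_matching(adj, order):
    """Visit nodes in `order`; merge each unvisited node with its
    heaviest unvisited neighbor, or keep it alone if none is free."""
    visited = set()
    groups = []
    for v in order:
        if v in visited:
            continue
        visited.add(v)
        candidates = [(w, u) for u, w in adj[v].items() if u not in visited]
        if candidates:
            _, u = max(candidates)     # heaviest edge; ties go to the larger index
            visited.add(u)
            groups.append((v, u))
        else:
            groups.append((v,))        # singleton coarse node
    return groups

# Weighted path 0 -1.0- 1 -3.0- 2 -1.0- 3 -2.0- 4.
weighted_edges = [(0, 1, 1.0), (1, 2, 3.0), (2, 3, 1.0), (3, 4, 2.0)]
adj = [dict() for _ in range(5)]
for i, j, w in weighted_edges:
    adj[i][j] = adj[j][i] = w

groups = greedy_matching(adj, order=[2, 0, 4, 1, 3])
```

Each tuple in `groups` becomes one node of the coarse graph. With this order, node 2 picks its heaviest neighbor 1, node 0 is left alone, and node 4 merges with node 3.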

3.2 Multilevel graph coarsening for node embedding

In one form of node embedding, one seeks a mapping \(\Phi \) from the node set V of a graph \(G=(V,E)\) to the space \({\mathbb {R}}^{n \times d}\) where \(n = | V|\) and

\[ \Phi : v \in V \ \longrightarrow \ \Phi (v) \ \in \ {\mathbb {R}}^d . \tag{21} \]

In other words, each node is mapped to a vector in d-dimensional space. Here, the dimension d is usually much smaller than n. Many embedding methods have been developed and used effectively to solve a variety of problems; see, e.g., [1, 14, 33, 35, 57, 100, 115, 123] and [56] for a survey. The idea of applying coarsening to obtain embeddings for large graphs has been gaining ground in recent years; see [35, 42, 50, 74, 94].

The authors of [35] present a method dubbed hierarchical representation learning for networks (HARP), which exploits coarsening to facilitate and improve graph embedding. The method starts by performing a sequence of \(\ell \) graph coarsening steps to produce graphs \(G_1, G_2, \cdots , G_{\ell }\) from the initial graph \(G_0\). Then, an embedding is performed on the final level to produce the mapping \(\Phi _{\ell }\). This embedding is then propagated back to the original level by proceeding as follows. Starting from level \(i=\ell \), the mapping \(\Phi _i\) is naturally prolongated (‘extrapolated’) from level i to level \(i-1\) to yield a mapping \(\Phi _{i-1}'\). An extra step is taken to refine this embedding and obtain \(\Phi _{i-1}\). This specific step is rather reminiscent of the ‘post-smoothing’ steps invoked in various MG schemes for solving linear systems. Post-smoothing consists of a few steps of smoothing operations, typically a standard relaxation method (e.g., Gauss-Seidel), applied after an approximate solution is prolonged from a coarse level. The MILE method described in [74] is rather similar to the HARP approach described above, the main difference being that the refinement proposed in this method exploits neural networks.
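The prolongation and refinement steps can be sketched in a few lines. This is a schematic illustration, not the HARP implementation: prolongation copies the vector of each coarse node to the fine nodes it represents, and refinement is replaced by one neighbor-averaging sweep, chosen here only because it mirrors the post-smoothing analogy. The names `prolong`, `refine`, and `parent` are hypothetical.

```python
def prolong(emb_coarse, parent):
    """Copy each coarse node's vector to the fine nodes it represents."""
    return [list(emb_coarse[parent[v]]) for v in range(len(parent))]

def refine(emb, adj, alpha=0.5):
    """One Jacobi-style smoothing sweep: blend each vector with the
    mean of its neighbors' vectors (stand-in for the refinement step)."""
    out = []
    for v, vec in enumerate(emb):
        nbrs = adj[v]
        mean = [sum(emb[u][t] for u in nbrs) / len(nbrs) for t in range(len(vec))]
        out.append([(1 - alpha) * vec[t] + alpha * mean[t] for t in range(len(vec))])
    return out

# Fine graph: path 0 - 1 - 2 - 3; coarse nodes {0, 1} and {2, 3}.
adj = [[1], [0, 2], [1, 3], [2]]
parent = [0, 0, 1, 1]                  # fine node -> coarse node
emb_coarse = [[0.0], [1.0]]            # one-dimensional embedding at the coarse level

emb_prolonged = prolong(emb_coarse, parent)
emb_fine = refine(emb_prolonged, adj)
```

After prolongation the two halves of the path share the vectors 0 and 1. The smoothing sweep then moves the two interior nodes toward each other, giving a gradual profile along the path.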

HARP and MILE are general frameworks that use coarsening to improve graph embedding. In the remainder of this section, we give an illustrative example to demonstrate the effectiveness of HARP. We examine the performance improvement of three widely used graph embedding algorithms: DeepWalk [91], LINE [115], and Node2vec [57], each combined with HARP. Furthermore, because HARP is a general framework and we have the freedom to choose the coarsening method it uses, we examine the impact of different coarsening methods on the performance improvement. Three coarsening methods are tested: HEM (Section 2.2), algebraic distance (Section 2.4), and the LESC method to be introduced in Section 5 (it is similar to HEM but uses spectral information to define the visiting order of nodes).

We evaluate the HARP framework on a node classification task with the Citeseer graph [107]. Given a graph G where some nodes are labeled, the task of node classification amounts to predicting the labels of the remaining nodes. Citeseer is a citation graph of computer science publications, consisting of 3.3K nodes and 4.5K edges. The label of each node indicates the subject area of the paper. We first generate the node embedding for each node using the HARP method. Then, a fraction of the nodes is randomly sampled to form the training set and the remaining nodes are used for testing. We train a logistic regression model [20] by using the training data and evaluate the classification performance on the test data. We use the macro-average F1 score [93] as the performance metric, which is the mean of the F1 score for each label category. Figure 8 shows the score under different training set sizes.
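For concreteness, the macro-average F1 score can be computed as below. The labels are made up for illustration and are unrelated to the Citeseer experiment. The per-class score uses the identity \(F_1 = 2\,TP / (2\,TP + FP + FN)\).

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
score = macro_f1(y_true, y_pred)   # per-class F1: 0.5, 0.8, 2/3
```

Because every class contributes equally to the mean, a small class that is poorly predicted lowers the score as much as a large one would.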

As can be seen, the HARP framework consistently improves all these embedding methods, especially LINE and DeepWalk. Each coarsening approach used inside the HARP framework improves the performance to a different degree, with the LESC approach generally outperforming the others.

3.3 Graph neural networks and graph pooling

GNNs have recently attracted a great deal of interest in various disciplines, including biology [44], chemistry [122], and social networks [125], where data is modeled by graphs. A major class of GNNs are those of a convolution style that generalize lattice convolutions in convolutional neural networks (CNNs). A standard convolution applies a filter on a signal; in CNNs, the signal is a 2-dimensional image and the filter has a very small support—say, a \(3\times 3\) window. Convolution-style GNNs generalize the regularity of such a filter to irregularly connected node pairs [29, 60, 83]. Specifically, the regular window is replaced by the 1-hop neighborhood of a node.

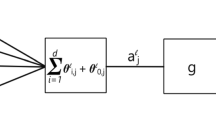

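One such representative GNN is the graph convolutional network (GCN) [69]. A 2-layer GCN maps a feature matrix X to the label matrix Y (see notation introduced in Section 1.2) by including the graph adjacency matrix A in the mapping:

\[ {\widetilde{Y}} = \mathrm{softmax} \left( {\widehat{A}} \ \mathrm{ReLU} ( {\widehat{A}} X W^0 ) \ W^1 \right) , \tag{22} \]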

where \({\widehat{A}}\) is a normalization of A through \({\widehat{A}}={\widetilde{D}}^{-1/2}{\widetilde{A}}{\widetilde{D}}^{-1/2}\), \({\widetilde{A}}=A+I\), \({\widetilde{D}}=\text{ diag }({\widetilde{d}}_i)\), and \({\widetilde{d}}_i=\sum _j{\widetilde{a}}_{ij}\). Therefore, matrix products such as \({\widehat{A}}X\) denote the convolution by using a 1-hop neighborhood filter.

The following concepts are for neural networks. The functions \({{\,\mathrm{ReLU}\,}}\) and \({{\,\mathrm{softmax}\,}}\) are nonlinear activation functions: \({{\,\mathrm{ReLU}\,}}(x)=\max \{x,0\}\) is an elementwise function, while \({{\,\mathrm{softmax}\,}}(x)=[e^{x_1}/c, \ldots , e^{x_d}/c]\) with \(c=\sum _je^{x_j}\) is a vector function; it acts on each row if the input is a matrix. The matrices \(W^0\) and \(W^1\) are called weight matrices. Their contents are not manually specified but learned through minimizing the discrepancy between \({\widetilde{Y}}\) and the ground truth label matrix Y.
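The forward pass of a 2-layer GCN can be written out in plain Python. The sketch below assumes the standard form \(\mathrm{softmax}({\widehat{A}}\,\mathrm{ReLU}({\widehat{A}}XW^0)\,W^1)\), with softmax taken as \(e^{x_i}/\sum _j e^{x_j}\) on each row. The graph, features, and weights are arbitrary illustrative values rather than trained parameters.

```python
import math

def matmul(A, B):
    """Product of two dense matrices stored as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def normalized_adjacency(A):
    """A_hat = D~^{-1/2} (A + I) D~^{-1/2}."""
    n = len(A)
    At = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    d = [sum(row) for row in At]
    return [[At[i][j] / math.sqrt(d[i] * d[j]) for j in range(n)] for i in range(n)]

def relu(M):
    return [[max(v, 0.0) for v in row] for row in M]

def softmax_rows(M):
    out = []
    for row in M:
        m = max(row)                         # shift for numerical stability
        e = [math.exp(v - m) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out

def gcn_forward(A, X, W0, W1):
    A_hat = normalized_adjacency(A)
    H = relu(matmul(A_hat, matmul(X, W0)))   # first layer: 1-hop filter + ReLU
    return softmax_rows(matmul(A_hat, matmul(H, W1)))

A = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]   # path on 3 nodes
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]                  # 2 features per node
W0 = [[1.0, -1.0], [0.5, 0.5]]                            # illustrative weights
W1 = [[1.0, 0.0], [-1.0, 1.0]]                            # c = 2 label categories
Y_tilde = gcn_forward(A, X, W0, W1)
```

Each row of `Y_tilde` is a probability vector over the c label categories for the corresponding node.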

Besides GCN, the literature has seen a large number of generalizations of lattice convolution to convolutions in the graph context, including for example, spectral [30, 41, 61, 69] and spatial [59, 84, 88, 121, 124, 127] schemes.

GNNs such as (22) essentially produce a mapping \(\Phi : v \in V \longrightarrow \Phi (v) \ \in \ {\mathbb {R}}^c\) for every node v in the graph, if we read only one row of \({\widetilde{Y}}\) in (22). This mapping is almost identical to the form (21) discussed in the context of node embedding; the only nominal difference is that the output is in a c-dimensional space while the node embedding is in d-dimensional space. This difference is caused by the need for the output \({\widetilde{Y}}\) to be matched with the ground truth Y that has c columns (c label categories); while for node embedding, the embedding dimension d may be arbitrary. However, if our purpose is not to match \({\widetilde{Y}}\) with Y, we can adjust the number of columns of \(W^1\) so that the right-hand side of (22) can be repurposed for producing a node embedding. More significantly, we may take the elementwise minimum, the average, or the weighted average of the node embedding vectors to form an embedding vector for the entire graph \(G=(V,E)\) [48]. In other words, a GNN, with a slight modification, can produce a mapping

\[ \Psi : G \ \longrightarrow \ \Psi (G) \ \in \ {\mathbb {R}}^d . \]

Now that we see how GNNs can be utilized to produce a graph embedding through the mapping \(\Psi \), a straightforward application of coarsening is to use \(\Psi (G_c)\) in place of \(\Psi (G)\) for the classification of G. This simple idea can be made more sophisticated in two ways. First, suppose we perform a multilevel coarsening resulting in a sequence of increasingly coarser graphs \(G=G_0,G_1,G_2,\ldots ,G_\ell \). We may concatenate the vectors \(\Psi (G_0),\Psi (G_1),\ldots ,\Psi (G_\ell )\) and treat the resulting vector as the embedding of G. We use the concatenation result (say \(\psi _G\)) to classify G, through building a linear classifier that takes \(\psi _G\) as input and outputs the class label.

Second, we introduce the concept of pooling. Pooling in neural networks amounts to taking a min/max or (weighted) average of a group of elements and reducing it to a single element. In the context of graphs, pooling is particularly relevant to coarsening, since if we recall the Galerkin projection \(f^{(\ell +1)}=P_{\ell }^Tf^{(\ell )}\) in AMG (see (8)), the interpolation matrix \(P_{\ell }\) plays the role of pooling: each column of \(P_{\ell }\) defines the weights in the averaging of nodes in the last graph into a node in the coarse graph. Hence, in the context of GNNs, we call \(P_{\ell }\) a pooling matrix. This matrix can be obtained directly through the definition of a coarsening method [41, 111], or it can be unspecified but learned through training [53, 73, 126]. By using successive poolings that form a hierarchy, recent work [53, 70, 73, 126] has shown that hierarchical pooling improves graph classification performance.
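A pooling matrix defined directly from a coarsening can be sketched as follows. The example assumes mean pooling, with weight \(1/|S_k|\) for every fine node in group \(S_k\); learned pooling matrices are not covered. The names `pooling_matrix`, `pool`, and `assign` are hypothetical.

```python
def pooling_matrix(assign, n_coarse):
    """P[i][k] = 1/|S_k| if fine node i belongs to coarse node k, else 0."""
    sizes = [assign.count(k) for k in range(n_coarse)]
    return [[1.0 / sizes[k] if assign[i] == k else 0.0 for k in range(n_coarse)]
            for i in range(len(assign))]

def pool(P, X):
    """Coarse features P^T X: column k of P averages the rows of X in S_k."""
    n, nc = len(P), len(P[0])
    return [[sum(P[i][k] * X[i][c] for i in range(n)) for c in range(len(X[0]))]
            for k in range(nc)]

assign = [0, 0, 1, 1, 1]                 # fine node -> coarse node
X = [[1.0, 0.0], [3.0, 2.0], [0.0, 3.0], [3.0, 3.0], [6.0, 0.0]]
P = pooling_matrix(assign, n_coarse=2)
X_coarse = pool(P, X)                    # approximately [[2, 1], [3, 2]]
```

Applying `pool` repeatedly with one pooling matrix per level gives the kind of hierarchy referred to above.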

4 Spectral coarsening

While the terms ‘spectral graph partitioning’ and ‘spectral clustering’ are quite well-known, the term ‘spectral graph coarsening’ is less explored in the literature. There are two aspects, and therefore possible directions, to spectral coarsening. First, it may be desirable in various tasks to preserve the spectral properties of the original graph, as is the case in the local variation method proposed by [76]. The second aspect is that one may wish to apply spectral information for coarsening. The method proposed by [109] falls in this category. It uses the eigenvector of the graph Laplacian to select a set of nodes for Kron reduction [46] discussed earlier.

In this section, we present an approach for the first aspect; whereas in Section 5, we develop an approach related to the second aspect. Regarding the first aspect, eigenvectors of the graph Laplacian encapsulate much information on the structure of the graph. For example, the first few eigenvectors are often used for partitioning the graph into more or less equal partitions. Therefore, the first question we will ask is whether or not it is possible to coarsen a graph in such a way that eigenvectors are ‘preserved.’ Of course, the coarse graph and the original one have different sizes so we will have to clarify what is meant by this.

4.1 Coarsening and lifting

Recall from AMG that coarsening is represented by the matrix \(P^T\in {\mathbb {R}}^{n_c\times n}\). It helps to view a coarse node as a linear combination of a set of nodes in the original graph. Let the k-th coarse node be denoted by \(v_k^{(c)}\) and the set be \(S_k\). The weights are \(p_{ik}\) for each \(v_i\in S_k\):

\[ v_k^{(c)} = \sum _{v_i \in S_k} p_{ik} \, v_i . \tag{24} \]

The coarse adjacency matrix is then defined as:

\[ A_c = P^T A P . \tag{25} \]

A similar framework of writing a coarsened matrix in the form of (25) is adopted in [76]. Note that in general, \(A_c\) is not binary. Here, we assign the diagonal entries of \(A_c\) to 0 and all non-zero entries to 1.

The original graph G and its coarse graph \(G_c\) have a different number of nodes. If we wish to compare the properties of these two graphs, it is necessary to ‘lift’ the graph Laplacian of \(G_c\) into a matrix that has the same size as that of G. Let L and \(L_c\) denote the Laplacian of the original graph and the coarse graph, respectively. One way to construct a matrix \(L_c\) that is guaranteed to be a graph Laplacian is as follows [64, 76]:

\[ L_c = Q^T L Q , \tag{26} \]

where \(Q\in {\mathbb {R}}^{n\times n_c}\) is a sparse matrix with

\[ Q_{ik} = \begin{cases} 1 & \text{if } v_i \in S_k, \\ 0 & \text{otherwise.} \end{cases} \tag{27} \]

In the simplest case, the entries of P in (24) can be defined as \(p_{ik} = 1/|S_k|\) for all \(v_i\in S_k\). Then, the resulting P is the pseudoinverse of \(Q^T\), with \(Q^TP = I_{n_c}\). Therefore, the lifted counterpart of \(L_c\), denoted by \(L_l\), is defined as

\[ L_l = P L_c P^T = P Q^T L Q P^T , \tag{28} \]

because \(PQ^T\) is a projector.
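The coarsening and lifting operations can be illustrated on a 4-node path. The sketch assumes that the coarse Laplacian is \(Q^TLQ\) with Q the 0/1 membership matrix, that P has entries \(1/|S_k|\), and that the lifted Laplacian takes the form \(P L_c P^T\). This last form is an assumption, chosen because it reduces to \(PP^TLPP^T\) when P is orthonormal. The helper names are illustrative.

```python
def matmul(A, B):
    """Product of two dense matrices stored as nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(r) for r in zip(*A)]

# Laplacian of the path 0 - 1 - 2 - 3 with unit weights.
L = [[1.0, -1.0, 0.0, 0.0],
     [-1.0, 2.0, -1.0, 0.0],
     [0.0, -1.0, 2.0, -1.0],
     [0.0, 0.0, -1.0, 1.0]]

assign = [0, 0, 1, 1]                    # S_0 = {0, 1}, S_1 = {2, 3}
nc = 2
sizes = [assign.count(k) for k in range(nc)]

Q = [[1.0 if assign[i] == k else 0.0 for k in range(nc)] for i in range(4)]
P = [[Q[i][k] / sizes[k] for k in range(nc)] for i in range(4)]

L_c = matmul(transpose(Q), matmul(L, Q))       # coarse Laplacian (2 x 2)
QtP = matmul(transpose(Q), P)                  # should be the identity
Pi = matmul(P, transpose(Q))                   # P Q^T
Pi2 = matmul(Pi, Pi)                           # equals Pi if Pi is a projector
L_l = matmul(P, matmul(L_c, transpose(P)))     # lifted Laplacian (4 x 4)
```

Here `L_c` is the Laplacian of a two-node graph whose single edge carries the weight of the cut between the two groups, and `Pi2` coincides with `Pi`, confirming that \(PQ^T\) is a projector.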

4.2 The projection method viewpoint

If we extend the matrix P as an orthonormal matrix, then \(PP^T\) is a projector and the lifted Laplacian defined in (28) becomes \(L_l=PP^TLPP^T\). It is useful to view spectral coarsening from the projection method [104] angle.

Consider an orthogonal projector \(\pi \) and a general (symmetric) matrix A. In an orthogonal projection method on a subspace \({\mathcal {V}}\), we seek an approximate eigenpair \({{\tilde{\lambda }}}, {{\tilde{u}}}\), where \({{\tilde{u}}} \ \in \ {\mathcal {V}}\) such that

\[ \pi \, ( A - {\tilde{\lambda }} I ) \, {\tilde{u}} = 0 . \]

If \(V=[v_1, v_2, \cdots , v_k]\) is an orthonormal basis of \({\mathcal {V}}\) and the approximate eigenvector is written as \(\tilde{u} = V y\), then the previous equation immediately leads to the problem

\[ V^T A V y = {\tilde{\lambda }} \, y . \tag{29} \]

The eigenvalue \({{\tilde{\lambda }}} \) is known as a Ritz value and \({{\tilde{u}}} \) is the associated Ritz vector.

Recall the orthogonal projector \(\pi \) onto the columns of P; that is, \(\pi =PP^T\). If we look at the specific case under consideration, we notice that this is precisely what is being done and that the basis vectors V are just the columns of P.

When analyzing errors for projection methods, the orthogonal projector \(\pi \) represented by the matrix \(P P^T\) plays a particularly important role. Specifically, a number of results are known that can be expressed based on the distance \(\Vert (I - \pi ) u\Vert \) where u is an eigenvector of A; see, e.g., [104]. The norm \(\Vert (I - \pi ) u\Vert \) represents the distance of u to the range of \(\pi \) in \({\mathbb {R}}^n\).

4.3 Eigenvector preserving coarsening

Based on the interpretation of projection methods, it is desirable to construct a projector that preserves eigenvectors. We say that a given eigenvector u is exactly preserved or just ‘preserved’ by the coarsening if \((I-\pi ) u = 0\). If this is the case then when we solve the projected problem (29), we will find that \(y = P^T u\) is an eigenvector of \(V^T A V\) associated with the eigenvalue \(\lambda \):

\[ P^T A P \, y = P^T A P P^T u = P^T A \, \pi u = P^T A u = \lambda P^T u = \lambda y . \]

The Ritz vector is \({{\tilde{u}}} = P y = P P^T u = \pi u = u \) which is clearly an eigenvector.

What might be more interesting is the more practical situation in which \((I-\pi ) u \) is not zero but just small. In this case, there are established bounds [104] for the angle between the exact and approximate eigenvectors based on the quantity \(\epsilon = \Vert (I-\pi ) u\Vert _2\).

In what follows, we instantiate the general matrix A by the graph Laplacian matrix L and consider the preservation of its eigenvectors. In many machine learning methods (e.g., the Laplacian eigenmap [15]), the bottom eigenvectors of L carry the crucial information of a dataset. Thus, they are the ones that we want to preserve.

4.4 Preserving one eigenvector

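If we want to coarsen the graph into k nodes, we partition an eigenvector u into k parts. Up to permutations of the elements of u, let us write, in matlab notation

\[ u = [ u_1 \ ; \ u_2 \ ; \ \cdots \ ; \ u_k ] . \]

Then, we let

\[ P = \begin{pmatrix} u_1 / \Vert u_1\Vert & & & \\ & u_2 / \Vert u_2\Vert & & \\ & & \ddots & \\ & & & u_k / \Vert u_k\Vert \end{pmatrix} . \]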

Clearly, P is orthonormal and satisfies \(u=PP^Tu\). In other words, the matrix P so defined preserves the eigenvector u of the graph Laplacian in coarsening.
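The construction can be verified on a small case. The sketch below assumes a 4-node path, whose Laplacian has the eigenvector \(u = (1,-1,-1,1)/2\) with eigenvalue 2, and the partition \(\{0,1\}, \{2,3\}\). It checks that \(PP^Tu = u\) and that \(y = P^Tu\) is an eigenvector of \(P^TLP\) for the same eigenvalue. The helper name `build_P` is hypothetical.

```python
def build_P(u, parts):
    """Column k of P holds u restricted to parts[k], scaled to unit norm."""
    P = [[0.0] * len(parts) for _ in range(len(u))]
    for k, idx in enumerate(parts):
        norm = sum(u[i] ** 2 for i in idx) ** 0.5   # assumed nonzero
        for i in idx:
            P[i][k] = u[i] / norm
    return P

# Laplacian of the path 0 - 1 - 2 - 3 and one of its eigenvectors (eigenvalue 2).
L = [[1.0, -1.0, 0.0, 0.0],
     [-1.0, 2.0, -1.0, 0.0],
     [0.0, -1.0, 2.0, -1.0],
     [0.0, 0.0, -1.0, 1.0]]
u = [0.5, -0.5, -0.5, 0.5]
lam = 2.0

P = build_P(u, [[0, 1], [2, 3]])
n, k = len(u), len(P[0])

y = [sum(P[i][c] * u[i] for i in range(n)) for c in range(k)]        # y = P^T u
u_back = [sum(P[i][c] * y[c] for c in range(k)) for i in range(n)]   # P P^T u

# Projected matrix P^T L P and the residual of the coarse eigenproblem.
LP = [[sum(L[i][j] * P[j][c] for j in range(n)) for c in range(k)] for i in range(n)]
A_c = [[sum(P[i][r] * LP[i][c] for i in range(n)) for c in range(k)] for r in range(k)]
residual = [sum(A_c[r][c] * y[c] for c in range(k)) - lam * y[r] for r in range(k)]
```

The entries of `y` are the norms \(\Vert u_i\Vert \) of the blocks, so their squares are the sums of the squared entries of u in each group.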

The square of an element of u is called the leverage score of the corresponding node (see Section 5.1). Then, each \(\Vert u_i\Vert ^2\) is the leverage score of the i-th coarse node. In other words, if a collection of nodes of the original graph is grouped into a coarse node, then the sum of their leverage scores is the leverage score of the coarse node.

4.5 Preserving m eigenvectors

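The one-eigenvector case can be easily extended to m eigenvectors. Let U be the matrix of these eigenvectors; that is, U has m columns, each of which is an eigenvector. We partition U similarly to the preceding subsection, as

\[ U = [ U_1 \ ; \ U_2 \ ; \ \cdots \ ; \ U_k ] . \]

Then, we define the matrix P in the following way:

\[ P = \begin{pmatrix} P_1 & & & \\ & P_2 & & \\ & & \ddots & \\ & & & P_k \end{pmatrix} , \]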

where for each partition i, \(U_i=P_iR_i\). The equality \(U_i=P_iR_i\) can be any factorization that results in orthonormal matrices \(P_i\) (so that P is orthonormal). A straightforward choice is the QR factorization. Alternatively, one may use the polar factorization, where \(P_i\) and \(R_i\) are the unitary polar factor and the symmetric positive definite polar factor, respectively. This factorization is conceptually closer to the one-eigenvector case.

In contrast with the one-eigenvector case, now a collection of nodes of the original graph is grouped into m coarse nodes, which are all pairwise connected in the coarse graph. The total number of nodes in the coarse graph is mk. Because the Frobenius norm of \(U_i\) is equal to that of \(R_i\), we see that the sum of the leverage scores of the original nodes in a partition is the same as that of the m resulting coarse nodes.

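It is not hard to see that each eigenvector in U is preserved. Indeed, if u is the j-th column of U, then \(u = U e_j\) and, using MATLAB notation,

\[ u = U e_j = [\, P_1 R_1 e_j \ ; \ P_2 R_2 e_j \ ; \ \cdots \ ; \ P_k R_k e_j \,] = P \, [\, \rho _1 \ ; \ \rho _2 \ ; \ \cdots \ ; \ \rho _k \,] , \]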

where we have set \(\rho _i = R_i e_j\) for \(i=1,\cdots , k\). Therefore, u is in the range of P and as such it will be left invariant by the projector \(\pi \): If \(\rho = [ \rho _1 \ ; \ \rho _2 \ ; \ \cdots \ ; \ \rho _k ]\) then \(\pi u = P P^T (P \rho ) = P \rho = u\).

Clearly, it is not necessary to use a regular fixed and equal-sized splitting for the rows of U (and u); i.e., we can select any grouping of the rows that can be convenient for, say, preserving locality, or reflecting some clustering.
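As an illustration of the m-eigenvector case with unequal groups, the sketch below builds P from thin QR factorizations of the row blocks. It is a sketch under stated assumptions: the path graph, the choice m = 2, the grouping, and the helper name `block_prolongator` are all illustrative, and QR is only one of the admissible factorizations mentioned above.

```python
import numpy as np

def block_prolongator(U, groups):
    """Return P (n x mk) with diagonal blocks P_i from thin QR of U_i = P_i R_i."""
    n, m = U.shape
    P = np.zeros((n, m * len(groups)))
    R_blocks = []
    for i, idx in enumerate(groups):
        if len(idx) < m:
            raise ValueError("each group needs at least m rows for a thin QR")
        Q, R = np.linalg.qr(U[idx, :])        # Q: len(idx) x m, orthonormal columns
        P[idx, i * m:(i + 1) * m] = Q
        R_blocks.append(R)
    return P, R_blocks

# Illustrative graph (an assumption): unweighted path on 8 nodes.
n = 8
A = np.diag(np.ones(n - 1), 1)
A = A + A.T
L = np.diag(A.sum(axis=1)) - A

lam, V = np.linalg.eigh(L)
U = V[:, :2]                                  # m = 2 bottom eigenvectors

# Unequal groups: the splitting of the rows need not be regular.
groups = [np.array([0, 1, 2]), np.array([3, 4, 5, 6, 7])]
P, R_blocks = block_prolongator(U, groups)

orthonormal = np.allclose(P.T @ P, np.eye(P.shape[1]))
preserved = np.allclose(P @ (P.T @ U), U)

# Leverage mass of each group equals that of its m coarse nodes.
mass_match = all(
    np.isclose(np.sum(U[g, :] ** 2), np.sum(R ** 2))
    for g, R in zip(groups, R_blocks)
)
```

The coarse graph here has mk = 4 nodes. Each group must contain at least m rows, otherwise the block \(P_i\) cannot have m orthonormal columns.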

5 Coarsening based on leverage scores

Spectral coarsening may have desirable qualities when considering spectral properties, but these methods face a number of practical difficulties. Among them is the fact that the coarsened graph tends to be dense. For this reason, spectral methods will be invoked mostly as a tool to provide an ordering of the importance of the nodes—which will in turn be used for defining a traversal order in other coarsening approaches (e.g., HEM). This has been a common theme in the literature [35, 64, 76].

5.1 Leverage scores

Let A be a general matrix and let U be an orthonormal matrix, whose range is the same as that of A. The leverage score [47] of the i-th row of A is defined as the squared norm of the i-th row of U:

\[ \eta _i = \Vert U_{i,:} \Vert _2^2 . \tag{30} \]

Clearly, the leverage score is invariant to the choice of the orthonormal basis of the range of A.

Leverage scores defined in the form (30) have been used primarily for (rectangular) matrices A that represent data. In these cases, the columns of U are the dominant singular vectors of A and the matrix \(\pi =UU^T\) is an orthogonal projector that projects a vector in \({\mathbb {R}}^n\) onto the span of U. The vector of leverage scores, \(\eta \), is the diagonal of this projector. This quantity appears also in a different context in quantum physics, where the index i represents a location in space, \(\eta _i\) represents the electron density at position i, and the projector \(\pi \) is the density matrix in the idealized case of zero temperature. See Section 3.4 of [81].
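The two facts above, invariance to the choice of basis and the identification of \(\eta \) with the diagonal of the projector, are easy to verify numerically. The following minimal sketch assumes a random tall matrix of full column rank as the data matrix; all names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 3))      # illustrative data matrix (assumed full column rank)

# Orthonormal basis of range(A) from the thin SVD.
U, s, Vt = np.linalg.svd(A, full_matrices=False)
eta = np.sum(U ** 2, axis=1)         # squared row norms of U

# A different orthonormal basis of the same subspace: U times an orthogonal matrix.
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
eta_rotated = np.sum((U @ Q) ** 2, axis=1)

# The scores are the diagonal of the orthogonal projector onto range(A).
projector = U @ U.T
basis_invariant = np.allclose(eta, eta_rotated)
is_projector_diag = np.allclose(eta, np.diag(projector))
total = eta.sum()                    # equals the dimension of the subspace
```

The scores sum to the rank of A (here 3), since the trace of an orthogonal projector equals the dimension of its range.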

5.2 Leverage-score coarsening (LESC)

For coarsening methods such as Algorithm 1, the traversal order in the coarsening process can have a major impact on the quality of the results. Instead of the heavy edge matching strategy, we now consider using leverage scores to measure the importance of a node. Exploiting what we know from spectral graph theory, we will use the bottom eigenvectors of the graph Laplacian L to form U. However, we find that in many cases, the traversal order is sensitive to the number of eigenvectors, r. To lessen the impact of r on the outcome, we weight individual entries \(U_{ik}\) in (30) by using the eigenvalues \(\lambda _1, \ldots , \lambda _r\) of L. Specifically, we define

\[ \eta _i = \sum _{k=1}^{r} \left( e^{-\tau \lambda _k} U_{ik} \right) ^2 , \tag{31} \]

where \(\tau \) represents a decay factor of the weights. This leads to a modification of HEM that is based on leverage scores (31) which we call leverage score coarsening, or LESC for short.

Algorithm 3 describes the LESC procedure. Its main differences from HEM (Algorithm 1) lie in (i) the traversal order and (ii) the handling of singletons. While HEM proceeds by the heaviest edge, LESC scans nodes in decreasing \(\eta \) values. At the beginning of each for-loop, LESC selects the next unvisited node i with the highest leverage score and selects from its unvisited neighbors a node j, where the edge (i, j) has the heaviest edge weight, to create a coarse node.

The way in which LESC handles the singletons is elaborated in lines 14–25 of Algorithm 3. During the traversal, we append a singleton to a set named Single. Depending on the desired coarse graph size \(n_c\), there are two ways to assign parents to the singletons: lines 20–22 handle a real singleton and lines 23–25 handle a leftover singleton. This extra step outside HEM better preserves the local structure surrounding high-degree nodes, as well as the global structure of the graph.
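The traversal described above can be sketched as follows. This is a simplified illustration and not a transcription of Algorithm 3: it covers only the matching pass (visit nodes by decreasing \(\eta \), pair each with its heaviest unvisited neighbor) and collects singletons without assigning them parents. The function name `lesc_matching`, the dense weight matrix, and the toy scores are assumptions made for the example.

```python
import numpy as np

def lesc_matching(W, eta):
    """Matching pass only: returns (pairs, singles).

    W   : symmetric dense weight matrix with zero diagonal
    eta : one score per node; higher scores are visited first
    """
    n = W.shape[0]
    visited = np.zeros(n, dtype=bool)
    pairs, singles = [], []
    for i in np.argsort(-eta, kind="stable"):
        if visited[i]:
            continue
        visited[i] = True
        # Weights to unvisited neighbors only (visited nodes are masked out).
        candidates = np.where(visited, 0.0, W[i])
        j = int(np.argmax(candidates))
        if candidates[j] > 0:
            visited[j] = True
            pairs.append((int(i), j))      # nodes i and j form one coarse node
        else:
            singles.append(int(i))         # no unvisited neighbor left
    return pairs, singles

# Toy weighted path 0-1-2-3-4 with hypothetical scores.
W = np.zeros((5, 5))
for a, b, w in [(0, 1, 1.0), (1, 2, 2.0), (2, 3, 1.0), (3, 4, 3.0)]:
    W[a, b] = W[b, a] = w
eta = np.array([0.1, 0.5, 0.4, 0.2, 0.3])

pairs, singles = lesc_matching(W, eta)
```

On this toy input, node 1 is visited first and takes its heaviest neighbor 2, node 4 then takes node 3, and node 0 is left as a singleton. How singletons are assigned parents is the part specific to Algorithm 3 and is deliberately left out of the sketch.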

For an illustration, we visualize the coarse graphs produced by the following five coarsening methods: HEM, local variation (LV) [76], algebraic distance, Kron reduction, and LESC. The original graph is selected from the D&D protein data set; see Section 5.5 for a detailed description. The coarse graphs are shown in Figure 9. We observe from the figure that the global structure of the graph is well preserved in each coarse graph. Moreover, the connection chains of the nodes are well preserved. Note also that, as expected, the coarse graph from Kron reduction is denser than the other coarse graphs.

5.3 Interpretations

Leverage scores defined in (30) have been used primarily for matrices that represent data. The form used by us, (31), stabilizes the ordering of the scores when the number r of dominant eigenvectors varies. When \(\tau \) or r is large, there is little difference between the value (31) and the following one that uses all eigenvectors:

\[ \eta _i = \sum _{k=1}^{n} \left( e^{-\tau \lambda _k} U_{ik} \right) ^2 . \tag{32} \]

The form (32) can lead to interesting interpretations and results.

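First, observe that if we denote by \(e_i\) the i-th column of the identity matrix, then

\[ \eta _i = e_i^T \, U \, \mathrm {diag}\left( e^{-2\tau \lambda _1}, \ldots , e^{-2\tau \lambda _n} \right) U^T e_i = e_i^T \exp (-2\tau L) \, e_i . \]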

That is, \(\eta _i\) is nothing but the i-th diagonal entry of the matrix \(H \equiv \exp (- 2\tau L)\). If the adjacency matrix A is doubly stochastic, then \(L = I - A\) and \(H = \exp (- 2\tau L) = \exp (- 2\tau I + 2\tau A) = e^{- 2\tau } \cdot \exp (2\tau A) \). Therefore, since A has nonnegative entries, so does H. Then, H is a stochastic matrix (in fact, doubly stochastic because of symmetry). To see this, we have \(L{\mathbf {1}}=0\) and thus by the Taylor series of the matrix exponential, \(H{\mathbf {1}}={\mathbf {1}}\). Now that H is a stochastic matrix, the leverage score \(\eta _i\) (i-th diagonal entry of H) represents the self-probability of state i.

There exists another interpretation from the transient solutions of Markov chains; see Chapter 8 of [114]. In the normalized case, the negative Laplacian \(-L\) plays the role of the matrix Q in the notation of continuous time Markov chains (see Section 1.4 of [114]). Given an initial probability distribution \(\pi (0) \ \in \ {\mathbb {R}}^{1 \times n}\), the transient solution of the chain at time t is \(\pi (t) = \pi (0) \exp ( t Q) = \pi (0) \exp ( -t L) \). If \(\pi (0) = e_i^T \), then \(\pi (t)\) carries the probabilities for each state at time t. In particular, the i-th entry (which coincides with the leverage score \(\eta _i\) if \(t=2\tau \)) is the probability of remaining in state i.
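Both interpretations can be checked on a small example. The sketch below assumes a cycle graph with edge weights 1/2, so that the adjacency matrix is symmetric and doubly stochastic, and an arbitrary decay factor; the scores are computed with all eigenvectors, weighting the k-th eigenvector by \(e^{-\tau \lambda _k}\).

```python
import numpy as np

# Illustrative graph (an assumption): 6-cycle with weights 1/2, so A is doubly stochastic.
n = 6
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = 0.5
    A[i, (i - 1) % n] = 0.5
L = np.eye(n) - A

tau = 0.7                                      # arbitrary decay factor
lam, U = np.linalg.eigh(L)

# Weighted leverage scores using all n eigenvectors.
eta = np.sum((np.exp(-tau * lam) * U) ** 2, axis=1)

# H = exp(-2 tau L), formed from the eigendecomposition of L.
H = (U * np.exp(-2.0 * tau * lam)) @ U.T

diag_matches = np.allclose(np.diag(H), eta)
rows_sum_to_one = np.allclose(H.sum(axis=1), 1.0)
nonnegative = H.min() > -1e-12

# Markov chain view: start in state 0 and read off the probability of being in state 0
# at time t = 2 tau.
pi0 = np.zeros(n)
pi0[0] = 1.0
pi_t = pi0 @ H
stay_probability = pi_t[0]
```

Here `pi_t` is a probability vector and its entry for the starting state coincides with the leverage score of that node.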

5.4 Alternative definition

The definition of \(\eta _i\) in (31) modifies the standard leverage score by using decaying weights, to reduce sensitivity to the number of eigenvectors used. In principle, any decreasing function of eigenvalues can be used to get distinguishable leverage scores of a Laplacian. We consider the following alternative, which is related to the pseudoinverse of the Laplacian:

\[ \eta _i = \sum _{k=2}^{n} \left( \frac{U_{ik}}{\sqrt{\lambda _k}} \right) ^2 . \tag{34} \]

Several points are worth noting. First, weighted leverage scores emphasize eigenvectors corresponding to small non-zero eigenvalues. Hence, weighted leverage scores reveal the contribution of nodes to the global structure. Second, a smaller weighted leverage score indicates a higher topological importance of a node. Third, calculating the complete set of eigenvectors of L is expensive. Given a parameter r, we can further define r-truncated weighted leverage scores using only r eigenpairs:

For simplicity, we refer to these numbers as leverage scores of L, and use \(\eta = [\eta _1,\cdots ,\eta _n]\) to denote them. A visual example of using \(\eta \) to define the traversal order in Algorithm 3 is given in Figure 10.

The definition (34) has a direct connection with the pseudoinverse of the Laplacian. In particular, the vector \(\eta \) is equal to the diagonal of \(L^\dagger \). To see this, we first notice that L and \(L^\dagger \) have the same set of eigenvectors, and nontrivial eigenvalues are reciprocals of each other. We then write \(L^\dagger \) as \(L^\dagger = U\Sigma ^\dagger U^T\), where \(\Sigma ^\dagger \) is a diagonal matrix with \(0<1/\lambda _n\le \cdots \le 1/\lambda _2\) on the diagonal. Diagonal entries of \(\Sigma ^\dagger \) are non-negative, so we can write

\[ L^\dagger = \left( U \sqrt{\Sigma ^\dagger } \right) \left( U \sqrt{\Sigma ^\dagger } \right) ^T , \tag{36} \]

from which we get

\[ \eta _i = \left\Vert \left( U \sqrt{\Sigma ^\dagger } \right) _{i,:} \right\Vert _2^2 = L^\dagger _{ii} . \]

The pseudoinverse of L has long been used to denote node importance. The article [117] provides a rather detailed description of the link between \(L^\dagger \) and the various definitions of network properties. The effective resistance distance between nodes a and b is given by \(\omega _{ab} = L^\dagger _{aa} + L^\dagger _{bb} - 2 L^\dagger _{ab}\). The trace of \(L^\dagger \) defines a graph metric called effective graph resistance [49], which is related to random walks [34] and the betweenness centrality [87]. In addition, the rows \(s_j\) of the matrix \(U \sqrt{\Sigma ^\dagger }\) in (36) have a particular significance. The squared distance between two rows \(s_a\) and \(s_b\) is equal to \(\omega _{ab}\) and based on this notion of distance, [97] propose to use \((L_{ii}^\dagger )^{-1}\) as a measure of the topological centrality of a node i. The smaller the distance, the higher its topological centrality. This metric has been used to quantify the roles of nodes in independent networks [108]. Therefore, by using \(\eta \), LESC prioritizes nodes with high importance with respect to this metric.
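These relations can be verified on a small graph. The sketch below assumes a connected 4-node weighted graph chosen for illustration, computes \(\eta \) from the eigenpairs of L with nonzero eigenvalue, and compares it with the diagonal of \(L^\dagger \). The node-indexed vectors of \(U \sqrt{\Sigma ^\dagger }\) are stored as the rows of the array `S`.

```python
import numpy as np

# Illustrative connected graph (an assumption): triangle 0-1-2 plus a pendant edge 2-3 of weight 2.
W = np.array([
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 2.0],
    [0.0, 0.0, 2.0, 0.0],
])
L = np.diag(W.sum(axis=1)) - W

lam, U = np.linalg.eigh(L)          # lam[0] is the zero eigenvalue (connected graph)
inv_lam = np.zeros_like(lam)
inv_lam[1:] = 1.0 / lam[1:]         # diagonal of Sigma^dagger

S = U * np.sqrt(inv_lam)            # U sqrt(Sigma^dagger); row i belongs to node i
eta = np.sum(S ** 2, axis=1)        # weighted leverage scores from all nontrivial eigenpairs

L_pinv = np.linalg.pinv(L)
eta_is_diag = np.allclose(eta, np.diag(L_pinv))

# Effective resistance between nodes 0 and 3, computed in two ways.
a, b = 0, 3
omega_pinv = L_pinv[a, a] + L_pinv[b, b] - 2.0 * L_pinv[a, b]
omega_embed = np.sum((S[a] - S[b]) ** 2)
```

For this graph the resistance can also be obtained by hand: the triangle contributes 2/3 between nodes 0 and 2 (a unit resistor in parallel with two unit resistors in series), and the pendant edge of weight 2 adds 1/2, so \(\omega _{03} = 7/6\).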

The leverage score vector \(\eta \) is also related to the change of the Laplacian pseudoinverse when merging a pair of nodes in coarsening. We follow the work by [62] to elucidate this. To simplify the comparison between two matrices with different sizes, consider the following perspective: during coarsening, instead of merging a pair of nodes and reducing the graph size by one, we assign the corresponding edge with an edge weight \(+\infty \). To avoid possible confusion with \(L_c\), we use \(L_{\infty }\) to denote the coarse graph Laplacian (the Laplacian of the \(+\infty \)-weighted graph). Suppose we assign an edge \(e(v_i,v_j)\) with the \(+\infty \) weight, then the difference between L and \(L_{\infty }\) is

where \(b_e\) is defined in (5). Then, the change in \(L^{\dagger }\) is given by the Woodbury matrix identity [54]:

Thus, the magnitude of \(\Delta L^\dagger \) can be defined by the Frobenius norm:

The following result bounds the magnitude of \(\Delta L^\dagger \) by using leverage scores.

Proposition 5.1

Let the graph be connected. The magnitude of the difference between \(L^\dagger \) and \(L_\infty ^\dagger \) caused by assigning the \(+\infty \) edge weight to an edge e(i, j) is bounded by

where \(\kappa \) denotes the effective condition number (i.e., the largest singular value divided by the smallest nonzero singular value).

Proof

Let \(L^{\dagger }=U\Lambda U^T\) be the spectral decomposition of \(L^{\dagger }\) with eigenvalues sorted nonincreasingly: \(\mu _1 \ge \cdots \ge \mu _{n-1} > 0 = \mu _n\). Further, let \(x=\Lambda ^{1/2}U^Tb_e\). Then,

Now consider a lower bound and an upper bound of \(b_e^TL^{\dagger }b_e\). Since \(b_e\) is orthogonal to the vector of all ones (an eigenvector associated with \(\mu _n\)), we have

On the other hand, note that \(b_e^TL^{\dagger }b_e=L^{\dagger }_{ii}+L^{\dagger }_{jj}-2L^{\dagger }_{ij}\) and that \((L^{\dagger }_{ij})^2 \le L^{\dagger }_{ii}L^{\dagger }_{jj}\) (by positive semidefiniteness). Then,

Invoking both the lower bound and the upper bound, we obtain

Then, by noting that \(\mu _1/\mu _{n-1}\) is the effective condition number of \(L^{\dagger }\) (as well as L), we conclude the proof. \(\square \)
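A numerical sanity check of this argument is sketched below. Several ingredients are assumptions on our part, since the displayed formulas are not reproduced in this sketch: we take \(b_e = e_i - e_j\), model the \(+\infty \) weight as the limit \(w \rightarrow \infty \) of the rank-one update \(L + w\, b_e b_e^T\), take \(\eta \) to be the diagonal of \(L^\dagger \), and read the bound implied by the proof steps as \(\Vert \Delta L^\dagger \Vert _F \le \kappa (\eta _i + \eta _j)\). The helper `laplacian_pinv` is hypothetical and relies on the null space being spanned by the vector of all ones.

```python
import numpy as np

def laplacian_pinv(M):
    """Pseudoinverse of a symmetric PSD matrix whose null space is span{1}."""
    n = M.shape[0]
    J = np.ones((n, n)) / n
    return np.linalg.inv(M + J) - J

# Illustrative connected graph (an assumption).
W = np.array([
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 2.0],
    [0.0, 0.0, 2.0, 0.0],
])
n = W.shape[0]
L = np.diag(W.sum(axis=1)) - W
L_pinv = laplacian_pinv(L)
eta = np.diag(L_pinv)

lam = np.linalg.eigvalsh(L)
kappa = lam[-1] / lam[1]            # largest over smallest nonzero eigenvalue

checks = []
for i, j in [(0, 1), (0, 2), (1, 2), (2, 3)]:
    b = np.zeros(n)
    b[i], b[j] = 1.0, -1.0
    r = b @ L_pinv @ b              # effective resistance of the edge

    # Limit w -> infinity of the Sherman-Morrison update of the pseudoinverse.
    delta_limit = -(L_pinv @ np.outer(b, b) @ L_pinv) / r

    # Same change computed with a large but finite weight.
    w = 1e8
    delta_finite = laplacian_pinv(L + w * np.outer(b, b)) - L_pinv

    fro = np.linalg.norm(delta_limit, "fro")
    checks.append({
        "limit_matches_finite": np.allclose(delta_finite, delta_limit, atol=1e-5),
        "closed_form": np.isclose(fro, (b @ L_pinv @ L_pinv @ b) / r),
        "bound_holds": fro <= kappa * (eta[i] + eta[j]) + 1e-12,
    })
```

On this example every edge satisfies the inequality with a comfortable margin, which is consistent with the bound being an upper estimate rather than a tight one.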

5.5 Experimental results

Here, we show an experiment to demonstrate the effective use of LESC to speed up the training of GNNs. We use the leverage scores defined in (35) to conduct the experiments.

We use three data sets for evaluation: D&D [44], REDDIT-BINARY (REBI), and REDDIT-MULTI-5K (RE5K) [125]. The first is a protein data set and the label categories are enzymes and non-enzymes. Each protein is represented by a graph, where nodes represent amino acids and they are connected if the two acids are less than six Angstroms apart. The last two are social network data sets collected from the online discussion forum Reddit. Each discussion thread is treated as one graph, in which a node represents a user and there is an edge between two nodes if either of the two users responds to the other’s comment. The label categories are community types and discussion topics, respectively. Statistics of the data sets are given in Table 2.

We focus on the task of graph classification. Given a collection of graphs, where some graphs are labeled, this task is to predict the labels of the remaining graphs. For example, protein graphs in D&D will be classified as enzymes or non-enzymes. As motivated in Section 3.3, we classify the coarsened graphs, which are smaller but retain the structural information of the original graphs. We use four GNNs (SortPool [127], DiffPool [126], TopKPool [32, 53], and SAGPool [73]) to perform the task and investigate the change of training time and prediction accuracy under three coarsening schemes (HEM, local variation (LV) [76], and LESC).

Figure 11 summarizes the time (bars) and accuracy (percentages) results. Each column is for one GNN and each row is for one data set. Inside a panel, three coarsening methods are compared, each using three coarsening levels.

When comparing times, note that applying coarsening to graph classification incurs two costs: the time to perform coarsening (as preprocessing) and the time for training. Therefore, we normalize the overall time by that of training a GNN without using coarsening. Hence, a relative time \(<1\) indicates improvement. In fact, the relative time is just the reciprocal of the speedup.
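The normalization can be written out explicitly. The following sketch uses made-up timings purely for illustration; they are not measurements from the experiments, and the function name is hypothetical.

```python
def relative_time(coarsening_s, training_coarse_s, training_full_s):
    """Overall time with coarsening, normalized by training time without coarsening."""
    return (coarsening_s + training_coarse_s) / training_full_s

# Hypothetical timings in seconds (not experimental results).
rel = relative_time(coarsening_s=12.0, training_coarse_s=48.0, training_full_s=100.0)
speedup = 1.0 / rel
improved = rel < 1.0
```

With these hypothetical numbers the relative time is 0.6, which corresponds to a speedup of about 1.67.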