Abstract

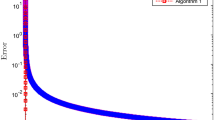

In this paper, we study the strong convergence of two Mann-type inertial extragradient algorithms, devised with a new step size, for solving a variational inequality problem with a monotone and Lipschitz continuous operator in real Hilbert spaces. Strong convergence theorems for the proposed algorithms are proved without prior knowledge of the Lipschitz constant of the operator. Finally, we present numerical experiments that illustrate the performance of the proposed algorithms and compare them with related ones.
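
For orientation, the problem class named in the abstract can be written in its standard form. The symbols \( H \) (a real Hilbert space), \( C \subseteq H \) (a nonempty closed convex set), \( A : H \to H \) (the operator), and \( L \) (its Lipschitz constant) are notation assumed here for illustration; the abstract itself does not fix them. The variational inequality problem is to find a point \( x^{*} \in C \) such that

\[
\langle A x^{*},\, x - x^{*} \rangle \ge 0 \quad \text{for all } x \in C ,
\]

where \( A \) is monotone and Lipschitz continuous, that is,

\[
\langle A x - A y,\, x - y \rangle \ge 0
\quad \text{and} \quad
\| A x - A y \| \le L \, \| x - y \|
\quad \text{for all } x, y \in H .
\]

Read this way, proving convergence without prior knowledge of the Lipschitz constant means that the step size is not selected from the value of \( L \).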

Acknowledgements

The authors are very grateful to the editor and the anonymous referee for their constructive comments, which significantly improved the original manuscript. We would also like to thank Professor Xiaolong Qin for reading the initial manuscript and giving us many useful suggestions.

Additional information

Communicated by Baisheng Yan.

About this article

Cite this article

Tan, B., Fan, J. & Li, S. Self-adaptive inertial extragradient algorithms for solving variational inequality problems. Comp. Appl. Math. 40, 19 (2021). https://doi.org/10.1007/s40314-020-01393-3

DOI: https://doi.org/10.1007/s40314-020-01393-3

Keywords

- Variational inequality problem

- Subgradient extragradient algorithm

- Tseng’s extragradient algorithm

- Inertial method

- Mann-type method