Abstract

There is emerging interest in the use of discrete choice experiments as a means of quantifying the perceived balance between benefits and risks (quantitative benefit-risk assessment) of new healthcare interventions, such as medicines, under assessment by regulatory agencies. For stated preference data on benefit-risk assessment to be used in regulatory decision making, the methods used to generate these data must be valid, reliable and capable of producing meaningful estimates that decision makers can understand. Some reporting guidelines exist for discrete choice experiments, and for related methods such as conjoint analysis. However, existing guidelines focus on reporting standards, are general in focus and do not consider the requirements for using discrete choice experiments specifically for quantifying benefit-risk assessments in the context of regulatory decision making. This opinion piece outlines the current state of play in using discrete choice experiments for benefit-risk assessment and proposes key areas that need to be addressed to demonstrate that discrete choice experiments are an appropriate and valid stated preference elicitation method in this context. Methodological research is required to establish: how robust the results of discrete choice experiments are to formats and methods of risk communication; how information in the discrete choice experiment can be presented effectively to respondents; whose preferences should be elicited; the correct underlying utility function and analytical model; the impact of heterogeneity in preferences; and the generalisability of the results. We believe these methodological issues should be addressed, alongside developing a ‘reference case’, before agencies can safely and confidently use discrete choice experiments for quantitative benefit-risk assessment in the context of regulatory decision making for new medicines and healthcare products.


There is increasing interest in using discrete choice experiments to quantify individuals’ preferences for benefits and risks (benefit-risk assessment) associated with healthcare generally, and with new technologies and pharmaceuticals specifically.

If preference data are to be used in a regulatory context for new pharmaceuticals, they should be generated using robust and reliable methods.

There remain key issues requiring further research to establish whether discrete choice experiments can be used with confidence in the regulatory context.

1 Introduction

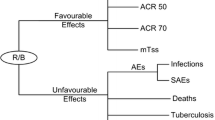

The elicitation of stated preferences using discrete choice experiments (DCEs) is receiving increased attention from health services researchers and policy makers [1]. A particular area of focus is the use of DCEs to provide information about stated preferences for the perceived balance between the benefits (favourable effects or wanted outcomes) and harms (undesirable effects or unwanted outcomes) of healthcare interventions [2]. This application, termed quantitative benefit-risk assessment, is being considered by national agencies to inform the regulation of new medicines or healthcare products [1, 3, 4, 5]. In October 2016, the US Food and Drug Administration (FDA) released recommendations for quantifying stated preferences for benefit-risk assessment from the patient perspective to inform the regulation of medical devices. These FDA recommendations explicitly cited DCEs as the favoured stated preference elicitation method [6]. Mühlbacher et al. [4] provide an overview of the current European perspective on approaches to benefit-risk assessment. Ongoing projects have also been funded to evaluate the role of DCEs, alongside other methods, in informing benefit-risk assessment; one example is the Innovative Medicines Initiative-funded ‘PREFER’ project [7]. This opinion piece aims to identify and discuss the key methodological and empirical evidence needed to decide whether, and how, DCEs should be used for quantitative benefit-risk assessment in the regulatory context.

Given the emerging interest in using DCEs for quantitative benefit-risk assessment, it is necessary, though not sufficient, first to establish that the method is reliable and that its results are generalisable. Although external validity has been defined as the ability to predict real-market behaviour from stated preferences [8], other terms describing the credibility of DCEs have not been explicitly defined in the literature. For the purpose of this editorial, we use the definition of Streiner et al. [9], in which a reliable method is one capable of producing consistent estimates by minimising the error inherent in any measurement tool. We define generalisability as the extent to which the results of a study can be transferred by a decision maker to the relevant population and/or healthcare system [10].

Alongside empirical evidence on the methodological reliability of DCEs, what is required is a clear description of the main steps in using DCEs as a method (such as a ‘reference case’), together with an associated process for using quantitative benefit-risk assessment to inform regulatory decisions. Close analogies can be drawn with the emergence of reporting criteria [11] and methods guides [12] for using decision-analytic cost-effectiveness analysis (CEA) to inform health technology assessment used in reimbursement decisions and/or the production of clinical guidelines.

Although guidelines exist for best practice in reporting DCEs [13,14,15,16], and for related, albeit conceptually different, methods such as conjoint analysis, these are general in focus and do not consider the requirements for using these methods in the context of regulatory decision making. Furthermore, the guidelines do not explicitly consider collecting information for quantitative benefit-risk assessment where, as noted by Hauber et al. [15], the analysis is often concerned with in-sample assessment rather than forecasting and predicting demand. The FDA ‘recommendations’ for patient preference information are similarly vague and generally refer the reader to the existing reporting guidelines [14]. The FDA recommendations do, however, acknowledge that much research is still required in the area: “FDA acknowledges that quantitative patient preference assessment is an active and evolving research area. We intend this guidance to serve as a catalyst for advancement of the science…” [6] (p. 8).

Although there are examples of high-quality applied DCEs eliciting preferences for the trade-off between benefit and risk in specific areas of healthcare [17,18,19,20,21,22,23,24], methodological advances to improve or determine the reliability and validity of stated preference methods for informing regulatory decision making have been limited. Existing methodological literature on healthcare DCEs generally focusses on quantitative investigations including, but not limited to: the effect of different choice question styles [25,26,27]; generating and testing efficient experimental designs [28, 29]; and how to take account of preference heterogeneity with more sophisticated econometric models [30,31,32]. There is some empirical evidence on the role of qualitative methods in identifying and selecting attributes and levels for the DCE [33,34,35], and a review of the state of play for using qualitative methods [36]. We suggest that further key areas require a supportive evidence base before we can judge whether DCEs are ready to inform quantitative benefit-risk assessment in regulatory decision making. These areas are now described.

2 Communicating Risk

The quantification of preferences for benefit-risk assessment using DCEs relies on risk information being communicated effectively alongside information on the benefits from the medicine or healthcare intervention. Importantly, differences in the type and magnitude of risk must be understood and appreciated by respondents when making their choices. There is a substantial evidence base suggesting a general lack of understanding about ‘risk’ across all demographics (see [37, 38]). Of specific relevance, there is also evidence to suggest risk has not always been successfully communicated to respondents of DCEs [39]. de Bekker-Grob et al. [40] summarised: “Studies have continued to include risk as an attribute. [Previous reviews] noted the difficulties individuals have understanding risk, and they commented on health economists giving little consideration to explaining the risk attribute to respondents. There appears to be little progress…”.

If risk attributes are not understood by respondents during a benefit-risk assessment, key principles that underpin the use of DCEs will not be upheld. If attributes are not understood as intended, both response efficiency and statistical efficiency may be compromised. In this situation, respondents are unlikely to complete the DCE as intended: rather than basing their choices on a trade-off between risk and the attributes capturing benefit, they may make non-compensatory decisions. Importantly, the assumption of continuity in preferences (the continuity axiom) may then be violated. In an extreme case, the respondent may engage in deterministic choice making, introducing bias into the preference weight estimates and, therefore, into the marginal rates of substitution used to present the benefit-risk trade-off. If a respondent completely ignores one or more attributes, this non-attendance may be identified retrospectively in a step-wise analysis of DCE data [41, 42]. Pre-emptive solutions, however, include the use of appropriate methods to present and describe risk [39] and an iterative, prescriptive approach to DCE design tailored to the specific sample. An iterative approach would also allow the use of a Bayesian experimental design, in which priors from a pilot study could be used to improve the statistical efficiency of the DCE, if the analyst so wishes. There is some evidence that the analyst needs to consider the trade-off between design (statistical) efficiency and respondent efficiency [28, 43]. However, the suitability of different experimental designs, particularly in the context of benefit-risk trade-offs, requires further investigation.
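The Bayesian design idea above can be made concrete with the D-error criterion that is commonly used to compare multinomial logit (MNL) designs: the determinant of the inverse Fisher information, raised to the power 1/K for K parameters, averaged over draws from prior distributions. The sketch below is illustrative only and is not a method proposed in this article. It assumes two attributes (a benefit level and a risk level, both in percentage points), two hypothetical candidate designs, and hypothetical normal priors of the kind a pilot study might supply; all function names are invented for the example.

```python
import math
import random


def mnl_probs(choice_set, beta):
    """Multinomial logit probabilities for one choice set (list of attribute tuples)."""
    v = [sum(b * x for b, x in zip(beta, alt)) for alt in choice_set]
    v_max = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(vi - v_max) for vi in v]
    total = sum(e)
    return [ei / total for ei in e]


def d_error(design, beta):
    """D-error = det(I(beta))^(-1/K) for a two-attribute MNL design (K = 2)."""
    info = [[0.0, 0.0], [0.0, 0.0]]  # Fisher information matrix
    for choice_set in design:
        p = mnl_probs(choice_set, beta)
        mean_x = [sum(pj * alt[k] for pj, alt in zip(p, choice_set)) for k in range(2)]
        for pj, alt in zip(p, choice_set):
            dev = [alt[k] - mean_x[k] for k in range(2)]
            for r in range(2):
                for c in range(2):
                    info[r][c] += pj * dev[r] * dev[c]
    det = info[0][0] * info[1][1] - info[0][1] * info[1][0]
    return det ** (-1 / 2)


def bayesian_d_error(design, prior_mean, prior_sd, draws=1000, seed=2017):
    """Average D-error over draws from independent normal priors (e.g. from a pilot)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        beta = [rng.gauss(m, s) for m, s in zip(prior_mean, prior_sd)]
        total += d_error(design, beta)
    return total / draws


# Hypothetical designs: each alternative is (benefit %, risk of side effect %).
design_a = [[(10, 1), (30, 5)], [(30, 1), (10, 5)], [(20, 2), (10, 0)], [(10, 2), (30, 4)]]
design_b = [[(10, 1), (20, 2)], [(20, 1), (10, 2)], [(20, 2), (10, 1)], [(10, 2), (20, 1)]]

prior_mean, prior_sd = [0.08, -0.40], [0.02, 0.10]  # hypothetical pilot estimates
for name, design in (("A", design_a), ("B", design_b)):
    print(name, round(bayesian_d_error(design, prior_mean, prior_sd), 3))
```

The design with the lower Bayesian D-error is expected to be more statistically efficient under the assumed priors; as the text notes, that gain still has to be weighed against respondent efficiency, which this criterion does not capture.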

No gold-standard approach to risk communication exists [39], but outside the DCE literature there are emerging examples of good practice [44, 45]. Risk is a multi-faceted concept. When considering their preferences for risk alongside benefit, individuals may take into account many issues, such as the severity of the possible outcome and its irreversibility; the baseline level of risk that is typical for everyone; the duration of risk exposure and whether they will return to a baseline level; the degree of certainty surrounding the risk figure; the objectivity of the risk; preformed perceptions about their individual likelihood of experiencing the risk; and risk latency, that is, whether the risk will occur now or in the future [46]. Many of these components are left unexplained in healthcare DCEs, requiring respondents to infer their own values. If respondents’ true interpretation of risk is unobservable to the researcher (for example, they believe the risk does not apply for a lifetime, or that they have taken preventative measures to reduce it), the result may be upwardly biased estimates of acceptability thresholds. Providing insufficient information in a DCE may impede individuals’ ability to make choices in the hypothetical choice sets that reflect the choices they would make in real life.

As benefit-risk assessments in regulatory decisions are often made for new medicines or healthcare products, they involve both aleatory and epistemic risks. Aleatory risks refer to the chance of an event occurring, for example, experiencing a side effect. New products also carry epistemic risks, related to the uncertainty surrounding the benefit-risk estimates themselves. There is some research investigating how imprecision affects valuations of healthcare [47], but further research is required to understand how epistemic risks are traded off against benefits and whether they should be communicated in the same formats as aleatory risks. For example, epistemic risks may require confidence intervals or a further description of the ‘unknown’.

The effectiveness of a given format for communicating risk, or of an approach to explaining it, will depend on the multi-faceted nature of risk, but especially on the magnitude of the risk (in terms of both its likelihood and the seriousness of the hazard) [48]. Effective risk communication will also depend on the sample completing the DCE and on how the survey is administered (face-to-face or online). The framing used to present the risk, such as positive (e.g. probability of survival) or negative (e.g. probability of death) representations, will have an influence [49, 50], although there is a lack of empirical evidence on which framing is best. Approaches to risk communication that may help include pictorial representations of the numerical component, such as comparative graphs, risk grids or risk ladders [51,52,53]. There are some emerging examples of DCEs employing pictorial representations, as advocated in the wider risk communication literature [23, 54, 55]. However, the evidence on the relative effectiveness of formats and approaches to risk communication in the context of benefit-risk assessment is, as yet, mixed [56, 57]. Demonstrating that the results of DCEs are robust to such format and framing effects, or at a minimum establishing the direction of any resulting bias, is vital if the method is to be used in regulatory decision making.
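To make the formats discussed above concrete, the sketch below renders one hypothetical risk level as a text-based risk grid (an icon array of 100 people) and as negatively and positively framed frequency statements. It is purely illustrative: the attribute level, the wording and the function names are assumptions, and a real survey would use properly designed graphics piloted with the target sample.

```python
def icon_array(events, denominator=100, per_row=10, event="X", no_event="."):
    """Text risk grid: `events` marked icons out of `denominator` people."""
    if not 0 <= events <= denominator:
        raise ValueError("events must lie between 0 and denominator")
    icons = [event] * events + [no_event] * (denominator - events)
    rows = ["".join(icons[i:i + per_row]) for i in range(0, denominator, per_row)]
    return "\n".join(rows)


def framings(events, denominator, outcome):
    """The same frequency expressed with a negative frame and a positive frame."""
    negative = f"{events} in {denominator} people taking this treatment will {outcome}."
    positive = (f"{denominator - events} in {denominator} people taking this "
                f"treatment will not {outcome}.")
    return negative, positive


# Hypothetical attribute level: a 3 in 100 chance of a serious side effect.
print(icon_array(3))
for sentence in framings(3, 100, "experience a serious side effect"):
    print(sentence)
```

Both statements carry identical numerical information; whether respondents' choices differ between them, or between the grid and the sentence, is exactly the kind of robustness question the text raises.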

3 Role of Training Materials

Training materials are a key but under-developed component of DCEs; they are often unpublished and unavailable, despite the rising number of journals providing online appendices for supplementary documents. As proposed in the guidelines produced by the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) [14], all DCEs should contain an introductory section explaining the purpose of the survey and its content, including the nature of the selected attributes and levels, before the choice sets are presented. It is currently unclear to what degree authors follow these guidelines, or how good the explanations given to survey respondents are. A developing approach to improving training materials is the use of interactive tools that capitalise on the move towards online survey administration [58]. Using such engaging training materials, rather than relying on written information, is in keeping with a core psychological model used to explain health behaviour under risk: the Capability, Opportunity and Motivation framework, which suggests that these three factors drive an individual’s decision to choose a type of healthcare. Consistent with each of these factors, an interactive tool potentially provides respondents with the capability, opportunity and motivation (by providing relevant information) to complete a DCE. If, as Garris et al. [59] suggest, using an interactive training tool (sometimes called a ‘serious game’) makes respondents more engaged, then the choice data may be of higher quality; this is testable by appraising completion rates, opt-outs or internal validity test results. Comprehension tests may also be included to assess the effectiveness of training materials on respondents’ understanding. However, more empirical evidence is needed to test the relative impact of interactive training materials compared with standard written information.

4 Need to Take Account of Attitudes

There is evidence to suggest that individuals’ inherent feelings and thoughts (their ‘attitudes’) may explain stated choices and hence influence estimated preferences. For example, one study found that health literacy was associated with preferences for vaccinations [60], and another [61] found substantial improvements in model fit and more precisely estimated parameters when attitudinal and choice data were combined in a study measuring preferences for healthcare plans. There are concerns that measures of attitudes are proxy measures for latent, unobservable variables and could therefore introduce measurement error [62,63,64]. Furthermore, if unobservable variables that predict choice also predict attitudes, endogeneity bias may be introduced. Some commentators [65] suggest that hybrid models, which jointly model attitudes and preferences, are required. However, the approaches used to measure attitudes need further attention, as does the choice of model for combining attitudinal and preference data.

5 Whose Risk Preferences?

Defining the relevant study population is an enduring challenge for the administration of DCEs. The selection of the relevant viewpoint for elicited preferences is a particularly important methodological issue when the results are used to inform regulatory decision making. Outside DCEs, and in health economics more generally, it has been argued that the general public’s (tax-payers’) preferences should be taken into account for a publicly funded health service [66, 67]. There is some consensus (based on advice from the Panel on Cost-Effectiveness in Health and Medicine) that preferences taken from the public contain a broad range of views, making them the most informative for policy makers in healthcare [68]. Although some have argued that benefit-risk assessment is fundamentally different from CEA because individual patients bear the risk [69], regulatory decisions are still made at a population level and are not individualised until the clinic.

Clinicians may also have views that should be incorporated [66]. Clinicians’ preferences may be seen as part of the wider public view, and their preferences are arguably well informed in a market where information asymmetry is a widespread problem [70]. As a result, their opinions have been incorporated into many decision-making processes at all levels, from clinician representatives on regulatory advisory committees to the physician-patient discussion in the clinic [71].

Existing FDA recommendations explicitly state that benefit-risk trade-off preference data should be patient centred, with preferences elicited from ‘well-informed patients’ [6] (p. 11). Restricting preferences for benefit-risk trade-offs exclusively to patients would represent a profound shift in UK decision and policy making. Traditionally, public input has been core to decision making, based on the underlying argument that social welfare can only be maximised when a societal perspective is taken [72]. Incorporating patients’ preferences into decision making is a fundamentally different approach from that currently taken by bodies such as the National Institute for Health and Care Excellence, which make decisions using preference weights representing the view of the public as inputs into model-based CEA [12, 73]. In the CEA literature, debates about the role of public and patient preferences have started with investigations into the drivers of any differences [74]. However, differences between groups in quantitative benefit-risk assessments elicited by DCEs will be difficult to establish, because the preference weights estimated in the regression model are confounded with error variance. It is therefore difficult to disentangle heterogeneity in preferences from heterogeneity in error variance, termed ‘scale heterogeneity’ or ‘choice consistency’ [75].
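The confound described above can be shown with a binary logit in which utility differences are multiplied by a scale parameter (inversely related to the error variance). In this hypothetical sketch, three groups share exactly the same preference weights but differ in choice consistency. Because a logit model only identifies the product of scale and weights, the estimated coefficients differ across groups even though the implied marginal rate of substitution (here, the maximum acceptable increase in risk per unit of benefit) does not. All values are assumptions, not estimates from any study.

```python
import math

# Hypothetical underlying preference weights shared by every group.
beta = {"benefit": 0.08, "risk": -0.40}
option_a = {"benefit": 30, "risk": 2}  # larger benefit, higher risk (percentage points)
option_b = {"benefit": 10, "risk": 0}  # smaller benefit, no added risk


def utility(option):
    """Deterministic utility on the underlying (unscaled) preference scale."""
    return sum(beta[k] * option[k] for k in beta)


def prob_first(v_first, v_second, scale):
    """Binary logit probability of the first option; `scale` multiplies the utility difference."""
    return 1.0 / (1.0 + math.exp(-scale * (v_first - v_second)))


for scale in (0.5, 1.0, 2.0):  # lower scale = larger error variance = less consistent choices
    identified = {k: scale * b for k, b in beta.items()}  # what a logit model can estimate
    mar = -identified["benefit"] / identified["risk"]     # the scale cancels in the ratio
    p_a = prob_first(utility(option_a), utility(option_b), scale)
    print(f"scale={scale}: coefficients={identified}, P(A)={p_a:.3f}, MAR={mar:.2f}")
```

Comparing raw coefficients between, say, patient and public samples could therefore suggest preference differences that are really differences in scale. The converse also holds: from coefficient magnitudes alone, genuine preference differences cannot be separated from differences in choice consistency.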

Given the status quo, which recommends public preference weights for other types of economic evidence used in decision making, and the absence of empirical evidence on whether public or patient preferences should take priority, a clear rationale is required explaining if and why patients, as opposed to the public, clinicians or other stakeholders, should be sampled in DCEs eliciting preferences for benefit-risk trade-offs.

6 Analysing Benefit-Risk Preference Data

Regression-based approaches such as the conditional logit model are often used to provide an estimate of average preferences for the sampled population. It is unlikely, however, that everyone in a population shares the same preferences. The degree of preference heterogeneity for benefit-risk trade-offs can be assessed with models which allow for error variance and more flexible distributions of the estimated preference parameters [76, 77]. The rising popularity of the use of mixed logit (continuous distributions) and latent class analysis (discrete distributions) to analyse DCE data has been noted [58]. However, the availability of such methods to identify the presence, and in some cases potential causes, of preference heterogeneity, is not sufficient. It is not clear what a regulatory decision maker should do if preferences for benefit-risk trade-offs are found to be highly heterogeneous. How should the regulators interpret or act on this information? If preference groups can be distinguished by observable characteristics (such as age, sex, experiences or cultural factors), then regulators might seek to tailor their decisions accordingly. Ultimately, acting on this information in a regulatory capacity is advocating a stratified approach to medicine, with strata defined by preferences [78]. If identified preference sub-groups are latent (unobservable), it is not clear how regulators can incorporate this knowledge about unobservable characteristics of the respondents into their decisions. In the presence of identifiable or unknown drivers of preference heterogeneity, further work is needed to understand the implications for creating preference sub-groups within a population.
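As a concrete illustration of the average-preference model, the sketch below simulates binary choices from a conditional logit with hypothetical ‘true’ weights for benefit and risk, recovers them by maximum likelihood (Newton-Raphson, written in plain Python to keep the sketch dependency-free) and reports the implied maximum acceptable risk per unit of benefit. The attribute levels, weights and number of choice tasks are assumptions for illustration; applied analyses would normally use established econometric software. The single set of coefficients returned is exactly the ‘average’ that can hide heterogeneity: mixed logit or latent class models would replace the fixed weights with a continuous or discrete distribution.

```python
import math
import random


def probs(choice_set, beta):
    """Conditional logit probabilities; each alternative is (benefit, risk)."""
    v = [beta[0] * a[0] + beta[1] * a[1] for a in choice_set]
    v_max = max(v)
    e = [math.exp(x - v_max) for x in v]
    s = sum(e)
    return [x / s for x in e]


def fit_conditional_logit(data, iterations=30):
    """Newton-Raphson maximum likelihood for a two-attribute conditional logit.

    data: list of (choice_set, chosen_index). Returns (beta, information_matrix).
    """
    beta = [0.0, 0.0]
    for _ in range(iterations):
        grad = [0.0, 0.0]
        info = [[0.0, 0.0], [0.0, 0.0]]  # negative Hessian of the log-likelihood
        for choice_set, chosen in data:
            p = probs(choice_set, beta)
            mean_x = [sum(pj * a[k] for pj, a in zip(p, choice_set)) for k in range(2)]
            for k in range(2):
                grad[k] += choice_set[chosen][k] - mean_x[k]
            for pj, a in zip(p, choice_set):
                dev = [a[k] - mean_x[k] for k in range(2)]
                for r in range(2):
                    for c in range(2):
                        info[r][c] += pj * dev[r] * dev[c]
        det = info[0][0] * info[1][1] - info[0][1] * info[1][0]
        # Newton step: beta += inverse(info) @ grad, using the explicit 2x2 inverse
        beta[0] += (info[1][1] * grad[0] - info[0][1] * grad[1]) / det
        beta[1] += (-info[1][0] * grad[0] + info[0][0] * grad[1]) / det
    return beta, info


# Simulate choices between two treatments from hypothetical weights.
rng = random.Random(42)
true_beta = [0.08, -0.40]
benefit_levels, risk_levels = [10, 20, 30], [0, 1, 2, 5]
data = []
for _ in range(3000):
    choice_set = [(rng.choice(benefit_levels), rng.choice(risk_levels)) for _ in range(2)]
    chosen = 0 if rng.random() < probs(choice_set, true_beta)[0] else 1
    data.append((choice_set, chosen))

beta_hat, info = fit_conditional_logit(data)
print("estimates:", [round(b, 3) for b in beta_hat])
print("maximum acceptable risk per unit benefit:", round(-beta_hat[0] / beta_hat[1], 3))
```

Because every simulated choice here is generated from the same coefficients, the model is correctly specified; with heterogeneous respondents, the same estimator would still return one average set of weights, which is the limitation discussed above.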

The majority of healthcare DCE data are analysed within random utility theory frameworks; however, there is some evidence to suggest that alternative analytical perspectives (such as random regret minimisation, elimination by aspects or decision field theory [79]) may be more appropriate. In a study comparing random utility maximisation and random regret minimisation, the authors found that, for some DCE data, the trade-offs implied by the two models differed substantially [80]. Random regret minimisation could be more appropriate when modelling benefit-risk trade-offs. The suitability of different models for the research question, and their statistical properties, therefore require further investigation to establish the robustness of DCEs as a valuation method.
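The difference between the two decision rules can be seen in a small calculation. The sketch below uses the classical random regret minimisation (RRM) formulation, in which the regret of an option accumulates over pairwise, attribute-by-attribute comparisons with every competing option, and compares it with the multinomial logit random utility maximisation (RUM) model. Using identical hypothetical weights in both models is a simplification for illustration only; in practice, each model's parameters are estimated separately and have different interpretations.

```python
import math


def softmax(values):
    """Logit transformation of a list of values into probabilities."""
    m = max(values)
    e = [math.exp(v - m) for v in values]
    s = sum(e)
    return [x / s for x in e]


def rum_probs(choice_set, beta):
    """Random utility maximisation (multinomial logit) probabilities."""
    return softmax([sum(b * x for b, x in zip(beta, alt)) for alt in choice_set])


def rrm_probs(choice_set, beta):
    """Classical RRM: regret_i = sum over j != i and attributes m of ln(1 + exp(b_m (x_jm - x_im)))."""
    regrets = []
    for i, alt_i in enumerate(choice_set):
        regret = 0.0
        for j, alt_j in enumerate(choice_set):
            if j != i:
                regret += sum(math.log1p(math.exp(b * (xj - xi)))
                              for b, xi, xj in zip(beta, alt_i, alt_j))
        regrets.append(regret)
    return softmax([-r for r in regrets])  # lower regret means higher probability


beta = [0.08, -0.40]  # hypothetical weights for (benefit %, risk %)
pair = [(10, 0), (30, 5)]
triple = [(10, 0), (20, 2.5), (30, 5)]
for name, cs in (("pair", pair), ("triple", triple)):
    print(name, [round(p, 3) for p in rum_probs(cs, beta)],
          [round(p, 3) for p in rrm_probs(cs, beta)])
```

With two alternatives the models give identical probabilities, because for each attribute ln(1 + e^(-z)) - ln(1 + e^(z)) = -z; they diverge only with three or more alternatives. In the three-option example, RUM favours the low-benefit, no-risk option, whereas RRM favours the middle ‘compromise’ option, one way in which the implied benefit-risk trade-offs can differ.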

Regardless of the theoretical stance, studies often assume that utility is linear in the attribute levels and do not explore, or report the results of, alternative specifications of the utility function [81]. Misspecification of the utility function could result in upwardly biased estimates of acceptable risk and the erroneous conclusion that individuals are willing to accept high levels of risk.
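The consequence of the linearity assumption can be illustrated by computing the maximum acceptable risk (MAR) for a given benefit gain under two specifications: a linear risk term, and dummy-coded part-worths for each tested risk level, interpolated linearly between levels. All coefficients below are hypothetical and do not represent any real study; the point is only that the two specifications can imply different acceptable risks, and that the direction of the difference can depend on the benefit gain considered.

```python
def mar_linear(benefit_gain, beta_benefit, beta_risk):
    """MAR when utility is linear in risk: utility of the benefit gain divided by |beta_risk|."""
    return beta_benefit * benefit_gain / -beta_risk


def mar_partworth(benefit_gain, beta_benefit, risk_levels, partworths):
    """MAR from dummy-coded part-worths, interpolating linearly between tested risk levels.

    Assumes part-worths decrease as risk increases. Returns None when the benefit
    gain outweighs even the highest tested risk level (the MAR lies outside the data).
    """
    target = -beta_benefit * benefit_gain  # disutility that the benefit gain can offset
    points = list(zip(risk_levels, partworths))
    for (r0, u0), (r1, u1) in zip(points, points[1:]):
        if u1 <= target <= u0:
            return r0 + (r1 - r0) * (u0 - target) / (u0 - u1)
    return None


# Hypothetical estimates; benefit and risk both measured in percentage points.
beta_benefit, beta_risk = 0.08, -0.40
risk_levels, partworths = [0, 1, 2, 5], [0.0, -0.60, -0.90, -1.50]
for gain in (10, 15, 20):
    print(gain, mar_linear(gain, beta_benefit, beta_risk),
          mar_partworth(gain, beta_benefit, risk_levels, partworths))
```

With these assumed values the linear model implies MARs of 2, 3 and 4 percentage points for gains of 10, 15 and 20, whereas the part-worth model implies about 1.67 and 3.5 points for the first two gains and, for the third, only that the MAR exceeds the highest tested level of 5 points. A linear specification reports such values without signalling that the underlying shape has been assumed.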

7 Understanding the Generalisability of the Results

To be useful in a regulatory decision making context, in keeping with the use of model-based CEA to inform resource allocation [12], the degree of uncertainty in the results must be quantified. Uncertainty is currently often left unreported in the estimation of marginal rates of substitution such as willingness to accept risk or willingness to pay. Currently, the ratio of two statistically significant coefficients is sometimes assumed to be statistically significant and the confidence interval for the ratio is not disclosed. However, the estimated parameters used in these ratio calculations have a probability distribution that can be specified; therefore, the uncertainty in the marginal rates of substitution can also be presented. Approaches for estimating the uncertainty in the estimated ratio include the Delta or Krinsky–Robb methods [82].
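For the marginal rate of substitution most often reported in benefit-risk DCEs, the maximum acceptable risk (MAR) per unit of benefit, MAR = -β_benefit / β_risk, both approaches can be written in a few lines. The sketch below assumes hypothetical coefficient estimates, variances and covariance (in practice these come from the estimated model's variance-covariance matrix). The delta method linearises the ratio around the estimates; the Krinsky–Robb method simulates coefficients from their asymptotic bivariate normal distribution and reads off percentiles of the simulated ratios. Function names are illustrative.

```python
import math
import random


def mar_delta_ci(b_benefit, b_risk, var_benefit, var_risk, cov, z=1.96):
    """Delta-method standard error and CI for MAR = -b_benefit / b_risk."""
    mar = -b_benefit / b_risk
    g_benefit = -1.0 / b_risk         # d(MAR)/d(b_benefit)
    g_risk = b_benefit / b_risk ** 2  # d(MAR)/d(b_risk)
    var = (g_benefit ** 2 * var_benefit + g_risk ** 2 * var_risk
           + 2 * g_benefit * g_risk * cov)
    se = math.sqrt(var)
    return mar, se, (mar - z * se, mar + z * se)


def mar_krinsky_robb_ci(b_benefit, b_risk, var_benefit, var_risk, cov,
                        draws=10000, alpha=0.05, seed=1):
    """Krinsky-Robb percentile CI: simulate coefficient pairs, compute the ratio for each."""
    rng = random.Random(seed)
    sd_b, sd_r = math.sqrt(var_benefit), math.sqrt(var_risk)
    rho = cov / (sd_b * sd_r)
    ratios = []
    for _ in range(draws):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        b = b_benefit + sd_b * z1
        r = b_risk + sd_r * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)  # 2x2 Cholesky factor
        ratios.append(-b / r)
    ratios.sort()
    lower = ratios[int(alpha / 2 * draws)]
    upper = ratios[int((1 - alpha / 2) * draws) - 1]
    return lower, upper


# Hypothetical estimates: coefficients, their variances and their covariance.
est = dict(b_benefit=0.08, b_risk=-0.40, var_benefit=0.01 ** 2, var_risk=0.05 ** 2, cov=1e-4)
print("delta method:", mar_delta_ci(**est))
print("Krinsky-Robb:", mar_krinsky_robb_ci(**est))
```

The delta-method interval is symmetric by construction, whereas the simulated interval can be asymmetric because a ratio of estimates is skewed; reporting either is preferable to assuming that a ratio of two significant coefficients is itself significant.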

Establishing the validity (the degree to which demand is predicted) of DCEs is vital when using the data to forecast [8]; however, in the context of benefit-risk assessment, the reliability of the DCE (its ability to capture preferences) is of primary importance. Once decision makers are confident that the survey is capable of eliciting preferences from a sample, they must also consider whether these elicited views represent those of the relevant population. The extent to which DCE results may be generalisable can be informed by clearly reporting the context and describing the relevant population of the study. The perceived generalisability of results will also influence the degree of confidence in the estimated benefit-risk ratio. When assessing the benefit-risk balance of healthcare interventions, regulators should be clear about whose preferences were elicited, as well as about the degree of uncertainty in the estimated trade-offs.

8 Conclusion

The concept of quantifying preferences for benefit-risk trade-offs is attractive to regulatory decision makers who aim to achieve consistency and transparency in their judgements. However, there is currently insufficient evidence to support the use of DCEs for this purpose. We acknowledge that others, such as the Medical Device Innovation Consortium’s Patient Centred Benefit-Risk Project report, have identified similar points for future research [83]. We conclude that we are not yet ready to recommend the use of DCEs to provide quantitative evidence for benefit-risk assessment. In our view, key areas of methodological research must be addressed before regulatory agencies can safely use DCEs for benefit-risk assessment. Alongside these methodological issues, we suggest a need for clear processes describing how to use DCE data in regulatory decision making, and for further development of existing reporting guidelines [13, 14] as the basis for a ‘reference case’ giving direction on how to develop evidence for quantitative benefit-risk assessment appropriately.

References

Reed SD, Lavezzari G. International experiences in quantitative benefit-risk analysis to support regulatory decisions. Value Health. 2016;19:727–9.

Hauber A, Fairchild AO, Johnson F. Quantifying benefit-risk preferences for medical interventions: an overview of a growing empirical literature. Appl Health Econ Health Policy. 2013;11:319–29.

Ho MP, Gonzalez JM, Lerner HP, et al. Incorporating patient-preference evidence into regulatory decision making. Surg Endosc Other Interv Tech. 2015;29:2984–93.

Mühlbacher AC, Juhnke C, Beyer AR, Garner S. Patient-focused benefit-risk analysis to inform regulatory decisions: the European Union perspective. Value Health. 2016;19:734–40.

Johnson FR, Zhou M. Patient preferences in regulatory benefit-risk assessments: a US perspective. Value Health. 2016;19:741–5.

US Food and Drug Administration. Patient preference information voluntary submission, review in premarket approval applications, humanitarian device exemption applications and de novo requests, and inclusion in decision summaries and device labeling. US Dep Heal Hum Serv Food Drug Adm Cent Devices Radiol Health. 2016; FDA-2015-D. https://www.fda.gov/downloads/medicaldevices/deviceregulationandguidance/guidancedocuments/ucm446680.pdf. Accessed 18 May 2017.

Innovative Medicines Initiative. Patient preferences in benefit-risk assessments during the drug life cycle (PREFER) project. Grant Agreement. No. 115966; 2016.

Lancsar E, Swait J. Reconceptualising the external validity of discrete choice experiments. Pharmacoeconomics. 2014;32:951–65.

Streiner D, Norman G, Cairney J. Health measurement scales. 5th ed. Oxford: Oxford University Press; 2015.

Sculpher MJ, Pang FS, Manca A, et al. Generalisability in economic evaluation studies in healthcare: a review and case studies. Health Technol Assess. 2004;8:iii–iv, 1–192.

Husereau D, Drummond M, Petrou S, et al. Consolidated health economic evaluation reporting standards (CHEERS) statement. BMJ. 2013;346:f1049.

NICE. Guide to the methods of technology appraisal. Process Methods Guide. 2013;1–102. https://www.nice.org.uk/process/pmg9/resources/guide-to-themethods-of-technology-appraisal-2013-pdf-2007975843781. Accessed 18 May 2017.

Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics. 2008;26:661–77.

Bridges JF, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, Johnson FR, Mauskopf J. Conjoint analysis applications in health—a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14(4):403–13.

Hauber A, Gonzalez JM, Groothuis-Oudshoorn C, et al. Statistical methods for the analysis of discrete-choice experiments: a report of the ISPOR Conjoint Analysis Good Research Practices Task Force. Value Health. 2016;19:300–15.

Johnson F, Lancsar E, Marshall D, et al. Constructing experimental designs for discrete-choice experiments: report of the ISPOR conjoint analysis experimental design good research practices task force. Value Health. 2013;16:3–13.

Johnson F, Van Houtven G, Özdemir S, et al. Multiple sclerosis patients’ benefit-risk preferences: serious adverse event risks versus treatment efficacy. J Neurol. 2009;256:554–62.

Johnson F, Özdemir S, Mansfield C, et al. Are adult patients more tolerant of treatment risks than parents of juvenile patients? Risk Anal. 2010;29:121–36.

Hauber AB, Arden NK, Mohamed AF, et al. A discrete-choice experiment of United Kingdom patients’ willingness to risk adverse events for improved function and pain control in osteoarthritis. Osteoarthr Cartil. 2013;21:289–97.

Levitan B, Markowitz M, Mohamed AF, et al. Patients’ preferences related to benefits, risks, and formulations of schizophrenia treatment. Psychiatr Serv. 2015;66:719–26.

Johnson F, Hauber AB, Özdemir S, et al. Are gastroenterologists less tolerant of treatment risks than patients? Benefit-risk preferences in Crohn’s disease management. J Manag Care Pharm. 2010;16:616–28.

Bewtra M, Fairchild AO, Gilroy E, et al. Inflammatory bowel disease patients’ willingness to accept medication risk to avoid future disease relapse. Am J Gastroenterol. 2015;110:1–7.

Hauber AB, Johnson F, Grotzinger KM, Özdemir S. Patients’ benefit-risk preferences for chronic idiopathic thrombocytopenic purpura therapies. Ann Pharmacother. 2010;44:479–88.

Johnson F, Özdemir S, Mansfield C, et al. Crohn’s disease patients’ risk-benefit preferences: serious adverse event risks versus treatment efficacy. Gastroenterology. 2007;133:769–79.

De Bekker-Grob EW, Hol L, Donkers B, et al. Labeled versus unlabeled discrete choice experiments in health economics: an application to colorectal cancer screening. Value Health. 2010;13:315–23.

Potoglou D, Burge P, Flynn T, et al. Best-worst scaling vs. discrete choice experiments: an empirical comparison using social care data. Soc Sci Med. 2011;72:1717–27.

Whitty J, Walker R, Golenko X, Ratcliffe J. A think aloud study comparing the validity and acceptability of discrete choice and best worst scaling methods. PLoS One. 2014;9:e90635.

Flynn TN, Bilger M, Malhotra C, Finkelstein EA. Are efficient designs used in discrete choice experiments too difficult for some respondents? A case study eliciting preferences for end-of-life care. Pharmacoeconomics. 2016;34:273–84.

Bech M, Kjaer T, Lauridsen J. Does the number of choice sets matter? Results from a web survey applying a discrete choice experiment. Health Econ. 2011;20:273–86.

Eberth B, Watson V, Ryan M, et al. Does one size fit all? Investigating heterogeneity in men’s preferences for benign prostatic hyperplasia treatment using mixed logit analysis. Med Decis Mak. 2009;29:707–15.

Hole AR. Modelling heterogeneity in patients’ preferences for the attributes of a general practitioner appointment. J Health Econ. 2008;27:1078–94.

Mentzakis E, Ryan M, McNamee P. Using discrete choice experiments to value informal care tasks: exploring preference heterogeneity. Health Econ. 2011;20:930–44.

Kløjgaard M, Bech M, Søgaard R. Designing a stated choice experiment: the value of a qualitative process. J Choice Model. 2012;5:1–18.

Coast J, Al-Janabi H, Sutton E, et al. Using qualitative methods for attribute development for discrete choice experiments: issues and recommendations. Health Econ. 2012;21:730–41.

Coast J, Horrocks SA. Developing attributes and levels for discrete choice experiments using qualitative methods. J Health Serv Res Policy. 2007;12:25–30.

Vass C, Rigby D, Payne K. The role of qualitative research methods in discrete choice experiments: a systematic review and survey of authors. Med Decis Mak. 2017;37:298–313.

Lipkus I, Samsa G, Rimer B. General performance on a numeracy scale among highly educated samples. Med Decis Mak. 2001;21:37–44.

Gigerenzer G, Gaissmaier W, Kurz-Milcke E, et al. Helping doctors and patients make sense of health statistics. Psychol Sci Public Interest. 2007;8:53–96.

Harrison M, Rigby D, Vass C, et al. Risk as an attribute in discrete choice experiments: a critical review. Patient. 2014;7:151–70.

De Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21:145–72.

Lagarde M. Investigating attribute non-attendance and its consequences in choice experiments with latent class models. Health Econ. 2012;22:554–67.

Scarpa R, Gilbride T, Campbell D, Hensher D. Modelling attribute non-attendance in choice experiments for rural landscape valuation. Eur Rev Agric Econ. 2009;36:151–74.

Rose J, Bliemer M. Constructing efficient stated choice experimental designs. Transp Rev. 2009;29:587–617.

Fagerlin A, Zikmund-Fisher B, Ubel PA. Helping patients decide: ten steps to better risk communication. J Natl Cancer Inst. 2011;103:1–8.

Fagerlin A, Ubel P, Smith D, Zikmund-Fisher B. Making numbers matter: present and future research in risk communication. Am J Health Behav. 2007;31(Suppl. 1):S47–56.

Hammitt JK, Graham JD. Willingness to pay for health protection: inadequate sensitivity to probability? J Risk Uncertain. 1999;18:33–62.

Bansback N, Harrison M, Marra C. Does introducing imprecision around probabilities for benefit and harm influence the way people value treatments? Med Decis Mak. 2016;36:490–502.

Ahmed H, Naik G, Willoughby H, Edwards A. Communicating risk. BMJ. 2012;344:1–7.

Veldwijk J, Essers BA, Lambooij MS, et al. Survival or mortality: does risk attribute framing influence decision-making behavior in a discrete choice experiment? Value Health. 2016;19:202–9.

Howard K, Salkeld G. Does attribute framing in discrete choice experiments influence willingness to pay? Results from a discrete choice experiment in screening for colorectal cancer. Value Health. 2009;12:354–63.

Schapira MM, Nattinger AB, McHorney CA. Frequency or probability? A qualitative study of risk communication formats used in health care. Med Decis Mak. 2001;21:459–67.

Ancker J, Senathirajah Y, Kukafka R, Starren J. Design features of graphs in health risk communication: a systematic review. J Am Med Inform Assoc. 2006;13:608–19.

Galesic M, Garcia-Retamero R, Gigerenzer G. Using icon arrays to communicate medical risks: overcoming low numeracy. Health Psychol. 2009;28:210–6.

Hauber AB, Gonzalez J, Schenkel B, et al. The value to patients of reducing lesion severity in plaque psoriasis. J Dermatolog Treat. 2011;22:266–75.

Johnson F, Hauber AB, Özdemir S, Lynd L. Quantifying women’s stated benefit-risk trade-off preferences for IBS treatment outcomes. Value Health. 2010;13:418–23.

Veldwijk J, Lambooij MS, van Til JA, et al. Words or graphics to present a discrete choice experiment: does it matter? Patient Educ Couns. 2015;98:1376–84.

Vass C. Using discrete choice experiment to value benefits and risks in primary care (PhD thesis). Manchester: The University of Manchester; 2015.

Clark M, Determann D, Petrou S, et al. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32:883–902.

Garris R, Ahlers R, Driskell JE. Games, motivation, and learning: a research and practice model. Simul Gaming. 2002;33:441–67.

Veldwijk J, van der Heide I, Rademakers J, et al. Preferences for vaccination: does health literacy make a difference? Med Decis Mak. 2015;35:948–58.

Harris K, Keane M. A model of health plan choice: inferring preferences and perceptions from a combination of revealed preference and attitudinal data. J Econom. 1999;89:131–57.

Hess S, Beharry-Borg N. Accounting for latent attitudes in willingness-to-pay studies: the case of coastal water quality improvements in Tobago. Environ Resour Econ. 2011;52:109–31.

Ashok K, Dillon WR, Yuan S. Extending discrete choice models to incorporate attitudinal and other latent variables. J Mark Res. 2002;39:31–46.

Ben-Akiva M, McFadden D, Train K, et al. Hybrid choice models: progress and challenges. Mark Lett. 2002;13:163–75.

Kim J, Rasouli S, Timmermans H. Hybrid choice models: principles and recent progress incorporating social influence and nonlinear utility functions. Procedia Environ Sci. 2014;22:20–34.

De Wit GA, Busschbach JJV, De Charro FT. Sensitivity and perspective in the valuation of health status: whose values count? Health Econ. 2000;9:109–26.

Hadorn DC. The role of public values in setting health care priorities. Soc Sci Med. 1991;32:773–81.

Weinstein M, Siegel J, Gold M. Recommendations of the panel on cost-effectiveness in health and medicine. JAMA. 1996;276:1253–8.

Johnson FR, Hauber AB, Zhang J. Quantifying patient preferences to inform benefit-risk evaluations. In: Sashegyi A, Felli J, Noel R, editors. Benefit-risk assessment in pharmaceutical research and development. Boca Raton: CRC Press; 2014. p. 37–58.

Gafni A, Charles C, Whelan T. The physician-patient encounter: the physician as a perfect agent for the patient versus the informed treatment decision-making model. Soc Sci Med. 1998;47:347–54.

Cox V, Scott MC. FDA advisory committee meetings: what they are, why they happen, and what they mean for regulatory professionals. Regul Rapp. 2014;11:5–8.

Drummond M, Sculpher M, Claxton K, et al. Methods for the economic evaluation of health care programmes. 4th ed. Oxford: Oxford University Press; 2015.

Mühlbacher A, Juhnke C. Patient preferences versus physicians’ judgement: does it make a difference in healthcare decision making? Appl Health Econ Health Policy. 2013;11:163–80.

Brazier J, Akehurst R, Brennan A, et al. Should patients have a greater role in valuing health states? Appl Health Econ Health Policy. 2005;4:201–8.

Swait J, Louviere J. The role of the scale parameter in the estimation and comparison of multinomial logit models. J Mark Res. 1993;30:305–14.

Greene WH, Hensher D. A latent class model for discrete choice analysis: contrasts with mixed logit. Transp Res Part B Methodol. 2003;37:681–98.

Hole AR. Small-sample properties of tests for heteroscedasticity in the conditional logit model. Health Econometrics and Data Group, University of York Working Papers. 2006;06/04.

Rogowski W, Payne K, Schnell-Inderst P, et al. Concepts of “personalization” in personalized medicine: implications for economic evaluation. Pharmacoeconomics. 2015;33:49–59.

McFadden D. Econometric models for probabilistic choice among products. J Bus. 1980;53:S13–29.

de Bekker-Grob EW, Chorus CG. Random regret-based discrete-choice modelling: an application to healthcare. Pharmacoeconomics. 2013;31:623–34.

Van der Pol M, Currie G, Kromm S, Ryan M. Specification of the utility function in discrete choice experiments. Value Health. 2014;17:297–301.

Armstrong P, Garrido R, Ortúzar JdD. Confidence intervals to bound the value of time. Transp Res Part E Logist Transp Rev. 2001;37:143–61.

Medical Device Innovation Consortium. Medical Device Innovation Consortium Patient Centred Benefit-Risk Project report: a framework for incorporating information on patient preferences regarding benefit and risk into regulatory assessments of new medical technology. 2017. http://mdic.org/wp-content/uploads/2015/05/MDIC_PCBR_Framework_Web1.pdf. Accessed 28 Mar 2017.

Acknowledgements

This article was made possible by a grant awarded by The Swedish Foundation for Humanities and Social Sciences for a project entitled ‘Mind the Risk’. Funding was provided by Riksbankens Jubileumsfond (Grant No. M13-0260:1).

Author information

Contributions

CMV and KP both contributed to the conception and writing of this article, and both gave final approval of the submitted and revised versions of the document. No one else contributed to this article.

Ethics declarations

Funding

Caroline M. Vass and Katherine Payne were supported in the preparation and submission of this article by the Mind the Risk project, funded by The Swedish Foundation for Humanities and Social Sciences. The views and opinions expressed are those of the authors and not necessarily those of other Mind the Risk members or of The Swedish Foundation for Humanities and Social Sciences.

Conflict of Interest

Caroline M. Vass and Katherine Payne have no conflicts of interest directly relevant to the content of this article.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Vass, C.M., Payne, K. Using Discrete Choice Experiments to Inform the Benefit-Risk Assessment of Medicines: Are We Ready Yet? PharmacoEconomics 35, 859–866 (2017). https://doi.org/10.1007/s40273-017-0518-0

DOI: https://doi.org/10.1007/s40273-017-0518-0