Abstract

Background

There has been only limited evaluation of statistical methods for identifying safety risks of drug exposure in observational healthcare data. Simulations can support empirical evaluation, but have not been shown to adequately model the real-world phenomena that challenge observational analyses.

Objectives

To design and evaluate a probabilistic framework (OSIM2) for generating simulated observational healthcare data, and to use these data to evaluate the performance of methods in identifying associations between drug exposure and health outcomes of interest.

Research Design

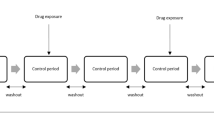

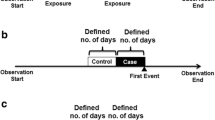

Seven observational designs, including case–control, cohort, self-controlled case series, and self-controlled cohort designs, were applied to 399 drug-outcome scenarios in 6 simulated datasets: one with no effect and five with injected relative risks of 1.25, 1.5, 2, 4, and 10, respectively.
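To make the idea of an injected relative risk concrete, the following is a minimal sketch and not the OSIM2 procedure itself. It assumes a constant daily outcome risk that is multiplied by the relative risk during exposed time; the function names, the daily-risk model, and the person-day counts are all hypothetical choices made for illustration.

```python
import random

# One no-effect dataset plus the five injected relative risks named in the text.
RELATIVE_RISKS = [1.0, 1.25, 1.5, 2.0, 4.0, 10.0]


def simulate_outcome_days(daily_risk, exposed_days, unexposed_days, rr, rng):
    """Count outcome days, multiplying the daily risk by rr while exposed.

    Assumption: each person-day is an independent Bernoulli trial.
    """
    exposed_risk = min(1.0, daily_risk * rr)
    exposed_events = sum(rng.random() < exposed_risk for _ in range(exposed_days))
    unexposed_events = sum(rng.random() < daily_risk for _ in range(unexposed_days))
    return exposed_events, unexposed_events


def observed_rate_ratio(exposed_events, exposed_days, unexposed_events, unexposed_days):
    """Crude incidence rate ratio, or None when it is undefined."""
    if unexposed_events == 0 or unexposed_days == 0 or exposed_days == 0:
        return None
    return (exposed_events / exposed_days) / (unexposed_events / unexposed_days)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same draws
    days = 100_000          # hypothetical person-days in each exposure state
    for rr in RELATIVE_RISKS:
        exposed, unexposed = simulate_outcome_days(0.001, days, days, rr, rng)
        print(rr, observed_rate_ratio(exposed, days, unexposed, days))
```

Because this toy model contains no confounding, the crude rate ratio should land near the injected value, subject to sampling noise. The simulated data described here are designed to reproduce confounding seen in real claims data, which is what makes recovering the injected effect difficult.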

Subjects

Longitudinal data for 10 million simulated patients were generated using a model derived from an administrative claims database, with associated demographics, periods of drug exposure derived from pharmacy dispensings, and medical conditions derived from diagnoses on medical claims.

Measures

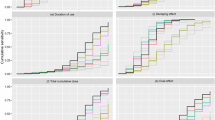

Simulation validation was performed through descriptive comparison with real source data. Method performance was evaluated using Area Under ROC Curve (AUC), bias, and mean squared error.
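The three performance measures can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: AUC is computed as the probability that a randomly chosen positive scenario scores higher than a randomly chosen negative one, and bias and mean squared error are taken on the log relative-risk scale, which is an assumption since the scale is not specified here. The function names are hypothetical.

```python
import math


def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison (ties count as 0.5).

    labels: 1 for scenarios with an injected effect, 0 for no-effect scenarios.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative scenario")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bias_and_mse(estimated_rr, true_rr):
    """Mean error and mean squared error of log(estimate) against log(truth)."""
    errors = [math.log(e) - math.log(t) for e, t in zip(estimated_rr, true_rr)]
    bias = sum(errors) / len(errors)
    mse = sum(err ** 2 for err in errors) / len(errors)
    return bias, mse
```

For example, `auc([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0])` returns 1.0 because every positive scenario outranks every negative one. Estimates of 4.0 and 1.0 against a true relative risk of 2.0 give a bias near zero but a nonzero mean squared error, which shows why the two measures are reported separately.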

Results

OSIM2 replicates the prevalence and types of confounding observed in real claims data. When simulated data are injected with relative risks (RR) ≥ 2, all designs have good predictive accuracy (AUC > 0.90), but when RR < 2, no method achieves 100 % predictive accuracy. Each method exhibits a different bias profile, which changes with the effect size.

Conclusions

OSIM2 can support methodological research. Simulation results suggest that the methods' operating characteristics are far from their nominal properties.

Acknowledgments

The Observational Medical Outcomes Partnership is funded by the Foundation for the National Institutes of Health (FNIH) through generous contributions from the following: Abbott, Amgen Inc., AstraZeneca, Bayer Healthcare Pharmaceuticals, Inc., Biogen Idec, Bristol-Myers Squibb, Eli Lilly & Company, GlaxoSmithKline, Janssen Research and Development, Lundbeck, Inc., Merck & Co., Inc., Novartis Pharmaceuticals Corporation, Pfizer Inc, Pharmaceutical Research and Manufacturers of America (PhRMA), Roche, Sanofi-aventis, Schering-Plough Corporation, and Takeda. Drs. Ryan and Schuemie are employees of Janssen Research and Development. Dr. Schuemie received a fellowship from the Office of Medical Policy, Center for Drug Evaluation and Research, Food and Drug Administration. This work was supported by UBC/ProSanos for implementing OSIM2 through funding by FNIH. The authors thank Susan Gruber for the OSIM2 assessment she shared with the OMOP Statistics working group.

This article was published in a supplement sponsored by the Foundation for the National Institutes of Health (FNIH). The supplement was guest edited by Stephen J.W. Evans. It was peer reviewed by Olaf H. Klungel, who received a small honorarium to cover out-of-pocket expenses. S.J.W.E. has received travel funding from the FNIH to travel to the OMOP symposium and received a fee from FNIH for the review of a protocol for OMOP. O.H.K. has received funding for the IMI-PROTECT project from the Innovative Medicines Initiative Joint Undertaking (http://www.imi.europa.eu) under Grant Agreement no. 115004, resources of which are composed of financial contribution from the European Union’s Seventh Framework Programme (FP7/2007-2013) and EFPIA companies’ in-kind contribution.

Additional information

The OMOP research used data from Truven Health Analytics (formerly the Health Business of Thomson Reuters), comprising the following MarketScan® Research Databases: MarketScan Lab Supplemental (MSLR, 1.2 m persons), MarketScan Medicare Supplemental Beneficiaries (MDCR, 4.6 m persons), MarketScan Multi-State Medicaid (MDCD, 10.8 m persons), and MarketScan Commercial Claims and Encounters (CCAE, 46.5 m persons). Data were also provided by the Quintiles® Practice Research Database (formerly General Electric’s Electronic Health Record, 11.2 m persons). GE is an electronic health record database, while the other four databases contain administrative claims data.

About this article

Cite this article

Ryan, P.B., Schuemie, M.J. Evaluating Performance of Risk Identification Methods Through a Large-Scale Simulation of Observational Data. Drug Saf 36 (Suppl 1), 171–180 (2013). https://doi.org/10.1007/s40264-013-0110-2

DOI: https://doi.org/10.1007/s40264-013-0110-2